Building a Prometheus monitoring platform from scratch

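Contents

- Prometheus monitoring platform installation and configuration
  - 1. Environment preparation
    - Platform comparison
    - 1.2 Environment description
    - 1.3 Prometheus architecture diagram
    - 1.4 Introduction to Prometheus
      - 1.4.1 Basic principle
      - 1.4.2 How the service works
      - 1.4.3 The three main components
  - 2. Environment preparation
    - 2.1 Install Go
    - 2.2 Install Docker
    - 2.3 Install docker-compose
  - 3. Install Prometheus
    - 3.1 Install Prometheus
    - 3.2 Install Grafana
    - 3.3 Install the Alertmanager alerting component
  - 4. Hot-updating the Prometheus configuration with Consul
    - 4.1 Service discovery mechanisms supported by Prometheus
    - 4.2 Advantages of Consul
    - 4.3 Install a Consul cluster with docker-compose
    - 4.4 Open ip:8502 in a browser
    - 4.5 Register services through the HTTP API
    - 4.6 Configure Prometheus to use Consul service discovery
  - 5. Prometheus monitoring configuration
    - 5.1 MySQL monitoring
      - 5.1.1 Install mysqld_exporter
      - 5.1.2 Visualize the data in Grafana
    - 5.2 Black-box monitoring of HTTP/HTTPS, TCP, ICMP and DNS
    - 5.3 Linux server monitoring
      - 5.3.1 Install the Linux exporter node_exporter
      - 5.3.2 Visualize the data in Grafana
    - 5.4 Redis monitoring
      - 5.4.1 Install redis_exporter
      - 5.4.2 Visualize the data in Grafana
    - 5.5 Spring Boot application monitoring
      - 5.5.1 Expose the application's metrics
      - 5.5.2 Add the application to Prometheus
      - 5.5.3 Configure Grafana dashboards
    - 5.6 Elasticsearch monitoring
      - 5.6.1 Install the Elasticsearch exporter (on the Elasticsearch host)
      - 5.6.2 Configure Grafana dashboards
    - 5.7 nginx monitoring
      - 5.7.1 Install the nginx-module-vts and geoip modules
      - 5.7.2 Install the nginx exporter (on the nginx host)
      - 5.7.3 Configure Grafana dashboards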

Prometheus monitoring platform installation and configuration

1. Environment preparation

Platform comparison

1.2 Environment description

| Name | Address | Description |
|---|---|---|
| Official components | https://prometheus.io/download/ | Includes prometheus, alertmanager, blackbox_exporter, consul_exporter, graphite_exporter, haproxy_exporter, memcached_exporter, mysqld_exporter, node_exporter, pushgateway, statsd_exporter and other components |
| Other exporters | https://prometheus.io/docs/instrumenting/exporters/ | Look here for any component not listed above |
| Grafana dashboard JSON templates | https://grafana.com/grafana/dashboards?search=kafka | Search for Grafana dashboard templates that visualize Prometheus data |
| Prometheus documentation in Chinese (1) | https://www.prometheus.wang/exporter/use-promethues-monitor-mysql.html | |
| Prometheus documentation in Chinese (2) | https://prometheus.fuckcloudnative.io/di-san-zhang-prometheus/storage | |

- Resources used in this document

| Name | Version | Download link | Description |
|---|---|---|---|
| Operating system | CentOS 7.8 | | |
| Prometheus | prometheus-2.24.0.linux-amd64.tar.gz | https://prometheus.io/download/ | Prometheus server |
| go | 1.11.4 | https://golang.org/dl/ | Go environment |
| Grafana | 7.3.7-1 | wget https://dl.grafana.com/oss/release/grafana-7.3.7-1.x86_64.rpm | Visualization component for Prometheus data |
| alertmanager | alertmanager-0.21.0.linux-amd64.tar.gz | https://prometheus.io/download/ | Prometheus alerting component |
| blackbox_exporter | blackbox_exporter-0.18.0.linux-amd64.tar.gz | https://prometheus.io/download/ | Black-box probing exporter |
| mysqld_exporter | mysqld_exporter-0.12.1.linux-amd64.tar.gz | https://prometheus.io/download/ | MySQL exporter |
| node_exporter | node_exporter-1.0.1.linux-amd64.tar.gz | https://github.com/prometheus/node_exporter/releases/download/v1.0.1/node_exporter-1.0.1.linux-amd64.tar.gz | Server resource exporter |
| redis_exporter | redis_exporter-v1.16.0.linux-amd64.tar.gz | https://github.com/oliver006/redis_exporter/releases/download/v1.16.0/redis_exporter-v1.16.0.linux-amd64.tar.gz | Redis exporter |
| elasticsearch_exporter | elasticsearch_exporter-1.1.0.linux-amd64.tar.gz | https://github.com/justwatchcom/elasticsearch_exporter/releases/download/v1.1.0/elasticsearch_exporter-1.1.0.linux-amd64.tar.gz | Elasticsearch exporter |
| kafka_exporter | kafka_exporter-1.2.0.linux-amd64.tar.gz | https://github.com/danielqsj/kafka_exporter/releases/download/v1.2.0/kafka_exporter-1.2.0.linux-amd64.tar.gz | Kafka exporter |
| nginx-module-vts | | https://github.com/vozlt/nginx-module-vts.git | nginx module that exposes nginx metrics to Prometheus |
| nginx-vts-exporter | nginx-vts-exporter-0.9.1.linux-amd64.tar.gz | https://github.com/hnlq715/nginx-vts-exporter/releases/download/v0.9.1/nginx-vts-exporter-0.9.1.linux-amd64.tar.gz | nginx-vts exporter |
| grafana-piechart-panel | latest | https://grafana.com/api/plugins/grafana-piechart-panel/versions/latest/download | Grafana pie chart plugin |

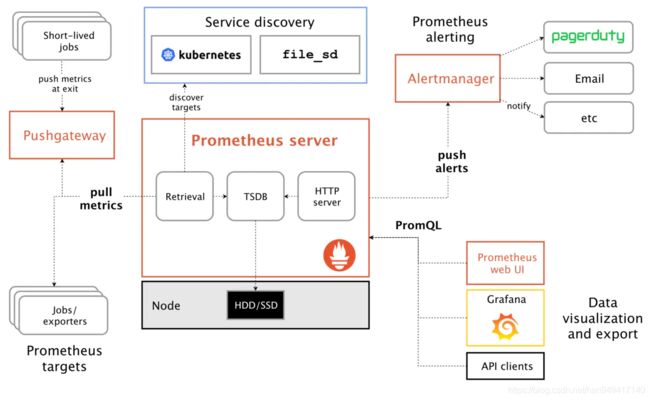

1.3 Prometheus architecture diagram

1.4 Introduction to Prometheus

Prometheus is an open-source service monitoring system and time series database.

1.4.1 Basic principle

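Prometheus works by periodically scraping the state of monitored components over HTTP. Any component that exposes a suitable HTTP endpoint can be monitored, with no SDK or other integration work required. This makes Prometheus well suited to monitoring virtualized environments such as VMs, Docker and Kubernetes. The program that exposes a component's metrics over HTTP is called an exporter. Most components commonly used by internet companies already have ready-made exporters, for example Varnish, HAProxy, nginx, MySQL and Linux system information (disk, memory, CPU, network and so on).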
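To make the exporter idea concrete, here is a minimal sketch of an exporter built only on the Python standard library. It is not one of the official exporters. The metric names `demo_up` and `demo_requests_total` and the port 9999 are hypothetical, and a real exporter would read live values from the component it monitors. The sketch simply answers `GET /metrics` with the plain-text exposition format that Prometheus scrapes.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical sample values; a real exporter reads them from the monitored
# component (MySQL, Redis, nginx, ...) each time it is scraped.
METRICS = {"demo_up": 1, "demo_requests_total": 42}


def render_metrics(metrics):
    """Render name -> value pairs in the Prometheus text exposition format."""
    lines = []
    for name, value in sorted(metrics.items()):
        # By convention *_total names are counters; treat the rest as gauges.
        metric_type = "counter" if name.endswith("_total") else "gauge"
        lines.append(f"# TYPE {name} {metric_type}")
        lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Prometheus scrapes the /metrics path by default.
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics(METRICS).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=9999):
    """Block forever, answering scrapes on the given (arbitrary) port."""
    HTTPServer(("0.0.0.0", port), MetricsHandler).serve_forever()
```

Calling `serve()` and then running `curl http://localhost:9999/metrics` shows exactly the text a Prometheus scrape job would receive from this target.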

1.4.2 How the service works

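- The Prometheus daemon periodically scrapes metrics from its targets, and each target must expose an HTTP endpoint for it to scrape. Targets can be specified through the configuration file, text files, ZooKeeper, Consul, DNS SRV lookups and other mechanisms. Prometheus uses a pull model: the server pulls data directly from targets, and sources that cannot be scraped directly can push their data to an intermediate gateway, which Prometheus then scrapes.

- Prometheus stores all scraped data locally. Rules can clean up and aggregate the data, and the results are stored as new time series.

- Collected data can be queried with PromQL and other APIs and then visualized. Several charting options are supported, such as Grafana, the older PromDash (now deprecated in favour of Grafana) and Prometheus's own console templates. Prometheus also provides an HTTP query API for custom output.

- The Pushgateway lets clients actively push metrics to it, while Prometheus simply scrapes the gateway on a schedule.

- Alertmanager is a component separate from Prometheus. Alert conditions are written as PromQL rules evaluated by Prometheus, and Alertmanager then delivers the resulting alerts through flexible notification channels.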
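On the server side, each scrape turns that text into time-stamped samples. The simplified sketch below illustrates the idea and is not Prometheus's actual parser or storage engine. It assumes one sample per line with no explicit timestamp, and it keeps the samples in an in-memory dict instead of the real TSDB.

```python
def parse_samples(text):
    """Parse Prometheus text-format output into {series: value}.

    Simplifying assumption: each sample line has no trailing timestamp,
    so the value is whatever follows the last space.
    """
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and "# HELP" / "# TYPE" metadata.
        if not line or line.startswith("#"):
            continue
        series, _, value = line.rpartition(" ")
        samples[series] = float(value)
    return samples


def scrape_into(store, text, timestamp):
    """Append each parsed sample to its series as a (timestamp, value) pair."""
    for series, value in parse_samples(text).items():
        store.setdefault(series, []).append((timestamp, value))
    return store
```

Each scrape adds one point per series, and that growing list of points is what PromQL functions such as `rate()` or `delta()` later operate on.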

1.4.3 The three main components

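- Server: collects and stores data and provides the PromQL query language.

- Alertmanager: the alert manager, responsible for sending alert notifications.

- Push Gateway: an intermediate gateway that lets short-lived jobs push their metrics.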
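A short-lived job that may exit before Prometheus scrapes it pushes its metrics to the Pushgateway over HTTP, and Prometheus then scrapes the gateway. Below is a minimal sketch using the Pushgateway's `/metrics/job/<job>{/<label>/<value>}` push API. It assumes a gateway at the hypothetical address `http://pushgateway:9091` (9091 is the Pushgateway's default port). The Pushgateway is not installed anywhere else in this article.

```python
import urllib.parse
import urllib.request


def push_url(gateway, job, grouping=None):
    """Build <gateway>/metrics/job/<job>{/<label>/<value>} for the Pushgateway."""
    path = "/metrics/job/" + urllib.parse.quote(job, safe="")
    for label, value in (grouping or {}).items():
        path += "/" + urllib.parse.quote(label, safe="") + "/" + urllib.parse.quote(value, safe="")
    return gateway.rstrip("/") + path


def push(gateway, job, body, grouping=None):
    """PUT text-format metrics; PUT replaces every metric previously pushed to this group."""
    request = urllib.request.Request(
        push_url(gateway, job, grouping),
        data=body.encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "text/plain; version=0.0.4"},
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status
```

For example, a nightly backup script could call `push("http://pushgateway:9091", "db_backup", "backup_last_success_timestamp_seconds 1611900000\n", {"instance": "db1"})`. The job, label and metric names here are illustrative. The body must end with a newline.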

2. Environment preparation

2.1 Install Go

- Extract and install

tar -C /usr/local/ -xvf go1.11.4.linux-amd64.tar.gz

- Configure environment variables

vim /etc/profile

------------------------------------------------------------------

export PATH=$PATH:/usr/local/go/bin

------------------------------------------------------------------

# Apply the configuration

source /etc/profile

- Verify

[root@iZ2zejaz33icbod2k4cvy6Z ~]# go version

go version go1.11.5 linux/amd64

2.2 Install Docker

- Install Docker

# Alibaba Cloud Docker Hub mirror
export REGISTRY_MIRROR=https://registry.cn-hangzhou.aliyuncs.com

# Remove old versions
yum remove -y docker \
  docker-client \
  docker-client-latest \
  docker-ce-cli \
  docker-common \
  docker-latest \
  docker-latest-logrotate \
  docker-logrotate \
  docker-selinux \
  docker-engine-selinux \
  docker-engine

# Set up the yum repository
yum install -y yum-utils \
  device-mapper-persistent-data \
  lvm2
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install and start Docker
yum install -y docker-ce-19.03.11 docker-ce-cli-19.03.11 containerd.io-1.2.13
mkdir -p /etc/docker
cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": ["${REGISTRY_MIRROR}"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}
EOF
mkdir -p /etc/systemd/system/docker.service.d

# Restart Docker
systemctl daemon-reload
systemctl enable docker
systemctl restart docker

2.3 Install docker-compose

- Download the docker-compose binary and upload it to the Linux host:

Download: https://download.csdn.net/download/han949417140/14801125

- Install docker-compose

mv docker-compose-Linux-x86_64 /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

# Verify

docker-compose -v

------------------------------------------------------------------------------------------

docker-compose version 1.27.4, build 40524192

-----------------------------------------------------------------------------------------

3. Install Prometheus

3.1 Install Prometheus

- Install

tar -zxvf prometheus-2.24.0.linux-amd64.tar.gz

mv prometheus-2.24.0.linux-amd64 /usr/local/Prometheus

- Start

Default Prometheus configuration file: /usr/local/Prometheus/prometheus.yml

nohup /usr/local/Prometheus/prometheus --config.file=/usr/local/Prometheus/prometheus.yml &

3.2 Install Grafana

Prometheus's built-in web pages are fairly basic, so we install Grafana to make the monitoring data easier to read.

- Install

wget https://dl.grafana.com/oss/release/grafana-7.3.7-1.x86_64.rpm

sudo yum install grafana-7.3.7-1.x86_64.rpm

- Start

sudo /bin/systemctl daemon-reload

sudo /bin/systemctl enable grafana-server.service

sudo /bin/systemctl start grafana-server.service

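- Access Grafana

Open IP:3000 in a browser to reach the Grafana UI. The default username and password are both admin, and you are asked to change the password on first login.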

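- Add the Prometheus data source

1) Click "Add data source" on the home page

2) Choose Prometheus

3) On the Dashboards tab, import "Prometheus 2.0 Stats"

4) On the Settings tab, enter the Prometheus address and save

5) Switch to the "Prometheus 2.0 Stats" dashboard you just added to see the complete monitoring page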
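The same data source can also be created through Grafana's HTTP API (`POST /api/datasources`) instead of the UI, which is handy when you rebuild Grafana often. Below is a minimal sketch. It assumes an admin account that authenticates with HTTP basic auth and a Prometheus server on its default port 9090. Adjust the addresses and credentials to your environment.

```python
import base64
import json
import urllib.request


def datasource_payload(prometheus_url, name="Prometheus"):
    """JSON body for Grafana's POST /api/datasources."""
    return {
        "name": name,
        "type": "prometheus",
        "url": prometheus_url,
        "access": "proxy",  # the Grafana server (not the browser) queries Prometheus
        "isDefault": True,
    }


def add_datasource(grafana_url, user, password, prometheus_url):
    """Create the data source using HTTP basic auth with a Grafana admin account."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(
        grafana_url.rstrip("/") + "/api/datasources",
        data=json.dumps(datasource_payload(prometheus_url)).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": "Basic " + token},
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return json.loads(response.read())
```

Usage: `add_datasource("http://IP:3000", "admin", "<new password>", "http://IP:9090")`. Dashboards such as "Prometheus 2.0 Stats" still need to be imported separately.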

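Grafana cannot display pie charts out of the box, so download and install the piechart plugin.

- Install the piechart plugin (offline)

Download: https://grafana.com/grafana/plugins/grafana-piechart-panel?pg=plugins&plcmt=featured-undefined&src=grafana_plugin_list

Grafana's default plugin directory is /var/lib/grafana/plugins. Extract the downloaded plugin into this directory and restart Grafana.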

# List installed plugins

[root@bogon sbin]# /usr/sbin/grafana-cli plugins ls

------------------------------------------------------------------------------------------------------

Restart grafana after installing plugins . <service grafana-server restart>

-----------------------------------------------------------------------------------------------------

# Extract the plugin into the plugin directory and restart the Grafana service

[root@bogon resources]# unzip grafana-piechart-panel-5f249d5.zip

[root@bogon resources]# mv grafana-piechart-panel-5f249d5 /var/lib/grafana/plugins/

[root@bogon resources]# service grafana-server restart

Restarting grafana-server (via systemctl): [ OK ]

[root@bogon resources]# /usr/sbin/grafana-cli plugins ls

------------------------------------------------------------------------------------

installed plugins:

grafana-piechart-panel @ 1.3.3

Restart grafana after installing plugins . <service grafana-server restart>

------------------------------------------------------------------------------------

- Install the piechart plugin (online)

[root@bogon plugins]# /usr/sbin/grafana-cli plugins install grafana-piechart-panel

installing grafana-piechart-panel @ 1.6.1

from: https://grafana.com/api/plugins/grafana-piechart-panel/versions/1.6.1/download

into: /var/lib/grafana/plugins

✔ Installed grafana-piechart-panel successfully

Restart grafana after installing plugins . <service grafana-server restart>

[root@bogon plugins]# service grafana-server restart

Restarting grafana-server (via systemctl): [ OK ]

[root@bogon plugins]# /usr/sbin/grafana-cli plugins ls

installed plugins:

grafana-piechart-panel @ 1.6.1

Restart grafana after installing plugins . <service grafana-server restart>

3.3 Install the Alertmanager alerting component

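Alertmanager is a standalone alerting component. It receives alerts from clients such as Prometheus, groups them, removes duplicates, and routes them to the correct receivers, so different alerts can be sent to the owners of different modules according to different rules. Alertmanager supports email, Slack and other notification channels, and it can also reach Chinese IM tools such as DingTalk through webhooks.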
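The grouping, de-duplication and routing described above can be illustrated with a deliberately simplified Python sketch. It is not Alertmanager's implementation, which also handles group_wait/group_interval timers, silences, inhibition and a nested routing tree. Here alerts are plain label dicts, and the receiver names used with it are hypothetical.

```python
def fingerprint(alert):
    """Identify an alert by its full label set, independent of key order."""
    return tuple(sorted(alert.items()))


def group_alerts(alerts, group_by):
    """Drop duplicate alerts, then bucket the rest by the group_by labels."""
    seen = set()
    groups = {}
    for alert in alerts:
        fp = fingerprint(alert)
        if fp in seen:
            continue  # identical label set already received: de-duplicate
        seen.add(fp)
        # Alerts sharing the same group_by values end up in one notification.
        key = tuple(alert.get(label, "") for label in group_by)
        groups.setdefault(key, []).append(alert)
    return groups


def route(alert, routes, default_receiver):
    """Return the receiver of the first route whose matchers all equal the alert's labels."""
    for matchers, receiver in routes:
        if all(alert.get(name) == value for name, value in matchers.items()):
            return receiver
    return default_receiver
```

With `group_by: ['alertname']`, two MySQL instances failing at the same time produce one notification that lists both, instead of two separate e-mails.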

- Install Alertmanager

tar -zxvf alertmanager-0.21.0.linux-amd64.tar.gz

mv /opt/resource/alertmanager-0.21.0.linux-amd64 /usr/local/

- Edit the Alertmanager configuration

vi /usr/local/alertmanager-0.21.0.linux-amd64/alertmanager.yml

-------------------------------------------------------------------------------

global:
  smtp_smarthost: 'smtp.qiye.163.com:25'  # SMTP server address
  smtp_from: 'xxx.com'                     # sender address
  smtp_auth_username: 'xxx.com'            # mailbox username
  smtp_auth_password: 'xxx'                # mailbox password
  smtp_require_tls: false

route:
  receiver: email

receivers:
- name: 'email'
  email_configs:
  - to: 'xxx.com'

-------------------------------------------------------------------------------

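- Edit prometheus.yml so that Prometheus sends alerts to Alertmanager, and optionally add a job that scrapes Alertmanager's own metrics

vi /usr/local/Prometheus/prometheus.yml

--------------------------------------------------------------------------------

# In the existing alerting section: where Prometheus sends alerts
alerting:
  alertmanagers:
  - static_configs:
    - targets: ['xxx:9093']   # Alertmanager listens on port 9093 by default

# Under scrape_configs (optional): scrape Alertmanager's own metrics
  - job_name: 'alertmanager'
    static_configs:
    - targets: ['xxx:9093']

--------------------------------------------------------------------------------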

- Start Alertmanager

# Start the Alertmanager service

nohup /usr/local/alertmanager-0.21.0.linux-amd64/alertmanager --config.file=/usr/local/alertmanager-0.21.0.linux-amd64/alertmanager.yml &

- Restart Prometheus

First find the Prometheus process with ps -ef|grep prometheus and kill it, then start the service with the command below

# Restart Prometheus

nohup /usr/local/Prometheus/prometheus --config.file=/usr/local/Prometheus/prometheus.yml &

4. Hot-updating the Prometheus configuration with Consul

4.1 Service discovery mechanisms supported by Prometheus

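# Prometheus targets are either configured statically or discovered dynamically. The common mechanisms are:

1) static_configs: # static configuration

2) file_sd_configs: # file-based service discovery

3) dns_sd_configs: # DNS service discovery

4) kubernetes_sd_configs: # Kubernetes service discovery

5) consul_sd_configs: # Consul service discovery

# Kubernetes monitoring, where pods, services and other resources change constantly, is where automatic target discovery pays off most clearly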

4.2 Advantages of Consul

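Without Consul service discovery, the Prometheus configuration file has to be edited frequently, which is a real burden for operators. With Consul, you only maintain the monitored targets in Consul and Prometheus discovers the changes automatically.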

4.3 Install a Consul cluster with docker-compose

- Create the directories

mkdir -p /data0/consul

- Create the docker-compose.yaml orchestration file

cat > /data0/consul/docker-compose.yaml << \EOF
version: '2'
networks:
  byfn:
services:
  consul1:
    image: consul
    container_name: node1
    volumes:
      - /data0/consul/conf_with_acl:/consul/config
      - /data0/consul/consul/node1:/consul/data
    command: agent -server -bootstrap-expect=3 -node=node1 -bind=0.0.0.0 -client=0.0.0.0 -config-dir=/consul/config
    networks:
      - byfn
  consul2:
    image: consul
    container_name: node2
    volumes:
      - /data0/consul/conf_with_acl:/consul/config
      - /data0/consul/consul/node2:/consul/data
    command: agent -server -retry-join=node1 -node=node2 -bind=0.0.0.0 -client=0.0.0.0 -config-dir=/consul/config
    ports:
      - 8500:8500
    depends_on:
      - consul1
    networks:
      - byfn
  consul3:
    image: consul
    volumes:
      - /data0/consul/conf_with_acl:/consul/config
      - /data0/consul/consul/node3:/consul/data
    container_name: node3
    command: agent -server -retry-join=node1 -node=node3 -bind=0.0.0.0 -client=0.0.0.0 -config-dir=/consul/config
    depends_on:
      - consul1
    networks:
      - byfn
  consul4:
    image: consul
    container_name: node4
    volumes:
      - /data0/consul/conf_with_acl:/consul/config
      - /data0/consul/consul/node4:/consul/data
    command: agent -retry-join=node1 -node=node4 -bind=0.0.0.0 -client=0.0.0.0 -ui -config-dir=/consul/config
    ports:
      - 8501:8500
    depends_on:
      - consul2
      - consul3
    networks:
      - byfn
  consul5:
    image: consul
    container_name: node5
    volumes:
      - /data0/consul/conf_without_acl:/consul/config
      - /data0/consul/consul/node5:/consul/data
    command: agent -retry-join=node1 -node=node5 -bind=0.0.0.0 -client=0.0.0.0 -ui -config-dir=/consul/config
    ports:
      - 8502:8500
    depends_on:
      - consul2
      - consul3
    networks:
      - byfn
EOF

- Start the Consul services with docker-compose

cd /data0/consul/

docker-compose up -d

4.4 Open ip:8502 in a browser

4.5 Register services through the HTTP API

# Register a service

curl -X PUT -d '{"id": "redis","name": "redis","address": "182.92.219.202","port": 9121,"tags": ["service"],"checks": [{"http": "http://182.92.219.202:9121/","interval": "5s"}]}' http://182.92.219.202:8502/v1/agent/service/register

# Query the catalog entry of a specific service

[root@iZ2zejaz33icbod2k4cvy6Z ~]# curl http://182.92.219.202:8500/v1/catalog/service/redis

[{
  "ID": "9d76becb-c557-e605-de13-a906ef32497c",
  "Node": "ndoe5",
  "Address": "172.20.0.6",
  "Datacenter": "dc1",
  "TaggedAddresses": {"lan": "172.20.0.6", "lan_ipv4": "172.20.0.6", "wan": "172.20.0.6", "wan_ipv4": "172.20.0.6"},
  "NodeMeta": {"consul-network-segment": ""},
  "ServiceKind": "",
  "ServiceID": "redis",
  "ServiceName": "redis",
  "ServiceTags": ["service"],
  "ServiceAddress": "182.92.219.202",
  "ServiceTaggedAddresses": {
    "lan_ipv4": {"Address": "182.92.219.202", "Port": 9121},
    "wan_ipv4": {"Address": "182.92.219.202", "Port": 9121}
  },
  "ServiceWeights": {"Passing": 1, "Warning": 1},
  "ServiceMeta": {},
  "ServicePort": 9121,
  "ServiceEnableTagOverride": false,
  "ServiceProxy": {"MeshGateway": {}, "Expose": {}},
  "ServiceConnect": {},
  "CreateIndex": 458,
  "ModifyIndex": 458
}]

# Deregister a service; redis is the ID of the service to remove

curl -X PUT http://182.92.219.202:8502/v1/agent/service/deregister/redis
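Every exporter in section 5 is registered with the same JSON shape, so a small helper can build the body and send the PUT request. The sketch below uses the Python standard library. It mirrors the curl commands in this article (same lowercase field names, one HTTP check every 5s) and is an illustration, not an official Consul client.

```python
import json
import urllib.request


def registration(service_id, address, port, check_path="/", interval="5s", tags=("service",)):
    """Build the JSON body used by the curl registration commands in this article."""
    return {
        "id": service_id,
        "name": service_id,
        "address": address,
        "port": port,
        "tags": list(tags),
        # Consul marks the service healthy while this URL answers successfully.
        "checks": [{"http": f"http://{address}:{port}{check_path}", "interval": interval}],
    }


def register(consul_url, body):
    """PUT the body to Consul's /v1/agent/service/register endpoint."""
    request = urllib.request.Request(
        consul_url.rstrip("/") + "/v1/agent/service/register",
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status
```

For example, `register("http://182.92.219.202:8502", registration("182.92.219.202_redis", "182.92.219.202", 9121))` is equivalent to the Redis registration above.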

4.6 Configure Prometheus to use Consul service discovery

- Add Consul service discovery to Prometheus

vi prometheus.yml

--------------------------------------------------------------------------------------------------------------------------------------------------------------

# Add the following job under scrape_configs
- job_name: 'consul-prometheus'
  consul_sd_configs:
  - server: '182.92.219.202:8502'
    services: []   # an empty list discovers every registered service

---------------------------------------------------------------------------------------------------------------------------------------------------------------

- Restart Prometheus

# Restart Prometheus

nohup /usr/local/Prometheus/prometheus --config.file=/usr/local/Prometheus/prometheus.yml &

From here on, every new monitoring target is added through Consul dynamic discovery, instead of editing prometheus.yml and restarting Prometheus.
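To see what the `consul-prometheus` job ends up scraping, the sketch below approximates how Prometheus turns one entry of `/v1/catalog/service/<name>` into a scrape target. It is a simplification that shows only a few of the `__meta_consul_*` labels. It assumes the job keeps the default `metrics_path` of `/metrics`, which is why every service registered this way must serve its metrics at that path.

```python
def consul_targets(catalog_entries, job="consul-prometheus", metrics_path="/metrics"):
    """Approximate the targets consul_sd_configs builds from catalog entries."""
    targets = []
    for entry in catalog_entries:
        # ServiceAddress wins; Prometheus falls back to the node address when it is empty.
        host = entry.get("ServiceAddress") or entry["Address"]
        address = f"{host}:{entry['ServicePort']}"
        tags = entry.get("ServiceTags") or []
        targets.append({
            "__address__": address,
            "__meta_consul_service": entry["ServiceName"],
            "__meta_consul_node": entry["Node"],
            # Tags are joined with commas and wrapped in commas, e.g. ",service,".
            "__meta_consul_tags": "," + ",".join(tags) + ",",
            "job": job,
            "instance": address,  # defaults to __address__ when no relabeling sets it
            # Not a label: the URL Prometheus would request, shown for illustration.
            "scrape_url": f"http://{address}{metrics_path}",
        })
    return targets
```

Fed the Redis catalog entry from section 4.5, this produces the target `182.92.219.202:9121`, scraped at `http://182.92.219.202:9121/metrics`. Relabeling on `__meta_consul_service` or `__meta_consul_tags` is the usual way to give each service its own job name.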

5. Prometheus monitoring configuration

5.1 MySQL monitoring

5.1.1 Install mysqld_exporter

- MySQL is monitored with the mysqld_exporter exporter

Download: mysqld_exporter-0.12.1.linux-amd64.tar.gz

- Install mysqld_exporter on the MySQL host to be monitored

tar -C /usr/local/ -xvf mysqld_exporter-0.12.1.linux-amd64.tar.gz

- Create the exporter's configuration file

vi /usr/local/mysqld_exporter-0.12.1.linux-amd64/.my.cnf

----------------------------------------------------------------------

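[client]
# MySQL account (a dedicated account with only PROCESS, REPLICATION CLIENT and SELECT privileges is safer than root)
user=root
# MySQL password
password=123456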

--------------------------------------------------------------------

- Start mysqld_exporter (it listens on port 9104 by default)

nohup /usr/local/mysqld_exporter-0.12.1.linux-amd64/mysqld_exporter --config.my-cnf="/usr/local/mysqld_exporter-0.12.1.linux-amd64/.my.cnf" &

- Register the target in Consul (run on the Consul host)

# Run on the Consul server:

curl -X PUT -d '{"id": "192.168.100.132_mysql","name": "192.168.100.132_mysql","address": "192.168.100.132","port": 9104,"tags": ["service"],"checks": [{"http": "http://192.168.100.132:9104/","interval": "5s"}]}' http://192.168.100.175:8502/v1/agent/service/register

5.1.2 Visualize the data in Grafana

6) Prometheus alert rule configuration

Configure the directory for alert rule files

vi /usr/local/Prometheus/prometheus.yml

---------------------------------------------------------------------

rule_files:
  - /usr/local/Prometheus/conf/rule/*.yml   # put alert rule files in this directory

---------------------------------------------------------------------

Configure the specific alert rules

vi /usr/local/Prometheus/conf/rule/mysql.yml

----------------------------------------------------------------------------------------

groups:
- name: mysql_alert
  rules:
  ### Slow queries ###
  # Default slow-query alert policy (slow_queries is a counter, so increase() is used; delta() is meant for gauges)
  - alert: MysqlSlowQueries100In5m
    expr: floor(increase(mysql_global_status_slow_queries{mysql_addr!~"10.8.6.44:3306|10.8.9.20:3306|10.8.12.212:3306"}[5m])) >= 100
    for: 3m
    labels:
      severity: warning
    annotations:
      description: "[{{ $labels.group }}_{{ $labels.role }}], address: [{{ $labels.mysql_addr }}], value: [{{ $value }} queries], condition held for 3 minutes."

  ### QPS ###
  # Default QPS alert policy
  - alert: MysqlQpsAbove8000
    expr: floor(sum(irate(mysql_global_status_commands_total{group!~"product|product_backend"}[5m])) by (group, role, mysql_addr)) > 8000
    for: 6m
    labels:
      severity: warning
    annotations:
      description: "[{{ $labels.group }}_{{ $labels.role }}], address: [{{ $labels.mysql_addr }}], value: [{{ $value }}], condition held for 6 minutes."
  # QPS alert policy for the product databases and similar
  - alert: MysqlQpsAbove25000
    expr: floor(sum(irate(mysql_global_status_commands_total{group=~"product|product_backend"}[5m])) by (group, role, mysql_addr)) > 25000
    for: 3m
    labels:
      severity: warning
    annotations:
      description: "[{{ $labels.group }}_{{ $labels.role }}], address: [{{ $labels.mysql_addr }}], value: [{{ $value }}], condition held for 3 minutes."

  ### Memory ###
  # Default memory alert policy
  - alert: MysqlMemory99Percent
    expr: mysql_mem_used_rate >= 99
    for: 6m
    labels:
      severity: warning
    annotations:
      description: "[{{ $labels.group }}_{{ $labels.role }}], address: [{{ $labels.mysql_addr }}], value: [{{ $value }}%], condition held for 6 minutes."

  ### Disk ###
  # Default disk alert policy
  - alert: MysqlDisk85Percent
    expr: mysql_disk_used_rate{mysql_addr!~"10.8.161.53:3306|10.8.115.31:3306"} >= 85
    for: 3m
    labels:
      severity: warning
    annotations:
      description: "[{{ $labels.group }}_{{ $labels.role }}], address: [{{ $labels.mysql_addr }}], value: [{{ $value }}%], condition held for 3 minutes."
  # 95% disk alert policy
  - alert: MysqlDisk95Percent
    expr: mysql_disk_used_rate{mysql_addr=~"10.8.161.53:3306|10.8.115.31:3306"} >= 95
    for: 3m
    labels:
      severity: warning
    annotations:
      description: "[{{ $labels.group }}_{{ $labels.role }}], address: [{{ $labels.mysql_addr }}], value: [{{ $value }}%], condition held for 3 minutes."

  #### IOPS limit alerts ###
  ## IOPS limit alert policy for SSD disks
  # - alert: MysqlSsdIopsNearLimit
  #   expr: (floor(mysql_ioops) >= mysql_disk_total_size * 50 * 0.9) and (mysql_ssd == 1) and on() hour() >= 0 < 16
  #   for: 6m
  #   labels:
  #     severity: warning
  #   annotations:
  #     description: "[{{ $labels.group }}_{{ $labels.role }}], address: [{{ $labels.mysql_addr }}], value: [{{ $value }}], condition held for 6 minutes."
  ## IOPS limit alert policy for standard disks
  # - alert: MysqlStandardDiskIopsNearLimit
  #   expr: (floor(mysql_ioops) >= mysql_disk_total_size * 10 * 0.9) and (mysql_ssd == 0) and on() hour() >= 0 < 16
  #   for: 6m
  #   labels:
  #     severity: warning
  #   annotations:
  #     description: "[{{ $labels.group }}_{{ $labels.role }}], address: [{{ $labels.mysql_addr }}], value: [{{ $value }}], condition held for 6 minutes."

  ### Connections ###
  # Default connection-count alert policy
  - alert: MysqlConnections80Percent
    expr: floor(mysql_global_status_threads_connected / mysql_global_variables_max_connections * 100) >= 80
    for: 3m
    labels:
      severity: warning
    annotations:
      description: "[{{ $labels.group }}_{{ $labels.role }}], address: [{{ $labels.mysql_addr }}], value: [{{ $value }}%], condition held for 3 minutes."

  ### Running threads ###
  # Default running-threads alert policy
  - alert: MysqlThreadsRunningUp150In5m
    expr: floor(delta(mysql_global_status_threads_running{mysql_addr!~"10.8.136.10:3306|10.10.129.116:3306|10.8.67.153:3306"}[5m])) >= 150
    for: 3m
    labels:
      severity: warning
    annotations:
      description: "[{{ $labels.group }}_{{ $labels.role }}], address: [{{ $labels.mysql_addr }}], value: [{{ $value }}], condition held for 3 minutes."
  # Running-threads alert policy with a 6-minute delay
  - alert: MysqlThreadsRunningUp150In5m
    expr: floor(delta(mysql_global_status_threads_running{mysql_addr=~"10.8.136.10:3306|10.10.129.116:3306|10.8.67.153:3306"}[5m])) >= 150
    for: 6m
    labels:
      severity: warning
    annotations:
      description: "[{{ $labels.group }}_{{ $labels.role }}], address: [{{ $labels.mysql_addr }}], value: [{{ $value }}], condition held for 6 minutes."

  ### Replication errors ###
  # Default replication-error alert policy
  - alert: MysqlReplicationBroken
    expr: (mysql_slave_status_slave_io_running{role!="master"} == 0) or (mysql_slave_status_slave_sql_running{role!="master"} == 0)
    for: 1m
    labels:
      severity: warning
    annotations:
      description: "[{{ $labels.group }}_{{ $labels.role }}], address: [{{ $labels.mysql_addr }}], replication is broken, condition held for 1 minute."

  ### Replication lag ###
  # Default replication-lag alert policy
  - alert: MysqlReplicationLagOver30s
    expr: floor(mysql_slave_status_seconds_behind_master{mysql_addr!~"10.8.137.173:3306|10.8.11.17:3306|10.8.2.17:3306|10.10.29.6:3306|10.8.61.153:3306"}) >= 30
    for: 3m
    labels:
      severity: warning
    annotations:
      description: "[{{ $labels.group }}_{{ $labels.role }}], address: [{{ $labels.mysql_addr }}], value: [{{ $value }}s], condition held for 3 minutes."
  # Alert policy for larger replication lag
  - alert: MysqlReplicationLagOver300s
    expr: floor(mysql_slave_status_seconds_behind_master{mysql_addr=~"10.8.137.173:3306|10.8.11.17:3306|10.10.29.6:3306|10.8.61.153:3306"}) >= 300
    for: 12m
    labels:
      severity: warning
    annotations:
      description: "[{{ $labels.group }}_{{ $labels.role }}], address: [{{ $labels.mysql_addr }}], value: [{{ $value }}s], condition held for 12 minutes."

-------------------------------------------------------------------
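Every rule above has a `for:` clause. The expression must stay true for that long before the alert fires, and until then the alert is only pending. The Python sketch below replays that state machine over a series of evaluation results. It is a simplification: it assumes evaluations arrive exactly `interval` seconds apart and tracks a single series.

```python
def alert_states(samples, for_seconds, interval):
    """Replay an alert rule's `for:` clause over boolean evaluation results.

    samples: True/False results of the rule expression at each evaluation,
    taken `interval` seconds apart. Returns the alert state after each one.
    """
    states = []
    active_since = None
    for i, condition in enumerate(samples):
        now = i * interval
        if not condition:
            active_since = None  # condition cleared: the alert resets to inactive
            states.append("inactive")
            continue
        if active_since is None:
            active_since = now  # first evaluation where the expression matched
        if now - active_since >= for_seconds:
            states.append("firing")
        else:
            states.append("pending")
    return states
```

With `for: 3m` and a 60-second evaluation interval, a condition that stays true becomes firing on its fourth consecutive evaluation. A single false evaluation resets the clock, so short spikes never reach Alertmanager.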

5.2 Black-box monitoring of HTTP/HTTPS, TCP, ICMP and DNS

blackbox_exporter is the Prometheus tool for black-box probing of HTTP/HTTPS, TCP, ICMP and DNS endpoints.

Download: https://github.com/prometheus/blackbox_exporter/releases/download/v0.18.0/blackbox_exporter-0.18.0.linux-amd64.tar.gz

- Install blackbox_exporter

wget https://github.com/prometheus/blackbox_exporter/releases/download/v0.18.0/blackbox_exporter-0.18.0.linux-amd64.tar.gz

tar -C /usr/local/ -xvf blackbox_exporter-0.18.0.linux-amd64.tar.gz

- The official default blackbox.yml

cat /usr/local/blackbox_exporter-0.18.0.linux-amd64/blackbox.yml

---------------------------------------------------------------------------

modules:
  http_2xx:
    prober: http
  http_post_2xx:
    prober: http
    http:
      method: POST
  tcp_connect:
    prober: tcp
  pop3s_banner:
    prober: tcp
    tcp:
      query_response:
      - expect: "^+OK"
      tls: true
      tls_config:
        insecure_skip_verify: false
  ssh_banner:
    prober: tcp
    tcp:
      query_response:
      - expect: "^SSH-2.0-"
  irc_banner:
    prober: tcp
    tcp:
      query_response:
      - send: "NICK prober"
      - send: "USER prober prober prober :prober"
      - expect: "PING :([^ ]+)"
        send: "PONG ${1}"
      - expect: "^:[^ ]+ 001"
  icmp:
    prober: icmp

--------------------------------------------------------------------------------

- Start blackbox_exporter (it listens on port 9115 by default)

nohup /usr/local/blackbox_exporter-0.18.0.linux-amd64/blackbox_exporter --config.file=/usr/local/blackbox_exporter-0.18.0.linux-amd64/blackbox.yml &

- Prometheus HTTP probe configuration

vi /usr/local/Prometheus/prometheus.yml

---------------------------------------------------------------------------

- job_name: 'blackbox'
  metrics_path: /probe
  params:
    module: [http_2xx]   # module name defined in blackbox.yml
  static_configs:
    - targets:
      - http://baidu.com                       # http
      - https://baidu.com                      # https
      - http://182.92.219.202:8761/actuator/   # a service on port 8761
      - http://182.92.219.202:8543             # nothing listens on port 8543: tests the failure case
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: xxxx:9115   # host running blackbox_exporter (default port 9115)

-----------------------------------------------------------------------
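The three relabel_configs steps above make Prometheus call blackbox_exporter with the real target passed as a URL parameter, while the `instance` label still shows the probed site. The sketch below replays those steps for one target in Python. `127.0.0.1:9115` is a stand-in for the `xxxx:9115` placeholder, and `probe_url` builds the URL Prometheus would then request.

```python
from urllib.parse import urlencode


def blackbox_relabel(address, exporter):
    """Apply the job's three relabel_configs steps to a single target."""
    labels = {"__address__": address}
    # 1) copy the original target into the ?target= URL parameter
    labels["__param_target"] = labels["__address__"]
    # 2) keep the probed target visible as the instance label
    labels["instance"] = labels["__param_target"]
    # 3) send the actual scrape to the blackbox exporter instead
    labels["__address__"] = exporter
    return labels


def probe_url(labels, module="http_2xx", metrics_path="/probe"):
    """Combine the job's params with any __param_* labels into the scrape URL."""
    params = {"module": module}
    for name, value in labels.items():
        if name.startswith("__param_"):
            params[name[len("__param_"):]] = value
    return f"http://{labels['__address__']}{metrics_path}?{urlencode(sorted(params.items()))}"
```

Requesting that URL by hand, for example with curl, is a quick way to check a single probe and its `probe_success` value before adding the target to Prometheus.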

- Prometheus TCP probe configuration

vi /usr/local/Prometheus/prometheus.yml

-------------------------------------------------------------------------

- job_name: blackbox_tcp
  metrics_path: /probe
  params:
    module: [tcp_connect]
  static_configs:
    - targets:
      - 192.168.1.2:280
      - 192.168.1.2:7013
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: 192.168.1.99:9115  # Blackbox exporter.

-------------------------------------------------------------------------

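- Restart Prometheus

Option 1: hot reload. The /-/reload endpoint only works if Prometheus was started with the --web.enable-lifecycle flag. Without that flag, sending the process a SIGHUP (kill -HUP <pid>) also reloads the configuration.

curl -X POST http://xxxx:9090/-/reload

Option 2: find the Prometheus process with ps -ef|grep prometheus and kill it, then start the service again with the command below

# Restart Prometheus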

nohup /usr/local/Prometheus/prometheus --config.file=/usr/local/Prometheus/prometheus.yml &

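- Verify

Whether ICMP, TCP, HTTP and POST probes are working can be checked with the probe_success metric:

probe_success == 0 ## probe failed (target unreachable)

probe_success == 1 ## probe succeeded

Alerts usually check whether this metric equals 0 and fire when it does.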

- Configure the alert rule

[root@iZ2zejaz33icbod2k4cvy6Z rule]# vi /usr/local/Prometheus/conf/rule/http.yml

groups:
- name: http
  rules:
  - alert: XxxDomainProbeFailed
    expr: probe_success == 0
    for: 5m
    labels:
      severity: "error"
    annotations:
      summary: "xxx domain probe failed (probe_success == 0)"

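- Configure Grafana dashboards

blackbox_exporter dashboard JSON template: https://grafana.com/grafana/dashboards/9965/revisions

Import the JSON template

Note: the DataSource selected for each panel must match the one chosen when importing the JSON template, otherwise the panels show no data.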

5.3 Linux server monitoring

5.3.1 Install the Linux exporter node_exporter

- Download node_exporter

Download: node_exporter-1.0.1.linux-amd64.tar.gz

- Install node_exporter on the machine to be monitored

tar -xvf node_exporter-1.0.1.linux-amd64.tar.gz -C /usr/local/

- Start node_exporter (it listens on port 9100 by default)

nohup /usr/local/node_exporter-1.0.1.linux-amd64/node_exporter &

- Register the target in Consul

# Run on the Consul server:

curl -X PUT -d '{"id": "182.92.219.202_linux","name": "182.92.219.202_linux","address": "182.92.219.202","port": 9100,"tags": ["service"],"checks": [{"http": "http://182.92.219.202:9100/","interval": "5s"}]}' http://182.92.219.202:8502/v1/agent/service/register

5.3.2 Visualize the data in Grafana

5.4 Redis monitoring

5.4.1 Install redis_exporter

- Download redis_exporter

Download: https://github.com/oliver006/redis_exporter/releases/download/v1.16.0/redis_exporter-v1.16.0.linux-amd64.tar.gz

- Install redis_exporter

tar -xvf redis_exporter-v1.16.0.linux-amd64.tar.gz -C /usr/local/

- Start redis_exporter (it listens on port 9121 by default)

# Specify the Redis address and password

nohup /usr/local/redis_exporter-v1.16.0.linux-amd64/redis_exporter -redis.addr 182.92.219.202:6379 -redis.password 123456 &

- Register the target in Consul (run on the Consul host)

# Run on the Consul server:

curl -X PUT -d '{"id": "182.92.219.202_redis","name": "182.92.219.202_redis","address": "182.92.219.202","port": 9121,"tags": ["service"],"checks": [{"http": "http://182.92.219.202:9121/","interval": "5s"}]}' http://182.92.219.202:8502/v1/agent/service/register

5.4.2 Visualize the data in Grafana

1) Manage ==> Import ==> Load to import the dashboard template

2) View the monitoring data

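Note: the Memory Usage panel always shows N/A. redis_memory_max_bytes reports Redis's maxmemory setting, which is 0 when no memory limit is configured, so redis_memory_used_bytes / redis_memory_max_bytes does not give a meaningful result.

Fix: replace redis_memory_max_bytes with the server's actual memory size, changing the formula to:

redis_memory_used_bytes{instance=~"$instance"} / 8193428

Replace 8193428 with your own server's memory size in bytes. MemTotal in /proc/meminfo is reported in kB, so multiply it by 1024 before using it here.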
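A quick arithmetic check shows why the unit matters. The sketch assumes 8193428 is a MemTotal value copied from /proc/meminfo (in kB) and that Redis is using 100 MiB. Dividing bytes by kB inflates the ratio 1024-fold.

```python
def to_bytes(value, unit):
    """Convert a memory size to bytes; /proc/meminfo reports MemTotal in kB (1024 bytes)."""
    factors = {"bytes": 1, "kB": 1024}
    return value * factors[unit]


def redis_memory_ratio(used_bytes, total, unit="bytes"):
    """redis_memory_used_bytes divided by the host's total memory, both in bytes."""
    return used_bytes / to_bytes(total, unit)


used = 100 * 1024 * 1024  # 100 MiB, an assumed example value
print(redis_memory_ratio(used, 8193428, unit="kB"))  # about 0.0125, i.e. 1.25 %
print(redis_memory_ratio(used, 8193428))             # about 12.8: kB treated as bytes
```

If the first result is what you expect, write the divisor in the panel formula as a byte count, for example `8193428 * 1024`.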

5.5 Spring Boot application monitoring

5.5.1 Expose the application's metrics

- Add dependencies to pom.xml

<dependency>

<groupId>io.micrometer</groupId>

<artifactId>micrometer-registry-prometheus</artifactId>

<version>1.6.3</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

<version>2.4.2</version>

</dependency>

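- Add the following bean to the application's main class

// Imports needed by the bean (Spring Boot 2.x actuator + Micrometer)
import io.micrometer.core.instrument.MeterRegistry;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.boot.actuate.autoconfigure.metrics.MeterRegistryCustomizer;
import org.springframework.context.annotation.Bean;

// Adds an "application" tag (from spring.application.name) to every metric.
// management.metrics.tags.application in application.yml adds the same tag; either one is enough.
@Bean
MeterRegistryCustomizer<MeterRegistry> configurer(
        @Value("${spring.application.name}") String applicationName) {
    return (registry) -> registry.config().commonTags("application", applicationName);
}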

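- Add the following to application.yml

management:
  endpoints:
    web:                        # expose endpoints over HTTP
      exposure:
        include: 'prometheus'
      path-mapping:
        prometheus: metrics     # map the prometheus endpoint to "metrics"
      base-path: /              # base path; combined with the mapping, metrics are served at /metrics, which keeps the Consul registration uniform
    enabled-by-default: true    # enable endpoints by default
  metrics:
    tags:
      application: ${spring.application.name}   # tag added to every exported metric
  health:
    redis:
      enabled: false            # if the project does not use Redis, disable the Redis health check, otherwise startup reports errors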

5.5.2 Add the application to Prometheus

- Register the target in Consul (run on the Consul host)

# Replace the parameters to suit your environment, e.g. application.name: proDemo

curl -X PUT -d '{"id": "182.92.219.202_{application.name}","name": "182.92.219.202_{application.name}","address": "182.92.219.202","port": 30240,"tags": ["service"],"checks": [{"http": "http://182.92.219.202:30240/metrics","interval": "5s"}]}' http://182.92.219.202:8502/v1/agent/service/register

5.5.3 Configure Grafana dashboards

5.6 Elasticsearch monitoring

5.6.1 Install the Elasticsearch exporter (on the Elasticsearch host)

Download elasticsearch_exporter: https://github.com/justwatchcom/elasticsearch_exporter/releases/download/v1.1.0/elasticsearch_exporter-1.1.0.linux-amd64.tar.gz

- Extract the exporter

tar -zxvf elasticsearch_exporter-1.1.0.linux-amd64.tar.gz

mv /opt/resources/elasticsearch_exporter-1.1.0.linux-amd64 /usr/local/elasticsearch_exporter

- Start elasticsearch_exporter (it listens on port 9114 by default)

nohup /usr/local/elasticsearch_exporter/elasticsearch_exporter --es.uri http://182.92.219.202:9200 &

- Register the target in Consul (run on the Consul host)

# Run on the Consul server:

curl -X PUT -d '{"id": "182.92.219.202_elasticsearch","name": "182.92.219.202_elasticsearch","address": "182.92.219.202","port": 9114,"tags": ["service"],"checks": [{"http": "http://182.92.219.202:9114/","interval": "5s"}]}' http://182.92.219.202:8502/v1/agent/service/register

5.6.2 Configure Grafana dashboards

5.7 nginx monitoring

5.7.1 Install the nginx-module-vts and geoip modules

- Prepare git and the nginx build environment

yum install git

yum -y install make zlib zlib-devel gcc-c++ libtool openssl openssl-devel

yum -y install epel-release geoip-devel

ldd /usr/local/nginx/sbin/nginx |grep libGeoIP

- Download the nginx-module-vts source

cd /usr/local

git clone https://github.com/vozlt/nginx-module-vts.git

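- Rebuild nginx with the required modules

# Adjust the nginx source directory to your environment, and also pass the arguments printed by nginx -V so existing modules are kept

cd /usr/local/nginx-1.16.1

./configure --add-module=/usr/local/nginx-module-vts --with-http_realip_module --with-http_geoip_module

make

# nginx is already installed, so skip make install

#make install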

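- Replace the nginx binary

# Back up nginx so you can roll back if something goes wrong

cp /usr/local/nginx/sbin/nginx /usr/local/nginx/sbin/nginx.back

# Stop nginx first, otherwise the binary cannot be overwritten

ps -ef|grep nginx

kill -9 pid

# Replace the binary, then start nginx again

cp /usr/local/nginx-1.16.1/objs/nginx /usr/local/nginx/sbin/nginx

/usr/local/nginx/sbin/nginx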

- Configure the nginx-module-vts module

vi /usr/local/nginx/conf/nginx.conf

---------------------------------------------------------------------------------------------------------------------------

# Add inside the http block:
vhost_traffic_status_zone;
vhost_traffic_status_filter_by_host on;

# In the server block listening on port 80, add a status endpoint:
location /status {
    vhost_traffic_status_display;
    vhost_traffic_status_display_format html;
}

---------------------------------------------------------------------------------------------------------------------------

vim /usr/local/nginx/conf/nginx.conf

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

http {

...

# Add the GeoIP configuration

geoip_country /usr/share/GeoIP/GeoIP.dat;

...

}

------------------------------------------------------------------------------------------------------------------------------------------------

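- Configure custom metrics

The setup above only exposes the default metrics. In production you may also want request counts per URI, per-client-IP access (a large number of requests from one IP may indicate an attack), request counts per user agent for analysis, and so on. The vts module's vhost_traffic_status_filter_by_set_key directive lets you define such metrics. Add these directives to the corresponding server configuration.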

$ vim /usr/local/nginx/conf/nginx.conf

-------------------------------------------------------------------------

http {
    ...
    vhost_traffic_status_zone;
    vhost_traffic_status_filter_by_host on;
    geoip_country /usr/share/GeoIP/GeoIP.dat;
    vhost_traffic_status_filter_by_set_key $uri uri::$server_name;                      # requests per URI
    vhost_traffic_status_filter_by_set_key $geoip_country_code country::$server_name;  # requests per country/region
    vhost_traffic_status_filter_by_set_key $status $server_name;                        # HTTP status code counts
    vhost_traffic_status_filter_by_set_key $upstream_addr upstream::backend;            # per-backend (upstream) counts
    vhost_traffic_status_filter_by_set_key $remote_port client::ports::$server_name;    # requests per client port
    vhost_traffic_status_filter_by_set_key $remote_addr client::addr::$server_name;     # requests per client IP

    server {
        listen 80;
        server_name localhost;

        location /status {
            vhost_traffic_status_display;
            vhost_traffic_status_display_format html;
        }

        location ~ ^/storage/(.+)/.*$ {
            set $volume $1;
            vhost_traffic_status_filter_by_set_key $volume storage::$server_name;  # requests per storage path
        }
    }
}

5.7.2 Install the nginx exporter (on the nginx host)

Download nginx-vts-exporter: https://github.com/hnlq715/nginx-vts-exporter/releases/download/v0.9.1/nginx-vts-exporter-0.9.1.linux-amd64.tar.gz

- Extract the exporter

tar -zxvf nginx-vts-exporter-0.9.1.linux-amd64.tar.gz

mv /opt/resources/nginx-vts-exporter-0.9.1.linux-amd64 /usr/local/nginx-vts-exporter

- Start nginx-vts-exporter (it listens on port 9913 by default)

nohup /usr/local/nginx-vts-exporter/nginx-vts-exporter -nginx.scrape_timeout 10 -nginx.scrape_uri http://182.92.219.202/status/format/json &

- Register the target in Consul (run on the Consul host)

# Run the following command:

curl -X PUT -d '{"id": "182.92.219.202_nginx","name": "182.92.219.202_nginx","address": "182.92.219.202","port": 9913,"tags": ["service"],"checks": [{"http": "http://182.92.219.202:9913/","interval": "5s"}]}' http://192.168.100.175:8502/v1/agent/service/register