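Last time we created a new project in Android Studio and integrated the tools needed for live stream pushing:

- rtmpdump: stream pushing

- x264: video encoding

- faac: audio encoding

Article link: NDK--Android Studio中直播推流框架的搭建

Live stream pushing also needs a streaming media server. I use a virtual machine here; a real server works just as well if you have one. For how to set up the streaming server, see my earlier article: Nginx流媒体服务器搭建

With the groundwork done, today we implement live stream pushing.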

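1. The UI is very simple. The layout file contains a button to start pushing, a button to stop pushing, a button to switch between the front and back cameras, and a SurfaceView that shows the camera preview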

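2. Define the native methods, which receive the camera video data and the recorded audio data from Java, along with the audio and video parameters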

public class NativePush {

private static final String TAG = NativePush.class.getSimpleName();

static {

System.loadLibrary("native-lib");

}

private LiveStateChangeListener mListener;

public void setLiveStateChangeListener(LiveStateChangeListener listener) {

mListener = listener;

}

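    /**
     * Callback from the native layer.
     *
     * @param code -96: failed to configure the audio encoder
     *             -97: failed to open the audio encoder
     *             -98: failed to open the video encoder
     *             -99: failed to establish the RTMP connection
     *             -100: RTMP disconnected
     */
    public void onPostNativeError(int code) {
        Log.e(TAG, "onPostNativeError:" + code);
        // Stop pushing
        stopPush();
        if (null != mListener) {
            mListener.onErrorPusher(code);
        }
    }

    /**
     * Callback from the native layer, invoked when the push connection
     * is established and when the push thread exits.
     *
     * @param state 100: connection established, 101: push thread exited
     */
    public void onPostNativeState(int state) {
        if (null == mListener) {
            return;
        }
        if (state == 100) {
            mListener.onStartPusher();
        } else if (state == 101) {
            mListener.onStopPusher();
        }
    }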

    // Set the video parameters
    public native void setVideoParams(int width, int height, int bitrate, int fps);

    // Set the audio parameters
    public native void setAudioParams(int sample, int channel);

    // Push a video frame
    public native void pushVideo(byte[] buffer);

    // Push an audio frame
    public native void pushAudio(byte[] buffer, int size);

    // Start the push thread
    public native void startPush(String url);

    // Stop pushing
    public native void stopPush();

    // Get the number of input samples the audio encoder expects per call
    public native int getInputSamples();

}

3. Define parameter classes for video and audio, so they can be managed in one place later

package com.aruba.rtmppushapplication.push.params;

/**

* Video parameters

* Created by aruba on 2021/1/12.

*/

public class VideoParams {

// Frame rate (fps)

private int fps;

private int videoWidth;

private int videoHeight;

// Bitrate

private int bitrate;

private int cameraId;

private VideoParams(int videoWidth, int videoHeight, int cameraId) {

this.videoWidth = videoWidth;

this.videoHeight = videoHeight;

this.cameraId = cameraId;

}

public int getFps() {

return fps;

}

public void setFps(int fps) {

this.fps = fps;

}

public int getVideoWidth() {

return videoWidth;

}

public void setVideoWidth(int videoWidth) {

this.videoWidth = videoWidth;

}

public int getVideoHeight() {

return videoHeight;

}

public void setVideoHeight(int videoHeight) {

this.videoHeight = videoHeight;

}

public int getBitrate() {

return bitrate;

}

public void setBitrate(int bitrate) {

this.bitrate = bitrate;

}

public int getCameraId() {

return cameraId;

}

public void setCameraId(int cameraId) {

this.cameraId = cameraId;

}

public static class Builder {

private int fps = 25;

private int videoWidth = -1;

private int videoHeight = -1;

private int bitrate = 480000;

private int cameraId = -1;

public Builder fps(int fps) {

this.fps = fps;

return this;

}

public Builder videoSize(int videoWidth, int videoHeight) {

this.videoHeight = videoHeight;

this.videoWidth = videoWidth;

return this;

}

public Builder bitrate(int bitrate) {

this.bitrate = bitrate;

return this;

}

public Builder cameraId(int cameraId) {

this.cameraId = cameraId;

return this;

}

public VideoParams build() {

if (videoWidth == -1 || videoHeight == -1 || cameraId == -1) {

throw new RuntimeException("videoWidth,videoHeight,cameraId must be config");

}

VideoParams videoParams = new VideoParams(videoWidth, videoHeight, cameraId);

videoParams.setBitrate(bitrate);

videoParams.setFps(fps);

return videoParams;

}

}

}

Video needs the following parameters: width and height, fps, bitrate, and the camera id (front or back)

package com.aruba.rtmppushapplication.push.params;

/**

* Audio parameters

* Created by aruba on 2021/1/12.

*/

public class AudioParams {

    // Sample rate
    private int sampleRate;

    // Number of channels
    private int channel;

private AudioParams() {

}

public int getSampleRate() {

return sampleRate;

}

public void setSampleRate(int sampleRate) {

this.sampleRate = sampleRate;

}

public int getChannel() {

return channel;

}

public void setChannel(int channel) {

this.channel = channel;

}

public static class Builder {

        // Sample rate
        private int sampleRate = 44100;

        // Number of channels
        private int channel = 1;

public Builder sampleRate(int sampleRate) {

this.sampleRate = sampleRate;

return this;

}

public Builder channel(int channel) {

this.channel = channel;

return this;

}

public AudioParams build(){

AudioParams audioParams = new AudioParams();

audioParams.setSampleRate(sampleRate);

audioParams.setChannel(channel);

return audioParams;

}

}

}

The audio parameters are the sample rate and the number of channels. We always use 16-bit samples

4. Define a common interface that the audio and video push implementations share

package com.aruba.rtmppushapplication.push;

/**

* Created by aruba on 2020/12/30.

*/

public interface IPush {

    /**
     * Initialize
     */
    void init();

    /**
     * Start pushing
     */
    int startPush();

    /**
     * Stop pushing
     */
    void stopPush();

}

5. Define a manager class that coordinates audio and video pushing

package com.aruba.rtmppushapplication.push;

import android.app.Activity;

import android.hardware.Camera;

import android.view.SurfaceHolder;

import com.aruba.rtmppushapplication.push.natives.LiveStateChangeListener;

import com.aruba.rtmppushapplication.push.natives.NativePush;

import com.aruba.rtmppushapplication.push.params.AudioParams;

import com.aruba.rtmppushapplication.push.params.VideoParams;

import java.lang.ref.WeakReference;

/**

* Helper class for live stream pushing

* Created by aruba on 2021/1/12.

*/

public class PushHelper {

    // Surface that displays the camera preview
    private SurfaceHolder surfaceHolder;

    // Audio push
    private AudioPush audioPush;

    // Video push
    private VideoPush videoPush;

    private WeakReference<Activity> activity;

    // Native-layer object
    private NativePush nativePush;

public PushHelper(Activity activity, SurfaceHolder surfaceHolder) {

this.activity = new WeakReference<>(activity);

this.surfaceHolder = surfaceHolder;

init();

}

/**

* Initialize

*/

private void init() {

nativePush = new NativePush();

// Set the callbacks

nativePush.setLiveStateChangeListener(new LiveStateChangeListener() {

@Override

public void onErrorPusher(int code) {

videoPush.stopPush();

audioPush.stopPush();

}

@Override

public void onStartPusher() {

// Start pushing video and audio only after the RTMP connection is open

videoPush.startPush();

audioPush.startPush();

}

@Override

public void onStopPusher() {

videoPush.stopPush();

audioPush.stopPush();

}

});

// Initialize the video parameters

VideoParams videoParams = new VideoParams.Builder()

.videoSize(1920, 1080)

.bitrate(960000)

.cameraId(Camera.CameraInfo.CAMERA_FACING_BACK)

.build();

videoPush = new VideoPush(activity.get(), videoParams, surfaceHolder);

videoPush.setNativePush(nativePush);

// Initialize the audio parameters

AudioParams audioParams = new AudioParams.Builder()

.channel(1)

.sampleRate(44100)

.build();

audioPush = new AudioPush(audioParams);

audioPush.setNativePush(nativePush);

videoPush.init();

audioPush.init();

}

    /**
     * Start pushing
     *
     * @param url server address
     */
    public void startPush(String url) {
        nativePush.startPush(url);
    }

    /**
     * Stop pushing
     */
    public void stopPush() {
        nativePush.stopPush();
    }

    /**
     * Switch between the front and back cameras
     */
    public void swtichCamera() {
        if (videoPush != null)
            videoPush.swtichCamera();
    }

}

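At this point the basic framework is in place. Next we capture the camera data and the microphone data and pass them to the native layer

1. Capture camera data and pass it to the native layer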

package com.aruba.rtmppushapplication.push;

import android.app.Activity;

import android.graphics.ImageFormat;

import android.hardware.Camera;

import android.util.Log;

import android.view.Surface;

import android.view.SurfaceHolder;

import com.aruba.rtmppushapplication.push.natives.NativePush;

import com.aruba.rtmppushapplication.push.params.VideoParams;

import java.io.IOException;

import java.lang.ref.WeakReference;

import java.util.Iterator;

import java.util.List;

/**

* Java counterpart of the native video push

* Created by aruba on 2021/1/12.

*/

public class VideoPush implements IPush, Camera.PreviewCallback {

private final static String TAG = VideoPush.class.getSimpleName();

private VideoParams videoParams;

    // Camera
    private Camera camera;

    // Displays the camera preview
    private SurfaceHolder surfaceHolder;

    // Buffer for camera frame data
    private byte[] buffers;

    private boolean isSurfaceCreate;

    private NativePush nativePush;

    private WeakReference<Activity> mActivity;

    private int screen;

    private byte[] raw;

private final static int SCREEN_PORTRAIT = 0;

private final static int SCREEN_LANDSCAPE_LEFT = 90;

private final static int SCREEN_LANDSCAPE_RIGHT = 270;

private boolean isPushing;

public VideoPush(Activity activity, VideoParams videoParams, SurfaceHolder surfaceHolder) {

this.mActivity = new WeakReference<>(activity);

this.videoParams = videoParams;

this.surfaceHolder = surfaceHolder;

}

@Override

public void init() {

if (videoParams == null) {

throw new NullPointerException("videoParams is null");

}

surfaceHolder.addCallback(new SurfaceHolder.Callback() {

@Override

public void surfaceCreated(SurfaceHolder surfaceHolder) {

isSurfaceCreate = true;

resetPreview(surfaceHolder);

}

@Override

public void surfaceChanged(SurfaceHolder surfaceHolder, int i, int i1, int i2) {

// stopPreview();

// startPreview();

}

@Override

public void surfaceDestroyed(SurfaceHolder surfaceHolder) {

isSurfaceCreate = false;

}

});

}

/**

* Start the preview

*/

private synchronized void startPreview() {

try {

camera = Camera.open(videoParams.getCameraId());

Camera.Parameters parameters = camera.getParameters();

parameters.setPreviewFormat(ImageFormat.NV21);//yuv

setPreviewSize(parameters);

setPreviewOrientation(parameters);

// parameters.setPreviewSize(videoParams.getVideoWidth(), videoParams.getVideoHeight());

camera.setParameters(parameters);

if (isSurfaceCreate)

camera.setPreviewDisplay(surfaceHolder);

            // Allocate the buffers: width * height * bits per pixel / 8.
            // getBitsPerPixel() returns bits (12 for NV21), not bytes.
            int bitsPerPixel = ImageFormat.getBitsPerPixel(ImageFormat.NV21);
            int frameSize = videoParams.getVideoWidth() * videoParams.getVideoHeight()
                    * bitsPerPixel / 8;
            buffers = new byte[frameSize];
            raw = new byte[frameSize];

camera.addCallbackBuffer(buffers);

camera.setPreviewCallbackWithBuffer(this);

camera.startPreview();

} catch (IOException e) {

e.printStackTrace();

}

}

@Override

public void onPreviewFrame(byte[] bytes, Camera camera) {

if (isPushing) {

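            switch (screen) {// Rotate the pixel data according to the screen orientation
                case SCREEN_PORTRAIT:// Portrait
                    portraitData2Raw(buffers);
                    break;
                case SCREEN_LANDSCAPE_LEFT:
                    raw = buffers;
                    break;
                case SCREEN_LANDSCAPE_RIGHT:// Landscape, top of the device on the right
                    landscapeData2Raw(buffers);
                    break;
            }
            if (camera != null) {
                // This must be called again for every frame, otherwise onPreviewFrame
                // is only called once. Note: bytes is the same array as buffers
                camera.addCallbackBuffer(bytes);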

}

nativePush.pushVideo(raw);

} else {

stopPreview();

}

// Log.i(TAG, "Got video data");

}

private synchronized void resetPreview(SurfaceHolder surfaceHolder) {

if (camera != null) {

try {

camera.setPreviewDisplay(surfaceHolder);

} catch (IOException e) {

e.printStackTrace();

}

}

}

private synchronized void stopPreview() {

if (camera != null) {

camera.stopPreview();

camera.release();

camera = null;

}

}

@Override

public int startPush() {

synchronized (TAG) {

if (isPushing) {

return -1;

}

isPushing = true;

}

startPreview();

return 0;

}

@Override

public void stopPush() {

synchronized (TAG) {

isPushing = false;

}

}

public void swtichCamera() {

if (videoParams.getCameraId() == Camera.CameraInfo.CAMERA_FACING_BACK) {

videoParams.setCameraId(Camera.CameraInfo.CAMERA_FACING_FRONT);

} else {

videoParams.setCameraId(Camera.CameraInfo.CAMERA_FACING_BACK);

}

stopPreview();

startPreview();

}

public void setVideoParams(VideoParams videoParams) {

this.videoParams = videoParams;

}

public VideoParams getVideoParams() {

return videoParams;

}

public void setNativePush(NativePush nativePush) {

this.nativePush = nativePush;

}

/**

* Query the preview sizes the camera supports and pick the one closest to the requested size

*

* @param parameters

*/

private void setPreviewSize(Camera.Parameters parameters) {

        List<Integer> supportedPreviewFormats = parameters.getSupportedPreviewFormats();
        for (Integer integer : supportedPreviewFormats) {
            System.out.println("Supported format: " + integer);
        }
        List<Camera.Size> supportedPreviewSizes = parameters.getSupportedPreviewSizes();
        Camera.Size size = supportedPreviewSizes.get(0);
        Log.d(TAG, "Supported size " + size.width + "x" + size.height);
        int m = Math.abs(size.height * size.width - videoParams.getVideoHeight() * videoParams.getVideoWidth());
        supportedPreviewSizes.remove(0);
        Iterator<Camera.Size> iterator = supportedPreviewSizes.iterator();
        while (iterator.hasNext()) {
            Camera.Size next = iterator.next();
            Log.d(TAG, "Supported size " + next.width + "x" + next.height);

int n = Math.abs(next.height * next.width - videoParams.getVideoHeight() * videoParams.getVideoWidth());

if (n < m) {

m = n;

size = next;

}

}

videoParams.setVideoHeight(size.height);

videoParams.setVideoWidth(size.width);

parameters.setPreviewSize(videoParams.getVideoWidth(), videoParams.getVideoHeight());

Log.d(TAG, "Preview size width:" + size.width + " height:" + size.height);

}

/**

* Determine the screen orientation

*

* @param parameters

*/

private void setPreviewOrientation(Camera.Parameters parameters) {

if (mActivity.get() == null) return;

Camera.CameraInfo info = new Camera.CameraInfo();

Camera.getCameraInfo(videoParams.getCameraId(), info);

int rotation = mActivity.get().getWindowManager().getDefaultDisplay().getRotation();

screen = 0;

switch (rotation) {

case Surface.ROTATION_0:

screen = SCREEN_PORTRAIT;

nativePush.setVideoParams(videoParams.getVideoHeight(), videoParams.getVideoWidth(), videoParams.getBitrate(), videoParams.getFps());

break;

case Surface.ROTATION_90: // Landscape, top of the device on the left (home button on the right)

screen = SCREEN_LANDSCAPE_LEFT;

nativePush.setVideoParams(videoParams.getVideoWidth(), videoParams.getVideoHeight(), videoParams.getBitrate(), videoParams.getFps());

break;

case Surface.ROTATION_180:

screen = 180;

break;

case Surface.ROTATION_270:// Landscape, top of the device on the right

screen = SCREEN_LANDSCAPE_RIGHT;

nativePush.setVideoParams(videoParams.getVideoWidth(), videoParams.getVideoHeight(), videoParams.getBitrate(), videoParams.getFps());

break;

}

int result;

if (info.facing == Camera.CameraInfo.CAMERA_FACING_FRONT) {

result = (info.orientation + screen) % 360;

result = (360 - result) % 360; // compensate the mirror

} else { // back-facing

result = (info.orientation - screen + 360) % 360;

}

camera.setDisplayOrientation(result);

}

private void landscapeData2Raw(byte[] data) {

int width = videoParams.getVideoWidth();

int height = videoParams.getVideoHeight();

int y_len = width * height;

int k = 0;

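        // Insert the Y data into raw in reverse order
        for (int i = y_len - 1; i > -1; i--) {
            raw[k] = data[i];
            k++;
        }
        // v1 u1 v2 u2
        // v3 u3 v4 u4
        // needs to become:
        // v4 u4 v3 u3
        // v2 u2 v1 u1
        // Index of the last byte of the NV21 frame. It is computed from the frame
        // size rather than data.length, so it stays correct if the buffer is
        // larger than one frame
        int maxpos = y_len + (y_len >> 1) - 1;
        int uv_len = y_len >> 2; // number of VU pairs (4:2:0)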

for (int i = 0; i < uv_len; i++) {

int pos = i << 1;

raw[y_len + i * 2] = data[maxpos - pos - 1];

raw[y_len + i * 2 + 1] = data[maxpos - pos];

}

}

private void portraitData2Raw(byte[] data) {

// if (mContext.getResources().getConfiguration().orientation !=

// Configuration.ORIENTATION_PORTRAIT) {

// raw = data;

// return;

// }

int width = videoParams.getVideoWidth(), height = videoParams.getVideoHeight();

int y_len = width * height;

int uvHeight = height >> 1; // the UV plane has half as many rows as the Y plane

int k = 0;

if (videoParams.getCameraId() == Camera.CameraInfo.CAMERA_FACING_BACK) {

for (int j = 0; j < width; j++) {

for (int i = height - 1; i >= 0; i--) {

raw[k++] = data[width * i + j];

}

}

for (int j = 0; j < width; j += 2) {

for (int i = uvHeight - 1; i >= 0; i--) {

raw[k++] = data[y_len + width * i + j];

raw[k++] = data[y_len + width * i + j + 1];

}

}

} else {

for (int i = 0; i < width; i++) {

int nPos = width - 1;

for (int j = 0; j < height; j++) {

raw[k] = data[nPos - i];

k++;

nPos += width;

}

}

for (int i = 0; i < width; i += 2) {

int nPos = y_len + width - 1;

for (int j = 0; j < uvHeight; j++) {

raw[k] = data[nPos - i - 1];

raw[k + 1] = data[nPos - i];

k += 2;

nPos += width;

}

}

}

}

}

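Once the RTMP connection is established, the native layer calls back onPostNativeState, which eventually leads PushHelper to call VideoPush's startPush method. That method opens the camera preview and passes the parameters to the native layer. From then on, onPreviewFrame is called repeatedly and hands each camera frame to the native layer.

Note: because of how the camera is mounted on Android phones, we need to rotate the camera data according to the screen orientation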
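To make the rotation concrete, here is a minimal C++ sketch of the same index arithmetic that the back-camera branch of portraitData2Raw uses: it rotates an NV21 frame 90 degrees clockwise. The function name rotateNv21Clockwise is made up for illustration and is not part of the project; the sketch assumes even width and height.

```cpp
#include <cstdint>
#include <vector>

// Rotates an NV21 frame of size width x height by 90 degrees clockwise.
// The result is an NV21 frame of size height x width.
// Illustrative helper, not part of the article's project.
std::vector<uint8_t> rotateNv21Clockwise(const std::vector<uint8_t> &src,
                                         int width, int height) {
    const int yLen = width * height;
    std::vector<uint8_t> dst(src.size());
    int k = 0;
    // Y plane: each source column, read bottom to top, becomes a destination row.
    for (int col = 0; col < width; ++col) {
        for (int row = height - 1; row >= 0; --row) {
            dst[k++] = src[width * row + col];
        }
    }
    // Interleaved VU plane: half as many rows, and columns move as (V, U) pairs.
    const int uvHeight = height / 2;
    for (int col = 0; col < width; col += 2) {
        for (int row = uvHeight - 1; row >= 0; --row) {
            dst[k++] = src[yLen + width * row + col];
            dst[k++] = src[yLen + width * row + col + 1];
        }
    }
    return dst;
}
```

After the rotation the frame is height x width, which is why the portrait case passes the height as the width to setVideoParams.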

2. Capture audio data and pass it to the native layer

package com.aruba.rtmppushapplication.push;

import android.media.AudioFormat;

import android.media.AudioRecord;

import android.media.MediaRecorder;

import com.aruba.rtmppushapplication.push.natives.NativePush;

import com.aruba.rtmppushapplication.push.params.AudioParams;

/**

* Java counterpart of the native audio push

* Created by aruba on 2021/1/12.

*/

public class AudioPush implements IPush {

private final static String tag = AudioPush.class.getSimpleName();

private AudioParams audioParams;

// Audio recorder

private AudioRecord audioRecord;

private int bufferSize;

private RecordThread recordThread;

private NativePush nativePush;

public AudioPush(AudioParams audioParams) {

this.audioParams = audioParams;

}

@Override

public void init() {

if (audioParams == null) {

throw new NullPointerException("audioParams is null");

}

int channel = audioParams.getChannel() == 1 ?

AudioFormat.CHANNEL_IN_MONO : AudioFormat.CHANNEL_IN_STEREO;

// Minimum buffer size

bufferSize = AudioRecord.getMinBufferSize(audioParams.getSampleRate(),

channel, AudioFormat.ENCODING_PCM_16BIT);

audioRecord = new AudioRecord(MediaRecorder.AudioSource.MIC,// microphone

audioParams.getSampleRate(),

channel,

AudioFormat.ENCODING_PCM_16BIT,

bufferSize

);

}

@Override

public int startPush() {

if (recordThread != null && recordThread.isPushing) {

return -1;

}

stopRecord();

recordThread = new RecordThread();

recordThread.start();

return 0;

}

@Override

public void stopPush() {

stopRecord();

}

private synchronized void stopRecord() {

if (recordThread != null) {

recordThread.isPushing = false;

}

}

public void setAudioParams(AudioParams audioParams) {

this.audioParams = audioParams;

}

public AudioParams getAudioParams() {

return audioParams;

}

public void setNativePush(NativePush nativePush) {

this.nativePush = nativePush;

}

class RecordThread extends Thread {

// volatile: written by the caller's thread, read by the recording thread
private volatile boolean isPushing = true;

@Override

public void run() {

audioRecord.startRecording();

nativePush.setAudioParams(audioParams.getSampleRate(), audioRecord.getChannelCount());

while (isPushing) {

                // Number of samples * 2 bytes (16 bits per sample)
                byte[] buffer = new byte[nativePush.getInputSamples() * 2];
                int len = audioRecord.read(buffer, 0, buffer.length);
                if (len > 0) {
                    // Hand the data over to the native layer
                    // Log.i(tag, "Got audio data");

nativePush.pushAudio(buffer, len);

}

}

audioRecord.stop();

}

}

}

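After initializing AudioRecord, we start a thread that keeps reading data and passes it to the native layer

Note: the amount of data to read each time must be obtained from the faac encoder. The bufferSize used to initialize AudioRecord cannot be used directly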
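The read size follows from simple arithmetic: the encoder reports how many samples it wants per call, and each 16-bit sample takes 2 bytes. A minimal sketch, where pcmBytesForSamples is a hypothetical helper and 1024 below is only an example value (the real number comes back from faacEncOpen at runtime):

```cpp
#include <cstddef>

// Bytes needed to hold `inputSamples` PCM samples of `bitsPerSample` bits each.
// Hypothetical helper for illustration only.
std::size_t pcmBytesForSamples(unsigned long inputSamples, int bitsPerSample) {
    return static_cast<std::size_t>(inputSamples) * (bitsPerSample / 8);
}
```

For example, an encoder that asks for 1024 samples per call needs a 2048-byte buffer of 16-bit PCM, which matches the `getInputSamples() * 2` expression in RecordThread.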

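That completes the Java layer. Next comes the main event: writing the native-layer code.

1. On the Java side, the first step is calling the native method that starts the push thread: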

pthread_t *pid;

pthread_mutex_t mutex;

pthread_cond_t cond;

//Time at which pushing started
uint32_t start_time;

//Push URL
char *path;

//Used to call back into Java
JavaVM *jvm;

jobject jPublisherObj;

JNIEXPORT jint JNICALL JNI_OnLoad(JavaVM *vm, void *reserved) {

jvm = vm;

JNIEnv *env = NULL;

jint result = -1;

if (jvm) {

LOGD("jvm init success");

}

if (vm->GetEnv((void **) &env, JNI_VERSION_1_4) != JNI_OK) {

return result;

}

return JNI_VERSION_1_4;

}

/**
 * Calls a Java method of the form void name(int)
 * @param env
 * @param methodId
 * @param code
 */
void throwNativeInfo(JNIEnv *env, jmethodID methodId, int code) {
    if (env && methodId && jPublisherObj) {
        //Pass the int directly; casting &code to jvalue* would read past the int
        env->CallVoidMethod(jPublisherObj, methodId, (jint) code);
    }
}

/**

* Start the push thread

*/

extern "C"

JNIEXPORT void JNICALL

Java_com_aruba_rtmppushapplication_push_natives_NativePush_startPush(JNIEnv *env, jobject instance,

jstring url_) {

if (isPublishing)//the push thread is already running

return;

if (!jPublisherObj) {

jPublisherObj = env->NewGlobalRef(instance);

}

LOGE("start pushing");

pthread_t id;

pthread_t *pid = &id;

const char *url = env->GetStringUTFChars(url_, 0);

//Keep a copy of the URL

int url_len = strlen(url) + 1;

path = (char *) (malloc(url_len));

memset(path, 0, url_len);

memcpy(path, url, url_len - 1);

pthread_cond_init(&cond, NULL);

pthread_mutex_init(&mutex, NULL);

start_time = RTMP_GetTime();

pthread_create(pid, NULL, startPush, NULL);

env->ReleaseStringUTFChars(url_, url);

}

2. Write the code the thread runs, which opens the RTMP connection

bool isPublishing = false;

/**

* Push thread

* @param arg

* @return

*/

void *startPush(void *arg) {

pthread_mutex_lock(&mutex);

isPublishing = true;

pthread_mutex_unlock(&mutex);

JNIEnv *env;

jvm->AttachCurrentThread(&env, 0);

jclass clazz = env->GetObjectClass(jPublisherObj);

jmethodID errorId = env->GetMethodID(clazz, "onPostNativeError", "(I)V");

jmethodID stateId = env->GetMethodID(clazz, "onPostNativeState", "(I)V");

    //RTMP connection
    RTMP *connect = RTMP_Alloc();
    RTMP_Init(connect);
    connect->Link.timeout = 5;//timeout
    RTMP_SetupURL(connect, path);//set the URL
    RTMP_EnableWrite(connect);
    if (!RTMP_Connect(connect, NULL)) {//open the socket
        //Connection failed
        LOGE("failed to establish the rtmp connection");
        //Call back into the Java layer
        throwNativeInfo(env, errorId, -99);

pthread_mutex_lock(&mutex);

isPublishing = false;

RTMP_Close(connect);

RTMP_Free(connect);

free(path);

path = NULL;

pthread_mutex_unlock(&mutex);

release(env);

jvm->DetachCurrentThread();

pthread_exit(0);

}

    RTMP_ConnectStream(connect, 0);//connect the stream

    LOGE("push connection established");

throwNativeInfo(env, stateId, 100);

while (isPublishing) {

RTMPPacket *packet = get();

if (packet == NULL) {

continue;

}

        //Push the packet
        packet->m_nInfoField2 = connect->m_stream_id;
        int ret = RTMP_SendPacket(connect, packet, 1);//1: use RTMP's own send queue
        if (!ret) {
            LOGE("rtmp disconnected");

throwNativeInfo(env, errorId, -100);

}

RTMPPacket_Free(packet);

free(packet);

}

    LOGE("push finished");

    //Release resources

RTMP_Close(connect);

RTMP_Free(connect);

free(path);

path = NULL;

throwNativeInfo(env, stateId, 101);

release(env);

jvm->DetachCurrentThread();

pthread_exit(0);

}

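3. Implement the producer-consumer pattern. The push thread uses it for thread synchronization: it takes data out of the queue and pushes it. An RTMPPacket is the encoded data after packaging. Once the audio and video data has been encoded and compressed by x264 and faac, it still has to be wrapped into a form RTMP understands, which is essentially a packetizing step. How to wrap the x264 and faac output into an RTMPPacket is covered in detail later.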

//RTMPPacket queue
std::queue<RTMPPacket *> queue;

//Producer

void put(RTMPPacket *pPacket) {

pthread_mutex_lock(&mutex);

if (isPublishing) {

queue.push(pPacket);

}

pthread_cond_signal(&cond);

pthread_mutex_unlock(&mutex);

}

//Consumer

RTMPPacket *get() {

pthread_mutex_lock(&mutex);

if (queue.empty()) {

pthread_cond_wait(&cond, &mutex);

}

RTMPPacket *packet = NULL;

if (!queue.empty()) {

packet = queue.front();

queue.pop();

}

pthread_mutex_unlock(&mutex);

return packet;

}
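For comparison, the same producer-consumer idea can be sketched with the C++ standard library instead of raw pthreads. This is only an illustration under assumptions: the class name PacketQueue and its stop() method are made up here, and the wait uses a predicate so that a spurious wake-up never returns an empty result while the queue is still running.

```cpp
#include <condition_variable>
#include <mutex>
#include <queue>
#include <utility>

// Minimal blocking queue. Illustrative only, not the article's implementation.
template <typename T>
class PacketQueue {
public:
    // Producer: enqueue an item and wake one waiting consumer.
    void put(T item) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            if (stopped_) return;  // drop items once the queue is stopped
            queue_.push(std::move(item));
        }
        cond_.notify_one();
    }

    // Consumer: blocks until an item is available or stop() was called.
    // Returns false only when the queue is stopped and empty.
    bool get(T &out) {
        std::unique_lock<std::mutex> lock(mutex_);
        cond_.wait(lock, [this] { return stopped_ || !queue_.empty(); });
        if (queue_.empty()) return false;
        out = std::move(queue_.front());
        queue_.pop();
        return true;
    }

    // Wakes all waiting consumers so they can exit.
    void stop() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            stopped_ = true;
        }
        cond_.notify_all();
    }

private:
    std::mutex mutex_;
    std::condition_variable cond_;
    std::queue<T> queue_;
    bool stopped_ = false;
};
```

A push thread would loop on `get()` and send each packet until it returns false, and the stop path would call `stop()` so that a consumer blocked on an empty queue can exit.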

4. Set the audio and video parameters and initialize the buffers

//Number of bytes taken by the Y, U, and V planes
int y_len, u_len, v_len;

//Raw picture
x264_picture_t *pic;

//Encoded picture
x264_picture_t *pic_out;

//Encoder
x264_t *encoder;

extern "C"

JNIEXPORT void JNICALL

Java_com_aruba_rtmppushapplication_push_natives_NativePush_setVideoParams(JNIEnv *env,

jobject instance,

jint width, jint height,

jint bitrate, jint fps) {

if (pic != NULL) {

x264_picture_clean(pic);

free(pic);

free(pic_out);

pic = NULL;

pic_out = NULL;

}

y_len = width * height;

u_len = y_len / 4;

v_len = u_len;

    //Set the parameters
    x264_param_t param;
    // The zerolatency tune presets the following:
    // param->rc.i_lookahead = 0;
    // param->i_sync_lookahead = 0;
    // param->i_bframe = 0;
    // param->b_sliced_threads = 1;
    // param->b_vfr_input = 0;
    // param->rc.b_mb_tree = 0;
    x264_param_default_preset(&param, x264_preset_names[0], "zerolatency");
    //H.264 level (5.1), which bounds the supported resolution
    param.i_level_idc = 51;
    //Input color space
    param.i_csp = X264_CSP_I420;
    //Video width and height
    param.i_width = width;
    param.i_height = height;
    param.i_threads = 1;
    //Frames per second; the timebase is the duration of one frame (1/fps)
    param.i_timebase_num = 1;
    param.i_timebase_den = fps;
    param.i_fps_num = fps;
    param.i_fps_den = 1;
    //Maximum keyframe interval in frames (here: one keyframe every 2 seconds)
    param.i_keyint_max = fps * 2;
    //ABR: average bitrate  CQP: constant quantizer  CRF: constant quality
    param.rc.i_rc_method = X264_RC_ABR;
    //Bitrate in kbit/s
    param.rc.i_bitrate = bitrate / 1000;
    //Maximum bitrate
    param.rc.i_vbv_max_bitrate = bitrate / 1000 * 1.2;
    //VBV buffer size
    param.rc.i_vbv_buffer_size = bitrate / 1000;
    //0: players synchronize using pts  1: the pusher computes the timebase for synchronization
    param.b_vfr_input = 0;
    //Emit SPS and PPS before every keyframe
    param.b_repeat_headers = 1;
    //Profile: baseline only has I and P frames, which lowers latency and gives good compatibility
    x264_param_apply_profile(&param, "baseline");
    //Open the encoder
    encoder = x264_encoder_open(&param);

if (!encoder) {

LOGE("failed to open the video encoder");

jmethodID errorId = env->GetMethodID(env->GetObjectClass(instance), "onPostNativeError",

"(I)V");

throwNativeInfo(env, errorId, -98);

return;

}

    pic = (x264_picture_t *) (malloc(sizeof(x264_picture_t)));
    //Initialize pic with the library helper; pic holds the YUV420 data
    x264_picture_alloc(pic, X264_CSP_I420, width, height);
    pic_out = (x264_picture_t *) (malloc(sizeof(x264_picture_t)));
    LOGE("video encoder opened");

}

//Audio encoder
faacEncHandle handle;

//Number of input samples the encoder expects per call
unsigned long inputSamples;

//Maximum number of bytes in the output buffer
unsigned long maxOutputBytes;

extern "C"

JNIEXPORT void JNICALL

Java_com_aruba_rtmppushapplication_push_natives_NativePush_setAudioParams(JNIEnv *env,

jobject instance,

jint sample,

jint channel) {

handle = faacEncOpen(sample, channel, &inputSamples, &maxOutputBytes);

if (!handle) {

LOGE("failed to open the audio encoder");

jmethodID errorId = env->GetMethodID(env->GetObjectClass(instance), "onPostNativeError",

"(I)V");

throwNativeInfo(env, errorId, -97);

return;

}

    //Configuration
    faacEncConfigurationPtr config = faacEncGetCurrentConfiguration(handle);
    config->mpegVersion = MPEG4;
    config->allowMidside = 1;//allow mid/side stereo coding
    config->aacObjectType = LOW;//AAC Low Complexity profile
    config->outputFormat = 0;//output format: 0 = raw AAC (no ADTS header)
    config->useTns = 1;//temporal noise shaping, reduces popping noise
    config->useLfe = 0;
    config->inputFormat = FAAC_INPUT_16BIT;
    config->quantqual = 100;
    config->bandWidth = 0; //bandwidth
    config->shortctl = SHORTCTL_NORMAL;//block type control

int ret = faacEncSetConfiguration(handle, config);

if (!ret) {

LOGE("failed to configure the audio encoder");

jmethodID errorId = env->GetMethodID(env->GetObjectClass(instance), "onPostNativeError",

"(I)V");

throwNativeInfo(env, errorId, -96);

return;

}

LOGE("audio encoder configured");

}

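The comments are enough to follow the video and audio encoder settings. I am not a codec specialist, so I won't go into more detail here; interested readers can look up further material online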
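One detail worth spelling out is the unit conversion in the rate-control settings: the Java side passes the bitrate in bit/s, while the x264 fields used above are expressed in kbit/s. A small sketch of that arithmetic, where the RateControl struct and rateControlFor function are made-up names for illustration and the 1.2x peak is written with integer math:

```cpp
// Rate-control values derived from a bitrate given in bit/s.
// Illustrative types, not part of x264.
struct RateControl {
    int bitrateKbps;    // average bitrate in kbit/s
    int vbvMaxKbps;     // peak bitrate in kbit/s, 1.2x the average
    int vbvBufferKbit;  // VBV buffer size in kbit, about one second of video
};

RateControl rateControlFor(int bitrateBps) {
    RateControl rc;
    rc.bitrateKbps = bitrateBps / 1000;
    rc.vbvMaxKbps = rc.bitrateKbps * 6 / 5;
    rc.vbvBufferKbit = rc.bitrateKbps;
    return rc;
}
```

With the 960000 bit/s configured in PushHelper, this gives an average of 960 kbit/s, a peak of 1152 kbit/s, and a 960 kbit buffer.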

5. Write the encoding code, which encodes the raw audio and video data passed from the Java layer

/**

* Encode video

*/

extern "C"

JNIEXPORT void JNICALL

Java_com_aruba_rtmppushapplication_push_natives_NativePush_pushVideo(JNIEnv *env, jobject instance,

jbyteArray buffer_) {

if (!isPublishing || !encoder || !pic) {

return;

}

jbyte *buffer = env->GetByteArrayElements(buffer_, NULL);

uint8_t *u = pic->img.plane[1];

uint8_t *v = pic->img.plane[2];

//Convert NV21 to I420 (planar YUV420)

for (int i = 0; i < u_len; i++) {

*(u + i) = *(buffer + y_len + i * 2 + 1);

*(v + i) = *(buffer + y_len + i * 2);

}

memcpy(pic->img.plane[0], buffer, y_len);

// pic->img.plane[0] = buffer;

    //NAL units
    x264_nal_t *nal = 0;
    //Number of NAL units
    int pi_nal;

int ret = x264_encoder_encode(encoder, &nal, &pi_nal, pic, pic_out);

if (ret < 0) {

env->ReleaseByteArrayElements(buffer_, buffer, 0);

LOGE("encoding failed");

return;

}

    //Unpack the NAL units and hand the payloads to the RTMP packetizer
    unsigned char sps[100];
    unsigned char pps[100];
    int sps_len = 0;
    int pps_len = 0;
    for (int i = 0; i < pi_nal; i++) {
        if (nal[i].i_type == NAL_SPS) {//sequence parameter set
            //Strip the start code (4 bytes)
            sps_len = nal[i].i_payload - 4;
            //Copy the actual payload
            memcpy(sps, nal[i].p_payload + 4, sps_len);
        } else if (nal[i].i_type == NAL_PPS) {//picture parameter set
            pps_len = nal[i].i_payload - 4;
            memcpy(pps, nal[i].p_payload + 4, pps_len);
            //Once both SPS and PPS are available, send the header
            send_264_header(sps, pps, sps_len, pps_len);
        } else {//send keyframes and non-keyframes
            send_264_body(nal[i].p_payload, nal[i].i_payload);

}

}

env->ReleaseByteArrayElements(buffer_, buffer, 0);

}

/**

* Encode audio

*/

extern "C"

JNIEXPORT void JNICALL

Java_com_aruba_rtmppushapplication_push_natives_NativePush_pushAudio(JNIEnv *env, jobject instance,

jbyteArray buffer_,

jint size) {

if (!isPublishing || !handle)

return;

jbyte *buffer = env->GetByteArrayElements(buffer_, NULL);

unsigned char *outputBuffer = (unsigned char *) (malloc(

sizeof(unsigned char) * maxOutputBytes));

//Encode

int len = faacEncEncode(handle, (int32_t *) buffer, inputSamples, outputBuffer,

maxOutputBytes);

if (len > 0) {

// LOGE("pushing rtmp audio");

send_aac_body(outputBuffer, len);

}

env->ReleaseByteArrayElements(buffer_, buffer, 0);

if (outputBuffer)

free(outputBuffer);

}
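The NV21-to-I420 step inside the video encoding function can be isolated as a small standalone routine. NV21 stores the full Y plane followed by interleaved V and U bytes, while I420 wants three separate planes. A minimal sketch, where the I420Frame struct and the nv21ToI420 function are illustrative names only:

```cpp
#include <cstdint>
#include <vector>

// Three separate planes, as x264 expects for X264_CSP_I420.
// Illustrative type, not part of the article's project.
struct I420Frame {
    std::vector<uint8_t> y, u, v;
};

// Splits an NV21 buffer (Y plane followed by interleaved V,U bytes) into planes.
I420Frame nv21ToI420(const uint8_t *nv21, int width, int height) {
    const int yLen = width * height;
    const int chromaLen = yLen / 4;  // each chroma plane is a quarter of Y
    I420Frame frame;
    frame.y.assign(nv21, nv21 + yLen);
    frame.u.resize(chromaLen);
    frame.v.resize(chromaLen);
    for (int i = 0; i < chromaLen; ++i) {
        frame.v[i] = nv21[yLen + 2 * i];      // V comes first in NV21
        frame.u[i] = nv21[yLen + 2 * i + 1];  // then U
    }
    return frame;
}
```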

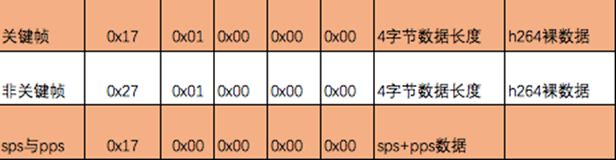

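Video encoding is the harder part to understand. As introduced earlier, H.264 consists mainly of I-frames, B-frames, and P-frames, which carry the pixel data. Because the data is compressed, you can think of it this way: the compressed data no longer has the size of the original, so information such as the original dimensions (the picture width and height) and the compression settings has to be stored as well. That information lives in the SPS and PPS, much like HTTP headers, and it is needed again for playback (the decoder has to know at least the picture width and height). The SPS and PPS data each begin with a 4-byte delimiter (the start code). We don't need those 4 bytes, so we strip them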
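As a small illustration of the delimiter handling, the sketch below detects a 3-byte or 4-byte start code at the beginning of a NAL unit and reads the NAL unit type from the first byte after it. The function names startCodeLength and nalUnitType are assumptions made for this example; in H.264, type 7 is an SPS, 8 a PPS, and 5 an IDR slice.

```cpp
#include <cstddef>
#include <cstdint>

// Returns the length of the start code at the beginning of `data`
// (4 for 00 00 00 01, 3 for 00 00 01), or 0 if there is none.
std::size_t startCodeLength(const uint8_t *data, std::size_t size) {
    if (size >= 4 && data[0] == 0 && data[1] == 0 && data[2] == 0 && data[3] == 1) {
        return 4;
    }
    if (size >= 3 && data[0] == 0 && data[1] == 0 && data[2] == 1) {
        return 3;
    }
    return 0;
}

// Returns the NAL unit type (low 5 bits of the first byte after the start
// code), or -1 if the buffer holds no payload.
int nalUnitType(const uint8_t *data, std::size_t size) {
    const std::size_t skip = startCodeLength(data, size);
    if (skip >= size) {
        return -1;
    }
    return data[skip] & 0x1f;
}
```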

Next we packetize the encoded audio and video data into RTMPPackets

We start with the video data, following the document below

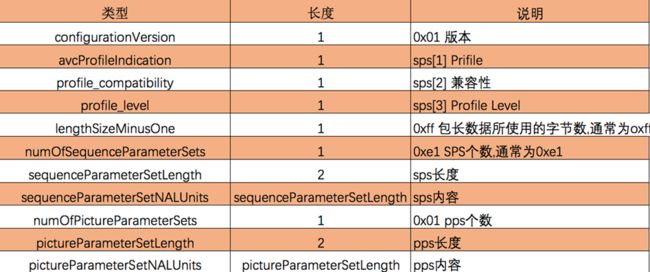

1. First the SPS and PPS. The first byte is 0x17. The packetizing code is as follows:

/**
 * Send the header information over RTMP
 * @param sps
 * @param pps
 * @param sps_len
 * @param pps_len
 */
void send_264_header(unsigned char *sps, unsigned char *pps, int sps_len, int pps_len) {
    int size = sps_len + pps_len + 16;//the RTMP header packet needs 16 extra bytes
    RTMPPacket *packet = static_cast<RTMPPacket *>(malloc(sizeof(RTMPPacket)));
    //Allocate the packet's internal buffer
    RTMPPacket_Alloc(packet, size);
    //Build the packet
    unsigned char *body = reinterpret_cast<unsigned char *>(packet->m_body);
    int i = 0;
    body[i++] = 0x17;
    body[i++] = 0x00;
    body[i++] = 0x00;
    body[i++] = 0x00;
    body[i++] = 0x00;
    //Version
    body[i++] = 0x01;
    //Profile
    body[i++] = sps[1];
    //Compatibility
    body[i++] = sps[2];
    //Level
    body[i++] = sps[3];
    body[i++] = 0xff;
    body[i++] = 0xe1;
    //SPS length
    body[i++] = (sps_len >> 8) & 0xff;
    body[i++] = sps_len & 0xff;
    //SPS content
    memcpy(&body[i], sps, sps_len);
    i += sps_len;//advance the write position
    //Number of PPS
    body[i++] = 0x01;
    //PPS length
    body[i++] = (pps_len >> 8) & 0xff;
    body[i++] = pps_len & 0xff;
    memcpy(&body[i], pps, pps_len);
    //Packet settings
    packet->m_packetType = RTMP_PACKET_TYPE_VIDEO;//video type
    packet->m_nBodySize = size;
    //The client synchronizes by itself using pts
    packet->m_nTimeStamp = 0;
    packet->m_hasAbsTimestamp = 0;
    //Channel
    packet->m_nChannel = 4;
    packet->m_headerType = RTMP_PACKET_SIZE_MEDIUM;
    //Put it in the queue
    put(packet);
}
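To check the byte layout without librtmp, the header body can also be assembled into a plain byte vector. The sketch below mirrors the layout used above; buildAvcSequenceHeader is a hypothetical name, and the function assumes the SPS has at least 4 bytes and already has its start code stripped.

```cpp
#include <cstdint>
#include <vector>

// Builds the body of the video packet that carries the SPS and PPS.
// Illustrative helper; assumes sps.size() >= 4 and no start codes.
std::vector<uint8_t> buildAvcSequenceHeader(const std::vector<uint8_t> &sps,
                                            const std::vector<uint8_t> &pps) {
    std::vector<uint8_t> body;
    body.reserve(sps.size() + pps.size() + 16);
    body.push_back(0x17);    // keyframe (1) + AVC codec id (7)
    body.push_back(0x00);    // packet type 0: sequence header
    body.push_back(0x00);    // composition time, 3 bytes
    body.push_back(0x00);
    body.push_back(0x00);
    body.push_back(0x01);    // configuration version
    body.push_back(sps[1]);  // profile
    body.push_back(sps[2]);  // profile compatibility
    body.push_back(sps[3]);  // level
    body.push_back(0xff);    // reserved bits + NAL length size minus one (3)
    body.push_back(0xe1);    // reserved bits + number of SPS (1)
    body.push_back(static_cast<uint8_t>((sps.size() >> 8) & 0xff));
    body.push_back(static_cast<uint8_t>(sps.size() & 0xff));
    body.insert(body.end(), sps.begin(), sps.end());
    body.push_back(0x01);    // number of PPS
    body.push_back(static_cast<uint8_t>((pps.size() >> 8) & 0xff));
    body.push_back(static_cast<uint8_t>(pps.size() & 0xff));
    body.insert(body.end(), pps.begin(), pps.end());
    return body;
}
```

Counting the fixed bytes (5 + 6 + 2 for the SPS length + 1 + 2 for the PPS length) gives the 16 extra bytes used for `size` in send_264_header.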

2. Then the keyframes and non-keyframes:

/**
 * Send keyframes and non-keyframes over RTMP
 * @param payload
 * @param i_payload
 */
void send_264_body(uint8_t *payload, int i_payload) {
    if (payload[2] == 0x00) {//third byte is 0x00: the start code is the first 4 bytes, 00 00 00 01
        payload += 4;
        i_payload -= 4;
    } else if (payload[2] == 0x01) {//third byte is 0x01: the start code is the first 3 bytes, 00 00 01
        payload += 3;
        i_payload -= 3;
    }
    //Build the packet
    int size = i_payload + 9;//an RTMP frame packet needs 9 extra bytes
    RTMPPacket *packet = static_cast<RTMPPacket *>(malloc(sizeof(RTMPPacket)));
    //Allocate the packet's internal buffer
    RTMPPacket_Alloc(packet, size);
    char *body = packet->m_body;
    int type = payload[0] & 0x1f;
    int index = 0;
    if (type == NAL_SLICE_IDR) {//keyframe
        body[index++] = 0x17;
    } else {//non-keyframe
        body[index++] = 0x27;
    }
    body[index++] = 0x01;
    body[index++] = 0x00;
    body[index++] = 0x00;
    body[index++] = 0x00;
    //Length, 4 bytes
    body[index++] = (i_payload >> 24) & 0xff;
    body[index++] = (i_payload >> 16) & 0xff;
    body[index++] = (i_payload >> 8) & 0xff;
    body[index++] = i_payload & 0xff;
    //Payload
    memcpy(&body[index], payload, i_payload);
    //Packet settings
    packet->m_packetType = RTMP_PACKET_TYPE_VIDEO;//video type
    packet->m_nBodySize = size;
    //The client synchronizes by itself using pts
    packet->m_nTimeStamp = RTMP_GetTime() - start_time;//lets the client know the playback position
    packet->m_hasAbsTimestamp = 0;
    //Channel
    packet->m_nChannel = 0x04;
    packet->m_headerType = RTMP_PACKET_SIZE_LARGE;
    put(packet);
}
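The frame body layout can be checked the same way, independent of librtmp. In this sketch, buildAvcFrameBody is a made-up name and the input is assumed to be a single NAL unit with its start code already removed; it produces the 9-byte prefix followed by the payload.

```cpp
#include <cstdint>
#include <vector>

// Builds the body of a video packet that carries one NAL unit.
// Illustrative helper; `nalu` must not include the start code and len > 0.
std::vector<uint8_t> buildAvcFrameBody(const uint8_t *nalu, uint32_t len) {
    std::vector<uint8_t> body;
    body.reserve(len + 9);
    const int type = nalu[0] & 0x1f;
    body.push_back(type == 5 ? 0x17 : 0x27);  // 5 = IDR slice, i.e. keyframe
    body.push_back(0x01);                     // packet type 1: NAL unit
    body.push_back(0x00);                     // composition time, 3 bytes
    body.push_back(0x00);
    body.push_back(0x00);
    body.push_back(static_cast<uint8_t>((len >> 24) & 0xff));  // big-endian length
    body.push_back(static_cast<uint8_t>((len >> 16) & 0xff));
    body.push_back(static_cast<uint8_t>((len >> 8) & 0xff));
    body.push_back(static_cast<uint8_t>(len & 0xff));
    body.insert(body.end(), nalu, nalu + len);
    return body;
}
```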

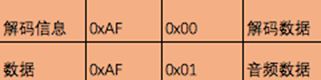

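Finally we packetize the audio data, following the documentation for the audio data format:

Packetizing audio is simple. The code is as follows: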

/**
 * Build an audio packet
 * @param buffer
 * @param len
 */
void send_aac_body(unsigned char *buffer, int len) {
    int size = len + 2;
    RTMPPacket *packet = static_cast<RTMPPacket *>(malloc(sizeof(RTMPPacket)));
    //Allocate the packet's internal buffer
    RTMPPacket_Alloc(packet, size);
    char *body = packet->m_body;
    body[0] = 0xAF;
    body[1] = 0x01;
    memcpy(&body[2], buffer, len);
    //Packet settings
    packet->m_packetType = RTMP_PACKET_TYPE_AUDIO;//audio type
    packet->m_nBodySize = size;
    //The client synchronizes by itself using pts
    packet->m_nTimeStamp = RTMP_GetTime() - start_time;//lets the client know the playback position
    packet->m_hasAbsTimestamp = 0;
    //Channel
    packet->m_nChannel = 0x04;
    packet->m_headerType = RTMP_PACKET_SIZE_MEDIUM;
    put(packet);
}
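The two prefix bytes are easier to remember once decoded. In the FLV audio tag layout, 0xAF means: sound format 10 (AAC) in the top 4 bits, sample rate index 3 (44 kHz), 16-bit samples, and the stereo flag. The second byte is the AAC packet type: 0x01 for raw AAC frames, 0x00 for an AAC sequence header. A minimal sketch, where buildAacBody and its sequenceHeader flag are assumptions for illustration (the code above only ever sends raw frames):

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Builds the body of an audio packet that carries AAC data.
// Illustrative helper; `sequenceHeader` selects AAC packet type 0 instead of 1.
std::vector<uint8_t> buildAacBody(const uint8_t *aac, std::size_t len,
                                  bool sequenceHeader) {
    std::vector<uint8_t> body;
    body.reserve(len + 2);
    body.push_back(0xAF);                         // AAC, 44 kHz, 16-bit, stereo flag
    body.push_back(sequenceHeader ? 0x00 : 0x01); // 0: sequence header, 1: raw frame
    body.insert(body.end(), aac, aac + len);
    return body;
}
```

If a player stays silent with this stream, one thing worth checking is whether it expects an AAC sequence header packet before the first raw frame.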