Background

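During the company's big sales promotion we scaled out a large number of cloud hosts, and requests to the Zabbix-Proxy tier, which sits behind LVS in DR mode, surged. The monitoring data collection queues backed up for long periods, to the point where the data could no longer be displayed in the front-end UI. So the colleague responsible for monitoring requested 20-odd virtual machines from me to absorb the monitoring traffic.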

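The Zabbix-Proxy cluster is built on Keepalived + LVS. I originally assumed all I had to do was assign a VIP to the two VMs running Keepalived (that is, let the VMs run VRRP for high availability). After a while, though, my colleague told me that connections to the VIP's port were timing out, and I began to realize I had oversimplified things.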

Here is what the problem looked like:

$ nc -vz 10.1.26.252 10051

nc: connect to 10.1.26.252 port 10051 (tcp) failed: Connection timed out

The client's output is simple: the connection timed out.
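When the same check has to be repeated against many VIPs or ports, the `nc -vz` probe can be approximated with the Python standard library. This is a minimal sketch; the function name `tcp_probe` and the 3-second default timeout are my own choices, not part of the original setup. Like `nc -vz`, it only tests whether the TCP three-way handshake completes and sends no payload.

```python
import socket


def tcp_probe(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP handshake to (host, port) completes within `timeout` seconds."""
    try:
        # create_connection resolves the host and performs the full TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers both timeouts (socket.timeout is an OSError subclass) and refused connections
        return False
```

For the case above, `tcp_probe("10.1.26.252", 10051)` would be expected to return `False` after the timeout, matching the `nc` result.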

$ ipvsadm -l

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.1.26.252:zabbix-trapper sh

-> 10.1.26.136:zabbix-trapper Route 100 0 0

-> 10.1.26.137:zabbix-trapper Route 100 0 0

-> 10.1.26.138:zabbix-trapper Route 100 0 0

-> 10.1.26.139:zabbix-trapper Route 100 0 0

-> 10.1.26.140:zabbix-trapper Route 100 0 0

-> 10.1.26.141:zabbix-trapper Route 100 0 0

-> 10.1.26.142:zabbix-trapper Route 100 0 0

-> 10.1.26.143:zabbix-trapper Route 100 0 0

$ ipvsadm -lnc

IPVS connection entries

pro expire state source virtual destination

TCP 01:57 SYN_RECV 10.0.19.92:37358 10.1.26.252:10051 10.1.26.139:10051

TCP 01:57 SYN_RECV 10.0.19.92:37359 10.1.26.252:10051 10.1.26.139:10051

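On the LVS side the problem is obvious: every incoming client connection is stuck in SYN_RECV. That means the requests did reach LVS, but something went wrong when they were handed off to the backend Real Servers.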
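With thousands of entries, eyeballing `ipvsadm -lnc` is impractical. The sketch below tallies connection states, assuming the six-column layout shown in the capture above (`pro expire state source virtual destination`); the helper name is hypothetical. A table where almost everything is SYN_RECV and nothing is ESTABLISHED points to the same symptom described here.

```python
from collections import Counter


def tally_ipvs_states(output: str) -> Counter:
    """Count connection states in `ipvsadm -lnc` output (six-column layout assumed)."""
    states: Counter = Counter()
    for line in output.splitlines():
        fields = line.split()
        # Data rows have six columns and start with a protocol name; this skips the header rows
        if len(fields) == 6 and fields[0] in ("TCP", "UDP"):
            states[fields[2]] += 1
    return states
```

Typical use would be feeding it the text captured from `ipvsadm -lnc` and printing `tally_ipvs_states(text).most_common()`.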

So where was the problem?

- VM iptables

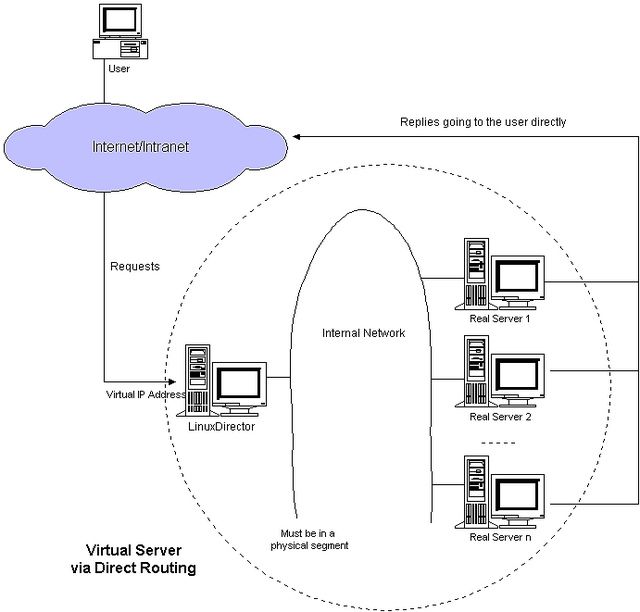

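My first suspicion was that iptables rules inside the VMs were interfering with DR-mode packet forwarding. In DR mode, when LVS receives a request it takes the frame apart, rewrites the destination MAC address to that of a backend RealServer, re-encapsulates the frame and sends it over the same layer-2 network to that RealServer, with the destination IP still set to the VIP.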
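A minimal model of that rewrite, assuming a simplified frame that carries only the fields relevant here. The `Frame` type, the `dr_forward` helper and the MAC addresses used in the check are hypothetical placeholders; the client IP, VIP and port come from the capture above.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Frame:
    src_mac: str
    dst_mac: str
    src_ip: str
    dst_ip: str
    dst_port: int


def dr_forward(frame: Frame, director_mac: str, real_server_mac: str) -> Frame:
    """LVS DR forwarding in miniature: only the layer-2 addresses change.

    The IP header (client source IP, VIP destination) and the port are left untouched,
    which is why every RealServer must accept packets addressed to the VIP.
    """
    return replace(frame, src_mac=director_mac, dst_mac=real_server_mac)
```

Comparing input and output frames, only `src_mac` and `dst_mac` differ; the source IP is still the client's, which matters later when the host's anti-spoofing rules come into play.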

So I flushed iptables on all the VMs and disabled SELinux. The problem persisted, which meant the VMs themselves were not the cause.

- LVS configuration

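Because of how LVS DR mode works, the VIP must be bound to the lo interface of every backend RealServer. Otherwise, when a RealServer receives a forwarded packet whose destination IP is the VIP, it drops the packet by default and the client times out.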
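A minimal sketch of that RealServer-side preparation, written as a Python helper that only builds the shell commands (it does not run them). The `arp_ignore`/`arp_announce` sysctls are the settings commonly paired with DR mode so that RealServers do not answer ARP for the VIP; they are my assumption and are not shown in the original setup, and the helper name is hypothetical.

```python
from typing import List


def realserver_vip_commands(vip: str) -> List[str]:
    """Shell commands that prepare a DR-mode RealServer to accept packets addressed to `vip`."""
    return [
        # Bind the VIP to loopback as a /32 host address
        f"ip addr add {vip}/32 dev lo",
        # Answer ARP only for addresses configured on the receiving interface (not the VIP on lo)
        "sysctl -w net.ipv4.conf.all.arp_ignore=1",
        "sysctl -w net.ipv4.conf.lo.arp_ignore=1",
        # Always use the best local address as the ARP source, never the VIP on lo
        "sysctl -w net.ipv4.conf.all.arp_announce=2",
        "sysctl -w net.ipv4.conf.lo.arp_announce=2",
    ]


for cmd in realserver_vip_commands("10.1.26.252"):
    print(cmd)
```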

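After checking with my monitoring colleague, we confirmed this was already in place and ruled it out. Next I wondered whether the VIP also needed to be allowed on the RealServers' Neutron ports, so I updated the backend VMs' ports with the allowed_address_pairs parameter. Client requests still timed out, so that was not the problem either.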

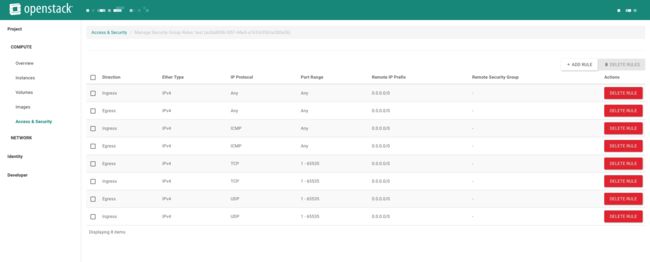

- Security Group

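With the VMs ruled out, I shifted my focus to security group policies. Neutron enforces restrictions on VM inbound and outbound traffic through iptables rules on the host.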

So I opened up all the security group rules, as shown in the figure.

The client still timed out! At this point I was out of ideas.

The turning point

After a while I decided to make one last attempt and flushed all iptables rules on the compute host. To my surprise, the client connected!

$ nc -vz 10.1.26.252 10051

Connection to 10.1.26.252 10051 port [tcp/*] succeeded!

Investigation

So the problem lay in the host's iptables. With that direction established, I started tracing the iptables rules backwards, starting from the VIP.
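The backward trace done by hand below with repeated `iptables-save | grep` calls can also be sketched in Python: find the chains whose rules mention the VIP, then follow `-j` jumps upward until reaching a chain that nothing jumps to (a built-in chain such as FORWARD). The helper names are hypothetical, and the sketch only follows `-j` targets, not `-g` gotos.

```python
import shlex
from collections import defaultdict
from typing import Dict, Iterator, List, Set, Tuple


def iter_rules(iptables_save: str) -> Iterator[Tuple[str, List[str]]]:
    """Yield (chain, tokens) for every '-A CHAIN ...' line; shlex keeps quoted comments intact."""
    for line in iptables_save.splitlines():
        tokens = shlex.split(line)
        if len(tokens) >= 2 and tokens[0] == "-A":
            yield tokens[1], tokens


def chains_mentioning(iptables_save: str, needle: str) -> Set[str]:
    """Chains containing a rule that mentions `needle`, like `iptables-save | grep VIP`."""
    return {chain for chain, tokens in iter_rules(iptables_save) if any(needle in t for t in tokens)}


def build_parents(iptables_save: str) -> Dict[str, Set[str]]:
    """Map each jump target to the set of chains that jump to it."""
    parents: Dict[str, Set[str]] = defaultdict(set)
    for chain, tokens in iter_rules(iptables_save):
        if "-j" in tokens:
            i = tokens.index("-j")
            if i + 1 < len(tokens):
                parents[tokens[i + 1]].add(chain)
    return parents


def paths_to_root(chain: str, parents: Dict[str, Set[str]]) -> List[List[str]]:
    """Every jump path from a top-level chain down to `chain`."""
    callers = parents.get(chain)
    if not callers:
        return [[chain]]  # no caller found: treat as top-level (e.g. built-in FORWARD)
    return [path + [chain] for parent in sorted(callers) for path in paths_to_root(parent, parents)]
```

Applied to the full `iptables-save` output of the host, this would list every route a packet can take into the chain that holds the VIP rule, which is the same walk the following sections perform step by step.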

Chain neutron-openvswi-s38833ad6-c

$ iptables-save | grep 10.1.26.252

-A neutron-openvswi-s38833ad6-c -s 10.1.26.252/32 -m mac --mac-source FA:16:3E:C4:20:C7 -m comment --comment "Allow traffic from defined IP/MAC pairs." -j RETURN

$ iptables-save | grep neutron-openvswi-s38833ad6-c

-A neutron-openvswi-o38833ad6-c -j neutron-openvswi-s38833ad6-c

-A neutron-openvswi-s38833ad6-c -s 10.1.26.252/32 -m mac --mac-source FA:16:3E:C4:20:C7 -m comment --comment "Allow traffic from defined IP/MAC pairs." -j RETURN

-A neutron-openvswi-s38833ad6-c -s 10.1.26.144/32 -m mac --mac-source FA:16:3E:C4:20:C7 -m comment --comment "Allow traffic from defined IP/MAC pairs." -j RETURN

-A neutron-openvswi-s38833ad6-c -m comment --comment "Drop traffic without an IP/MAC allow rule." -j DROP

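The meaning of these rules is clear: they bind the VM's IP addresses to its MAC address, letting through only packets with source MAC FA:16:3E:C4:20:C7 and source IP 10.1.26.252 or 10.1.26.144, and dropping everything else. The chain neutron-openvswi-s38833ad6-c is jumped to from neutron-openvswi-o38833ad6-c.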
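The first-match logic of this anti-spoofing chain fits in a few lines. This is a simplified model, not Neutron code: the allowed pairs are copied from the rules above, and the function name is hypothetical. iptables compares MAC addresses as bytes, so the comparison below is case-insensitive.

```python
from typing import List, Tuple

# (source IP, source MAC) pairs allowed by neutron-openvswi-s38833ad6-c, from the rules above
ALLOWED_PAIRS: List[Tuple[str, str]] = [
    ("10.1.26.252", "FA:16:3E:C4:20:C7"),
    ("10.1.26.144", "FA:16:3E:C4:20:C7"),
]


def s_chain_verdict(src_ip: str, src_mac: str) -> str:
    """RETURN if the packet matches an allowed IP/MAC pair, otherwise fall through to DROP."""
    for ip, mac in ALLOWED_PAIRS:
        # -s x.x.x.x/32 is an exact source IP match
        if src_ip == ip and src_mac.upper() == mac.upper():
            return "RETURN"
    return "DROP"
```

Assuming this is the director's port (its fixed IP 10.1.26.144 is not among the RealServers listed by ipvsadm), note that a frame re-sent in DR mode keeps the client's source IP, such as 10.0.19.92 in the capture above, so it matches neither allow rule.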

Chain neutron-openvswi-o38833ad6-c

$ iptables-save | grep neutron-openvswi-o38833ad6-c

:neutron-openvswi-o38833ad6-c - [0:0]

-A neutron-openvswi-INPUT -m physdev --physdev-in tap38833ad6-c6 --physdev-is-bridged -m comment --comment "Direct incoming traffic from VM to the security group chain." -j neutron-openvswi-o38833ad6-c

-A neutron-openvswi-o38833ad6-c -s 0.0.0.0/32 -d 255.255.255.255/32 -p udp -m udp --sport 68 --dport 67 -m comment --comment "Allow DHCP client traffic." -j RETURN

-A neutron-openvswi-o38833ad6-c -j neutron-openvswi-s38833ad6-c

-A neutron-openvswi-o38833ad6-c -p udp -m udp --sport 68 --dport 67 -m comment --comment "Allow DHCP client traffic." -j RETURN

-A neutron-openvswi-o38833ad6-c -p udp -m udp --sport 67 -m udp --dport 68 -m comment --comment "Prevent DHCP Spoofing by VM." -j DROP

-A neutron-openvswi-o38833ad6-c -m state --state RELATED,ESTABLISHED -m comment --comment "Direct packets associated with a known session to the RETURN chain." -j RETURN

-A neutron-openvswi-o38833ad6-c -p icmp -j RETURN

-A neutron-openvswi-o38833ad6-c -p tcp -m tcp -m multiport --dports 1:65535 -j RETURN

-A neutron-openvswi-o38833ad6-c -j RETURN

-A neutron-openvswi-o38833ad6-c -p udp -m udp -m multiport --dports 1:65535 -j RETURN

-A neutron-openvswi-o38833ad6-c -m state --state INVALID -m comment --comment "Drop packets that appear related to an existing connection (e.g. TCP ACK/FIN) but do not have an entry in conntrack." -j DROP

-A neutron-openvswi-o38833ad6-c -m comment --comment "Send unmatched traffic to the fallback chain." -j neutron-openvswi-sg-fallback

-A neutron-openvswi-sg-chain -m physdev --physdev-in tap38833ad6-c6 --physdev-is-bridged -m comment --comment "Jump to the VM specific chain." -j neutron-openvswi-o38833ad6-c

The chain neutron-openvswi-o38833ad6-c governs traffic leaving the VM. As shown, it is jumped to from two chains: neutron-openvswi-INPUT and neutron-openvswi-sg-chain.

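It holds the VM's egress security rules, and we can see that the rules created earlier were successfully pushed down to iptables. Packets that match no rule are sent to neutron-openvswi-sg-fallback, which contains a single rule that drops every packet entering it.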

Chain neutron-openvswi-i38833ad6-c

$ iptables-save | grep neutron-openvswi-i38833ad6-c

:neutron-openvswi-i38833ad6-c - [0:0]

-A neutron-openvswi-i38833ad6-c -m state --state RELATED,ESTABLISHED -m comment --comment "Direct packets associated with a known session to the RETURN chain." -j RETURN

-A neutron-openvswi-i38833ad6-c -s 10.1.26.120/32 -p udp -m udp --sport 67 -m udp --dport 68 -j RETURN

-A neutron-openvswi-i38833ad6-c -p icmp -j RETURN

-A neutron-openvswi-i38833ad6-c -j RETURN

-A neutron-openvswi-i38833ad6-c -p tcp -m tcp -m multiport --dports 1:65535 -j RETURN

-A neutron-openvswi-i38833ad6-c -p udp -m udp -m multiport --dports 1:65535 -j RETURN

-A neutron-openvswi-i38833ad6-c -m state --state INVALID -m comment --comment "Drop packets that appear related to an existing connection (e.g. TCP ACK/FIN) but do not have an entry in conntrack." -j DROP

-A neutron-openvswi-i38833ad6-c -m comment --comment "Send unmatched traffic to the fallback chain." -j neutron-openvswi-sg-fallback

-A neutron-openvswi-sg-chain -m physdev --physdev-out tap38833ad6-c6 --physdev-is-bridged -m comment --comment "Jump to the VM specific chain." -j neutron-openvswi-i38833ad6-c

The chain neutron-openvswi-i38833ad6-c governs traffic entering the VM. Note that it is jumped to only from neutron-openvswi-sg-chain, and that unmatched packets are likewise sent to neutron-openvswi-sg-fallback.

Chain neutron-openvswi-sg-chain

$ iptables-save | grep neutron-openvswi-sg-chain

:neutron-openvswi-sg-chain - [0:0]

-A neutron-openvswi-FORWARD -m physdev --physdev-out tap38833ad6-c6 --physdev-is-bridged -m comment --comment "Direct traffic from the VM interface to the security group chain." -j neutron-openvswi-sg-chain

-A neutron-openvswi-FORWARD -m physdev --physdev-in tap38833ad6-c6 --physdev-is-bridged -m comment --comment "Direct traffic from the VM interface to the security group chain." -j neutron-openvswi-sg-chain

-A neutron-openvswi-sg-chain -m physdev --physdev-out tap38833ad6-c6 --physdev-is-bridged -m comment --comment "Jump to the VM specific chain." -j neutron-openvswi-i38833ad6-c

-A neutron-openvswi-sg-chain -m physdev --physdev-in tap38833ad6-c6 --physdev-is-bridged -m comment --comment "Jump to the VM specific chain." -j neutron-openvswi-o38833ad6-c

-A neutron-openvswi-sg-chain -j ACCEPT

Here we can see that neutron-openvswi-sg-chain handles both the VM's inbound and outbound traffic, and that it is itself jumped to from neutron-openvswi-FORWARD.

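The physdev module is what iptables uses to match packets traversing a Linux bridge.

Parameters:

-m physdev

--physdev-in: matches packets sent by the VM (the bridge port the packet entered through); usable in INPUT, FORWARD and PREROUTING

--physdev-out: matches packets delivered to the VM (the bridge port the packet leaves through); usable in FORWARD, OUTPUT and POSTROUTING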
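To read Neutron's rules quickly, the direction implied by a physdev match can be extracted mechanically. A small sketch with a hypothetical helper name; the chain table restates the list above, and the direction labels are from the VM's point of view, since the tap device is the bridge port facing the VM.

```python
import shlex
from typing import Dict, Optional, Set, Tuple

# Built-in chains in which each option may be used, as listed above
PHYSDEV_CHAINS: Dict[str, Set[str]] = {
    "--physdev-in": {"INPUT", "FORWARD", "PREROUTING"},
    "--physdev-out": {"FORWARD", "OUTPUT", "POSTROUTING"},
}


def physdev_direction(rule: str) -> Optional[Tuple[str, str]]:
    """Return (tap device, direction seen from the VM) for a rule using -m physdev, else None."""
    tokens = shlex.split(rule)
    for option, direction in (("--physdev-in", "from VM"), ("--physdev-out", "to VM")):
        if option in tokens:
            # Entering the bridge through the tap means the VM sent it; leaving means the VM receives it
            return tokens[tokens.index(option) + 1], direction
    return None
```

Run against the two sg-chain rules above, this pairs `--physdev-out` with the i-chain (traffic to the VM) and `--physdev-in` with the o-chain (traffic from the VM).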

Chain neutron-openvswi-FORWARD

$ iptables-save | grep neutron-openvswi-FORWARD

:neutron-openvswi-FORWARD - [0:0]

-A FORWARD -j neutron-openvswi-FORWARD

-A neutron-openvswi-FORWARD -j neutron-openvswi-scope

From this context, neutron-openvswi-FORWARD is jumped to from the FORWARD chain of the filter table.

Chain FORWARD

$ iptables-save | grep FORWARD

-A FORWARD -j neutron-filter-top

-A FORWARD -j neutron-openvswi-FORWARD

So before being forwarded, packets also pass through the neutron-filter-top chain. Let's keep following it.

$ iptables-save | grep neutron-filter-top

:neutron-filter-top - [0:0]

-A neutron-filter-top -j neutron-openvswi-local

$ iptables-save | grep neutron-openvswi-local

:neutron-openvswi-local - [0:0]

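By now I think most readers will see what is going on: when the LVS DR-mode VM forwards packets, the default FORWARD path contains no rule that handles traffic the VM forwards. So I added an ACCEPT rule to neutron-openvswi-local, and the client could finally reach the VirtualServer.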
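To see why a rule in neutron-openvswi-local changes the outcome, here is a deliberately simplified model of the filter-table path, built from the chains captured above with all rules irrelevant to this path removed. Several things are assumptions: the catch-all ACCEPT stands in for the author's rule, whose exact match criteria the post does not show; FORWARD's built-in policy is assumed to be ACCEPT; and the director is assumed to send with the port's MAC FA:16:3E:C4:20:C7.

```python
from typing import Callable, Dict, List, Optional, Tuple

Packet = Dict[str, str]
Rule = Tuple[Callable[[Packet], bool], str]  # (match predicate, target)

TAP = "tap38833ad6-c6"
ALLOWED = {("10.1.26.252", "FA:16:3E:C4:20:C7"), ("10.1.26.144", "FA:16:3E:C4:20:C7")}


def any_packet(p: Packet) -> bool:
    return True


def build_chains(local_accept: bool) -> Dict[str, List[Rule]]:
    """Trimmed copy of the captured chains, keeping only rules on the VM-egress path."""
    return {
        "FORWARD": [(any_packet, "neutron-filter-top"), (any_packet, "neutron-openvswi-FORWARD")],
        "neutron-filter-top": [(any_packet, "neutron-openvswi-local")],
        # Empty in the capture; the fix adds an ACCEPT here (modelled as a catch-all)
        "neutron-openvswi-local": [(any_packet, "ACCEPT")] if local_accept else [],
        "neutron-openvswi-FORWARD": [(lambda p: p["physdev_in"] == TAP, "neutron-openvswi-sg-chain")],
        "neutron-openvswi-sg-chain": [
            (lambda p: p["physdev_in"] == TAP, "neutron-openvswi-o38833ad6-c"),
            (any_packet, "ACCEPT"),
        ],
        "neutron-openvswi-o38833ad6-c": [
            (any_packet, "neutron-openvswi-s38833ad6-c"),
            (lambda p: p["proto"] == "tcp", "RETURN"),
            (any_packet, "neutron-openvswi-sg-fallback"),
        ],
        "neutron-openvswi-s38833ad6-c": [
            (lambda p: (p["src_ip"], p["src_mac"]) in ALLOWED, "RETURN"),
            (any_packet, "DROP"),
        ],
        "neutron-openvswi-sg-fallback": [(any_packet, "DROP")],
    }


def traverse(chains: Dict[str, List[Rule]], name: str, pkt: Packet) -> Optional[str]:
    """Return ACCEPT/DROP on a terminal verdict, or None when the chain returns to its caller."""
    for match, target in chains[name]:
        if not match(pkt):
            continue
        if target in ("ACCEPT", "DROP"):
            return target
        if target == "RETURN":
            return None
        verdict = traverse(chains, target, pkt)  # jump into a user-defined chain
        if verdict is not None:
            return verdict
    return None


def forward_verdict(pkt: Packet, local_accept: bool, policy: str = "ACCEPT") -> str:
    verdict = traverse(build_chains(local_accept), "FORWARD", pkt)
    # Falling off the end of the built-in FORWARD chain applies its policy (assumed ACCEPT)
    return verdict if verdict is not None else policy
```

In this model, a SYN forwarded by the director with the client's source IP is dropped inside the o-chain/s-chain path, while the same packet is accepted once neutron-openvswi-local, which neutron-filter-top reaches first, contains an ACCEPT.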

Reflections

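Because of how LVS DR mode works, the VirtualServer does not answer the client's request itself; it only rewrites the frame header and forwards the request to a RealServer. Seen end to end, this is not the VS making its own request to the RS. When such a packet reaches the host the VS runs on, it naturally goes through the FORWARD chain. Neutron's iptables rules for VMs only restrict the VM's own traffic and contain nothing that handles a VM forwarding packets on someone else's behalf, which is why client connections timed out.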

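Since Neutron offers an HAProxy-based load balancing service and does not encourage building load balancers directly on VMs, I expect problems like this will increasingly be handled through LBaaS instead. In any case, this troubleshooting session gave me a new understanding of Neutron.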