1. References

2. Cluster monitoring metrics analysis

| 指标 | 说明 |

|---|---|

cluster_status |

集群状态,red, yellow 为异常 |

pending_task |

等待任务,超过100 为异常 |

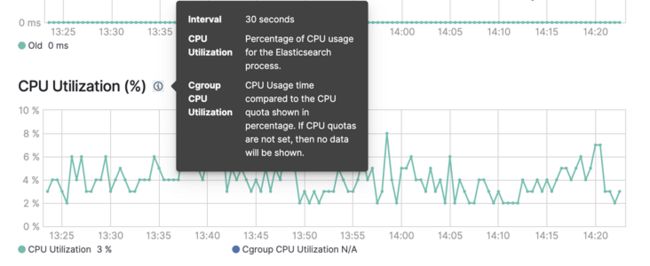

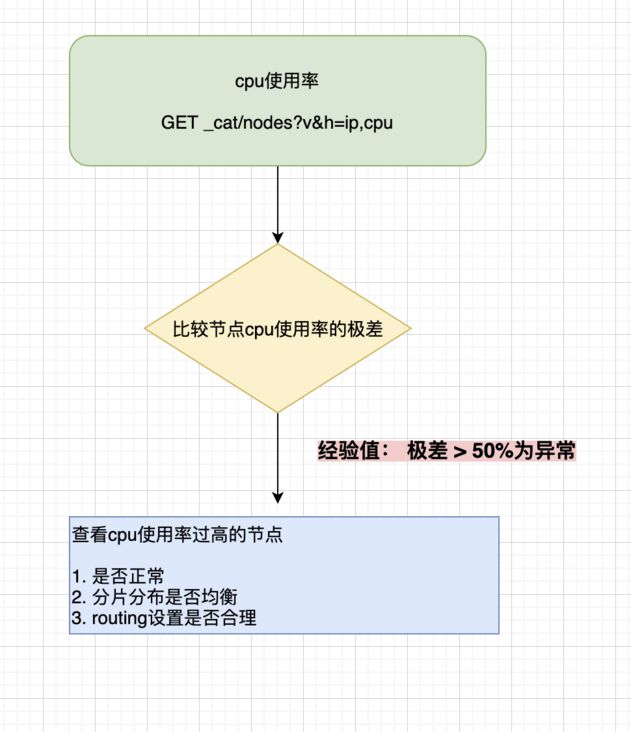

cpu_util |

cpu使用率,各个节点的cpu使用率的极差(最大值减去最小值)大于50%,判断为异常, cpu_util(max) - cp u_util(min) > 50% |

query |

查询次数,各个节点的查询次数极差,超过2,判断为异常 query(max)/query (min) > 2 |

indexing |

indexing_total(stacked) /s > 1000 且 indexing_total / thread_pool_write_completed < 100 |

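2.1 Cluster status

GET _cluster/health

GET _cluster/allocation/explain

2.2 Pending tasks

pending_tasks reflects the cluster-level change tasks that the master node has not yet executed (for example: creating an index, updating a mapping, allocating shards).

In large clusters (high-concurrency scenarios), tasks that cannot be processed in time pile up and the backlog keeps growing. Once the backlog reaches a threshold, an alert may need to be triggered.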
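The backlog check described above can be sketched in Python. This is a minimal sketch, assuming a JSON response with a top-level `tasks` array like the sample shown in this section; the function names and the use of 100 as the default threshold (taken from the metrics table) are illustrative choices, not an official client API.

```python
import json

PENDING_TASK_THRESHOLD = 100  # "more than 100 is abnormal", per the metrics table


def pending_tasks_alert(response_text, threshold=PENDING_TASK_THRESHOLD):
    """Return (task_count, is_abnormal) for a pending-tasks JSON response."""
    tasks = json.loads(response_text).get("tasks", [])
    return len(tasks), len(tasks) > threshold


def oldest_wait_millis(response_text):
    """Longest time any task has waited in the queue, in ms (0 if empty)."""
    tasks = json.loads(response_text).get("tasks", [])
    return max((t["time_in_queue_millis"] for t in tasks), default=0)


# Sample trimmed from the response shown in this section.
sample = json.dumps({"tasks": [
    {"insert_order": 101, "priority": "URGENT", "time_in_queue_millis": 86},
    {"insert_order": 46, "priority": "HIGH", "time_in_queue_millis": 842},
    {"insert_order": 45, "priority": "HIGH", "time_in_queue_millis": 858},
]})
print(pending_tasks_alert(sample))
print(oldest_wait_millis(sample))
```

Tracking the longest wait alongside the count helps tell a short burst from a backlog that is actually stuck.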

Task priority levels are:

public enum Priority {

IMMEDIATE((byte) 0), // immediate

URGENT((byte) 1), // urgent

HIGH((byte) 2), // high

NORMAL((byte) 3), // normal

LOW((byte) 4), // low

LANGUID((byte) 5); // languid (deferred)

}

GET _cluster/pending_tasks

{

"tasks": [

{

"insert_order": 101,

"priority": "URGENT",

"source": "create-index [foo_9], cause [api]",

"time_in_queue_millis": 86,

"time_in_queue": "86ms"

},

{

"insert_order": 46,

"priority": "HIGH",

"source": "shard-started ([foo_2][1], node[tMTocMvQQgGCkj7QDHl3OA], [P], s[INITIALIZING]), reason [after recovery from shard_store]",

"time_in_queue_millis": 842,

"time_in_queue": "842ms"

},

{

"insert_order": 45,

"priority": "HIGH",

"source": "shard-started ([foo_2][0], node[tMTocMvQQgGCkj7QDHl3OA], [P], s[INITIALIZING]), reason [after recovery from shard_store]",

"time_in_queue_millis": 858,

"time_in_queue": "858ms"

}

]

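}

2.3 CPU utilization range

CPU utilization is a key indicator of cluster health; it directly affects cluster throughput and query response time.

If:

(1) CPU utilization is high on many nodes, the cluster has hit a resource bottleneck, and adding resources resolves it.

(2) CPU utilization is too high on only a few nodes, check whether the affected node has

a. too many shards; b. poorly designed query or indexing routing; c. a hardware fault

Note: nodes may have different roles (hot/cold separation), so their CPU utilization differs by design.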

GET _cat/nodes?v&h=ip,cpu

ip cpu

10.15.17.201 2

10.15.17.202 2

10.15.25.194 4
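The range rule `cpu_util(max) - cpu_util(min) > 50%` can be checked directly against the `_cat/nodes` output above. The following is a minimal sketch, assuming whitespace-separated text with a header row (the `v` flag); `parse_cat_table` and `cpu_range` are hypothetical helper names.

```python
def parse_cat_table(text):
    """Parse whitespace-separated _cat output (with header row) into dicts."""
    lines = [ln.split() for ln in text.strip().splitlines() if ln.strip()]
    header, rows = lines[0], lines[1:]
    return [dict(zip(header, row)) for row in rows]


def cpu_range(cat_nodes_text, max_range=50):
    """Return (max - min CPU percent, is_abnormal) across all listed nodes."""
    cpus = [int(row["cpu"]) for row in parse_cat_table(cat_nodes_text)]
    spread = max(cpus) - min(cpus)
    return spread, spread > max_range


sample = """
ip           cpu
10.15.17.201 2
10.15.17.202 2
10.15.25.194 4
"""
print(cpu_range(sample))
```

When node roles differ (hot/cold separation), it is probably better to run the check per role group rather than over the whole cluster.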

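2.4 QPS range across data nodes

Per-node QPS is affected by shard allocation and routing. A large spread means that data is unevenly distributed across the cluster, or that some setting is interfering, so the cluster load is unbalanced.

However, when setting a threshold for this metric, take role separation into account. Because workloads differ, QPS varies enormously from cluster to cluster, so the metric can be normalized to each node's percentage of the instantaneous traffic.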
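A small sketch of both ideas: the `query(max) / query(min) > 2` rule from the cluster metrics table, and the normalization to a percentage of instantaneous traffic. The input is assumed to be a mapping from node name to current QPS; the node names and numbers below are made up for illustration.

```python
def qps_ratio(qps_by_node, max_ratio=2.0):
    """Return (max QPS / min QPS, is_abnormal)."""
    highest = max(qps_by_node.values())
    lowest = min(qps_by_node.values())
    if lowest == 0:
        # An idle node makes the ratio unbounded; flag it only when
        # other nodes are actually serving traffic.
        return float("inf"), highest > 0
    ratio = highest / lowest
    return ratio, ratio > max_ratio


def traffic_share_percent(qps_by_node):
    """Each node's share of the total instantaneous QPS, in percent."""
    total = sum(qps_by_node.values())
    if total == 0:
        return {node: 0.0 for node in qps_by_node}
    return {node: 100.0 * qps / total for node, qps in qps_by_node.items()}


# Hypothetical per-node QPS values.
sample = {"node-a": 300, "node-b": 100, "node-c": 100}
print(qps_ratio(sample))
print(traffic_share_percent(sample))
```

Shares are comparable across clusters with very different absolute QPS, which is why a threshold on the share tends to transfer better than one on raw counts.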

2.5 Number of documents per bulk request
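The indexing rule from the cluster metrics table (`indexing_total /s > 1000` and `indexing_total / thread_pool_write_completed < 100`) suggests flagging clusters that index at a high rate using small bulks. A rough sketch follows, assuming two counter snapshots taken `interval_seconds` apart and using deltas rather than lifetime totals, which is an interpretation rather than something the table states. The dictionary keys reuse the metric names from the table, and `thread_pool_write_completed` is only a proxy for the number of bulk requests.

```python
def bulk_size_check(prev, curr, interval_seconds,
                    min_rate=1000, min_docs_per_bulk=100):
    """Return (docs per second, docs per bulk, is_abnormal)."""
    docs = curr["indexing_total"] - prev["indexing_total"]
    bulks = (curr["thread_pool_write_completed"]
             - prev["thread_pool_write_completed"])
    rate = docs / interval_seconds
    # No completed write tasks in the interval: nothing to judge.
    docs_per_bulk = docs / bulks if bulks else float("inf")
    abnormal = rate > min_rate and docs_per_bulk < min_docs_per_bulk
    return rate, docs_per_bulk, abnormal


# Hypothetical snapshots taken 60 seconds apart.
prev = {"indexing_total": 0, "thread_pool_write_completed": 0}
curr = {"indexing_total": 120000, "thread_pool_write_completed": 6000}
print(bulk_size_check(prev, curr, 60))
```

In this made-up case the cluster indexes 2000 documents per second but only about 20 documents per write task, so the client would likely benefit from larger bulk requests.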

三、node监控指标分析

| 指标 | 说明 |

|---|---|

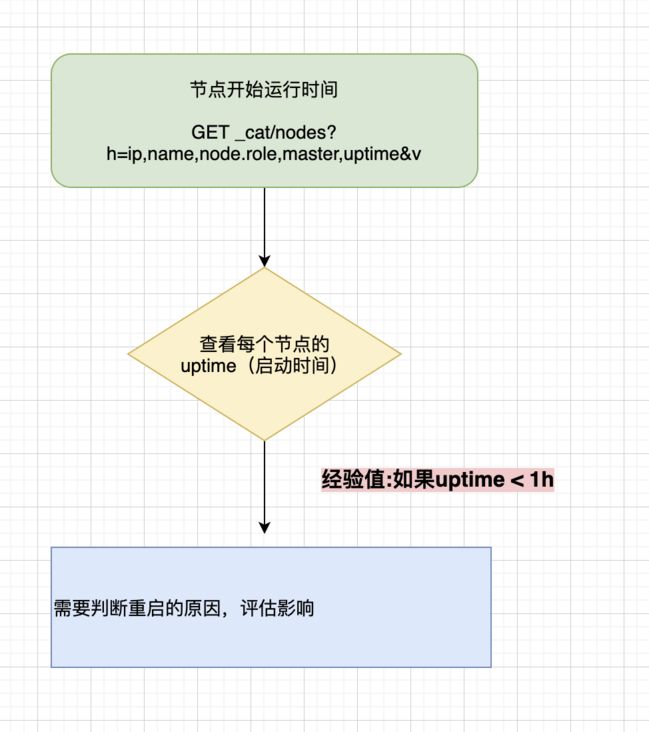

uptime |

节点开始运行的时间 |

free_disk |

磁盘剩余空间 |

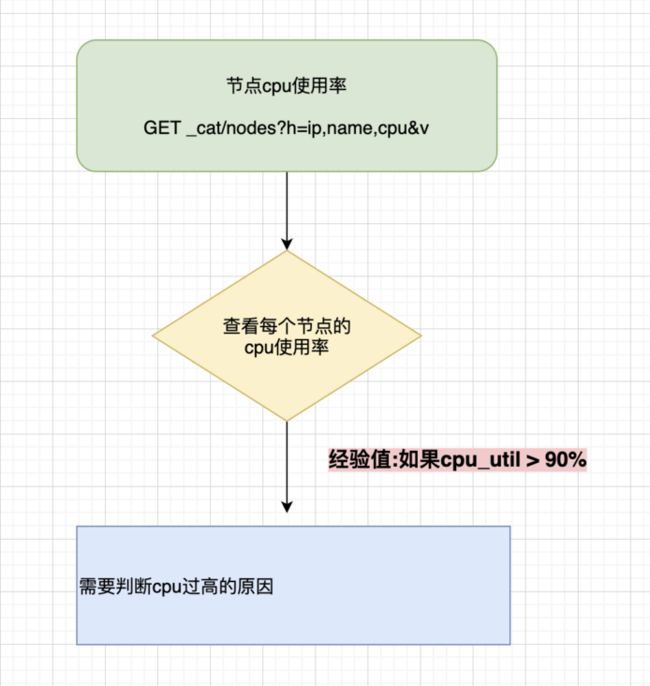

cpu_util |

cpu使用率 |

| 分片数量 | 查看节点分片数量是否是 0 |

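3.1 uptime (restart status)

A node restart often has a large impact on cluster performance: the traffic the node receives becomes abnormal and throughput drops.

In a large cluster, a node restart causes

(1) cache rebuilds, (2) shard relocation,

both of which affect the cluster.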

GET _cat/nodes?h=ip,name,node.role,master,uptime&v

ip name node.role master uptime

172.20.75.201 instance-0000000000 himrst - 14.7d

172.20.76.29 instance-0000000001 himrst * 14.7d

172.20.81.245 tiebreaker-0000000002 mv - 14.7d
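To turn the `uptime` column into a restart alert, the human-readable value has to be converted to seconds first. The sketch below assumes values shaped like `14.7d`, `3.2h`, `12.3m`, `45s` or `500ms`; the one-hour window and the function names are arbitrary illustrative choices.

```python
import re

_UNIT_SECONDS = {"ms": 0.001, "s": 1, "m": 60, "h": 3600, "d": 86400}


def uptime_seconds(value):
    """Convert an uptime string such as '14.7d' into seconds."""
    match = re.fullmatch(r"(\d+(?:\.\d+)?)(ms|s|m|h|d)", value)
    if match is None:
        raise ValueError(f"unrecognized uptime: {value!r}")
    return float(match.group(1)) * _UNIT_SECONDS[match.group(2)]


def recently_restarted(cat_nodes_text, window_seconds=3600):
    """Names of nodes whose uptime is shorter than the window."""
    lines = [ln.split() for ln in cat_nodes_text.strip().splitlines() if ln.strip()]
    header, rows = lines[0], lines[1:]
    name_col, uptime_col = header.index("name"), header.index("uptime")
    return [row[name_col] for row in rows
            if uptime_seconds(row[uptime_col]) < window_seconds]


# Second row is a hypothetical node that restarted about 12 minutes ago.
sample = """
ip            name                node.role master uptime
172.20.75.201 instance-0000000000 himrst    -      14.7d
172.20.76.29  instance-0000000001 himrst    *      12.3m
"""
print(recently_restarted(sample))
```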

3.2 free_disk (free disk space)

# View the disk watermarks

GET /_cluster/settings?include_defaults&flat_settings

"cluster.routing.allocation.disk.watermark.enable_for_single_data_node" : "true",

"cluster.routing.allocation.disk.watermark.flood_stage" : "95%",

"cluster.routing.allocation.disk.watermark.flood_stage.frozen" : "95%",

"cluster.routing.allocation.disk.watermark.flood_stage.frozen.max_headroom" : "20GB",

"cluster.routing.allocation.disk.watermark.high" : "90%",

"cluster.routing.allocation.disk.watermark.low" : "85%",

# View disk usage

GET _cat/allocation?v

shards disk.indices disk.used disk.avail disk.total disk.percent host ip node

48 1.1gb 2.3gb 117.6gb 120gb 1 172.20.75.201 172.20.75.201 instance-0000000000

48 1014.5mb 1.2gb 118.7gb 120gb 1 172.20.76.29 172.20.76.29 instance-0000000001
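The `disk.percent` column can be compared with the watermark settings listed above. This is a minimal sketch, assuming percentage-based watermarks with the values shown (85%, 90%, 95%) and whitespace-separated `_cat/allocation` output; the helper names are hypothetical.

```python
def disk_watermark_level(used_percent, low=85, high=90, flood_stage=95):
    """Classify disk usage against the low/high/flood_stage watermarks."""
    if used_percent > flood_stage:
        return "flood_stage"
    if used_percent > high:
        return "high"
    if used_percent > low:
        return "low"
    return "ok"


def disk_levels(cat_allocation_text):
    """Map node name -> watermark level from _cat/allocation?v output."""
    lines = [ln.split() for ln in cat_allocation_text.strip().splitlines() if ln.strip()]
    header, rows = lines[0], lines[1:]
    levels = {}
    for row in rows:
        if len(row) != len(header):
            continue  # skip rows without disk columns, e.g. an UNASSIGNED row
        record = dict(zip(header, row))
        levels[record["node"]] = disk_watermark_level(int(record["disk.percent"]))
    return levels


sample = """
shards disk.indices disk.used disk.avail disk.total disk.percent host ip node
48 1.1gb 2.3gb 117.6gb 120gb 1 172.20.75.201 172.20.75.201 instance-0000000000
48 1014.5mb 1.2gb 118.7gb 120gb 1 172.20.76.29 172.20.76.29 instance-0000000001
"""
print(disk_levels(sample))
```

Alerting somewhat below the low watermark leaves time to react before shard allocation to the node stops.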

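3.3 cpu_util (node CPU utilization)

Excessive CPU utilization severely degrades cluster performance:

(1) Reads suffer: response times become too long

(2) Writes suffer: write requests cannot be processed in time

However, because business traffic has periodic peaks and troughs, the threshold can be set fairly high (90%).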

GET _cat/nodes?h=ip,name,cpu&v

ip name cpu

172.20.75.201 instance-0000000000 13

172.20.76.29 instance-0000000001 4

172.20.81.245 tiebreaker-0000000002 5

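3.4 Number of shards on a node

If a node has no shards allocated, verify that resources are allocated sensibly.

This usually happens when

(1) the index shard count is set inappropriately

(2) a migration is in progress (an exclude filter is in place)

# View the number of shards per node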

GET _cat/allocation?v

shards disk.indices disk.used disk.avail disk.total disk.percent host ip node

48 1.1gb 2.3gb 117.6gb 120gb 1 172.20.75.201 172.20.75.201 instance-0000000000

48 1014.5mb 1.2gb 118.7gb 120gb 1 172.20.76.29 172.20.76.29 instance-0000000001
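Finding nodes that hold no shards is a simple filter on the `shards` column of the output above. A minimal sketch, assuming whitespace-separated text with a header row and node names without spaces; the third row in the sample is hypothetical.

```python
def nodes_without_shards(cat_allocation_text):
    """Names of nodes whose shard count is 0 in _cat/allocation?v output."""
    lines = [ln.split() for ln in cat_allocation_text.strip().splitlines() if ln.strip()]
    header, rows = lines[0], lines[1:]
    shards_col, node_col = header.index("shards"), header.index("node")
    return [row[node_col] for row in rows
            if len(row) == len(header) and int(row[shards_col]) == 0]


sample = """
shards disk.indices disk.used disk.avail disk.total disk.percent host ip node
48 1.1gb 2.3gb 117.6gb 120gb 1 172.20.75.201 172.20.75.201 instance-0000000000
48 1014.5mb 1.2gb 118.7gb 120gb 1 172.20.76.29 172.20.76.29 instance-0000000001
0 0b 1.2gb 118.8gb 120gb 1 172.20.76.30 172.20.76.30 instance-0000000002
"""
print(nodes_without_shards(sample))
```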

四、shard监控指标分析

| 指标 | 说明 |

|---|---|

number |

分片数量 |

shard_size |

分片大小 |

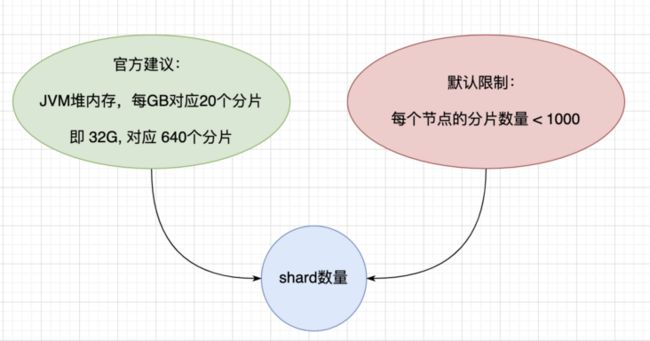

4.1 分片数量

过多或者过少的 shard数量都会影响写入或者查询性能

# 查看分片详情

GET _cat/shards?h=index,shard,prirep,state,docs,store,ip,node&v&s=store:desc

index shard prirep state docs store ip node

elastic-cloud-logs-7-2021.06.03-000001 0 r STARTED 2076953 399.3mb 172.20.75.201 instance-0000000000

elastic-cloud-logs-7-2021.06.03-000001 0 p STARTED 2076953 333.7mb 172.20.76.29 instance-0000000001

.monitoring-es-7-2021.06.17 0 r STARTED 473930 273mb 172.20.76.29 instance-0000000001

.monitoring-es-7-2021.06.16 0 r STARTED 471958 265.9mb 172.20.76.29 instance-0000000001

.monitoring-es-7-2021.06.17 0 p STARTED 473930 257.8mb 172.20.75.201 instance-0000000000

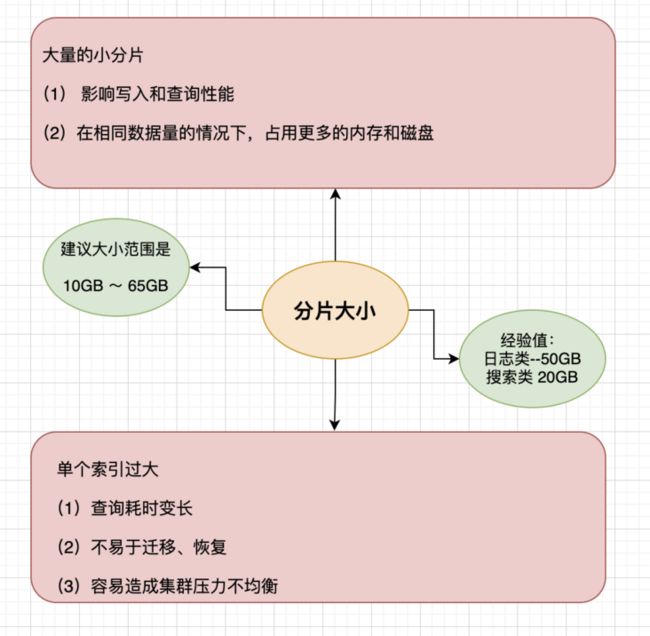

4.2 Shard size

# View shard details

GET _cat/shards?h=index,shard,prirep,state,docs,store,ip,node&v&s=store:desc

index shard prirep state docs store ip node

elastic-cloud-logs-7-2021.06.03-000001 0 r STARTED 2076953 399.3mb 172.20.75.201 instance-0000000000

elastic-cloud-logs-7-2021.06.03-000001 0 p STARTED 2076953 333.7mb 172.20.76.29 instance-0000000001

.monitoring-es-7-2021.06.17 0 r STARTED 473930 273mb 172.20.76.29 instance-0000000001

.monitoring-es-7-2021.06.16 0 r STARTED 471958 265.9mb 172.20.76.29 instance-0000000001

.monitoring-es-7-2021.06.17 0 p STARTED 473930 257.8mb 172.20.75.201 instance-0000000000

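5. Index monitoring metrics analysis

| Metric | Description |
|---|---|
| replica | Whether the index has replicas configured |
| dynamic mapping | Whether the index mapping is dynamic |
| refresh_interval | Index refresh interval |
| max_result_window | Maximum number of documents a single search request can page to (from + size) |

5.1 replica

Without replicas, a node crash may cause data loss.

# number_of_replicas is the replica count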

GET kibana_sample_data_logs/_settings

{

"kibana_sample_data_logs" : {

"settings" : {

"index" : {

"routing" : {

"allocation" : {

"include" : {

"_tier_preference" : "data_content"

}

}

},

"number_of_shards" : "1",

"auto_expand_replicas" : "0-1",

"blocks" : {

"read_only_allow_delete" : "false"

},

"provided_name" : "kibana_sample_data_logs",

"creation_date" : "1622706464015",

"number_of_replicas" : "1",

"uuid" : "KIsQkeGpTpi9-WO0rz-ACw",

"version" : {

"created" : "7130199"

}

}

}

}

}
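A sketch of the replica check, assuming the settings response has already been parsed into a dictionary with the nesting shown above. The second index in the sample is hypothetical, and a missing `number_of_replicas` key is treated as the Elasticsearch default of 1.

```python
def indices_without_replicas(settings_response):
    """Names of indices whose number_of_replicas is 0."""
    missing = []
    for index_name, body in settings_response.items():
        index_settings = body.get("settings", {}).get("index", {})
        # Settings values are returned as strings, e.g. "1".
        replicas = int(index_settings.get("number_of_replicas", "1"))
        if replicas == 0:
            missing.append(index_name)
    return missing


sample = {
    "kibana_sample_data_logs": {
        "settings": {"index": {"number_of_shards": "1", "number_of_replicas": "1"}}
    },
    "hypothetical_index": {
        "settings": {"index": {"number_of_shards": "1", "number_of_replicas": "0"}}
    },
}
print(indices_without_replicas(sample))
```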

5.2 动态mapping

默认值为true

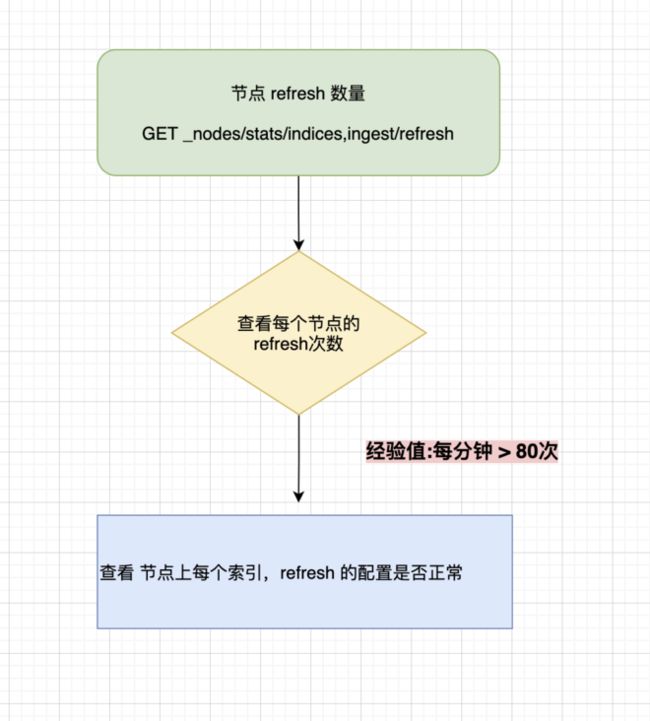

5.3 refresh频率

refresh的频率影响着 segment的生成速度和大小,

segment过多会影响查询性能,且消耗更多的内存和磁盘空间

GET kibana_sample_data_logs/_settings?include_defaults&flat_settings

"index.refresh_interval" : "1s"GET _nodes/stats/indices,ingest/refresh

"indices" : {

"refresh" : {

"total" : 1180173,

"total_time_in_millis" : 18280039,

"external_total" : 1065957,

"external_total_time_in_millis" : 17922710,

"listeners" : 0

}

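},

5.4 Maximum number of documents returned by a single query

GET kibana_sample_data_logs/_settings?include_defaults&flat_settings

"index.max_result_window" : "10000"

6. JVM monitoring metrics analysis

| Metric | Description |
|---|---|
| jvm heap | Heap memory utilization |
| jdk version | Whether the JDK version is consistent across nodes |
| segment memory | Memory used by segments |
| full gc | Number of full GCs |

6.1 JVM heap usage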

GET _nodes/stats/indices,jvm?human

"mem" : {

"heap_used" : "448.1mb",

"heap_used_in_bytes" : 469957120,

"heap_used_percent" : 22,

"heap_committed" : "1.9gb",

"heap_committed_in_bytes" : 2055208960,

"heap_max" : "1.9gb",

"heap_max_in_bytes" : 2055208960,

"non_heap_used" : "286.9mb",

"non_heap_used_in_bytes" : 300867368,

"non_heap_committed" : "294mb",

"non_heap_committed_in_bytes" : 308346880,

"pools" : {

"young" : {

"used" : "136mb",

"used_in_bytes" : 142606336,

"max" : "0b",

"max_in_bytes" : 0,

"peak_used" : "1.1gb",

"peak_used_in_bytes" : 1228931072,

"peak_max" : "0b",

"peak_max_in_bytes" : 0

},

"old" : {

"used" : "290.1mb",

"used_in_bytes" : 304282112,

"max" : "1.9gb",

"max_in_bytes" : 2055208960,

"peak_used" : "584.8mb",

"peak_used_in_bytes" : 613298176,

"peak_max" : "1.9gb",

"peak_max_in_bytes" : 2055208960

},

"survivor" : {

"used" : "22mb",

"used_in_bytes" : 23068672,

"max" : "0b",

"max_in_bytes" : 0,

"peak_used" : "68mb",

"peak_used_in_bytes" : 71303168,

"peak_max" : "0b",

"peak_max_in_bytes" : 0

}

}

}

6.2 JDK version consistency

In a distributed cluster, every node must run the same JDK version.
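The consistency check itself is small once the versions are collected. The sketch below assumes a mapping from node name to JDK version string has already been gathered (for example from the JVM section of the nodes info API; the exact source is an assumption here), and the version strings are made up.

```python
from collections import defaultdict


def jdk_version_groups(jdk_by_node):
    """Group node names by the JDK version they report."""
    groups = defaultdict(list)
    for node, version in jdk_by_node.items():
        groups[version].append(node)
    return dict(groups)


def jdk_consistent(jdk_by_node):
    """True when every node reports the same JDK version."""
    return len(set(jdk_by_node.values())) <= 1


# Hypothetical versions: one node lags behind after a partial upgrade.
sample = {
    "instance-0000000000": "16",
    "instance-0000000001": "16",
    "tiebreaker-0000000002": "15.0.1",
}
print(jdk_consistent(sample))
print(jdk_version_groups(sample))
```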

6.3 Segment memory

GET _nodes/stats/indices,ingest/segments?human

"segments" : {

"count" : 213,

"memory" : "2.3mb",

"memory_in_bytes" : 2504412,

"terms_memory" : "912.8kb",

"terms_memory_in_bytes" : 934720,

"stored_fields_memory" : "104.5kb",

"stored_fields_memory_in_bytes" : 107080,

"term_vectors_memory" : "0b",

"term_vectors_memory_in_bytes" : 0,

"norms_memory" : "15kb",

"norms_memory_in_bytes" : 15424,

"points_memory" : "0b",

"points_memory_in_bytes" : 0,

"doc_values_memory" : "1.3mb",

"doc_values_memory_in_bytes" : 1447188,

"index_writer_memory" : "7.4mb",

"index_writer_memory_in_bytes" : 7806056,

"version_map_memory" : "316b",

"version_map_memory_in_bytes" : 316,

"fixed_bit_set" : "387kb",

"fixed_bit_set_memory_in_bytes" : 396336,

"max_unsafe_auto_id_timestamp" : 1622706879405,

"file_sizes" : { }

}

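6.4 Number of full GCs

G1 is the default garbage collector, so collections are normally young GCs or mixed GCs.

However, if mixed GCs cannot keep up with the allocation rate of new objects, the JVM falls back to a full GC.

G1 has no separate full GC collector; when a full GC is needed it falls back to a serial-old-style collection of the whole heap (single-threaded before JDK 10, parallel since).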
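Because `collection_count` is a cumulative counter, an alert is better based on the increase between two samples than on the absolute value. A minimal sketch, assuming each argument is the parsed `jvm` section of a node stats response and that the `old` collector count is an acceptable proxy for full GCs; both are assumptions, and the sample numbers are invented.

```python
def old_gc_count(jvm_stats):
    """Cumulative old-generation collection count from a jvm stats section."""
    return jvm_stats["gc"]["collectors"]["old"]["collection_count"]


def full_gc_alert(prev_jvm, curr_jvm, max_new_collections=0):
    """Return (new old-gen collections since prev, is_abnormal)."""
    delta = old_gc_count(curr_jvm) - old_gc_count(prev_jvm)
    if delta < 0:
        # Counter went backwards: the node restarted between the samples.
        delta = old_gc_count(curr_jvm)
    return delta, delta > max_new_collections


prev = {"gc": {"collectors": {"old": {"collection_count": 0}}}}
curr = {"gc": {"collectors": {"old": {"collection_count": 2}}}}
print(full_gc_alert(prev, curr))
```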

GET _nodes/stats/indices,jvm?human

"gc" : {

"collectors" : {

"young" : {

"collection_count" : 22324,

"collection_time" : "6.3m",

"collection_time_in_millis" : 378376

},

"old" : {

"collection_count" : 0,

"collection_time" : "0s",

"collection_time_in_millis" : 0

}

}

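},

7. Thread pool monitoring metrics analysis

| Metric | Description |
|---|---|
| bulk reject | Number of rejected write requests |
| search reject | Number of rejected search requests |

7.1 bulk reject (write rejections)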

GET _cat/thread_pool?v&s=rejected:desc

node_name name active queue rejected

instance-0000000000 analyze 0 0 0

instance-0000000000 ccr 0 0 0

instance-0000000000 fetch_shard_started 0 0 0

instance-0000000000 fetch_shard_store 0 0 0

instance-0000000000 flush 0 0 0

instance-0000000000 force_merge 0 0 0

instance-0000000000 generic 0 0 0

instance-0000000000 get 0 0 0

7.2 search reject (search rejections)

GET _cat/thread_pool?v&s=rejected:desc

node_name name active queue rejected

instance-0000000000 analyze 0 0 0

instance-0000000000 ccr 0 0 0

instance-0000000000 fetch_shard_started 0 0 0

instance-0000000000 fetch_shard_store 0 0 0

instance-0000000000 flush 0 0 0

instance-0000000000 force_merge 0 0 0

instance-0000000000 generic 0 0 0

instance-0000000000 get 0 0 0
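Both rejection checks read the same `_cat/thread_pool` output and differ only in the pool name. The sketch below assumes whitespace-separated text with a header row and that the relevant pools are named `write` and `search` (older releases may call the write pool `bulk`, in which case pass `pools=("bulk", "search")`). The sample rows are hypothetical.

```python
def rejected_by_pool(cat_thread_pool_text, pools=("write", "search")):
    """Map (node_name, pool_name) -> rejected count for pools with rejections."""
    lines = [ln.split() for ln in cat_thread_pool_text.strip().splitlines() if ln.strip()]
    header, rows = lines[0], lines[1:]
    result = {}
    for row in rows:
        record = dict(zip(header, row))
        rejected = int(record["rejected"])
        if record["name"] in pools and rejected > 0:
            result[(record["node_name"], record["name"])] = rejected
    return result


# Hypothetical output with rejections on the write pool of one node.
sample = """
node_name           name   active queue rejected
instance-0000000000 write  4      200   37
instance-0000000000 search 1      0     0
instance-0000000001 write  0      0     0
instance-0000000000 get    0      0     0
"""
print(rejected_by_pool(sample))
```

The `rejected` column is cumulative since node start, so in practice the value would be compared between two polls rather than against zero.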