Python: Scraping Mobile and Desktop Wallpapers

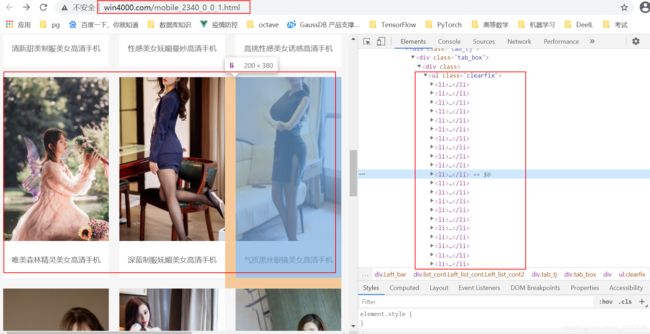

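This time we scrape a wallpaper site. Its pages are entirely static and it has no real anti-scraping measures, which makes it a good practice target for beginners.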

http://www.win4000.com/mobile.html

http://www.win4000.com/wallpaper.html

These are the mobile wallpaper and desktop wallpaper sections, respectively.

Open the mobile wallpaper page, for example, and you will see that it is organized into many tags.

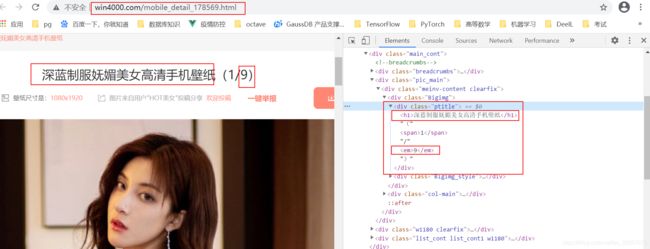

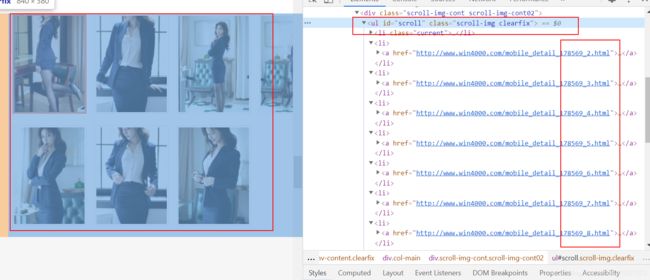

Within a single image set, the URLs of the HTML pages follow a regular pattern:

http://www.win4000.com/mobile_detail_178569_1.html

http://www.win4000.com/mobile_detail_178569_2.html

http://www.win4000.com/mobile_detail_178569_3.html

http://www.win4000.com/mobile_detail_178569_4.html

………
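The URL pattern above can be sketched as a small helper. This is only an illustration: the function name `group_page_urls` is hypothetical, and it assumes that the link to an image set ends in `.html` without a page suffix (for example `mobile_detail_178569.html`), so that the numbered pages are obtained by inserting `_1`, `_2`, and so on before the extension.

```python
def group_page_urls(group_url, count):
    """Return the numbered page URLs of one image set.

    Assumption: group_url ends in '.html' and carries no page suffix yet.
    """
    suffix = '.html'
    if not group_url.endswith(suffix):
        raise ValueError('expected a URL ending in .html: ' + group_url)
    base = group_url[:-len(suffix)]  # drop the trailing '.html'
    # Pages are numbered from 1 up to and including count.
    return ['{}_{}{}'.format(base, i, suffix) for i in range(1, count + 1)]


urls = group_page_urls('http://www.win4000.com/mobile_detail_178569.html', 4)
for u in urls:
    print(u)
```

In a real run, the number of pages would have to be read from the page itself rather than hard-coded.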

That completes the page analysis. Straight to the code:

```python
import os
import time
from concurrent.futures import ThreadPoolExecutor

import requests
from bs4 import BeautifulSoup

root_url = 'http://www.win4000.com/'
save_dir = 'D:/estimages/'

# Request headers that make the script look like a regular browser.
headers = {
    'Referer': root_url,
    'User-Agent': "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36",
    'Accept-Language': 'en-US,en;q=0.8',
    'Cache-Control': 'max-age=0',
    'Connection': 'keep-alive',
}
```

```python
def saveOneImg(dir_path, img_url):
    """Download one image into dir_path. Return True on success."""
    # Send the image URL itself as the Referer on every request, to avoid
    # HTTP 403 responses caused by hotlink protection.
    new_headers = dict(headers, Referer=img_url)
    try:
        img = requests.get(img_url, headers=new_headers, timeout=10)  # fetch the actual image URL
        if img.status_code != 200:
            return False
        # Name the file after the last URL path segment, without the query string.
        name = img_url.split('/')[-1].split('?')[0]
        if not name.lower().endswith('.jpg'):
            name += '.jpg'
        with open(os.path.join(dir_path, name), 'wb') as jpg:  # the with block closes the file
            jpg.write(img.content)
        print(img_url)
        return True
    except Exception as e:
        print('exception occurs: ' + img_url)
        print(e)
        return False
```
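Deriving the local file name from the image URL is the step most likely to go wrong (query strings, percent-encoded characters, missing extensions). A minimal sketch of a more defensive version, using only the standard library, might look like this. The helper name `filename_from_url` and the fallback name `unnamed` are assumptions made for illustration, not part of the original script.

```python
import posixpath
from urllib.parse import unquote, urlsplit


def filename_from_url(img_url, default_ext='.jpg'):
    """Build a local file name from the path component of img_url."""
    path = urlsplit(img_url).path              # drops the query string and fragment
    name = posixpath.basename(unquote(path))   # last path segment, percent-decoded
    if not name:
        name = 'unnamed'                       # hypothetical fallback for URLs ending in '/'
    root, ext = posixpath.splitext(name)
    if not ext:
        name = root + default_ext              # add an extension only when none is present
    return name


print(filename_from_url('http://example.com/a/b/pic.jpg?x=1'))
```

The design choice here is to keep whatever extension the URL already has instead of always appending `.jpg`.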

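```python
def processOnePage(tag, url):
    """Download every image of the image set whose entry page is url."""
    print('current group page is: %s' % url)
    resp = requests.get(url, headers=headers, timeout=10)
    html = BeautifulSoup(resp.text, features='html.parser')
    div = html.find('div', {'class': 'ptitle'})
    title = div.find('h1').get_text()
    num = int(div.find('em').get_text())  # used as the number of pages in the set

    tmpDir = '{}{}'.format(tag, title)
    os.makedirs(tmpDir, exist_ok=True)  # no error if the directory already exists

    for i in range(1, num + 1):
        # 'xxx.html' -> 'xxx_1.html', 'xxx_2.html', ...
        tmpurl = '{}_{}{}'.format(url[:-5], i, '.html')
        resp = requests.get(tmpurl, headers=headers, timeout=10)
        html = BeautifulSoup(resp.text, features='html.parser')
        img = html.find('img', {'class': 'pic-large'})
        if img is not None:  # skip pages without the expected image tag
            saveOneImg(tmpDir, img.get('src'))
```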
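Because the set title is used directly as a directory name, a title containing characters such as `/`, `:` or `?` would make directory creation fail on Windows. The following is a minimal sketch of a sanitizing step. The helper `safe_dir_name` is hypothetical and not part of the original script, and replacing with an underscore is just one possible choice.

```python
import re

# Characters that Windows does not allow in file or directory names,
# plus ASCII control characters.
_ILLEGAL = re.compile(r'[\\/:*?"<>|\x00-\x1f]')


def safe_dir_name(title, replacement='_'):
    """Replace illegal characters and trim trailing dots and spaces."""
    cleaned = _ILLEGAL.sub(replacement, title).strip(' .')
    return cleaned or 'untitled'  # never return an empty name


print(safe_dir_name('sunset: beach/sea?'))
```

Such a helper would be applied to the title before it is joined onto the tag directory.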

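```python
def processPages(tag, a_s):
    """Process every image-set link found on one listing page."""
    for a in a_s:
        processOnePage(tag, a.get('href'))
        time.sleep(1)  # pause between image sets to go easy on the server
```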

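```python
def tagSpiders(tag, url):
    """Walk all listing pages of one tag by following the 'next' link."""
    while True:
        resp = requests.get(url, headers=headers, timeout=10)
        html = BeautifulSoup(resp.text, features='html.parser')
        a_s = html.find('div', {'class': 'tab_box'}).find_all('a')
        processPages(tag, a_s)

        next_link = html.find('a', {'class': 'next'})
        if next_link is None:
            break  # no 'next' link: this was the last page
        url = next_link.get('href')
        time.sleep(1)
```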
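Following a "next" link in a loop has two common pitfalls: the `href` may be relative, and a page may link back to one already visited, which would loop forever. A minimal sketch of a guarded pagination loop is shown here. The names `resolve_next` and `walk_pages`, the `max_pages` cap, and the `example.com` URLs are all assumptions for illustration; the lookup function stands in for the real page fetch.

```python
from urllib.parse import urljoin


def resolve_next(current_url, href):
    """Turn a possibly relative href into an absolute URL, or None."""
    if not href:
        return None
    return urljoin(current_url, href)


def walk_pages(start_url, get_next_href, max_pages=1000):
    """Yield page URLs, stopping at a missing link, a repeat, or the cap."""
    seen = set()
    url = start_url
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        yield url
        url = resolve_next(url, get_next_href(url))


# A fake three-page site: each URL maps to the href of its 'next' link.
fake_site = {
    'http://example.com/list_1.html': '/list_2.html',
    'http://example.com/list_2.html': 'list_3.html',
    'http://example.com/list_3.html': None,
}
pages = list(walk_pages('http://example.com/list_1.html', fake_site.get))
print(pages)
```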

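```python
def getAllTags(taglist):
    """Map each tag's save directory to that tag's listing URL."""
    result = {}
    for tag, url in taglist.items():
        resp = requests.get(url, headers=headers, timeout=10)
        html = BeautifulSoup(resp.text, features='html.parser')
        # The first link in the tag bar is skipped.
        tags = html.find('div', {'class': 'cont1'}).find_all('a')[1:]
        for a in tags:
            result['{}{}/{}/'.format(save_dir, tag, a.get_text())] = a.get('href')
    return result
```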

```python
if __name__ == '__main__':
    # Collect all tags from both sections.
    categories = {
        '手机壁纸': 'http://www.win4000.com/mobile.html',     # mobile wallpapers
        '桌面壁纸': 'http://www.win4000.com/wallpaper.html',  # desktop wallpapers
    }
    taglist = getAllTags(categories)
    print(taglist)

    # One task per tag, run on a pool of at most 40 threads.
    with ThreadPoolExecutor(max_workers=40) as t:
        for tag, url in taglist.items():
            t.submit(tagSpiders, tag, url)

    # Quick test with a single tag:
    # tagSpiders('D:/estimages/手机壁纸/美女/', 'http://www.win4000.com/mobile_2340_0_0_1.html')

    # Leaving the with block waits until all submitted tasks have finished,
    # so no polling loop is needed.
    print('all done')
```

The result looks like this: