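Over the coming weeks I will be studying the Kubernetes source code. To make reading the source and debugging easier, I set up a Kubernetes development and debugging environment, which lets me combine breakpoints with the source to follow how the code actually runs.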

Prepare the virtual machine and install the required software

$ yum install -y docker wget curl vim golang etcd openssl git gcc-c++ gcc # on CentOS/RHEL the C++ compiler package is named gcc-c++, not g++

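# The quoted 'EOF' keeps $PATH, $GOROOT and $GOPATH literal in /etc/profile, so they are expanded at login rather than while the file is being written.

# GOROOT must point to where Go is actually installed: /usr/local/go for the official tarball, /usr/lib/golang for the yum golang package.

$ cat >> /etc/profile <<'EOF'
export GOROOT=/usr/local/go
export GOPATH=/home/workspace
export PATH=$PATH:$GOROOT/bin:$GOPATH/bin
EOF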

$ source /etc/profile

Install the certificate generation tool (cfssl)

$ go get -u -v github.com/cloudflare/cfssl/cmd/...

Install the debugging tool (Delve)

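$ go get -u -v github.com/go-delve/delve/cmd/dlv

Note: either of the two tools above may fail to install, or may be unreachable because of network problems. In that case, download its source code from GitHub into $GOPATH/pkg/github.com, enter the corresponding directory and run make install. On success, the executables are placed in the bin directory under $GOPATH. On Go 1.18 and later, go get no longer installs executables. Use go install with a version suffix instead, for example go install github.com/go-delve/delve/cmd/dlv@latest.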
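Before moving on, it helps to confirm that the tools installed so far are actually reachable on PATH, for example after a fresh login that re-reads /etc/profile. Below is a minimal sketch of such a check. The function name missing_cmds and the example command list are my own choices; they are not part of the Kubernetes scripts.

```sh
# missing_cmds NAME...: print every NAME that is not found on PATH.
# Returns 0 if all commands were found, 1 otherwise.
# (Hypothetical helper for this guide, not part of the Kubernetes scripts.)
missing_cmds() {
  rc=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd"
      rc=1
    fi
  done
  return "$rc"
}

# Example: check the tools this guide relies on.
# missing_cmds go git gcc make docker cfssl cfssljson dlv
```

It prints nothing and succeeds when everything is present, so it can also guard later steps in a script.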

Prepare the source code and build a debug version

Clone the Kubernetes code

$ git clone https://github.com/kubernetes/kubernetes.git $GOPATH/src/k8s.io/kubernetes

$ cd $GOPATH/src/k8s.io/kubernetes

$ git checkout v1.20.1 # check out the v1.20.1 release tag (detached HEAD)

Clone the etcd code

$ git clone https://github.com/etcd-io/etcd.git $GOPATH/src/k8s.io/etcd

$ cd $GOPATH/src/k8s.io/etcd

$ git checkout v3.4.13 # check out the v3.4.13 release tag (detached HEAD)

Run the single-node cluster startup script

$ cd $GOPATH/src/k8s.io/kubernetes

$ ./hack/local-up-cluster.sh # takes a long time, be patient

make: Entering directory `/home/workspace/src/k8s.io/kubernetes'

make[1]: Entering directory `/home/workspace/src/k8s.io/kubernetes'

make[1]: Leaving directory `/home/workspace/src/k8s.io/kubernetes'

make[1]: Entering directory `/home/workspace/src/k8s.io/kubernetes'

+++ [0818 08:46:21] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/prerelease-lifecycle-gen

+++ [0818 08:46:29] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/deepcopy-gen

+++ [0818 08:46:49] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/defaulter-gen

+++ [0818 08:47:10] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/conversion-gen

+++ [0818 08:48:04] Building go targets for linux/amd64:

./vendor/k8s.io/kube-openapi/cmd/openapi-gen

+++ [0818 08:48:35] Building go targets for linux/amd64:

./vendor/github.com/go-bindata/go-bindata/go-bindata

make[1]: Leaving directory `/home/workspace/src/k8s.io/kubernetes'

+++ [0818 08:48:38] Building go targets for linux/amd64:

cmd/kubectl

cmd/kube-apiserver

cmd/kube-controller-manager

cmd/cloud-controller-manager

cmd/kubelet

cmd/kube-proxy

cmd/kube-scheduler

make: Leaving directory `/home/workspace/src/k8s.io/kubernetes'

Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

Kubelet cgroup driver defaulted to use:

/home/workspace/src/k8s.io/kubernetes/third_party/etcd:/usr/lib64/qt-3.3/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/usr/local/go/bin:/home/workspace/bin:/root/bin

etcd version 3.4.13 or greater required.

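You can use 'hack/install-etcd.sh' to install a copy in third_party/.

Fix the problems above: first make sure the Docker daemon is running (e.g. systemctl start docker), then install etcd 3.4.13 into third_party/:

$ ./hack/install-etcd.sh # takes a long time, be patient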

Downloading https://github.com/coreos/etcd/releases/download/v3.4.13/etcd-v3.4.13-linux-amd64.tar.gz succeed

etcd v3.4.13 installed. To use:

export PATH="/home/workspace/src/k8s.io/kubernetes/third_party/etcd:${PATH}"
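The script refused to start because the etcd it found on PATH was older than 3.4.13. Below is a small sketch for checking the version a given etcd binary reports. It assumes that etcd --version prints a line of the form "etcd Version: X.Y.Z", as etcd 3.x does. It also relies on GNU sort -V for version ordering. The helper names are hypothetical.

```sh
# version_ge A B: succeed if version A >= version B (uses GNU sort -V).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# etcd_version_from_output: read "etcd --version" output on stdin and print
# the version number, assuming a line like "etcd Version: 3.4.13".
etcd_version_from_output() {
  awk '/^etcd Version:/ { print $3; exit }'
}

# Example, using the copy installed by hack/install-etcd.sh:
# v=$(./third_party/etcd/etcd --version | etcd_version_from_output)
# version_ge "$v" 3.4.13 && echo "etcd $v is new enough"
```

Version strings are compared with sort -V rather than as plain strings, so that, for example, 3.10.0 correctly sorts after 3.4.13.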

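# If the package above cannot be downloaded (e.g. on an intranet), build etcd manually and create the symlink yourself

$ go env -w GOPROXY=https://goproxy.cn,direct

$ cd $GOPATH/src/k8s.io/etcd

$ go mod vendor

$ make

$ mkdir -p $GOPATH/src/k8s.io/kubernetes/third_party/etcd-v3.4.13-linux-amd64

$ cp bin/etcd $GOPATH/src/k8s.io/kubernetes/third_party/etcd-v3.4.13-linux-amd64/

$ ln -fns etcd-v3.4.13-linux-amd64 $GOPATH/src/k8s.io/kubernetes/third_party/etcd

$ cd $GOPATH/src/k8s.io/kubernetes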
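Whichever route you take, the result should be the layout implied by the hack/install-etcd.sh output above. third_party/etcd is a symlink to the versioned directory, and that directory contains the etcd binary, so putting third_party/etcd on PATH finds it. The sketch below checks exactly that. check_etcd_layout is a hypothetical helper that takes the Kubernetes source root as its argument.

```sh
# check_etcd_layout KUBE_ROOT: verify that KUBE_ROOT/third_party/etcd is a
# symlink whose target directory contains a regular file named "etcd".
check_etcd_layout() {
  link="$1/third_party/etcd"
  if [ ! -L "$link" ]; then
    echo "not a symlink: $link"
    return 1
  fi
  if [ ! -f "$link/etcd" ]; then
    echo "no etcd binary under: $link"
    return 1
  fi
  echo "ok: $link -> $(readlink "$link")"
}

# Example:
# check_etcd_layout "$GOPATH/src/k8s.io/kubernetes"
```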

# Run the script below again

$ ./hack/local-up-cluster.sh -O # requires the Docker service to be running; -O runs the components built last time without recompiling. If everything starts, the single-node cluster is up

skipped the build.

WARNING: You're not using the default seccomp profile

WARNING: bridge-nf-call-iptables is disabled

WARNING: bridge-nf-call-ip6tables is disabled

Kubelet cgroup driver defaulted to use: systemd

/home/workspace/src/k8s.io/kubernetes/third_party/etcd:/usr/lib64/qt-3.3/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/usr/local/go/bin:/home/workspace/bin:/root/bin

API SERVER secure port is free, proceeding...

Detected host and ready to start services. Doing some housekeeping first...

Using GO_OUT /home/workspace/src/k8s.io/kubernetes/_output/bin

Starting services now!

Starting etcd

etcd --advertise-client-urls http://127.0.0.1:2379 --data-dir /tmp/tmp.oUDPwStJvj --listen-client-urls http://127.0.0.1:2379 --log-level=debug > "/tmp/etcd.log" 2>/dev/null

Waiting for etcd to come up.

+++ [0818 09:28:25] On try 2, etcd: : {"health":"true"}

{"header":{"cluster_id":"14841639068965178418","member_id":"10276657743932975437","revision":"2","raft_term":"2"}}

Generating a 2048 bit RSA private key

..........................................+++

........+++

writing new private key to '/var/run/kubernetes/server-ca.key'

-----

Generating a 2048 bit RSA private key

.....................+++

..............................+++

writing new private key to '/var/run/kubernetes/client-ca.key'

-----

Generating a 2048 bit RSA private key

......................+++

...........+++

writing new private key to '/var/run/kubernetes/request-header-ca.key'

-----

2021/08/18 09:28:26 [INFO] generate received request

2021/08/18 09:28:26 [INFO] received CSR

2021/08/18 09:28:26 [INFO] generating key: rsa-2048

2021/08/18 09:28:26 [INFO] encoded CSR

2021/08/18 09:28:26 [INFO] signed certificate with serial number 194466819854548695917808765884807705197966893514

2021/08/18 09:28:26 [INFO] generate received request

2021/08/18 09:28:26 [INFO] received CSR

2021/08/18 09:28:26 [INFO] generating key: rsa-2048

2021/08/18 09:28:26 [INFO] encoded CSR

2021/08/18 09:28:26 [INFO] signed certificate with serial number 260336220793200272831981224847592195960126439635

2021/08/18 09:28:26 [INFO] generate received request

2021/08/18 09:28:26 [INFO] received CSR

2021/08/18 09:28:26 [INFO] generating key: rsa-2048

2021/08/18 09:28:27 [INFO] encoded CSR

2021/08/18 09:28:27 [INFO] signed certificate with serial number 65089740821038541129206557808263443740004405579

2021/08/18 09:28:27 [INFO] generate received request

2021/08/18 09:28:27 [INFO] received CSR

2021/08/18 09:28:27 [INFO] generating key: rsa-2048

2021/08/18 09:28:27 [INFO] encoded CSR

2021/08/18 09:28:27 [INFO] signed certificate with serial number 132854604953276655528110960324766766236121022003

2021/08/18 09:28:27 [INFO] generate received request

2021/08/18 09:28:27 [INFO] received CSR

2021/08/18 09:28:27 [INFO] generating key: rsa-2048

2021/08/18 09:28:28 [INFO] encoded CSR

2021/08/18 09:28:28 [INFO] signed certificate with serial number 509837311282419173394280736377387964609293588759

2021/08/18 09:28:28 [INFO] generate received request

2021/08/18 09:28:28 [INFO] received CSR

2021/08/18 09:28:28 [INFO] generating key: rsa-2048

2021/08/18 09:28:29 [INFO] encoded CSR

2021/08/18 09:28:29 [INFO] signed certificate with serial number 315360946686297324979111988589987330989361014387

2021/08/18 09:28:29 [INFO] generate received request

2021/08/18 09:28:29 [INFO] received CSR

2021/08/18 09:28:29 [INFO] generating key: rsa-2048

2021/08/18 09:28:29 [INFO] encoded CSR

2021/08/18 09:28:29 [INFO] signed certificate with serial number 100953735645209745033637871773067470306482613638

2021/08/18 09:28:29 [INFO] generate received request

2021/08/18 09:28:29 [INFO] received CSR

2021/08/18 09:28:29 [INFO] generating key: rsa-2048

2021/08/18 09:28:30 [INFO] encoded CSR

2021/08/18 09:28:30 [INFO] signed certificate with serial number 719845605910186326782888521350059363008185160184

Waiting for apiserver to come up

+++ [0818 09:28:40] On try 7, apiserver: : ok

clusterrolebinding.rbac.authorization.k8s.io/kube-apiserver-kubelet-admin created

clusterrolebinding.rbac.authorization.k8s.io/kubelet-csr created

Cluster "local-up-cluster" set.

use 'kubectl --kubeconfig=/var/run/kubernetes/admin-kube-aggregator.kubeconfig' to use the aggregated API server

service/kube-dns created

serviceaccount/kube-dns created

configmap/kube-dns created

deployment.apps/kube-dns created

Kube-dns addon successfully deployed.

WARNING : The kubelet is configured to not fail even if swap is enabled; production deployments should disable swap.

2021/08/18 09:28:43 [INFO] generate received request

2021/08/18 09:28:43 [INFO] received CSR

2021/08/18 09:28:43 [INFO] generating key: rsa-2048

2021/08/18 09:28:44 [INFO] encoded CSR

2021/08/18 09:28:44 [INFO] signed certificate with serial number 185139553486611438539303446897387693685807865483

kubelet ( 120208 ) is running.

wait kubelet ready

No resources found

No resources found

No resources found

No resources found

No resources found

No resources found

No resources found

127.0.0.1 NotReady 2s v1.20.1

2021/08/18 09:29:02 [INFO] generate received request

2021/08/18 09:29:02 [INFO] received CSR

2021/08/18 09:29:02 [INFO] generating key: rsa-2048

2021/08/18 09:29:02 [INFO] encoded CSR

2021/08/18 09:29:02 [INFO] signed certificate with serial number 88607895468152274682757139244368051799851531300

Create default storage class for

storageclass.storage.k8s.io/standard created

Local Kubernetes cluster is running. Press Ctrl-C to shut it down.

Logs:

/tmp/kube-apiserver.log

/tmp/kube-controller-manager.log

/tmp/kube-proxy.log

/tmp/kube-scheduler.log

/tmp/kubelet.log

To start using your cluster, you can open up another terminal/tab and run:

export KUBECONFIG=/var/run/kubernetes/admin.kubeconfig

cluster/kubectl.sh

Alternatively, you can write to the default kubeconfig:

export KUBERNETES_PROVIDER=local

cluster/kubectl.sh config set-cluster local --server=https://localhost:6443 --certificate-authority=/var/run/kubernetes/server-ca.crt

cluster/kubectl.sh config set-credentials myself --client-key=/var/run/kubernetes/client-admin.key --client-certificate=/var/run/kubernetes/client-admin.crt

cluster/kubectl.sh config set-context local --cluster=local --user=myself

cluster/kubectl.sh config use-context local

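cluster/kubectl.sh

View the startup parameters and PIDs of the Kubernetes components

$ ps -ef|grep kube|grep -v grep

There are 7 processes in total (kubelet and kube-proxy each appear twice, once as the sudo wrapper and once as the actual process):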

root 119660 119248 10 09:28 pts/4 00:00:50 /home/workspace/src/k8s.io/kubernetes/_output/bin/kube-apiserver --authorization-mode=Node,RBAC --cloud-provider= --cloud-config= --v=3 --vmodule= --audit-policy-file=/tmp/kube-audit-policy-file --audit-log-path=/tmp/kube-apiserver-audit.log --authorization-webhook-config-file= --authentication-token-webhook-config-file= --cert-dir=/var/run/kubernetes --client-ca-file=/var/run/kubernetes/client-ca.crt --kubelet-client-certificate=/var/run/kubernetes/client-kube-apiserver.crt --kubelet-client-key=/var/run/kubernetes/client-kube-apiserver.key --service-account-key-file=/tmp/kube-serviceaccount.key --service-account-lookup=true --service-account-issuer=https://kubernetes.default.svc --service-account-signing-key-file=/tmp/kube-serviceaccount.key --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,Priority,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota --disable-admission-plugins= --admission-control-config-file= --bind-address=0.0.0.0 --secure-port=6443 --tls-cert-file=/var/run/kubernetes/serving-kube-apiserver.crt --tls-private-key-file=/var/run/kubernetes/serving-kube-apiserver.key --storage-backend=etcd3 --storage-media-type=application/vnd.kubernetes.protobuf --etcd-servers=http://127.0.0.1:2379 --service-cluster-ip-range=10.0.0.0/24 --feature-gates=AllAlpha=false --external-hostname=localhost --requestheader-username-headers=X-Remote-User --requestheader-group-headers=X-Remote-Group --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-client-ca-file=/var/run/kubernetes/request-header-ca.crt --requestheader-allowed-names=system:auth-proxy --proxy-client-cert-file=/var/run/kubernetes/client-auth-proxy.crt --proxy-client-key-file=/var/run/kubernetes/client-auth-proxy.key --cors-allowed-origins=/127.0.0.1(:[0-9]+)?$,/localhost(:[0-9]+)?$

root 120034 119248 3 09:28 pts/4 00:00:16 /home/workspace/src/k8s.io/kubernetes/_output/bin/kube-controller-manager --v=3 --vmodule= --service-account-private-key-file=/tmp/kube-serviceaccount.key --service-cluster-ip-range=10.0.0.0/24 --root-ca-file=/var/run/kubernetes/server-ca.crt --cluster-signing-cert-file=/var/run/kubernetes/client-ca.crt --cluster-signing-key-file=/var/run/kubernetes/client-ca.key --enable-hostpath-provisioner=false --pvclaimbinder-sync-period=15s --feature-gates=AllAlpha=false --cloud-provider= --cloud-config= --kubeconfig /var/run/kubernetes/controller.kubeconfig --use-service-account-credentials --controllers=* --leader-elect=false --cert-dir=/var/run/kubernetes --master=https://localhost:6443

root 120036 119248 1 09:28 pts/4 00:00:05 /home/workspace/src/k8s.io/kubernetes/_output/bin/kube-scheduler --v=3 --config=/tmp/kube-scheduler.yaml --feature-gates=AllAlpha=false --master=https://localhost:6443

root 120208 119248 0 09:28 pts/4 00:00:00 sudo -E /home/workspace/src/k8s.io/kubernetes/_output/bin/kubelet --v=3 --vmodule= --chaos-chance=0.0 --container-runtime=docker --hostname-override=127.0.0.1 --cloud-provider= --cloud-config= --bootstrap-kubeconfig=/var/run/kubernetes/kubelet.kubeconfig --kubeconfig=/var/run/kubernetes/kubelet-rotated.kubeconfig --config=/tmp/kubelet.yaml

root 120211 120208 3 09:28 pts/4 00:00:15 /home/workspace/src/k8s.io/kubernetes/_output/bin/kubelet --v=3 --vmodule= --chaos-chance=0.0 --container-runtime=docker --hostname-override=127.0.0.1 --cloud-provider= --cloud-config= --bootstrap-kubeconfig=/var/run/kubernetes/kubelet.kubeconfig --kubeconfig=/var/run/kubernetes/kubelet-rotated.kubeconfig --config=/tmp/kubelet.yaml

root 120957 119248 0 09:29 pts/4 00:00:00 sudo /home/workspace/src/k8s.io/kubernetes/_output/bin/kube-proxy --v=3 --config=/tmp/kube-proxy.yaml --master=https://localhost:6443

root 120980 120957 0 09:29 pts/4 00:00:00 /home/workspace/src/k8s.io/kubernetes/_output/bin/kube-proxy --v=3 --config=/tmp/kube-proxy.yaml --master=https://localhost:6443

Configure remote debugging

I share the source files remotely with Samba. Setting up Samba is not covered here; plenty of guides are available online.

Open the Kubernetes source code from the VM in GoLand on Windows or Linux.

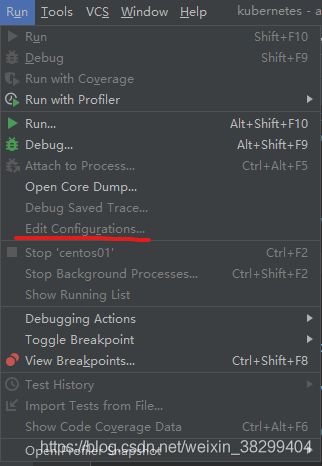

Main menu->Run->Edit Configurations…

If Edit Configurations… is grayed out and cannot be clicked, click the Debug button instead and choose Edit Configurations… in the pop-up.

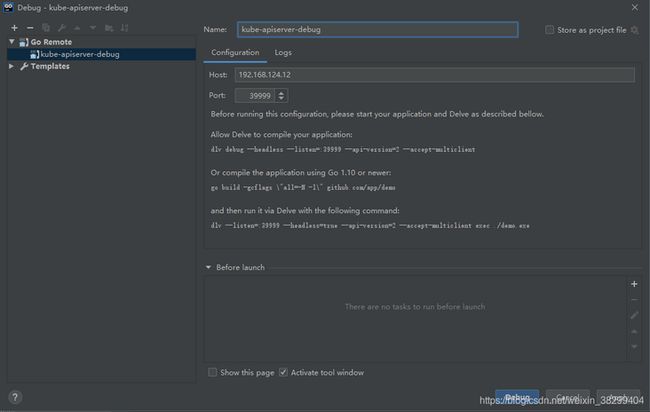

Click + and choose Go Remote.

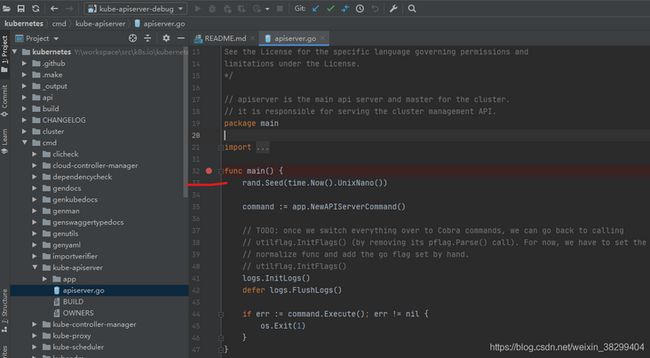

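Give the configuration any Name you like. Host is the IP address of the VM, and Port is the port the dlv server will listen on later. The dialog also shows the matching dlv start command in the middle. We will use that command shortly to launch a kube-apiserver binary built with debug information. Next, set a breakpoint in the main function of kubernetes/cmd/kube-apiserver/apiserver.go (any other location works as well).

Save the code changes and breakpoints made above. Then run the following command to build a DEBUG version of just this one component, using kube-apiserver as the example: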
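The Go Remote configuration only attaches to a running dlv server, so what matters is how the binary launched by dlv was built. The Kubernetes build scripts strip debug information by default (the linker flags default to -s -w), which is why the make command used for the debug build passes GOLDFLAGS="". Optionally, GOGCFLAGS="all=-N -l" also disables compiler optimizations and inlining, which tends to make stepping and variable inspection more reliable.

The helper below is a hypothetical wrapper around both settings. It assumes that the v1.20 build scripts forward GOGCFLAGS to go build -gcflags, as the Makefile help text describes, so check hack/lib/golang.sh in your checkout before relying on it.

```sh
# build_debug_component WHAT: rebuild one component (e.g. cmd/kube-apiserver)
# with debug information kept and optimizations disabled.
# Run from the Kubernetes source root. MAKE may be overridden (e.g. MAKE=echo)
# to print the make arguments instead of building.
# Hypothetical helper; GOGCFLAGS support is an assumption (see hack/lib/golang.sh).
build_debug_component() {
  what=${1:?usage: build_debug_component cmd/<component>}
  ${MAKE:-make} GOLDFLAGS="" GOGCFLAGS="all=-N -l" WHAT="$what"
}

# Example:
# build_debug_component cmd/kube-apiserver
```

The all= prefix applies the flags to every package in the build, not only the main package. This matters because most kube-apiserver logic lives in library packages.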

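$ make GOLDFLAGS="" WHAT="cmd/kube-apiserver"

Run the single-node cluster startup script again to debug kube-apiserver:

$ ./hack/local-up-cluster.sh -O # starts the single-node cluster directly; do not drop -O, otherwise all components are rebuilt and the debug build of kube-apiserver is overwritten

In another terminal (the script keeps running in the foreground), stop the kube-apiserver process manually: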

$ ps -ef|grep kube-apiserver|grep -v grep

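$ kill -15 119660 # use the kube-apiserver PID printed by the previous command; 119660 is the PID from the earlier listing

$ ps -ef|grep kube-apiserver|grep -v grep # should print nothing once the process has exited

Start the kube-apiserver process with dlv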

$ dlv --listen=:39999 --headless=true --api-version=2 exec /home/workspace/src/k8s.io/kubernetes/_output/bin/kube-apiserver -- --authorization-mode=Node,RBAC --cloud-provider= --cloud-config= --v=3 --vmodule= --audit-policy-file=/tmp/kube-audit-policy-file --audit-log-path=/tmp/kube-apiserver-audit.log --authorization-webhook-config-file= --authentication-token-webhook-config-file= --cert-dir=/var/run/kubernetes --client-ca-file=/var/run/kubernetes/client-ca.crt --kubelet-client-certificate=/var/run/kubernetes/client-kube-apiserver.crt --kubelet-client-key=/var/run/kubernetes/client-kube-apiserver.key --service-account-key-file=/tmp/kube-serviceaccount.key --service-account-lookup=true --service-account-issuer=https://kubernetes.default.svc --service-account-signing-key-file=/tmp/kube-serviceaccount.key --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,Priority,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota --disable-admission-plugins= --admission-control-config-file= --bind-address=0.0.0.0 --secure-port=6443 --tls-cert-file=/var/run/kubernetes/serving-kube-apiserver.crt --tls-private-key-file=/var/run/kubernetes/serving-kube-apiserver.key --storage-backend=etcd3 --storage-media-type=application/vnd.kubernetes.protobuf --etcd-servers=http://127.0.0.1:2379 --service-cluster-ip-range=10.0.0.0/24 --feature-gates=AllAlpha=false --external-hostname=localhost --requestheader-username-headers=X-Remote-User --requestheader-group-headers=X-Remote-Group --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-client-ca-file=/var/run/kubernetes/request-header-ca.crt --requestheader-allowed-names=system:auth-proxy --proxy-client-cert-file=/var/run/kubernetes/client-auth-proxy.crt --proxy-client-key-file=/var/run/kubernetes/client-auth-proxy.key --cors-allowed-origins='/127.0.0.1(:[0-9]+)?$,/localhost(:[0-9]+)?$'

This dlv command line already appeared once in the remote debugging configuration dialog, and the port after --listen must match the Port configured there. What follows exec is the kube-apiserver binary, and everything after -- is passed to kube-apiserver as its startup arguments. These arguments were copied from the output of ps -ef|grep kube-apiserver|grep -v grep. One change is needed when launching them through dlv, as shown above: the value of --cors-allowed-origins must be wrapped in single quotes. It contains (, ) and $, which the shell would otherwise interpret.
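Copying the arguments by hand and re-quoting them is error-prone. Here is a sketch of an alternative, assuming bash 4.4 or later (for mapfile -d) and that kube-apiserver is still running. Read its arguments from /proc/<pid>/cmdline, where Linux stores them NUL-separated, into a bash array before stopping the process. Then pass that array to dlv. Each argument stays a separate array element, so values such as the --cors-allowed-origins regex need no extra quoting. load_cmdline is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Requires bash >= 4.4 (mapfile -d).
# load_cmdline FILE ARRAY: read a NUL-separated argument list (the format of
# /proc/<pid>/cmdline) into the global array named ARRAY, one element per argument.
load_cmdline() {
  mapfile -d '' -t "$2" < "$1"
}

# Example (as root, while the cluster is up and before dlv is started):
# pid=$(pgrep -f '_output/bin/kube-apiserver' | head -n 1)
# load_cmdline "/proc/$pid/cmdline" apiserver_cmd   # capture before stopping it
# kill -15 "$pid"
# dlv --listen=:39999 --headless=true --api-version=2 \
#   exec "${apiserver_cmd[0]}" -- "${apiserver_cmd[@]:1}"
```

Element 0 is the binary path and the remaining elements are its flags, which maps directly onto dlv's exec <binary> -- <args> form.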