A Detailed Walkthrough of the Faster R-CNN Prediction Process

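After reading through the faster-rcnn code I wanted to write a blog post about it, partly as notes for myself and partly to share. I have been putting it off for a while, so today I am finally writing it up.

This post only covers the forward (prediction) pass of faster-rcnn and the files and functions involved. For training, the loss functions, and the detailed implementations of RoI pooling, NMS and the various helper functions, please read the source code and other related blog posts.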

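Blog posts about simple-faster-rcnn

simple-faster-rcnn source code

My own annotated version of simple-faster-rcnn (the code may contain some changes, so please do not run it directly)

The original Faster R-CNN paper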

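A brief introduction

As a classic two-stage detector in object detection, Faster R-CNN not only shows up in all kinds of papers; its ideas have also shaped many later two-stage detectors. It is therefore worth understanding the implementation in detail, and for me it is also a good chance to study a complete project. The simple-faster-rcnn project I chose for this post is a simplified implementation of faster-rcnn; as the author writes on GitHub, "Simple is better than complex, Flat is better than nested". The project is built on PyTorch, so you need to be familiar with basic PyTorch usage. OK, enough preamble, let's get to the point.

The individual components

This part is fairly long. It introduces the functions and classes in each of the main files. If you would rather skip it, scroll down to the prediction process section for the overall picture.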

bbox_tools

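This file contains four functions: loc2bbox, bbox2loc, bbox_iou and generate_anchor_base.

loc2bbox applies the input offsets to the source boxes (for example the anchors) to produce the target boxes; bbox2loc does the reverse and computes the offsets between a source box and a target box. The former is clearly used at prediction time (the rpn and the head each predict offsets for their bboxes, which are then applied to the original bboxes), while the latter is used for training (it measures the gap between the generated bboxes and the ground truth, which feeds the loss).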
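As a rough illustration of what these two functions do, here is a minimal NumPy sketch. It assumes boxes are stored as (y_min, x_min, y_max, x_max) and offsets as (dy, dx, dh, dw), following the parameterisation in the Faster R-CNN paper. The names `loc2bbox_sketch` and `bbox2loc_sketch` are mine, and the repository's functions may differ in details.

```python
import numpy as np


def _centre_form(bbox):
    """Return (height, width, centre_y, centre_x) for (y_min, x_min, y_max, x_max) boxes."""
    h = bbox[:, 2] - bbox[:, 0]
    w = bbox[:, 3] - bbox[:, 1]
    return h, w, bbox[:, 0] + 0.5 * h, bbox[:, 1] + 0.5 * w


def loc2bbox_sketch(src_bbox, loc):
    """Apply offsets (dy, dx, dh, dw) to the source boxes."""
    h, w, cy, cx = _centre_form(src_bbox)
    # Centre shifts are relative to the box size; sizes are scaled by exp().
    new_cy = loc[:, 0] * h + cy
    new_cx = loc[:, 1] * w + cx
    new_h = np.exp(loc[:, 2]) * h
    new_w = np.exp(loc[:, 3]) * w
    return np.stack([new_cy - 0.5 * new_h, new_cx - 0.5 * new_w,
                     new_cy + 0.5 * new_h, new_cx + 0.5 * new_w], axis=1)


def bbox2loc_sketch(src_bbox, dst_bbox):
    """Compute the offsets that would turn src_bbox into dst_bbox."""
    h, w, cy, cx = _centre_form(src_bbox)
    dst_h, dst_w, dst_cy, dst_cx = _centre_form(dst_bbox)
    return np.stack([(dst_cy - cy) / h, (dst_cx - cx) / w,
                     np.log(dst_h / h), np.log(dst_w / w)], axis=1)


src = np.array([[0., 0., 10., 10.]])
dst = np.array([[2., 1., 12., 21.]])
loc = bbox2loc_sketch(src, dst)        # what training would regress towards
restored = loc2bbox_sketch(src, loc)   # what prediction does with the offsets
print(loc)
print(restored)
```

The round trip (encode with bbox2loc, decode with loc2bbox) recovers the target box, which is why the two functions can be used as a pair for training and prediction.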

bbox_iou computes the IoU between bboxes and is used later during training.
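For reference, pairwise IoU can be sketched in a few lines of NumPy. This is an illustrative version under the assumption that boxes are (y_min, x_min, y_max, x_max); the name `bbox_iou_sketch` is hypothetical and the repository's function may be written differently.

```python
import numpy as np


def bbox_iou_sketch(bbox_a, bbox_b):
    """Return an (N, K) matrix of IoUs between N boxes in bbox_a and K boxes in bbox_b."""
    # Top-left and bottom-right corners of every pairwise intersection.
    tl = np.maximum(bbox_a[:, None, :2], bbox_b[None, :, :2])
    br = np.minimum(bbox_a[:, None, 2:], bbox_b[None, :, 2:])
    # The mask zeroes out pairs that do not overlap at all.
    inter = np.prod(br - tl, axis=2) * (tl < br).all(axis=2)
    area_a = np.prod(bbox_a[:, 2:] - bbox_a[:, :2], axis=1)
    area_b = np.prod(bbox_b[:, 2:] - bbox_b[:, :2], axis=1)
    return inter / (area_a[:, None] + area_b[None, :] - inter)


a = np.array([[0., 0., 10., 10.]])
b = np.array([[0., 0., 10., 10.],      # identical box
              [5., 5., 15., 15.],      # partial overlap
              [20., 20., 30., 30.]])   # no overlap
iou = bbox_iou_sketch(a, b)
print(iou)
```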

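generate_anchor_base generates the base anchors, with different sizes and aspect ratios, at the position in the original image that corresponds to the first pixel of the convolutional feature map (9 of them in the paper: three scales and three aspect ratios, 3×3=9).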

import numpy as np
import six


def generate_anchor_base(base_size=16, ratios=[0.5, 1, 2],
                         anchor_scales=[8, 16, 32]):
    # Centre of the reference window (the first feature-map cell).
    py = base_size / 2.
    px = base_size / 2.

    anchor_base = np.zeros((len(ratios) * len(anchor_scales), 4),
                           dtype=np.float32)
    for i in six.moves.range(len(ratios)):
        for j in six.moves.range(len(anchor_scales)):
            h = base_size * anchor_scales[j] * np.sqrt(ratios[i])
            w = base_size * anchor_scales[j] * np.sqrt(1. / ratios[i])

            index = i * len(anchor_scales) + j
            # Each row is (y_min, x_min, y_max, x_max).
            anchor_base[index, 0] = py - h / 2.
            anchor_base[index, 1] = px - w / 2.
            anchor_base[index, 2] = py + h / 2.
            anchor_base[index, 3] = px + w / 2.
    return anchor_base
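To make the nine base anchors concrete, the following short sketch repeats only the height and width arithmetic from the function above and prints the result for the default arguments. The variable `anchor_sizes` is just for illustration.

```python
import numpy as np

base_size = 16
ratios = [0.5, 1, 2]
anchor_scales = [8, 16, 32]

anchor_sizes = []
for ratio in ratios:
    for scale in anchor_scales:
        # Same formulas as in generate_anchor_base: h / w equals the ratio,
        # and h * w equals (base_size * scale) ** 2 whatever the ratio is.
        h = base_size * scale * np.sqrt(ratio)
        w = base_size * scale * np.sqrt(1. / ratio)
        anchor_sizes.append((h, w))
        print("ratio=%.1f scale=%2d -> h=%7.2f w=%7.2f" % (ratio, scale, h, w))
```

So the ratio only changes the shape of an anchor, while the scale fixes its area: scale 8 always gives an area of 128×128 pixels, scale 16 gives 256×256, and scale 32 gives 512×512.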

creator_tool

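This file contains three classes: ProposalTargetCreator, AnchorTargetCreator and ProposalCreator. The first two are mainly used later for training the rpn and the head; the last one is used in the rpn's prediction pass. ProposalCreator's main job is to apply the input offsets to the anchors to get bboxes, run NMS on them using each bbox's score to remove redundant boxes, and return the remaining bboxes as the RoIs.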

class ProposalCreator:
    # import in region_proposal_network
    # unNOTE: I'll make it undifferential
    # unTODO: make sure it's ok
    # It's ok
    """Proposal regions are generated by calling this object.

    The :meth:`__call__` of this object outputs object detection proposals by
    applying estimated bounding box offsets
    to a set of anchors.

    This class takes parameters to control number of bounding boxes to
    pass to NMS and keep after NMS.
    If the parameters are negative, it uses all the bounding boxes supplied
    or keep all the bounding boxes returned by NMS.

    This class is used for Region Proposal Networks introduced in
    Faster R-CNN [#]_.

    .. [#] Shaoqing Ren, Kaiming He, Ross Girshick, Jian Sun. \
    Faster R-CNN: Towards Real-Time Object Detection with \
    Region Proposal Networks. NIPS 2015.

    Args:
        nms_thresh (float): Threshold value used when calling NMS.
        n_train_pre_nms (int): Number of top scored bounding boxes
            to keep before passing to NMS in train mode.
        n_train_post_nms (int): Number of top scored bounding boxes
            to keep after passing to NMS in train mode.
        n_test_pre_nms (int): Number of top scored bounding boxes
            to keep before passing to NMS in test mode.
        n_test_post_nms (int): Number of top scored bounding boxes
            to keep after passing to NMS in test mode.
        force_cpu_nms (bool): If this is :obj:`True`,
            always use NMS in CPU mode. If :obj:`False`,
            the NMS mode is selected based on the type of inputs.
        min_size (int): A parameter to determine the threshold on
            discarding bounding boxes based on their sizes.

    """

    def __init__(self,
                 parent_model,
                 nms_thresh=0.7,
                 n_train_pre_nms=12000,
                 n_train_post_nms=2000,
                 n_test_pre_nms=6000,
                 n_test_post_nms=300,
                 min_size=16
                 ):
        self.parent_model = parent_model
        self.nms_thresh = nms_thresh
        self.n_train_pre_nms = n_train_pre_nms
        self.n_train_post_nms = n_train_post_nms
        self.n_test_pre_nms = n_test_pre_nms
        self.n_test_post_nms = n_test_post_nms
        self.min_size = min_size

    def __call__(self, loc, score,
                 anchor, img_size, scale=1.):
        """input should be ndarray

        Propose RoIs.

        Inputs :obj:`loc, score, anchor` refer to the same anchor when indexed
        by the same index.

        On notations, :math:`R` is the total number of anchors. This is equal
        to product of the height and the width of an image and the number of
        anchor bases per pixel.

        Type of the output is same as the inputs.

        Args:
            loc (array): Predicted offsets and scaling to anchors.
                Its shape is :math:`(R, 4)`.
            score (array): Predicted foreground probability for anchors.
                Its shape is :math:`(R,)`.
            anchor (array): Coordinates of anchors. Its shape is
                :math:`(R, 4)`.
            img_size (tuple of ints): A tuple :obj:`height, width`,
                which contains image size after scaling.
            scale (float): The scaling factor used to scale an image after
                reading it from a file.

        Returns:
            array:
            An array of coordinates of proposal boxes.
            Its shape is :math:`(S, 4)`. :math:`S` is less than
            :obj:`self.n_test_post_nms` in test time and less than
            :obj:`self.n_train_post_nms` in train time. :math:`S` depends on
            the size of the predicted bounding boxes and the number of
            bounding boxes discarded by NMS.

        """
        # NOTE: when test, remember
        # faster_rcnn.eval()
        # to set self.training = False
        if self.parent_model.training:
            n_pre_nms = self.n_train_pre_nms
            n_post_nms = self.n_train_post_nms
        else:
            n_pre_nms = self.n_test_pre_nms
            n_post_nms = self.n_test_post_nms

        # Convert anchors into proposal via bbox transformations.
        # roi = loc2bbox(anchor, loc)
        roi = loc2bbox(anchor, loc)

        # Clip predicted boxes to image.
        # img_size is (height, width): y coordinates (columns 0 and 2) are
        # clipped to the height, x coordinates (columns 1 and 3) to the width.
        roi[:, slice(0, 4, 2)] = np.clip(
            roi[:, slice(0, 4, 2)], 0, img_size[0])
        roi[:, slice(1, 4, 2)] = np.clip(
            roi[:, slice(1, 4, 2)], 0, img_size[1])

        # Remove predicted boxes with either height or width < threshold.
        min_size = self.min_size * scale
        hs = roi[:, 2] - roi[:, 0]
        ws = roi[:, 3] - roi[:, 1]
        # Keep only the RoIs that meet the minimum size.
        keep = np.where((hs >= min_size) & (ws >= min_size))[0]
        roi = roi[keep, :]
        score = score[keep]

        # Sort all (proposal, score) pairs by score from highest to lowest.
        # Take top pre_nms_topN (e.g. 6000).
        order = score.ravel().argsort()[::-1]
        if n_pre_nms > 0:
            order = order[:n_pre_nms]
        roi = roi[order, :]

        # Apply nms (e.g. threshold = 0.7).
        # Take after_nms_topN (e.g. 300).

        # unNOTE: something is wrong here!
        # TODO: remove cuda.to_gpu
        keep = non_maximum_suppression(
            cp.ascontiguousarray(cp.asarray(roi)),
            thresh=self.nms_thresh)
        if n_post_nms > 0:
            keep = keep[:n_post_nms]
        roi = roi[keep]
        return roi
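The NMS step itself is not shown in this post. The code above calls a GPU implementation, `non_maximum_suppression`, on RoIs that are already sorted by score. As a rough idea of what greedy NMS does, here is a plain NumPy sketch; the function name `nms_sketch` and its explicit `scores` argument are my own choices for illustration and are not the repository's interface.

```python
import numpy as np


def nms_sketch(boxes, scores, thresh):
    """Greedy NMS on (y_min, x_min, y_max, x_max) boxes; returns kept indices."""
    order = scores.argsort()[::-1]      # highest score first
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # IoU between the current best box and every remaining box.
        tl = np.maximum(boxes[i, :2], boxes[rest, :2])
        br = np.minimum(boxes[i, 2:], boxes[rest, 2:])
        inter = np.prod(np.clip(br - tl, 0, None), axis=1)
        area_i = np.prod(boxes[i, 2:] - boxes[i, :2])
        area_rest = np.prod(boxes[rest, 2:] - boxes[rest, :2], axis=1)
        iou = inter / (area_i + area_rest - inter)
        # Drop every box that overlaps the current one too much.
        order = rest[iou <= thresh]
    return np.array(keep, dtype=np.int64)


boxes = np.array([[0., 0., 10., 10.],
                  [1., 1., 11., 11.],     # IoU with the first box is about 0.68
                  [20., 20., 30., 30.]])
scores = np.array([0.9, 0.8, 0.7])
keep_strict = nms_sketch(boxes, scores, thresh=0.5)
keep_loose = nms_sketch(boxes, scores, thresh=0.7)
print(keep_strict, keep_loose)
```

With a threshold of 0.5 the second box is suppressed by the first; with the RPN's default of 0.7 it survives, which shows how the threshold controls how many overlapping proposals are passed on.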

faster-rcnn

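As the name suggests, this file holds the core faster-rcnn logic. It specifies which structures are used for the extractor (the part of the network that runs the convolutions on the image and produces the feature map on which the anchors are generated), the rpn and the head, and it defines the forward pass and the prediction procedure of the whole network. The class also defines a _suppress function, which runs NMS over all the bboxes predicted by the network to obtain the final result. (Note that this class does not define the concrete structure of components such as the rpn or the extractor. Those are defined in a subclass of this class in faster-rcnn-vgg16, which means you can rewrite the contents of faster-rcnn-vgg16 to build a faster-rcnn on a different backbone.)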

class FasterRCNN(nn.Module):
    """Base class for Faster R-CNN.

    This is a base class for Faster R-CNN links supporting object detection
    API [#]_. The following three stages constitute Faster R-CNN.

    1. **Feature extraction**: Images are taken and their \
        feature maps are calculated.
    2. **Region Proposal Networks**: Given the feature maps calculated in \
        the previous stage, produce set of RoIs around objects.
    3. **Localization and Classification Heads**: Using feature maps that \
        belong to the proposed RoIs, classify the categories of the objects \
        in the RoIs and improve localizations.

    Each stage is carried out by one of the callable
    :class:`torch.nn.Module` objects :obj:`feature`, :obj:`rpn` and :obj:`head`.

    There are two functions :meth:`predict` and :meth:`__call__` to conduct
    object detection.
    :meth:`predict` takes images and returns bounding boxes that are converted
    to image coordinates. This will be useful for a scenario when
    Faster R-CNN is treated as a black box function, for instance.
    :meth:`__call__` is provided for a scenario when intermediate outputs
    are needed, for instance, for training and debugging.

    Links that support object detection API have method :meth:`predict` with
    the same interface. Please refer to :meth:`predict` for
    further details.

    .. [#] Shaoqing Ren, Kaiming He, Ross Girshick, Jian Sun. \
    Faster R-CNN: Towards Real-Time Object Detection with \
    Region Proposal Networks. NIPS 2015.

    Args:
        extractor (nn.Module): A module that takes a BCHW image
            array and returns feature maps.
        rpn (nn.Module): A module that has the same interface as
            :class:`model.region_proposal_network.RegionProposalNetwork`.
            Please refer to the documentation found there.
        head (nn.Module): A module that takes
            a BCHW variable, RoIs and batch indices for RoIs. This returns class
            dependent localization parameters and class scores.
        loc_normalize_mean (tuple of four floats): Mean values of
            localization estimates.
        loc_normalize_std (tuple of four floats): Standard deviation
            of localization estimates.

    """

    def __init__(self, extractor, rpn, head,
                 loc_normalize_mean=(0., 0., 0., 0.),
                 loc_normalize_std=(0.1, 0.1, 0.2, 0.2)
                 ):
        super(FasterRCNN, self).__init__()
        self.extractor = extractor
        self.rpn = rpn
        self.head = head

        # mean and std
        self.loc_normalize_mean = loc_normalize_mean
        self.loc_normalize_std = loc_normalize_std
        self.use_preset('evaluate')

    @property
    def n_class(self):
        # Total number of classes including the background.
        return self.head.n_class

    def forward(self, x, scale=1.):
        """Forward Faster R-CNN.

        Scaling parameter :obj:`scale` is used by RPN to determine the
        threshold to select small objects, which are going to be
        rejected irrespective of their confidence scores.

        Here are notations used.

        * :math:`N` is the number of batch size
        * :math:`R'` is the total number of RoIs produced across batches. \
            Given :math:`R_i` proposed RoIs from the :math:`i` th image, \
            :math:`R' = \\sum _{i=1} ^ N R_i`.
        * :math:`L` is the number of classes excluding the background.

        Classes are ordered by the background, the first class, ..., and
        the :math:`L` th class.

        Args:
            x (autograd.Variable): 4D image variable.
            scale (float): Amount of scaling applied to the raw image
                during preprocessing.

        Returns:
            Variable, Variable, array, array:
            Returns tuple of four values listed below.

            * **roi_cls_locs**: Offsets and scalings for the proposed RoIs. \
                Its shape is :math:`(R', (L + 1) \\times 4)`.
            * **roi_scores**: Class predictions for the proposed RoIs. \
                Its shape is :math:`(R', L + 1)`.
            * **rois**: RoIs proposed by RPN. Its shape is \
                :math:`(R', 4)`.
            * **roi_indices**: Batch indices of RoIs. Its shape is \
                :math:`(R',)`.

        """
        img_size = x.shape[2:]

        h = self.extractor(x)
        rpn_locs, rpn_scores, rois, roi_indices, anchor = \
            self.rpn(h, img_size, scale)
        roi_cls_locs, roi_scores = self.head(
            h, rois, roi_indices)
        # roi_scores holds the per-class scores of each RoI.
        return roi_cls_locs, roi_scores, rois, roi_indices

    def use_preset(self, preset):
        """Use the given preset during prediction.

        This method changes values of :obj:`self.nms_thresh` and
        :obj:`self.score_thresh`. These values are a threshold value
        used for non maximum suppression and a threshold value
        to discard low confidence proposals in :meth:`predict`,
        respectively.

        If the attributes need to be changed to something
        other than the values provided in the presets, please modify
        them by directly accessing the public attributes.

        Args:
            preset ({'visualize', 'evaluate'}): A string to determine the
                preset to use.

        """
        if preset == 'visualize':
            self.nms_thresh = 0.3
            self.score_thresh = 0.7
        elif preset == 'evaluate':
            self.nms_thresh = 0.3
            self.score_thresh = 0.05
        else:
            raise ValueError('preset must be visualize or evaluate')

    def _suppress(self, raw_cls_bbox, raw_prob):
        # Inputs: the per-class bboxes of every RoI and the softmax of each
        # RoI's class scores.
        bbox = list()
        label = list()
        score = list()
        # skip cls_id = 0 because it is the background class
        for l in range(1, self.n_class):
            # The class-l bbox of every RoI.
            cls_bbox_l = raw_cls_bbox.reshape((-1, self.n_class, 4))[:, l, :]
            # The class-l probability of every RoI.
            prob_l = raw_prob[:, l]
            # Boolean mask of RoIs whose class-l probability passes the threshold.
            mask = prob_l > self.score_thresh
            cls_bbox_l = cls_bbox_l[mask]
            prob_l = prob_l[mask]
            # Run NMS; it returns the indices of the bboxes to keep.
            keep = non_maximum_suppression(
                cp.array(cls_bbox_l), self.nms_thresh, prob_l)
            keep = cp.asnumpy(keep)
            bbox.append(cls_bbox_l[keep])
            # The labels are in [0, self.n_class - 2].
            # Every kept class-l bbox gets the label l - 1.
            label.append((l - 1) * np.ones((len(keep),)))
            # Save the class-l scores of the kept bboxes.
            score.append(prob_l[keep])
        bbox = np.concatenate(bbox, axis=0).astype(np.float32)
        label = np.concatenate(label, axis=0).astype(np.int32)
        score = np.concatenate(score, axis=0).astype(np.float32)
        # bbox has shape (K, 4) and label / score have shape (K,), where K is
        # the total number of boxes kept over all foreground classes.
        return bbox, label, score

    @nograd
    def predict(self, imgs, sizes=None, visualize=False):
        """Detect objects from images.

        This method predicts objects for each image.

        Args:
            imgs (iterable of numpy.ndarray): Arrays holding images.
                All images are in CHW and RGB format
                and the range of their value is :math:`[0, 255]`.

        Returns:
           tuple of lists:
           This method returns a tuple of three lists,
           :obj:`(bboxes, labels, scores)`.

           * **bboxes**: A list of float arrays of shape :math:`(R, 4)`, \
               where :math:`R` is the number of bounding boxes in a image. \
               Each bounding box is organized by \
               :math:`(y_{min}, x_{min}, y_{max}, x_{max})` \
               in the second axis.
           * **labels** : A list of integer arrays of shape :math:`(R,)`. \
               Each value indicates the class of the bounding box. \
               Values are in range :math:`[0, L - 1]`, where :math:`L` is the \
               number of the foreground classes.
           * **scores** : A list of float arrays of shape :math:`(R,)`. \
               Each value indicates how confident the prediction is.

        """
        self.eval()
        if visualize:
            self.use_preset('visualize')
            prepared_imgs = list()
            sizes = list()
            for img in imgs:
                size = img.shape[1:]
                img = preprocess(at.tonumpy(img))
                prepared_imgs.append(img)
                sizes.append(size)
        else:
            prepared_imgs = imgs
        bboxes = list()
        labels = list()
        scores = list()
        for img, size in zip(prepared_imgs, sizes):
            img = at.totensor(img[None]).float()
            scale = img.shape[3] / size[1]
            roi_cls_loc, roi_scores, rois, _ = self.forward(img, scale=scale)
            # We are assuming that batch size is 1.
            roi_score = roi_scores.data
            roi_cls_loc = roi_cls_loc.data
            roi = at.totensor(rois) / scale

            # Convert predictions to bounding boxes in image coordinates.
            # Bounding boxes are scaled to the scale of the input images.
            mean = t.Tensor(self.loc_normalize_mean).cuda(). \
                repeat(self.n_class)[None]
            std = t.Tensor(self.loc_normalize_std).cuda(). \
                repeat(self.n_class)[None]

            roi_cls_loc = (roi_cls_loc * std + mean)
            roi_cls_loc = roi_cls_loc.view(-1, self.n_class, 4)
            roi = roi.view(-1, 1, 4).expand_as(roi_cls_loc)
            cls_bbox = loc2bbox(at.tonumpy(roi).reshape((-1, 4)),
                                at.tonumpy(roi_cls_loc).reshape((-1, 4)))
            cls_bbox = at.totensor(cls_bbox)
            # Each RoI yields one bbox per class (background included).
            cls_bbox = cls_bbox.view(-1, self.n_class * 4)
            # clip bounding box: move corners that fall outside the image
            # back inside it
            cls_bbox[:, 0::2] = (cls_bbox[:, 0::2]).clamp(min=0, max=size[0])
            cls_bbox[:, 1::2] = (cls_bbox[:, 1::2]).clamp(min=0, max=size[1])

            # Softmax over the class scores of each RoI: n_class scores per
            # RoI (21 for VOC, background included), one per class-specific bbox.
            prob = at.tonumpy(F.softmax(at.totensor(roi_score), dim=1))

            raw_cls_bbox = at.tonumpy(cls_bbox)
            raw_prob = at.tonumpy(prob)

            # Threshold and run NMS over all the bboxes and their scores.
            bbox, label, score = self._suppress(raw_cls_bbox, raw_prob)
            bboxes.append(bbox)
            labels.append(label)
            scores.append(score)

        self.use_preset('evaluate')
        self.train()
        return bboxes, labels, scores

    def get_optimizer(self):
        """
        return optimizer, It could be overwritten if you want to specify
        special optimizer
        """
        lr = opt.lr
        params = []
        for key, value in dict(self.named_parameters()).items():
            if value.requires_grad:
                if 'bias' in key:
                    # Biases use twice the learning rate and no weight decay.
                    params += [{
                        'params': [value], 'lr': lr * 2, 'weight_decay': 0}]
                else:
                    # Weights use the base learning rate with weight decay.
                    params += [{
                        'params': [value], 'lr': lr, 'weight_decay': opt.weight_decay}]
        if opt.use_adam:
            self.optimizer = t.optim.Adam(params)
        else:
            self.optimizer = t.optim.SGD(params, momentum=0.9)
        return self.optimizer

    def scale_lr(self, decay=0.1):
        # Scale the learning rate of every parameter group.
        for param_group in self.optimizer.param_groups:
            param_group['lr'] *= decay
        return self.optimizer

faster-rcnn-vgg16

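This file rebuilds the last few layers of VGG16 into the network structure that faster-rcnn needs. The FasterRCNNVGG16 class inherits from the FasterRCNN class above and specifies which network implements each part of FasterRCNN. The rebuilt VGG16 is then used to construct the extractor and the head. The structure of the whole network can be seen clearly in this file.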

def decom_vgg16():
    # the 30th layer of features is relu of conv5_3
    if opt.caffe_pretrain:
        model = vgg16(pretrained=False)
        if not opt.load_path:
            model.load_state_dict(t.load(opt.caffe_pretrain_path))
    else:
        model = vgg16(not opt.load_path)

    features = list(model.features)[:30]
    classifier = model.classifier  # the fully connected layers of vgg16
    '''(0)linear(in=25088,out=4096)
    (1)relu (2)dropout
    (3)linear(in=4096,out=4096) (4)relu (5)dropout
    (6)linear(in=4096,out=1000)
    '''
    classifier = list(classifier)
    del classifier[6]  # drop the 1000-way output layer to get the structure we need
    if not opt.use_drop:
        del classifier[5]
        del classifier[2]
    classifier = nn.Sequential(*classifier)

    # freeze top4 conv
    for layer in features[:10]:
        for p in layer.parameters():
            p.requires_grad = False

    return nn.Sequential(*features), classifier

class FasterRCNNVGG16(FasterRCNN):
    """Faster R-CNN based on VGG-16.

    For descriptions on the interface of this model, please refer to
    :class:`model.faster_rcnn.FasterRCNN`.

    Args:
        n_fg_class (int): The number of classes excluding the background.
        ratios (list of floats): This is ratios of width to height of
            the anchors.
        anchor_scales (list of numbers): This is areas of anchors.
            Those areas will be the product of the square of an element in
            :obj:`anchor_scales` and the original area of the reference
            window.

    """

    feat_stride = 16  # downsample 16x for output of conv5 in vgg16

    def __init__(self,
                 n_fg_class=20,
                 ratios=[0.5, 1, 2],
                 anchor_scales=[8, 16, 32]
                 ):
        extractor, classifier = decom_vgg16()

        rpn = RegionProposalNetwork(
            512, 512,
            ratios=ratios,
            anchor_scales=anchor_scales,
            feat_stride=self.feat_stride,
        )

        head = VGG16RoIHead(
            n_class=n_fg_class + 1,
            roi_size=7,
            spatial_scale=(1. / self.feat_stride),
            classifier=classifier
        )

        super(FasterRCNNVGG16, self).__init__(
            extractor,
            rpn,
            head,
        )

class VGG16RoIHead(nn.Module):
    """Faster R-CNN Head for VGG-16 based implementation.

    This class is used as a head for Faster R-CNN.
    This outputs class-wise localizations and classification based on feature
    maps in the given RoIs.

    Args:
        n_class (int): The number of classes possibly including the background.
        roi_size (int): Height and width of the feature maps after RoI-pooling.
        spatial_scale (float): Scale of the roi is resized.
        classifier (nn.Module): Two layer Linear ported from vgg16

    """

    def __init__(self, n_class, roi_size, spatial_scale,
                 classifier):
        # n_class includes the background
        super(VGG16RoIHead, self).__init__()

        # The VGG16 fully connected layers without the final output layer.
        self.classifier = classifier
        # Layer that outputs the offsets of every class for each RoI.
        self.cls_loc = nn.Linear(4096, n_class * 4)
        # Layer that outputs the score of every class for each RoI.
        self.score = nn.Linear(4096, n_class)

        # Initialise the weights of the two output layers.
        normal_init(self.cls_loc, 0, 0.001)
        normal_init(self.score, 0, 0.01)

        self.n_class = n_class
        self.roi_size = roi_size  # size of each RoI after pooling
        self.spatial_scale = spatial_scale
        self.roi = RoIPooling2D(self.roi_size, self.roi_size, self.spatial_scale)

    def forward(self, x, rois, roi_indices):
        """Forward the chain.

        We assume that there are :math:`N` batches.

        Args:
            x (Variable): 4D image variable.
            rois (Tensor): A bounding box array containing coordinates of
                proposal boxes. This is a concatenation of bounding box
                arrays from multiple images in the batch.
                Its shape is :math:`(R', 4)`. Given :math:`R_i` proposed
                RoIs from the :math:`i` th image,
                :math:`R' = \\sum _{i=1} ^ N R_i`.
            roi_indices (Tensor): An array containing indices of images to
                which bounding boxes correspond to. Its shape is :math:`(R',)`.

        """
        # in case roi_indices is ndarray
        roi_indices = at.totensor(roi_indices).float()
        rois = at.totensor(rois).float()
        indices_and_rois = t.cat([roi_indices[:, None], rois], dim=1)
        # NOTE: important: yx->xy
        xy_indices_and_rois = indices_and_rois[:, [0, 2, 1, 4, 3]]
        indices_and_rois = xy_indices_and_rois.contiguous()

        pool = self.roi(x, indices_and_rois)
        pool = pool.view(pool.size(0), -1)
        fc7 = self.classifier(pool)
        roi_cls_locs = self.cls_loc(fc7)
        roi_scores = self.score(fc7)
        return roi_cls_locs, roi_scores
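The "yx->xy" note in the forward pass is easy to miss: the RoIs are stored as (y_min, x_min, y_max, x_max), while the pooling layer used here expects (batch_index, x_min, y_min, x_max, y_max). The sketch below reproduces only that column shuffle with NumPy on made-up coordinates.

```python
import numpy as np

roi_indices = np.array([0., 0.])
rois = np.array([[10., 20., 110., 220.],   # (y_min, x_min, y_max, x_max)
                 [30., 40., 130., 240.]])

# Prepend the batch index, then swap each (y, x) pair into (x, y).
indices_and_rois = np.concatenate([roi_indices[:, None], rois], axis=1)
xy_indices_and_rois = indices_and_rois[:, [0, 2, 1, 4, 3]]
print(xy_indices_and_rois)
```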

region_proposal_network

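This file mainly defines the RPN network: a shared 3×3 same-padding convolution followed by two 1×1 convolution branches. One branch predicts the offsets of each bbox over the region of the original image that corresponds to each feature-map pixel, and the other predicts the scores of the bboxes at that position. After both branches have made their predictions, the outputs are processed by the ProposalCreator class from creator_tool to get the final result. The file also defines a function called _enumerate_shifted_anchor, which computes the shift of the original-image position of every feature-map pixel relative to the first position; adding these shifts to anchor_base gives the coordinates of the anchors at every position.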

class RegionProposalNetwork(nn.Module):
    """Region Proposal Network introduced in Faster R-CNN.

    This is Region Proposal Network introduced in Faster R-CNN [#]_.
    This takes features extracted from images and propose
    class agnostic bounding boxes around "objects".

    .. [#] Shaoqing Ren, Kaiming He, Ross Girshick, Jian Sun. \
    Faster R-CNN: Towards Real-Time Object Detection with \
    Region Proposal Networks. NIPS 2015.

    Args:
        in_channels (int): The channel size of input.
        mid_channels (int): The channel size of the intermediate tensor.
        ratios (list of floats): This is ratios of width to height of
            the anchors.
        anchor_scales (list of numbers): This is areas of anchors.
            Those areas will be the product of the square of an element in
            :obj:`anchor_scales` and the original area of the reference
            window.
        feat_stride (int): Stride size after extracting features from an
            image.
        initialW (callable): Initial weight value. If :obj:`None` then this
            function uses Gaussian distribution scaled by 0.1 to
            initialize weight.
            May also be a callable that takes an array and edits its values.
        proposal_creator_params (dict): Key valued parameters for
            :class:`model.utils.creator_tools.ProposalCreator`.

    .. seealso::
        :class:`~model.utils.creator_tools.ProposalCreator`

    """

    def __init__(
            self, in_channels=512, mid_channels=512, ratios=[0.5, 1, 2],
            anchor_scales=[8, 16, 32], feat_stride=16,
            proposal_creator_params=dict(),
    ):
        super(RegionProposalNetwork, self).__init__()
        self.anchor_base = generate_anchor_base(
            anchor_scales=anchor_scales, ratios=ratios)
        # Stride in the original image after feature extraction.
        self.feat_stride = feat_stride
        self.proposal_layer = ProposalCreator(self, **proposal_creator_params)
        # Number of base anchors per location (9 with the defaults).
        n_anchor = self.anchor_base.shape[0]
        self.conv1 = nn.Conv2d(in_channels, mid_channels, 3, 1, 1)
        self.score = nn.Conv2d(mid_channels, n_anchor * 2, 1, 1, 0)
        self.loc = nn.Conv2d(mid_channels, n_anchor * 4, 1, 1, 0)
        normal_init(self.conv1, 0, 0.01)
        normal_init(self.score, 0, 0.01)
        normal_init(self.loc, 0, 0.01)

    def forward(self, x, img_size, scale=1.):
        # The input x is the feature map produced by the extractor.
        """Forward Region Proposal Network.

        Here are notations.

        * :math:`N` is batch size.
        * :math:`C` channel size of the input.
        * :math:`H` and :math:`W` are height and width of the input feature.
        * :math:`A` is number of anchors assigned to each pixel.

        Args:
            x (~torch.autograd.Variable): The Features extracted from images.
                Its shape is :math:`(N, C, H, W)`.
            img_size (tuple of ints): A tuple :obj:`height, width`,
                which contains image size after scaling.
            scale (float): The amount of scaling done to the input images after
                reading them from files.

        Returns:
            (~torch.autograd.Variable, ~torch.autograd.Variable, array, array, array):

            This is a tuple of five following values.

            * **rpn_locs**: Predicted bounding box offsets and scales for \
                anchors. Its shape is :math:`(N, H W A, 4)`.
            * **rpn_scores**: Predicted foreground scores for \
                anchors. Its shape is :math:`(N, H W A, 2)`.
            * **rois**: A bounding box array containing coordinates of \
                proposal boxes. This is a concatenation of bounding box \
                arrays from multiple images in the batch. \
                Its shape is :math:`(R', 4)`. Given :math:`R_i` predicted \
                bounding boxes from the :math:`i` th image, \
                :math:`R' = \\sum _{i=1} ^ N R_i`.
            * **roi_indices**: An array containing indices of images to \
                which RoIs correspond to. Its shape is :math:`(R',)`.
            * **anchor**: Coordinates of enumerated shifted anchors. \
                Its shape is :math:`(H W A, 4)`.

        """
        n, _, hh, ww = x.shape
        # Tile the 9 base anchors over every position of the feature map.
        anchor = _enumerate_shifted_anchor(
            np.array(self.anchor_base),
            self.feat_stride, hh, ww)

        n_anchor = anchor.shape[0] // (hh * ww)
        # One more convolution on the input feature map.
        h = F.relu(self.conv1(x))

        # Predicted offsets of the anchors at every feature-map pixel.
        rpn_locs = self.loc(h)
        # UNNOTE: check whether need contiguous
        # A: Yes
        # Permute to (N, H, W, C) with C = n_anchor * 4, then flatten to (N, H*W*A, 4).
        rpn_locs = rpn_locs.permute(0, 2, 3, 1).contiguous().view(n, -1, 4)
        rpn_scores = self.score(h)
        # Permute to (N, H, W, C) with C = n_anchor * 2.
        rpn_scores = rpn_scores.permute(0, 2, 3, 1).contiguous()
        # Softmax over the last axis (background vs. foreground) of each anchor.
        rpn_softmax_scores = F.softmax(rpn_scores.view(n, hh, ww, n_anchor, 2), dim=4)
        rpn_fg_scores = rpn_softmax_scores[:, :, :, :, 1].contiguous()
        rpn_fg_scores = rpn_fg_scores.view(n, -1)
        rpn_scores = rpn_scores.view(n, -1, 2)

        rois = list()
        roi_indices = list()
        for i in range(n):  # n is the batch size
            # ProposalCreator decodes the offsets, filters the boxes and
            # runs NMS to produce the RoIs of the i-th image.
            roi = self.proposal_layer(
                rpn_locs[i].cpu().data.numpy(),       # offsets predicted for the i-th image
                rpn_fg_scores[i].cpu().data.numpy(),  # foreground scores for the i-th image
                anchor, img_size,                     # all anchors and the image size
                scale=scale)
            # Tag each RoI with the index of the image it came from.
            batch_index = i * np.ones((len(roi),), dtype=np.int32)
            rois.append(roi)
            roi_indices.append(batch_index)

        rois = np.concatenate(rois, axis=0)
        roi_indices = np.concatenate(roi_indices, axis=0)
        return rpn_locs, rpn_scores, rois, roi_indices, anchor
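The _enumerate_shifted_anchor function mentioned in the description is not reproduced in this post. A minimal NumPy sketch of the idea could look like the following; the name `enumerate_shifted_anchor_sketch` is mine, and the single base anchor in the example is made up to keep the output small.

```python
import numpy as np


def enumerate_shifted_anchor_sketch(anchor_base, feat_stride, height, width):
    """Copy the A base anchors to each of the height * width feature-map cells."""
    shift_y = np.arange(0, height * feat_stride, feat_stride)
    shift_x = np.arange(0, width * feat_stride, feat_stride)
    shift_x, shift_y = np.meshgrid(shift_x, shift_y)
    # One (dy, dx, dy, dx) shift per cell, matching (y_min, x_min, y_max, x_max).
    shift = np.stack((shift_y.ravel(), shift_x.ravel(),
                      shift_y.ravel(), shift_x.ravel()), axis=1)

    n_base = anchor_base.shape[0]
    n_cell = shift.shape[0]
    # Broadcast (1, A, 4) + (K, 1, 4) -> (K, A, 4), then flatten to (K * A, 4).
    anchor = anchor_base.reshape((1, n_base, 4)) + shift.reshape((n_cell, 1, 4))
    return anchor.reshape((n_cell * n_base, 4)).astype(np.float32)


# A single 16x16 base anchor centred on the origin, on a 2x3 feature map.
base = np.array([[-8., -8., 8., 8.]], dtype=np.float32)
anchors = enumerate_shifted_anchor_sketch(base, feat_stride=16, height=2, width=3)
print(anchors.shape)
print(anchors)
```

The anchors come out in row-major order over the feature map (all columns of the first row, then the second row), which is the same order in which the RPN outputs are flattened after the permute to (N, H, W, C).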

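The prediction process

OK, after all that introduction of functions and files, we finally get to the real topic of this post: how the whole network makes its predictions. Why is faster-rcnn called a two-stage algorithm, and where do the two stages show up? Why is it relatively slow? How do the dimensions of the image change as it goes through the convolutions? How do the per-pixel predictions on the feature map end up changing the size and position of the anchors to produce the final result? This section answers all of these.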

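First, anyone who has read the faster-rcnn paper knows that after the series of convolutions the feature map goes from the original image's (C1, W, H) to (C2, W/16, H/16). In other words, the width and height of the feature map are 1/16 of those of the original image (only approximately 1/16 in practice, because of rounding). Predicting offsets and scores for each feature-map pixel therefore amounts to predicting them for the corresponding 16×16 base cell in the original image. (My understanding is that each feature-map pixel maps to a 16×16 cell of the original image; strictly speaking this 16 is the stride, and the actual receptive field of a feature-map pixel is larger than 16×16.) What faster-rcnn does is first place 9 anchor boxes at the original-image position of every feature-map pixel ([8, 16, 32]×[0.5, 1, 2]; note that each scale is multiplied by the base size of 16, and the ratio enters through its square root so that the area stays fixed for a given scale). This gives 9 anchor boxes of different sizes and aspect ratios to frame objects of different sizes and shapes, and W/16×H/16×9 base anchors over the whole image. With that in place, the RPN only needs to produce the position offsets and scores of the 9 anchors at each feature-map pixel, and after NMS we get the RoIs we want.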
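To get a feeling for the numbers, the anchor count can be worked out directly. The sketch below assumes a hypothetical 600×800 input and approximates the feature-map size by integer division by the stride; `count_anchors` is an illustrative helper, not a function from the repository.

```python
def count_anchors(img_h, img_w, feat_stride=16, n_anchor_base=9):
    """Return (feature height, feature width, total number of anchors)."""
    feat_h = img_h // feat_stride
    feat_w = img_w // feat_stride
    return feat_h, feat_w, feat_h * feat_w * n_anchor_base


feat_h, feat_w, n_anchor = count_anchors(600, 800)
print(feat_h, feat_w, n_anchor)  # 37 50 16650
```

So even a moderately sized image produces well over ten thousand anchors, which is why ProposalCreator keeps only the top-scoring boxes (6000 before NMS and 300 after NMS in test mode with the default arguments).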

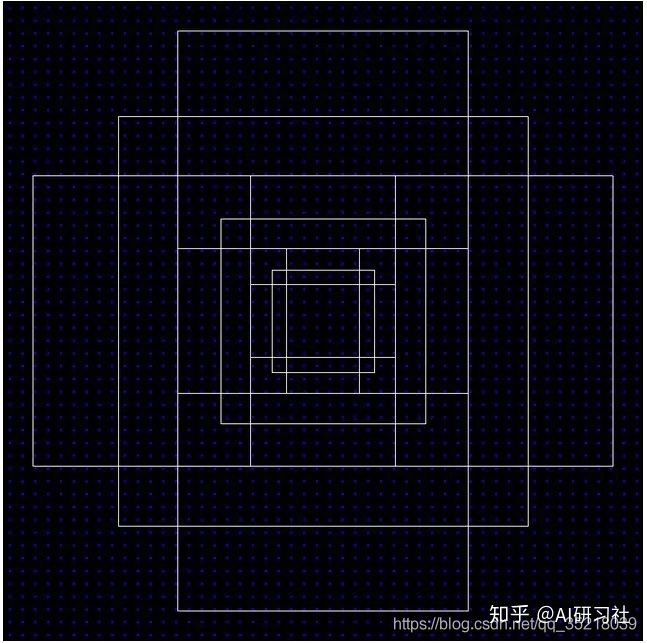

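This figure shows what the RPN does. The input feature map goes through one 3×3 same-padding convolution and then splits into two branches: one ends with an output of shape (18, W/16, H/16) and the other with (36, W/16, H/16). The 18 is the two scores (foreground and background) for each of the 9 anchors at each position, and the 36 is the four offsets (x, y, w, h) for each of the 9 anchors, 9×4. Clearly, what these two convolution branches produce is offsets for all of the anchors, many of which lead to redundant boxes, while what we want out of the RPN is a much smaller set of RoIs. So the output of the location branch (the one with 36 channels) is applied to the anchors, and the resulting boxes are then filtered using the softmax scores from the other branch together with NMS, which gives the preliminary RoIs.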
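The reshaping that turns the two branch outputs into per-anchor offsets and foreground scores can be traced with NumPy alone. This sketch uses random arrays in place of real network outputs and a 37×50 feature map as an assumed example size; it mirrors the permute, view and softmax calls in RegionProposalNetwork.forward.

```python
import numpy as np

rng = np.random.default_rng(0)
n, hh, ww, n_anchor = 1, 37, 50, 9

# Stand-ins for the outputs of the loc branch (36 channels) and score branch (18 channels).
loc_out = rng.normal(size=(n, n_anchor * 4, hh, ww))
score_out = rng.normal(size=(n, n_anchor * 2, hh, ww))

# (N, C, H, W) -> (N, H, W, C) -> one row of 4 offsets per anchor.
rpn_locs = loc_out.transpose(0, 2, 3, 1).reshape(n, -1, 4)

# (N, C, H, W) -> (N, H, W, A, 2), softmax over (background, foreground).
scores = score_out.transpose(0, 2, 3, 1).reshape(n, hh, ww, n_anchor, 2)
exp = np.exp(scores - scores.max(axis=4, keepdims=True))
softmax = exp / exp.sum(axis=4, keepdims=True)
rpn_fg_scores = softmax[..., 1].reshape(n, -1)

print(rpn_locs.shape, rpn_fg_scores.shape)  # (1, 16650, 4) (1, 16650)
```

After this step, row k of `rpn_locs` and entry k of `rpn_fg_scores` refer to the same anchor, which is what lets ProposalCreator index offsets, scores and anchors with one shared index.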

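The RoIs produced by the RPN are already relatively few and roughly frame the objects we care about, but compared with the ground truth they are still inaccurate and redundant, and we also need to predict the class of the object in each bbox. So we need to filter out more of the redundant bboxes, further adjust the position and size of each bbox, and predict its class. Following this line of thought, it is natural to process the features inside each RoI further, predict a new set of offsets for each RoI, and then filter out redundant boxes with NMS to get the final result. This is what leads to the RoI pooling operation. RoI pooling maps every RoI generated on the original image onto the feature map that was fed into the RPN, then pools the features at each RoI's location so that RoIs of different sizes all become feature maps of the same height and width (7×7 here). In this implementation the pooled features are then flattened and passed through fully connected layers, which output the offsets and class scores of each RoI in the image.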
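To show the idea of RoI pooling without the CUDA code, here is a simplified single-RoI sketch in NumPy. It assumes the RoI is given as (y_min, x_min, y_max, x_max) in image coordinates and uses a simple rounding and binning scheme of my own; the repository's RoIPooling2D layer may round and split bins differently.

```python
import numpy as np


def roi_pool_sketch(feat, roi, out_size=7, spatial_scale=1. / 16):
    """Max-pool one RoI of a (C, H, W) feature map to (C, out_size, out_size)."""
    n_channel, feat_h, feat_w = feat.shape
    # Project the RoI from image coordinates onto the feature map.
    y0, x0, y1, x1 = (int(v) for v in np.round(np.asarray(roi) * spatial_scale))
    roi_h = max(y1 - y0 + 1, 1)
    roi_w = max(x1 - x0 + 1, 1)

    out = np.zeros((n_channel, out_size, out_size), dtype=feat.dtype)
    for i in range(out_size):
        # Bin i covers rows [floor(i * h / n), ceil((i + 1) * h / n)) of the RoI.
        ys = min(max(y0 + (i * roi_h) // out_size, 0), feat_h)
        ye = min(max(y0 - (-(i + 1) * roi_h) // out_size, 0), feat_h)
        for j in range(out_size):
            xs = min(max(x0 + (j * roi_w) // out_size, 0), feat_w)
            xe = min(max(x0 - (-(j + 1) * roi_w) // out_size, 0), feat_w)
            if ye > ys and xe > xs:  # empty bins stay at zero
                out[:, i, j] = feat[:, ys:ye, xs:xe].max(axis=(1, 2))
    return out


feat = np.arange(2 * 10 * 10, dtype=np.float32).reshape(2, 10, 10)
pooled = roi_pool_sketch(feat, roi=(0, 0, 112, 112))
print(pooled.shape)
```

Whatever the size of the RoI, the output is always (C, 7, 7), which is what allows the fixed-size fully connected layers of the head to be applied to every RoI.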

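This figure shows the head of the whole network, which corresponds to the VGG16RoIHead class in the code. The feature map obtained from RoI pooling is flattened to one dimension and first goes through the classifier's fully connected layers (the two 4096-unit layers kept from VGG16). It is then connected to two fully connected branches, which output 1×21 and 1×84 values respectively. The 21 is the scores for the 20 classes of the VOC dataset plus one background class, and likewise the 84 is the (x, y, w, h) offsets of each RoI for each of the 21 classes, 21×4. In other words, each RoI produces another 21 sets of offsets at this stage, that is, 21 bboxes. Finally, the bboxes of the 20 foreground classes (the background class is skipped) are filtered by their class scores and by NMS, and what remains is the final output of the whole network.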
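The post-processing of the head outputs in predict and _suppress can be sketched with NumPy as follows. Random arrays stand in for the real outputs of the head, and the mean, std and score threshold are the default values that appear in the FasterRCNN class above (the 'evaluate' preset).

```python
import numpy as np

rng = np.random.default_rng(0)
n_class = 21   # 20 VOC classes + background
n_roi = 5
loc_normalize_mean = (0., 0., 0., 0.)
loc_normalize_std = (0.1, 0.1, 0.2, 0.2)
score_thresh = 0.05

# Stand-ins for the two head outputs: (R, 84) offsets and (R, 21) scores.
roi_cls_loc = rng.normal(size=(n_roi, n_class * 4))
roi_scores = rng.normal(size=(n_roi, n_class))

# Undo the normalisation of the offsets, then split them per class.
mean = np.tile(loc_normalize_mean, n_class)[None]
std = np.tile(loc_normalize_std, n_class)[None]
roi_cls_loc = (roi_cls_loc * std + mean).reshape(-1, n_class, 4)

# Softmax over the 21 class scores of each RoI.
exp = np.exp(roi_scores - roi_scores.max(axis=1, keepdims=True))
prob = exp / exp.sum(axis=1, keepdims=True)

# For each foreground class, only RoIs above the threshold go on to NMS.
n_candidates = 0
for l in range(1, n_class):
    mask = prob[:, l] > score_thresh
    n_candidates += int(mask.sum())

print(roi_cls_loc.shape, prob.shape, n_candidates)
```

Each RoI can therefore contribute boxes for several classes at once; it is the per-class NMS in _suppress that decides which of them survive.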

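Looking back over the whole prediction process, we can see that two rounds of prediction take place. The first predicts an offset for every base anchor box and uses these to produce the RoIs we need from the anchors. The second predicts, for every RoI, the offsets and scores for each of the 21 classes (the 20 dataset classes plus background), which gives the final result of the whole network. This is why faster-rcnn is called a two-stage algorithm. And precisely because it uses two networks to predict in two stages, it is generally slower than one-stage algorithms, both at prediction time and during training.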

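That is roughly the whole faster-rcnn prediction process. I had wanted to write this post for a long time but kept getting sidetracked by miscellaneous things (honestly, I was just lazy ╮(╯▽╰)╭). My next post will probably be about YOLO, again both as notes and as a way to push myself to keep learning. If you found this post useful, remember to give it a like (does CSDN even have likes? ╮(╯▽╰)╭). And if you have trouble reading the source code, you can download the annotated version from my GitHub; if it helps, a star would be appreciated. Thanks~~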