LightGBM (lgb) classification and regression: grid-search hyperparameter tuning + writing results to CSV

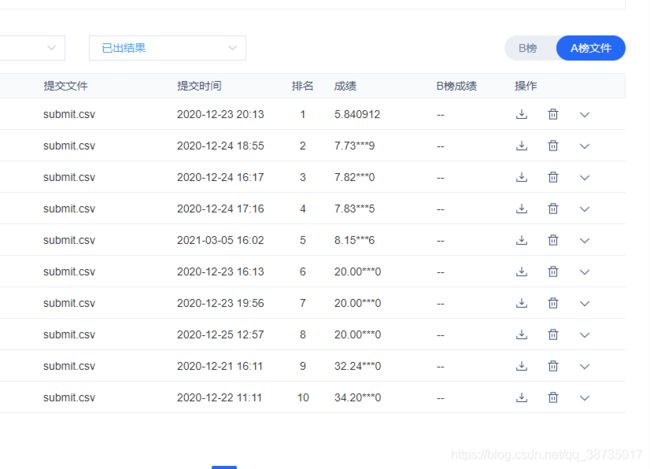

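2nd Shandong Province Data Application Innovation and Entrepreneurship Competition, Linyi division: water supply network pressure prediction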

Senior labmate Zhang's post on grid-search tuning:

https://blog.csdn.net/qq_38237214/article/details/114402977

This post mainly covers LightGBM (lgb) basics and how to use it.

How to grid-search lgb hyperparameters:

For classification: lgb.LGBMClassifier(objective='binary', ...)

For regression: lgb.LGBMRegressor(objective='regression', ...)

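import pandas as pd  # data analysis
import numpy as np  # numerical computing
import matplotlib.pyplot as plt  # plotting
import seaborn as sns  # higher-level plotting API built on matplotlib
import lightgbm as lgb  # LightGBM, needed for lgb.LGBMRegressor / lgb.train below
from sklearn.model_selection import StratifiedKFold  # stratified cross-validation splitter
from sklearn.model_selection import GridSearchCV  # grid search
from sklearn.model_selection import train_test_split  # split the data into training and test sets
from xgboost import XGBClassifier  # not used in the code below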

# lgb_train = lgb.Dataset(x_train, y_train)

# lgb_eval = lgb.Dataset(x_test, y_test, reference=lgb_train)

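parameters = {
    'max_depth': range(3, 8, 2),  # max_depth: maximum tree depth (3, 5, 7); deeper trees are more prone to overfitting
    'num_leaves': range(50, 170, 30),  # 50, 80, 110, 140
    # num_leaves: LightGBM grows trees leaf-wise, so tree complexity is controlled mainly through num_leaves rather than max_depth.
    # Rough correspondence: num_leaves = 2^(max_depth), but num_leaves should be set below 2^(max_depth), otherwise the model may overfit.
    # This is the most important parameter for accuracy.
}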
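To see what this grid actually searches, it can be expanded with sklearn's ParameterGrid, which is the same expansion GridSearchCV performs. The check against 2**max_depth applies the rule of thumb from the comments above; it is an illustration, not part of the original code. A depth-limited tree can have at most 2**max_depth leaves, so whenever num_leaves >= 2**max_depth, max_depth is the binding limit.

```python
from sklearn.model_selection import ParameterGrid

# Same grid as above
parameters = {
    'max_depth': range(3, 8, 2),       # 3, 5, 7
    'num_leaves': range(50, 170, 30),  # 50, 80, 110, 140
}

grid = list(ParameterGrid(parameters))
print(len(grid))  # 3 depths x 4 leaf counts = 12 settings

# Settings where max_depth, not num_leaves, caps the number of leaves
capped = [p for p in grid if p['num_leaves'] >= 2 ** p['max_depth']]
print(len(capped))  # 9
for p in capped:
    print(p)
```

Only max_depth=7 with num_leaves 50, 80 or 110 keeps num_leaves below 2^(max_depth), so most of the 12 settings are effectively limited by depth alone.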

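# gbm = lgb.LGBMClassifier(objective='binary', num_leaves=31, learning_rate=0.05, n_estimators=20)  # classification

gbm = lgb.LGBMRegressor(objective='regression',  # regression setup
                        metric='l2',  # evaluation metric (MSE); 'binary_logloss' and 'auc' are classification metrics
                        learning_rate=0.1,
                        feature_fraction=0.7,  # fraction of features sampled when building each tree
                        min_child_samples=21,
                        min_child_weight=0.001,
                        bagging_fraction=1,  # 1 = use all rows, so bagging_freq has no effect here
                        bagging_freq=2,
                        reg_alpha=0.001,
                        reg_lambda=8,
                        cat_smooth=0,
                        num_iterations=200,  # number of boosting rounds (alias of n_estimators)
                        verbose=-1  # <0: fatal only, =0: errors (warnings), >0: info
                        )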

gsearch = GridSearchCV(gbm, param_grid=parameters, scoring='neg_mean_squared_error', cv=3)  # regression scorer; 'roc_auc' only applies to classifiers
gsearch.fit(x_train, y_train)  # the regression target must stay numeric
print('Best parameters: {0}'.format(gsearch.best_params_))
print('Best model score: {0}'.format(gsearch.best_score_))
print(gsearch.cv_results_['mean_test_score'])
print(gsearch.cv_results_['params'])

Parameter reference:

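# params = {
#     'task': 'train',
#     'boosting_type': 'gbdt',  # boosting type
#     'objective': 'regression',  # objective function
#     'metric': {'l2', 'l1'},  # evaluation metrics (l2 = MSE, l1 = MAE; 'auc' is for classification)
#     'num_leaves': 31,  # number of leaves per tree
#     'learning_rate': 0.05,  # learning rate
#     'feature_fraction': 0.9,  # fraction of features sampled when building each tree
#     'bagging_fraction': 0.8,  # fraction of rows sampled when building each tree
#     'bagging_freq': 5,  # k means bagging is performed every k iterations
#     'verbose': 1  # <0: fatal only, =0: errors (warnings), >0: info
# }

How to use lgb (native training API):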

lgb_train = lgb.Dataset(x_train, y_train)

lgb_eval = lgb.Dataset(x_test, y_test, reference=lgb_train)

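# Building lgb.Dataset objects up front makes data loading faster; reference=lgb_train makes the validation set reuse the training set's feature binning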

params = {

'max_depth':3,

'boosting_type': 'gbdt',

'objective': 'regression',

'metric': {'l2', 'l1'},

'num_leaves':50,

'learning_rate': 0.05,

'feature_fraction': 0.9,

'bagging_fraction': 0.8,

'bagging_freq': 5,

'verbose': 1

}

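gbm = lgb.train(params,
                lgb_train,
                num_boost_round=300,
                valid_sets=[lgb_eval],
                callbacks=[lgb.early_stopping(stopping_rounds=5)])  # stop after 5 rounds without validation improvement; the early_stopping_rounds argument was removed in LightGBM 4.0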

y1_pred = gbm.predict(test_x_2020_1_guiyihua, num_iteration=gbm.best_iteration)

# eval

Writing the predictions to a CSV file

# data is a one-dimensional list; this writes it out as a single column

name=['pressure']

test=pd.DataFrame(columns=name,data=data)

test.to_csv('jieguo.csv')
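A small sketch of the CSV step with hypothetical prediction values, written to a temporary directory. It also shows that to_csv writes the row index as an extra, unnamed first column by default; pass index=False if the submission format (not specified in this post) expects only the pressure column.

```python
import os
import tempfile

import pandas as pd

# Hypothetical predictions; in the post, data holds the model's 1-D output
data = [0.281, 0.275, 0.290]

name = ['pressure']
test = pd.DataFrame(columns=name, data=data)  # 1-D list -> one column

out_dir = tempfile.mkdtemp()
path_with_index = os.path.join(out_dir, 'jieguo.csv')
path_no_index = os.path.join(out_dir, 'jieguo_no_index.csv')

test.to_csv(path_with_index)             # default: also writes the row index
test.to_csv(path_no_index, index=False)  # only the 'pressure' column

with open(path_with_index) as f:
    print(f.read())
with open(path_no_index) as f:
    print(f.read())
```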