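```python
import torch
import torch.nn.functional as F
from torch import nn


def fixed_padding(inputs, kernel_size, dilation):
    """Pad `inputs` so that a stride-1 convolution preserves its spatial size ("same" padding)."""
    kernel_size_effective = kernel_size + (kernel_size - 1) * (dilation - 1)
    pad_total = kernel_size_effective - 1
    pad_beg = pad_total // 2
    pad_end = pad_total - pad_beg
    return F.pad(inputs, (pad_beg, pad_end, pad_beg, pad_end))


class SeparableConv2d_same(nn.Module):
    """Depthwise separable convolution: a per-channel k x k conv followed by a 1 x 1 pointwise conv."""

    def __init__(self, inplanes, planes, kernel_size=3, stride=1, dilation=1, bias=False):
        super(SeparableConv2d_same, self).__init__()
        # Depthwise: groups=inplanes gives one k x k filter per input channel.
        # padding=0 here because forward() pads explicitly.
        self.conv1 = nn.Conv2d(inplanes, inplanes, kernel_size, stride, 0, dilation,
                               groups=inplanes, bias=bias)
        # Pointwise: a 1 x 1 conv mixes channels and maps inplanes -> planes.
        self.pointwise = nn.Conv2d(inplanes, planes, 1, 1, 0, 1, 1, bias=bias)

    def forward(self, x):
        # Without this padding every 3 x 3 conv would shrink the map by 2 * dilation
        # pixels, and the residual sums in Block would fail with a shape mismatch.
        x = fixed_padding(x, self.conv1.kernel_size[0], dilation=self.conv1.dilation[0])
        x = self.conv1(x)
        x = self.pointwise(x)
        return x
```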
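A separable convolution only stays "same"-sized if its input is padded by (effective kernel - 1) pixels in total, where a k x k kernel with dilation d spans k + (k - 1)(d - 1) pixels. The sketch below, assuming PyTorch, checks that arithmetic and compares the parameter count of a standard 3 x 3 conv with a depthwise + pointwise pair. `same_padding` and `count_params` are hypothetical helpers, and the 728-channel size is simply borrowed from the middle-flow blocks of `Xception`.

```python
import torch
import torch.nn.functional as F
from torch import nn


def same_padding(kernel_size, dilation=1):
    """Hypothetical helper: (pad_before, pad_after) keeping a stride-1 conv size-preserving."""
    effective = kernel_size + (kernel_size - 1) * (dilation - 1)  # span of the dilated kernel
    total = effective - 1
    return total // 2, total - total // 2


def count_params(module):
    """Total number of learnable parameters in a module."""
    return sum(p.numel() for p in module.parameters())


# Standard 3x3 conv vs. depthwise 3x3 (groups=in_channels) + pointwise 1x1, 728 channels.
standard = nn.Conv2d(728, 728, 3, padding=1, bias=False)
depthwise = nn.Conv2d(728, 728, 3, groups=728, bias=False)
pointwise = nn.Conv2d(728, 728, 1, bias=False)
print(count_params(standard))                             # 4769856
print(count_params(depthwise) + count_params(pointwise))  # 536536

# A dilation-2 3x3 kernel spans 5 pixels, so 2 pixels of padding per side keep 14x14 at 14x14.
depthwise_dilated = nn.Conv2d(728, 728, 3, dilation=2, groups=728, bias=False)
x = torch.randn(1, 728, 14, 14)
pb, pa = same_padding(3, dilation=2)
y = pointwise(depthwise_dilated(F.pad(x, (pb, pa, pb, pa))))
print(y.shape)  # torch.Size([1, 728, 14, 14])
```

For this layer size the separable version needs about 8.9 times fewer parameters than the standard convolution.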

```python
class Block(nn.Module):
    """Xception residual block: `reps` ReLU -> separable conv -> BN units plus a shortcut."""

    def __init__(self, inplanes, planes, reps, stride=1, dilation=1,
                 start_with_relu=True, grow_first=True, is_last=False):
        super(Block, self).__init__()

        # Shortcut: a strided 1x1 conv + BN when the shape changes, otherwise identity.
        if planes != inplanes or stride != 1:
            self.skip = nn.Conv2d(inplanes, planes, 1, stride=stride, bias=False)
            self.skipbn = nn.BatchNorm2d(planes)
        else:
            self.skip = None

        self.relu = nn.ReLU(inplace=True)
        rep = []

        filters = inplanes
        if grow_first:
            # Change the channel count in the first unit.
            rep.append(self.relu)
            rep.append(SeparableConv2d_same(filters, planes, 3, stride=1, dilation=dilation))
            rep.append(nn.BatchNorm2d(planes))
            filters = planes

        for _ in range(reps - 1):
            rep.append(self.relu)
            rep.append(SeparableConv2d_same(filters, filters, 3, stride=1, dilation=dilation))
            rep.append(nn.BatchNorm2d(filters))

        if not grow_first:
            # Change the channel count in the last unit instead.
            rep.append(self.relu)
            rep.append(SeparableConv2d_same(inplanes, planes, 3, stride=1, dilation=dilation))
            rep.append(nn.BatchNorm2d(planes))

        if not start_with_relu:
            rep = rep[1:]  # drop the leading ReLU

        if stride != 1:
            # Downsample at the end of the main path with a stride-2 separable conv.
            rep.append(SeparableConv2d_same(planes, planes, 3, stride=2))

        if stride == 1 and is_last:
            rep.append(SeparableConv2d_same(planes, planes, 3, stride=1))

        self.rep = nn.Sequential(*rep)

    def forward(self, input):
        # With start_with_relu=True the first (in-place) ReLU in self.rep also
        # modifies `input`, so the shortcut below sees the rectified tensor.
        x = self.rep(input)

        if self.skip is not None:
            skip = self.skip(input)
            skip = self.skipbn(skip)
        else:
            skip = input

        x += skip
        return x
```
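Because `Block` assembles `self.rep` from four flags (`grow_first`, `start_with_relu`, `stride`, `is_last`), it can help to trace the resulting layer order without building any tensors. The sketch below is a hypothetical pure-Python mirror of that layer-building logic; `describe_block` and its string labels are illustrative only. It assumes that the `stride == 1 and is_last` branch appends one extra stride-1 separable conv, as in the widely used PyTorch DeepLab port of this backbone.

```python
def describe_block(inplanes, planes, reps, stride=1, start_with_relu=True,
                   grow_first=True, is_last=False):
    """Hypothetical helper: return (main_path, shortcut) of a Block as readable labels."""
    rep = []
    filters = inplanes
    if grow_first:  # change channels in the first ReLU -> sep conv -> BN unit
        rep += ["relu", f"sep({filters}->{planes})", f"bn({planes})"]
        filters = planes
    for _ in range(reps - 1):  # remaining units keep the channel count
        rep += ["relu", f"sep({filters}->{filters})", f"bn({filters})"]
    if not grow_first:  # otherwise change channels in the last unit
        rep += ["relu", f"sep({inplanes}->{planes})", f"bn({planes})"]
    if not start_with_relu:
        rep = rep[1:]  # drop the leading ReLU
    if stride != 1:
        rep.append(f"sep({planes}->{planes}, stride=2)")
    if stride == 1 and is_last:  # assumed body of the is_last branch
        rep.append(f"sep({planes}->{planes})")
    if planes != inplanes or stride != 1:
        shortcut = f"conv1x1({inplanes}->{planes}, stride={stride}) + bn({planes})"
    else:
        shortcut = "identity"
    return rep, shortcut


entry, entry_skip = describe_block(64, 128, reps=2, stride=2, start_with_relu=False)
print(entry)
# ['sep(64->128)', 'bn(128)', 'relu', 'sep(128->128)', 'bn(128)', 'sep(128->128, stride=2)']
print(entry_skip)  # conv1x1(64->128, stride=2) + bn(128)

middle, middle_skip = describe_block(728, 728, reps=3)
print(len(middle), middle_skip)  # 9 identity

exit_flow, exit_skip = describe_block(728, 1024, reps=2, grow_first=False, is_last=True)
print(exit_flow)
# ['relu', 'sep(728->728)', 'bn(728)', 'relu', 'sep(728->1024)', 'bn(1024)', 'sep(1024->1024)']
```

The three calls correspond to the `block1`, middle-flow, and `block20` configurations used by `Xception`.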

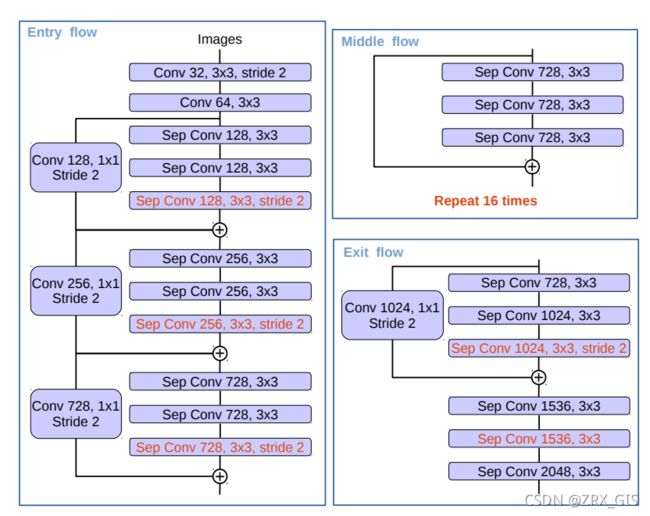

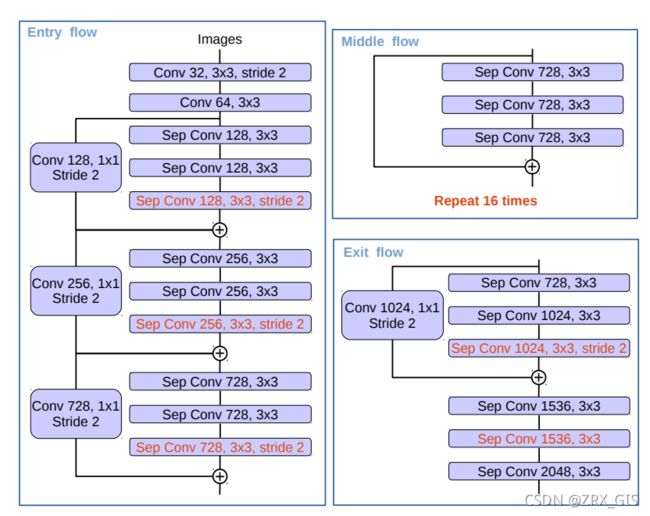

```python
class Xception(nn.Module):
    """Xception backbone hard-coded for an output stride of 16.

    forward() returns the final 2048-channel feature map and the 128-channel
    output of block1 (1/4 of the input resolution) as low-level features.
    """

    def __init__(self, inplanes=3):
        super(Xception, self).__init__()

        # Output stride 16: conv1, block1, block2 and block3 each halve the
        # resolution; the exit flow uses dilation instead of further striding.
        entry_block3_stride = 2
        middle_block_dilation = 1
        exit_block_dilations = (1, 2)

        # Entry flow
        self.conv1 = nn.Conv2d(inplanes, 32, 3, stride=2, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(32)
        self.relu = nn.ReLU(inplace=True)

        self.conv2 = nn.Conv2d(32, 64, 3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(64)

        self.block1 = Block(64, 128, reps=2, stride=2, start_with_relu=False)
        self.block2 = Block(128, 256, reps=2, stride=2, start_with_relu=True, grow_first=True)
        self.block3 = Block(256, 728, reps=2, stride=entry_block3_stride, start_with_relu=True,
                            grow_first=True, is_last=True)

        # Middle flow: 16 identical 728-channel blocks (block4 ... block19)
        self.block4 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                            start_with_relu=True, grow_first=True)
        self.block5 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                            start_with_relu=True, grow_first=True)
        self.block6 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                            start_with_relu=True, grow_first=True)
        self.block7 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                            start_with_relu=True, grow_first=True)
        self.block8 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                            start_with_relu=True, grow_first=True)
        self.block9 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                            start_with_relu=True, grow_first=True)
        self.block10 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             start_with_relu=True, grow_first=True)
        self.block11 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             start_with_relu=True, grow_first=True)
        self.block12 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             start_with_relu=True, grow_first=True)
        self.block13 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             start_with_relu=True, grow_first=True)
        self.block14 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             start_with_relu=True, grow_first=True)
        self.block15 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             start_with_relu=True, grow_first=True)
        self.block16 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             start_with_relu=True, grow_first=True)
        self.block17 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             start_with_relu=True, grow_first=True)
        self.block18 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             start_with_relu=True, grow_first=True)
        self.block19 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             start_with_relu=True, grow_first=True)

        # Exit flow
        self.block20 = Block(728, 1024, reps=2, stride=1, dilation=exit_block_dilations[0],
                             start_with_relu=True, grow_first=False, is_last=True)

        self.conv3 = SeparableConv2d_same(1024, 1536, 3, stride=1, dilation=exit_block_dilations[1])
        self.bn3 = nn.BatchNorm2d(1536)

        self.conv4 = SeparableConv2d_same(1536, 1536, 3, stride=1, dilation=exit_block_dilations[1])
        self.bn4 = nn.BatchNorm2d(1536)

        self.conv5 = SeparableConv2d_same(1536, 2048, 3, stride=1, dilation=exit_block_dilations[1])
        self.bn5 = nn.BatchNorm2d(2048)

    def forward(self, x):
        # Entry flow
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)

        x = self.conv2(x)
        x = self.bn2(x)
        x = self.relu(x)

        x = self.block1(x)
        # Low-level features (1/4 input resolution, 128 channels). This aliases x,
        # so block2's leading in-place ReLU also rectifies low_level_feat.
        low_level_feat = x
        x = self.block2(x)
        x = self.block3(x)

        # Middle flow
        x = self.block4(x)
        x = self.block5(x)
        x = self.block6(x)
        x = self.block7(x)
        x = self.block8(x)
        x = self.block9(x)
        x = self.block10(x)
        x = self.block11(x)
        x = self.block12(x)
        x = self.block13(x)
        x = self.block14(x)
        x = self.block15(x)
        x = self.block16(x)
        x = self.block17(x)
        x = self.block18(x)
        x = self.block19(x)

        # Exit flow
        x = self.block20(x)

        x = self.conv3(x)
        x = self.bn3(x)
        x = self.relu(x)

        x = self.conv4(x)
        x = self.bn4(x)
        x = self.relu(x)

        x = self.conv5(x)
        x = self.bn5(x)
        x = self.relu(x)

        return x, low_level_feat
```
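To see why this configuration has an output stride of 16, it helps to trace one spatial dimension through the strided layers. The sketch below is plain Python arithmetic, not a call into the model; `conv_out` and `xception_feature_sizes` are hypothetical helpers. It assumes every stride-1 separable conv pads to "same" size (total padding = effective kernel - 1), so only `conv1` and the strided tails of `block1` to `block3` shrink the map.

```python
def conv_out(size, kernel=3, stride=1, dilation=1):
    """Output length along one axis for a conv padded with (effective kernel - 1) pixels in total."""
    effective = kernel + (kernel - 1) * (dilation - 1)
    pad_total = effective - 1
    return (size + pad_total - effective) // stride + 1  # PyTorch's floor formula


def xception_feature_sizes(size):
    """Hypothetical helper: trace one spatial dimension through the Xception backbone."""
    size = conv_out(size, stride=2)  # conv1: 3x3, stride 2, padding 1
    size = conv_out(size, stride=1)  # conv2: 3x3, stride 1, padding 1
    size = conv_out(size, stride=2)  # block1 ends with a stride-2 separable conv
    low_level = size
    size = conv_out(size, stride=2)  # block2
    size = conv_out(size, stride=2)  # block3 (entry_block3_stride = 2)
    # block4-block20 and conv3-conv5 are stride 1 with "same" padding; e.g. a
    # dilation-2 conv like conv3 leaves the size unchanged.
    size = conv_out(size, stride=1, dilation=2)
    return {"low_level_feat": low_level, "out": size}


for s in (224, 512, 513):
    print(s, xception_feature_sizes(s))
# 224 {'low_level_feat': 56, 'out': 14}
# 512 {'low_level_feat': 128, 'out': 32}
# 513 {'low_level_feat': 129, 'out': 33}
```

For a 513 x 513 input, for instance, the backbone would return a 33 x 33 map with 2048 channels and a 129 x 129 low-level map with 128 channels.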