Basic Denoising Autoencoder

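```python
import tensorflow as tf
import matplotlib.pyplot as plt
import numpy as np

# Let TensorFlow allocate GPU memory on demand instead of reserving it all up front.
gpus = tf.config.list_physical_devices(device_type="GPU")
for gpu in gpus:
    tf.config.experimental.set_memory_growth(gpu, True)

# tf.test.is_gpu_available() is deprecated; checking the device list is equivalent.
gpu_ok = len(gpus) > 0
print("tf version:", tf.__version__)
print("use GPU", gpu_ok)
```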

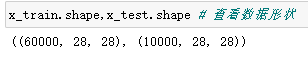

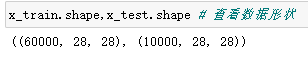

```python
# MNIST: 60,000 training and 10,000 test images of 28x28 handwritten digits.
(x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()
```

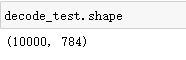

```python
# Flatten each 28x28 image into a 784-dimensional vector and scale pixels to [0, 1].
x_train = x_train.reshape(x_train.shape[0], -1)
x_test = x_test.reshape(x_test.shape[0], -1)
x_train = tf.cast(x_train, tf.float32) / 255
x_test = tf.cast(x_test, tf.float32) / 255

# Corrupt the inputs with Gaussian noise, then clip back into the valid pixel range.
factor = 0.5
x_train_noise = x_train + factor * np.random.normal(size=x_train.shape)
x_test_noise = x_test + factor * np.random.normal(size=x_test.shape)
x_train_noise = np.clip(x_train_noise, 0., 1.)
x_test_noise = np.clip(x_test_noise, 0., 1.)
```

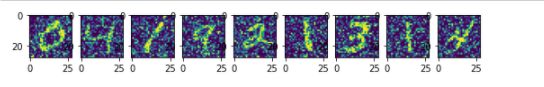

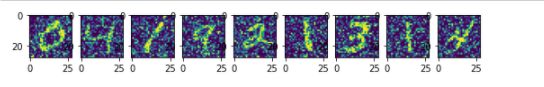
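The corruption step adds zero-mean Gaussian noise scaled by `factor` and clips the result back into [0, 1]. Below is a minimal NumPy sketch of the same idea, assuming a seeded `np.random.default_rng` generator so the noise is reproducible (the code above uses the unseeded global `np.random.normal`). `add_gaussian_noise` is a hypothetical helper name, not part of the original code.

```python
import numpy as np

def add_gaussian_noise(x, factor=0.5, seed=0):
    """Corrupt images in [0, 1] with Gaussian noise and clip back to [0, 1].

    Mirrors x + factor * N(0, 1) from the step above; the seed and the
    float32 cast are assumptions added for reproducibility and memory.
    """
    rng = np.random.default_rng(seed)
    noisy = x + factor * rng.standard_normal(size=x.shape)
    return np.clip(noisy, 0.0, 1.0).astype(np.float32)

# Tiny stand-in for the flattened MNIST batch: 4 images of 784 pixels.
x = np.full((4, 784), 0.5, dtype=np.float32)
x_noisy = add_gaussian_noise(x, factor=0.5, seed=42)
print(x_noisy.shape, x_noisy.dtype, x_noisy.min() >= 0.0, x_noisy.max() <= 1.0)
```

Clipping matters because the decoder ends in a sigmoid and can only produce values in (0, 1), so the inputs are kept in the same range.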

```python
# Show the first 10 noisy training images.
n = 10
plt.figure(figsize=(10, 2))
for i in range(n):
    ax = plt.subplot(1, n, i + 1)
    plt.imshow(x_train_noise[i].reshape(28, 28))
plt.show()
```

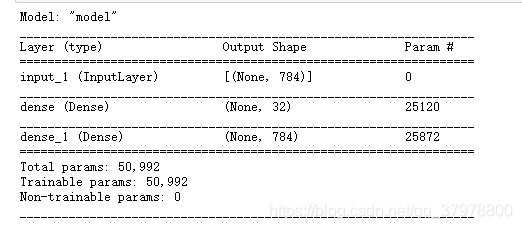

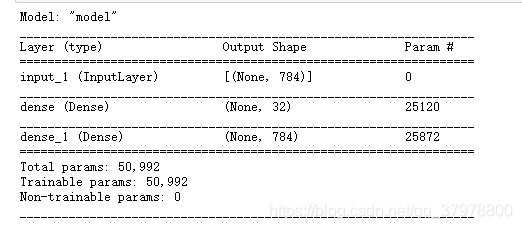

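```python
input_size = 784   # 28 * 28 pixels per flattened image
hidden_size = 32   # size of the bottleneck code
output_size = 784  # the decoder reconstructs a full image
```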

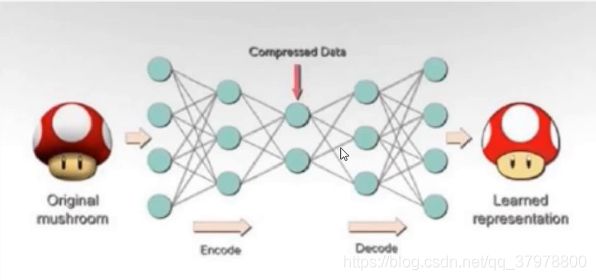

```python
# Encoder: 784 -> 32 with ReLU; decoder: 32 -> 784 with sigmoid so outputs stay in [0, 1].
input = tf.keras.layers.Input(shape=(input_size,))
en = tf.keras.layers.Dense(hidden_size, activation="relu")(input)
de = tf.keras.layers.Dense(output_size, activation="sigmoid")(en)
model = tf.keras.Model(inputs=input, outputs=de)
model.summary()

model.compile(
    optimizer="adam",
    loss="mse"
)
```

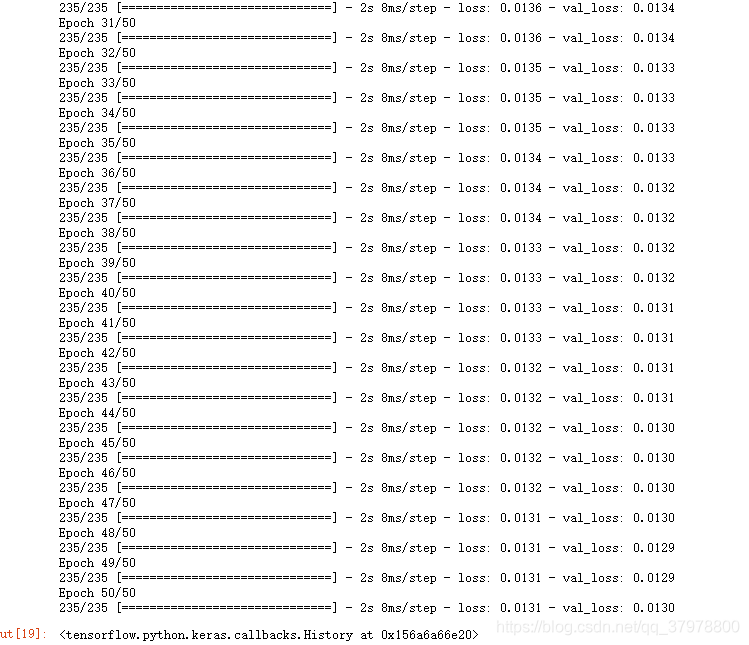

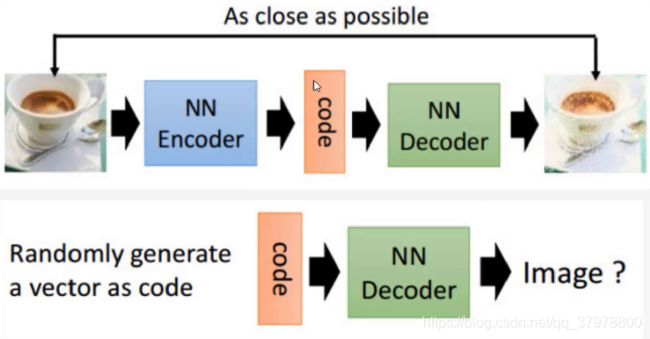
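The parameter count printed by `model.summary()` for this dense autoencoder can be checked by hand: each `Dense` layer holds an `n_in x n_out` weight matrix plus `n_out` biases. A small sketch of that arithmetic (`dense_params` is a hypothetical helper):

```python
def dense_params(n_in, n_out):
    # A Dense layer stores an n_in x n_out weight matrix plus n_out biases.
    return n_in * n_out + n_out

input_size, hidden_size, output_size = 784, 32, 784
encoder_params = dense_params(input_size, hidden_size)   # 784*32 + 32
decoder_params = dense_params(hidden_size, output_size)  # 32*784 + 784
total = encoder_params + decoder_params
print(encoder_params, decoder_params, total)  # 25120 25872 50992
```

Almost all of these weights sit in the two 784x32 matrices; the 32-unit bottleneck is what forces the network to keep only the structure of the digit and discard the noise.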

```python
# Noisy images are the inputs; the clean images are the targets.
model.fit(x_train_noise, x_train,
          epochs=50,
          batch_size=256,
          shuffle=True,
          validation_data=(x_test_noise, x_test))
```

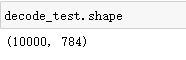
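What makes this a *denoising* autoencoder is the pairing passed to `fit`: noisy inputs, clean targets. Keras's `"mse"` loss averages the squared error over the last axis (the pixels) of each sample and then over the batch. A NumPy sketch of the per-sample part, using a hypothetical 4-pixel "image" to keep the numbers readable:

```python
import numpy as np

def mse_per_sample(y_true, y_pred):
    # Squared error averaged over the pixels (last axis) of each image.
    return np.mean(np.square(y_true - y_pred), axis=-1)

clean = np.array([[0.0, 1.0, 1.0, 0.0]], dtype=np.float32)
noisy = np.array([[0.3, 0.8, 1.0, 0.1]], dtype=np.float32)

# Simply copying the noisy input to the output is penalised against the clean target,
# so the network cannot reach zero loss by learning the identity function.
print(mse_per_sample(clean, noisy))
print(mse_per_sample(clean, clean))
```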

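```python
# Reuse the trained layers to build a stand-alone encoder and decoder.
encode = tf.keras.Model(inputs=input, outputs=en)
input_de = tf.keras.layers.Input(shape=(hidden_size,))
output_de = model.layers[-1](input_de)  # the trained decoder Dense layer
decode = tf.keras.Model(inputs=input_de, outputs=output_de)

# Encode the noisy test images to 32-dimensional codes, then decode them.
encode_test = encode.predict(x_test_noise)
decode_test = decode.predict(encode_test)
```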

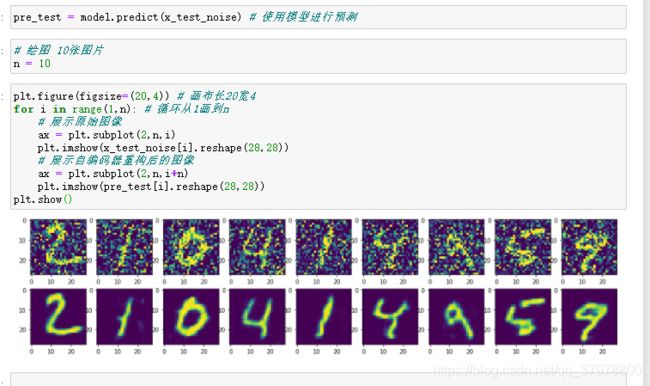
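Splitting the trained model into `encode` and `decode` works because the two `Dense` layers are just functions applied one after the other. The NumPy sketch below shows what each half computes and the resulting shapes. The weights here are random placeholders (`W_enc`, `b_enc`, `W_dec`, `b_dec` are hypothetical names); in the real model they come from training.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)
# Placeholder weights with the same shapes as the two Dense layers.
W_enc = rng.normal(scale=0.05, size=(784, 32))
b_enc = np.zeros(32)
W_dec = rng.normal(scale=0.05, size=(32, 784))
b_dec = np.zeros(784)

x = rng.uniform(size=(5, 784))          # 5 hypothetical noisy images
code = relu(x @ W_enc + b_enc)          # what `encode` computes: (5, 32)
recon = sigmoid(code @ W_dec + b_dec)   # what `decode` computes: (5, 784)
print(code.shape, recon.shape)  # (5, 32) (5, 784)
```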

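```python
# Top row: x_test_noise; bottom row: decode_test, the decoder's reconstructions.
n = 10
plt.figure(figsize=(20, 4))
for i in range(n):
    ax = plt.subplot(2, n, i + 1)
    plt.imshow(x_test_noise[i].reshape(28, 28))
    ax = plt.subplot(2, n, i + 1 + n)
    plt.imshow(decode_test[i].reshape(28, 28))
plt.show()
```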

Convolutional Denoising Autoencoder

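```python
import tensorflow as tf
import matplotlib.pyplot as plt
import numpy as np

# Let TensorFlow allocate GPU memory on demand instead of reserving it all up front.
gpus = tf.config.list_physical_devices(device_type="GPU")
for gpu in gpus:
    tf.config.experimental.set_memory_growth(gpu, True)

# tf.test.is_gpu_available() is deprecated; checking the device list is equivalent.
gpu_ok = len(gpus) > 0
print("tf version:", tf.__version__)
print("use GPU", gpu_ok)
```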

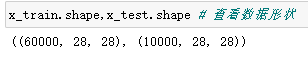

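```python
(x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()

# Conv2D expects (height, width, channels): add a trailing channel axis instead of flattening.
x_train = np.expand_dims(x_train, -1)
x_test = np.expand_dims(x_test, -1)
x_train = tf.cast(x_train, tf.float32) / 255
x_test = tf.cast(x_test, tf.float32) / 255
```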

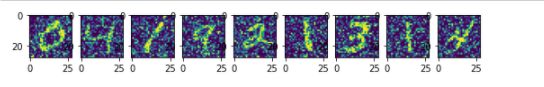
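The only preprocessing difference from the dense model is the input layout. The dense autoencoder consumed flat 784-pixel vectors, while `Conv2D` (with Keras's default `channels_last` format) needs a 2-D grid with an explicit channel axis. A NumPy sketch comparing the two layouts on a hypothetical batch of three blank images:

```python
import numpy as np

# Hypothetical stand-in for a batch of 3 MNIST images (uint8, 28x28).
images = np.zeros((3, 28, 28), dtype=np.uint8)

# Dense autoencoder: one flat vector of 784 pixels per image.
flat = images.reshape(images.shape[0], -1).astype(np.float32) / 255
# Convolutional autoencoder: keep the 2-D grid and add a single grayscale channel.
grid = np.expand_dims(images, -1).astype(np.float32) / 255

print(flat.shape, grid.shape)  # (3, 784) (3, 28, 28, 1)
```

Keeping the grid lets the convolutions exploit the fact that neighbouring pixels are related, which the flattened vector throws away.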

```python
# Same corruption as before: Gaussian noise, then clip back into [0, 1].
factor = 0.5
x_train_noise = x_train + factor * np.random.normal(size=x_train.shape)
x_test_noise = x_test + factor * np.random.normal(size=x_test.shape)
x_train_noise = np.clip(x_train_noise, 0., 1.)
x_test_noise = np.clip(x_test_noise, 0., 1.)
```

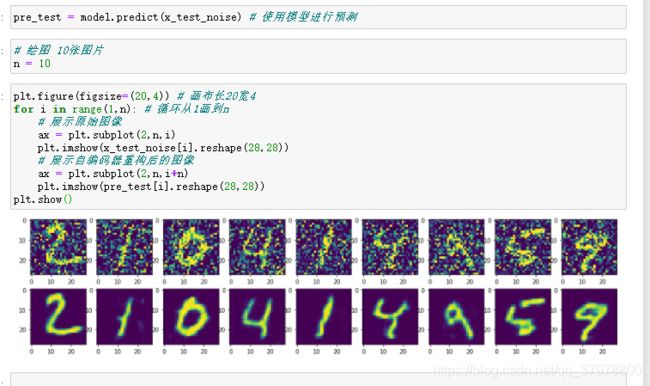

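```python
# Show the first 10 noisy training images.
n = 10
plt.figure(figsize=(10, 2))
for i in range(n):
    ax = plt.subplot(1, n, i + 1)
    plt.imshow(x_train_noise[i].reshape(28, 28))
plt.show()

# Carried over from the dense model; the convolutional model below does not use these.
input_size = 784
hidden_size = 32
output_size = 784
```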

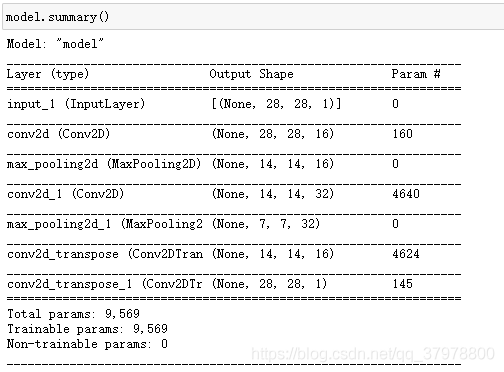

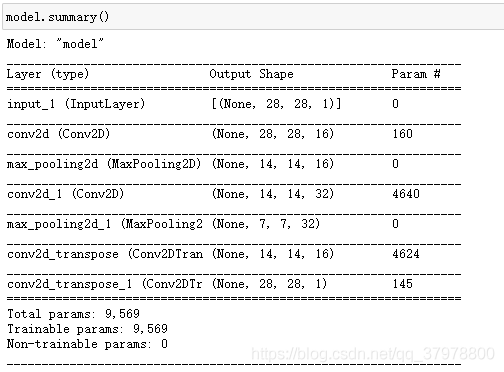

```python
# Encoder: two Conv2D + MaxPooling2D stages, 28x28x1 -> 14x14x16 -> 7x7x32.
input = tf.keras.layers.Input(shape=x_train.shape[1:])
x = tf.keras.layers.Conv2D(16, 3, activation="relu", padding="same")(input)
x = tf.keras.layers.MaxPooling2D(padding="same")(x)
x = tf.keras.layers.Conv2D(32, 3, activation="relu", padding="same")(x)
x = tf.keras.layers.MaxPooling2D(padding="same")(x)

# Decoder: two stride-2 transposed convolutions, 7x7x32 -> 14x14x16 -> 28x28x1.
x = tf.keras.layers.Conv2DTranspose(16, 3, strides=2,
                                    activation="relu",
                                    padding="same")(x)
x = tf.keras.layers.Conv2DTranspose(1, 3, strides=2,
                                    activation="sigmoid",
                                    padding="same")(x)

model = tf.keras.Model(inputs=input, outputs=x)
model.compile(
    optimizer="adam",
    loss="mse"
)
```

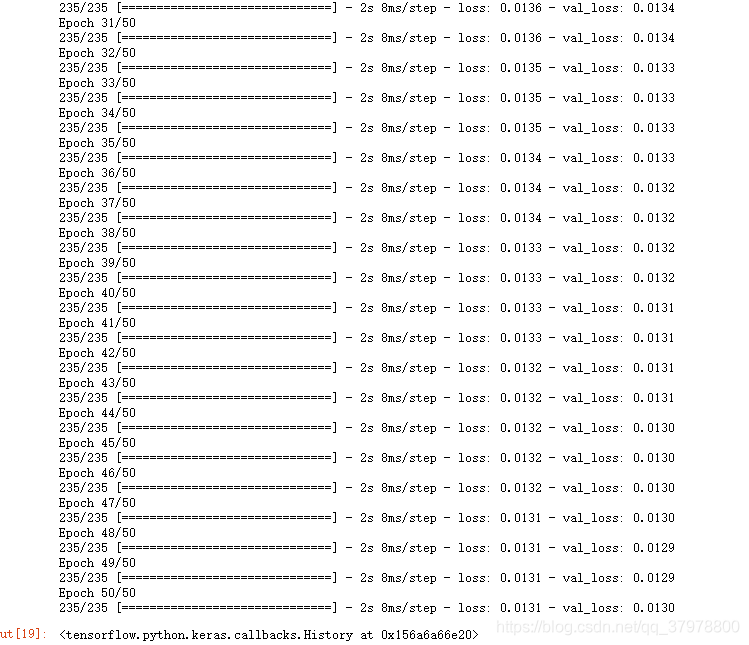
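The decoder has to land exactly back on 28x28x1 so its output can be compared pixel by pixel with the clean target. With `padding="same"`, a stride-1 `Conv2D` keeps the spatial size, `MaxPooling2D` (default pool size and stride 2) gives `ceil(size / 2)`, and a `Conv2DTranspose` with stride 2 doubles the size. The sketch below traces the height through the model and counts the weights of each layer; the helper names are hypothetical.

```python
import math

def pool_same(size, pool=2):
    # MaxPooling2D(padding="same") with the default pool_size=2 and strides=2.
    return math.ceil(size / pool)

def transpose_same(size, stride=2):
    # Conv2DTranspose(padding="same") multiplies the spatial size by the stride.
    return size * stride

def conv_params(k, c_in, c_out):
    # One k x k kernel per (input, output) channel pair, plus one bias per output channel.
    return k * k * c_in * c_out + c_out

h = 28                  # the stride-1 Conv2D layers keep this size
h = pool_same(h)        # after first MaxPooling2D: 14
h = pool_same(h)        # after second MaxPooling2D: 7
h = transpose_same(h)   # after first Conv2DTranspose: 14
h = transpose_same(h)   # after second Conv2DTranspose: 28

params = [
    conv_params(3, 1, 16),   # Conv2D(16, 3) on 1 input channel
    conv_params(3, 16, 32),  # Conv2D(32, 3)
    conv_params(3, 32, 16),  # Conv2DTranspose(16, 3)
    conv_params(3, 16, 1),   # Conv2DTranspose(1, 3)
]
print(h, params, sum(params))  # 28 [160, 4640, 4624, 145] 9569
```

Compared with the dense model, the bottleneck here is larger (7x7x32 = 1568 values) but the network has far fewer weights, because each 3x3 kernel is shared across every position in the image.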

```python
# Noisy images are the inputs; the clean images are the targets.
model.fit(x_train_noise, x_train,
          epochs=50,
          batch_size=256,
          shuffle=True,
          validation_data=(x_test_noise, x_test))
```