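Python Web Scraping (22): Scrapy Case Study -- Scraping Tencent Recruitment Data

Scraping Tencent recruitment data with Scrapy

Requirements

Scrape the technical job postings listed under Tencent Careers > Social Recruitment > Technology, including pagination.

Page analysis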

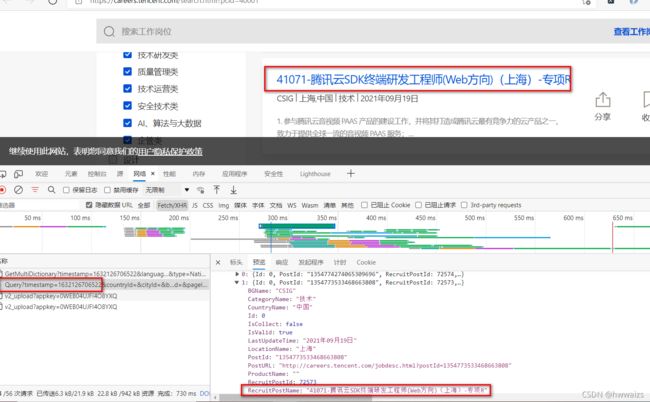

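Copy any job title, then right-click and view the page source. The title does not appear there, which means the listing is not part of the static HTML but is loaded dynamically. Open the developer tools, go to Network > XHR, and refresh the page. Four requests appear in the Name column on the left. Click through them to find the data interface: the Preview tab of the request whose name starts with Query shows the job titles we need, so the URL under that request's Headers tab is the one to scrape.

Clicking "next page" at the bottom of the page loads another request starting with Query. After opening a few pages, a pattern shows up in the URLs.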

Page 1: https://careers.tencent.com/tencentcareer/api/post/Query?timestamp=1632126706522&countryId=&cityId=&bgIds=&productId=&categoryId=&parentCategoryId=40001&attrId=&keyword=&pageIndex=1&pageSize=10&language=zh-cn&area=cn

Page 2: https://careers.tencent.com/tencentcareer/api/post/Query?timestamp=1632127143042&countryId=&cityId=&bgIds=&productId=&categoryId=40001001,40001002,40001003,40001004,40001005,40001006&parentCategoryId=&attrId=&keyword=&pageIndex=2&pageSize=10&language=zh-cn&area=cn

Page 3: https://careers.tencent.com/tencentcareer/api/post/Query?timestamp=1632127244688&countryId=&cityId=&bgIds=&productId=&categoryId=40001001,40001002,40001003,40001004,40001005,40001006&parentCategoryId=&attrId=&keyword=&pageIndex=3&pageSize=10&language=zh-cn&area=cn

timestamp is the time at which the page was requested, as a Unix timestamp in milliseconds.

Taking the page 3 URL and changing pageIndex to 1 still returns the first page, so for pagination pageIndex=n simply means page n.
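Since only pageIndex changes from page to page, the list URLs can be generated rather than copied by hand. The following is a minimal sketch of that idea using only the standard library; the helper name `build_list_url` and the constant `BASE_URL` are my own, and the parameter values are taken from the page 1 URL above.

```python
from urllib.parse import urlencode

# Endpoint taken from the URLs listed above
BASE_URL = 'https://careers.tencent.com/tencentcareer/api/post/Query'


def build_list_url(page_index, page_size=10, timestamp=1632126706522):
    """Build the list-API URL for one page (hypothetical helper)."""
    # Parameter names, order, and values mirror the page 1 URL
    params = {
        'timestamp': timestamp,
        'countryId': '',
        'cityId': '',
        'bgIds': '',
        'productId': '',
        'categoryId': '',
        'parentCategoryId': 40001,
        'attrId': '',
        'keyword': '',
        'pageIndex': page_index,
        'pageSize': page_size,
        'language': 'zh-cn',
        'area': 'cn',
    }
    return BASE_URL + '?' + urlencode(params)


# Print the URLs of the first three pages
for n in range(1, 4):
    print(build_list_url(n))
```

With the default arguments, `build_list_url(1)` reproduces the page 1 URL character for character, which is a quick way to check that no parameter was mistyped.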

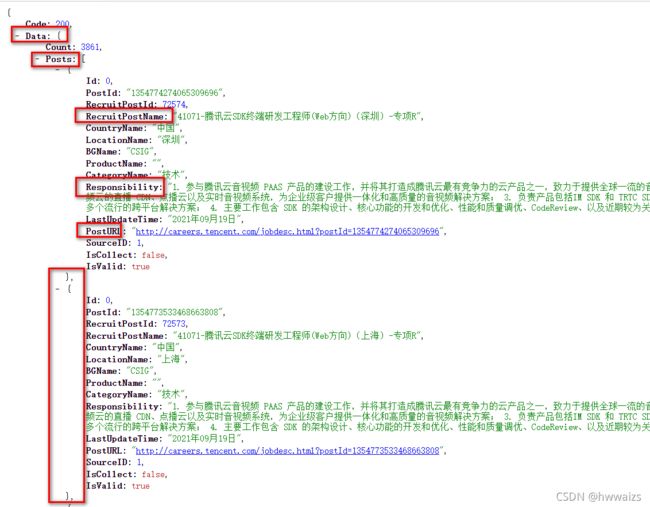

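Opening any of these URLs directly in the browser shows that the data we want is returned as a dictionary (JSON), so the URL we just visited is exactly the URL to scrape.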
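To make the "dictionary" shape concrete, here is a small standalone sketch that walks such a response with the `json` module. The sample body is made up and only mirrors the key layout this post relies on (`Data` > `Posts`, then `RecruitPostName`, `LocationName`, `Responsibility`, `PostURL`); the function name `extract_posts` is hypothetical, and a real response contains more keys than shown.

```python
import json

# Made-up sample: only the key layout matches what the spider reads
sample_body = '''
{
  "Data": {
    "Posts": [
      {
        "RecruitPostName": "Backend Engineer (example)",
        "LocationName": "Shenzhen",
        "Responsibility": "  Build and maintain services.  ",
        "PostURL": "https://example.com/post/1"
      }
    ]
  }
}
'''


def extract_posts(body):
    """Return one plain dict per posting found in a list-API response body."""
    payload = json.loads(body)
    rows = []
    for post in payload['Data']['Posts']:
        rows.append({
            'RecruitPostName': post['RecruitPostName'],
            'LocationName': post['LocationName'],
            # Strip the surrounding whitespace from the free-text field
            'Responsibility': post['Responsibility'].strip(),
            'PostURL': post['PostURL'],
        })
    return rows


rows = extract_posts(sample_body)
print(rows[0]['RecruitPostName'])
```

Inside a Scrapy callback the same lookup is done on `response.json()` instead of `json.loads(body)`.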

Implementation

Scrape the job title, location, responsibilities, and detail-page URL

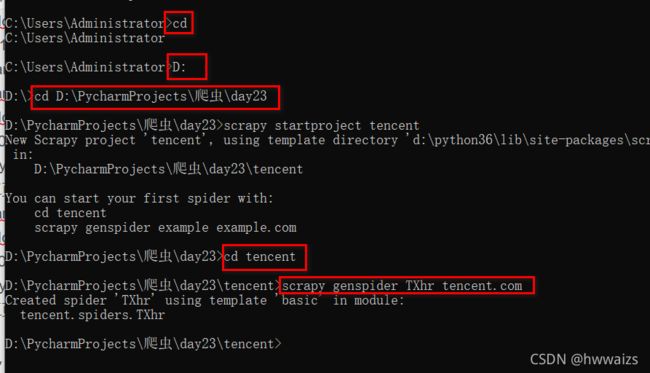

Create the Scrapy project. Here it was created from cmd (my crawler files live on the D: drive); it can also be created directly in the PyCharm Terminal.

start.py

from scrapy import cmdline

# cmdline.execute(['scrapy', 'crawl', 'TXhr'])  # Option 1: pass the argument list directly

cmdline.execute('scrapy crawl TXhr'.split(" "))  # Option 2: split the command string

items.py

import scrapy


class TencentItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    RecruitPostName = scrapy.Field()
    LocationName = scrapy.Field()
    Responsibility = scrapy.Field()
    PostURL = scrapy.Field()
    # Job requirements, filled in from the detail page later in this post
    Requirement = scrapy.Field()

settings.py

# Only show warnings and errors in the console
LOG_LEVEL = 'WARNING'

BOT_NAME = 'tencent'

SPIDER_MODULES = ['tencent.spiders']
NEWSPIDER_MODULE = 'tencent.spiders'

ROBOTSTXT_OBEY = False

# Headers sent with every request
DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.107 Safari/537.36 Edg/92.0.902.62'
}

# Enable the pipeline that writes the items to CSV
ITEM_PIPELINES = {
    'tencent.pipelines.TencentPipeline': 300,
}

pipelines.py

import csv


class TencentPipeline:
    header = ['RecruitPostName', 'LocationName', 'Responsibility', 'PostURL']

    def open_spider(self, spider):
        # Open the file once per crawl instead of once per item
        self.file = open('tx.csv', 'a', encoding='utf-8', newline="")
        self.writer = csv.DictWriter(self.file, self.header)
        # Write the header only if the file is still empty, so it is not
        # repeated before every row
        if self.file.tell() == 0:
            self.writer.writeheader()

    def process_item(self, item, spider):
        # print(dict(item))  # debug output
        self.writer.writerow(dict(item))
        return item

    def close_spider(self, spider):
        self.file.close()

TXhr.py

import scrapy

from tencent.items import TencentItem


class TxhrSpider(scrapy.Spider):
    name = 'TXhr'
    allowed_domains = ['tencent.com']
    # The list may hold several URLs
    start_urls = [
        'https://careers.tencent.com/tencentcareer/api/post/Query?timestamp=1632126706522&countryId=&cityId=&bgIds=&productId=&categoryId=&parentCategoryId=40001&attrId=&keyword=&pageIndex=1&pageSize=10&language=zh-cn&area=cn',
        'https://careers.tencent.com/tencentcareer/api/post/Query?timestamp=1632126706522&countryId=&cityId=&bgIds=&productId=&categoryId=&parentCategoryId=40001&attrId=&keyword=&pageIndex=2&pageSize=10&language=zh-cn&area=cn',
        'https://careers.tencent.com/tencentcareer/api/post/Query?timestamp=1632126706522&countryId=&cityId=&bgIds=&productId=&categoryId=&parentCategoryId=40001&attrId=&keyword=&pageIndex=3&pageSize=10&language=zh-cn&area=cn',
        'https://careers.tencent.com/tencentcareer/api/post/Query?timestamp=1632126706522&countryId=&cityId=&bgIds=&productId=&categoryId=&parentCategoryId=40001&attrId=&keyword=&pageIndex=4&pageSize=10&language=zh-cn&area=cn',
    ]

    def parse(self, response):
        # The response body is JSON; the postings sit under Data > Posts
        posts = response.json()['Data']['Posts']
        for post in posts:
            item = TencentItem(
                RecruitPostName=post['RecruitPostName'],
                LocationName=post['LocationName'],
                Responsibility=post['Responsibility'].strip(),
                PostURL=post['PostURL'],
            )
            # print(item)
            yield item

Implementing automatic pagination

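import scrapy

from tencent.items import TencentItem


class TxhrSpider(scrapy.Spider):
    name = 'TXhr'
    allowed_domains = ['tencent.com']
    # URL template; pageIndex is filled in with format()
    every_url = 'https://careers.tencent.com/tencentcareer/api/post/Query?timestamp=1632126706522&countryId=&cityId=&bgIds=&productId=&categoryId=&parentCategoryId=40001&attrId=&keyword=&pageIndex={}&pageSize=10&language=zh-cn&area=cn'
    start_urls = [every_url.format(1)]

    def parse(self, response):
        posts = response.json()['Data']['Posts']
        for job_post in posts:
            item = TencentItem()
            # Only fields declared in TencentItem can be assigned;
            # an undeclared key raises KeyError
            item['RecruitPostName'] = job_post['RecruitPostName']
            item['LocationName'] = job_post['LocationName']
            item['Responsibility'] = job_post['Responsibility'].strip()
            item['PostURL'] = job_post['PostURL']
            yield item

        # yield scrapy.Request(
        #     url=self.every_url.format(2),
        #     # Pass the method itself: no parentheses after self.parse
        #     callback=self.parse
        # )

        # Pagination: after parsing the first page, request the following
        # pages and let this same parse method handle them.
        # Every parsed page yields these requests again; Scrapy's duplicate
        # filter drops the repeats.
        for page in range(2, 5):
            next_url = self.every_url.format(page)
            yield scrapy.Request(
                url=next_url,
                # parse is the default callback, so it can be omitted when
                # the same method handles the response
                # callback=self.parse
            )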

The code above scrapes the job title, location, responsibilities, and detail-page URL.
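The pagination loop hard-codes pages 2 to 4. One alternative is to derive the next URL from the current one and stop once a page comes back without postings. The sketch below shows only the URL arithmetic, using the standard library; the helper name `next_page_url` is hypothetical, and it rests on the assumption (not verified here) that the API returns an empty or missing Posts list past the last page.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def next_page_url(current_url, posts):
    """Return the URL of the following page, or None when `posts` is empty.

    Assumption: a page beyond the last one carries no postings.
    """
    if not posts:
        return None
    parts = urlsplit(current_url)
    # keep_blank_values preserves empty parameters such as countryId=
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query['pageIndex'] = str(int(query['pageIndex']) + 1)
    return urlunsplit(parts._replace(query=urlencode(query)))


url = ('https://careers.tencent.com/tencentcareer/api/post/Query'
       '?timestamp=1632126706522&countryId=&pageIndex=2&pageSize=10')
print(next_page_url(url, posts=[{'RecruitPostName': 'example'}]))
print(next_page_url(url, posts=[]))
```

In a spider, the callback would call this helper with `response.url` and the parsed postings, and yield a new `scrapy.Request` only when the result is not None.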

Scraping the job requirements from each posting's detail page

The code below scrapes the job requirements shown on the detail page.

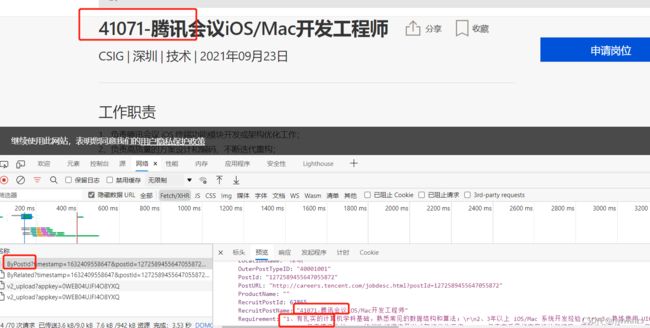

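Copy the job requirements from a detail page and search for them in the page source: they are not there either, so the detail page is also loaded dynamically. Right-click, choose Inspect, and look for the data under Network > XHR in the Preview tab.

The inspection shows that the job requirements are served by the request whose name starts with ByPostId?. Here are the URLs for two postings:

https://careers.tencent.com/tencentcareer/api/post/ByPostId?timestamp=1632409558647&postId=1272589455647055872&language=zh-cn

https://careers.tencent.com/tencentcareer/api/post/ByPostId?timestamp=1632409729213&postId=1272589329310425088&language=zh-cn

timestamp is again the time of the request. The detail URLs of different postings differ only in the value of postId, and the data fetched in the first step shows that each posting's postId is already present in the list-page dictionary.

So we can build on the first crawl: read the postId of each posting and insert it into the detail-page URL.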
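As a standalone illustration of that step, the following sketch builds a detail URL from a postId. The helper name `build_detail_url` is hypothetical; the endpoint and parameter names come from the two URLs above, and generating a fresh millisecond timestamp instead of reusing a copied one is my own choice, not something the API is known to require.

```python
import time
from urllib.parse import urlencode

# Endpoint taken from the detail URLs above
DETAIL_API = 'https://careers.tencent.com/tencentcareer/api/post/ByPostId'


def build_detail_url(post_id, timestamp=None):
    """Build the detail-API URL for one posting (hypothetical helper)."""
    if timestamp is None:
        # Milliseconds since the epoch, like the 13-digit values above
        timestamp = int(time.time() * 1000)
    params = {'timestamp': timestamp, 'postId': post_id, 'language': 'zh-cn'}
    return DETAIL_API + '?' + urlencode(params)


# Reproduces the first detail URL shown above
print(build_detail_url('1272589455647055872', timestamp=1632409558647))
```

The spider in this post achieves the same thing with a URL template and `str.format`.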

TXhr.py

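import json

import scrapy

from tencent.items import TencentItem


class TxhrSpider(scrapy.Spider):
    name = 'TXhr'
    allowed_domains = ['tencent.com']
    # URL template for the list pages
    every_url = 'https://careers.tencent.com/tencentcareer/api/post/Query?timestamp=1632126706522&countryId=&cityId=&bgIds=&productId=&categoryId=&parentCategoryId=40001&attrId=&keyword=&pageIndex={}&pageSize=10&language=zh-cn&area=cn'
    # URL template for the detail pages
    detail_url = 'https://careers.tencent.com/tencentcareer/api/post/ByPostId?timestamp=1632409558647&postId={}&language=zh-cn'
    start_urls = [every_url.format(1)]

    # Parse the data of a list page
    def parse(self, response):
        posts = response.json()['Data']['Posts']
        for job_post in posts:
            item = TencentItem()
            # Only fields declared in TencentItem can be assigned
            item['RecruitPostName'] = job_post['RecruitPostName']
            # If this raises KeyError, check the key's exact spelling and
            # case in the Preview tab
            post_id = job_post['postId']
            # Build the detail-page URL
            new_detail_url = self.detail_url.format(post_id)
            # Send a new request and handle its response in parse_detail
            yield scrapy.Request(
                url=new_detail_url,
                # Pass the method itself, without parentheses
                callback=self.parse_detail,
                # meta passes data to the callback as a dict: the value is
                # the data to hand over, the key is a name of your choosing
                # that the callback uses to read it back
                meta={'items': item},
            )

        # Pagination: after parsing the first page, request the following
        # pages and let this same parse method handle them
        for page in range(2, 5):
            next_url = self.every_url.format(page)
            yield scrapy.Request(
                url=next_url,
                # parse is the default callback, so it can be omitted when
                # the same method handles the response
                # callback=self.parse
            )
        # Request flow: the spider yields the request to the engine, the
        # engine enqueues it in the scheduler, and the scheduler later hands
        # a request back to the engine, which passes it through the
        # downloader middleware to the downloader. The downloader sends the
        # request and receives the response, which travels back through the
        # downloader middleware to the engine and then through the spider
        # middleware to the spider, where the callback receives it.

    # Extract the job requirements from the detail page
    def parse_detail(self, response):
        # Option 1 for reading the passed data
        # item = response.meta['items']
        # Option 2
        item = response.meta.get('items')
        # Parse the response body as JSON
        data = json.loads(response.text)
        # Requires a `Requirement = scrapy.Field()` declaration in TencentItem.
        # The list endpoint capitalises its top-level key ('Data'); if this
        # lookup raises KeyError, check the key case in the Preview tab.
        item['Requirement'] = data['data']['Requirement']
        print(item)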