Seata Source Code Analysis: The RPC Module in Detail

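Preface

Seata is a distributed transaction framework, and any distributed transaction solution necessarily involves network communication. Seata implements its own RPC module, which the RM, TM, and TC use to communicate when creating, committing, and rolling back transactions.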

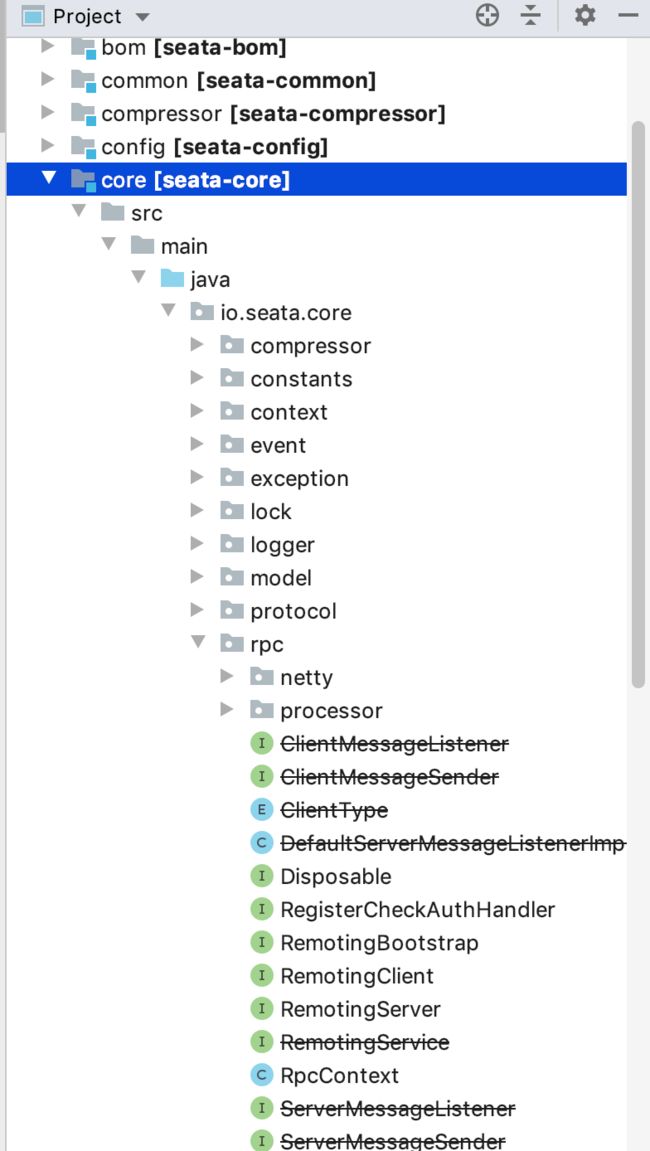

项目结构

Seata rpc模块位于core项目中,代码结构整体预览如下所示:

源码分析

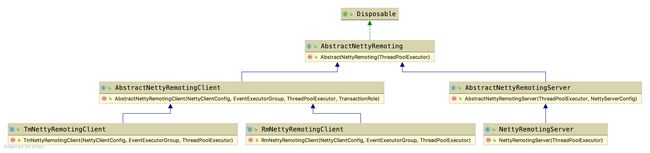

Seata是使用netty做为RPC的底层通信,接下来我们先分析下Seata对netty模块的使用。

Seata封装的rpc通信类图如下:

1. Netty Server communication module

1.1 Initializing the Netty Server

The NettyServerBootstrap constructor initializes Netty's bossGroup and workerGroup:

    public NettyServerBootstrap(NettyServerConfig nettyServerConfig) {
        this.nettyServerConfig = nettyServerConfig;
        if (NettyServerConfig.enableEpoll()) {
            this.eventLoopGroupBoss = new EpollEventLoopGroup(nettyServerConfig.getBossThreadSize(),
                new NamedThreadFactory(nettyServerConfig.getBossThreadPrefix(), nettyServerConfig.getBossThreadSize()));
            this.eventLoopGroupWorker = new EpollEventLoopGroup(nettyServerConfig.getServerWorkerThreads(),
                new NamedThreadFactory(nettyServerConfig.getWorkerThreadPrefix(),
                    nettyServerConfig.getServerWorkerThreads()));
        } else {
            this.eventLoopGroupBoss = new NioEventLoopGroup(nettyServerConfig.getBossThreadSize(),
                new NamedThreadFactory(nettyServerConfig.getBossThreadPrefix(), nettyServerConfig.getBossThreadSize()));
            this.eventLoopGroupWorker = new NioEventLoopGroup(nettyServerConfig.getServerWorkerThreads(),
                new NamedThreadFactory(nettyServerConfig.getWorkerThreadPrefix(),
                    nettyServerConfig.getServerWorkerThreads()));
        }
        // init listenPort in constructor so that getListenPort() will always get the exact port
        setListenPort(nettyServerConfig.getDefaultListenPort());
    }

NettyServerBootstrap.start() starts the Netty server: it applies the startup options and installs the handlers that process Seata requests:

    @Override
    public void start() {
        this.serverBootstrap.group(this.eventLoopGroupBoss, this.eventLoopGroupWorker)
            .channel(NettyServerConfig.SERVER_CHANNEL_CLAZZ)
            .option(ChannelOption.SO_BACKLOG, nettyServerConfig.getSoBackLogSize())
            .option(ChannelOption.SO_REUSEADDR, true)
            .childOption(ChannelOption.SO_KEEPALIVE, true)
            .childOption(ChannelOption.TCP_NODELAY, true)
            .childOption(ChannelOption.SO_SNDBUF, nettyServerConfig.getServerSocketSendBufSize())
            .childOption(ChannelOption.SO_RCVBUF, nettyServerConfig.getServerSocketResvBufSize())
            .childOption(ChannelOption.WRITE_BUFFER_WATER_MARK,
                new WriteBufferWaterMark(nettyServerConfig.getWriteBufferLowWaterMark(),
                    nettyServerConfig.getWriteBufferHighWaterMark()))
            .localAddress(new InetSocketAddress(listenPort))
            .childHandler(new ChannelInitializer<SocketChannel>() {
                @Override
                public void initChannel(SocketChannel ch) {
                    ch.pipeline().addLast(new IdleStateHandler(nettyServerConfig.getChannelMaxReadIdleSeconds(), 0, 0))
                        .addLast(new ProtocolV1Decoder())
                        .addLast(new ProtocolV1Encoder());
                    if (channelHandlers != null) {
                        addChannelPipelineLast(ch, channelHandlers);
                    }
                }
            });

        try {
            ChannelFuture future = this.serverBootstrap.bind(listenPort).sync();
            LOGGER.info("Server started, listen port: {}", listenPort);
            RegistryFactory.getInstance().register(new InetSocketAddress(XID.getIpAddress(), XID.getPort()));
            initialized.set(true);
            future.channel().closeFuture().sync();
        } catch (Exception exx) {
            throw new RuntimeException(exx);
        }
    }

As the code above shows, Seata uses four handlers to deal with RPC heartbeats, encoding and decoding, and business requests:

- IdleStateHandler: Netty's built-in idle-state handler, used for heartbeat detection
- ProtocolV1Decoder: Seata's decoder
- ProtocolV1Encoder: Seata's encoder
- ServerHandler: the handler for business requests

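Netty options used by Seata:

| Option | Default | Description |
| --- | --- | --- |
| ChannelOption.SO_BACKLOG | 1024 | Maximum length of the queue of pending connection requests. |
| ChannelOption.SO_REUSEADDR | true | Enables port reuse. |
| ChannelOption.SO_KEEPALIVE | true | Keeps connections alive. |
| ChannelOption.TCP_NODELAY | true | Disables Nagle's algorithm to reduce RPC latency. |
| ChannelOption.SO_SNDBUF, ChannelOption.SO_RCVBUF | 153600 | Size of the TCP send and receive buffers. |
| ChannelOption.WRITE_BUFFER_WATER_MARK | low: 1048576 (1 MB), high: 67108864 (64 MB) | Netty's low and high write-buffer water marks, which protect the system from being overwhelmed. |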

1.2 ProtocolV1Decoder in detail

Design of the Seata RPC protocol header:

| Len | Param | Desc | Meaning |
| --- | --- | --- | --- |
| 2B | Magic Code | 0xdada | Magic number |
| 1B | ProtocolVersion | 1 | Protocol version, used for incompatible upgrades |
| 4B | FullLength | include front 3 bytes and self 4 bytes | Total length, used for frame splitting; it counts the 3 preceding bytes and its own 4 bytes as well |
| 2B | HeadLength | fixed header fields plus head map | Head length; in the code below it starts at ProtocolConstants.V1_HEAD_LENGTH and grows by the size of the HeadMap |
| 1B | Message type | request(oneway/twoway)/response/heartbeat/callback | Message type: request (one-way/two-way), response, heartbeat, callback, go away, etc. |
| 1B | Serialization | custom, hessian, pb | Serialization type: built-in, hessian, protobuf, etc. |
| 1B | CompressType | None/gzip/snappy... | Compression algorithm: none, gzip, snappy |
| 4B | MessageId | Integer | Message ID |
| 2B | TypeCode | code in AbstractMessage | Message type code, as defined in AbstractMessage |
| ?B | HeadMap[Optional] | exists when head length > 16 | Header map (optional; a head length greater than 16 means a HeadMap is present) |
| | HeadMap entry | ATTR_KEY(?B) key:string:length(2B)+data; ATTR_TYPE(1B) 1:int, 2:string, 3:byte, 4:short; ATTR_VAL(?B) int:(4B), string:length(2B)+data, byte:(1B), short:(2B) | Key: string; value type; value |
| ?B | Body | (FullLength-HeadLength) | Request body; its length is the total length minus the head length |

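As the table shows, without the optional HeadMap extension the fixed header fields add up to 18 bytes, which is quite compact. Note that the decodeFrame code below reads only 16 bytes of fixed fields (it does not read TypeCode as a separate header field), which is consistent with the rule that a HeadMap exists when the head length is greater than 16. A highlight of the protocol design is its support for header extension (HeadLength plus the optional HeadMap). The design is both flexible and efficient: pass-through parameters can travel in the header instead of the body, so they can be read directly from the header without decoding the body.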
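To make the layout concrete, here is a minimal sketch that writes and reads the fixed part of the header with java.nio.ByteBuffer, in the order decodeFrame reads the fields. It is not Seata code: the class HeaderSketch, its methods, and the constant FIXED_HEAD_LENGTH are hypothetical names, the value 16 is an assumption taken from the "head length > 16" rule in the table, and HeadMap, compression, and serialization are left out.

```java
import java.nio.ByteBuffer;

/** Hypothetical sketch of the fixed header layout; not Seata's implementation. */
final class HeaderSketch {
    // Assumed fixed header size: 2 + 1 + 4 + 2 + 1 + 1 + 1 + 4 bytes.
    static final int FIXED_HEAD_LENGTH = 16;

    /** Writes a frame with no HeadMap: fixed header followed by the raw body. */
    static ByteBuffer encode(byte messageType, byte codec, byte compressor, int id, byte[] body) {
        ByteBuffer buf = ByteBuffer.allocate(FIXED_HEAD_LENGTH + body.length); // big-endian by default
        buf.put((byte) 0xda).put((byte) 0xda);           // magic code, 2B
        buf.put((byte) 1);                               // protocol version, 1B
        buf.putInt(FIXED_HEAD_LENGTH + body.length);     // full length, 4B
        buf.putShort((short) FIXED_HEAD_LENGTH);         // head length, 2B
        buf.put(messageType).put(codec).put(compressor); // three 1B fields
        buf.putInt(id);                                  // message id, 4B
        buf.put(body);
        buf.flip();
        return buf;
    }

    /** Reads the fixed header and returns {messageType, id, bodyLength}. */
    static int[] decode(ByteBuffer frame) {
        if (frame.get() != (byte) 0xda || frame.get() != (byte) 0xda) {
            throw new IllegalArgumentException("Unknown magic code");
        }
        frame.get();                         // protocol version (not checked in this sketch)
        int fullLength = frame.getInt();
        short headLength = frame.getShort();
        byte messageType = frame.get();
        frame.get();                         // codec
        frame.get();                         // compressor
        int id = frame.getInt();
        return new int[] {messageType, id, fullLength - headLength};
    }
}
```

The body length is never stored on its own; the reader derives it as the full length minus the head length, which is what lets the header grow without changing how the body is located.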

ProtocolV1Decoder.decodeFrame

    public Object decodeFrame(ByteBuf frame) {
        byte b0 = frame.readByte();//@1
        byte b1 = frame.readByte();
        if (ProtocolConstants.MAGIC_CODE_BYTES[0] != b0
            || ProtocolConstants.MAGIC_CODE_BYTES[1] != b1) {
            throw new IllegalArgumentException("Unknown magic code: " + b0 + ", " + b1);
        }//@2

        byte version = frame.readByte();
        // TODO check version compatible here

        int fullLength = frame.readInt();//@3
        short headLength = frame.readShort();
        byte messageType = frame.readByte();
        byte codecType = frame.readByte();
        byte compressorType = frame.readByte();
        int requestId = frame.readInt();

        RpcMessage rpcMessage = new RpcMessage();
        rpcMessage.setCodec(codecType);
        rpcMessage.setId(requestId);
        rpcMessage.setCompressor(compressorType);
        rpcMessage.setMessageType(messageType);//@4

        // direct read head with zero-copy
        int headMapLength = headLength - ProtocolConstants.V1_HEAD_LENGTH;//@5
        if (headMapLength > 0) {
            Map<String, String> map = HeadMapSerializer.getInstance().decode(frame, headMapLength);
            rpcMessage.getHeadMap().putAll(map);
        }//@6

        // read body
        if (messageType == ProtocolConstants.MSGTYPE_HEARTBEAT_REQUEST) {
            rpcMessage.setBody(HeartbeatMessage.PING);
        } else if (messageType == ProtocolConstants.MSGTYPE_HEARTBEAT_RESPONSE) {
            rpcMessage.setBody(HeartbeatMessage.PONG);
        } else {
            int bodyLength = fullLength - headLength;//@7
            if (bodyLength > 0) {
                byte[] bs = new byte[bodyLength];
                frame.readBytes(bs);
                Compressor compressor = CompressorFactory.getCompressor(compressorType);
                bs = compressor.decompress(bs);
                Serializer serializer = SerializerFactory.getSerializer(codecType);
                rpcMessage.setBody(serializer.deserialize(bs));//@8
            }
        }

        return rpcMessage;
    }

HeadMapSerializer.decode

    public Map<String, String> decode(ByteBuf in, int length) {//@9
        Map<String, String> map = new HashMap<>();
        if (in == null || in.readableBytes() == 0 || length == 0) {
            return map;
        }
        int tick = in.readerIndex();
        while (in.readerIndex() - tick < length) {
            String key = readString(in);
            String value = readString(in);
            map.put(key, value);
        }
        return map;
    }

Code @1, @2: reads the magic number from the protocol header and verifies that the frame is a Seata RPC message.

Code @3, @4: parses the remaining fixed header fields (full length, head length, message type, codec, compressor, and request ID) and copies them into the RpcMessage.

Code @5, @6: parses the optional HeadMap in the protocol header.

Code @7, @8: decompresses and deserializes the request body.

Code @9: the method that @6 calls to read the HeadMap; it reads key/value string pairs until it has consumed length bytes.
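The loop at @9 can be tried out without Netty. The sketch below is an illustration under stated assumptions, not Seata's HeadMapSerializer: the class HeadMapSketch and its helpers are hypothetical, and the string format (a 2-byte length followed by UTF-8 data) is assumed from the "length(2B)+data" entry in the header table. The readString method used by Seata is not shown in this article, so its handling of null or empty strings is not modelled here.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical sketch of a length-prefixed key/value header map. */
final class HeadMapSketch {

    /** Writes every entry as two length-prefixed strings and returns the number of bytes written. */
    static int encode(Map<String, String> map, ByteBuffer out) {
        int start = out.position();
        for (Map.Entry<String, String> entry : map.entrySet()) {
            writeString(out, entry.getKey());
            writeString(out, entry.getValue());
        }
        return out.position() - start;
    }

    /** Reads key/value pairs until exactly `length` bytes have been consumed. */
    static Map<String, String> decode(ByteBuffer in, int length) {
        Map<String, String> map = new LinkedHashMap<>();
        int start = in.position();
        while (in.position() - start < length) {
            String key = readString(in);
            String value = readString(in);
            map.put(key, value);
        }
        return map;
    }

    private static void writeString(ByteBuffer out, String s) {
        byte[] bytes = s.getBytes(StandardCharsets.UTF_8);
        out.putShort((short) bytes.length); // assumed 2-byte length prefix
        out.put(bytes);
    }

    private static String readString(ByteBuffer in) {
        byte[] bytes = new byte[in.getShort()];
        in.get(bytes);
        return new String(bytes, StandardCharsets.UTF_8);
    }
}
```

Because the map carries no entry count, the decoder depends entirely on the byte length derived from the head length, which is why decodeFrame computes headMapLength before calling decode.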

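1.3 ProtocolV1Encoder

The encoding logic in Seata's RPC module is fairly simple: most of it fills in bytes according to the protocol header and serializes the header map and body, so we will not walk through it in detail.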
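One detail is worth a small sketch: the two length fields sit near the front of the frame, but their values are only known once the HeadMap and body have been written. A common way to handle this is to reserve the slot, write the rest, and then patch the lengths in. The example below shows that technique with java.nio.ByteBuffer and absolute puts; the class BackPatchSketch and the placeholder field values are hypothetical, and the fixed size of 16 bytes is an assumption based on the header table.

```java
import java.nio.ByteBuffer;

/** Hypothetical sketch of reserving and back-patching length fields. */
final class BackPatchSketch {

    static ByteBuffer encode(byte[] headMapBytes, byte[] body) {
        ByteBuffer out = ByteBuffer.allocate(16 + headMapBytes.length + body.length);
        out.put((byte) 0xda).put((byte) 0xda).put((byte) 1); // magic code + version
        int lengthSlot = out.position();                     // offset 3
        out.position(lengthSlot + 6);                        // reserve fullLength(4B) + headLength(2B)
        out.put((byte) 0).put((byte) 1).put((byte) 0);       // type, codec, compressor (placeholders)
        out.putInt(7);                                       // message id (placeholder)
        out.put(headMapBytes);
        int headLength = out.position();                     // fixed fields + head map
        out.put(body);
        int fullLength = out.position();                     // whole frame
        // Absolute puts fill the reserved slot without moving the write position.
        out.putInt(lengthSlot, fullLength);
        out.putShort(lengthSlot + 4, (short) headLength);
        out.flip();
        return out;
    }
}
```

Seata's actual encoder, which applies the same idea by moving Netty's ByteBuf writerIndex back and forth, follows.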

    @Override
    public void encode(ChannelHandlerContext ctx, Object msg, ByteBuf out) {
        try {
            if (msg instanceof RpcMessage) {
                RpcMessage rpcMessage = (RpcMessage) msg;

                int fullLength = ProtocolConstants.V1_HEAD_LENGTH;
                int headLength = ProtocolConstants.V1_HEAD_LENGTH;

                byte messageType = rpcMessage.getMessageType();
                out.writeBytes(ProtocolConstants.MAGIC_CODE_BYTES);
                out.writeByte(ProtocolConstants.VERSION);
                // full Length(4B) and head length(2B) will fix in the end.
                out.writerIndex(out.writerIndex() + 6);
                out.writeByte(messageType);
                out.writeByte(rpcMessage.getCodec());
                out.writeByte(rpcMessage.getCompressor());
                out.writeInt(rpcMessage.getId());

                // direct write head with zero-copy
                Map<String, String> headMap = rpcMessage.getHeadMap();
                if (headMap != null && !headMap.isEmpty()) {
                    int headMapBytesLength = HeadMapSerializer.getInstance().encode(headMap, out);
                    headLength += headMapBytesLength;
                    fullLength += headMapBytesLength;
                }

                byte[] bodyBytes = null;
                if (messageType != ProtocolConstants.MSGTYPE_HEARTBEAT_REQUEST
                    && messageType != ProtocolConstants.MSGTYPE_HEARTBEAT_RESPONSE) {
                    // heartbeat has no body
                    Serializer serializer = SerializerFactory.getSerializer(rpcMessage.getCodec());
                    bodyBytes = serializer.serialize(rpcMessage.getBody());
                    Compressor compressor = CompressorFactory.getCompressor(rpcMessage.getCompressor());
                    bodyBytes = compressor.compress(bodyBytes);
                    fullLength += bodyBytes.length;
                }

                if (bodyBytes != null) {
                    out.writeBytes(bodyBytes);
                }

                // fix fullLength and headLength
                int writeIndex = out.writerIndex();
                // skip magic code(2B) + version(1B)
                out.writerIndex(writeIndex - fullLength + 3);
                out.writeInt(fullLength);
                out.writeShort(headLength);
                out.writerIndex(writeIndex);
            } else {
                throw new UnsupportedOperationException("Not support this class:" + msg.getClass());
            }
        } catch (Throwable e) {
            LOGGER.error("Encode request error!", e);
        }
    }

1.4 ServerHandler

On the Seata server, requests are handled by the ServerHandler class. It extends ChannelDuplexHandler, and the entry point for request handling is channelRead.

    @ChannelHandler.Sharable
    class ServerHandler extends ChannelDuplexHandler {

        /**
         * Channel read.
         *
         * @param ctx the ctx
         * @param msg the msg
         * @throws Exception the exception
         */
        @Override
        public void channelRead(final ChannelHandlerContext ctx, Object msg) throws Exception {
            if (!(msg instanceof RpcMessage)) {
                return;
            }
            processMessage(ctx, (RpcMessage) msg);//@1
        }

        @Override
        public void channelWritabilityChanged(ChannelHandlerContext ctx) {
            synchronized (lock) {
                if (ctx.channel().isWritable()) {
                    lock.notifyAll();
                }
            }
            ctx.fireChannelWritabilityChanged();
        }

        /**
         * Channel inactive.
         *
         * @param ctx the ctx
         * @throws Exception the exception
         */
        @Override
        public void channelInactive(ChannelHandlerContext ctx) throws Exception {
            debugLog("inactive:" + ctx);
            if (messageExecutor.isShutdown()) {
                return;
            }
            handleDisconnect(ctx);
            super.channelInactive(ctx);
        }

        private void handleDisconnect(ChannelHandlerContext ctx) {
            final String ipAndPort = NetUtil.toStringAddress(ctx.channel().remoteAddress());
            RpcContext rpcContext = ChannelManager.getContextFromIdentified(ctx.channel());
            if (LOGGER.isInfoEnabled()) {
                LOGGER.info(ipAndPort + " to server channel inactive.");
            }
            if (rpcContext != null && rpcContext.getClientRole() != null) {
                rpcContext.release();
                if (LOGGER.isInfoEnabled()) {
                    LOGGER.info("remove channel:" + ctx.channel() + "context:" + rpcContext);
                }
            } else {
                if (LOGGER.isInfoEnabled()) {
                    LOGGER.info("remove unused channel:" + ctx.channel());
                }
            }
        }

        /**
         * Exception caught.
         *
         * @param ctx   the ctx
         * @param cause the cause
         * @throws Exception the exception
         */
        @Override
        public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
            if (LOGGER.isInfoEnabled()) {
                LOGGER.info("channel exx:" + cause.getMessage() + ",channel:" + ctx.channel());
            }
            ChannelManager.releaseRpcContext(ctx.channel());
            super.exceptionCaught(ctx, cause);
        }

        /**
         * User event triggered.
         *
         * @param ctx the ctx
         * @param evt the evt
         * @throws Exception the exception
         */
        @Override
        public void userEventTriggered(ChannelHandlerContext ctx, Object evt) {
            if (evt instanceof IdleStateEvent) {
                debugLog("idle:" + evt);
                IdleStateEvent idleStateEvent = (IdleStateEvent) evt;
                if (idleStateEvent.state() == IdleState.READER_IDLE) {
                    if (LOGGER.isInfoEnabled()) {
                        LOGGER.info("channel:" + ctx.channel() + " read idle.");
                    }
                    handleDisconnect(ctx);
                    try {
                        closeChannelHandlerContext(ctx);
                    } catch (Exception e) {
                        LOGGER.error(e.getMessage());
                    }
                }
            }
        }

        @Override
        public void close(ChannelHandlerContext ctx, ChannelPromise future) throws Exception {
            if (LOGGER.isInfoEnabled()) {
                LOGGER.info(ctx + " will closed");
            }
            super.close(ctx, future);
        }
    }

Code @1: calls processMessage() to handle the request.

AbstractNettyRemoting.processMessage() in detail:

    protected void processMessage(ChannelHandlerContext ctx, RpcMessage rpcMessage) throws Exception {
        if (LOGGER.isDebugEnabled()) {
            LOGGER.debug(String.format("%s msgId:%s, body:%s", this, rpcMessage.getId(), rpcMessage.getBody()));
        }
        Object body = rpcMessage.getBody();
        if (body instanceof MessageTypeAware) {
            MessageTypeAware messageTypeAware = (MessageTypeAware) body;
            final Pair<RemotingProcessor, ExecutorService> pair = this.processorTable.get((int) messageTypeAware.getTypeCode());//@1
            if (pair != null) {
                if (pair.getSecond() != null) {
                    try {
                        pair.getSecond().execute(() -> {
                            try {
                                pair.getFirst().process(ctx, rpcMessage);//@2
                            } catch (Throwable th) {
                                LOGGER.error(FrameworkErrorCode.NetDispatch.getErrCode(), th.getMessage(), th);
                            }
                        });
                    } catch (RejectedExecutionException e) {
                        LOGGER.error(FrameworkErrorCode.ThreadPoolFull.getErrCode(),
                            "thread pool is full, current max pool size is " + messageExecutor.getActiveCount());
                        if (allowDumpStack) {
                            String name = ManagementFactory.getRuntimeMXBean().getName();
                            String pid = name.split("@")[0];
                            int idx = new Random().nextInt(100);
                            try {
                                Runtime.getRuntime().exec("jstack " + pid + " >d:/" + idx + ".log");
                            } catch (IOException exx) {
                                LOGGER.error(exx.getMessage());
                            }
                            allowDumpStack = false;
                        }
                    }
                } else {
                    try {
                        pair.getFirst().process(ctx, rpcMessage);
                    } catch (Throwable th) {
                        LOGGER.error(FrameworkErrorCode.NetDispatch.getErrCode(), th.getMessage(), th);
                    }
                }
            } else {
                LOGGER.error("This message type [{}] has no processor.", messageTypeAware.getTypeCode());
            }
        } else {
            LOGGER.error("This rpcMessage body[{}] is not MessageTypeAware type.", body);
        }
    }

Code @1: looks up the processor for the request by typeCode.

Code @2: handles the request with that processor, on the processor's executor if one was registered and on the calling thread otherwise.
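The dispatch pattern in processMessage can be reduced to a few lines. The following is a simplified sketch rather than Seata's code: DispatchSketch, Processor, and Entry are hypothetical stand-ins for the processor table, RemotingProcessor, and Pair, and error handling such as the RejectedExecutionException branch is omitted.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;

/** Hypothetical sketch of a type-code keyed processor table. */
final class DispatchSketch {

    interface Processor {
        void process(Object message);
    }

    /** Pairs a processor with an optional executor; a null executor means "run on the caller's thread". */
    private static final class Entry {
        final Processor processor;
        final ExecutorService executor;

        Entry(Processor processor, ExecutorService executor) {
            this.processor = processor;
            this.executor = executor;
        }
    }

    private final Map<Integer, Entry> table = new ConcurrentHashMap<>();

    void register(int typeCode, Processor processor, ExecutorService executor) {
        table.put(typeCode, new Entry(processor, executor));
    }

    /** Returns false when no processor is registered for the type code. */
    boolean dispatch(int typeCode, Object message) {
        Entry entry = table.get(typeCode);
        if (entry == null) {
            return false;
        }
        if (entry.executor != null) {
            entry.executor.execute(() -> entry.processor.process(message));
        } else {
            entry.processor.process(message);
        }
        return true;
    }
}
```

Keeping the executor next to the processor lets each message type choose its own threading model: heavy work can go to a pool, while cheap handlers run inline.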

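1.5 Processor initialization

In 1.4, the processor for a request is looked up in processorTable by typeCode. So where are these processors registered? Let's look at how Seata initializes them.

The Seata Processor class diagram is as follows:

[Figure: Seata Processor class diagram]

RmNettyRemotingClient.registerProcessor()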

    private void registerProcessor() {
        // 1.registry rm client handle branch commit processor
        RmBranchCommitProcessor rmBranchCommitProcessor = new RmBranchCommitProcessor(getTransactionMessageHandler(), this);
        super.registerProcessor(MessageType.TYPE_BRANCH_COMMIT, rmBranchCommitProcessor, messageExecutor);
        // 2.registry rm client handle branch rollback processor
        RmBranchRollbackProcessor rmBranchRollbackProcessor = new RmBranchRollbackProcessor(getTransactionMessageHandler(), this);
        super.registerProcessor(MessageType.TYPE_BRANCH_ROLLBACK, rmBranchRollbackProcessor, messageExecutor);
        // 3.registry rm handler undo log processor
        RmUndoLogProcessor rmUndoLogProcessor = new RmUndoLogProcessor(getTransactionMessageHandler());
        super.registerProcessor(MessageType.TYPE_RM_DELETE_UNDOLOG, rmUndoLogProcessor, messageExecutor);
        // 4.registry TC response processor
        ClientOnResponseProcessor onResponseProcessor =
            new ClientOnResponseProcessor(mergeMsgMap, super.getFutures(), getTransactionMessageHandler());
        super.registerProcessor(MessageType.TYPE_SEATA_MERGE_RESULT, onResponseProcessor, null);
        super.registerProcessor(MessageType.TYPE_BRANCH_REGISTER_RESULT, onResponseProcessor, null);
        super.registerProcessor(MessageType.TYPE_BRANCH_STATUS_REPORT_RESULT, onResponseProcessor, null);
        super.registerProcessor(MessageType.TYPE_GLOBAL_LOCK_QUERY_RESULT, onResponseProcessor, null);
        super.registerProcessor(MessageType.TYPE_REG_RM_RESULT, onResponseProcessor, null);
        // 5.registry heartbeat message processor
        ClientHeartbeatProcessor clientHeartbeatProcessor = new ClientHeartbeatProcessor();
        super.registerProcessor(MessageType.TYPE_HEARTBEAT_MSG, clientHeartbeatProcessor, null);
    }

AbstractNettyRemotingClient.registerProcessor()

    @Override
    public void registerProcessor(int requestCode, RemotingProcessor processor, ExecutorService executor) {
        Pair<RemotingProcessor, ExecutorService> pair = new Pair<>(processor, executor);
        this.processorTable.put(requestCode, pair);
    }

RmNettyRemotingClient.init()

    @Override
    public void init() {
        // registry processor
        registerProcessor();
        if (initialized.compareAndSet(false, true)) {
            super.init();
        }
    }

RMClient.init()

    public class RMClient {

        /**
         * Init.
         *
         * @param applicationId           the application id
         * @param transactionServiceGroup the transaction service group
         */
        public static void init(String applicationId, String transactionServiceGroup) {
            RmNettyRemotingClient rmNettyRemotingClient = RmNettyRemotingClient.getInstance(applicationId, transactionServiceGroup);
            rmNettyRemotingClient.setResourceManager(DefaultResourceManager.get());
            rmNettyRemotingClient.setTransactionMessageHandler(DefaultRMHandler.get());
            rmNettyRemotingClient.init();
        }
    }

The four code blocks above show that processor initialization starts in RMClient.init(): it calls RmNettyRemotingClient.init(), which in turn calls registerProcessor(). RMClient.init() also sets up the handlers that the processors depend on.

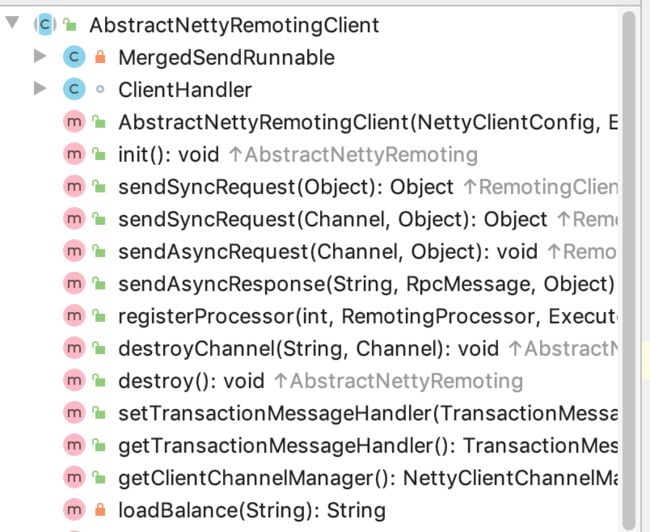

2. Seata RPC request wrappers

Seata's RPC request APIs are all in the AbstractNettyRemotingClient class, as the figure shows:

Next, let's take a brief look at the sendSyncRequest method.

AbstractNettyRemotingClient.sendSyncRequest() in detail:

    @Override
    public Object sendSyncRequest(Object msg) throws TimeoutException {
        String serverAddress = loadBalance(getTransactionServiceGroup());//@1
        int timeoutMillis = NettyClientConfig.getRpcRequestTimeout();//@2
        RpcMessage rpcMessage = buildRequestMessage(msg, ProtocolConstants.MSGTYPE_RESQUEST_SYNC);//@3

        // send batch message
        // put message into basketMap, @see MergedSendRunnable
        if (NettyClientConfig.isEnableClientBatchSendRequest()) {//@4

            // send batch message is sync request, needs to create messageFuture and put it in futures.
            MessageFuture messageFuture = new MessageFuture();
            messageFuture.setRequestMessage(rpcMessage);
            messageFuture.setTimeout(timeoutMillis);
            futures.put(rpcMessage.getId(), messageFuture);//@5

            // put message into basketMap
            ConcurrentHashMap<String, BlockingQueue<RpcMessage>> map = basketMap;
            BlockingQueue<RpcMessage> basket = map.get(serverAddress);
            if (basket == null) {
                map.putIfAbsent(serverAddress, new LinkedBlockingQueue<>());
                basket = map.get(serverAddress);
            }
            basket.offer(rpcMessage);//@6
            if (LOGGER.isDebugEnabled()) {
                LOGGER.debug("offer message: {}", rpcMessage.getBody());
            }
            if (!isSending) {
                synchronized (mergeLock) {
                    mergeLock.notifyAll();
                }
            }

            try {
                return messageFuture.get(timeoutMillis, TimeUnit.MILLISECONDS);//@7
            } catch (Exception exx) {
                LOGGER.error("wait response error:{},ip:{},request:{}",
                    exx.getMessage(), serverAddress, rpcMessage.getBody());
                if (exx instanceof TimeoutException) {
                    throw (TimeoutException) exx;
                } else {
                    throw new RuntimeException(exx);
                }
            }

        } else {
            Channel channel = clientChannelManager.acquireChannel(serverAddress);//@8
            return super.sendSync(channel, rpcMessage, timeoutMillis);//@9
        }
    }

Code @1: uses the load-balancing algorithm to pick the ip:port address of the node to call.

Code @2: gets the configured RPC request timeout.

Code @3: builds the RPC request message.

Code @4: checks whether batch sending is enabled; if it is, the request takes the batch path.

Code @5: puts the request's MessageFuture into futures, a ConcurrentHashMap keyed by message ID, so that the future can be completed when the RPC response arrives.

Code @6: puts the request into a blocking queue; the MergedSendRunnable thread reads the queued requests and sends them as one merged message.

Code @7: waits synchronously for the response, up to the timeout.

Code @8: acquires the Netty Channel for the ip:port address.

Code @9: sends the RPC request synchronously.
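Steps @5 and @7 together turn an asynchronous channel into a blocking call: the caller parks on a future that is keyed by message ID, and whoever receives the response completes it. The sketch below shows that pattern with the JDK's CompletableFuture standing in for Seata's MessageFuture. PendingRequests and its methods are hypothetical names, and removing the entry on timeout is a choice made for this sketch, not something shown in the code above.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Hypothetical sketch of correlating responses with waiting callers by message ID. */
final class PendingRequests {
    private final Map<Integer, CompletableFuture<Object>> futures = new ConcurrentHashMap<>();

    /** Called by the sender before the request is written. */
    CompletableFuture<Object> register(int messageId) {
        CompletableFuture<Object> future = new CompletableFuture<>();
        futures.put(messageId, future);
        return future;
    }

    /** Called by the response handler; returns false if nobody is waiting for this ID. */
    boolean complete(int messageId, Object response) {
        CompletableFuture<Object> future = futures.remove(messageId);
        return future != null && future.complete(response);
    }

    /** Blocks the caller until the response arrives or the timeout elapses. */
    Object await(int messageId, CompletableFuture<Object> future, long timeoutMillis) throws TimeoutException {
        try {
            return future.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            futures.remove(messageId); // sketch-only cleanup so late responses are ignored
            throw e;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new RuntimeException(e);
        } catch (ExecutionException e) {
            throw new RuntimeException(e.getCause());
        }
    }
}
```

Registering the future before the request is sent matters: if the response could arrive first, the handler would find nothing to complete and the caller would wait until the timeout.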

How Seata merges multiple requests into one batched RPC:

The MergedSendRunnable class in AbstractNettyRemotingClient:

    private class MergedSendRunnable implements Runnable {

        @Override
        public void run() {
            while (true) {
                synchronized (mergeLock) {
                    try {
                        mergeLock.wait(MAX_MERGE_SEND_MILLS);
                    } catch (InterruptedException e) {
                    }
                }
                isSending = true;
                for (String address : basketMap.keySet()) {//@1
                    BlockingQueue<RpcMessage> basket = basketMap.get(address);
                    if (basket.isEmpty()) {
                        continue;
                    }

                    MergedWarpMessage mergeMessage = new MergedWarpMessage();
                    while (!basket.isEmpty()) {
                        RpcMessage msg = basket.poll();
                        mergeMessage.msgs.add((AbstractMessage) msg.getBody());
                        mergeMessage.msgIds.add(msg.getId());
                    }
                    if (mergeMessage.msgIds.size() > 1) {
                        printMergeMessageLog(mergeMessage);
                    }
                    Channel sendChannel = null;
                    try {
                        // send batch message is sync request, but there is no need to get the return value.
                        // Since the messageFuture has been created before the message is placed in basketMap,
                        // the return value will be obtained in ClientOnResponseProcessor.
                        sendChannel = clientChannelManager.acquireChannel(address);
                        AbstractNettyRemotingClient.this.sendAsyncRequest(sendChannel, mergeMessage);//@2
                    } catch (FrameworkException e) {
                        if (e.getErrcode() == FrameworkErrorCode.ChannelIsNotWritable && sendChannel != null) {
                            destroyChannel(address, sendChannel);
                        }
                        // fast fail
                        for (Integer msgId : mergeMessage.msgIds) {//@3
                            MessageFuture messageFuture = futures.remove(msgId);
                            if (messageFuture != null) {
                                messageFuture.setResultMessage(null);
                            }
                        }
                        LOGGER.error("client merge call failed: {}", e.getMessage(), e);
                    }
                }
                isSending = false;
            }
        }

        private void printMergeMessageLog(MergedWarpMessage mergeMessage) {
            if (LOGGER.isDebugEnabled()) {
                LOGGER.debug("merge msg size:{}", mergeMessage.msgIds.size());
                for (AbstractMessage cm : mergeMessage.msgs) {
                    LOGGER.debug(cm.toString());
                }
                StringBuilder sb = new StringBuilder();
                for (long l : mergeMessage.msgIds) {
                    sb.append(MSG_ID_PREFIX).append(l).append(SINGLE_LOG_POSTFIX);
                }
                sb.append("\n");
                for (long l : futures.keySet()) {
                    sb.append(FUTURES_PREFIX).append(l).append(SINGLE_LOG_POSTFIX);
                }
                LOGGER.debug(sb.toString());
            }
        }
    }

Code @1, @2: takes the RpcMessages that sendSyncRequest put into the ConcurrentHashMap (basketMap) and sends them in one batch per server address.

Code @3: if sending fails, looks up each MessageFuture by msgId and sets a null result, which wakes up the sendSyncRequest callers that are waiting synchronously (fast fail).
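The basket mechanism itself is independent of Netty and can be sketched in a few lines. This is an illustration, not Seata's implementation: BasketSketch is a hypothetical class, messages are plain strings, and two JDK calls are swapped in for the hand-written loops above, namely computeIfAbsent in place of the get/putIfAbsent/get sequence and drainTo in place of the isEmpty/poll loop.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;

/** Hypothetical sketch of per-address request baskets that are drained into batches. */
final class BasketSketch {
    private final Map<String, BlockingQueue<String>> baskets = new ConcurrentHashMap<>();

    /** Producer side: queue a message for the given server address. */
    void offer(String address, String message) {
        baskets.computeIfAbsent(address, a -> new LinkedBlockingQueue<>()).offer(message);
    }

    /** Consumer side: empty every basket and return one batch per address that had messages. */
    Map<String, List<String>> drain() {
        Map<String, List<String>> batches = new HashMap<>();
        for (Map.Entry<String, BlockingQueue<String>> entry : baskets.entrySet()) {
            List<String> batch = new ArrayList<>();
            entry.getValue().drainTo(batch);
            if (!batch.isEmpty()) {
                batches.put(entry.getKey(), batch);
            }
        }
        return batches;
    }
}
```

In a real client the consumer would run on a dedicated thread that wakes up either when notified or after a short wait, as MergedSendRunnable does with mergeLock.wait(MAX_MERGE_SEND_MILLS), so that a lone request is not held back indefinitely waiting for companions.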