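Distributed Systems: Distributed ID Generation with Snowflake (the Snowflake Algorithm)

1. Background

In complex distributed systems, large volumes of data and messages often need to be uniquely identified. In Meituan-Dianping's finance, payment, catering, hotel, and Maoyan Movies systems, for example, data keeps growing; once it is sharded across databases and tables, each record or message needs a unique ID, and a database auto-increment ID clearly cannot meet that need. Special entities such as orders, riders, and coupons also need unique IDs. A system that can generate globally unique IDs is therefore essential.

(1) What business systems require of a distributed ID

- Global uniqueness: no duplicate IDs may ever appear. Since the ID is a unique identifier, this is the most basic requirement;

- Trend-increasing: MySQL's InnoDB engine uses a clustered index, and most RDBMSs store index data in B-tree structures, so the primary key should be as ordered as possible to keep write performance high;

- Monotonically increasing: the next ID is guaranteed to be greater than the previous one, which special cases such as transaction version numbers, incremental IM messages, and sorting rely on;

- Information security: if IDs are consecutive, scraping becomes trivial, because a malicious user only has to request the URLs in order. Order numbers are even more sensitive, since a competitor could read off the daily order volume directly. Some scenarios therefore need IDs that are irregular and unpredictable;

- Performance and availability: average latency and TP999 latency should be as low as possible, availability should reach five nines, and QPS should be high;

2. Introduction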

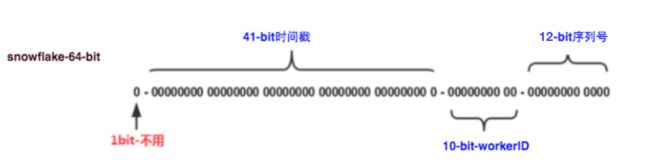

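Snowflake generates IDs by partitioning a namespace (UUID also falls into this category, but it is common enough to be analyzed separately). The scheme splits a 64-bit value into several segments that separately identify the time, the machine, and so on. The figure above shows the 64-bit layout; the fields are:

- Sign: a fixed 1-bit sign flag, so the generated UID is always a positive number;

- Timestamp: the current time as an offset from an epoch of "2016-05-20" (the epoch is configurable). The unit here is seconds. The supported lifetime depends on the number of timestamp bits; for comparison, a 41-bit timestamp counted in milliseconds lasts about 69 years: (1L<<41)/(1000L*3600*24*365) = 69;

- Worker ID: the machine ID; how many machines are supported depends on the number of bits allocated. By definition it should identify a machine, so the same machine should keep its worker ID across restarts. Baidu's UidGenerator, however, does not tie the ID to the machine: every restart takes a new auto-incremented worker ID, i.e. a use-once-and-discard policy. The project says a reuse policy may be provided later, but it will most likely never be updated. If needed, you can rewrite the worker ID assignment yourself and look the machine up in the database by IP + port on every restart to reuse its ID. This lets you shrink the worker ID field and widen the timestamp field, extending the supported lifetime;

- Sequence: the concurrent sequence within each second (13 bits support 8192 IDs per second). The number of sequence bits can be tuned to actual needs, freeing bits for the timestamp or the worker ID;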
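The field layout above can be sketched in a few lines of Java. This is a minimal illustration, not code from any of the libraries discussed: the class name BitLayoutSketch is hypothetical, and the 28-bit timestamp and 22-bit worker ID widths are assumptions chosen so that the fields add up to 63 bits next to the 13-bit sequence mentioned in the text.

```java
/** Illustrative sketch: packing and unpacking a 64-bit ID with an assumed 1/28/22/13 split. */
final class BitLayoutSketch {
    static final int TIMESTAMP_BITS = 28; // assumption, seconds since the epoch
    static final int WORKER_BITS = 22;    // assumption
    static final int SEQUENCE_BITS = 13;  // from the text: 8192 IDs per second

    static final long MAX_DELTA = (1L << TIMESTAMP_BITS) - 1;
    static final long MAX_WORKER = (1L << WORKER_BITS) - 1;
    static final long MAX_SEQUENCE = (1L << SEQUENCE_BITS) - 1;

    static final int WORKER_SHIFT = SEQUENCE_BITS;
    static final int TIMESTAMP_SHIFT = WORKER_BITS + SEQUENCE_BITS;

    /** Packs the three fields; the sign bit stays 0 because only 63 bits are used. */
    static long pack(long deltaSeconds, long workerId, long sequence) {
        if (deltaSeconds < 0 || deltaSeconds > MAX_DELTA) {
            throw new IllegalArgumentException("timestamp out of range");
        }
        if (workerId < 0 || workerId > MAX_WORKER) {
            throw new IllegalArgumentException("worker id out of range");
        }
        if (sequence < 0 || sequence > MAX_SEQUENCE) {
            throw new IllegalArgumentException("sequence out of range");
        }
        return (deltaSeconds << TIMESTAMP_SHIFT) | (workerId << WORKER_SHIFT) | sequence;
    }

    static long deltaSeconds(long id) { return id >>> TIMESTAMP_SHIFT; }
    static long workerId(long id)     { return (id >>> WORKER_SHIFT) & MAX_WORKER; }
    static long sequence(long id)     { return id & MAX_SEQUENCE; }

    /** Whole years a timestamp field lasts, given its width and ticks per second. */
    static long yearsCovered(int bits, long ticksPerSecond) {
        return (1L << bits) / (ticksPerSecond * 3600L * 24 * 365);
    }

    public static void main(String[] args) {
        long id = pack(12345L, 77L, 4095L);
        System.out.println(id + " -> " + deltaSeconds(id) + "/" + workerId(id) + "/" + sequence(id));
        System.out.println("41 bits of milliseconds: " + yearsCovered(41, 1000L) + " years");
        System.out.println("28 bits of seconds: " + yearsCovered(28, 1L) + " years");
    }
}
```

Moving bits between the fields is the trade-off described above: every bit taken from the worker ID doubles the lifetime of the timestamp field.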

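2.1 Pros and cons

(1) Pros

- The timestamp occupies the high bits and the auto-incrementing sequence the low bits, so the ID as a whole is trend-increasing;

- ID generation itself does not depend on a database or other third-party system. It is deployed as a service, which makes it more stable, and generation performance is very high (assigning the worker ID does, however, depend on a database or ZooKeeper);

- Bits can be allocated to suit the business, which makes the scheme very flexible;

(2) Cons

- It depends heavily on the machine clock. If the clock is rolled back, the generator may issue duplicate IDs or become unavailable.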
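To make the clock dependency concrete, here is a minimal sketch of a generator that takes its time from an injectable clock and refuses to issue IDs when that clock goes backwards. The class name RollbackAwareGenerator, the injected LongSupplier, and the 22/13 bit widths are assumptions for illustration; it is not the code of any library discussed here.

```java
import java.util.function.LongSupplier;

/** Illustrative sketch: a second-based generator that refuses to run on clock rollback. */
final class RollbackAwareGenerator {
    private static final int SEQUENCE_BITS = 13;  // from the text
    private static final int WORKER_BITS = 22;    // assumption
    private static final long MAX_SEQUENCE = (1L << SEQUENCE_BITS) - 1;

    private final LongSupplier clockSeconds; // injected so tests can simulate a rollback
    private final long workerId;             // assumed to fit in WORKER_BITS
    private long lastSecond = -1L;
    private long sequence = 0L;

    RollbackAwareGenerator(LongSupplier clockSeconds, long workerId) {
        this.clockSeconds = clockSeconds;
        this.workerId = workerId;
    }

    synchronized long nextId() {
        long now = clockSeconds.getAsLong();
        if (now < lastSecond) {
            // Issuing an ID now could repeat a (second, sequence) pair already handed out.
            throw new IllegalStateException(
                    "Clock moved backwards by " + (lastSecond - now) + " seconds");
        }
        if (now == lastSecond) {
            sequence = (sequence + 1) & MAX_SEQUENCE;
            if (sequence == 0) {
                // Sequence exhausted for this second: wait for the clock to advance.
                while ((now = clockSeconds.getAsLong()) <= lastSecond) {
                    Thread.yield();
                }
            }
        } else {
            sequence = 0L;
        }
        lastSecond = now;
        return (now << (WORKER_BITS + SEQUENCE_BITS)) | (workerId << SEQUENCE_BITS) | sequence;
    }
}
```

Because lastSecond lives only in memory, a process that restarts after a rollback has nothing to compare against, which is the gap discussed in section 3.1.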

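3. Source code

3.1 How clock rollback is handled

(1) Baidu UidGenerator

It cannot handle the combination of a machine restart plus a clock rollback, because lastSecond, which records the last timestamp used, is kept only in local memory and is not persisted.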

protected synchronized long nextId() {

long currentSecond = getCurrentSecond();

// Clock moved backwards, refuse to generate uid

if (currentSecond < lastSecond) {

long refusedSeconds = lastSecond - currentSecond;

throw new UidGenerateException("Clock moved backwards. Refusing for %d seconds", refusedSeconds);

}

// At the same second, increase sequence

if (currentSecond == lastSecond) {

// Wrap around with a bit mask instead of the slower % operator

sequence = (sequence + 1) & bitsAllocator.getMaxSequence();

// Exceed the max sequence, we wait the next second to generate uid

if (sequence == 0) {

currentSecond = getNextSecond(lastSecond);

}

// At the different second, sequence restart from zero

} else {

sequence = 0L;

}

lastSecond = currentSecond;

// Allocate bits for UID

return bitsAllocator.allocate(currentSecond - epochSeconds, workerId, sequence);

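}

(2) Meituan Leaf

Leaf periodically reports the local time to ZooKeeper (every 3 seconds in the code below). On restart, a node that already has a registered entry refuses to start if its local clock is behind the last reported timestamp.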

// ...

public class SnowflakeZookeeperHolder {

private static final Logger LOGGER = LoggerFactory.getLogger(SnowflakeZookeeperHolder.class);

private String zk_AddressNode = null;// own key: ip:port-000000001

private String listenAddress = null;// own key: ip:port

private int workerID;

private static final String PREFIX_ZK_PATH = "/snowflake/" + PropertyFactory.getProperties().getProperty("leaf.name");

private static final String PROP_PATH = System.getProperty("java.io.tmpdir") + File.separator + PropertyFactory.getProperties().getProperty("leaf.name") + "/leafconf/{port}/workerID.properties";

private static final String PATH_FOREVER = PREFIX_ZK_PATH + "/forever";// persistent node that holds all the data

private String ip;

private String port;

private String connectionString;

private long lastUpdateTime;

// ...

public boolean init() {

try {

CuratorFramework curator = createWithOptions(connectionString, new RetryUntilElapsed(1000, 4), 10000, 6000);

curator.start();

Stat stat = curator.checkExists().forPath(PATH_FOREVER);

if (stat == null) {

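// Root node does not exist: this is the first machine to start, so create /snowflake/ip:port-000000000 and upload data

zk_AddressNode = createNode(curator);

// worker id defaults to 0

updateLocalWorkerID(workerID);

// Periodically report the local time to the forever node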

ScheduledUploadData(curator, zk_AddressNode);

return true;

} else {

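Map<String, Integer> nodeMap = Maps.newHashMap();// ip:port -> 00001

Map<String, String> realNode = Maps.newHashMap();// ip:port -> (ipport-000001)

// Root node exists: first check whether this endpoint already has its own node under it

List<String> keys = curator.getChildren().forPath(PATH_FOREVER);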

for (String key : keys) {

String[] nodeKey = key.split("-");

realNode.put(nodeKey[0], key);

nodeMap.put(nodeKey[0], Integer.parseInt(nodeKey[1]));

}

Integer workerid = nodeMap.get(listenAddress);

if (workerid != null) {

// Own node found, zk_AddressNode=ip:port

zk_AddressNode = PATH_FOREVER + "/" + realNode.get(listenAddress);

workerID = workerid;// used when the worker starts

if (!checkInitTimeStamp(curator, zk_AddressNode)) {

throw new CheckLastTimeException("init timestamp check error,forever node timestamp gt this node time");

}

// Prepare to create the ephemeral node

doService(curator);

updateLocalWorkerID(workerID);

LOGGER.info("[Old NODE]find forever node have this endpoint ip-{} port-{} workid-{} childnode and start SUCCESS", ip, port, workerID);

} else {

// A newly started node: create a persistent node; no timestamp check is needed

String newNode = createNode(curator);

zk_AddressNode = newNode;

String[] nodeKey = newNode.split("-");

workerID = Integer.parseInt(nodeKey[1]);

doService(curator);

updateLocalWorkerID(workerID);

LOGGER.info("[New NODE]can not find node on forever node that endpoint ip-{} port-{} workid-{},create own node on forever node and start SUCCESS ", ip, port, workerID);

}

}

} catch (Exception e) {

// ...

}

return true;

}

private void doService(CuratorFramework curator) {

ScheduledUploadData(curator, zk_AddressNode);// /snowflake_forever/ip:port-000000001

}

private void ScheduledUploadData(final CuratorFramework curator, final String zk_AddressNode) {

Executors.newSingleThreadScheduledExecutor(new ThreadFactory() {

@Override

public Thread newThread(Runnable r) {

Thread thread = new Thread(r, "schedule-upload-time");

thread.setDaemon(true);

return thread;

}

}).scheduleWithFixedDelay(new Runnable() {

@Override

public void run() {

updateNewData(curator, zk_AddressNode);

}

}, 1L, 3L, TimeUnit.SECONDS);// report every 3 s

}

private boolean checkInitTimeStamp(CuratorFramework curator, String zk_AddressNode) throws Exception {

byte[] bytes = curator.getData().forPath(zk_AddressNode);

Endpoint endPoint = deBuildData(new String(bytes));

// The local time must not be earlier than the last reported time

return !(endPoint.getTimestamp() > System.currentTimeMillis());

}

//...

private void updateNewData(CuratorFramework curator, String path) {

try {

if (System.currentTimeMillis() < lastUpdateTime) {

return;

}

curator.setData().forPath(path, buildData().getBytes());

lastUpdateTime = System.currentTimeMillis();

} catch (Exception e) {

LOGGER.info("update init data error path is {} error is {}", path, e);

}

}

// ...

/**

* Data structure of the reported payload

*/

static class Endpoint {

private String ip;

private String port;

private long timestamp;

// ...

}
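Stripped of the ZooKeeper plumbing, the protection above comes down to two rules: keep a persisted "last reported time" per endpoint that never moves backwards, and refuse to start if the local clock is behind it. The following is a minimal sketch of those two rules only. The class StartupClockCheck and its in-memory map are hypothetical stand-ins for the persistent ZooKeeper node; nothing here is Leaf's actual API.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Illustrative sketch: startup clock check against a persisted last-reported timestamp. */
final class StartupClockCheck {
    /** Hypothetical stand-in for the data stored under the persistent ZooKeeper node. */
    private final Map<String, Long> lastReported = new ConcurrentHashMap<>();

    /** Periodic report; an older timestamp never overwrites a newer one. */
    void report(String endpoint, long nowMillis) {
        lastReported.merge(endpoint, nowMillis, Long::max);
    }

    /** A known endpoint may start only if its clock is not behind its last report. */
    boolean mayStart(String endpoint, long nowMillis) {
        Long last = lastReported.get(endpoint);
        return last == null || nowMillis >= last;
    }
}
```

A rollback smaller than the reporting interval can still slip through this check, since the stored timestamp may lag the last ID actually issued by up to one interval.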

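3.2 How multiple instances avoid duplicate worker IDs

(1) Baidu UidGenerator

By default it uses a MySQL database to manage worker IDs centrally. For custom logic, provide your own implementation of WorkerIdAssigner (the worker ID generator).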

public class DisposableWorkerIdAssigner implements WorkerIdAssigner {

private static final Logger LOGGER = LoggerFactory.getLogger(DisposableWorkerIdAssigner.class);

@Resource

private WorkerNodeDAO workerNodeDAO;

public WorkerNodeDAO getWorkerNodeDAO() {

return workerNodeDAO;

}

public void setWorkerNodeDAO(WorkerNodeDAO workerNodeDAO) {

this.workerNodeDAO = workerNodeDAO;

}

/**

* Assign worker id base on database.

* If there is host name & port in the environment, we considered that the node runs in Docker container

* Otherwise, the node runs on an actual machine.

*

* @return assigned worker id

*/

@Transactional

public long assignWorkerId() {

// build worker node entity

WorkerNodeEntity workerNodeEntity = buildWorkerNode();

// add worker node for new (ignore the same IP + PORT)

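// A custom implementation could first query the database to see whether this machine is already registered; it is unclear why the default does not reuse worker IDs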

workerNodeDAO.addWorkerNode(workerNodeEntity);

LOGGER.info("Add worker node:" + workerNodeEntity);

return workerNodeEntity.getId();

}

/**

* Build worker node entity by IP and PORT

*/

private WorkerNodeEntity buildWorkerNode() {

WorkerNodeEntity workerNodeEntity = new WorkerNodeEntity();

// Distinguish Docker deployments from the rest here

if (DockerUtils.isDocker()) {

workerNodeEntity.setType(WorkerNodeType.CONTAINER.value());

workerNodeEntity.setHostName(DockerUtils.getDockerHost());

workerNodeEntity.setPort(DockerUtils.getDockerPort());

} else {

// The port is randomized here, so by default the same machine is assigned a new worker ID on every restart

workerNodeEntity.setType(WorkerNodeType.ACTUAL.value());

workerNodeEntity.setHostName(NetUtils.getLocalAddress());

workerNodeEntity.setPort(System.currentTimeMillis() + "-" + RandomUtils.nextInt(100000));

}

return workerNodeEntity;

}

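}

(2) Meituan Leaf

Leaf uses ZooKeeper to manage worker IDs. On every startup it looks itself up in ZooKeeper by IP + port; if it has not been registered yet, it registers a new node there. The instance also reports its local time periodically to guard against clock rollback.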
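The lookup-or-register step can be sketched independently of ZooKeeper. In the sketch below, ReusableWorkerIdRegistry and its in-memory map are hypothetical stand-ins for the persistent store (ZooKeeper for Leaf, or a database table if you add reuse to UidGenerator); the point is only that the same IP + port always maps back to the same worker ID.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Illustrative sketch: worker IDs keyed by ip:port so that a restart reuses the old ID. */
final class ReusableWorkerIdRegistry {
    /** Hypothetical stand-in for the persistent store of registered endpoints. */
    private final Map<String, Integer> assigned = new LinkedHashMap<>();
    private final int maxWorkerId;

    ReusableWorkerIdRegistry(int maxWorkerId) {
        this.maxWorkerId = maxWorkerId;
    }

    synchronized int workerIdFor(String ip, int port) {
        String key = ip + ":" + port;
        Integer existing = assigned.get(key);
        if (existing != null) {
            return existing; // already registered: reuse the ID
        }
        int next = assigned.size(); // IDs are handed out sequentially from 0
        if (next > maxWorkerId) {
            throw new IllegalStateException("Worker IDs exhausted");
        }
        assigned.put(key, next);
        return next;
    }
}
```

With reuse in place, the worker ID field only has to cover the number of distinct endpoints rather than the number of restarts.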

The corresponding Leaf code is the init() method of SnowflakeZookeeperHolder. It is identical to the listing in section 3.1 and is not repeated here.

3.3 How Snowflake can further improve performance

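A RingBuffer (a circular array) is used as a local cache of pre-generated UIDs, which reduces read/write contention and improves performance.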

// ...

public class RingBuffer {

private static final Logger LOGGER = LoggerFactory.getLogger(RingBuffer.class);

/** Constants */

private static final int START_POINT = -1;

private static final long CAN_PUT_FLAG = 0L;

private static final long CAN_TAKE_FLAG = 1L;

public static final int DEFAULT_PADDING_PERCENT = 50;

/** The size of RingBuffer's slots, each slot hold a UID */

private final int bufferSize;

private final long indexMask;

private final long[] slots;

private final PaddedAtomicLong[] flags;

/** Tail: last position sequence to produce */

private final AtomicLong tail = new PaddedAtomicLong(START_POINT);

/** Cursor: current position sequence to consume */

private final AtomicLong cursor = new PaddedAtomicLong(START_POINT);

/** Threshold for trigger padding buffer*/

private final int paddingThreshold;

/** Reject put/take buffer handle policy */

private RejectedPutBufferHandler rejectedPutHandler = this::discardPutBuffer;

private RejectedTakeBufferHandler rejectedTakeHandler = this::exceptionRejectedTakeBuffer;

/** Executor of padding buffer */

private BufferPaddingExecutor bufferPaddingExecutor;

// ...

/**

* Take an UID of the ring at the next cursor, this is a lock free operation by using atomic cursor

*

* Before getting the UID, we also check whether reach the padding threshold,

* the padding buffer operation will be triggered in another thread

* If there is no more available UID to be taken, the specified {@link RejectedTakeBufferHandler} will be applied

*

* @return UID

* @throws IllegalStateException if the cursor moved back

*/

public long take() {

// spin get next available cursor

long currentCursor = cursor.get();

long nextCursor = cursor.updateAndGet(old -> old == tail.get() ? old : old + 1);

// check for safety consideration, it never occurs

Assert.isTrue(nextCursor >= currentCursor, "Curosr can't move back");

// trigger padding in an async-mode if reach the threshold

long currentTail = tail.get();

if (currentTail - nextCursor < paddingThreshold) {

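// If the distance from the tail to the next available slot falls below the threshold (50% of the RingBuffer capacity by default), trigger a thread-pool task that generates UIDs in bulk into the RingBuffer local cache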

LOGGER.info("Reach the padding threshold:{}. tail:{}, cursor:{}, rest:{}", paddingThreshold, currentTail,

nextCursor, currentTail - nextCursor);

bufferPaddingExecutor.asyncPadding();

}

// cursor catch the tail, means that there is no more available UID to take

if (nextCursor == currentCursor) {

rejectedTakeHandler.rejectTakeBuffer(this);

}

// 1. check next slot flag is CAN_TAKE_FLAG

int nextCursorIndex = calSlotIndex(nextCursor);

Assert.isTrue(flags[nextCursorIndex].get() == CAN_TAKE_FLAG, "Curosr not in can take status");

// 2. get UID from next slot

// 3. set next slot flag as CAN_PUT_FLAG.

// 取出下一个节点的uid,并且将标志修改为CAN_PUT_FLAG

long uid = slots[nextCursorIndex];

flags[nextCursorIndex].set(CAN_PUT_FLAG);

// Note that: Step 2,3 can not swap. If we set flag before get value of slot, the producer may overwrite the

// slot with a new UID, and this may cause the consumer take the UID twice after walk a round the ring

return uid;

}

// ...

}
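The index arithmetic behind the ring can be shown with a much smaller sketch. This is a deliberately simplified version, assuming a power-of-two capacity and using synchronized methods instead of the atomic cursor and per-slot flags of the real class; UidRingSketch is a hypothetical name and not part of UidGenerator.

```java
/** Illustrative sketch: a fixed-size ring of UIDs addressed by masking two running counters. */
final class UidRingSketch {
    private final long[] slots;
    private final long indexMask;
    private long tail = -1L;   // position of the last UID produced
    private long cursor = -1L; // position of the last UID consumed

    UidRingSketch(int bufferSize) {
        if (bufferSize <= 0 || Integer.bitCount(bufferSize) != 1) {
            throw new IllegalArgumentException("bufferSize must be a power of two");
        }
        this.slots = new long[bufferSize];
        this.indexMask = bufferSize - 1L;
    }

    /** Returns false when the ring is full, i.e. the tail has caught up with the cursor. */
    synchronized boolean put(long uid) {
        if (tail - cursor == slots.length) {
            return false;
        }
        tail++;
        slots[(int) (tail & indexMask)] = uid; // mask replaces tail % bufferSize
        return true;
    }

    /** Takes the next UID in production order. */
    synchronized long take() {
        if (cursor == tail) {
            throw new IllegalStateException("No UID available");
        }
        cursor++;
        return slots[(int) (cursor & indexMask)];
    }

    synchronized long remaining() {
        return tail - cursor;
    }
}
```

The counters only ever grow, and the mask maps them onto slots, so a slot is reused as soon as its UID has been taken. A refill trigger like the one in take() above would compare remaining() against a threshold.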

(1) UID generation triggered by a scheduled task

public class BufferPaddingExecutor {

private static final Logger LOGGER = LoggerFactory.getLogger(RingBuffer.class);

/** Constants */

private static final String WORKER_NAME = "RingBuffer-Padding-Worker";

private static final String SCHEDULE_NAME = "RingBuffer-Padding-Schedule";

private static final long DEFAULT_SCHEDULE_INTERVAL = 5 * 60L; // 5 minutes

/** Whether buffer padding is running */

private final AtomicBoolean running;

/** We can borrow UIDs from the future, here store the last second we have consumed */

private final PaddedAtomicLong lastSecond;

/** RingBuffer & BufferUidProvider */

private final RingBuffer ringBuffer;

private final BufferedUidProvider uidProvider;

/** Padding immediately by the thread pool */

private final ExecutorService bufferPadExecutors;

/** Padding schedule thread */

private final ScheduledExecutorService bufferPadSchedule;

/** Schedule interval Unit as seconds */

private long scheduleInterval = DEFAULT_SCHEDULE_INTERVAL;

// ...

/**

* Padding buffer in the thread pool

*/

public void asyncPadding() {

bufferPadExecutors.submit(this::paddingBuffer);

}

/**

* Padding buffer fill the slots until to catch the cursor

*/

public void paddingBuffer() {

LOGGER.info("Ready to padding buffer lastSecond:{}. {}", lastSecond.get(), ringBuffer);

// is still running

if (!running.compareAndSet(false, true)) {

LOGGER.info("Padding buffer is still running. {}", ringBuffer);

return;

}

// fill the rest slots until to catch the cursor

boolean isFullRingBuffer = false;

while (!isFullRingBuffer) {

// Put all sequence numbers available within one second into uidList

List<Long> uidList = uidProvider.provide(lastSecond.incrementAndGet());

for (Long uid : uidList) {

// Stop once the RingBuffer is full

isFullRingBuffer = !ringBuffer.put(uid);

if (isFullRingBuffer) {

break;

}

}

}

// not running now

running.compareAndSet(true, false);

LOGGER.info("End to padding buffer lastSecond:{}. {}", lastSecond.get(), ringBuffer);

}

// ...

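}

4. In practice

4.1 Baidu UidGenerator

(1) Database initialization

You can wrap the library once more and use Flyway to initialize the database, so that projects depending on it do not have to care about the schema.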

CREATE TABLE IF NOT EXISTS WORKER_NODE (

ID BIGINT NOT NULL AUTO_INCREMENT COMMENT 'auto increment id',

HOST_NAME VARCHAR(64) NOT NULL COMMENT 'host name',

PORT VARCHAR(64) NOT NULL COMMENT 'port',

TYPE INT NOT NULL COMMENT 'node type: ACTUAL or CONTAINER',

LAUNCH_DATE DATE NOT NULL COMMENT 'launch date',

MODIFIED TIMESTAMP NOT NULL COMMENT 'modified time',

CREATED TIMESTAMP NOT NULL COMMENT 'created time',

PRIMARY KEY(ID)

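) COMMENT='DB WorkerID Assigner for UID Generator',ENGINE = INNODB;

(2) Maven dependency

<dependency>
    <groupId>com.xfvape.uid</groupId>
    <artifactId>uid-generator</artifactId>
    <version>0.0.4-RELEASE</version>
</dependency>

(3) ID generation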

import com.xfvape.uid.UidGenerator;

import org.junit.Assert;

import org.junit.Test;

import org.springframework.beans.factory.annotation.Autowired;

public class UidGeneratorTest extends BaseTest {

@Autowired(required = false)

private UidGenerator uidGenerator;

@Test

public void uidTest() throws InterruptedException {

long uid = uidGenerator.getUID();

}

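}

(4) Pros and cons

Pros:

- Uses a RingBuffer to further improve ID generation performance (lock-free access plus a local cache)

- Handles clock rollback while running by refusing to issue IDs (though, as noted in section 3.1, not a restart combined with a rollback)

Cons:

- Depends on a database. Judging from the latest open-source code from Baidu, projects that depend on it also have to be aware of the MyBatis mapper-locations configuration:

mybatis-plus:
  mapper-locations:
    - classpath*:/META-INF/mybatis/mapper/**/*.xml

- Sequence numbers within each second increase strictly and are never randomized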

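4.2 Meituan Leaf distributed ID generator

(1) Start ZooKeeper

(2) Maven dependency

This starter is essentially a copy of the Meituan-Dianping code with a few changes. For example, its sequence always starts from 0, whereas Meituan-Dianping's starts from a random number below 100.

<dependency>
    <groupId>com.github.guang19</groupId>
    <artifactId>leaf-spring-boot-starter</artifactId>
    <version>1.0.2</version>
</dependency>

(3) ID generation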

import com.github.guang19.leaf.core.IdGenerator;

import org.junit.Assert;

import org.junit.Test;

import org.springframework.beans.factory.annotation.Autowired;

public class LeafTest extends BaseTest {

@Autowired(required = false)

private IdGenerator idGenerator;

@Test

public void idTest() {

Assert.assertNotNull(idGenerator.nextId());

}

}

(4) Pros and cons

Pros: high performance

Cons:

5. FAQ

5.1 Why RingBuffer performs well

5.2 What the PaddedAtomicLong class is for

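PaddedAtomicLong exists to prevent false sharing. The CPU cache works in units of cache lines, commonly 64 bytes each. When several threads modify independent variables that happen to sit in the same cache line, they unintentionally degrade each other's performance.

- p1 to p6 are padding fields. Note that in the declaration below only p6 is actually initialized to 7L; the others keep the default value 0. The assignment is said to be unnecessary on Java 6 and earlier, whereas Java 7 and later may optimize away unused fields. Java 8 can use an annotation instead (@sun.misc.Contended), but it has to be enabled with a JVM option.

- The sumPaddingToPreventOptimization method reads the padding fields so that they count as used and are not optimized away. The source comment attributes this to GC, although removing unused fields would be a compiler or JIT optimization rather than garbage collection.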

import java.util.concurrent.atomic.AtomicLong;

/**

* Represents a padded {@link AtomicLong} to prevent the FalseSharing problem

*

* The CPU cache line commonly be 64 bytes, here is a sample of cache line after padding:

* 64 bytes = 8 bytes (object reference) + 6 * 8 bytes (padded long) + 8 bytes (a long value)

*

* @author yutianbao

*/

public class PaddedAtomicLong extends AtomicLong {

private static final long serialVersionUID = -3415778863941386253L;

/** Padded 6 long (48 bytes) */

public volatile long p1, p2, p3, p4, p5, p6 = 7L;

/**

* Constructors from {@link AtomicLong}

*/

public PaddedAtomicLong() {

super();

}

public PaddedAtomicLong(long initialValue) {

super(initialValue);

}

/**

* To prevent GC optimizations for cleaning unused padded references

* @return PaddingToPreventOptimization

*/

public long sumPaddingToPreventOptimization() {

return p1 + p2 + p3 + p4 + p5 + p6;

}

}
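One detail of the declaration above is easy to misread: in Java, an initializer belongs to a single declarator, so `p1, p2, p3, p4, p5, p6 = 7L` initializes only p6. The small check below demonstrates this with a hypothetical class PaddingInitDemo that copies the declaration shape. The padding effect comes from the fields occupying space in the object, not from their values, and the actual field layout remains JVM-dependent.

```java
/** Illustrative sketch: only the last declarator in a multi-variable declaration is initialized. */
final class PaddingInitDemo {
    // Same shape as in PaddedAtomicLong: six fields, one initializer on the last declarator.
    volatile long p1, p2, p3, p4, p5, p6 = 7L;

    /** Mirrors sumPaddingToPreventOptimization: reads every padding field. */
    long sum() {
        return p1 + p2 + p3 + p4 + p5 + p6;
    }

    public static void main(String[] args) {
        PaddingInitDemo demo = new PaddingInitDemo();
        System.out.println("p1=" + demo.p1 + ", p6=" + demo.p6 + ", sum=" + demo.sum());
    }
}
```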

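6. References

[Leaf: Meituan-Dianping's distributed ID generation system]

[Meituan-Dianping Leaf source code on GitHub, with one-click deployment]

[Baidu UidGenerator]

[False sharing and cache line padding: another way to optimize Java concurrency]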