Opencv学习笔记 K-Means聚类算法进行颜色量化

颜色量化是减少图像中不同颜色数量的过程。通常,目的是尽可能保留图像的颜色外观,同时减少颜色数量,无论是用于内存限制还是压缩。实际上,著名的QBIC CBIR系统(图像搜索引擎的原始CBIR系统之一)可以利用二次距离中的量化颜色直方图来计算相似度。

OpenCv指定聚类颜色和聚类数量,聚类算法有很多种(几十种),K-Means是聚类算法中的最常用的一种,算法最大的特点是简单,好理解,运算速度快,但是只能应用于连续型的数据,并且一定要在聚类前需要手工指定要分成几类。

OpenCvSharp代码如下:

Mat srcImage = Cv2.ImRead(@"C:\Users\Desktop\123.png");

//五个颜色,聚类之后的颜色随机从这里面选择

Scalar[] colorTab = {

new Scalar(0,0,255),

new Scalar(0,255,0),

new Scalar(255,0,0),

new Scalar(0,255,255),

new Scalar(255,0,255)

};

int width = srcImage.Cols;//图像的宽

int height = srcImage.Rows;//图像的高

int channels = srcImage.Channels();//图像的通道数

//初始化一些定义

int sampleCount = width * height;//所有的像素

int clusterCount = 4;//分类数

Mat points = new Mat(sampleCount, channels, MatType.CV_32F, Scalar.All(10));//points用来保存所有的数据

Mat labels = new Mat();//聚类后的标签

Mat center = new Mat(clusterCount, 1, points.Type());//聚类后的类别的中心

//将图像的RGB像素转到到样本数据

int index;

for (int i = 0; i < srcImage.Rows; i++)

{

for (int j = 0; j < srcImage.Cols; j++)

{

index = i * width + j;

Vec3b bgr = srcImage.At(i, j);

//将图像中的每个通道的数据分别赋值给points的值

points.Set(index, 0, bgr.Item0);

points.Set(index, 1, bgr.Item1);

points.Set(index, 2, bgr.Item2);

}

}

//运行K-means算法

//MAX_ITER也可以称为COUNT最大迭代次数,EPS最高精度,10表示最大的迭代次数,0.1表示结果的精确度

TermCriteria criteria = new TermCriteria( CriteriaType.Eps & CriteriaType.Count, 10, 0.1);

Cv2.Kmeans(points, clusterCount, labels, criteria, 3, KMeansFlags.PpCenters, center);

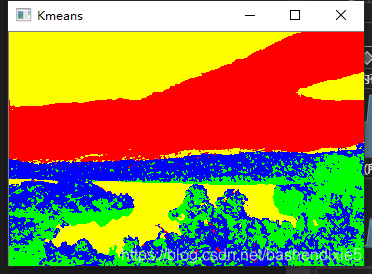

//显示图像分割结果

Mat result = Mat.Zeros(srcImage.Size(), srcImage.Type());//创建一张结果图

for (int i = 0; i < srcImage.Rows; i++)

{

for (int j = 0; j < srcImage.Cols; j++)

{

index = i * width + j;

int label = labels.At(index);//每一个像素属于哪个标签

Vec3b vec3B = new Vec3b();

vec3B.Item0 = (byte)colorTab[label][0];//对结果图中的每一个通道进行赋值

vec3B.Item1 = (byte)colorTab[label][1];

vec3B.Item2 = (byte)colorTab[label][2];

result.Set(i, j, vec3B);

}

}

Cv2.ImShow("Kmeans", result);

Python K-Means聚类算法进行颜色量化

# KMeans聚类算法图像颜色量化

# import the necessary packages

from sklearn.cluster import MiniBatchKMeans

import numpy as np

import argparse

import cv2

# load the image and grab its width and height

image = cv2.imread("C:/Users/Desktop/123.png")

(h, w) = image.shape[:2]

# convert the image from the RGB color space to the L*a*b*

# color space -- since we will be clustering using k-means

# which is based on the euclidean distance, we'll use the

# L*a*b* color space where the euclidean distance implies

# perceptual meaning

image = cv2.cvtColor(image, cv2.COLOR_BGR2LAB)

# reshape the image into a feature vector so that k-means

# can be applied

image = image.reshape((image.shape[0] * image.shape[1], 3))

# apply k-means using the specified number of clusters and

# then create the quantized image based on the predictions

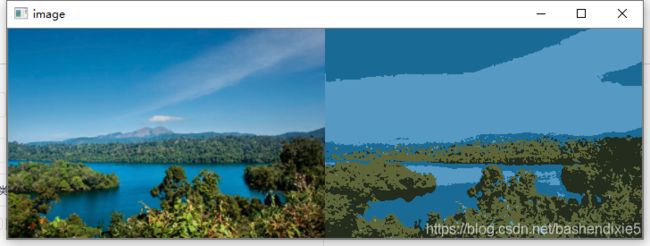

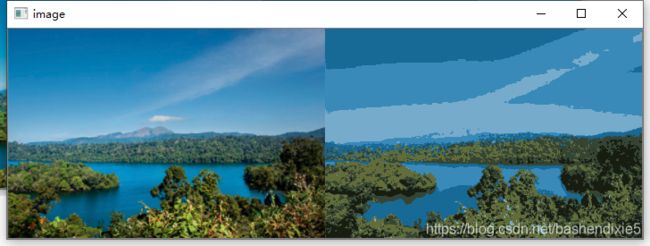

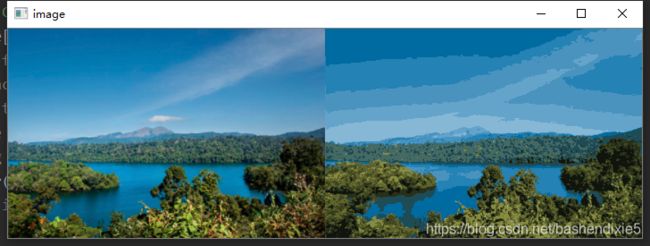

clt = MiniBatchKMeans(n_clusters = 4)

labels = clt.fit_predict(image)

quant = clt.cluster_centers_.astype("uint8")[labels]

# reshape the feature vectors to images

quant = quant.reshape((h, w, 3))

image = image.reshape((h, w, 3))

# convert from L*a*b* to RGB

quant = cv2.cvtColor(quant, cv2.COLOR_LAB2BGR)

image = cv2.cvtColor(image, cv2.COLOR_LAB2BGR)

# display the images and wait for a keypress

cv2.imshow("image", np.hstack([image, quant]))

cv2.waitKey(0)