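Python Selenium Practice (Baidu, Quotes, JD.com)

Python Selenium Practice

- I. Opening Baidu and Running a Search

- II. Scraping Famous Quotes

  - 1. Scraping one page

  - 2. Scraping 5 pages

  - 3. Storing the data

  - 4. Full code

- III. Scraping Book Information from JD.com

  - 1. Scraping the first page

  - 2. Scraping 3 pages

  - 3. Storing the data

  - 4. Full code

- IV. Summary

- References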

For how to set up Selenium, see the Bilibili crawler tutorial.

I. Opening Baidu and Running a Search

Open Baidu:

    from selenium.webdriver import Chrome

    web = Chrome()
    web.get('https://www.baidu.com')

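Type the search term and press Enter. Selenium 4.3 removed `find_element_by_id`, so the lookup goes through `find_element(By.ID, ...)`:

    from selenium.webdriver.common.by import By
    from selenium.webdriver.common.keys import Keys

    input_btn = web.find_element(By.ID, 'kw')
    input_btn.send_keys('成龙', Keys.ENTER)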

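Full code and test run:

    from selenium.webdriver import Chrome
    from selenium.webdriver.common.by import By
    from selenium.webdriver.common.keys import Keys

    web = Chrome()
    web.get('https://www.baidu.com')
    web.maximize_window()

    # 'kw' is the id of Baidu's search box
    input_btn = web.find_element(By.ID, 'kw')
    input_btn.send_keys('成龙', Keys.ENTER)  # type the query, then press Enter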

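II. Scraping Famous Quotes

The target site is http://quotes.toscrape.com/js/. The goal is to scrape the quotes on the first five pages, together with their authors, and save them to a CSV file.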

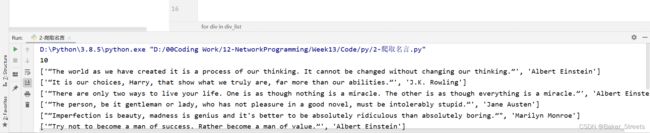

1. Scraping one page

Start by scraping the first page as a test.

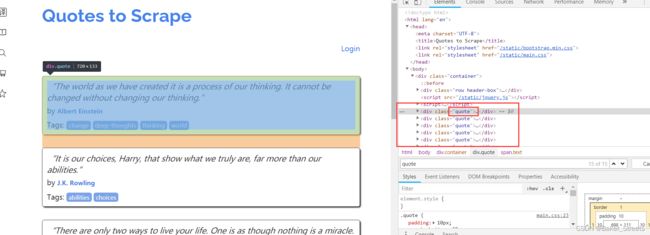

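The developer tools show that each quote group (quote plus author) sits in a div with class="quote", and that no other element carries class="quote":

Inside that div, the quote text is in the element with class="text" and the author is in the small element with class="author":

So the code for scraping the first page is: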

    from selenium.webdriver.common.by import By

    # One div.quote per quote group
    div_list = web.find_elements(By.CLASS_NAME, 'quote')
    print(len(div_list))
    for div in div_list:
        saying = div.find_element(By.CLASS_NAME, 'text').text
        author = div.find_element(By.CLASS_NAME, 'author').text
        info = [saying, author]
        print(info)
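The same lookup logic can be tried without a browser. The following is only a sketch: `SAMPLE_PAGE` is a hypothetical, simplified copy of the markup described above (kept well-formed so the standard-library XML parser accepts it), and `extract_quotes` is a helper name introduced here for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup; the real page holds more quote blocks.
SAMPLE_PAGE = """
<body>
  <div class="quote">
    <span class="text">Quote one.</span>
    <span>by <small class="author">Author A</small></span>
  </div>
  <div class="quote">
    <span class="text">Quote two.</span>
    <span>by <small class="author">Author B</small></span>
  </div>
</body>
"""


def extract_quotes(markup):
    """Return [saying, author] pairs, one per div with class="quote"."""
    root = ET.fromstring(markup.strip())
    pairs = []
    for div in root.findall(".//div[@class='quote']"):
        # Search only inside the current div, as the Selenium code does.
        saying = div.find(".//*[@class='text']").text
        author = div.find(".//*[@class='author']").text
        pairs.append([saying, author])
    return pairs


quotes = extract_quotes(SAMPLE_PAGE)
print(quotes)
```

Searching relative to each div is what keeps a quote paired with its own author.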

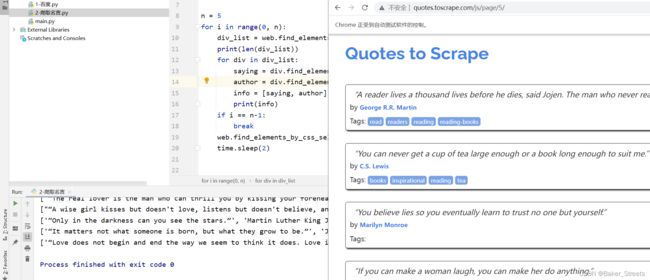

2. Scraping 5 pages

After one page has been scraped, the crawler has to move to the next page by clicking the Next button.

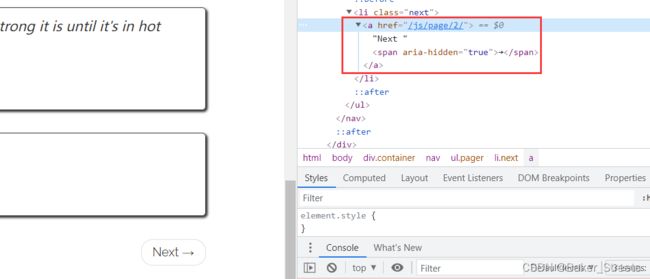

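The Next link itself has only an href attribute, so it cannot be located by id or class. The first page has only a Next button, while later pages have both Previous and Next, so a fixed positional XPath does not work either:

Its child span (the arrow), however, has an aria-hidden attribute. On the first page this span is the only element with aria-hidden. On later pages the Previous arrow has the attribute too, but the Next arrow is always the last one.

So the next page can be reached by finding the last element that has an aria-hidden attribute and clicking it:

    web.find_elements(By.CSS_SELECTOR, '[aria-hidden]')[-1].click()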
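To see why "the last element with aria-hidden" works on both the first and the later pages, here is a small browser-free sketch. Both pager snippets are hypothetical simplifications of what the developer tools show, and `next_link_href` is a name made up for this illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical pager markup for the first page: only a Next link.
FIRST_PAGE_PAGER = """
<ul>
  <li><a href="/js/page/2/">Next <span aria-hidden="true">&#8594;</span></a></li>
</ul>
"""

# Hypothetical pager markup for a later page: Previous, then Next.
LATER_PAGE_PAGER = """
<ul>
  <li><a href="/js/page/1/"><span aria-hidden="true">&#8592;</span> Previous</a></li>
  <li><a href="/js/page/3/">Next <span aria-hidden="true">&#8594;</span></a></li>
</ul>
"""


def next_link_href(pager_markup):
    """Return the href of the link wrapping the last [aria-hidden] element."""
    root = ET.fromstring(pager_markup.strip())
    # ElementTree keeps no parent pointers, so build a child -> parent map.
    parent_of = {child: parent for parent in root.iter() for child in parent}
    # findall returns matches in document order, so [-1] is the Next arrow.
    last_arrow = root.findall(".//*[@aria-hidden]")[-1]
    return parent_of[last_arrow].get("href")


print(next_link_href(FIRST_PAGE_PAGER))
print(next_link_href(LATER_PAGE_PAGER))
```

The approach depends on Next coming after Previous in the document. On a page with no Next link the last match would be the Previous arrow, so the page count should stay within the site's range.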

Test:

    import time
    from selenium.webdriver.common.by import By

    n = 5
    for i in range(0, n):
        div_list = web.find_elements(By.CLASS_NAME, 'quote')
        print(len(div_list))
        for div in div_list:
            saying = div.find_element(By.CLASS_NAME, 'text').text
            author = div.find_element(By.CLASS_NAME, 'author').text
            info = [saying, author]
            print(info)
        if i == n - 1:
            break  # last page done, no need to click Next
        web.find_elements(By.CSS_SELECTOR, '[aria-hidden]')[-1].click()
        time.sleep(2)  # give the JavaScript-rendered page time to load
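The fixed `time.sleep(2)` is simple but either wastes time or is too short on a slow connection. Selenium provides `WebDriverWait` in `selenium.webdriver.support.ui` for polling instead. The sketch below shows the same polling idea using only the standard library; `wait_until` is a hypothetical helper, not part of Selenium.

```python
import time


def wait_until(condition, timeout=10.0, poll=0.5):
    """Call condition() repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError after `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError('condition not met within %.1f s' % timeout)
        time.sleep(poll)
```

With the driver from this article, a call might look like `wait_until(lambda: web.find_elements(By.CLASS_NAME, 'quote'))`. After clicking Next, the condition should test something that actually changes between pages, for example `web.current_url`, because the previous page's quotes can still be present for a moment.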

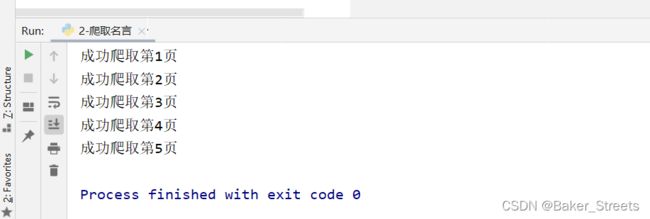

3. Storing the data

    sayingAndAuthor = []
    n = 5
    for i in range(0, n):
        div_list = web.find_elements(By.CLASS_NAME, 'quote')
        for div in div_list:
            saying = div.find_element(By.CLASS_NAME, 'text').text
            author = div.find_element(By.CLASS_NAME, 'author').text
            info = [saying, author]
            sayingAndAuthor.append(info)
        # str() is required: concatenating str and int raises TypeError
        print('成功爬取第' + str(i + 1) + '页')  # "page i+1 scraped"
        if i == n - 1:
            break
        web.find_elements(By.CSS_SELECTOR, '[aria-hidden]')[-1].click()
        time.sleep(2)

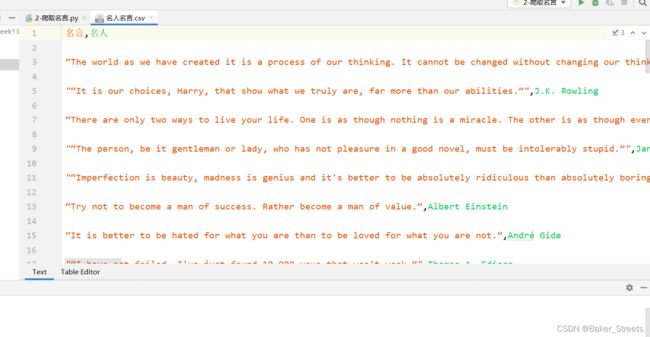

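    # newline='' stops the csv module from emitting blank rows on Windows
    with open('名人名言.csv', 'w', encoding='utf-8', newline='') as fp:
        fileWrite = csv.writer(fp)
        fileWrite.writerow(['名言', '名人'])  # header row: quote, author
        fileWrite.writerows(sayingAndAuthor)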
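A quick round trip is an easy way to check that the file comes back the way it was written. This sketch makes two assumptions that go beyond the original code: it opens the file with `newline=''` (which the `csv` module documentation recommends) and uses `utf-8-sig`, so that spreadsheet programs are more likely to detect the encoding. `save_rows`, `load_rows`, and the demo file name are hypothetical.

```python
import csv
import os
import tempfile


def save_rows(path, header, rows):
    """Write a header row followed by the data rows."""
    # newline='' leaves line endings to the csv module.
    # utf-8-sig prepends a BOM, which helps spreadsheet apps read Chinese text.
    with open(path, 'w', encoding='utf-8-sig', newline='') as fp:
        writer = csv.writer(fp)
        writer.writerow(header)
        writer.writerows(rows)


def load_rows(path):
    """Read every row back as a list of strings."""
    with open(path, encoding='utf-8-sig', newline='') as fp:
        return list(csv.reader(fp))


demo_path = os.path.join(tempfile.mkdtemp(), 'quotes_demo.csv')
save_rows(demo_path, ['名言', '名人'], [['A quote, with a comma', 'Author A']])
loaded = load_rows(demo_path)
print(loaded)
```

The comma inside the quote survives because `csv.writer` quotes such fields automatically, which is one reason to prefer it over joining strings by hand.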

4. Full code

    from selenium.webdriver import Chrome
    from selenium.webdriver.common.by import By
    import time
    import csv

    web = Chrome()
    web.get('http://quotes.toscrape.com/js/')

    sayingAndAuthor = []
    n = 5  # number of pages to scrape
    for i in range(0, n):
        div_list = web.find_elements(By.CLASS_NAME, 'quote')
        for div in div_list:
            saying = div.find_element(By.CLASS_NAME, 'text').text
            author = div.find_element(By.CLASS_NAME, 'author').text
            info = [saying, author]
            sayingAndAuthor.append(info)
        print('成功爬取第' + str(i + 1) + '页')  # "page i+1 scraped"
        if i == n - 1:
            break  # last page done, no need to click Next
        # The Next arrow is the last element with an aria-hidden attribute
        web.find_elements(By.CSS_SELECTOR, '[aria-hidden]')[-1].click()
        time.sleep(2)

    with open('名人名言.csv', 'w', encoding='utf-8', newline='') as fp:
        fileWrite = csv.writer(fp)
        fileWrite.writerow(['名言', '名人'])  # header row: quote, author
        fileWrite.writerows(sayingAndAuthor)

    web.quit()  # close the browser and end the driver session

Scraping result:

III. Scraping Book Information from JD.com

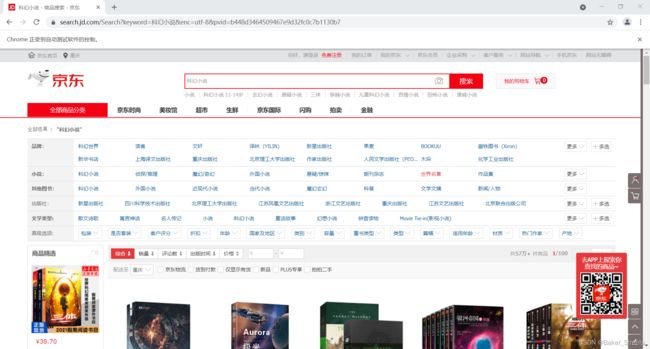

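Scrape the first three pages of book listings for a given keyword. This article uses 科幻小说 (science fiction) as the example.

1. Scraping the first page

- Open JD.com and search for 科幻小说: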

    from selenium.webdriver import Chrome
    from selenium.webdriver.common.by import By
    from selenium.webdriver.common.keys import Keys

    web = Chrome()
    web.get('https://www.jd.com/')
    web.maximize_window()
    web.find_element(By.ID, 'key').send_keys('科幻小说', Keys.ENTER)  # find the search box, type, press Enter

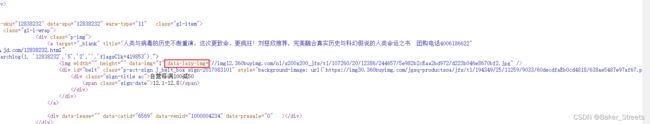

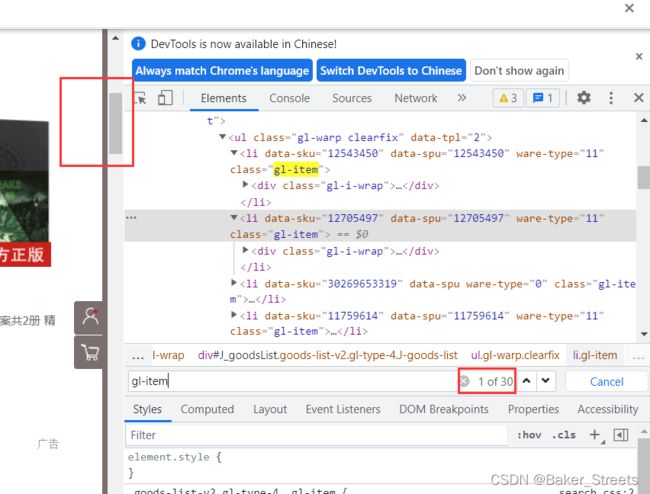

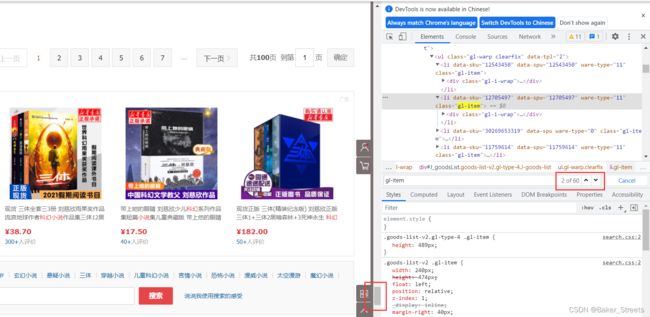

The developer tools show that every product sits in an li whose class contains "gl-item" (when the mouse hovers over an li, its class becomes "gl-item hover"):

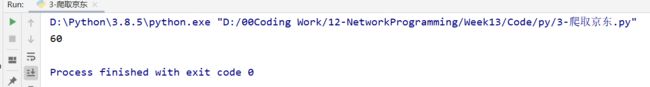
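The hover behaviour is why an exact comparison against "gl-item" is fragile. A substring test such as XPath `contains(@class, "gl-item")` handles it, although it would also accept any longer class name that merely contains that text. As a hedged illustration, the hypothetical helper below checks for the class as a whole space-separated token.

```python
def has_class(class_attr, name):
    """True if `name` is one of the space-separated tokens of a class attribute."""
    return name in (class_attr or '').split()


print('gl-item hover' == 'gl-item')            # exact comparison misses the hovered item
print(has_class('gl-item', 'gl-item'))
print(has_class('gl-item hover', 'gl-item'))
print(has_class('gl-item-extra', 'gl-item'))   # made-up class name, not from the page
```

In XPath 1.0 the usual way to express the same token test is `contains(concat(" ", normalize-space(@class), " "), " gl-item ")`.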

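On first load there are 30 li elements, but after scrolling to the bottom of the page the count becomes 60, so the page has to be scrolled down first:

All the li elements can then be collected as follows: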

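    import time
    from lxml import etree

    # Scroll to the bottom so the lazily loaded second half of the list appears
    web.execute_script('window.scrollTo(0, document.body.scrollHeight);')
    time.sleep(2)
    page_text = web.page_source
    tree = etree.HTML(page_text)
    li_list = tree.xpath('//li[contains(@class,"gl-item")]')
    print(len(li_list))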
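A single scroll followed by a fixed sleep works here, but a page that loads content in several steps may need repeated scrolling. The sketch below keeps scrolling until the page height stops growing. `scroll_to_bottom` is a hypothetical helper, and it assumes only that the driver object has an `execute_script` method, as Selenium WebDriver objects do.

```python
import time


def scroll_to_bottom(driver, pause=1.0, max_rounds=10):
    """Scroll down until document.body.scrollHeight stops growing.

    Returns the final page height. `max_rounds` guards against pages
    that keep growing forever.
    """
    last_height = driver.execute_script('return document.body.scrollHeight')
    for _ in range(max_rounds):
        driver.execute_script('window.scrollTo(0, document.body.scrollHeight);')
        time.sleep(pause)  # let lazily loaded items render
        new_height = driver.execute_script('return document.body.scrollHeight')
        if new_height == last_height:
            break  # nothing new was loaded
        last_height = new_height
    return last_height
```

In the article's code this would be called as `scroll_to_bottom(web)` before reading `web.page_source`.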

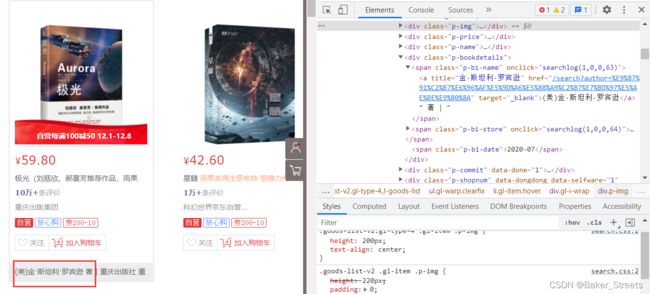

Next, extract each book's information inside a loop:

    for li in li_list:
        pass

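Get the book title and price:

    # The title is split across several text nodes, so join them
    book_name = ''.join(li.xpath('.//div[@class="p-name"]/a/em/text()'))
    price = '¥' + li.xpath('.//div[@class="p-price"]/strong/i/text()')[0]

    # Some listings have no author; fall back to '无' ("none")
    author_span = li.xpath('.//span[@class="p-bi-name"]/a/text()')
    if len(author_span) > 0:
        author = author_span[0]
    else:
        author = '无'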

The publisher is extracted the same way as the author:

    store_span = li.xpath('.//span[@class="p-bi-store"]/a[1]/text()')
    if len(store_span) > 0:
        store = store_span[0]
    else:
        store = '无'  # "none"
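The author and publisher lookups repeat the same "first match or placeholder" pattern, so it can be factored into one function. This is a sketch, not the article's code: `first_or_default` is a hypothetical helper, and unlike the original it also strips whitespace and skips blank strings.

```python
def first_or_default(values, default='无'):
    """Return the first non-blank string in `values`, stripped, else `default`."""
    for value in values:
        text = value.strip()
        if text:
            return text
    return default


print(first_or_default([]))
print(first_or_default(['  Author A  ']))
print(first_or_default(['', 'Publisher B']))
```

With it, the author line would shrink to `author = first_or_default(li.xpath('.//span[@class="p-bi-name"]/a/text()'))`.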

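The book image URL is in the src attribute for some items and in the data-lazy-img attribute for others:

So the image URL is obtained as follows:

    img_url_a = li.xpath('.//div[@class="p-img"]/a/img')[0]
    # The values are assumed to be protocol-relative ('//...'),
    # so the prefix must be 'https:' (with the colon), not 'https'
    if len(img_url_a.xpath('./@src')) > 0:
        img_url = 'https:' + img_url_a.xpath('./@src')[0]  # book image URL
    else:
        img_url = 'https:' + img_url_a.xpath('./@data-lazy-img')[0]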
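Prefixing a scheme by hand is easy to get subtly wrong, since it only fits values that begin with `//`. A more forgiving option is `urllib.parse.urljoin`, which resolves protocol-relative values and leaves absolute ones untouched. In this sketch, `absolute_img_url` and the `img.example.com` addresses are hypothetical and stand in for real attribute values.

```python
from urllib.parse import urljoin

PAGE_URL = 'https://www.jd.com/'  # base URL, used here to supply the scheme


def absolute_img_url(src, data_lazy_img, base=PAGE_URL):
    """Pick whichever attribute is set and resolve it against `base`."""
    raw = src or data_lazy_img
    if not raw:
        return ''  # neither attribute present
    return urljoin(base, raw)


print(absolute_img_url('//img.example.com/n7/book.jpg', None))
print(absolute_img_url(None, '//img.example.com/n7/lazy.jpg'))
print(absolute_img_url('https://img.example.com/n7/full.jpg', None))
```

All three calls yield a complete `https://img.example.com/...` address, whichever attribute carried the value.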

Putting it together, the function that scrapes one page is:

    # Scrape one page
    def get_onePage_info(web):
        web.execute_script('window.scrollTo(0, document.body.scrollHeight);')
        time.sleep(2)
        page_text = web.page_source

        # Parse the page
        tree = etree.HTML(page_text)
        li_list = tree.xpath('//li[contains(@class,"gl-item")]')
        book_infos = []
        for li in li_list:
            book_name = ''.join(li.xpath('.//div[@class="p-name"]/a/em/text()'))  # title
            price = '¥' + li.xpath('.//div[@class="p-price"]/strong/i/text()')[0]  # price
            author_span = li.xpath('.//span[@class="p-bi-name"]/a/text()')
            if len(author_span) > 0:  # author
                author = author_span[0]
            else:
                author = '无'
            store_span = li.xpath('.//span[@class="p-bi-store"]/a[1]/text()')  # publisher
            if len(store_span) > 0:
                store = store_span[0]
            else:
                store = '无'
            img_url_a = li.xpath('.//div[@class="p-img"]/a/img')[0]
            # Values are assumed to be protocol-relative ('//...'), hence 'https:'
            if len(img_url_a.xpath('./@src')) > 0:
                img_url = 'https:' + img_url_a.xpath('./@src')[0]  # book image URL
            else:
                img_url = 'https:' + img_url_a.xpath('./@data-lazy-img')[0]
            one_book_info = [book_name, price, author, store, img_url]
            book_infos.append(one_book_info)
        return book_infos

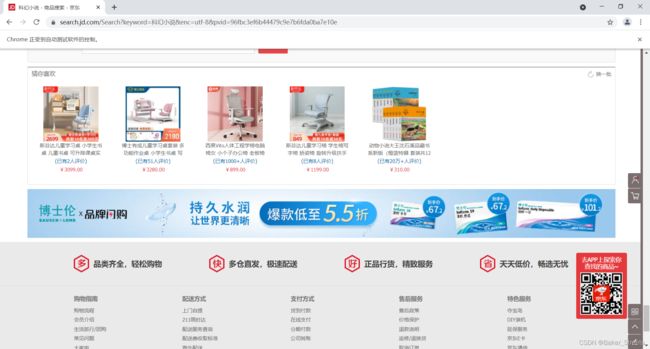

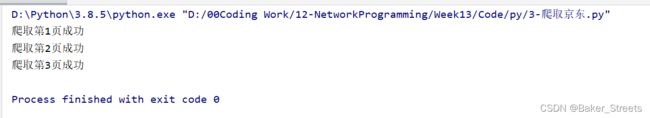

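2. Scraping 3 pages

Click the next-page button:

    from selenium.webdriver.common.by import By
    web.find_element(By.CLASS_NAME, 'pn-next').click()  # click "next page"

Scrape three pages:

    all_book_info = []
    for i in range(0, 3):
        all_book_info += get_onePage_info(web)
        web.find_element(By.CLASS_NAME, 'pn-next').click()  # click "next page"
        time.sleep(2)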
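The loop above also clicks "next page" after the third page has been scraped, which costs one extra page load. One way to avoid that, and to keep the paging logic testable without a browser, is to pass the two actions in as functions. `crawl_pages`, `scrape_page`, and `go_next` are hypothetical names used for this sketch.

```python
def crawl_pages(scrape_page, go_next, pages):
    """Collect rows from `pages` consecutive pages.

    scrape_page() returns the rows of the current page.
    go_next() moves to the next page and is skipped after the final page.
    """
    rows = []
    for i in range(pages):
        rows += scrape_page()
        if i < pages - 1:
            go_next()
    return rows
```

With the article's objects, the call could look like `crawl_pages(lambda: get_onePage_info(web), click_next, 3)`, where `click_next` would be a small function, assumed here, that clicks the `pn-next` element and then waits.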

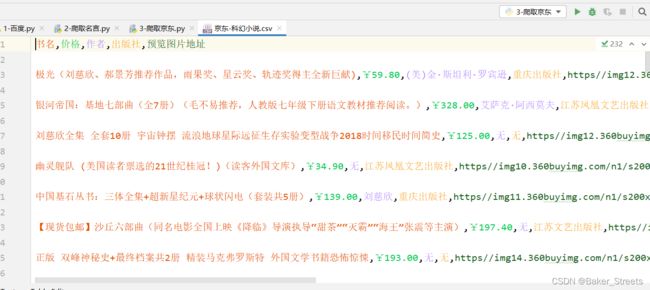

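3. Storing the data

    # newline='' stops the csv module from emitting blank rows on Windows
    with open('京东-科幻小说.csv', 'w', encoding='utf-8', newline='') as fp:
        writer = csv.writer(fp)
        # header row: title, price, author, publisher, preview image URL
        writer.writerow(['书名', '价格', '作者', '出版社', '预览图片地址'])
        writer.writerows(all_book_info)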

4. Full code

    from selenium.webdriver import Chrome
    from selenium.webdriver.common.by import By
    from selenium.webdriver.common.keys import Keys
    import time
    from lxml import etree
    import csv


    # Scrape one page
    def get_onePage_info(web):
        web.execute_script('window.scrollTo(0, document.body.scrollHeight);')
        time.sleep(2)
        page_text = web.page_source

        # with open('3-.html', 'w', encoding='utf-8') as fp:
        #     fp.write(page_text)

        # Parse the page
        tree = etree.HTML(page_text)
        li_list = tree.xpath('//li[contains(@class,"gl-item")]')
        book_infos = []
        for li in li_list:
            book_name = ''.join(li.xpath('.//div[@class="p-name"]/a/em/text()'))  # title
            price = '¥' + li.xpath('.//div[@class="p-price"]/strong/i/text()')[0]  # price
            author_span = li.xpath('.//span[@class="p-bi-name"]/a/text()')
            if len(author_span) > 0:  # author
                author = author_span[0]
            else:
                author = '无'
            store_span = li.xpath('.//span[@class="p-bi-store"]/a[1]/text()')  # publisher
            if len(store_span) > 0:
                store = store_span[0]
            else:
                store = '无'
            img_url_a = li.xpath('.//div[@class="p-img"]/a/img')[0]
            # Values are assumed to be protocol-relative ('//...'), hence 'https:'
            if len(img_url_a.xpath('./@src')) > 0:
                img_url = 'https:' + img_url_a.xpath('./@src')[0]  # book image URL
            else:
                img_url = 'https:' + img_url_a.xpath('./@data-lazy-img')[0]
            one_book_info = [book_name, price, author, store, img_url]
            book_infos.append(one_book_info)
        return book_infos


    def main():
        web = Chrome()
        web.get('https://www.jd.com/')
        web.maximize_window()
        web.find_element(By.ID, 'key').send_keys('科幻小说', Keys.ENTER)  # find the search box, type, press Enter
        time.sleep(2)

        all_book_info = []
        for i in range(0, 3):
            all_book_info += get_onePage_info(web)
            print('爬取第' + str(i + 1) + '页成功')  # "page i+1 scraped"
            web.find_element(By.CLASS_NAME, 'pn-next').click()  # click "next page"
            time.sleep(2)

        with open('京东-科幻小说.csv', 'w', encoding='utf-8', newline='') as fp:
            writer = csv.writer(fp)
            # header row: title, price, author, publisher, preview image URL
            writer.writerow(['书名', '价格', '作者', '出版社', '预览图片地址'])
            writer.writerows(all_book_info)

        web.quit()  # close the browser and end the driver session


    if __name__ == '__main__':
        main()

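IV. Summary

Selenium makes it very convenient to scrape dynamically rendered data, because it drives a real browser that executes the page's JavaScript. The trade-off is that it is relatively slow.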

References

CSS attribute selectors

Summary of methods for scrolling the page with Selenium in Python