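- Ollama Basic Concepts

Mr_One_Zhang

learning, Ollama, AI

Ollama is a machine learning framework that runs locally and supports a variety of natural language processing (NLP) tasks, focusing on model loading, inference and generation. With Ollama, users can easily interact with large pretrained models deployed on their own machines. 1. Model: In Ollama, models are the core component. They are pretrained machine learning models that can perform different tasks, such as text generation, text summarization, sentiment analysis and dialogue generation. Ollama supports many popular pretrained models; common ones include: deepse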

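- AI Express Series 24 [Machine Learning Basics] (Evaluation Metrics for Machine Learning Models (Regression))

浪九天

AI Express, programming languages, python, machine learning, deep learning, neural networks, artificial intelligence

Contents: Evaluation Metrics for Machine Learning Models (Regression): 1. Mean Squared Error (MSE); 2. Root Mean Squared Error (RMSE); 3. Mean Absolute Error (MAE); 4. Coefficient of Determination (R²). Evaluation Metrics for Machine Learning Models (Regression) 1. Mean Squared Error (MSE): detailed explanation. Mean squared error is a regression prob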
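To make the four metrics concrete, here is a minimal pure-Python sketch. It is not the article's code, and the function name `regression_metrics` and the sample values are illustrative. It uses MSE = (1/n)Σ(yᵢ − ŷᵢ)², RMSE = √MSE, MAE = (1/n)Σ|yᵢ − ŷᵢ| and R² = 1 − SS_res/SS_tot.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return MSE, RMSE, MAE and R^2 for two equal-length sequences of numbers."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(r * r for r in residuals) / n            # mean of squared errors
    rmse = math.sqrt(mse)                               # same units as the target
    mae = sum(abs(r) for r in residuals) / n            # mean of absolute errors
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total variation of the target
    ss_res = sum(r * r for r in residuals)              # variation the model leaves unexplained
    r2 = 1 - ss_res / ss_tot if ss_tot else float("nan")
    return {"mse": mse, "rmse": rmse, "mae": mae, "r2": r2}

if __name__ == "__main__":
    print(regression_metrics([3.0, -0.5, 2.0, 7.0], [2.5, 0.0, 2.0, 8.0]))
```

For this sample, MSE = 0.375, MAE = 0.5 and R² ≈ 0.949. In practice, scikit-learn's `sklearn.metrics` provides equivalents: `mean_squared_error`, `mean_absolute_error` and `r2_score`.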

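- Learning Machine Learning from Scratch: Building a Recommendation Web App

努力的小雨

machine learning, front end, artificial intelligence

First, let me introduce a very useful learning site: https://cloudstudio.net/columns. Today we have finally finished the chapter on classifiers. As with regression, the last section is about building a web application: we will review what we have learned and add a web app that lets users interact with the model. So today's topic is food recommendation. Food recommendation web application: first, don't worry, this section does not involve much front-end knowledge. Our focus this time is machine learning itself, so we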

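- Automated Stock Trading with Python: A Hands-On Case Study of Stock Market Prediction with XGBoost and LightGBM

云策量化

Python automated trading, quantitative investing, quant software, python, quantitative trading, QMT, PTrade, quant stock trading, deepseek

Recommended reading: "Programmatic Stock Trading: How to Apply for Official Trading API Access? Can Individual Accounts Apply?" Automated Stock Trading with Python: A Hands-On Case Study of Stock Market Prediction with XGBoost and LightGBM. In today's fast-paced financial markets, automated trading and predictive models have become important tools for investors and traders. With its powerful data-processing capabilities and rich machine learning libraries, Python has become the language of choice for implementing such models. This article shows how to use XGBoost and LightGBM, two popular machine learning algorithms, to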

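- [sklearn 04] DNN, CNN, RNN

@金色海岸

sklearn, dnn, cnn

DNN: A DNN (Deep Neural Network) is a machine learning algorithm that, compared with relatively shallow machine learning models, has more parameters and needs more data to train. CNN: A CNN (Convolutional Neural Network) is a neural network that starts by learning local features and gradually combines them. It extracts features with convolutional layers and reduces dimensionality with pooling layers. Compared with a fully connected network, a CNN lowers model complexity and reduces the number of model parameters,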
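The parameter savings claimed for CNNs can be checked with simple arithmetic. This sketch assumes a hypothetical 28×28 single-channel input. It compares a fully connected layer with 128 units against a convolutional layer with 32 filters of size 3×3, and shows how 2×2 max pooling with stride 2 halves each spatial dimension. The layer sizes are illustrative choices, not taken from the article.

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

def conv2d_params(k_h: int, k_w: int, c_in: int, c_out: int) -> int:
    """Each of the c_out filters has k_h*k_w*c_in shared weights plus one bias."""
    return (k_h * k_w * c_in + 1) * c_out

def pool_output_size(h: int, w: int, pool: int = 2, stride: int = 2) -> tuple:
    """Spatial size after pooling without padding."""
    return ((h - pool) // stride + 1, (w - pool) // stride + 1)

if __name__ == "__main__":
    print("dense:", dense_params(28 * 28, 128))   # 100480
    print("conv :", conv2d_params(3, 3, 1, 32))   # 320
    print("pool :", pool_output_size(28, 28))     # (14, 14)
```

The convolutional layer's count does not depend on the input's height and width. The same small filters are shared across every position, which is where the reduction comes from.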

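- [sklearn 02] Supervised Learning, Unsupervised Learning, Reinforcement Learning

@金色海岸

sklearn, learning, artificial intelligence

Supervised, unsupervised and reinforcement learning. Machine learning is usually divided into three categories: unsupervised learning, supervised learning and reinforcement learning. Category 1: unsupervised learning finds patterns in the data on its own and analyzes the data's structure; common unsupervised tasks include clustering, dimensionality reduction, density estimation and association analysis. Category 2: supervised learning trains a model on labeled data and then predicts the labels of unseen data. There are two kinds of supervised learning: when the predicted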

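- Starting from LLMs: A Step-by-Step Exploration of the Full AI Development Workflow with Simple Hands-On Practice (30,000 words)

码事漫谈

AI, artificial intelligence

Contents: Part 1: Background and History of AI Development; 1.1 Origins and Development of Artificial Intelligence; 1.2 The Rise of Neural Networks and Deep Learning; 1.3 The Transformer Architecture and the Rise of LLMs; 1.4 The Current State and Trends of AI Development. Part 2: Core Technologies of AI Development; 2.1 Machine Learning: The Foundation of AI; 2.1.1 Types of Machine Learning; 2.1.2 The Machine Learning Workflow; 2.2 Deep Learning: The Next Step Beyond Machine Learning; 2.2.1 Neural Network Basics; 2.2.2 Key Deep Learning Architectures; 2.3 The Transformer Architecture: The Core of Modern LLM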

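- Nanoscale Simulation Software: Quantum Espresso (20). Applications of Machine Learning in Quantum Espresso

kkchenjj

molecular dynamics 2, machine learning, artificial intelligence, simulation, molecular dynamics

Applications of machine learning in Quantum Espresso. In modern materials science and nanotechnology research, machine learning (ML) has become a powerful tool for accelerating and optimizing quantum-mechanical calculations. Quantum Espresso is a widely used open-source software package for first-principles calculations, especially for simulating nanoscale materials. This section describes how to apply machine learning to Quantum Espresso to improve computational efficiency, predict material properties and optimize structures. 1. Machine learning and first-principles calculations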

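- Beginner Village: Data Preprocessing - Outlier Detection Methods

嘉羽很烦

machine learning

Outlier detection methods in machine learning. I. Prerequisites (knowledge area: requirements). Programming basics: Python fundamentals (variables, loops, functions) and using Jupyter Notebook or PyCharm. Statistics basics: understanding the mean, median, standard deviation, quartiles, the normal distribution, the Z-score and related concepts. Machine learning basics: familiarity with supervised/unsupervised learning, classification, clustering, regression and other basic concepts. Data preprocessing: data cleaning, feature scaling (standardization/normalization), data visualization (Matplotlib/Seaborn). II. Progressive learning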
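Two of the simplest detectors named in the prerequisites are the Z-score rule and Tukey's IQR fences. The sketch below uses only the standard-library `statistics` module (Python 3.8+). The sample data and thresholds are illustrative assumptions, not the article's.

```python
import statistics

def zscore_outliers(values, threshold=3.0):
    """Flag points whose |x - mean| / std exceeds `threshold` (population std)."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return []  # all values identical: nothing stands out
    return [v for v in values if abs(v - mean) / std > threshold]

def iqr_outliers(values, k=1.5):
    """Flag points outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # default 'exclusive' method
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

if __name__ == "__main__":
    data = [10, 12, 11, 13, 12, 11, 10, 100]  # toy sample with one obvious outlier
    print("z-score (3.0):", zscore_outliers(data))
    print("z-score (2.5):", zscore_outliers(data, threshold=2.5))
    print("IQR          :", iqr_outliers(data))
```

On this 8-point sample, the threshold-3 Z-score rule flags nothing. With the population standard deviation, |z| can never exceed √(n−1) ≈ 2.65 here, and the outlier itself inflates the standard deviation. The IQR rule (Q1 = 10.25, Q3 = 12.75) flags 100. This is why quartile-based rules are often preferred for small or skewed samples.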

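- Beginner Village: Data Preprocessing - Feature Scaling

嘉羽很烦

machine learning, linear regression, algorithms

Beginner Village: Data Preprocessing - Feature Scaling. Feature scaling is an important step in data preprocessing, especially when applying certain machine learning algorithms. It brings features measured on different scales to the same order of magnitude, which improves the efficiency and performance of model training. Common feature scaling methods include standardization and normalization. Common feature scaling methods: standardization transforms a feature into a standard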
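The two methods named above can be sketched in a few lines of standard-library Python. Standardization computes z = (x − μ)/σ; min-max normalization computes x' = (x − min)/(max − min), optionally rescaled to another range. The function names are illustrative. scikit-learn's `StandardScaler` and `MinMaxScaler` in `sklearn.preprocessing` are the usual library equivalents.

```python
import statistics

def standardize(values):
    """Z-score standardization: (x - mean) / std, giving mean 0 and std 1."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    if sigma == 0:
        raise ValueError("constant feature cannot be standardized")
    return [(v - mu) / sigma for v in values]

def min_max_normalize(values, new_min=0.0, new_max=1.0):
    """Min-max normalization: linearly map [min, max] onto [new_min, new_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("constant feature cannot be normalized")
    scale = (new_max - new_min) / (hi - lo)
    return [new_min + (v - lo) * scale for v in values]

if __name__ == "__main__":
    feature = [1, 2, 3, 4, 5]
    print(standardize(feature))
    print(min_max_normalize(feature))
```

In a real pipeline, compute the mean/standard deviation (or min/max) on the training set only. Then reuse those same numbers to transform validation and test data, otherwise information leaks from the test set into training.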

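- Overfitting: The "Rote Memorization" Trap in Machine Learning

彩旗工作室

artificial intelligence, machine learning

In machine learning, overfitting is a classic problem that almost every practitioner runs into. It is like a double-edged sword: when a model is too "smart", it may rely too heavily on the training data and lose the ability to handle new problems. This article explores the nature of overfitting and strategies for dealing with it, from theory to practice. 1. What is overfitting? Overfitting is when a model performs extremely well on the training data but significantly worse on new data (test data or real-world data). Put simply, the model is like a student who learns by rote, memorizing the training set's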

- [Python] Solved: Third-Party Modules (Libraries) Installed with pip Not Showing Up in PyCharm (Adding a Local Python Interpreter in PyCharm)

屿小夏

python, pip, pycharm

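- How to Contribute to Open-Source Projects on GitHub

这个懒人

github, open source software

1. Create a GitHub account: if you don't have a GitHub account yet, register one first. Visit the GitHub website, click the "Sign up" button in the upper-right corner, fill in the registration information and complete the sign-up. 2. Find a project you're interested in: GitHub hosts a vast number of open-source projects, and you can find ones that interest you in the following ways. Search: type keywords such as "machine learning" or "web development" into the search box on the GitHub home page, or use advanced search to filter by language, tags and other criteria. Browse Tren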

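- [AI Large Model Applications] Generating Test Cases with DeepSeek

柳柳的博客

AI large models, test cases

In software development, designing and writing test cases is key to ensuring software quality. However, as software systems grow more complex, writing test cases by hand becomes an enormous workload and is error-prone. DeepSeek, built on artificial intelligence and machine learning, can automatically generate high-quality test cases from software requirements and design documents, significantly reducing the burden of writing test cases manually. I tried using DeepSeek to write test cases and to generate a clear, intuitive mind map, and the whole process went very smoothly. This article explains how to use DeepSeek to generate functional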

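- An Analysis of Python Dependency Management Tools

xdpcxq1029

python, programming languages

Python's dependency management tools have never been standardized, mainly for the following reasons. Historical happenstance: early in Python's development, dependency management received little attention, and there was no unified plan or design from the start. Community fragmentation: the Python community is large and fragmented, with many developers and teams each building tools to suit their own needs and preferences, and no mechanism for unified coordination and integration. Diverse use cases: Python is used in a wide range of scenarios, from web development to data science, machine learning and system administration scripts, and different scenarios have different dependency-management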

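- [AI Basics 2] A Summary of Machine Learning and Deep Learning

roman_日积跬步-终至千里

AI exercises, artificial intelligence, machine learning, deep learning

Contents: I. Key AI technologies; II. Machine learning basics: 1. Supervised, unsupervised and semi-supervised learning; 2. Loss functions: four loss functions; 3. Generalization and cross-validation; 4. Overfitting and underfitting; 5. Regularization; 6. Support vector machines; III. Deep learning basics: 1. Concepts and principles; 2. Learning approaches; 3. Training methods for multilayer neural networks. I. Key AI technologies (field: basic principles and logic). Machine learning: machine learning is data-driven; it studies how to find patterns in observed data and use those patterns to predict future data. By learning mode, machine learning can be divided into supervised, unsupervised and reinforcement learning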

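- Python Mastery Series: Comparing a Library-Based and a Hand-Written K-Means Clustering Implementation

进一步有进一步的欢喜

Python Mastery Series, algorithms, python, kmeans

I. Introduction: In machine learning, cluster analysis is an important unsupervised learning method that divides the samples in a dataset into groups (clusters), so that samples in the same cluster are highly similar while samples in different clusters differ substantially. K-Means is one of the most commonly used clustering algorithms; thanks to its simplicity and efficiency, it is widely used in data mining, image segmentation, pattern recognition and other fields. This article introduces the K-Means clustering algorithm in detail and gives code examples that implement K-Means both by calling a ready-made function and without using any ready-made function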
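The library route is typically `sklearn.cluster.KMeans`. For the hand-written route, the sketch below implements Lloyd's algorithm in plain Python: assign each point to its nearest centroid, move each centroid to the mean of its members, and repeat until nothing moves. It is not the article's code. Initialization simply samples k data points (not k-means++), and the function name and toy data are illustrative.

```python
import math
import random

def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's algorithm: alternate assignment and centroid-update steps.

    points: list of equal-length numeric tuples. Returns (centroids, labels).
    """
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # naive init: k distinct data points (not k-means++)
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid in place
        if new_centroids == centroids:  # converged: centroids stopped moving
            break
        centroids = new_centroids
    return centroids, labels

if __name__ == "__main__":
    pts = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
    print(kmeans(pts, 2))
```

Because the result depends on the starting centroids, practical implementations usually use k-means++ initialization and several restarts, keeping the run with the lowest within-cluster sum of squares.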

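- Recommended Popular AI Writing Assistants [Issue 1]

量子星澜

ERNIE Bot, AI writing, chatgpt

星游 AI Writing Assistant: artificial intelligence is widely used in modern technology, covering many fields, including but not limited to the following: 1. Speech recognition and natural language processing: AI technology is widely used in speech recognition and NLP, for example in intelligent assistants, translation systems and voice interaction systems. 2. Machine learning and data analysis: machine learning algorithms are used for data analysis, predictive modeling, personalized recommendations and more, helping businesses make more accurate decisions. 3. Computer vision: AI applications in computer vision include image recognition, vi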

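- Beginner Village: Linear Regression - Hands-On - Boston Housing Price Prediction

嘉羽很烦

machine learning, linear regression, algorithms, regression

Beginner Village: Linear Regression - Hands-On - Boston Housing Price Prediction. Prerequisite: read "Beginner Village: Linear Regression" to understand the related concepts. Objectives: 1. Become familiar with the general machine learning workflow; 2. Master basic data processing methods; 3. Understand commonly used regression algorithms. Teaching example: predicting house prices (using the Boston housing dataset). In this experiment you will use the real Boston housing dataset to build a house price prediction model and learn several important concepts and evaluation methods in machine learning. Build a regression model with machine learning, i.e.: Y = θ0 + θ1×X1 + θ2×X2 + θ3×X3 + ⋯ + θ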
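The model Y = θ0 + θ1×X1 + θ2×X2 + ⋯ can be fitted by ordinary least squares. As a dependency-free sketch (not the article's code, which presumably uses a library such as scikit-learn), the normal equation (XᵀX)θ = Xᵀy is solved here by Gauss-Jordan elimination. The toy data are generated from Y = 1 + 2·X1 + 3·X2, so the expected coefficients are known in advance. The function names are illustrative.

```python
def fit_linear_regression(X, y):
    """Ordinary least squares for y ≈ θ0 + θ1*x1 + ... + θn*xn.

    Solves the normal equation (XᵀX)θ = Xᵀy with Gauss-Jordan elimination.
    X: list of feature tuples, y: list of targets. Returns [θ0, θ1, ..., θn].
    """
    rows = [[1.0] + [float(v) for v in x] for x in X]  # leading 1.0 is the bias column for θ0
    p = len(rows[0])
    # Augmented matrix [XᵀX | Xᵀy]
    A = [
        [sum(r[i] * r[j] for r in rows) for j in range(p)]
        + [sum(r[i] * t for r, t in zip(rows, y))]
        for i in range(p)
    ]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))  # partial pivoting
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("XᵀX is singular (collinear features or too few samples)")
        A[col], A[pivot] = A[pivot], A[col]
        lead = A[col][col]
        A[col] = [v / lead for v in A[col]]
        for r in range(p):
            if r != col:
                factor = A[r][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][p] for i in range(p)]

def predict(theta, x):
    """Evaluate θ0 + Σ θi * xi for one sample."""
    return theta[0] + sum(t * v for t, v in zip(theta[1:], x))

if __name__ == "__main__":
    X = [(0, 1), (1, 0), (2, 2), (3, 1), (4, 3)]
    y = [1 + 2 * a + 3 * b for a, b in X]  # noiseless toy targets
    theta = fit_linear_regression(X, y)
    print(theta, predict(theta, (5, 5)))
```

For many features or ill-conditioned data, library solvers based on QR or SVD decompositions are numerically safer than forming XᵀX explicitly.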

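- [Unlocking Machine Learning: Exploring Its Mathematical Foundations]

游戏乐趣

machine learning, artificial intelligence

Exploring the mathematical foundations of machine learning. In today's digital era, machine learning is undoubtedly one of the most influential and promising areas of technology. From image recognition to natural language processing, from recommendation systems to autonomous driving, machine learning is everywhere and is profoundly changing how we live and work. Yet behind this seemingly magical technology, mathematics is the solid theoretical foundation and plays an indispensable role. It is no exaggeration to say that mathematics is the key that opens the door to machine learning and the core of understanding and mastering its algorithms and models. Imagine machine learning as a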

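- Machine Learning: Regularization, Underfitting, Overfitting and Learning Curves

代码的建筑师

study notes, machine learning, learning curves, overfitting, underfitting, regularization

Overfitting: a state in which the model can only fit the training data, i.e., it has been over-trained. Ways to avoid overfitting: ① increase the total amount of training data (the most effective way); ② use a simpler model (a model that is too simple learns too little, while one that is too complex learns too much; "simple" here means not overly complex); ③ regularization (add a regularization term to the objective function): regularization means minimizing "objective function + regularization term", which keeps the parameters from growing too large (smaller parameter values mean a smaller effect on the objective function). λ is the regularization parameter, which represents the strength of the regular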
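A one-parameter example shows how the penalty term shrinks parameters. Assume a single weight w with no intercept (an illustrative simplification, not the article's setup) and the L2 (ridge) objective J(w) = Σ(yᵢ − w·xᵢ)² + λw². Setting dJ/dw = −2Σxᵢ(yᵢ − w·xᵢ) + 2λw = 0 gives w = Σxᵢyᵢ / (Σxᵢ² + λ). A larger λ therefore pulls w toward 0.

```python
def ridge_objective(w, xs, ys, lam):
    """Sum of squared errors plus the L2 penalty lam * w**2."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) + lam * w * w

def ridge_slope(xs, ys, lam):
    """Closed-form minimiser of ridge_objective for a single weight (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

if __name__ == "__main__":
    xs, ys = [1, 2, 3], [2, 4, 6]  # toy data lying exactly on y = 2x
    for lam in (0, 1, 14, 100):
        w = ridge_slope(xs, ys, lam)
        print(f"lambda={lam:>3}  w={w:.3f}  objective={ridge_objective(w, xs, ys, lam):.3f}")
```

With λ = 0 the fit recovers w = 2 exactly. With λ = 14 (equal to Σxᵢ² here) the weight is halved to 1, trading a little training error for a smaller, more conservative parameter.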

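- From Overfitting to Reinforcement Learning: A Complete Guide to Core Machine Learning Knowledge

吴师兄大模型

machine learning from zero to expert, machine learning, artificial intelligence, overfitting, reinforcement learning, python, LLM, scikit-learn

LangChain series contents: 01 - Mastering LangChain: A Complete Guide from Model Calls to Prompt Templates and Output Parsing; 02 - Mastering the LangChain Memory Module: Four Memory Types Explained, with Full Coverage of Use Cases; 03 - Fully Mastering LangChain: A Hands-On Guide from Building Core Chains to Dynamic Task Allocation; 04 - Mastering LangChain: End-to-End Practice from Document Loading to Building an Efficient Q&A System; 05 - Mastering LangChain: Three Efficient Methods for In-Depth Evaluation of Q&A Systems (Example Generation, Man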

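- Optimizing Support Vector Regression (SVR) Hyperparameters with Bayesian Optimization (BO) in MATLAB

是内啡肽耶

algorithms, matlab, support vector machine, machine learning, regression

[Introduction] In machine learning modeling, the performance of a support vector machine (SVM) regression model depends heavily on its hyperparameters. Manual tuning is like looking for a needle in a haystack, and grid search runs into a combinatorial explosion of computation. Today I'd like to introduce a smarter tuning technique: Bayesian optimization. Implemented in MATLAB, it can improve model performance automatically in just a few minutes! I. Why use Bayesian optimization for tuning? Three pain points of traditional tuning: the C parameter (the penalty parameter, inversely related to regularization strength): too large leads to overfitting, too small weakens the model (underfitting); the ε parameter (insensitive zone): determines the tolerance for prediction errors; kernel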

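- Is Causal Inference the Next Frontier of Machine Learning? Exploring Future Directions for Machine Learning!

真智AI

artificial intelligence, machine learning

The evolution of machine learning: from prediction to causal inference. With its powerful predictive ability, machine learning has transformed many industries. To achieve real breakthroughs, however, it still needs to overcome practical and computational challenges, especially in applying causal inference. In the future, causal inference may become a new frontier driving the development of machine learning. What is causal inference, and how does it relate to machine learning? If, like me, you don't have a math background, you may wonder what "causal inference" actually means and what it has to do with machine learning. When I first started learning machine learning and first heard "causal inf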

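- An In-Depth Analysis of the Architecture and Functional Evolution of System Information from LTE-A to 5G

罗博深

Summary: System information is the core of information exchange between the UE and the network in mobile communication networks, covering network status, service information and system configuration. The article analyzes in depth the composition, role and evolution of system information from 4G LTE-A to 5G networks, including the functions and contents of the MIB and SIBs. It also covers 5G's optimizations of system information and the new technologies it introduces, such as dynamic scheduling, network slicing and parameter configuration specific to IoT devices. 5G system information also achieves intelligent distribution through machine learning and big data analytics, enhancing network flexibility and intelligen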

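- A New Approach to Constrained Multi-Objective Optimization: An In-Depth Look at the MOEA/D-DAE Algorithm

木子算法

multi-objective optimization, artificial intelligence, algorithms

A new approach to constrained multi-objective optimization: an in-depth look at the MOEA/D-DAE algorithm. Constrained multi-objective optimization problems (CMOPs) are widespread in engineering optimization, machine learning and many other fields. Traditional methods often stall on such problems because the feasible region is disconnected or because they get trapped in local minima of constraint violation. Recently, a paper in IEEE Transactions on Evolutionary Computation proposed a novel solution, the MOEA/D-DAE algorithm, which combines a detect-and-escape (DAE) strategy with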

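- Python AI Hands-On Examples

2401_86114612

pygame, python, java

Hello everyone! Today we're sharing some small examples of AI programming in Python (from a collection of 100 Python AI examples); let's explore them together! 1. Background. Overview: in this century humanity has entered the digital age, which has brought many other industries into the digital realm as well, including the game industry. The boom in the game industry helped drive the development of machine learning: computers can efficiently simulate human learning and decision-making and keep enhancing human capabilities. AI in the gaming field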

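- 6 Real-World Cases of Python in Artificial Intelligence

Solomon_肖哥弹架构

artificial intelligence, machine learning, python

Python, a powerful and easy-to-learn programming language, is widely used in artificial intelligence (AI). From machine learning to deep learning, from natural language processing to computer vision, Python provides rich libraries and frameworks that let developers quickly build all kinds of AI applications. Through several real-world cases, this article demonstrates Python's capabilities and prospects in AI. II. Case 1: Handwritten digit recognition (MNIST). 1. Background: handwritten digit recognition is a classic introductory machine learning project; the MNIST dataset contains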

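- A Sentiment Polarity Analysis Method Based on AI Algorithms

程序员奇奇

computer science graduation project, artificial intelligence, algorithms

Full code: https://download.csdn.net/download/pythonyanyan/87430621. Background: current sentiment polarity analysis methods fall into two categories: lexicon-based methods and machine learning methods, such as machine learning on large-scale corpora. The former requires an annotated sentiment lexicon. There are many English lexicons; for Chinese the main ones are the HowNet sentiment lexicon and NTUSD, released by National Taiwan University, as well as one from the Information Retrieval Research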
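The lexicon-based approach can be sketched as "sum the polarity of every known word, flipping the sign after a negator". The tiny English lexicon and negator set below are hypothetical stand-ins for resources such as HowNet or NTUSD. Real Chinese text would first need word segmentation (for example with a segmenter such as jieba), and the article's own method may weight words differently.

```python
def lexicon_sentiment(tokens, lexicon, negators=frozenset({"not", "never", "no"})):
    """Score a tokenized sentence with a polarity lexicon.

    Each known word adds its polarity; a negator directly before it flips the sign.
    Returns (score, label).
    """
    score = 0
    for i, tok in enumerate(tokens):
        polarity = lexicon.get(tok, 0)
        if polarity and i > 0 and tokens[i - 1] in negators:
            polarity = -polarity  # "not good" counts as negative
        score += polarity
    label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
    return score, label

if __name__ == "__main__":
    toy_lexicon = {"good": 1, "great": 2, "bad": -1}  # hypothetical polarity weights
    print(lexicon_sentiment("the food is not good".split(), toy_lexicon))
    print(lexicon_sentiment("great service but bad parking".split(), toy_lexicon))
```

This shows the main weakness of pure lexicon methods: every rule (negation window, intensifiers such as "very", contrast words such as "but") must be hand-written. Machine learning methods instead learn such patterns from labeled corpora.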

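- Machine Learning Algorithms in Practice: Weather Data Analysis (source code on my homepage)

喵了个AI

machine learning in practice, machine learning, algorithms, data analysis

1. Introduction: weather data analysis is an important research direction at the intersection of meteorology and data science. With the development of big data technology, our ability to collect, store and analyze meteorological data has improved significantly. Applying machine learning algorithms to weather data can not only improve the accuracy of weather forecasts but also provide strong support for climate research, disaster warning and more. This article introduces applications of machine learning in weather data analysis and explores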

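- New Features in Spring 4.1: Spring MVC Enhancements

jinnianshilongnian

spring 4.1

Contents

New Features in Spring 4.1: Overview

New Features in Spring 4.1: Spring Core and Other Parts

New Features in Spring 4.1: Spring Cache Framework Enhancements

New Features in Spring 4.1: Exception Handling for Asynchronous Calls and the Event Mechanism

New Features in Spring 4.1: Database Script Initialization for Integration Tests

New Features in Spring 4.1: Spring MVC Enhancements

New Features in Spring 4.1: Page Automation Testing Framework Spring MVC T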

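- MySQL Query Performance Optimization

annan211

java, sql, optimization, mysql, application server

1 Where does the time actually go?

When executing a query, MySQL performs a series of subtasks that make up the most important stages of the query lifecycle. These include many calls to the storage engine to retrieve rows, plus processing of the data after those calls, such as sorting and grouping. While completing these tasks, the query spends time in different places, including the network, CPU computation, generating statistics and execution plans, and lock waits, and above all in the calls to the underlying storage engine to retrieve data. These calls require in-memory oper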

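- Windows System Configuration

cherishLC

windows

Delete hiberfil.sys: turn off hibernation with the command powercfg -h off:

http://jingyan.baidu.com/article/f3ad7d0fc0992e09c2345b51.html

A similar file is pagefile.sys.

msconfig: configure startup items

shutdown: schedule a shutdown

ipconfig: view the network configuration

ipconfig /flushdns: flush the DNS resolver cache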

- The Body's Detox Timetable

Array_06

work

========================

|| When does the body detox? ||

========================

Reposted from:

http://zhidao.baidu.com/link?url=ibaGlicVslAQhVdWWVevU4TMjhiKaNBWCpZ1NS6igCQ78EkNJZFsEjCjl3T5EdXU9SaPg04bh8MbY1bR

- ZooKeeper

cugfy

zookeeper

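ZooKeeper is a high-performance, distributed, open-source coordination service for distributed applications. It provides simple primitives on which distributed applications can build higher-level services such as synchronization, configuration management, cluster management and naming. It is designed to be easy to program against and uses a file-system-like directory tree as its data model. The server runs on Java, and both Java and C client APIs are provided. ZooKeeper is an open-source implementation of Google's Chubby and is a highly available, reliable coordination system; ZooKeeper can be used for lea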

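- Handling Garbled Text in Web Crawlers

随意而生

crawler, network

Below is a brief summary of how to handle garbled text in web crawlers. This covers not only garbled Chinese but also Japanese, Korean, Russian, Tibetan and other scripts; the solution is the same for all of them, so they are explained together here. For a web crawler there are two options: use an existing crawler such as Nutch or Heritrix, or write your own. The principle for handling garbled text is the same in both cases. With the former, however, you must understand the source code before you can modify it, which takes more effort, while the latter is more flexible and convenient and lets you handle encoding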
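Whatever the crawler, garbled text usually comes from decoding page bytes with the wrong charset. A common strategy, sketched below as an illustration rather than the author's code, tries the charset sources in order: the `charset` parameter of the HTTP `Content-Type` header, then a `<meta ... charset=...>` declaration near the top of the HTML, then fallback codecs. The function name and fallback list are assumptions. The order matters: a permissive codec such as latin-1 never raises an error, so it must never come before the real candidates.

```python
import re

_HEADER_CHARSET = re.compile(r'charset=["\']?([A-Za-z0-9_\-]+)', re.IGNORECASE)
_META_CHARSET = re.compile(rb'<meta[^>]+charset=["\']?([A-Za-z0-9_\-]+)', re.IGNORECASE)

def decode_page(body: bytes, content_type: str = "") -> str:
    """Decode raw page bytes using header charset, then <meta> charset, then fallbacks."""
    candidates = []
    m = _HEADER_CHARSET.search(content_type)
    if m:
        candidates.append(m.group(1))                  # e.g. "text/html; charset=GBK"
    m = _META_CHARSET.search(body[:4096])              # declarations sit near the top
    if m:
        candidates.append(m.group(1).decode("ascii"))
    candidates += ["utf-8", "gb18030"]                 # assumed fallbacks for Chinese sites
    for enc in candidates:
        try:
            return body.decode(enc)
        except (LookupError, UnicodeDecodeError):      # unknown codec name or wrong guess
            continue
    return body.decode("utf-8", errors="replace")      # last resort: keep going, mark bad bytes

if __name__ == "__main__":
    page = b'<html><head><meta charset="gbk"></head><body>' + "中文".encode("gbk") + b"</body></html>"
    print(decode_page(page))
```

Extend the fallback list for other scripts, for example `shift_jis` or `euc_kr`, according to the sites being crawled.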

- Common Xcode Keyboard Shortcuts

张亚雄

xcode

1. Summary of commonly used commands:

Hide Xcode: command+h

Quit Xcode: command+q

Close window: command+w

Close all windows: command+option+w

Close current

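- MongoDB Index Operations

adminjun

mongodb, index

I. Index basics: MongoDB indexes are almost identical to those in traditional relational databases, and so are some of the basic optimization techniques. The command to create an index is: > db.test.ensureIndex({"username":1}) (since MongoDB 3.0, db.collection.createIndex() is the preferred equivalent). You can check whether the index was created successfully with the following command: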

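- Stories from My Internship at Chengdu Software Park

aijuans

Chengdu, software park, internship

Out of boredom I flipped through my blog and found that my last post was from a long time ago. Guilty~~

It has been almost a year since I, on and off, left school. I was used to that environment where we grew up day by day out of our naivety, and coming here felt a bit like being cut off from the world. Fortunately, that was only my first impression; now I think it is quite good here. In any case, I am most grateful to my teacher for giving me such a good opportunity to grow faster. Here I have truly seen many, many things, both expected and unexpected.

People say the most obvious difference between the outside world and school is that getting along with people is harder, because outside everyone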

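- Installing and Configuring an FTP Server on Linux

ayaoxinchao

linux, FTP server, vsftp

Check whether FTP is installed

[root@localhost ~]# rpm -q vsftpd

If it is not installed, the output is "package vsftpd is not installed"; if it is installed, version information similar to vsftpd-2.0.5-28.el5 is shown.

Install FTP

Run the yum install vsftpd command, e.g. [root@localhost ~]# yum install vsf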

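- Getting Document IDs and Finding Documents with mongo-java-driver

BigBird2012

driver

Note: all code in this article uses mongo-java-driver.

In MongoDB, a collection is conceptually similar to a table in an SQL database, and it contains a series of documents. A DBObject represents a document we want to add to a collection. MongoDB automatically adds an _id to every document we create; this _id is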

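- JSONObject and JSON Strings

bijian1013

json, JSONObject

I. Introduction to the JAR packages

To run the program you must include the JSON-lib package, which in turn depends on the following JAR packages:

1. commons-lang-2.0.jar

2. commons-beanutils-1.7.0.jar

3. commons-collections-3.1.jar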

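- [ZooKeeper Study Notes 3] The Asynchronous Nature of ZooKeeper Instance Creation and Session Establishment

bit1129

zookeeper

To illustrate the problem, let's look at a simple piece of code: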

import org.apache.zookeeper.*;

import java.io.IOException;

import java.util.concurrent.CountDownLatch;

import java.util.concurrent.ThreadLocal

- [Scala 12] Scala Core 6: Trait

bit1129

scala

Traits are a fundamental unit of code reuse in Scala. A trait encapsulates method and field definitions, which can then be reused by mixing them into classes. Unlike class inheritance, in which each c

- Cracking WebLogic Version 10.3

ronin47

weblogic

Version: WebLogic Server 10.3

Note: %DOMAIN_HOME% refers to the WebLogic Server domain directory

For example, the root directory of the domain I use for testing is DOMAIN_HOME=D:/Weblogic/Middleware/user_projects/domains/base_domain

1. To be safe, back up %DOMAIN_HOME%/security/Defa

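- Finding the nth Fibonacci Number

BrokenDreams

Today I saw a question posted in a group chat: write a small program that prints the nth Fibonacci number.

I tried it myself and it took quite a while... I need to strengthen my fundamentals.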
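A straightforward solution avoids the exponential cost of naive recursion by iterating and keeping only the last two values. The original post's code is not shown, so this is a sketch. It assumes the common indexing convention fib(0) = 0, fib(1) = 1.

```python
def fib(n: int) -> int:
    """Return the n-th Fibonacci number, with fib(0) = 0 and fib(1) = 1 (assumed convention)."""
    if n < 0:
        raise ValueError("n must be non-negative")
    a, b = 0, 1          # invariant: a = fib(i), b = fib(i + 1)
    for _ in range(n):
        a, b = b, a + b  # advance one step; O(n) time, O(1) extra space
    return a

if __name__ == "__main__":
    print([fib(i) for i in range(10)])
```

Python integers are arbitrary-precision, so large n does not overflow. In Java or C the same loop needs `long` or `BigInteger` once n exceeds roughly 46 for 32-bit or 92 for 64-bit signed integers.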

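- Reading 《研磨设计模式》: Code Notes - Visitor Pattern (Visitor)

bylijinnan

java, design patterns

Disclaimer: this post is only for my own reference and understanding. For detailed analysis and source code, please see the original author's blog: http://chjavach.iteye.com/

import java.util.ArrayList;

import java.util.List;

interface IVisitor {

// Second dispatch: the Visitor calls back into the Element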

void visitConcret
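The Java excerpt above breaks off mid-declaration. Here is a minimal Python sketch of the same double-dispatch structure. `element.accept(visitor)` is the first dispatch, on the element's type, and the callback `visitor.visit_xxx(self)` is the second, on the visitor's type. The class names `Book`, `Fruit`, `PriceVisitor`, `LabelVisitor` and `ObjectStructure` are hypothetical and are not taken from the book.

```python
from abc import ABC, abstractmethod

class Visitor(ABC):
    @abstractmethod
    def visit_book(self, book): ...
    @abstractmethod
    def visit_fruit(self, fruit): ...

class Element(ABC):
    @abstractmethod
    def accept(self, visitor): ...

class Book(Element):
    def __init__(self, price):
        self.price = price
    def accept(self, visitor):
        # First dispatch selected Book.accept; second dispatch selects visit_book.
        return visitor.visit_book(self)

class Fruit(Element):
    def __init__(self, price_per_kg, kg):
        self.price_per_kg, self.kg = price_per_kg, kg
    def accept(self, visitor):
        return visitor.visit_fruit(self)

class PriceVisitor(Visitor):
    """One operation: compute a price for each element type."""
    def visit_book(self, book):
        return book.price
    def visit_fruit(self, fruit):
        return fruit.price_per_kg * fruit.kg

class LabelVisitor(Visitor):
    """A second operation added without touching Book or Fruit."""
    def visit_book(self, book):
        return f"book: {book.price}"
    def visit_fruit(self, fruit):
        return f"fruit: {fruit.kg} kg"

class ObjectStructure:
    """Holds the elements and lets a visitor walk over all of them."""
    def __init__(self):
        self.elements = []
    def attach(self, element):
        self.elements.append(element)
    def handle(self, visitor):
        return [e.accept(visitor) for e in self.elements]

if __name__ == "__main__":
    cart = ObjectStructure()
    cart.attach(Book(30))
    cart.attach(Fruit(4, 2.5))
    print(cart.handle(PriceVisitor()), cart.handle(LabelVisitor()))
```

This shows the pattern's trade-off. New operations (visitors) are cheap to add. A new element type, however, forces a change to every visitor interface and implementation.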

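- Converting MatConvNet Exercise 3 to Use a Network Configuration File

cherishLC

matlab

MatConvNet is a convolutional neural network toolbox for MATLAB written by the author of VLFeat; it can use the GPU.

Homepage:

http://www.vlfeat.org/matconvnet/

Tutorial:

http://www.robots.ox.ac.uk/~vgg/practicals/cnn/index.html

Note: you need to download a newer version of MatConvNet and use it to replace the matconvnet bundled with the tutorial's toolkit: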

http

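- ZK Timeout Revisited

chenchao051

zookeeper, timeout, hbase

The article at http://crazyjvm.iteye.com/blog/1693757 discusses related timeout issues, but I ran into another problem: I set both min and max to 180000, yet the following exception still appeared: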

Client session timed out, have not heard from server in 154339ms for sessionid 0x13a3f7732340003

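- Introduction to CASE WHEN Usage

daizj

sql, group by, case when

Introduction to CASE WHEN Usage

1. The CASE WHEN expression comes in two forms

--Simple CASE expression

CASE sex

WHEN '1' THEN '男' -- male

WHEN '2' THEN '女' -- female

ELSE '其他' END -- other

--Searched CASE expression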

CASE

WHEN sex = '1' THEN
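The excerpt breaks off partway through the searched form. The sketch below runs both forms, plus CASE used as a GROUP BY key, against an in-memory database via Python's built-in `sqlite3` module. sqlite3 is chosen only because it needs no server. The `users` table and its rows are hypothetical, and the labels are English rather than the original's '男'/'女'/'其他'. Both CASE forms are standard SQL and behave the same way in MySQL.

```python
import sqlite3

# Hypothetical table: sex is stored as a code ('1', '2', anything else).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, sex TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [("Li", "1"), ("Wang", "2"), ("Zhao", "9"), ("Zhou", "1")])

# Form 1: simple CASE compares one expression against constant values.
SIMPLE_CASE = """
    SELECT name,
           CASE sex WHEN '1' THEN 'male' WHEN '2' THEN 'female' ELSE 'other' END
    FROM users ORDER BY name
"""

# Form 2: searched CASE evaluates an arbitrary boolean condition in each branch.
SEARCHED_CASE = """
    SELECT name,
           CASE WHEN sex = '1' THEN 'male' WHEN sex = '2' THEN 'female' ELSE 'other' END
    FROM users ORDER BY name
"""

# CASE as a grouping key: count each category in a single GROUP BY pass.
COUNT_BY_LABEL = """
    SELECT CASE WHEN sex = '1' THEN 'male' WHEN sex = '2' THEN 'female' ELSE 'other' END AS label,
           COUNT(*)
    FROM users GROUP BY label ORDER BY label
"""

simple_rows = conn.execute(SIMPLE_CASE).fetchall()
searched_rows = conn.execute(SEARCHED_CASE).fetchall()
counts = conn.execute(COUNT_BY_LABEL).fetchall()
print(simple_rows)
print(counts)
```

Use the simple form when every branch tests equality against the same column. Use the searched form when branches need ranges, `IS NULL` checks or conditions on different columns.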

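- PHP Tips Roundup: 53 Tips for Improving PHP Performance

dcj3sjt126com

PHP

PHP Tips Roundup: 53 Tips for Improving PHP Performance. Use single quotes instead of double quotes to enclose strings; this is a little faster, because PHP searches for variables inside double-quoted strings but not inside single-quoted ones. Note: only echo can do this; it is a "function" that can take multiple strings as arguments (translator's note: the PHP manual says echo is a language construct, not a real function, hence the quotation marks around "function"). 1. If a class method can be defined as static, define it as static; it will be nearly 4 times faster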

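- How to Use CGridView in the Yii Framework, with Detailed Examples

dcj3sjt126com

yii

CGridView displays a list of data items in a table.

Each row in the table represents one data item, and a column usually represents an attribute of the item (some columns may correspond to complex expressions or static text). CGridView supports both sorting and pagination of the data items, either in AJAX mode or through normal page requests. One benefit of CGridView is that when the user's browser has JavaScript disabled, sorting and pagination automatically degrade to normal page requests and still work correctly.

Example code: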

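- Packaging a Maven Project as an Executable JAR

dyy_gusi

assembly

Packaging a Maven Project as an Executable JAR

After building a project with Maven, if you need to package it as an executable JAR, exporting it from Eclipse is cumbersome: you have to specify the entry point and declare the dependent JARs. Since we are already using Maven, and one of its main purposes is to make such tedious operations simple, we can let a plugin do the work: the assembly plugin. Here is how to use it:

1. Add the plugin to the project: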

<plugin>

- Common PHP Errors

geeksun

PHP

1. kevent() reported that connect() failed (61: Connection refused) while connecting to upstream, client: 127.0.0.1, server: localhost, request: "GET / HTTP/1.1", upstream: "fastc

- Changing a Linux Username

hongtoushizi

linuxchange password

Change Linux Username

To change a Linux username, you need to modify 4 system files:

/etc/passwd

/etc/shadow

/etc/group

/etc/gshadow

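The old, traditional way is to edit them directly with vi, but this carries security risks (search for the details yourself), so these commands are now used instead: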

vipw

vipw -s

vigr

vigr -s

The specific sequence of

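- Chapter 5: Common Lua Development Libraries 1: Redis, MySQL and HTTP Clients

jinnianshilongnian

nginx, lua

For development, a good ecosystem of libraries helps us develop quickly, and Lua has most of the third-party libraries we need, such as Redis, Memcached, MySQL, HTTP clients, JSON and template engines.

Some common Lua libraries can be found by searching GitHub: https://github.com/search?utf8=%E2%9C%93&q=lua+resty.

Redis client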

lua-resty-r

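- Implementing the Watch Mechanism with zkClient

liyonghui160com

zkClient, watch mechanism, implementation

Implementing business features directly with the ZooKeeper API is quite cumbersome, because you have to handle exceptions such as session loss and session expiration and reconnect after they occur. Also, ZooKeeper watchers are one-shot, so if you want to build a publish/subscribe pattern on top of watchers, you have to wrap them yourself, turning one-time subscriptions into persistent ones. In addition, if you want higher-level abstractions such as distributed locks and leader election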

- SQL Statements for Finding a Table Name or Column Name Among Many MySQL Tables

pda158

mysql

SQL statements for finding a table name or column name among many MySQL tables:

Method 1: SELECT table_name, column_name from information_schema.columns WHERE column_name LIKE 'Name';

Method 2: SELECT column_name from information_schema.colum

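- Programmers' Dependence on English

Smile.zeng

English, programmers

1. The most basic skill of a programmer is at least being able to write code. When we grumble over which English word to use while creating a class, the gap between our English and other people's shows immediately.

2. At the very least, a programmer should recognize the English words in development tools; otherwise, how would we know how to use them?

3. One step further is being able to read other people's code, which helps us learn their ideas and techniques.

4. The programs we write should be reasonably readable, so that at least other people can understand them...

The points above show just how important English is to programmers. Take note, young folks!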

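- Oracle Study Notes (8): Writing Triggers with PL/SQL

vipbooks

oracle, sql, programming, activities, Access

Time flies! In the blink of an eye we have reached the last chapter of my Oracle study notes. After the previous seven chapters you should have some understanding of Oracle programming. This stuff is quickly forgotten if you don't use it for a while, so I keep detailed notes on what I've learned that I can look up at any time and get started with right away. I hope these notes help you!

These are the study notes for Chapter 8. After finishing Chapter 7 on subprograms and packages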

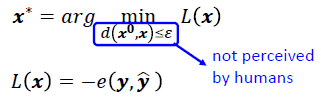

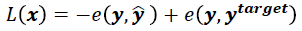

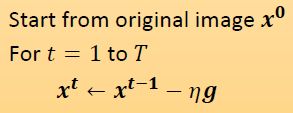

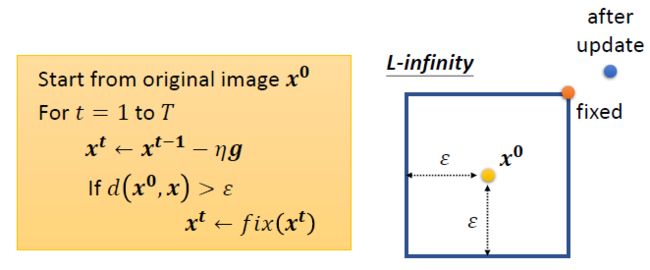

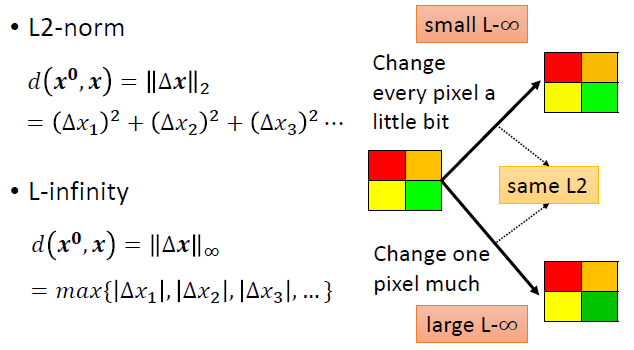

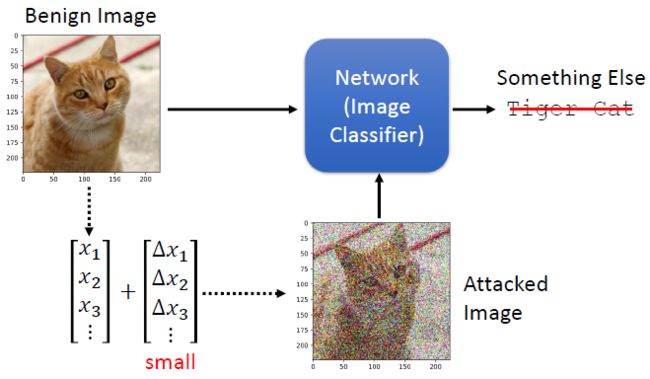

This leads to the following optimization objective (the constraint $d(x^0, x) \leq \varepsilon$ ensures that the added noise is imperceptible to the human eye; $e$ can be the cross entropy):
