Implementing DenseBlock in PyTorch

1. Introduction

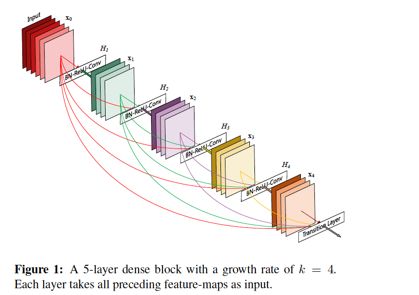

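DenseBlock is a core building block of DenseNet. The figure above shows a DenseBlock module excerpted from the DenseNet network.

The main idea: each layer takes the feature maps of all preceding layers as its input, and its own feature maps become input to every subsequent layer, so the layers are fully interconnected. The feature maps extracted by any layer remain available to all layers after it.

Advantages: 1. alleviates vanishing gradients; 2. strengthens feature propagation; 3. reduces the number of parameters.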
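To make the connectivity pattern concrete, here is a small sketch of how the channel count grows inside one block. The helper name `dense_block_channels` and the numbers (64 input channels, growth rate 32, 6 layers) are illustrative assumptions, not values taken from this post.

```python
def dense_block_channels(num_input_features, growth_rate, num_layers):
    """Return (input channels seen by each layer, output channels of the block)."""
    # Layer i sees the block input plus the growth_rate channels
    # produced by each of the i layers before it.
    per_layer_inputs = [num_input_features + i * growth_rate
                        for i in range(num_layers)]
    # After the last layer, every layer's output has been appended once.
    block_output = num_input_features + num_layers * growth_rate
    return per_layer_inputs, block_output


inputs, out_channels = dense_block_channels(
    num_input_features=64, growth_rate=32, num_layers=6)
print(inputs)        # [64, 96, 128, 160, 192, 224]
print(out_channels)  # 256
```

Each layer only adds `growth_rate` new channels, which is why the input width grows linearly with depth rather than multiplying.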

2. Code example 1

    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class _DenseLayer(nn.Sequential):
        """Inner layer of a DenseBlock: BN + ReLU + 1x1 Conv + BN + ReLU + 3x3 Conv."""

        def __init__(self, num_input_features, growth_rate, bn_size, drop_rate):
            super(_DenseLayer, self).__init__()
            # Bottleneck: the 1x1 conv produces bn_size * growth_rate channels
            self.add_module("norm1", nn.BatchNorm2d(num_input_features))
            self.add_module("relu1", nn.ReLU(inplace=True))
            self.add_module("conv1", nn.Conv2d(num_input_features, bn_size * growth_rate,
                                               kernel_size=1, stride=1, bias=False))
            # The 3x3 conv produces the growth_rate new feature maps
            self.add_module("norm2", nn.BatchNorm2d(bn_size * growth_rate))
            self.add_module("relu2", nn.ReLU(inplace=True))
            self.add_module("conv2", nn.Conv2d(bn_size * growth_rate, growth_rate,
                                               kernel_size=3, stride=1, padding=1, bias=False))
            self.drop_rate = drop_rate

        def forward(self, x):
            new_features = super(_DenseLayer, self).forward(x)
            if self.drop_rate > 0:
                new_features = F.dropout(new_features, p=self.drop_rate, training=self.training)
            # Concatenate the input with the new features along the channel dimension
            return torch.cat([x, new_features], 1)


    # DenseBlock: densely connected layers (the number of input features grows linearly)
    class _DenseBlock(nn.Sequential):
        """DenseBlock"""

        def __init__(self, num_layers, num_input_features, bn_size, growth_rate, drop_rate):
            super(_DenseBlock, self).__init__()
            for i in range(num_layers):
                layer = _DenseLayer(num_input_features + i * growth_rate, growth_rate, bn_size,
                                    drop_rate)
                self.add_module("denselayer%d" % (i + 1,), layer)

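The key point is in _DenseLayer: forward returns the layer's input concatenated with the newly extracted features, not the new features alone. Stacking such layers in sequence is what produces the dense connectivity.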
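The effect of returning the concatenation can be checked with a quick shape test. The sketch below uses a deliberately simplified layer (BN + ReLU + 3x3 Conv only, without the bottleneck or dropout); the names `DenseLayer` and `make_dense_block` and the tensor sizes are assumptions made for this illustration.

```python
import torch
import torch.nn as nn


class DenseLayer(nn.Module):
    """Simplified layer: BN + ReLU + 3x3 conv, then concatenate with the input."""

    def __init__(self, in_channels, growth_rate):
        super().__init__()
        self.body = nn.Sequential(
            nn.BatchNorm2d(in_channels),
            nn.ReLU(),
            nn.Conv2d(in_channels, growth_rate, kernel_size=3, padding=1, bias=False),
        )

    def forward(self, x):
        # Keep the input and append growth_rate new channels.
        return torch.cat([x, self.body(x)], dim=1)


def make_dense_block(num_layers, in_channels, growth_rate):
    # Layer i receives in_channels + i * growth_rate channels.
    layers = [DenseLayer(in_channels + i * growth_rate, growth_rate)
              for i in range(num_layers)]
    return nn.Sequential(*layers)


block = make_dense_block(num_layers=4, in_channels=16, growth_rate=8)
block.eval()
x = torch.randn(2, 16, 32, 32)
with torch.no_grad():
    y = block(x)

print(y.shape)                    # torch.Size([2, 48, 32, 32])
print(torch.equal(y[:, :16], x))  # True: the block input is carried through unchanged
```

The output has 16 + 4 * 8 = 48 channels, and its first 16 channels are exactly the block input, so every later layer really does see the original input alongside all intermediate features.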

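As the figure below shows, the top row depicts the skip connections as implemented in our code, and the bottom row unrolls those skip connections. The result matches the dense block proposed in the paper: the output of every layer is an input to all subsequent layers.

3. Code example 2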

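    import tensorflow as tf

    # TensorFlow version. Both functions are methods of the model class (class
    # definition not shown). Batch_Normalization, Relu, conv_layer, Drop_out and
    # Concatenation are helper functions, and dropout_rate is a module-level
    # variable; all of them are assumed to be defined elsewhere.

    def bottleneck_layer(self, x, scope):
        with tf.name_scope(scope):
            # BN + ReLU + 1x1 conv (4 * filters channels) + dropout
            x = Batch_Normalization(x, training=self.training, scope=scope+'_batch1')
            x = Relu(x)
            x = conv_layer(x, filter=4 * self.filters, kernel=[1,1], layer_name=scope+'_conv1')
            x = Drop_out(x, rate=dropout_rate, training=self.training)

            # BN + ReLU + 3x3 conv (filters channels) + dropout
            x = Batch_Normalization(x, training=self.training, scope=scope+'_batch2')
            x = Relu(x)
            x = conv_layer(x, filter=self.filters, kernel=[3,3], layer_name=scope+'_conv2')
            x = Drop_out(x, rate=dropout_rate, training=self.training)

            return x

    def dense_block(self, input_x, nb_layers, layer_name):
        with tf.name_scope(layer_name):
            # Holds the block input and the output of every layer so far
            layers_concat = list()
            layers_concat.append(input_x)

            x = self.bottleneck_layer(input_x, scope=layer_name + '_bottleN_' + str(0))
            layers_concat.append(x)

            for i in range(nb_layers - 1):
                # Input to this layer: all previous feature maps, concatenated
                x = Concatenation(layers_concat)
                x = self.bottleneck_layer(x, scope=layer_name + '_bottleN_' + str(i + 1))
                layers_concat.append(x)

            x = Concatenation(layers_concat)

            return x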

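Code example 2 uses the Concatenation function. Inside dense_block, before each bottleneck layer is called, all previously stored feature maps are concatenated (not summed) and passed in as the current layer's input, so the input to each layer is the concatenation of the outputs of all preceding layers.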
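The two code examples organize the bookkeeping differently but feed each layer the same input. The following pure-Python sketch illustrates this by tracking channel labels instead of real tensors; the function names and the labels `x0`, `h1`, `h2`, ... are hypothetical and exist only for this illustration.

```python
def run_cumulative(num_layers):
    """Style of code example 1: each layer returns concat(input, new_features)."""
    x = ["x0"]
    seen_inputs = []
    for i in range(num_layers):
        seen_inputs.append(list(x))   # what layer i receives
        x = x + [f"h{i + 1}"]         # mirrors torch.cat([x, new_features], 1)
    return seen_inputs, x


def run_list_based(num_layers):
    """Style of code example 2: store every output, concatenate before each layer."""
    layers_concat = [["x0"]]
    seen_inputs = []
    for i in range(num_layers):
        # Mirrors Concatenation(layers_concat): flatten all stored feature maps.
        x = [c for feat in layers_concat for c in feat]
        seen_inputs.append(x)
        layers_concat.append([f"h{i + 1}"])
    out = [c for feat in layers_concat for c in feat]
    return seen_inputs, out


inputs_a, out_a = run_cumulative(3)
inputs_b, out_b = run_list_based(3)
print(inputs_a)  # [['x0'], ['x0', 'h1'], ['x0', 'h1', 'h2']]
print(out_a)     # ['x0', 'h1', 'h2', 'h3']
print(inputs_a == inputs_b and out_a == out_b)  # True
```

In both styles the third layer receives `x0`, `h1`, and `h2` side by side, which is the dense connectivity described in the introduction.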