【算法】多视图几何三维重建+增量式SfM

多视图几何三维重建的基本原理:

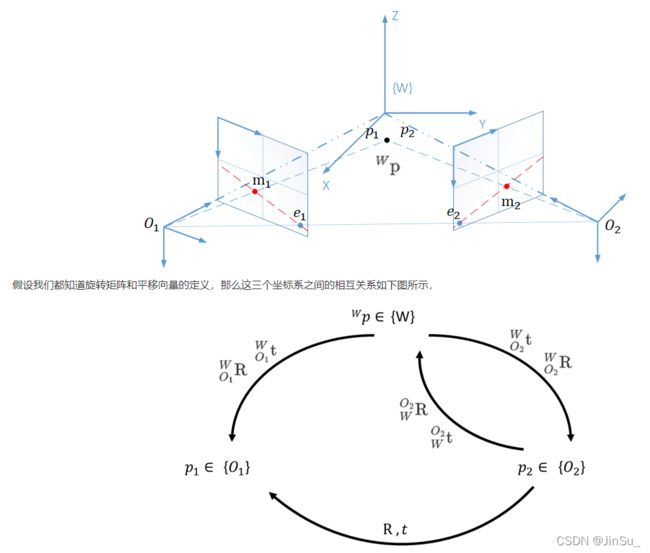

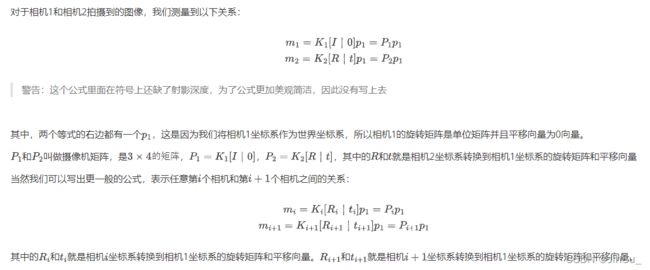

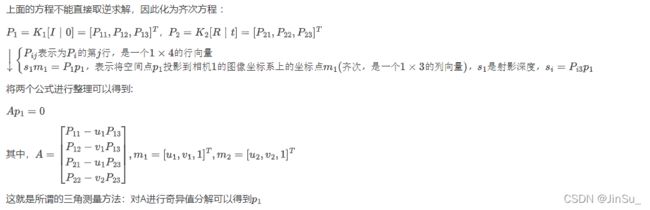

从两个或者多个视点观察同一景物,已获得在多个不同的视角下对景物的多张感知图像,运用三角测量的基本原理计算图像像素间位置偏差,获得景物的三维深度信息,这一个过程与人类观察外面的世界的过程是一样的。

SfM:

SfM的全称为Structure from Motion,即通过相机的移动来确定目标的空间和几何关系,是三维重建的一种常见方法。它只需要普通的RGB摄像头,因此成本更低廉,且受环境约束较小,在室内和室外均能使用。但是,SfM背后需要复杂的理论和算法做支持,在精度和速度上都还有待提高,所以目前成熟的商业应用并不多。

本文将实现一个简易的增量式SfM系统。

书籍:计算机视觉中的多视图几何:原书第2版/(澳)理查德\cdot哈特利 北京:机械工业出版社,2019.8

代码参考:OpenCV实现SfM(二):多目三维重建_arag2009的学习专栏-CSDN博客

数据集来源:MVS Data Set – 2014 | DTU Robot Image Data Sets

对极几何&基础矩阵

特征点提取和匹配

从上面的分析可知,要求取两个相机的相对关系,需要两幅图像中的对应点,这就变成的特征点的提取和匹配问题。

对于图像差别较大的情况,推荐使用SIFT特征,因为SIFT对旋转、尺度、透视都有较好的鲁棒性。(如果差别不大,可以考虑其他更快速的特征,比如SURF、ORB等。 ),然后采用knn最近邻算法进行匹配,距离计算采用欧氏距离。

由于OpenCV将SIFT包含在了扩展部分中,所以官网上下载的版本是没有SIFT的,

为此需要到GitHub - opencv/opencv_contrib: Repository for OpenCV's extra modules下载扩展包opencv_contrib,并按照里面的说明重新编译OpenCV。

编译opencv的时候要通过cmake -DOPENCV_EXTRA_MODULES_PATH将opencv_contrib的module编译进来,一定要保持两者的版本一致

opencv链接:GitHub - opencv/opencv: Open Source Computer Vision Library

重点说明的是,匹配结果往往有很多误匹配,为了排除这些错误,这里使用了Ratio Test方法,即使用KNN算法寻找与该特征最匹配的2个特征,若第一个特征的匹配距离与第二个特征的匹配距离之比小于某一阈值,就接受该匹配,否则视为误匹配。

void match_features(

cv::Mat & query,

cv::Mat & train,

std::vector& matches,

std::vector>& knn_matches

)

{

cv::BFMatcher matcher(cv::NORM_L2);

matcher.knnMatch(query, train, knn_matches, 2);

//获取满足Ratio Test的最小匹配的距离

float min_dist = FLT_MAX;

for (int r = 0; r < knn_matches.size(); ++r)

{

//Ratio Test

if (knn_matches[r][0].distance > 0.6*knn_matches[r][1].distance)

continue;

float dist = knn_matches[r][0].distance;

if (dist < min_dist) min_dist = dist;

}

matches.clear();

for (size_t r = 0; r < knn_matches.size(); ++r)

{

//排除不满足Ratio Test的点和匹配距离过大的点

if (

knn_matches[r][0].distance > 0.6*knn_matches[r][1].distance ||

knn_matches[r][0].distance > 5 * cv::max(min_dist, 10.0f)

)

continue;

//保存匹配点

matches.push_back(knn_matches[r][0]);

}

} 之后可以参考《计算机视觉中的多视图几何》第11章 基本矩阵估计 算法 11.4

std::vector> knn_matches;

match_features(this->leftimage->descriptor, this->rightimage->descriptor, m_matchs,knn_matches);//上文的匹配函数

auto& kp1 = this->leftimage->keypoints;

auto& kp2 = this->rightimage->keypoints;

cv::Mat m_Fundamental;

ransace_refine_matchs(kp1, kp2, m_matchs,m_Fundamental);//这里采用基于RANSAC的基础矩阵估计进行匹配点对的refine

int last_num = 0;

int next_num = 0;

double T = 10;

cv::Mat x1, x2;

cv::Point2f p1, p2;

double d;

int index_kp2;

do {

last_num = m_matchs.size();

std::vector label1(kp1.size(), -1);

std::vector label2(kp2.size(), -1);

for (int i = 0; i < m_matchs.size(); i++) {

label1[m_matchs[i].queryIdx] = 1;

label2[m_matchs[i].trainIdx] = 1;

}

for (int i = 0; i < knn_matches.size(); i++) {

if (label1[i] < 0) {

index_kp2 = knn_matches[i][0].trainIdx;

if (label2[index_kp2] < 0) {

p1 = kp1[knn_matches[i][0].queryIdx].pt;

p2 = kp2[index_kp2].pt;

x1 = (cv::Mat_(3, 1) << p1.x, p1.y, 1);

x2 = (cv::Mat_(1, 3) << p2.x, p2.y, 1);

x1 = x2*m_Fundamental*x1;

d = abs(x1.at(0, 0));

if (d < T) {

m_matchs.push_back(knn_matches[i][0]);

label1[i] = 1;

label2[index_kp2] = 1;

}

}

}

}

ransace_refine_matchs(kp1, kp2, m_matchs,m_Fundamental);//这里采用基于RANSAC的基础矩阵估计进行匹配点对的refine(再一次)

next_num = m_matchs.size();

std::cout <<"loop: "<< next_num - last_num << "\n";

} while (next_num - last_num > 0);//如果匹配点对数目比上一次少则结束循环

其中的基于RANSAC的基础矩阵估计,采用opencv的cv::findFundamentalMat,并设置参数method=cv::FM_RANSAC

void ransace_refine_matchs(

std::vector& kp1, std::vector& kp2,

std::vector& matchs,

cv::Mat& m_Fundamental)

{

std::vector _p1, _p2;

for (int i = 0; i < m_matchs.size(); i++) {

_p1.push_back(kp1[m_matchs[i].queryIdx].pt);

_p2.push_back(kp2[m_matchs[i].trainIdx].pt);

}

std::vector m_RANSACStatus;

m_Fundamental = cv::findFundamentalMat(_p1, _p2, m_RANSACStatus, cv::FM_RANSAC);//

std::vector matchs_copy = matchs;

matchs.clear();

for (int i = 0; i < matchs_copy.size(); ++i) {

if (m_RANSACStatus[i] != 0) matchs.push_back(matchs_copy[i]);

}

} 双视图的三维重建

bool find_transform(cv::Mat & K, std::vector& p1, std::vector& p2, cv::Mat & R, cv::Mat & T, cv::Mat & mask)

{

//根据内参矩阵获取相机的焦距和光心坐标(主点坐标)

double focal_length = 0.5*(K.at(0) + K.at(4));

cv::Point2d principle_point(K.at(2), K.at(5));

std::vector _p1, _p2;

for (int i = 0; i < p1.size(); i++) {

_p1.push_back(p1[i].pt);

_p2.push_back(p2[i].pt);

}

//根据匹配点求取本征矩阵,使用RANSAC,进一步排除失配点

cv::Mat E = cv::findEssentialMat(_p1, _p2, focal_length, principle_point, cv::RANSAC, 0.999, 1.0, mask);

if (E.empty()) return false;

double feasible_count = cv::countNonZero(mask);

std::cout << (int)feasible_count << " -in- " << p1.size() << std::endl;

//对于RANSAC而言,outlier数量大于50%时,结果是不可靠的

if (feasible_count <= 15 || (feasible_count / p1.size()) < 0.6)

return false;

//分解本征矩阵,获取相对变换

int pass_count = cv::recoverPose(E, _p1, _p2, R, T, focal_length, principle_point, mask);

//同时位于两个相机前方的点的数量要足够大

if (((double)pass_count) / feasible_count < 0.7)

return false;

return true;

} 参考:https://blog.csdn.net/weixin_42587961/article/details/97241162#Ax0V_556

这里我们用opencv的cv::triangulatePoints进行三角测量

void reconstruct(cv::Mat & K, cv::Mat & R1, cv::Mat & T1, cv::Mat & R2, cv::Mat & T2,

std::vector& p1, std::vector& p2, std::vector& structure)

{

std::vector _p1, _p2;

for (int i = 0; i < p1.size(); i++) {

_p1.push_back(p1[i].pt);

_p2.push_back(p2[i].pt);

}

//两个相机的投影矩阵[R T],triangulatePoints只支持float型

cv::Mat proj1(3, 4, CV_32FC1);

cv::Mat proj2(3, 4, CV_32FC1);

R1.convertTo(proj1(cv::Range(0, 3), cv::Range(0, 3)), CV_32FC1);

T1.convertTo(proj1.col(3), CV_32FC1);

R2.convertTo(proj2(cv::Range(0, 3), cv::Range(0, 3)), CV_32FC1);

T2.convertTo(proj2.col(3), CV_32FC1);

cv::Mat fK;

K.convertTo(fK, CV_32FC1);

proj1 = fK * proj1;

proj2 = fK * proj2;

//三角重建

cv::Mat s;

cv::triangulatePoints(proj1, proj2, _p1, _p2, s);

structure.clear();

structure.reserve(s.cols);

for (int i = 0; i < s.cols; ++i)

{

cv::Mat_ col = s.col(i);

col /= col(3); //齐次坐标,需要除以最后一个元素才是真正的坐标值

structure.push_back(MyPoint3f(cv::Point3f(col(0), col(1), col(2)), p1[i].color));

}

} 多视图的三维重建(增量式SfM)

我们知道,两个相机之间的变换矩阵(旋转矩阵和平移向量)可以通过估计本质矩阵以及分解本质矩阵来实现。

那么设相机1的坐标系为世界坐标系,现在加入第三幅视图,如何确定第三个相机(后面称为相机3)到相机1坐标系的变换矩阵呢?

最简单的想法,就是沿用双目重建的方法,即在第三幅图像和第一幅图像之间提取特征点匹配然后三角测量重建。

那么加入第四幅、第五幅,乃至更多呢?随着图像数量的增加,新加入的图像与第一幅图像的差异可能越来越大,特征点的提取变得异常困难,这时就不能再沿用双目重建的方法了。

现在我们采用PnP的方法,该方法根据空间中的点与图像中的点的对应关系,求解相机在空间中的位置和姿态。也就是说,如果我知道相机1坐标系中的一些三维空间点,也知道这些空间点和相机3图像的2维点匹配,那么PnP就可以解出相机3和相机1之间的变换矩阵(旋转矩阵和平移向量)。

多视图的三维重建的一个大致流程:

-

使用双目重建的方法,对头两幅图像进行重建,这样就得到了一些空间中的点,

-

加入第3幅图像后,使其与第2幅图像进行特征匹配,这些匹配点中,肯定有一部分也是图像1与图像2之间的匹配点,也就是说,这些匹配点中有一部分的空间坐标是已知的相机1坐标系下的空间点,同时又知道这些点在第3幅图像中的二维像素坐标

-

求解PnP所需的信息都有了。由于空间点的坐标都是相机1坐标系下的,所以由PnP求出的相机位置和姿态也是相机1坐标系下的,即相机3到相机1的变换矩阵。

-

利用相机3和相机1的变换矩阵继续三角测量重建更多的点云,并融合到已有的点云中

后续加入更多的图像也是这个道理,并且要进行点云的融合。

完整代码

GlobalVTKUtils.h

#pragma once

#ifndef GLOBALVTKUTILS_H

#define GLOBALVTKUTILS_H

#include

#include

class GlobalVTKUtils {

public:

GlobalVTKUtils() {};

~GlobalVTKUtils() {};

static void ShowPoints(std::vector> points,std::vector> colors = std::vector>());

};

#endif // !SHOWVTK_H GlobalVTKUtils.cpp

#include"GlobalVTKUtils.h"

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include "vtkFloatArray.h" //浮点型数组类

#include "vtkAutoInit.h"

VTK_MODULE_INIT(vtkRenderingOpenGL2); // VTK was built with vtkRenderingOpenGL2

VTK_MODULE_INIT(vtkInteractionStyle);

VTK_MODULE_INIT(vtkRenderingFreeType);

void GlobalVTKUtils::ShowPoints(std::vector> points, std::vector> colors)

{

vtkSmartPointer renderer = vtkSmartPointer< vtkRenderer>::New();

vtkSmartPointer renderWindow = vtkSmartPointer::New();

renderWindow->AddRenderer(renderer);

vtkSmartPointer renderWindowInteractor = vtkSmartPointer::New();

vtkSmartPointer istyle = vtkSmartPointer::New();

renderWindowInteractor->SetRenderWindow(renderWindow);

vtkSmartPointer style = vtkSmartPointer::New();

renderWindowInteractor->SetInteractorStyle(style);//设置交互器风格

std::vector label(points.size(), -1);

double x1, y1, z1;

double x2, y2, z2;

double T = 0.1;

double value;

for (int i = 0; i < points.size(); i++) {

double minvalue = 1e10;

int min_index = -1;

for (int j = 0; j < points.size(); j++) {

if (j != i) {

x1 = points[i][0];y1 = points[i][1];z1 = points[i][2];

x2 = points[j][0];y2 = points[j][1];z2 = points[j][2];

value = std::sqrt(std::pow(x1 - x2, 2) + std::pow(y1 - y2, 2) + std::pow(z1 - z2, 2));

if (value < minvalue) {

minvalue = value;

min_index = j;

if (minvalue < T)break;

}

}

}

//std::cout << i << " " << minvalue << std::endl;

if (min_index != -1 && minvalue>T) {

label[i] = 1;

}

}

std::vector> copy = points;

std::vector> copy_c = colors;

points.clear();

colors.clear();

for (int i = 0; i < copy.size(); i++) {

if (label[i] < 0) {

points.push_back(copy[i]);

colors.push_back(copy_c[i]);

}

}

//MAD去除离群点

double mean_pt[3];

for (int i = 0; i < points.size(); i++) {

auto pt = points[i];

for (int j = 0; j < 3; j++) {

mean_pt[j] += pt[j];

}

}

for (int j = 0; j < 3; j++) {

mean_pt[j] /= points.size();

}

double mad = 0;

for (int i = 0; i < points.size(); i++) {

auto pt = points[i];

double x, y, z;

x = pt[0];y = pt[1];z = pt[2];

mad += std::sqrt(std::pow(x - mean_pt[0], 2) + std::pow(y - mean_pt[1], 2) + std::pow(z - mean_pt[2], 2));

}

mad/=points.size();

vtkIdType idtype;

double x, y, z;

vtkPoints *vpoints = vtkPoints::New();

vtkCellArray *vcells = vtkCellArray::New();

vtkSmartPointer ptColor = vtkSmartPointer::New();

ptColor->SetNumberOfComponents(3);

bool has_color = true;

if (colors.empty())has_color = false;

for (int i = 0; i < points.size(); i ++) {

auto pt = points[i];

x = pt[0];y = pt[1];z = pt[2];

//std::cout << pt[0] << " " << pt[1] << " " << pt[2] << "\n";

if (std::sqrt(std::pow(x - mean_pt[0], 2) + std::pow(y - mean_pt[1], 2) + std::pow(z - mean_pt[2], 2)) > 2*mad) {

continue;//不显示离群点

}

idtype = vpoints->InsertNextPoint(x, y, z);

vcells->InsertNextCell(1, &idtype);

if (has_color) {

auto color = colors[i];

ptColor->InsertNextTuple3(color[2],color[1],color[0]);

}

}

// 渲染机制未知,需要同时设置点坐标与点坐标对应的verts

// verts中的id必须与点坐标对应

vtkPolyData *polyData = vtkPolyData::New();

polyData->SetPoints(vpoints);

polyData->SetVerts(vcells);

if (has_color) {

polyData->GetPointData()->SetScalars(ptColor);

}

//下面为正常的可视化流程,可设置的点云颜色、大小等已注释

vtkPolyDataMapper *mapper = vtkPolyDataMapper::New();

mapper->SetInputData(polyData);

vtkActor *actor = vtkActor::New();

actor->SetMapper(mapper);

actor->GetProperty()->SetPointSize(4);

renderer->AddActor(actor);

renderer->SetBackground(0, 0, 0);//设置背景颜色

renderWindow->Render();

renderWindow->SetSize(800, 800);//设置窗口大小

renderWindowInteractor->Start();

vtkSmartPointer writer =

vtkSmartPointer::New();

writer->SetFileName("polyData.vtp");

writer->SetInputData(polyData);

writer->Write();

}

MultiViewStereoReconstructor.h

#pragma once

#ifndef MULTIVIEWSTEREORECONSTRUCTOR

#define MULTIVIEWSTEREORECONSTRUCTOR

#include

#include

#include

#include

class MyKeyPoint :public cv::KeyPoint {

public:

MyKeyPoint() {};

MyKeyPoint(cv::Point2f _pt, std::vector _color = std::vector(),

float _size = 1, float _angle = -1, float _response = 0, int _octave = 0, int _class_id = -1)

: cv::KeyPoint(_pt,_size,_angle,_response,_octave,_class_id),color(_color){};

MyKeyPoint(cv::KeyPoint _keypoint) :cv::KeyPoint(_keypoint) {};

MyKeyPoint(cv::KeyPoint _keypoint,cv::Vec3b _color) :cv::KeyPoint(_keypoint) {

setColor(_color);

};

MyKeyPoint(cv::KeyPoint _keypoint,std::vector _color) :cv::KeyPoint(_keypoint),color(_color) {};

void setColor(cv::Vec3b _color);

~MyKeyPoint() {};

bool operator==(MyKeyPoint &that);

public:

std::vector color;

};

class MyPoint3f :public cv::Point3f {

public:

MyPoint3f() {};

MyPoint3f(cv::Point3f _p) :cv::Point3f(_p) {};

MyPoint3f(cv::Point3f _p,cv::Vec3b _color) :cv::Point3f(_p) {

setColor(_color);

};

MyPoint3f(cv::Point3f _p,std::vector _color) :cv::Point3f(_p),color(_color) {};

void setColor(cv::Vec3b _color);

public:

std::vector color;

};

class UnitView {

public:

UnitView(cv::Mat& _image) {

image = _image.clone();

};

~UnitView() {};

bool extract_feature(cv::Ptr _executor);

public:

cv::Mat image;

cv::Mat descriptor;

std::vector keypoints;

cv::Mat rotation;//旋转矩阵

cv::Mat motion;

};

class PointCloudModel {

public:

PointCloudModel() {};

~PointCloudModel() {};

public:

std::vector> correspond_struct_idx;

std::vector structure;

cv::Mat K;

cv::Mat distCoeffs;

};

class MyMatch {

public:

MyMatch(int _type=1): match_type(_type){};

~MyMatch() {};

bool SetUp();

bool Build();

bool init_structure();

void match_features(cv::Mat& query, cv::Mat& train,

std::vector& matches,

std::vector>& knn_matches);

void ransace_refine_matchs(std::vector& kp1, std::vector & kp2,

std::vector& matchs,cv::Mat& m_Fundamental);

bool find_transform(cv::Mat& K, std::vector& p1, std::vector& p2, cv::Mat& R, cv::Mat& T, cv::Mat& mask);

void maskout_points(std::vector& p1, cv::Mat& mask);

void reconstruct(

cv::Mat& K,

cv::Mat& R1, cv::Mat& T1,

cv::Mat& R2, cv::Mat& T2,

std::vector& p1, std::vector& p2,

std::vector& structure);

void get_objpoints_and_imgpoints(

std::vector& matches,

std::vector& last_struct_indices,

std::vector& global_structure,

std::vector& key_points,

std::vector& object_points,

std::vector& image_points);

void fusion_structure(

std::vector& matches,

std::vector& last_struct_indices,

std::vector& next_struct_indices,

std::vector& global_structure,

std::vector& local_structure);

cv::Mat get_disparity(cv::Mat& img1,cv::Mat& img2);

public:

std::shared_ptr leftimage;

std::shared_ptr rightimage;

std::shared_ptr global_model;

int match_type = 1;//0表示这个匹配是第一张图像和第二张图像的匹配,否则为1; 默认为1;

private:

std::vector m_structure;

std::vector m_matchs;

std::vector m1;

std::vector m2;

};

class MultiViewStereoReconstructor {

public:

MultiViewStereoReconstructor() {};

~MultiViewStereoReconstructor() {};

void SetK(cv::Mat& _K) { K = _K.clone(); };

void SetDistCoeffs(cv::Mat _distCoeffs) { distCoeffs = _distCoeffs.clone(); };

void SetData(std::vector& _images);

bool Execute();

void Visualize();

public:

std::shared_ptr global_model;

std::vector> images;

std::vector matchs;

cv::Mat K;

cv::Mat distCoeffs;

};

#endif // !MULTIVIEWSTEREORECONSTRUCTOR

MultiViewStereoReconstructor.cpp

#pragma once

#include "MultiViewStereoReconstructor.h"

#include

#include "GlobalVTKUtils.h"

#include

#include

void MyKeyPoint::setColor(cv::Vec3b _color)

{

this->color.resize(3);

for (int i = 0; i < 3; i++)this->color[i] = _color[i];

}

bool MyKeyPoint::operator==(MyKeyPoint & that)

{

return this->pt == that.pt;

}

void MyPoint3f::setColor(cv::Vec3b _color)

{

this->color.resize(3);

for (int i = 0; i < 3; i++)this->color[i] = _color[i];

}

bool UnitView::extract_feature(cv::Ptr _executor)

{

std::vector _keypoints;

_executor->detectAndCompute(this->image, cv::noArray(), _keypoints, this->descriptor);

if (_keypoints.size() <= 10) return false;

for (auto& key : _keypoints) {

this->keypoints.push_back(MyKeyPoint(key, this->image.at(key.pt.y, key.pt.x)));

}

}

bool MyMatch::SetUp()

{

//std::cout << "MyMatch::SetUp\n";

std::vector> knn_matches;

match_features(this->leftimage->descriptor, this->rightimage->descriptor, m_matchs,knn_matches);

auto& kp1 = this->leftimage->keypoints;

auto& kp2 = this->rightimage->keypoints;

cv::Mat m_Fundamental;

ransace_refine_matchs(kp1, kp2, m_matchs,m_Fundamental);

#if 1

int last_num = 0;

int next_num = 0;

double T = 10;

cv::Mat x1, x2;

cv::Point2f p1, p2;

double d;

int index_kp2;

do {

last_num = m_matchs.size();

std::vector label1(kp1.size(), -1);

std::vector label2(kp2.size(), -1);

for (int i = 0; i < m_matchs.size(); i++) {

label1[m_matchs[i].queryIdx] = 1;

label2[m_matchs[i].trainIdx] = 1;

}

for (int i = 0; i < knn_matches.size(); i++) {

if (label1[i] < 0) {

index_kp2 = knn_matches[i][0].trainIdx;

if (label2[index_kp2] < 0) {

p1 = kp1[knn_matches[i][0].queryIdx].pt;

p2 = kp2[index_kp2].pt;

x1 = (cv::Mat_(3, 1) << p1.x, p1.y, 1);

x2 = (cv::Mat_(1, 3) << p2.x, p2.y, 1);

x1 = x2*m_Fundamental*x1;

d = abs(x1.at(0, 0));

if (d < T) {

m_matchs.push_back(knn_matches[i][0]);

label1[i] = 1;

label2[index_kp2] = 1;

}

}

}

}

ransace_refine_matchs(kp1, kp2, m_matchs,m_Fundamental);

next_num = m_matchs.size();

std::cout <<"loop: "<< next_num - last_num << "\n";

} while (next_num - last_num > 0);

#endif

for (int i = 0; i < m_matchs.size(); i++) {

m1.push_back(kp1[m_matchs[i].queryIdx]);

m2.push_back(kp2[m_matchs[i].trainIdx]);

}

//std::cout << "leftimage->image.size(): " << this->leftimage->image.size() << "\n";

//std::cout << "leftimage->descriptor.size(): " << this->leftimage->descriptor.size() << "\n";

//std::cout << "rightimage->descriptor.size(): " << this->rightimage->descriptor.size() << "\n";

//std::cout << "leftimage->keypoints.size(): " << this->leftimage->keypoints.size() << "\n";

//std::cout << "rightimage->keypoints.size(): " << this->rightimage->keypoints.size() << "\n";

//std::cout << "m1.size(): " << m1.size() << "\n";

//std::cout << "m2.size(): " << m2.size() << "\n";

#if 0

int gap = 10;

std::vector &key1 = kp1;

std::vector &key2 = kp2;

std::vector tkey1;

std::vector tkey2;

std::vector tMatches;

int InlinerCount = 0;

for (int i = 0; i < m_matchs.size(); i += gap) {

tkey1.push_back(key1[m_matchs[i].queryIdx]);

tkey2.push_back(key2[m_matchs[i].trainIdx]);

cv::DMatch match;

match.queryIdx = InlinerCount;

match.trainIdx = InlinerCount;

InlinerCount++;

tMatches.push_back(match);

}

cv::Mat img_matches;

cv::drawMatches(

this->leftimage->image, tkey1, this->rightimage->image, tkey2,

tMatches, img_matches, cv::Scalar::all(-1), cv::Scalar::all(-1),

std::vector(), cv::DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);

cv::imwrite("FASTResult.jpg", img_matches);

cv::imshow("Match0", img_matches);

cv::waitKey(0);

#endif

//std::cout << "done MyMatch::SetUp\n";

return true;

}

void MyMatch::match_features(

cv::Mat & query,

cv::Mat & train,

std::vector& matches,

std::vector>& knn_matches

)

{

//std::vector> knn_matches;

cv::BFMatcher matcher(cv::NORM_L2);

matcher.knnMatch(query, train, knn_matches, 2);

//std::cout << knn_matches.size() << "\n";

//std::cout <<"knn_matches: "<< knn_matches.size() << "\n";

//for (int i = 0; i < knn_matches.size(); i++) {

// std::cout << i << " " << knn_matches[i][0].queryIdx << " " << knn_matches[i][0].trainIdx<<" "< 0.6*knn_matches[r][1].distance)

continue;

float dist = knn_matches[r][0].distance;

if (dist < min_dist) min_dist = dist;

}

matches.clear();

for (size_t r = 0; r < knn_matches.size(); ++r)

{

//排除不满足Ratio Test的点和匹配距离过大的点

if (

knn_matches[r][0].distance > 0.6*knn_matches[r][1].distance ||

knn_matches[r][0].distance > 5 * cv::max(min_dist, 10.0f)

)

continue;

//保存匹配点

matches.push_back(knn_matches[r][0]);

}

}

void MyMatch::ransace_refine_matchs(

std::vector& kp1, std::vector& kp2,

std::vector& matchs,

cv::Mat& m_Fundamental)

{

//auto& kp1 = this->leftimage->keypoints;

//auto& kp2 = this->rightimage->keypoints;

std::vector _p1, _p2;

for (int i = 0; i < m_matchs.size(); i++) {

_p1.push_back(kp1[m_matchs[i].queryIdx].pt);

_p2.push_back(kp2[m_matchs[i].trainIdx].pt);

}

//cv::Mat m_Fundamental;

std::vector m_RANSACStatus;

m_Fundamental = cv::findFundamentalMat(_p1, _p2, m_RANSACStatus, cv::FM_RANSAC);//

std::vector matchs_copy = matchs;

matchs.clear();

for (int i = 0; i < matchs_copy.size(); ++i) {

if (m_RANSACStatus[i] != 0) matchs.push_back(matchs_copy[i]);

}

}

bool MyMatch::find_transform(cv::Mat & K, std::vector& p1, std::vector& p2, cv::Mat & R, cv::Mat & T, cv::Mat & mask)

{

//根据内参矩阵获取相机的焦距和光心坐标(主点坐标)

double focal_length = 0.5*(K.at(0) + K.at(4));

cv::Point2d principle_point(K.at(2), K.at(5));

std::vector _p1, _p2;

for (int i = 0; i < p1.size(); i++) {

_p1.push_back(p1[i].pt);

_p2.push_back(p2[i].pt);

}

//根据匹配点求取本征矩阵,使用RANSAC,进一步排除失配点

cv::Mat E = cv::findEssentialMat(_p1, _p2, focal_length, principle_point, cv::RANSAC, 0.999, 1.0, mask);

if (E.empty()) return false;

double feasible_count = cv::countNonZero(mask);

std::cout << (int)feasible_count << " -in- " << p1.size() << std::endl;

//对于RANSAC而言,outlier数量大于50%时,结果是不可靠的

if (feasible_count <= 15 || (feasible_count / p1.size()) < 0.6)

return false;

//分解本征矩阵,获取相对变换

int pass_count = cv::recoverPose(E, _p1, _p2, R, T, focal_length, principle_point, mask);

//同时位于两个相机前方的点的数量要足够大

if (((double)pass_count) / feasible_count < 0.7)

return false;

return true;

}

void MyMatch::maskout_points(std::vector& p1, cv::Mat & mask)

{

std::vector p1_copy = p1;

p1.clear();

for (int i = 0; i < mask.rows; ++i) {

if (mask.at(i) > 0) p1.push_back(p1_copy[i]);

}

}

void MyMatch::reconstruct(cv::Mat & K, cv::Mat & R1, cv::Mat & T1, cv::Mat & R2, cv::Mat & T2,

std::vector& p1, std::vector& p2, std::vector& structure)

{

std::vector _p1, _p2;

for (int i = 0; i < p1.size(); i++) {

_p1.push_back(p1[i].pt);

_p2.push_back(p2[i].pt);

}

//两个相机的投影矩阵[R T],triangulatePoints只支持float型

cv::Mat proj1(3, 4, CV_32FC1);

cv::Mat proj2(3, 4, CV_32FC1);

R1.convertTo(proj1(cv::Range(0, 3), cv::Range(0, 3)), CV_32FC1);

T1.convertTo(proj1.col(3), CV_32FC1);

R2.convertTo(proj2(cv::Range(0, 3), cv::Range(0, 3)), CV_32FC1);

T2.convertTo(proj2.col(3), CV_32FC1);

cv::Mat fK;

K.convertTo(fK, CV_32FC1);

proj1 = fK * proj1;

proj2 = fK * proj2;

//三角重建

cv::Mat s;

cv::triangulatePoints(proj1, proj2, _p1, _p2, s);

structure.clear();

structure.reserve(s.cols);

for (int i = 0; i < s.cols; ++i)

{

cv::Mat_ col = s.col(i);

col /= col(3); //齐次坐标,需要除以最后一个元素才是真正的坐标值

structure.push_back(MyPoint3f(cv::Point3f(col(0), col(1), col(2)), p1[i].color));

}

}

bool MyMatch::init_structure()

{

cv::Mat & K = global_model->K;

std::cout << "MyMatch::init_structure\n";

cv::Mat R, T;//旋转矩阵和平移向量

cv::Mat mask;//mask中大于零的点代表匹配点,等于零代表失配点

find_transform(K, m1, m2, R, T, mask);

maskout_points(m1, mask);//去除野值

maskout_points(m2, mask);//去除野值

cv::Mat R0 = cv::Mat::eye(3, 3, CV_64FC1);

cv::Mat T0 = cv::Mat::zeros(3, 1, CV_64FC1);

reconstruct(K, R0, T0, R, T, m1, m2, m_structure);

this->leftimage->rotation = R0;

this->leftimage->motion = T0;

this->rightimage->rotation = R;

this->rightimage->motion = T;

auto& correspond_struct_idx = global_model->correspond_struct_idx;

correspond_struct_idx.clear();

correspond_struct_idx.resize(2);

correspond_struct_idx[0].resize(this->leftimage->keypoints.size(), -1);

correspond_struct_idx[1].resize(this->rightimage->keypoints.size(), -1);

//填写头两幅图像的结构索引

int idx = 0;

for (int i = 0; i < m_matchs.size(); ++i)

{

if (mask.at(i) == 0)

continue;

correspond_struct_idx[0][m_matchs[i].queryIdx] = idx;

correspond_struct_idx[1][m_matchs[i].trainIdx] = idx;

++idx;

}

//m_correspond_struct_idx = correspond_struct_idx[1];

//初始化,直接赋值

global_model->structure = m_structure;

return true;

}

void MyMatch::get_objpoints_and_imgpoints(

std::vector& matches,

std::vector& last_struct_indices,

std::vector& global_structure,

std::vector& key_points,

std::vector& object_points,

std::vector& image_points)

{

object_points.clear();

image_points.clear();

for (int i = 0; i < matches.size(); ++i)

{

int query_idx = matches[i].queryIdx;

int train_idx = matches[i].trainIdx;

int struct_idx = last_struct_indices[query_idx];

if (struct_idx < 0) continue;

object_points.push_back(global_structure[struct_idx]);

image_points.push_back(key_points[train_idx].pt);

}

}

void MyMatch::fusion_structure(

std::vector& matches,

std::vector& last_struct_indices,

std::vector& next_struct_indices,

std::vector& global_structure,

std::vector& local_structure)

{

for (int i = 0; i < matches.size(); ++i)

{

int query_idx = matches[i].queryIdx;

int train_idx = matches[i].trainIdx;

int struct_idx = last_struct_indices[query_idx];

if (struct_idx >= 0) //若该点在空间中已经存在,则这对匹配点对应的空间点应该是同一个,索引要相同

{

next_struct_indices[train_idx] = struct_idx;

continue;

}

//若该点在空间中已经存在,将该点加入到结构中,且这对匹配点的空间点索引都为新加入的点的索引

global_structure.push_back(local_structure[i]);

last_struct_indices[query_idx] = next_struct_indices[train_idx] = global_structure.size() - 1;

}

}

cv::Mat MyMatch::get_disparity(cv::Mat & img1, cv::Mat & img2)

{

// Compute disparity

cv::Mat disparity;

cv::Ptr pStereo = cv::StereoSGBM::create(0, 16, 5);

pStereo->compute(img1, img2, disparity);

return disparity;

}

bool MyMatch::Build()

{

cv::Mat& K = global_model->K;

//std::cout << "MyMatch::Build\n";

if (this->match_type == 0) {

init_structure();

}

else {

//增量方式重建剩余的图像

cv::Mat r, R, T;

std::vector object_points;

std::vector image_points;

auto& global_structure = global_model->structure;

auto& global_struct_idx = global_model->correspond_struct_idx;

auto& last_struct_idx = global_struct_idx[global_struct_idx.size() - 1];

//获取第i幅图像中匹配点对应的三维点,以及在第i+1幅图像中对应的像素点

get_objpoints_and_imgpoints(

m_matchs,

last_struct_idx,

global_structure,

this->rightimage->keypoints,

object_points,

image_points

);

//求解变换矩阵

cv::solvePnPRansac(object_points, image_points, K, cv::noArray(), r, T);

//将旋转向量转换为旋转矩阵

cv::Rodrigues(r, R);

this->rightimage->rotation = R;

this->rightimage->motion = T;

reconstruct(

K, this->leftimage->rotation, this->leftimage->motion, R, T,

m1, m2, m_structure);

std::vector next_struct_indices(this->rightimage->keypoints.size(), -1);

//将新的重建结果与之前的融合

fusion_structure(

m_matchs,

last_struct_idx,

next_struct_indices,

global_structure,

m_structure);

global_struct_idx.push_back(next_struct_indices);

}

return true;

}

void MultiViewStereoReconstructor::SetData(std::vector& _images) {

for (auto& im : _images) {

std::shared_ptr im_ptr = std::shared_ptr(new UnitView(im));

//UnitView* im_ptr = new UnitView(im);

images.push_back(im_ptr);

}

cv::Ptr _executor = cv::xfeatures2d::SIFT::create(0, 3, 0.04, 10);

#pragma omp parallel for num_threads(2*omp_get_num_procs()-1)

for (int i = 0; i < images.size(); i++) {

printf("thread: %d\n", std::this_thread::get_id());

images[i]->extract_feature(_executor);

}

matchs.resize(images.size() - 1);

}

bool MultiViewStereoReconstructor::Execute() {

if (K.empty()) {

std::cout << "must set K matrix!\n";

return false;

}

global_model = std::shared_ptr(new PointCloudModel());

global_model->K = K.clone();

global_model->distCoeffs = distCoeffs.clone();

//#pragma omp parallel for num_threads(2*omp_get_num_procs()-1)

for (int i = 0; i < images.size() - 1; i++) {

MyMatch &match = matchs[i];

if (i == 0) {

match.match_type = 0;

}

match.leftimage = images[i];

match.rightimage = images[i + 1];

match.global_model = global_model;

//std::cout << "Matching images " << i << " - " << i + 1 << std::endl;

std::printf("thread: %d Matching images %d - %d\n", std::this_thread::get_id(), i, i + 1);

match.SetUp();

}

for (int i = 0; i < images.size() - 1; i++) {

matchs[i].Build();

}

};

void MultiViewStereoReconstructor::Visualize()

{

auto& global_structure = global_model->structure;

std::vector < std::array> tpoints;

std::vector < std::array> tcolors;

for (size_t i = 0; i < global_structure.size(); ++i)

{

std::array tp;

tp[0] = global_structure[i].x;

tp[1] = global_structure[i].y;

tp[2] = global_structure[i].z;

tpoints.push_back(tp);

std::array tc;

tc[0] = global_structure[i].color[0];

tc[1] = global_structure[i].color[1];

tc[2] = global_structure[i].color[2];

tcolors.push_back(tc);

}

std::cout <<"points.size(): "<< tpoints.size() << "\n";

GlobalVTKUtils::ShowPoints(tpoints, tcolors);

//GlobalVTKUtils::ShowPoints(tpoints);

}

main.cpp

#include"MultiViewStereoReconstructor.h"

#include

#include

#ifndef INIT_TIMER2

#define INIT_TIMER2(name) \

std::chrono::high_resolution_clock::time_point timerStart##name = std::chrono::high_resolution_clock::now();

#endif // !INIT_TIMER2

#ifndef STOP_TIMER2

#define STOP_TIMER2(name,title) \

std::cout << "RUNTIME of " << title << ": " \

<< std::chrono::duration_cast(std::chrono::high_resolution_clock::now() - timerStart##name).count()\

<< " ms " << std::endl;

#endif // !STOP_TIMER2

void get_files(const std::string & path, std::vector& files)

{

std::cout << "[jhq] get_files" << std::endl << std::endl;

//文件句柄

long long hFile = 0;

//文件信息,_finddata_t需要io.h头文件

struct _finddata_t fileinfo;

std::string p;

if ((hFile = _findfirst(p.assign(path).append("/*").c_str(), &fileinfo)) != -1) {

do {

if (strcmp(fileinfo.name, ".") != 0 && strcmp(fileinfo.name, "..") != 0) {

files.push_back(p.assign(path).append(fileinfo.name));

}

} while (_findnext(hFile, &fileinfo) == 0);

_findclose(hFile);

}

}

int main()

{

INIT_TIMER2(MultiViewStereoReconstructor)

std::vector img_names;

get_files("./Viewer16/", img_names);

cv::Mat K(cv::Matx33d(

2889.84, 0, 825.91,

0, 2881.08, 602.73,

0, 0, 1));

cv::Mat distCoeffs = (cv::Mat_(1, 5) << -0.06122164316121147, -1.243003308575242, 0.001462736730339558, -0.0001684641589496723, 1.749846418870851);

std::vector images;

for (auto it = img_names.begin(); it != img_names.end(); ++it){

std::cout << *it << std::endl;

#if 1

cv::Mat im = cv::imread(*it);

cv::Size image_size = im.size();

cv::Mat mapx = cv::Mat(image_size, CV_32FC1);

cv::Mat mapy = cv::Mat(image_size, CV_32FC1);

cv::Mat R =cv::Mat::eye(3, 3, CV_32F);

cv::initUndistortRectifyMap(K, distCoeffs, R, K,image_size, CV_32FC1, mapx, mapy);//用来计算畸变映射

cv::Mat newimage;

cv::remap(im, newimage, mapx, mapy, cv::INTER_LINEAR);//把求得的映射应用到图像上

images.push_back(newimage);

#else

images.push_back(cv::imread(*it));

#endif

}

MultiViewStereoReconstructor mvs;

mvs.SetK(K);

mvs.SetDistCoeffs(distCoeffs);

mvs.SetData(images);

mvs.Execute();

STOP_TIMER2(MultiViewStereoReconstructor,"MultiViewStereoReconstructor")

mvs.Visualize();

return 0;

}