Problem Description

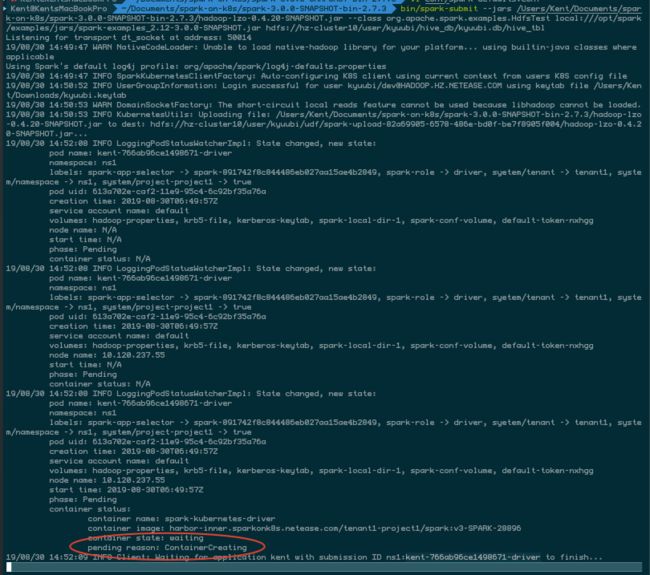

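After several days of testing Spark on k8s, the Spark driver pod suddenly could not be initialized today.

The symptoms were as follows:

- From the client side, I submitted a test program in cluster mode that normally finishes within a few seconds, but the driver pod stayed stuck in the ContainerCreating phase.

- Next I checked the actual state of the pod and found a `no space left on device` error.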

      Kent@KentsMacBookPro ~ kubectl describe pod kent-766ab96ce1498671-driver -nns1
      Name:         kent-766ab96ce1498671-driver
      Namespace:    ns1
      Node:         10.120.237.55/10.120.237.55
      Start Time:   Fri, 30 Aug 2019 14:49:57 +0800
      Labels:       spark-app-selector=spark-891742f8c844486eb027aa15ae4b2849
                    spark-role=driver
                    syetem/tenant=tenant1
                    system/namespace=ns1
                    system/project-project1=true
      Annotations:
      Status:       Pending
      IP:
      Containers:
        spark-kubernetes-driver:
          Container ID:
          Image:         harbor-inner.sparkonk8s.netease.com/tenant1-project1/spark:v3-SPARK-28896
          Image ID:
          Ports:         7078/TCP, 7079/TCP, 4040/TCP
          Host Ports:    0/TCP, 0/TCP, 0/TCP
          Args:
            driver
            --properties-file
            /opt/spark/conf/spark.properties
            --class
            org.apache.spark.examples.HdfsTest
            local:///opt/spark/examples/jars/spark-examples_2.12-3.0.0-SNAPSHOT.jar
            hdfs://hz-cluster10/user/kyuubi/hive_db/kyuubi.db/hive_tbl
          State:          Waiting
            Reason:       ContainerCreating
          Ready:          False
          Restart Count:  0
          Limits:
            memory:  1408Mi
          Requests:
            cpu:     1
            memory:  1408Mi
          Environment:
            SPARK_USER:                 Kent
            SPARK_DRIVER_BIND_ADDRESS:  (v1:status.podIP)
            HADOOP_CONF_DIR:            /opt/hadoop/conf
            SPARK_LOCAL_DIRS:           /var/data/spark-e6630b91-3892-41d1-9624-a955159b176a
            SPARK_CONF_DIR:             /opt/spark/conf
          Mounts:
            /etc/krb5.conf from krb5-file (rw,path="krb5.conf")
            /mnt/secrets/kerberos-keytab from kerberos-keytab (rw)
            /opt/hadoop/conf from hadoop-properties (rw)
            /opt/spark/conf from spark-conf-volume (rw)
            /var/data/spark-e6630b91-3892-41d1-9624-a955159b176a from spark-local-dir-1 (rw)
            /var/run/secrets/kubernetes.io/serviceaccount from default-token-nxhgg (ro)
      Conditions:
        Type              Status
        Initialized       True
        Ready             False
        ContainersReady   False
        PodScheduled      True
      Volumes:
        hadoop-properties:
          Type:      ConfigMap (a volume populated by a ConfigMap)
          Name:      hz10-hadoop-dir
          Optional:  false
        krb5-file:
          Type:      ConfigMap (a volume populated by a ConfigMap)
          Name:      kent-766ab96ce1498671-krb5-file
          Optional:  false
        kerberos-keytab:
          Type:        Secret (a volume populated by a Secret)
          SecretName:  kent-766ab96ce1498671-kerberos-keytab
          Optional:    false
        spark-local-dir-1:
          Type:       EmptyDir (a temporary directory that shares a pod's lifetime)
          Medium:
          SizeLimit:
        spark-conf-volume:
          Type:      ConfigMap (a volume populated by a ConfigMap)
          Name:      kent-766ab96ce1498671-driver-conf-map
          Optional:  false
        default-token-nxhgg:
          Type:        Secret (a volume populated by a Secret)
          SecretName:  default-token-nxhgg
          Optional:    false
      QoS Class:       Burstable
      Node-Selectors:
      Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                       node.kubernetes.io/unreachable:NoExecute for 300s
      Events:
        Type     Reason                    Age                    From                    Message
        ----     ------                    ----                   ----                    -------
        Normal   Scheduled                 37m                    default-scheduler       Successfully assigned ns1/kent-766ab96ce1498671-driver to 10.120.237.55
        Warning  FailedMount               37m                    kubelet, 10.120.237.55  MountVolume.SetUp failed for volume "spark-conf-volume" : configmaps "kent-766ab96ce1498671-driver-conf-map" not found
        Warning  FailedMount               37m                    kubelet, 10.120.237.55  MountVolume.SetUp failed for volume "kerberos-keytab" : secrets "kent-766ab96ce1498671-kerberos-keytab" not found
        Warning  FailedMount               37m                    kubelet, 10.120.237.55  MountVolume.SetUp failed for volume "krb5-file" : configmaps "kent-766ab96ce1498671-krb5-file" not found
        Warning  FailedCreatePodContainer  7m9s (x140 over 37m)   kubelet, 10.120.237.55  unable to ensure pod container exists: failed to create container for [kubepods burstable pod613a702e-caf2-11e9-95c4-6c92bf35a76a] : mkdir /sys/fs/cgroup/memory/kubepods/burstable/pod613a702e-caf2-11e9-95c4-6c92bf35a76a: no space left on device

- After some searching, I found that this closely matches the problem reported in https://github.com/rootsongjc/kubernetes-handbook/issues/313.
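The failure signature in the Events output above can be picked out mechanically when many pods need to be checked. This is a minimal sketch, assuming the event messages have already been collected as plain strings (for example, copied from `kubectl describe pod`). The name `find_cgroup_enospc` and the regular expression are illustrative and not part of kubectl or Spark.

```python
import re

# Matches the kubelet message seen above: a failed mkdir under the memory
# cgroup hierarchy that ends with "no space left on device".
# The pattern is an assumption based on the single message in this post.
CGROUP_ENOSPC = re.compile(
    r"mkdir (?P<path>/sys/fs/cgroup/memory/\S+): no space left on device"
)


def find_cgroup_enospc(messages):
    """Return the memory cgroup paths whose creation failed with ENOSPC."""
    paths = []
    for message in messages:
        match = CGROUP_ENOSPC.search(message)
        if match:
            paths.append(match.group("path"))
    return paths
```

The `FailedMount` warnings in the same output do not match the pattern, so only the cgroup failure is reported.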

The possibilities are:

- The Linux kernel on the kubelet host is too old: Linux version 3.10.0-862.el7.x86_64

- The K8s cluster version is too old: 1.11.9

- The problem can be resolved by disabling kmem

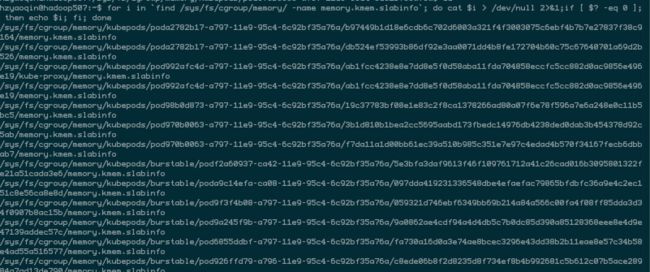

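I contacted our company's k8s kernel developers. At first it looked as if kmem had already been disabled, but in practice it seemed that it had not been disabled completely.

In the end, the cause was that runc enables kmem accounting for a container by default when it starts the container, which exposes a possible leak in the 3.10 kernel.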
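One way to look for symptoms of such a leak on a node is to check how many memory cgroups the kernel is still tracking. The sketch below only parses text in the `/proc/cgroups` format used by cgroup v1 (subsystem name, hierarchy ID, number of cgroups, enabled flag). The helper name `memory_cgroup_count` is made up for illustration, and treating a very large count as a sign of this leak is an assumption, not something confirmed in this investigation.

```python
def memory_cgroup_count(proc_cgroups_text):
    """Return num_cgroups for the memory subsystem, or None if it is absent."""
    for line in proc_cgroups_text.splitlines():
        # Skip blank lines and the "#subsys_name ..." header line.
        if not line.strip() or line.startswith("#"):
            continue
        fields = line.split()
        # Columns: subsys_name hierarchy num_cgroups enabled
        if len(fields) >= 4 and fields[0] == "memory":
            return int(fields[2])
    return None


# Usage on a node (not executed here):
#   with open("/proc/cgroups") as f:
#       print(memory_cgroup_count(f.read()))
```

Comparing this number with the number of directories that actually exist under `/sys/fs/cgroup/memory` may hint at cgroups that were removed but never released.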

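Summary

At its root, this problem should be considered a bug in either Kubernetes or the operating system kernel.

In practice, however, container allocation failing for one reason or another is probably common. Spark currently has no retry mechanism for this case, and there is no automatic cleanup for these abnormal pods, so they have to be deleted manually on the cluster.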
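Until something like that exists, the manual cleanup can at least be narrowed down with a small script. This is a sketch under stated assumptions: it takes the parsed output of `kubectl get pods -o json`, selects pods labeled `spark-role=driver` that are still `Pending` with a container waiting in `ContainerCreating`, and applies an age threshold. The function name `stuck_driver_pods` and the 10-minute default are hypothetical choices, not Spark or Kubernetes behavior.

```python
from datetime import datetime, timedelta, timezone


def stuck_driver_pods(pod_list, now, max_age=timedelta(minutes=10)):
    """Return names of Spark driver pods stuck in ContainerCreating.

    pod_list is the dict parsed from `kubectl get pods -o json`;
    now must be a timezone-aware datetime.
    """
    stuck = []
    for pod in pod_list.get("items", []):
        meta = pod.get("metadata", {})
        status = pod.get("status", {})
        # Only consider driver pods, identified by the spark-role label.
        if meta.get("labels", {}).get("spark-role") != "driver":
            continue
        if status.get("phase") != "Pending":
            continue
        # Collect the waiting reason of every container, if any.
        reasons = {
            cs.get("state", {}).get("waiting", {}).get("reason")
            for cs in status.get("containerStatuses", [])
        }
        if "ContainerCreating" not in reasons:
            continue
        # creationTimestamp is RFC 3339 in UTC, e.g. 2019-08-30T06:49:57Z.
        created = datetime.strptime(
            meta["creationTimestamp"], "%Y-%m-%dT%H:%M:%SZ"
        ).replace(tzinfo=timezone.utc)
        if now - created >= max_age:
            stuck.append(meta["name"])
    return stuck
```

The returned names could then be reviewed and passed to `kubectl delete pod <name> -n <namespace>`. Keeping the deletion as a separate, manual step avoids removing a pod that is merely slow to start.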