The following describes the low-level implementation of the picture-taking (capture) flow.

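Once the Camera's preview display has been set and preview has started, a picture can be taken with takePicture(). It fetches the image from the Camera asynchronously and takes several callback objects as parameters, any of which may be null. Their meanings are as follows: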

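• shutter: called at the moment the shutter fires; a shutter sound can be played here.

• raw: receives the unprocessed (uncompressed) image from the Camera.

• postview: receives a quick postview image from the Camera; not supported on all devices.

• jpeg: receives the compressed JPEG image from the Camera.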

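Although raw, postview and jpeg are all Camera.PictureCallback instances, usually only the JPEG is needed and the others can be passed as null. Camera.PictureCallback has a single method to implement, onPictureTaken(byte[] data, Camera camera), where data holds the image data. Note that once takePicture() has finished, the SurfaceView normally stays frozen on the captured frame; preview resumes only after startPreview() is called again.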
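The callback contract above can be modeled in a few lines. The C++ sketch below is a simulation, not the android.hardware.Camera API: FakeCamera and its members are hypothetical, empty std::function objects stand in for null callbacks, and capture runs synchronously here whereas the real call is asynchronous.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <vector>

// Hypothetical stand-ins for Camera.ShutterCallback / Camera.PictureCallback.
using ShutterCallback = std::function<void()>;
using PictureCallback = std::function<void(const std::vector<uint8_t>&)>;

class FakeCamera {
public:
    void startPreview() { previewing_ = true; }
    bool isPreviewing() const { return previewing_; }

    // Any callback may be empty (the analogue of passing null); empty ones are skipped.
    void takePicture(const ShutterCallback& shutter, const PictureCallback& raw,
                     const PictureCallback& postview, const PictureCallback& jpeg) {
        previewing_ = false;  // the display freezes on the captured frame
        if (shutter) shutter();
        if (raw) raw(rawFrame_);
        if (postview) postview(rawFrame_);
        if (jpeg) jpeg(jpegFrame_);
    }

private:
    bool previewing_ = false;
    std::vector<uint8_t> rawFrame_ = {0x10, 0x20, 0x30, 0x40};  // fake sensor bytes
    std::vector<uint8_t> jpegFrame_ = {0xFF, 0xD8, 0xFF, 0xD9};  // JPEG SOI + EOI markers
};
```

Passing nullptr for raw and postview mirrors the common "JPEG only" call; the caller must call startPreview() again after capture.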

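Calling takePicture() directly does not make the Camera autofocus. To focus, call Camera.autoFocus(), which takes a Camera.AutoFocusCallback that is invoked when autofocus completes; takePicture() is usually called from there, once focusing has finished.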
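The "focus first, then capture" ordering can be sketched as follows. FocusingCamera is a hypothetical model whose autoFocus() completes immediately; on a real device Camera.autoFocus() completes asynchronously and reports success through AutoFocusCallback.onAutoFocus(boolean, Camera). Capturing even when focus fails is a design choice in this sketch, in the same spirit as the HAL comment later that goes ahead with capture regardless.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical model that records the order in which operations happen.
class FocusingCamera {
public:
    std::vector<std::string> log;
    bool focusSucceeds = true;

    // Same shape as autoFocus(AutoFocusCallback): the callback receives whether
    // focusing succeeded. Completes synchronously here for simplicity.
    void autoFocus(const std::function<void(bool)>& onAutoFocus) {
        log.push_back("focus");
        onAutoFocus(focusSucceeds);
    }

    void takePicture() { log.push_back("capture"); }
};

// Trigger capture from inside the focus callback; capture even if focus failed,
// so the user still gets a (possibly soft) picture.
void focusThenCapture(FocusingCamera& cam) {
    cam.autoFocus([&cam](bool /*success*/) { cam.takePicture(); });
}
```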

4.2.1 Java framework

The analysis of the capture flow starts directly from takePicture() in Camera.java.

    public final void takePicture(ShutterCallback shutter, PictureCallback raw,
            PictureCallback postview, PictureCallback jpeg) {
        android.util.SeempLog.record(65);
        mShutterCallback = shutter;
        mRawImageCallback = raw;
        mPostviewCallback = postview;
        mJpegCallback = jpeg;

        // If callback is not set, do not send me callbacks.
        int msgType = 0;
        if (mShutterCallback != null) {
            msgType |= CAMERA_MSG_SHUTTER;
        }
        if (mRawImageCallback != null) {
            msgType |= CAMERA_MSG_RAW_IMAGE;
        }
        if (mPostviewCallback != null) {
            msgType |= CAMERA_MSG_POSTVIEW_FRAME;
        }
        if (mJpegCallback != null) {
            msgType |= CAMERA_MSG_COMPRESSED_IMAGE;
        }

        native_takePicture(msgType);
        mFaceDetectionRunning = false;
    }

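As shown, the method stores each callback and sets the matching message bit only for callbacks that are non-null. Next, look at how the callbacks are dispatched when messages come back from the lower layers: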
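The bit mask built in takePicture() can be checked in isolation. This C++ sketch reuses the message names from the listing; the numeric values are assumed to match Android's system/camera.h and should be verified against the headers of the tree being studied.

```cpp
#include <cassert>
#include <cstdint>

// Message bits; values assumed to match Android's system/camera.h.
constexpr int32_t CAMERA_MSG_SHUTTER          = 0x0002;
constexpr int32_t CAMERA_MSG_POSTVIEW_FRAME   = 0x0040;
constexpr int32_t CAMERA_MSG_RAW_IMAGE        = 0x0080;
constexpr int32_t CAMERA_MSG_COMPRESSED_IMAGE = 0x0100;

// Same rule as Camera.takePicture(): a bit is set only for a non-null callback.
int32_t buildMsgType(bool hasShutter, bool hasRaw, bool hasPostview, bool hasJpeg) {
    int32_t msgType = 0;
    if (hasShutter)  msgType |= CAMERA_MSG_SHUTTER;
    if (hasRaw)      msgType |= CAMERA_MSG_RAW_IMAGE;
    if (hasPostview) msgType |= CAMERA_MSG_POSTVIEW_FRAME;
    if (hasJpeg)     msgType |= CAMERA_MSG_COMPRESSED_IMAGE;
    return msgType;
}
```

With only a JPEG callback, the lower layers are asked for CAMERA_MSG_COMPRESSED_IMAGE alone and never post shutter or raw messages.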

    @Override
    public void handleMessage(Message msg) {
        switch(msg.what) {
        // Shutter notification
        case CAMERA_MSG_SHUTTER:
            if (mShutterCallback != null) {
                mShutterCallback.onShutter();
            }
            return;

        // Deliver the uncompressed (raw) picture
        case CAMERA_MSG_RAW_IMAGE:
            if (mRawImageCallback != null) {
                mRawImageCallback.onPictureTaken((byte[])msg.obj, mCamera);
            }
            return;

        // Deliver the compressed (JPEG) picture
        case CAMERA_MSG_COMPRESSED_IMAGE:
            if (mJpegCallback != null) {
                mJpegCallback.onPictureTaken((byte[])msg.obj, mCamera);
            }
            return;
        ... ...

The callbacks are registered in the application layer:

packages/apps/SnapdragonCamera/src/com/android/camera/AndroidCameraManagerImpl.java

    @Override
    public void takePicture(
            Handler handler,
            CameraShutterCallback shutter,
            CameraPictureCallback raw,
            CameraPictureCallback post,
            CameraPictureCallback jpeg) {
        mCameraHandler.requestTakePicture(
                ShutterCallbackForward.getNewInstance(handler, this, shutter),
                PictureCallbackForward.getNewInstance(handler, this, raw),
                PictureCallbackForward.getNewInstance(handler, this, post),
                PictureCallbackForward.getNewInstance(handler, this, jpeg));
    }
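AndroidCameraManagerImpl wraps each callback in a *Forward object bound to the caller's Handler, so callbacks fired on the camera thread are re-posted to the app's thread. The C++ sketch below models that idea only: FakeHandler and ShutterForward are hypothetical, and returning null for a missing callback is an assumption about getNewInstance(), not something the excerpt shows.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <memory>
#include <utility>

// Hypothetical stand-in for android.os.Handler: posted tasks run later, on
// whichever thread drains the queue (the app's UI thread in the real code).
class FakeHandler {
public:
    void post(std::function<void()> task) { tasks_.push_back(std::move(task)); }
    // Runs every queued task; returns how many ran.
    int drain() {
        int n = 0;
        while (!tasks_.empty()) {
            auto task = std::move(tasks_.front());
            tasks_.pop_front();
            task();
            ++n;
        }
        return n;
    }
private:
    std::deque<std::function<void()>> tasks_;
};

// Wraps the app's callback; when the camera thread fires it, the real callback
// is re-posted onto the app's handler instead of running in place.
class ShutterForward {
public:
    // Assumed behavior: nothing to forward means no wrapper at all.
    static std::unique_ptr<ShutterForward> getNewInstance(FakeHandler* handler,
                                                          std::function<void()> cb) {
        if (handler == nullptr || !cb) return nullptr;
        return std::unique_ptr<ShutterForward>(new ShutterForward(handler, std::move(cb)));
    }
    // Called on the camera thread.
    void onShutter() {
        auto cb = cb_;
        handler_->post([cb] { cb(); });
    }
private:
    ShutterForward(FakeHandler* h, std::function<void()> cb) : handler_(h), cb_(std::move(cb)) {}
    FakeHandler* handler_;
    std::function<void()> cb_;
};
```

The app callback does not run when onShutter() is called, only when the handler's queue is drained.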

The callback is implemented in the application layer:

    @Override
    public void onPictureTaken(final byte [] jpegData, CameraProxy camera) {
        ... ...
        if (mRefocus) {
            final String[] NAMES = { "00.jpg", "01.jpg", "02.jpg", "03.jpg",
                "04.jpg", "DepthMapImage.y", "AllFocusImage.jpg" };
            try {
                FileOutputStream out = mActivity.openFileOutput(NAMES[mReceivedSnapNum - 1],
                        Context.MODE_PRIVATE);
                out.write(jpegData, 0, jpegData.length);
                out.close();
            } catch (Exception e) {
            }
        }
        ... ...

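This is where the data is actually stored. Android offers four common ways to persist data: ContentProvider, SharedPreferences, files, and SQLite. In the refocus branch shown here, the data is written to plain files.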
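The Java snippet above silently swallows every exception and indexes NAMES without a bounds check. For comparison, here is a C++ sketch of the same save step using std::ofstream. The dir parameter is a hypothetical stand-in for the app-private directory that openFileOutput() writes into, and the bounds check and error reporting are additions, not part of the original.

```cpp
#include <cassert>
#include <fstream>
#include <string>
#include <vector>

// File names copied from the refocus branch above; the snapshot counter is 1-based.
static const char* const kRefocusNames[] = {
    "00.jpg", "01.jpg", "02.jpg", "03.jpg",
    "04.jpg", "DepthMapImage.y", "AllFocusImage.jpg"};

// Writes one received buffer to <dir>/<name>; returns false on a bad index or I/O error.
bool saveRefocusBuffer(const std::string& dir, int receivedSnapNum,
                       const std::vector<char>& data) {
    const int count = static_cast<int>(sizeof(kRefocusNames) / sizeof(kRefocusNames[0]));
    if (receivedSnapNum < 1 || receivedSnapNum > count) return false;  // out-of-range index
    std::ofstream out(dir + "/" + kRefocusNames[receivedSnapNum - 1],
                      std::ios::binary | std::ios::trunc);
    if (!out) return false;
    out.write(data.data(), static_cast<std::streamsize>(data.size()));
    return static_cast<bool>(out);  // the stream is closed automatically (RAII)
}
```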

4.2.2 JNI

The call then reaches the corresponding JNI method:

    static void android_hardware_Camera_takePicture(JNIEnv *env, jobject thiz, jint msgType)
    {
        ALOGV("takePicture");
        JNICameraContext* context;
        sp<Camera> camera = get_native_camera(env, thiz, &context);
        if (camera == 0) return;

        /*
         * When CAMERA_MSG_RAW_IMAGE is requested, if the raw image callback
         * buffer is available, CAMERA_MSG_RAW_IMAGE is enabled to get the
         * notification _and_ the data; otherwise, CAMERA_MSG_RAW_IMAGE_NOTIFY
         * is enabled to receive the callback notification but no data.
         *
         * Note that CAMERA_MSG_RAW_IMAGE_NOTIFY is not exposed to the
         * Java application.
         */
        if (msgType & CAMERA_MSG_RAW_IMAGE) {
            ALOGV("Enable raw image callback buffer");
            if (!context->isRawImageCallbackBufferAvailable()) {
                ALOGV("Enable raw image notification, since no callback buffer exists");
                msgType &= ~CAMERA_MSG_RAW_IMAGE;
                msgType |= CAMERA_MSG_RAW_IMAGE_NOTIFY;
            }
        }

        if (camera->takePicture(msgType) != NO_ERROR) {
            jniThrowRuntimeException(env, "takePicture failed");
            return;
        }
    }
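The RAW_IMAGE downgrade in the JNI code is plain bit manipulation, sketched here in C++. The bit values are assumed to match system/camera.h, and the rawBufferAvailable flag stands in for context->isRawImageCallbackBufferAvailable().

```cpp
#include <cassert>
#include <cstdint>

// Values assumed to match system/camera.h.
constexpr int32_t CAMERA_MSG_RAW_IMAGE        = 0x0080;
constexpr int32_t CAMERA_MSG_RAW_IMAGE_NOTIFY = 0x0200;

// Keep RAW_IMAGE only if a raw callback buffer exists; otherwise swap it for
// RAW_IMAGE_NOTIFY (notification without data). Other bits pass through untouched.
int32_t adjustRawMsgType(int32_t msgType, bool rawBufferAvailable) {
    if ((msgType & CAMERA_MSG_RAW_IMAGE) && !rawBufferAvailable) {
        msgType &= ~CAMERA_MSG_RAW_IMAGE;
        msgType |= CAMERA_MSG_RAW_IMAGE_NOTIFY;
    }
    return msgType;
}
```

Because the two raw bits are swapped rather than both set, the result never trips the "cannot be both enabled" check in CameraClient.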

4.2.3 Native layer

Following the Binder mechanism analyzed earlier, the call passes through Camera.cpp -> ICamera.cpp -> CameraClient.cpp (server side):

    // take a picture - image is returned in callback
    status_t CameraClient::takePicture(int msgType) {
        LOG1("takePicture (pid %d): 0x%x", getCallingPid(), msgType);

        Mutex::Autolock lock(mLock);
        status_t result = checkPidAndHardware();
        if (result != NO_ERROR) return result;

        if ((msgType & CAMERA_MSG_RAW_IMAGE) &&
            (msgType & CAMERA_MSG_RAW_IMAGE_NOTIFY)) {
            ALOGE("CAMERA_MSG_RAW_IMAGE and CAMERA_MSG_RAW_IMAGE_NOTIFY"
                    " cannot be both enabled");
            return BAD_VALUE;
        }

        // We only accept picture related message types
        // and ignore other types of messages for takePicture().
        int picMsgType = msgType
                            & (CAMERA_MSG_SHUTTER |
                               CAMERA_MSG_POSTVIEW_FRAME |
                               CAMERA_MSG_RAW_IMAGE |
                               CAMERA_MSG_RAW_IMAGE_NOTIFY |
                               CAMERA_MSG_COMPRESSED_IMAGE);

        enableMsgType(picMsgType);
        mBurstCnt = mHardware->getParameters().getInt("num-snaps-per-shutter");
        if(mBurstCnt <= 0)
            mBurstCnt = 1;
        LOG1("mBurstCnt = %d", mBurstCnt);

        return mHardware->takePicture();
    }
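The three checks in CameraClient::takePicture() (mutually exclusive raw bits, filtering to picture-related bits, clamping the burst count) can be pulled out into a pure function for testing. In this C++ sketch, Status, PictureRequest and preparePictureRequest are hypothetical names, snapsPerShutter stands in for the "num-snaps-per-shutter" parameter, and the bit values are assumed to match system/camera.h.

```cpp
#include <cassert>
#include <cstdint>

// Values assumed to match system/camera.h.
constexpr int32_t CAMERA_MSG_SHUTTER          = 0x0002;
constexpr int32_t CAMERA_MSG_POSTVIEW_FRAME   = 0x0040;
constexpr int32_t CAMERA_MSG_RAW_IMAGE        = 0x0080;
constexpr int32_t CAMERA_MSG_COMPRESSED_IMAGE = 0x0100;
constexpr int32_t CAMERA_MSG_RAW_IMAGE_NOTIFY = 0x0200;

enum class Status { NoError, BadValue };  // local stand-ins for NO_ERROR / BAD_VALUE

struct PictureRequest {
    int32_t picMsgType;  // bits that would be passed to enableMsgType()
    int burstCount;      // snapshots per shutter press
};

Status preparePictureRequest(int32_t msgType, int snapsPerShutter, PictureRequest* out) {
    // RAW_IMAGE and RAW_IMAGE_NOTIFY are mutually exclusive.
    if ((msgType & CAMERA_MSG_RAW_IMAGE) && (msgType & CAMERA_MSG_RAW_IMAGE_NOTIFY))
        return Status::BadValue;
    // Keep only picture-related bits; e.g. a preview-frame bit is dropped.
    const int32_t pictureBits = CAMERA_MSG_SHUTTER | CAMERA_MSG_POSTVIEW_FRAME |
                                CAMERA_MSG_RAW_IMAGE | CAMERA_MSG_RAW_IMAGE_NOTIFY |
                                CAMERA_MSG_COMPRESSED_IMAGE;
    out->picMsgType = msgType & pictureBits;
    // A missing or non-positive burst setting means a single shot.
    out->burstCount = snapsPerShutter <= 0 ? 1 : snapsPerShutter;
    return Status::NoError;
}
```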

The takePicture() called here is the method defined in CameraHardwareInterface.h:

frameworks/av/services/camera/libcameraservice/device1/CameraHardwareInterface.h

    status_t takePicture()
    {
        ALOGV("%s(%s)", __FUNCTION__, mName.string());
        if (mDevice->ops->take_picture)
            return mDevice->ops->take_picture(mDevice);
        return INVALID_OPERATION;
    }

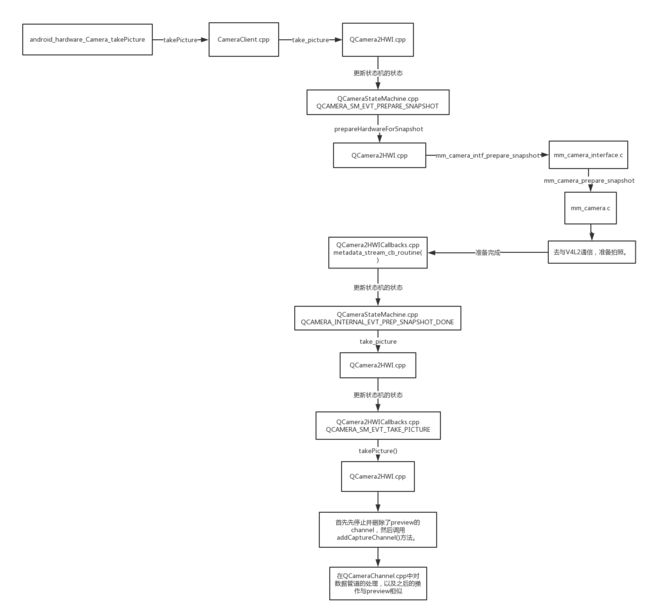

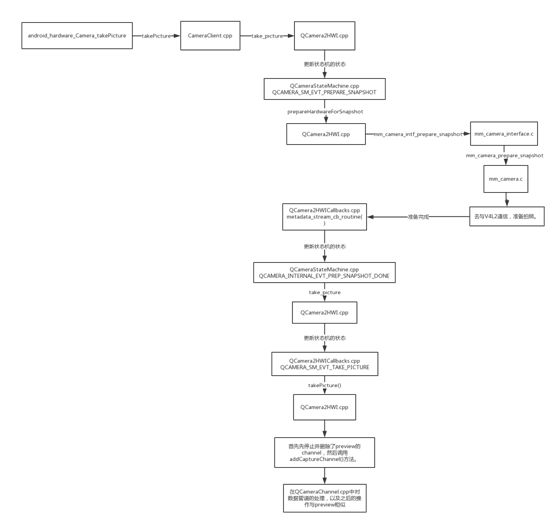
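CameraHardwareInterface::takePicture() calls through a C table of function pointers and falls back to INVALID_OPERATION when the vendor HAL leaves an entry NULL. The C++ sketch below reproduces that dispatch pattern with hypothetical fake_device types; the status constants are local stand-ins, not Android's actual values.

```cpp
#include <cassert>

// Hypothetical miniature of a HAL v1 style device: a struct of function pointers,
// any of which a vendor implementation may leave NULL.
struct fake_device;
struct fake_device_ops {
    int (*take_picture)(fake_device* dev);
};
struct fake_device {
    const fake_device_ops* ops;
    int pictures_taken;
};

constexpr int kNoError = 0;
constexpr int kInvalidOperation = -1;  // local stand-in for INVALID_OPERATION

// Same shape as CameraHardwareInterface::takePicture(): call the entry only if present.
int takePicture(fake_device* dev) {
    if (dev->ops->take_picture)
        return dev->ops->take_picture(dev);
    return kInvalidOperation;
}

// A sample "vendor" implementation of the entry.
int vendor_take_picture(fake_device* dev) {
    ++dev->pictures_taken;
    return kNoError;
}
```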

4.2.4 HAL layer

From CameraClient.cpp the call enters the HAL layer. The following analyzes the concrete implementation in hardware/qcom/camera/QCamera2/HAL/QCamera2HWI.cpp.

    int QCamera2HardwareInterface::take_picture(struct camera_device *device)
    {
        ATRACE_CALL();
        int ret = NO_ERROR;
        QCamera2HardwareInterface *hw =
            reinterpret_cast<QCamera2HardwareInterface *>(device->priv);
        ... ...
        /* Prepare snapshot in case LED needs to be flashed */
        if (hw->mFlashNeeded == true || hw->mParameters.isChromaFlashEnabled()) {
            ret = hw->processAPI(QCAMERA_SM_EVT_PREPARE_SNAPSHOT, NULL);
            if (ret == NO_ERROR) {
                hw->waitAPIResult(QCAMERA_SM_EVT_PREPARE_SNAPSHOT, &apiResult);
                ret = apiResult.status;
            }
            hw->mPrepSnapRun = true;
        }
        /* Regardless what the result value for prepare_snapshot,
         * go ahead with capture anyway. Just like the way autofocus
         * is handled in capture case. */

        /* capture */
        ret = hw->processAPI(QCAMERA_SM_EVT_TAKE_PICTURE, NULL);
        if (ret == NO_ERROR) {
            hw->waitAPIResult(QCAMERA_SM_EVT_TAKE_PICTURE, &apiResult);
            ret = apiResult.status;
        }
        ... ...
    }
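take_picture() looks synchronous to its caller even though the work is done by the state machine: it posts an event with processAPI() and then blocks in waitAPIResult() until the state machine calls signalAPIResult() for that event. The C++ sketch below models that handshake with std::condition_variable; ApiWaiter, Evt and ApiResult are hypothetical and much simpler than QCamera2's real implementation. <thread> is included for the usage below.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <thread>

enum class Evt { PrepareSnapshot, TakePicture };

struct ApiResult {
    Evt request_api;
    int status;
};

class ApiWaiter {
public:
    // State-machine thread: publish the result for a finished event and wake waiters.
    void signalAPIResult(ApiResult r) {
        {
            std::lock_guard<std::mutex> lock(mu_);
            result_ = r;
            ready_ = true;
        }
        cv_.notify_all();
    }
    // API thread: block until a result for `evt` is available, then consume it.
    ApiResult waitAPIResult(Evt evt) {
        std::unique_lock<std::mutex> lock(mu_);
        cv_.wait(lock, [&] { return ready_ && result_.request_api == evt; });
        ready_ = false;
        return result_;
    }
private:
    std::mutex mu_;
    std::condition_variable cv_;
    ApiResult result_{Evt::PrepareSnapshot, 0};
    bool ready_ = false;
};
```

The predicate overload of wait() also covers the case where the result is signaled before the caller starts waiting.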

These events are processed by the state machine, which updates its state accordingly:

hardware/qcom/camera/QCamera2/HAL/QCameraStateMachine.cpp

    case QCAMERA_SM_EVT_PREPARE_SNAPSHOT:
        {
            rc = m_parent->prepareHardwareForSnapshot(FALSE);
            if (rc == NO_ERROR) {
                // Do not signal API result in this case.
                // Need to wait for snapshot done in metadta.
                m_state = QCAMERA_SM_STATE_PREPARE_SNAPSHOT;
            } else {
                // Do not change state in this case.
                ALOGE("%s: prepareHardwareForSnapshot failed %d",
                    __func__, rc);

                result.status = rc;
                result.request_api = evt;
                result.result_type = QCAMERA_API_RESULT_TYPE_DEF;
                m_parent->signalAPIResult(&result);
            }
        }
        break;

The state machine first calls back into prepareHardwareForSnapshot() in QCamera2HWI.cpp:

    int32_t QCamera2HardwareInterface::prepareHardwareForSnapshot(int32_t afNeeded)
    {
        ATRACE_CALL();
        CDBG_HIGH("[KPI Perf] %s: Prepare hardware such as LED",__func__);
        return mCameraHandle->ops->prepare_snapshot(mCameraHandle->camera_handle,
                                                    afNeeded);
    }

This then reaches mm_camera_intf_prepare_snapshot() in mm_camera_interface.c:

hardware/qcom/camera/QCamera2/stack/mm-camera-interface/src/mm_camera_interface.c

    static int32_t mm_camera_intf_prepare_snapshot(uint32_t camera_handle,
                                                   int32_t do_af_flag)
    {
        int32_t rc = -1;
        mm_camera_obj_t * my_obj = NULL;

        pthread_mutex_lock(&g_intf_lock);
        my_obj = mm_camera_util_get_camera_by_handler(camera_handle);

        if(my_obj) {
            pthread_mutex_lock(&my_obj->cam_lock);
            pthread_mutex_unlock(&g_intf_lock);
            rc = mm_camera_prepare_snapshot(my_obj, do_af_flag);
        } else {
            pthread_mutex_unlock(&g_intf_lock);
        }
        return rc;
    }
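The locking above is a hand-over-hand pattern: the global lock guards the handle lookup, the per-camera lock is taken before the global lock is released, and the callee (mm_camera_prepare_snapshot(), shown next) releases the per-camera lock itself. Presumably this keeps the camera object from being closed between lookup and use, assuming close also takes cam_lock. The C++ sketch below mirrors the pattern with std::mutex; CameraObj, g_cameras and the function names are hypothetical.

```cpp
#include <cassert>
#include <map>
#include <mutex>

struct CameraObj {
    std::mutex cam_lock;
    int prepare_calls = 0;
};

std::mutex g_intf_lock;                    // guards the handle table
std::map<unsigned, CameraObj*> g_cameras;  // handle -> camera object

// Like mm_camera_prepare_snapshot(): entered with cam_lock held, releases it on return.
int prepareSnapshotLocked(CameraObj* obj, int /*do_af_flag*/) {
    ++obj->prepare_calls;
    obj->cam_lock.unlock();
    return 0;
}

int intfPrepareSnapshot(unsigned handle, int do_af_flag) {
    g_intf_lock.lock();
    auto it = g_cameras.find(handle);
    if (it == g_cameras.end()) {
        g_intf_lock.unlock();
        return -1;  // unknown handle, like the original's rc = -1 default
    }
    CameraObj* obj = it->second;
    obj->cam_lock.lock();   // take the per-camera lock first...
    g_intf_lock.unlock();   // ...then release the global one
    return prepareSnapshotLocked(obj, do_af_flag);
}
```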

Next, mm_camera_prepare_snapshot() in mm_camera.c communicates with the driver through V4L2 to prepare for the capture:

hardware/qcom/camera/QCamera2/stack/mm-camera-interface/src/mm_camera.c

    int32_t mm_camera_prepare_snapshot(mm_camera_obj_t *my_obj,
                                       int32_t do_af_flag)
    {
        int32_t rc = -1;
        int32_t value = do_af_flag;
        rc = mm_camera_util_s_ctrl(my_obj->ctrl_fd, CAM_PRIV_PREPARE_SNAPSHOT,
                                   &value);
        pthread_mutex_unlock(&my_obj->cam_lock);
        return rc;
    }
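mm_camera_util_s_ctrl() is not shown in the excerpt; it is assumed here to wrap the standard V4L2 VIDIOC_S_CTRL ioctl on the camera's control fd. The sketch below shows that ioctl with the real struct v4l2_control from the Linux UAPI headers. The control id is a parameter because the numeric value of the vendor-private CAM_PRIV_PREPARE_SNAPSHOT is not shown here, and the copy-back of control.value is an assumption about the helper.

```cpp
#include <cassert>
#include <cerrno>
#include <cstdint>
#include <sys/ioctl.h>
#include <sys/time.h>   // defensive: some videodev2.h versions need struct timeval
#include <linux/videodev2.h>

// Sketch of a VIDIOC_S_CTRL wrapper; returns 0 on success, -1 on failure (errno set).
int setCtrl(int fd, uint32_t id, int32_t* value) {
    struct v4l2_control control = {};
    control.id = id;
    if (value != nullptr) control.value = *value;
    int rc = ioctl(fd, VIDIOC_S_CTRL, &control);
    // VIDIOC_S_CTRL is a read/write ioctl, so on success the struct is copied back.
    if (rc >= 0 && value != nullptr) *value = control.value;
    return rc >= 0 ? 0 : -1;
}
```

Against a real camera node the fd would come from open("/dev/videoN", O_RDWR); with an invalid fd the call simply fails with EBADF.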

When the lower layers have finished preparing for the capture, the result arrives in metadata_stream_cb_routine(), the metadata handler in QCamera2HWICallbacks.cpp:

hardware/qcom/camera/QCamera2/HAL/QCamera2HWICallbacks.cpp

    if (pMetaData->is_prep_snapshot_done_valid) {
        qcamera_sm_internal_evt_payload_t *payload =
            (qcamera_sm_internal_evt_payload_t *)malloc(sizeof(qcamera_sm_internal_evt_payload_t));
        if (NULL != payload) {
            memset(payload, 0, sizeof(qcamera_sm_internal_evt_payload_t));
            payload->evt_type = QCAMERA_INTERNAL_EVT_PREP_SNAPSHOT_DONE;
            payload->prep_snapshot_state = pMetaData->prep_snapshot_done_state;
            int32_t rc = pme->processEvt(QCAMERA_SM_EVT_EVT_INTERNAL, payload);
            if (rc != NO_ERROR) {
                ALOGE("%s: processEvt prep_snapshot failed", __func__);
                free(payload);
                payload = NULL;
            }
        } else {
            ALOGE("%s: No memory for prep_snapshot qcamera_sm_internal_evt_payload_t", __func__);
        }
    }
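The callback above follows a clear ownership rule: once processEvt() accepts the payload, the consumer owns and frees it; if posting fails, the producer must free it. The C++ sketch below makes that rule testable with a bounded queue; InternalEvtPayload, EvtQueue and postPrepSnapshotDone are hypothetical, and the capacity limit is just a simple way to force the failure path.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <cstring>
#include <deque>

struct InternalEvtPayload {
    int evt_type;
    int prep_snapshot_state;
};

class EvtQueue {
public:
    explicit EvtQueue(std::size_t capacity) : capacity_(capacity) {}
    ~EvtQueue() { drain(); }
    // On success the queue owns `payload`; on failure ownership stays with the caller.
    bool processEvt(InternalEvtPayload* payload) {
        if (q_.size() >= capacity_) return false;
        q_.push_back(payload);
        return true;
    }
    // Consumer side: handle (here, just free) every queued payload; returns the count.
    int drain() {
        int n = 0;
        while (!q_.empty()) {
            std::free(q_.front());
            q_.pop_front();
            ++n;
        }
        return n;
    }
private:
    std::size_t capacity_;
    std::deque<InternalEvtPayload*> q_;
};

// Allocate, zero, fill, post; free only if posting failed.
bool postPrepSnapshotDone(EvtQueue& q, int evtType, int state) {
    auto* payload = static_cast<InternalEvtPayload*>(std::malloc(sizeof(InternalEvtPayload)));
    if (payload == nullptr) return false;  // the "No memory" branch
    std::memset(payload, 0, sizeof(*payload));
    payload->evt_type = evtType;
    payload->prep_snapshot_state = state;
    if (!q.processEvt(payload)) {
        std::free(payload);  // not enqueued, so the producer frees it
        return false;
    }
    return true;
}
```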

The state machine then handles this internal event and moves to a new state:

    case QCAMERA_INTERNAL_EVT_PREP_SNAPSHOT_DONE:
        CDBG("%s: Received QCAMERA_INTERNAL_EVT_PREP_SNAPSHOT_DONE event",
            __func__);
        m_parent->processPrepSnapshotDoneEvent(internal_evt->prep_snapshot_state);
        m_state = QCAMERA_SM_STATE_PREVIEWING;

        result.status = NO_ERROR;
        result.request_api = QCAMERA_SM_EVT_PREPARE_SNAPSHOT;
        result.result_type = QCAMERA_API_RESULT_TYPE_DEF;
        m_parent->signalAPIResult(&result);
        break;
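Taken together, the two cases form a small two-step protocol: a successful PREPARE_SNAPSHOT moves to the PREPARE_SNAPSHOT state without answering the API call, and only the later PREP_SNAPSHOT_DONE event returns to previewing and signals the result; a failure answers immediately and leaves the state unchanged. The C++ sketch below is a hypothetical reduction of that logic, with a counter standing in for signalAPIResult().

```cpp
#include <cassert>

enum class State { Previewing, PrepareSnapshot };

struct PrepareSnapshotFsm {
    State state = State::Previewing;
    int signaledResults = 0;  // times signalAPIResult() would have been called
    int lastStatus = 0;

    // QCAMERA_SM_EVT_PREPARE_SNAPSHOT: on success, wait for metadata (no signal yet);
    // on failure, keep the current state and report the error right away.
    void onPrepareSnapshot(int rc) {
        if (rc == 0) {
            state = State::PrepareSnapshot;
        } else {
            signalResult(rc);
        }
    }
    // QCAMERA_INTERNAL_EVT_PREP_SNAPSHOT_DONE (posted by the metadata callback):
    // return to previewing and unblock the waiting API call.
    void onPrepSnapshotDone() {
        state = State::Previewing;
        signalResult(0);
    }

private:
    void signalResult(int status) {
        ++signaledResults;
        lastStatus = status;
    }
};
```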

Control then returns to take_picture() in QCamera2HWI.cpp, which carries on with the capture:

    ret = hw->processAPI(QCAMERA_SM_EVT_TAKE_PICTURE, NULL);
    if (ret == NO_ERROR) {
        hw->waitAPIResult(QCAMERA_SM_EVT_TAKE_PICTURE, &apiResult);
        ret = apiResult.status;
    }

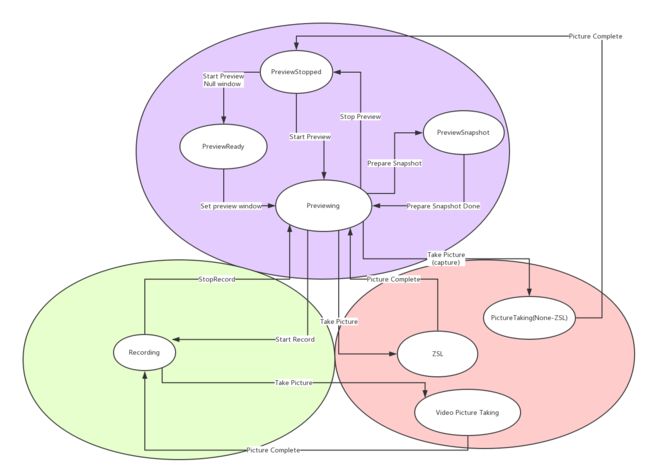

Handling QCAMERA_SM_EVT_TAKE_PICTURE changes the state machine's state:

    case QCAMERA_SM_EVT_TAKE_PICTURE:
        {
            if ( m_parent->mParameters.getRecordingHintValue() == true) {
                m_parent->stopPreview();
                m_parent->mParameters.updateRecordingHintValue(FALSE);
                // start preview again
                rc = m_parent->preparePreview();
                if (rc == NO_ERROR) {
                    rc = m_parent->startPreview();
                    if (rc != NO_ERROR) {
                        m_parent->unpreparePreview();
                    }
                }
            }
            if (m_parent->isZSLMode() || m_parent->isLongshotEnabled()) {
                m_state = QCAMERA_SM_STATE_PREVIEW_PIC_TAKING;
                rc = m_parent->takePicture();
                if (rc != NO_ERROR) {
                    // move state to previewing state
                    m_state = QCAMERA_SM_STATE_PREVIEWING;
                }
            } else {
                m_state = QCAMERA_SM_STATE_PIC_TAKING;
                rc = m_parent->takePicture();
                if (rc != NO_ERROR) {
                    // move state to preview stopped state
                    m_state = QCAMERA_SM_STATE_PREVIEW_STOPPED;
                }
            }

            result.status = rc;
            result.request_api = evt;
            result.result_type = QCAMERA_API_RESULT_TYPE_DEF;
            m_parent->signalAPIResult(&result);
        }
        break;
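The state chosen for TAKE_PICTURE depends on two things: whether the capture keeps preview alive (ZSL or longshot, judging by the PREVIEW_PIC_TAKING name) and whether takePicture() succeeded. This hypothetical C++ reduction leaves out the recording-hint restart and keeps only the four resulting states.

```cpp
#include <cassert>

enum class SmState { Previewing, PreviewPicTaking, PicTaking, PreviewStopped };

// Next state after QCAMERA_SM_EVT_TAKE_PICTURE. ZSL/longshot captures fall back to
// previewing on failure; normal captures (which tear down preview) fall back to
// preview-stopped.
SmState onTakePicture(bool zslOrLongshot, bool captureOk) {
    if (zslOrLongshot)
        return captureOk ? SmState::PreviewPicTaking : SmState::Previewing;
    return captureOk ? SmState::PicTaking : SmState::PreviewStopped;
}
```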

This in turn calls takePicture() in QCamera2HWI.cpp:

    int QCamera2HardwareInterface::takePicture()
    {
        ... ...
        } else {
            // normal capture case
            // need to stop preview channel
            stopChannel(QCAMERA_CH_TYPE_PREVIEW);
            delChannel(QCAMERA_CH_TYPE_PREVIEW);

            rc = addCaptureChannel();
        }
        ... ...
    }

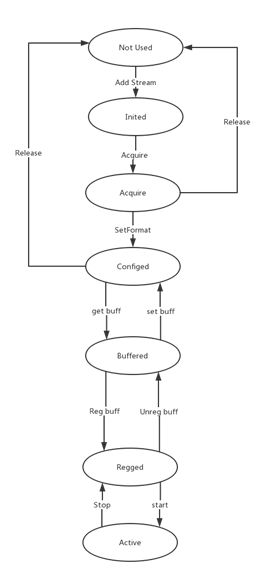

In the normal capture case, it first stops and deletes the preview channel, then calls addCaptureChannel():

    int32_t QCamera2HardwareInterface::addCaptureChannel()
    {
        ... ...
        rc = pChannel->init(&attr,
                            capture_channel_cb_routine,
                            this);
        ... ...
        rc = addStreamToChannel(pChannel, CAM_STREAM_TYPE_METADATA,
                                metadata_stream_cb_routine, this);
        ... ...
        rc = addStreamToChannel(pChannel, CAM_STREAM_TYPE_POSTVIEW,
                                NULL, this);
        ... ...
        rc = addStreamToChannel(pChannel, CAM_STREAM_TYPE_SNAPSHOT,
                                NULL, this);
        ... ...

This method creates and initializes the capture channel and adds the metadata, postview, and snapshot streams to it.
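The channel bookkeeping for a normal capture (stop and delete the preview channel, build a capture channel carrying metadata, postview and snapshot streams, then start it) can be modeled with a small map of channels. Everything in this C++ sketch (ChannelManager, Channel, the enums) is hypothetical; the real QCameraChannel also configures buffers and callbacks.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <vector>

enum class ChType { Preview, Capture };
enum class StreamType { Preview, Metadata, Postview, Snapshot };

struct Channel {
    std::vector<StreamType> streams;
    bool started = false;
};

class ChannelManager {
public:
    std::map<ChType, std::unique_ptr<Channel>> channels;

    Channel* get(ChType t) {
        auto it = channels.find(t);
        return it == channels.end() ? nullptr : it->second.get();
    }
    void stopChannel(ChType t) { if (Channel* c = get(t)) c->started = false; }
    void delChannel(ChType t) { channels.erase(t); }

    // Mirrors addCaptureChannel(): one channel carrying metadata, postview and snapshot.
    void addCaptureChannel() {
        auto ch = std::make_unique<Channel>();
        ch->streams = {StreamType::Metadata, StreamType::Postview, StreamType::Snapshot};
        channels[ChType::Capture] = std::move(ch);
    }

    // Normal (non-ZSL) capture: tear down preview, then build and start capture.
    void normalCapture() {
        stopChannel(ChType::Preview);
        delChannel(ChType::Preview);
        addCaptureChannel();
        get(ChType::Capture)->started = true;
    }
};
```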

Back in takePicture() in hardware/qcom/camera/QCamera2/HAL/QCamera2HWI.cpp, the capture channel is then started:

    rc = m_channels[QCAMERA_CH_TYPE_CAPTURE]->start();

Channel start() was covered in detail in the preview flow and is not analyzed again here.

After that, startAdvancedCapture() in QCameraChannel.cpp is called, followed by process_advanced_capture() in mm_camera_interface.c and mm_camera_channel_advanced_capture() in mm_camera.c; together, these calls switch the current pipeline into capture mode.

4.2.5 JPEG data flow

The stream data is encoded by mm-jpeg-interface, which produces the JPEG image; QCamera2HWI.cpp then handles the JPEG-related events sent from mm-jpeg-interface:

    void QCamera2HardwareInterface::jpegEvtHandle(jpeg_job_status_t status,
                                                  uint32_t /*client_hdl*/,
                                                  uint32_t jobId,
                                                  mm_jpeg_output_t *p_output,
                                                  void *userdata)
    {
        QCamera2HardwareInterface *obj = (QCamera2HardwareInterface *)userdata;
        if (obj) {
            qcamera_jpeg_evt_payload_t *payload =
                (qcamera_jpeg_evt_payload_t *)malloc(sizeof(qcamera_jpeg_evt_payload_t));
            if (NULL != payload) {
                memset(payload, 0, sizeof(qcamera_jpeg_evt_payload_t));
                payload->status = status;
                payload->jobId = jobId;
                if (p_output != NULL) {
                    payload->out_data = *p_output;
                }
                obj->processMTFDumps(payload);
                obj->processEvt(QCAMERA_SM_EVT_JPEG_EVT_NOTIFY, payload);
            }
        } else {
            ALOGE("%s: NULL user_data", __func__);
        }
    }
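jpegEvtHandle() is a C-compatible callback: the JPEG library only knows an opaque void *userdata, and the HAL casts it back to its own object (obj) before doing any work. The C++ sketch below shows that trampoline pattern with a hypothetical FakeJpegEncoder and Hwi class; the callback signature is simplified from the original.

```cpp
#include <cassert>
#include <cstdint>

// Simplified C-style callback type: the library passes back an opaque cookie.
typedef void (*jpeg_evt_cb)(int status, uint32_t jobId, void* userdata);

// Hypothetical "library" that stores the callback + cookie and fires it per job.
struct FakeJpegEncoder {
    jpeg_evt_cb cb = nullptr;
    void* userdata = nullptr;
    void finishJob(int status, uint32_t jobId) {
        if (cb) cb(status, jobId, userdata);
    }
};

class Hwi {
public:
    uint32_t lastJob = 0;
    int lastStatus = -1;

    // Static member: no `this`, so it matches the plain function-pointer type.
    static void jpegEvtHandle(int status, uint32_t jobId, void* userdata) {
        Hwi* obj = static_cast<Hwi*>(userdata);
        if (obj == nullptr) return;  // the original logs "NULL user_data" here
        obj->onJpegEvt(status, jobId);
    }

private:
    void onJpegEvt(int status, uint32_t jobId) {
        lastStatus = status;
        lastJob = jobId;
    }
};
```

Registration is just `enc.cb = &Hwi::jpegEvtHandle; enc.userdata = &hwi;`, the same pairing of function and cookie the HAL hands to the JPEG layer.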

The state machine handles this event:

    case QCAMERA_SM_EVT_JPEG_EVT_NOTIFY:
        {
            qcamera_jpeg_evt_payload_t *jpeg_job =
                (qcamera_jpeg_evt_payload_t *)payload;
            rc = m_parent->processJpegNotify(jpeg_job);
        }
        break;

It then calls back into processJpegNotify() in QCamera2HWI.cpp:

    int32_t QCamera2HardwareInterface::processJpegNotify(qcamera_jpeg_evt_payload_t *jpeg_evt)
    {
        return m_postprocessor.processJpegEvt(jpeg_evt);
    }

This calls processJpegEvt() in QCameraPostProc.cpp:

hardware/qcom/camera/QCamera2/HAL/QCameraPostProc.cpp

    int32_t QCameraPostProcessor::processJpegEvt(qcamera_jpeg_evt_payload_t *evt)
    {
        ... ...
        rc = sendDataNotify(CAMERA_MSG_COMPRESSED_IMAGE,
                            jpeg_mem,
                            0,
                            NULL,
                            &release_data);
        ... ...
        return rc;
    }

processJpegEvt() then calls sendDataNotify():

    int32_t QCameraPostProcessor::sendDataNotify(int32_t msg_type,
                                                 camera_memory_t *data,
                                                 uint8_t index,
                                                 camera_frame_metadata_t *metadata,
                                                 qcamera_release_data_t *release_data)
    {
        qcamera_data_argm_t *data_cb = (qcamera_data_argm_t *)malloc(sizeof(qcamera_data_argm_t));
        if (NULL == data_cb) {
            ALOGE("%s: no mem for acamera_data_argm_t", __func__);
            return NO_MEMORY;
        }
        memset(data_cb, 0, sizeof(qcamera_data_argm_t));
        data_cb->msg_type = msg_type;
        data_cb->data = data;
        data_cb->index = index;
        data_cb->metadata = metadata;
        if (release_data != NULL) {
            data_cb->release_data = *release_data;
        }

        qcamera_callback_argm_t cbArg;
        memset(&cbArg, 0, sizeof(qcamera_callback_argm_t));
        cbArg.cb_type = QCAMERA_DATA_SNAPSHOT_CALLBACK;
        cbArg.msg_type = msg_type;
        cbArg.data = data;
        cbArg.metadata = metadata;
        cbArg.user_data = data_cb;
        cbArg.cookie = this;
        cbArg.release_cb = releaseNotifyData;
        int rc = m_parent->m_cbNotifier.notifyCallback(cbArg);
        if ( NO_ERROR != rc ) {
            ALOGE("%s: Error enqueuing jpeg data into notify queue", __func__);
            releaseNotifyData(data_cb, this, UNKNOWN_ERROR);
            return UNKNOWN_ERROR;
        }

        return rc;
    }

Here the callback argument is filled in and handed to the callback notifier through notifyCallback(), with the message type CAMERA_MSG_COMPRESSED_IMAGE.
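The notifier hand-off pairs each data item with a release callback: the notifier delivers the data upward and then calls release_cb so the post-processor can free its buffers, while an enqueue failure triggers the release immediately (the releaseNotifyData(..., UNKNOWN_ERROR) path). The C++ sketch below models that contract; CallbackArg, CbNotifier and the accepting flag are hypothetical, and the message value is assumed to match system/camera.h.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <utility>

struct CallbackArg {
    int msg_type;
    const char* data;
    std::function<void(int status)> release_cb;
};

class CbNotifier {
public:
    explicit CbNotifier(bool accepting) : accepting_(accepting) {}
    // Returns false (like a non-NO_ERROR rc) when the queue does not accept work.
    bool notifyCallback(CallbackArg arg) {
        if (!accepting_) return false;
        q_.push_back(std::move(arg));
        return true;
    }
    // Notifier-thread side: deliver each item upward, then release it.
    void drain(const std::function<void(int, const char*)>& deliver) {
        while (!q_.empty()) {
            CallbackArg arg = std::move(q_.front());
            q_.pop_front();
            deliver(arg.msg_type, arg.data);
            if (arg.release_cb) arg.release_cb(0);
        }
    }
private:
    bool accepting_;
    std::deque<CallbackArg> q_;
};

constexpr int kMsgCompressedImage = 0x0100;  // CAMERA_MSG_COMPRESSED_IMAGE (value assumed)

// Producer side, shaped like sendDataNotify(): on enqueue failure, release at once.
bool sendDataNotify(CbNotifier& n, const char* jpeg, int* releases) {
    CallbackArg arg{kMsgCompressedImage, jpeg, [releases](int) { ++*releases; }};
    if (!n.notifyCallback(arg)) {  // passed by copy, so arg.release_cb is still valid
        arg.release_cb(-1);
        return false;
    }
    return true;
}
```

Either way each buffer is released exactly once, which is the property the real code relies on.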

From here on, the flow is similar to the preview flow: the data is passed up to the upper layers and, through JNI, reaches the Java-layer callback.