server1:172.25.4.1

server2:172.25.4.2

server3:172.25.4.3

server4:172.25.4.4

Physical host: 172.25.4.250

With a basic MFS (MooseFS) distributed storage setup already working, we now build high availability on top of it.

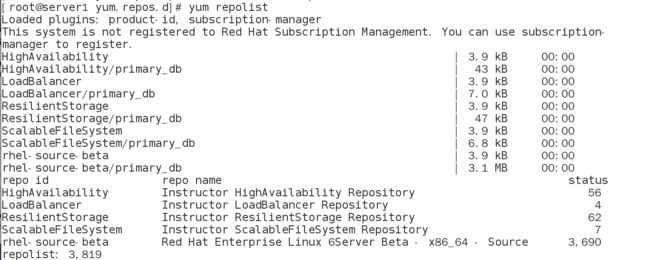

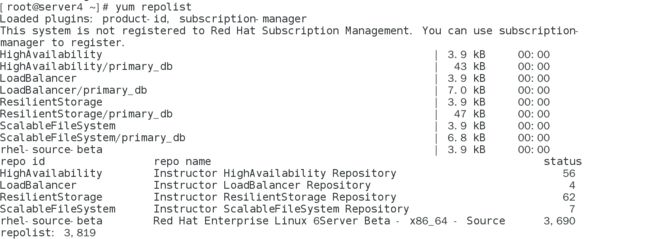

1. Configure the high-availability yum repositories on server1 and server4

[rhel-source-beta]

name=Red Hat Enterprise Linux $releasever Beta - $basearch - Source

baseurl=http://172.25.4.250/rhel6.5

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-beta,file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[HighAvailability]

name=Instructor HighAvailability Repository

baseurl=http://172.25.4.250/rhel6.5/HighAvailability

[LoadBalancer]

name=Instructor LoadBalancer Repository

baseurl=http://172.25.4.250/rhel6.5/LoadBalancer

[ResilientStorage]

name=Instructor ResilientStorage Repository

baseurl=http://172.25.4.250/rhel6.5/ResilientStorage

[ScalableFileSystem]

name=Instructor ScalableFileSystem Repository

baseurl=http://172.25.4.250/rhel6.5/ScalableFileSystem
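The four add-on stanzas above differ only in the repository name, so they can be generated rather than typed by hand on each node. The following is a minimal sketch, not the author's method; the output path `REPO_FILE` is a hypothetical name chosen for illustration, and the base URL is the one used in the stanzas above.

```shell
# Sketch: emit the four add-on repo stanzas from one loop.
# BASE matches the baseurl used above; REPO_FILE is a hypothetical output path.
BASE="http://172.25.4.250/rhel6.5"
REPO_FILE="${REPO_FILE:-./ha-addons.repo}"

: > "$REPO_FILE"    # start with an empty file
for repo in HighAvailability LoadBalancer ResilientStorage ScalableFileSystem; do
    # Each stanza carries only the keys shown in the article: name and baseurl.
    printf '[%s]\nname=Instructor %s Repository\nbaseurl=%s/%s\n\n' \
        "$repo" "$repo" "$BASE" "$repo" >> "$REPO_FILE"
done

cat "$REPO_FILE"
```

After reviewing the generated file, it can be appended to the repo file under /etc/yum.repos.d/ on both nodes, followed by `yum clean all` and `yum repolist` to confirm the repositories are visible.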

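2. Install the components on server1 and server4

yum install -y pacemaker corosync    # installable once the yum repositories above are configured

yum install crmsh-1.2.6-0.rc2.2.1.x86_64.rpm pssh-2.3.1-2.1.x86_64.rpm

These two packages are not in the repositories, so you need to download them yourself.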

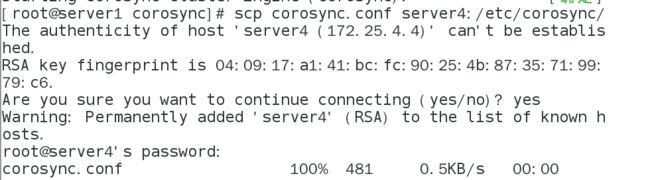

3. Configure the high-availability cluster on server1 (/etc/corosync/corosync.conf)

# Please read the corosync.conf.5 manual page

compatibility: whitetank

totem {

version: 2

secauth: off

threads: 0

interface {

ringnumber: 0

bindnetaddr: 172.25.4.0

mcastaddr: 226.94.1.1

mcastport: 7654

ttl: 1

}

}

logging {

fileline: off

to_stderr: no

to_logfile: yes

to_syslog: yes

logfile: /var/log/cluster/corosync.log

debug: off

timestamp: on

logger_subsys {

subsys: AMF

debug: off

}

}

amf {

mode: disabled

}

service {

name: pacemaker

ver: 0

}

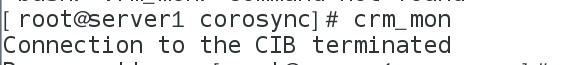
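The `bindnetaddr` value in the configuration above is the network address of the cluster subnet, not the address of a single node. A small sketch of how that value can be derived from a node's IP follows; the netmask 255.255.255.0 is an assumption, since the article does not state it, and `network_address` is a helper name made up for this example.

```shell
# Compute the network address (the value used for bindnetaddr)
# by AND-ing each octet of the IP with the matching netmask octet.
network_address() {
    ip=$1
    mask=$2
    old_ifs=$IFS
    IFS=.
    set -- $ip $mask    # $1..$4 = IP octets, $5..$8 = netmask octets
    IFS=$old_ifs
    echo "$(( $1 & $5 )).$(( $2 & $6 )).$(( $3 & $7 )).$(( $4 & $8 ))"
}

# 255.255.255.0 is an assumed netmask; adjust it to the real network.
network_address 172.25.4.1 255.255.255.0    # prints 172.25.4.0
```

Because server1 (172.25.4.1) and server4 (172.25.4.4) yield the same network address under that netmask, the same corosync.conf can be copied to both nodes unchanged.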

4. Check the cluster monitoring output on server1

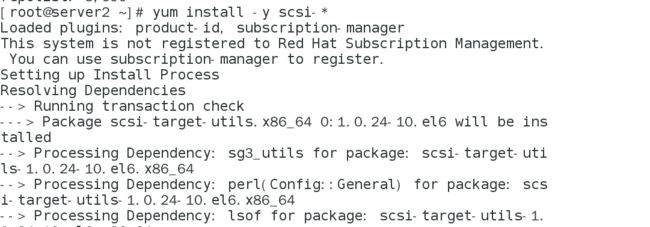

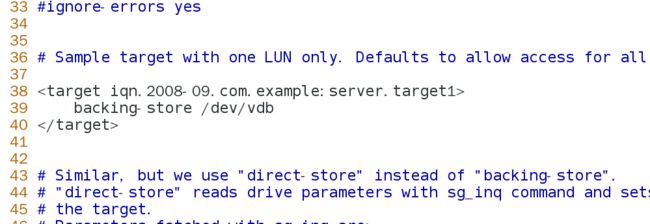

5. Use server2 as the storage node and add an 8 GB disk to it

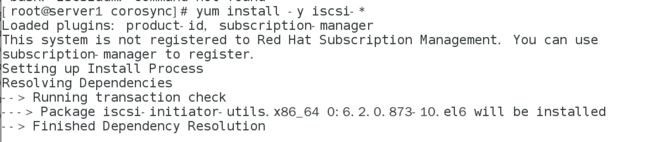

6. Install the iSCSI initiator on server1 and server4 and attach the shared disk

[root@server1 corosync]# iscsiadm -m discovery -t st -p 172.25.4.2

Starting iscsid: [  OK  ]

172.25.4.2:3260,1 iqn.2008-09.com.example:server.target1

[root@server1 corosync]# iscsiadm -m node -l

Logging in to [iface: default, target: iqn.2008-09.com.example:server.target1, portal: 172.25.4.2,3260] (multiple)

Login to [iface: default, target: iqn.2008-09.com.example:server.target1, portal: 172.25.4.2,3260] successful.

[root@server1 corosync]# fdisk -l

Disk /dev/vda: 21.5 GB, 21474836480 bytes

16 heads, 63 sectors/track, 41610 cylinders

Units = cylinders of 1008 * 512 = 516096 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x000ab44a

Device Boot Start End Blocks Id System

/dev/vda1 * 3 1018 512000 83 Linux

Partition 1 does not end on cylinder boundary.

/dev/vda2 1018 41611 20458496 8e Linux LVM

Partition 2 does not end on cylinder boundary.

Disk /dev/mapper/VolGroup-lv_root: 19.9 GB, 19906166784 bytes

255 heads, 63 sectors/track, 2420 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/mapper/VolGroup-lv_swap: 1040 MB, 1040187392 bytes

255 heads, 63 sectors/track, 126 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sda: 8589 MB, 8589934592 bytes

64 heads, 32 sectors/track, 8192 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

[root@server1 corosync]# fdisk -cu /dev/sda

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel with disk identifier 0xa18d4097.

Changes will remain in memory only, until you decide to write them.

After that, of course, the previous content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First sector (2048-16777215, default 2048): p

First sector (2048-16777215, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-16777215, default 16777215):

Using default value 16777215

Command (m for help): p

Disk /dev/sda: 8589 MB, 8589934592 bytes

64 heads, 32 sectors/track, 8192 cylinders, total 16777216 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0xa18d4097

Device Boot Start End Blocks Id System

/dev/sda1 2048 16777215 8387584 83 Linux

Command (m for help): wq

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@server1 corosync]# mkfs.ext4 /dev/sda1

mke2fs 1.41.12 (17-May-2010)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=0 blocks, Stripe width=0 blocks

524288 inodes, 2096896 blocks

104844 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=2147483648

64 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

This filesystem will be automatically checked every 26 mounts or

180 days, whichever comes first. Use tune2fs -c or -i to override.

[root@server4 ~]# iscsiadm -m discovery -t st -p 172.25.4.2

Starting iscsid: [  OK  ]

172.25.4.2:3260,1 iqn.2008-09.com.example:server.target1

[root@server4 ~]# iscsiadm -m node -l

Logging in to [iface: default, target: iqn.2008-09.com.example:server.target1, portal: 172.25.4.2,3260] (multiple)

Login to [iface: default, target: iqn.2008-09.com.example:server.target1, portal: 172.25.4.2,3260] successful.

[root@server4 ~]# fdisk -l

Disk /dev/vda: 21.5 GB, 21474836480 bytes

16 heads, 63 sectors/track, 41610 cylinders

Units = cylinders of 1008 * 512 = 516096 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x000ab44a

Device Boot Start End Blocks Id System

/dev/vda1 * 3 1018 512000 83 Linux

Partition 1 does not end on cylinder boundary.

/dev/vda2 1018 41611 20458496 8e Linux LVM

Partition 2 does not end on cylinder boundary.

Disk /dev/mapper/VolGroup-lv_root: 19.9 GB, 19906166784 bytes

255 heads, 63 sectors/track, 2420 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/mapper/VolGroup-lv_swap: 1040 MB, 1040187392 bytes

255 heads, 63 sectors/track, 126 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sda: 8589 MB, 8589934592 bytes

64 heads, 32 sectors/track, 8192 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0xa18d4097

Device Boot Start End Blocks Id System

/dev/sda1 2 8192 8387584 83 Linux

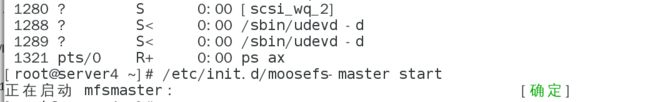

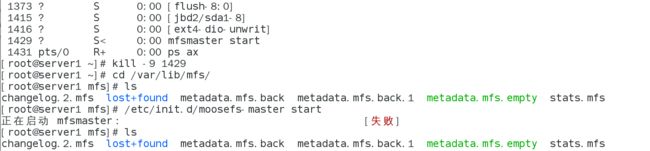
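The transcripts above use `iscsiadm -m node -l`, which logs in to every recorded target. If you would rather script the login against one explicit target, the target name and portal can be pulled out of the discovery line. This is only a sketch: the helper names `target_iqn` and `portal_addr` are made up for illustration, and the command is printed rather than executed.

```shell
# Extract fields from one line of `iscsiadm -m discovery` output.
# Line format, as in the transcript above: "<ip>:<port>,<tpgt> <iqn>"
target_iqn() {
    awk '{ print $2 }'
}

portal_addr() {
    # Drop the ",<tpgt>" suffix from the first field.
    awk '{ split($1, a, ","); print a[1] }'
}

line='172.25.4.2:3260,1 iqn.2008-09.com.example:server.target1'
iqn=$(printf '%s\n' "$line" | target_iqn)
portal=$(printf '%s\n' "$line" | portal_addr)

# Print the login command instead of running it, so the sketch is safe anywhere.
echo "iscsiadm -m node -T $iqn -p $portal -l"
```

Note that the partition and filesystem are created only once, on server1; server4 merely logs in and sees the existing /dev/sda1.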

7. Configure the master on server1: move the metadata onto the shared disk

[root@server1 corosync]# /etc/init.d/moosefs-master status

mfsmaster (pid 1110) is running...

[root@server1 corosync]# /etc/init.d/moosefs-master stop

Stopping mfsmaster: [  OK  ]

[root@server1 corosync]# cd /var/lib/mfs/

[root@server1 mfs]# ls

changelog.2.mfs metadata.mfs metadata.mfs.back.1 metadata.mfs.empty stats.mfs

[root@server1 mfs]# pwd

/var/lib/mfs

[root@server1 mfs]# mount /dev/sda1 /mnt/

[root@server1 mfs]# ls

changelog.2.mfs metadata.mfs metadata.mfs.back.1 metadata.mfs.empty stats.mfs

[root@server1 mfs]# cp -p * /mnt/

[root@server1 mfs]# cd /mnt

[root@server1 mnt]# ls

changelog.2.mfs lost+found metadata.mfs metadata.mfs.back.1 metadata.mfs.empty stats.mfs

[root@server1 mnt]# umount /mnt/

umount: /mnt: device is busy.

(In some cases useful info about processes that use

the device is found by lsof(8) or fuser(1))

[root@server1 mnt]# cd

[root@server1 ~]# umount /mnt/

[root@server1 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1019892 17142460 6% /

tmpfs 510200 37152 473048 8% /dev/shm

/dev/vda1 495844 33480 436764 8% /boot

[root@server1 ~]# mount /dev/sda1 /var/lib/mfs/

[root@server1 ~]# ll -d /var/lib/mfs

drwxr-xr-x 3 root root 4096 Nov 10 21:21 /var/lib/mfs

[root@server1 ~]# chown mfs.mfs /var/lib/mfs/

[root@server1 ~]# ll -d /var/lib/mfs

drwxr-xr-x 3 mfs mfs 4096 Nov 10 21:21 /var/lib/mfs

[root@server1 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1019892 17142460 6% /

tmpfs 510200 37152 473048 8% /dev/shm

/dev/vda1 495844 33480 436764 8% /boot

/dev/sda1 8255928 153096 7683456 2% /var/lib/mfs

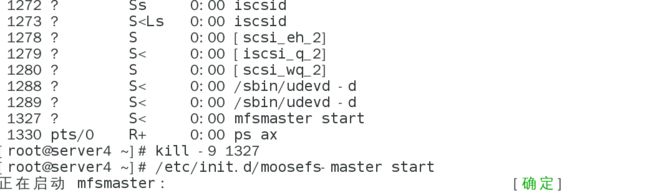
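The step above copies the metadata files to the shared disk with `cp -p` and then mounts that disk over /var/lib/mfs, which hides the original files. Before remounting, it can be worth checking that the copy is complete. The sketch below shows one way to do that; `copy_and_verify` is a hypothetical helper, and the demo runs against throwaway directories standing in for /var/lib/mfs and /mnt.

```shell
# Copy files with attributes preserved, then confirm every source file
# has a byte-identical counterpart in the destination.
copy_and_verify() {
    from=$1
    to=$2
    cp -p "$from"/* "$to"/ || return 1
    for f in "$from"/*; do
        name=${f##*/}    # strip the directory part
        cmp -s "$f" "$to/$name" || { echo "mismatch: $name" >&2; return 1; }
    done
    echo "verified"
}

# Demo with temporary directories in place of /var/lib/mfs and /mnt.
src_dir=$(mktemp -d)
dst_dir=$(mktemp -d)
echo "demo" > "$src_dir/metadata.mfs"
echo "demo" > "$src_dir/changelog.2.mfs"
copy_and_verify "$src_dir" "$dst_dir"
rm -rf "$src_dir" "$dst_dir"
```

As the transcript shows, `umount` fails with "device is busy" while the shell's working directory is inside the mount point, so change out of /mnt first.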

8. Configure server4 as a master in the same way

9. Stop the chunkserver service on server2 and server3

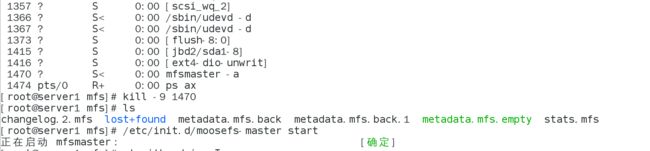

10. Configure server1 and server4 so that the service stays highly available even when the master process is killed outright

[root@server1 mfs]# vim /etc/init.d/moosefs-master    # add the recovery fallback to the start function

start () {

echo -n $"Starting $prog: "

$prog start >/dev/null 2>&1 || $prog -a >/dev/null 2>&1 && success || failure

RETVAL=$?

echo

[ $RETVAL -eq 0 ] && touch /var/lock/subsys/$prog

return $RETVAL

}

[root@server1 mfs]# /etc/init.d/moosefs-master start    # the service now starts successfully

Starting mfsmaster: [ OK ]
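The modified start line has the shape `A || B && success || failure`. In the shell, `&&` and `||` have equal precedence and group left to right, so it reads as `((A || B) && success) || failure`: try a normal start, fall back to `mfsmaster -a` if that fails, and report success if either worked. The sketch below reproduces that control flow with stand-in functions; `normal_start` and `recovery_start` are hypothetical names that only simulate exit codes.

```shell
# Stand-ins for the real commands (hypothetical names):
normal_start()   { return 1; }    # simulates "mfsmaster start" failing after a kill
recovery_start() { return 0; }    # simulates "mfsmaster -a" succeeding

start_with_fallback() {
    # Same shape as the init script line: A || B && success || failure,
    # evaluated as ((A || B) && success) || failure.
    normal_start || recovery_start && echo "success" || echo "failure"
}

start_with_fallback    # prints "success"
```

One side effect of this pattern is that `failure` also runs if `success` itself returns non-zero, which is harmless here but worth knowing when reusing the idiom.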

11. Configure the cluster policy on server1

[root@server1 mfs]# crm

crm(live)# configure

crm(live)configure# show

node server1

node server4

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2"

crm(live)configure# property no-quorum-policy=ignore

crm(live)configure# commit

crm(live)configure# property stonith-enabled=true

crm(live)configure# show

node server1

node server4

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

no-quorum-policy="ignore" \

stonith-enabled="true"

crm(live)configure# commit

crm(live)configure# bye

bye

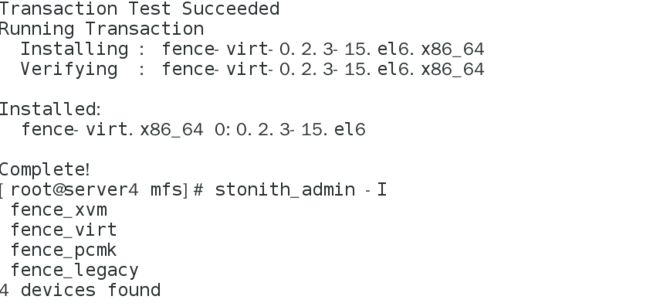
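The `no-quorum-policy=ignore` setting matters because this cluster has only two nodes (`expected-quorum-votes="2"`). Under a simple majority rule, a lone surviving node cannot hold quorum, so without this property the resources would not be taken over after a failure. The arithmetic can be sketched as follows; this is a simplified majority model for illustration, not Pacemaker's actual implementation, and the function names are made up.

```shell
# Majority quorum: more than half of the expected votes.
quorum_needed() {
    echo $(( $1 / 2 + 1 ))
}

has_quorum() {    # usage: has_quorum <expected_votes> <live_votes>
    [ "$2" -ge "$(quorum_needed "$1")" ]
}

expected=2    # expected-quorum-votes from the crm output above
if has_quorum "$expected" 1; then
    echo "one surviving node keeps quorum"
else
    echo "one surviving node loses quorum"
fi
```

With two expected votes the majority threshold is 2, so the sketch prints "one surviving node loses quorum", which is the situation `no-quorum-policy=ignore` works around.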

12. Configure the fence node on server4