Zooming and moving multiple virtual images with OpenCV and MediaPipe

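Contents

I. Zooming and moving a single virtual image

1. Image asset

2. Code:

3. Result

II. Moving multiple virtual images

1. Image assets

2. Code:

3. Result:

III. Zooming multiple images

1. Code:

2. Result:

IV. Zooming and moving multiple images

1. Code:

2. Result: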

Tip: for the cvzone and MediaPipe documentation, see: Hands - mediapipe

一、 单张虚拟图像的缩放和移动

1.图片素材

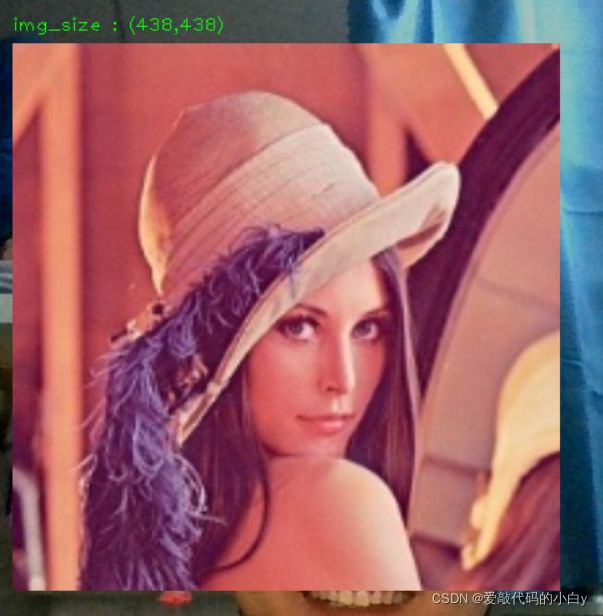

本次使用一张200*200的.jpg图片,

2.代码如下:

import cv2
from cvzone.HandTrackingModule import HandDetector

# Requested capture size in pixels
wWin = 1300
hWin = 720

cap = cv2.VideoCapture(0)
cap.set(cv2.CAP_PROP_FRAME_WIDTH, wWin)
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, hWin)

startDistance = None
scale, cx, cy = 0, 200, 200
detector = HandDetector(maxHands=2, detectionCon=0.8)
# Load the overlay once; each frame resizes a fresh copy of this original
imgOrig = cv2.imread("lena.jpg")

while True:
    ret, img = cap.read()
    if not ret:
        break
    img = cv2.flip(img, flipCode=1)
    # Each hand is a dictionary with the keys "lmList", "bbox", "center" and "type"
    hands, img = detector.findHands(img, draw=True, flipType=False)

    if len(hands) == 2:
        # Zoom gesture: only the index finger is raised on both hands
        fingers1, fingers2 = detector.fingersUp(hands[0]), detector.fingersUp(hands[1])
        print(fingers1, fingers2)
        if fingers1 == [0, 1, 0, 0, 0] and fingers2 == [0, 1, 0, 0, 0]:
            # lmList holds 21 landmarks; 8 is the tip of the index finger.
            # Keep only (x, y); some cvzone versions also store z in each landmark.
            p1, p2 = hands[0]["lmList"][8][:2], hands[1]["lmList"][8][:2]
            length, line_info, img = detector.findDistance(p1, p2, img)
            if startDistance is None:
                startDistance = length
            scale = int((length - startDistance) // 2)
            cx, cy = line_info[-2], line_info[-1]
    else:
        startDistance = None

    # Skip drawing when the resized image is empty or does not fit in the frame
    try:
        h, w, _ = imgOrig.shape
        newH, newW = ((h + scale) // 2) * 2, ((w + scale) // 2) * 2
        img1 = cv2.resize(imgOrig, (newW, newH))  # dsize is (width, height)
        img[cy - newH // 2:cy + newH // 2, cx - newW // 2:cx + newW // 2] = img1
        cv2.putText(img, f"img_size : ({newH},{newW})",
                    (cx - newW // 2, cy - newH // 2 - 10),
                    cv2.FONT_HERSHEY_PLAIN, 1, (0, 255, 0), 1)
    except (ValueError, cv2.error):
        pass

    cv2.imshow("Image", img)
    # waitKey returns an integer key code; press q to quit
    if cv2.waitKey(1) & 0xFF == ord("q"):
        break

cap.release()
cv2.destroyAllWindows()

3. Result

Before zooming:

After zooming:
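The sizing arithmetic used in the loop above can be tried without a camera. The following is a minimal pure-Python sketch; the helper names `fingertip_distance`, `zoomed_size` and `fits_in_frame` are hypothetical and are not part of cvzone or OpenCV, and the distance is assumed to be the plain Euclidean distance between the two index fingertips.

```python
import math


def fingertip_distance(p1, p2):
    """Euclidean distance between two (x, y) points (assumed distance measure)."""
    return math.hypot(p2[0] - p1[0], p2[1] - p1[1])


def zoomed_size(h, w, scale):
    """Add `scale` pixels to each side and round down to an even number."""
    return ((h + scale) // 2) * 2, ((w + scale) // 2) * 2


def fits_in_frame(cx, cy, new_h, new_w, frame_h, frame_w):
    """True if a new_h x new_w image centred on (cx, cy) lies fully inside the frame."""
    top, left = cy - new_h // 2, cx - new_w // 2
    return (new_h > 0 and new_w > 0 and top >= 0 and left >= 0
            and top + new_h <= frame_h and left + new_w <= frame_w)


start = fingertip_distance((400, 300), (600, 300))  # distance when the gesture starts
now = fingertip_distance((350, 300), (650, 300))    # distance a few frames later
scale = int((now - start) // 2)                     # same formula as the loop above
new_h, new_w = zoomed_size(200, 200, scale)
print(new_h, new_w, fits_in_frame(500, 300, new_h, new_w, 720, 1300))
```

Rounding to an even size keeps the two halves `new_h // 2` and `new_w // 2` equal, so the slice written into the frame has exactly the shape of the resized image.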

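II. Moving multiple virtual images

1. Image assets

Start with two .jpg files, then remove their backgrounds with a background-removal tool.

Background-removal tool: Chuangkit (创客贴)

This example uses two 200×200 .jpg images.

After background removal, each image is saved as a .png file.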
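A background-removed PNG carries a fourth (alpha) channel that says how opaque each pixel is, which is why PNG and JPG images are drawn differently. As a conceptual illustration only, here is how one pixel could be blended over the camera frame. `blend_pixel` is a hypothetical helper, not cvzone's actual `overlayPNG` implementation.

```python
def blend_pixel(front_bgra, back_bgr):
    """Blend one BGRA foreground pixel over one BGR background pixel."""
    *front_bgr, alpha = front_bgra
    a = alpha / 255.0  # 0.0 = fully transparent, 1.0 = fully opaque
    return tuple(round(a * f + (1.0 - a) * b) for f, b in zip(front_bgr, back_bgr))


background = (100, 100, 100)
print(blend_pixel((10, 20, 30, 255), background))  # opaque pixel: foreground wins
print(blend_pixel((10, 20, 30, 0), background))    # transparent pixel: background shows
```

A JPG has no alpha channel, so it can only replace a rectangular region of the frame outright.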

2. Code:

import os
import cv2
import cvzone
from cvzone.HandTrackingModule import HandDetector

# Requested capture size in pixels
wWin = 1300
hWin = 720

cap = cv2.VideoCapture(0)
cap.set(cv2.CAP_PROP_FRAME_WIDTH, wWin)
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, hWin)


class DragImage:
    def __init__(self, path, posOrigin, imgType):
        self.path = path
        self.posOrigin = posOrigin  # top-left corner [x, y]
        self.imgType = imgType
        if self.imgType == "png":
            # IMREAD_UNCHANGED keeps the alpha channel
            self.img = cv2.imread(self.path, cv2.IMREAD_UNCHANGED)
        else:
            self.img = cv2.imread(self.path)
        self.size = self.img.shape[:2]  # (height, width)

    def update_oneHand_move(self, cursor):
        ox, oy = self.posOrigin
        h, w = self.size
        # If the cursor is inside the image, re-centre the image on the cursor
        if ox < cursor[0] < ox + w and oy < cursor[1] < oy + h:
            self.posOrigin = cursor[0] - w // 2, cursor[1] - h // 2


path = "G:/2virtual_env python-learning-items/virtualZoom/img"
myList = os.listdir(path)
listImg = []
for index, pathImg in enumerate(myList):
    # Decide the type from the file extension
    imgType = "png" if pathImg.lower().endswith(".png") else "jpg"
    listImg.append(DragImage(f"{path}/{pathImg}", [50 + index * 300, 50], imgType))

detector = HandDetector(maxHands=2, detectionCon=0.8)

while True:
    ret, img = cap.read()
    if not ret:
        break
    img = cv2.flip(img, flipCode=1)
    # Each hand is a dictionary with the keys "lmList", "bbox", "center" and "type"
    hands, img = detector.findHands(img, draw=True, flipType=False)

    # Draw every image; skip one that does not fit inside the frame
    for imgObject in listImg:
        h, w = imgObject.size
        ox, oy = imgObject.posOrigin
        try:
            if imgObject.imgType == "png":
                img = cvzone.overlayPNG(img, imgObject.img, [ox, oy])
            else:
                img[oy:oy + h, ox:ox + w] = imgObject.img
        except (ValueError, cv2.error):
            pass

    # With one hand visible, pinching the index and middle fingertips closer
    # than 60 px over an image drags that image
    if len(hands) == 1:
        lmList = hands[0]["lmList"]
        # Keep only (x, y); some cvzone versions also store z in each landmark
        length, line_info, img = detector.findDistance(lmList[8][:2], lmList[12][:2], img)
        if length < 60:
            # The cursor is the midpoint between the two fingertips
            cv2.circle(img, (line_info[-2], line_info[-1]), 15, (0, 255, 0), cv2.FILLED)
            cursor = line_info[4:]
            for imgObject in listImg:
                imgObject.update_oneHand_move(cursor)

    cv2.imshow("Image", img)
    # waitKey returns an integer key code; press q to quit
    if cv2.waitKey(1) & 0xFF == ord("q"):
        break

cap.release()
cv2.destroyAllWindows()

3. Result:
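In the loop above, every image whose area contains the cursor is re-centred on it, so two overlapping images end up stacked and move together. One possible refinement, sketched here in pure Python under the assumption that later list entries are drawn on top, is to move only the topmost hit. `DragBox`, `is_pinch` and `move_topmost` are hypothetical names introduced for illustration.

```python
PINCH_THRESHOLD = 60  # pixels, the same threshold the loop above uses


def is_pinch(distance):
    """True when the index and middle fingertips are close enough to count as a click."""
    return distance < PINCH_THRESHOLD


class DragBox:
    def __init__(self, origin, size):
        self.origin = list(origin)  # top-left corner [x, y]
        self.size = size            # (height, width)

    def contains(self, cursor):
        (ox, oy), (h, w) = self.origin, self.size
        return ox < cursor[0] < ox + w and oy < cursor[1] < oy + h

    def drag_to(self, cursor):
        """Re-centre on the cursor if it is inside the box; report whether it moved."""
        if not self.contains(cursor):
            return False
        h, w = self.size
        self.origin = [cursor[0] - w // 2, cursor[1] - h // 2]
        return True


def move_topmost(boxes, cursor):
    """Move only the last-drawn box under the cursor and return it, or None."""
    for box in reversed(boxes):
        if box.drag_to(cursor):
            return box
    return None


images = [DragBox((50, 50), (200, 200)), DragBox((150, 50), (200, 200))]
move_topmost(images, (200, 100))
print([im.origin for im in images])  # [[50, 50], [100, 0]]
```

Only the second box moves, although the cursor lies inside both.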

III. Zooming multiple images

1. Code:

import os
import cv2
import cvzone
from cvzone.HandTrackingModule import HandDetector

# Requested capture size in pixels
wWin = 1300
hWin = 720

cap = cv2.VideoCapture(0)
cap.set(cv2.CAP_PROP_FRAME_WIDTH, wWin)
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, hWin)


class DragImage:
    def __init__(self, path, posOrigin, imgType):
        self.path = path
        self.posOrigin = posOrigin  # top-left corner [x, y]
        self.imgType = imgType
        if self.imgType == "png":
            # IMREAD_UNCHANGED keeps the alpha channel
            self.img = cv2.imread(self.path, cv2.IMREAD_UNCHANGED)
        else:
            self.img = cv2.imread(self.path)
        self.imgOrig = self.img  # full-quality source for every resize
        self.size = self.img.shape[:2]  # (height, width)

    def update_twoHand_Zoom(self, scale, cx, cy):
        h, w = self.size
        ox, oy = self.posOrigin
        # Resize only the image that contains the gesture midpoint (cx, cy)
        if ox < cx < ox + w and oy < cy < oy + h:
            newH, newW = ((h + scale) // 2) * 2, ((w + scale) // 2) * 2
            if newH < 2 or newW < 2:
                return
            self.size = newH, newW
            self.posOrigin = [cx - newW // 2, cy - newH // 2]
            self.img = cv2.resize(self.imgOrig, (newW, newH))  # dsize is (width, height)


path = "G:/2virtual_env python-learning-items/virtualZoom/img"
myList = os.listdir(path)
listImg = []
for index, pathImg in enumerate(myList):
    # Decide the type from the file extension
    imgType = "png" if pathImg.lower().endswith(".png") else "jpg"
    listImg.append(DragImage(f"{path}/{pathImg}", [50 + index * 300, 50], imgType))

startDistance = None
scale, cx, cy = 0, 0, 0
detector = HandDetector(maxHands=2, detectionCon=0.8)

while True:
    ret, img = cap.read()
    if not ret:
        break
    img = cv2.flip(img, flipCode=1)
    # Each hand is a dictionary with the keys "lmList", "bbox", "center" and "type"
    hands, img = detector.findHands(img, draw=True, flipType=False)

    zooming = False
    if len(hands) == 2:
        # Zoom gesture: only the index finger is raised on both hands
        fingers1, fingers2 = detector.fingersUp(hands[0]), detector.fingersUp(hands[1])
        print(fingers1, fingers2)
        if fingers1 == [0, 1, 0, 0, 0] and fingers2 == [0, 1, 0, 0, 0]:
            zooming = True
            # lmList holds 21 landmarks; 8 is the tip of the index finger.
            # Keep only (x, y); some cvzone versions also store z in each landmark.
            p1, p2 = hands[0]["lmList"][8][:2], hands[1]["lmList"][8][:2]
            length, line_info, img = detector.findDistance(p1, p2, img)
            if startDistance is None:
                startDistance = length
            # Size change applied per frame while the gesture is held
            scale = int((length - startDistance) // 10)
            cx, cy = line_info[-2], line_info[-1]
    else:
        startDistance = None

    # Draw every image; skip one that does not fit inside the frame
    for imgObject in listImg:
        h, w = imgObject.size
        ox, oy = imgObject.posOrigin
        try:
            if imgObject.imgType == "png":
                img = cvzone.overlayPNG(img, imgObject.img, [ox, oy])
            else:
                img[oy:oy + h, ox:ox + w] = imgObject.img
        except (ValueError, cv2.error):
            continue
        cv2.putText(img, f"img_size : ({h},{w})", (ox, oy - 10),
                    cv2.FONT_HERSHEY_PLAIN, 1, (0, 255, 0), 1)

    # Resize only while the zoom gesture is held
    if zooming:
        for imgObject in listImg:
            imgObject.update_twoHand_Zoom(scale, cx, cy)

    cv2.imshow("Image", img)
    # waitKey returns an integer key code; press q to quit
    if cv2.waitKey(1) & 0xFF == ord("q"):
        break

cap.release()
cv2.destroyAllWindows()

2. Result:
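The zoom gesture in the loop above is a small state machine: the fingertip distance is remembered when the gesture starts, and the scale is derived from how far the distance has drifted since then. A minimal sketch of that state handling follows, with no camera needed. `ZoomGesture` is a hypothetical class, and resetting to a scale of 0 when the gesture is released is an assumption of this sketch, chosen so that images stop resizing once the fingers change.

```python
INDEX_ONLY = [0, 1, 0, 0, 0]  # fingersUp pattern: only the index finger raised


class ZoomGesture:
    """Remembers the distance at gesture start and reports a per-frame scale."""

    def __init__(self, divisor=10):
        self.start = None       # fingertip distance when the gesture began
        self.divisor = divisor  # larger values make zooming slower

    def update(self, fingers_a, fingers_b, distance):
        if fingers_a == INDEX_ONLY and fingers_b == INDEX_ONLY:
            if self.start is None:
                self.start = distance
            return int((distance - self.start) // self.divisor)
        # Gesture released: forget the start distance and stop scaling
        self.start = None
        return 0


gesture = ZoomGesture()
for d in (200.0, 230.0, 260.0):
    print(gesture.update(INDEX_ONLY, INDEX_ONLY, d))
print(gesture.update([1, 1, 1, 1, 1], INDEX_ONLY, 300.0))
```

Because the scale is added to the current size on every frame, it acts like a zoom speed rather than an absolute size, which is why the divisor is larger here than in the single-image version.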

IV. Zooming and moving multiple images

1. Code:

import os
import cv2
import cvzone
from cvzone.HandTrackingModule import HandDetector

# Requested capture size in pixels
wWin = 1300
hWin = 720

cap = cv2.VideoCapture(0)
cap.set(cv2.CAP_PROP_FRAME_WIDTH, wWin)
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, hWin)


class DragImage:
    def __init__(self, path, posOrigin, imgType):
        self.path = path
        self.posOrigin = posOrigin  # top-left corner [x, y]
        self.imgType = imgType
        if self.imgType == "png":
            # IMREAD_UNCHANGED keeps the alpha channel
            self.img = cv2.imread(self.path, cv2.IMREAD_UNCHANGED)
        else:
            self.img = cv2.imread(self.path)
        self.imgOrig = self.img  # full-quality source for every resize
        self.size = self.img.shape[:2]  # (height, width)

    def update_oneHand_move(self, cursor):
        ox, oy = self.posOrigin
        h, w = self.size
        # If the cursor is inside the image, re-centre the image on the cursor
        if ox < cursor[0] < ox + w and oy < cursor[1] < oy + h:
            self.posOrigin = [cursor[0] - w // 2, cursor[1] - h // 2]

    def update_twoHand_Zoom(self, scale, cx, cy):
        h, w = self.size
        ox, oy = self.posOrigin
        # Resize only the image that contains the gesture midpoint (cx, cy)
        if ox < cx < ox + w and oy < cy < oy + h:
            newH, newW = ((h + scale) // 2) * 2, ((w + scale) // 2) * 2
            if newH < 2 or newW < 2:
                return
            self.size = newH, newW
            self.posOrigin = [cx - newW // 2, cy - newH // 2]
            self.img = cv2.resize(self.imgOrig, (newW, newH))  # dsize is (width, height)


path = "G:/2virtual_env python-learning-items/virtualZoom/img"
myList = os.listdir(path)
listImg = []
for index, pathImg in enumerate(myList):
    # Decide the type from the file extension
    imgType = "png" if pathImg.lower().endswith(".png") else "jpg"
    listImg.append(DragImage(f"{path}/{pathImg}", [50 + index * 300, 50], imgType))

startDistance = None
scale, cx, cy = 0, 0, 0
detector = HandDetector(maxHands=2, detectionCon=0.8)

while True:
    ret, img = cap.read()
    if not ret:
        break
    img = cv2.flip(img, flipCode=1)
    # Each hand is a dictionary with the keys "lmList", "bbox", "center" and "type"
    hands, img = detector.findHands(img, draw=True, flipType=False)

    zooming = False
    if len(hands) == 2:
        # Zoom gesture: only the index finger is raised on both hands
        fingers1, fingers2 = detector.fingersUp(hands[0]), detector.fingersUp(hands[1])
        print(fingers1, fingers2)
        if fingers1 == [0, 1, 0, 0, 0] and fingers2 == [0, 1, 0, 0, 0]:
            zooming = True
            # lmList holds 21 landmarks; 8 is the tip of the index finger.
            # Keep only (x, y); some cvzone versions also store z in each landmark.
            p1, p2 = hands[0]["lmList"][8][:2], hands[1]["lmList"][8][:2]
            length, line_info, img = detector.findDistance(p1, p2, img)
            if startDistance is None:
                startDistance = length
            # Size change applied per frame while the gesture is held
            scale = int((length - startDistance) // 10)
            cx, cy = line_info[-2], line_info[-1]
    else:
        startDistance = None
        if len(hands) == 1:
            # Move gesture: index and middle fingertips pinched closer than 60 px
            lmList = hands[0]["lmList"]
            length, line_info, img = detector.findDistance(lmList[8][:2], lmList[12][:2], img)
            if length < 60:
                cv2.circle(img, (line_info[-2], line_info[-1]), 15, (0, 255, 0), cv2.FILLED)
                for imgObject in listImg:
                    imgObject.update_oneHand_move(line_info[4:])

    # Draw every image; skip one that does not fit inside the frame
    for imgObject in listImg:
        h, w = imgObject.size
        ox, oy = imgObject.posOrigin
        try:
            if imgObject.imgType == "png":
                img = cvzone.overlayPNG(img, imgObject.img, [ox, oy])
            else:
                img[oy:oy + h, ox:ox + w] = imgObject.img
        except (ValueError, cv2.error):
            continue
        cv2.putText(img, f"img_size : ({h},{w})", (ox, oy - 10),
                    cv2.FONT_HERSHEY_PLAIN, 1, (0, 255, 0), 1)

    # Resize only while the zoom gesture is held
    if zooming:
        for imgObject in listImg:
            imgObject.update_twoHand_Zoom(scale, cx, cy)

    cv2.imshow("Image", img)
    # waitKey returns an integer key code; press q to quit
    if cv2.waitKey(1) & 0xFF == ord("q"):
        break

cap.release()
cv2.destroyAllWindows()
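Combining the two gestures comes down to choosing one mode per frame from the number of detected hands and their finger states. A minimal sketch of such a dispatcher is shown here, assuming the same conventions as the earlier sections (index finger only on both hands to zoom, an index and middle fingertip pinch under 60 px on one hand to move). `select_mode` is a hypothetical helper, not a cvzone function.

```python
INDEX_ONLY = [0, 1, 0, 0, 0]  # fingersUp pattern: only the index finger raised


def select_mode(fingers_per_hand, pinch_distance=None, pinch_threshold=60):
    """Return "zoom", "move" or "idle" for the current frame.

    fingers_per_hand: one fingersUp-style list per detected hand.
    pinch_distance:   index-to-middle fingertip distance of a single hand, if known.
    """
    if len(fingers_per_hand) == 2 and all(f == INDEX_ONLY for f in fingers_per_hand):
        return "zoom"
    if (len(fingers_per_hand) == 1 and pinch_distance is not None
            and pinch_distance < pinch_threshold):
        return "move"
    return "idle"


print(select_mode([INDEX_ONLY, INDEX_ONLY]))               # zoom
print(select_mode([[0, 1, 1, 0, 0]], pinch_distance=35))   # move
print(select_mode([[0, 1, 1, 0, 0]], pinch_distance=120))  # idle
```

Keeping the decision in one place makes it harder for the two gestures to act on the same frame.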