AI Practice with TensorFlow 2.0, Chapter 5, Part 5: Building an Inception Network with the Bagu Method (Peking University MOOC)

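Chapter 5: Convolutional Neural Network Basics: Building an Inception Network with the Bagu Method

Goal of this lecture:

Walk through the process of building an Inception network with the bagu method (the fixed six-step template used throughout the course). Reference: the course video.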

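Building an Inception network with the bagu method

- 1. Introduction to the Inception network
  - 1.1 Network analysis
- 2. Training the Inception10 network with the six-step method
  - 2.1 Six-step recap
  - 2.2 Complete code
  - 2.3 Output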

1. Introduction to the Inception network

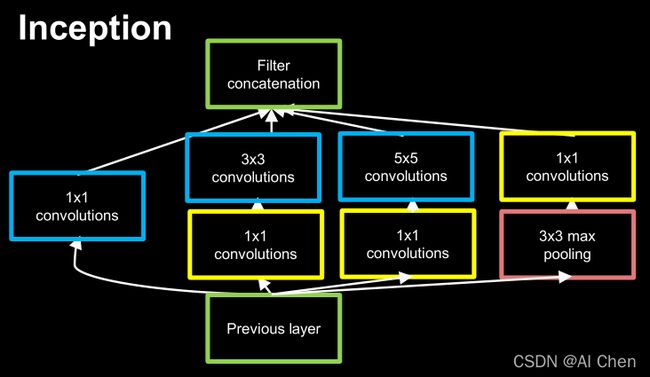

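1.1 Network analysis

Key ideas:

- Use convolution kernels of different sizes within a single layer to improve perception; padding keeps the output feature maps of all branches the same size.
- Introduce 1 x 1 convolution kernels to change the number of output channels, which reduces the number of network parameters.
- Introduce Batch Normalization.
- Replace one 5 x 5 convolution kernel with two 3 x 3 convolution kernels.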
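The parameter savings from 1 x 1 convolutions and from swapping one 5 x 5 kernel for two 3 x 3 kernels can be checked with a little arithmetic. The sketch below is illustrative only: the helper `conv_params` and the channel counts (256 in, 64 in the bottleneck, 128 out, and 64 to 64 for the kernel comparison) are assumptions chosen for the example, not values from the lecture.

```python
def conv_params(k, c_in, c_out, bias=True):
    """Number of weights in a k x k convolution from c_in to c_out channels."""
    return k * k * c_in * c_out + (c_out if bias else 0)

# (a) A direct 5x5 convolution vs. a 1x1 bottleneck followed by the 5x5 convolution.
direct = conv_params(5, 256, 128)
bottleneck = conv_params(1, 256, 64) + conv_params(5, 64, 128)

# (b) One 5x5 convolution vs. two stacked 3x3 convolutions.
#     Both cover a 5x5 receptive field on the input.
one_5x5 = conv_params(5, 64, 64)
two_3x3 = 2 * conv_params(3, 64, 64)

print(direct, bottleneck)   # 819328 221376
print(one_5x5, two_3x3)     # 102464 73856
```

With these assumed sizes the bottleneck version needs roughly a quarter of the weights, and the two 3 x 3 kernels need about 72% of the weights of the single 5 x 5 kernel.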

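InceptionNet, also known as GoogLeNet, was introduced in 2014 (the paper was published in 2015). It aims to improve the network's capability by increasing its width, a different (horizontal) direction compared with VGGNet, which stacks convolutional layers (vertically).

The construction of an InceptionNet model therefore differs from VGGNet and earlier networks: it is no longer a simple vertical stack. To understand the structure of InceptionNet, one first has to understand its basic unit.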

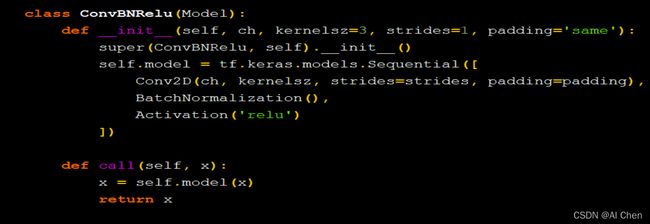

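As the figure shows, the convolutional parts of the InceptionNet basic unit follow a uniform C, B, A pattern, that is, convolution → BN → activation. All activations are ReLU, and the unit also contains a max-pooling operation.

When building an InceptionNet model with Keras in TensorFlow, the C, B, A structure can be wrapped into a new ConvBNRelu class, which reduces the amount of code and makes it easier to read.

All of these sub-modules are then concatenated to form the basic Inception unit. It is likewise defined with a class, a new InceptionBlk class, whose definition appears in the complete code in section 2.2.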
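How the four branches fit together can be sketched by tracing shapes alone, without TensorFlow. The sketch assumes the branch layout of the InceptionBlk class in section 2.2 (three branches that start with a strided 1 x 1 convolution, one branch that starts with a stride-1 max pool) and `'same'` padding everywhere, so each layer maps a spatial size `n` to `ceil(n / stride)`. The helper names `same_pad_out`, `branch_hw` and `inception_block_shape` are hypothetical.

```python
import math

def same_pad_out(size, stride):
    """Spatial size after a 'same'-padded convolution or pooling layer."""
    return math.ceil(size / stride)

def branch_hw(h, w, strides_seq):
    """Apply a sequence of 'same'-padded layers, given only their strides."""
    for s in strides_seq:
        h, w = same_pad_out(h, s), same_pad_out(w, s)
    return h, w

def inception_block_shape(h, w, ch, strides=1):
    """Output (height, width, channels) of one block whose branches each emit ch channels."""
    branches = [
        branch_hw(h, w, [strides]),     # 1x1 conv
        branch_hw(h, w, [strides, 1]),  # 1x1 conv -> 3x3 conv
        branch_hw(h, w, [strides, 1]),  # 1x1 conv -> 5x5 conv
        branch_hw(h, w, [1, strides]),  # 3x3 max pool -> 1x1 conv
    ]
    # Concatenation along the channel axis requires identical height and width.
    assert len(set(branches)) == 1, "branches disagree on spatial size"
    out_h, out_w = branches[0]
    return out_h, out_w, len(branches) * ch

print(inception_block_shape(32, 32, ch=16, strides=1))  # (32, 32, 64)
print(inception_block_shape(32, 32, ch=16, strides=2))  # (16, 16, 64)
```

Because every branch ends with the same height and width, the outputs can be concatenated along the channel axis, and the block emits four times `ch` channels.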

2. Training the Inception10 network with the six-step method

2.1 Six-step recap

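- import: import the required modules
- train, test: load the training set and the test set
- model = tf.keras.Sequential() or class: define the network structure
- model.compile: configure the optimizer, loss and metrics
- model.fit: run the training loop
- model.summary: print the network structure and parameter counts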

2.2 Complete code

import os

import numpy as np
import tensorflow as tf
from matplotlib import pyplot as plt
from tensorflow.keras import Model
from tensorflow.keras.layers import (Conv2D, BatchNormalization, Activation, MaxPool2D,
                                     Dropout, Flatten, Dense, GlobalAveragePooling2D)

# Print arrays in full (no "..." truncation) when the weights are written to a file later
np.set_printoptions(threshold=np.inf)

# Load CIFAR-10 and scale the pixel values to [0, 1]
cifar10 = tf.keras.datasets.cifar10
(x_train, y_train), (x_test, y_test) = cifar10.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

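class ConvBNRelu(Model):
    """Convolution -> BatchNormalization -> ReLU (the C, B, A unit)."""

    def __init__(self, ch, kernelsz=3, strides=1, padding='same'):
        super(ConvBNRelu, self).__init__()
        self.model = tf.keras.models.Sequential([
            Conv2D(ch, kernelsz, strides=strides, padding=padding),
            BatchNormalization(),
            Activation('relu')
        ])

    def call(self, x):
        # With training=False, BN normalizes with its moving mean and variance
        # (running estimates over the training data); with training=True it uses
        # the mean and variance of the current batch. training=False is the mode
        # intended for inference. Note: because the flag is hard-coded here, the
        # moving statistics keep their initial values during fit().
        x = self.model(x, training=False)
        return x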

class InceptionBlk(Model):
    """Basic Inception unit: four parallel branches concatenated along channels."""

    def __init__(self, ch, strides=1):
        super(InceptionBlk, self).__init__()
        self.ch = ch
        self.strides = strides
        # Branch 1: 1x1 conv
        self.c1 = ConvBNRelu(ch, kernelsz=1, strides=strides)
        # Branch 2: 1x1 conv -> 3x3 conv
        self.c2_1 = ConvBNRelu(ch, kernelsz=1, strides=strides)
        self.c2_2 = ConvBNRelu(ch, kernelsz=3, strides=1)
        # Branch 3: 1x1 conv -> 5x5 conv
        self.c3_1 = ConvBNRelu(ch, kernelsz=1, strides=strides)
        self.c3_2 = ConvBNRelu(ch, kernelsz=5, strides=1)
        # Branch 4: 3x3 max pool -> 1x1 conv
        self.p4_1 = MaxPool2D(3, strides=1, padding='same')
        self.c4_2 = ConvBNRelu(ch, kernelsz=1, strides=strides)

    def call(self, x):
        x1 = self.c1(x)
        x2_1 = self.c2_1(x)
        x2_2 = self.c2_2(x2_1)
        x3_1 = self.c3_1(x)
        x3_2 = self.c3_2(x3_1)
        x4_1 = self.p4_1(x)
        x4_2 = self.c4_2(x4_1)
        # Concatenate along the channel axis (axis=3 for NHWC tensors)
        x = tf.concat([x1, x2_2, x3_2, x4_2], axis=3)
        return x

class Inception10(Model):
    def __init__(self, num_blocks, num_classes, init_ch=16, **kwargs):
        super(Inception10, self).__init__(**kwargs)
        self.in_channels = init_ch
        self.out_channels = init_ch
        self.num_blocks = num_blocks
        self.init_ch = init_ch
        self.c1 = ConvBNRelu(init_ch)
        self.blocks = tf.keras.models.Sequential()
        for block_id in range(num_blocks):
            for layer_id in range(2):
                # The first unit of each block downsamples (strides=2); the second does not
                if layer_id == 0:
                    block = InceptionBlk(self.out_channels, strides=2)
                else:
                    block = InceptionBlk(self.out_channels, strides=1)
                self.blocks.add(block)
            # Double out_channels after each block
            self.out_channels *= 2
        self.p1 = GlobalAveragePooling2D()
        self.f1 = Dense(num_classes, activation='softmax')

    def call(self, x):
        x = self.c1(x)
        x = self.blocks(x)
        x = self.p1(x)
        y = self.f1(x)
        return y

model = Inception10(num_blocks=2, num_classes=10)

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

# Resume from a saved checkpoint if one exists
checkpoint_save_path = "./checkpoint/Inception10.ckpt"
if os.path.exists(checkpoint_save_path + '.index'):
    print('-------------load the model-----------------')
    model.load_weights(checkpoint_save_path)

# Save only the weights, and only when the monitored validation loss improves
cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,
                                                 save_weights_only=True,
                                                 save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5,
                    validation_data=(x_test, y_test), validation_freq=1,
                    callbacks=[cp_callback])
model.summary()

# print(model.trainable_variables)
# Write the name, shape and values of every trainable variable to a text file
with open('./weights.txt', 'w') as file:
    for v in model.trainable_variables:
        file.write(str(v.name) + '\n')
        file.write(str(v.shape) + '\n')
        file.write(str(v.numpy()) + '\n')

############################################### show ###############################################

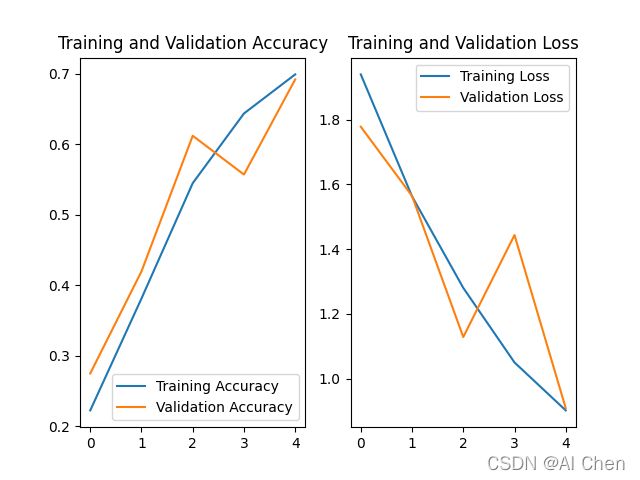

# Plot the accuracy and loss curves for the training and validation sets
acc = history.history['sparse_categorical_accuracy']
val_acc = history.history['val_sparse_categorical_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']

plt.subplot(1, 2, 1)
plt.plot(acc, label='Training Accuracy')
plt.plot(val_acc, label='Validation Accuracy')
plt.title('Training and Validation Accuracy')
plt.legend()

plt.subplot(1, 2, 2)
plt.plot(loss, label='Training Loss')
plt.plot(val_loss, label='Validation Loss')
plt.title('Training and Validation Loss')
plt.legend()
plt.show()
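To see what the loop in `Inception10.__init__` builds, it can be replayed in plain Python, tracking only the spatial size and channel count after each stage. This is a sketch under stated assumptions: 32 x 32 CIFAR-10 inputs, `'same'` padding (size becomes `ceil(size / stride)`), and four branches of `out_channels` channels per InceptionBlk. The function name `trace_inception10` and the stage labels are hypothetical.

```python
import math

def trace_inception10(num_blocks=2, init_ch=16, size=32):
    """Replay Inception10.__init__ and return (stage, spatial size, channels) per stage."""
    stages = [("c1", size, init_ch)]              # 3x3 ConvBNRelu, stride 1
    out_channels = init_ch
    for block_id in range(num_blocks):
        for layer_id in range(2):
            strides = 2 if layer_id == 0 else 1   # first unit of each block downsamples
            size = math.ceil(size / strides)      # 'same' padding
            # Four concatenated branches, each with out_channels channels
            stages.append((f"block{block_id}_{layer_id}", size, 4 * out_channels))
        out_channels *= 2                         # doubled after each block
    stages.append(("global_avg_pool", 1, stages[-1][2]))
    return stages

for name, hw, channels in trace_inception10():
    print(f"{name:16s} {hw:2d} x {hw:2d} x {channels}")
```

With the settings used above (`num_blocks=2`, `init_ch=16`), the trace ends with a 128-element vector after global average pooling, which is what the final 10-way Dense layer receives.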

2.3 Output

Epoch 1/5
This message will be only logged once.
1/1563 [..............................] - ETA: 0s - loss: 3.3310 - sparse_categorical_accuracy: 0.0625
WARNING:tensorflow:Callbacks method `on_train_batch_end` is slow compared to the batch time (batch time: 0.0087s vs `on_train_batch_end` time: 0.0140s). Check your callbacks.
1563/1563 [==============================] - 43s 27ms/step - loss: 1.9394 - sparse_categorical_accuracy: 0.2222 - val_loss: 1.7778 - val_sparse_categorical_accuracy: 0.2746
Epoch 2/5
1563/1563 [==============================] - 34s 22ms/step - loss: 1.5630 - sparse_categorical_accuracy: 0.3810 - val_loss: 1.5641 - val_sparse_categorical_accuracy: 0.4195
Epoch 3/5
1563/1563 [==============================] - 34s 22ms/step - loss: 1.2803 - sparse_categorical_accuracy: 0.5446 - val_loss: 1.1285 - val_sparse_categorical_accuracy: 0.6119
Epoch 4/5
1563/1563 [==============================] - 35s 22ms/step - loss: 1.0500 - sparse_categorical_accuracy: 0.6436 - val_loss: 1.4434 - val_sparse_categorical_accuracy: 0.5569
Epoch 5/5
1563/1563 [==============================] - 34s 22ms/step - loss: 0.9020 - sparse_categorical_accuracy: 0.6990 - val_loss: 0.9089 - val_sparse_categorical_accuracy: 0.6919

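Note that the summary below was printed for a model named "vg_g16": its 13 convolutional layers and 3 dense layers are those of a VGG16-style network, not of the Inception10 model defined in section 2.2.

Model: "vg_g16"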

| Layer (type) | Output Shape | Param # |
| --- | --- | --- |
| conv2d (Conv2D) | multiple | 1792 |
| batch_normalization (BatchNo | multiple | 256 |
| activation (Activation) | multiple | 0 |
| conv2d_1 (Conv2D) | multiple | 36928 |
| batch_normalization_1 (Batch | multiple | 256 |
| activation_1 (Activation) | multiple | 0 |
| max_pooling2d (MaxPooling2D) | multiple | 0 |
| dropout (Dropout) | multiple | 0 |
| conv2d_2 (Conv2D) | multiple | 73856 |
| batch_normalization_2 (Batch | multiple | 512 |
| activation_2 (Activation) | multiple | 0 |
| conv2d_3 (Conv2D) | multiple | 147584 |
| batch_normalization_3 (Batch | multiple | 512 |
| activation_3 (Activation) | multiple | 0 |
| max_pooling2d_1 (MaxPooling2 | multiple | 0 |
| dropout_1 (Dropout) | multiple | 0 |
| conv2d_4 (Conv2D) | multiple | 295168 |
| batch_normalization_4 (Batch | multiple | 1024 |
| activation_4 (Activation) | multiple | 0 |
| conv2d_5 (Conv2D) | multiple | 590080 |
| batch_normalization_5 (Batch | multiple | 1024 |
| activation_5 (Activation) | multiple | 0 |
| conv2d_6 (Conv2D) | multiple | 590080 |
| batch_normalization_6 (Batch | multiple | 1024 |
| activation_6 (Activation) | multiple | 0 |
| max_pooling2d_2 (MaxPooling2 | multiple | 0 |
| dropout_2 (Dropout) | multiple | 0 |
| conv2d_7 (Conv2D) | multiple | 1180160 |
| batch_normalization_7 (Batch | multiple | 2048 |
| activation_7 (Activation) | multiple | 0 |
| conv2d_8 (Conv2D) | multiple | 2359808 |
| batch_normalization_8 (Batch | multiple | 2048 |
| activation_8 (Activation) | multiple | 0 |
| conv2d_9 (Conv2D) | multiple | 2359808 |
| batch_normalization_9 (Batch | multiple | 2048 |
| activation_9 (Activation) | multiple | 0 |
| max_pooling2d_3 (MaxPooling2 | multiple | 0 |
| dropout_3 (Dropout) | multiple | 0 |
| conv2d_10 (Conv2D) | multiple | 2359808 |
| batch_normalization_10 (Batc | multiple | 2048 |
| activation_10 (Activation) | multiple | 0 |
| conv2d_11 (Conv2D) | multiple | 2359808 |
| batch_normalization_11 (Batc | multiple | 2048 |
| activation_11 (Activation) | multiple | 0 |
| conv2d_12 (Conv2D) | multiple | 2359808 |
| batch_normalization_12 (Batc | multiple | 2048 |
| activation_12 (Activation) | multiple | 0 |
| max_pooling2d_4 (MaxPooling2 | multiple | 0 |
| dropout_4 (Dropout) | multiple | 0 |
| flatten (Flatten) | multiple | 0 |
| dense (Dense) | multiple | 262656 |
| dropout_5 (Dropout) | multiple | 0 |
| dense_1 (Dense) | multiple | 262656 |
| dropout_6 (Dropout) | multiple | 0 |
| dense_2 (Dense) | multiple | 5130 |

Total params: 15,262,026

Trainable params: 15,253,578

Non-trainable params: 8,448
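The "Param #" column of a Keras summary can be reproduced by hand, which is a useful sanity check when reading such a table. The sketch below recomputes a few rows of the summary above. The layer settings are assumptions inferred from the counts rather than stated in the output (3 x 3 kernels with bias, a 3-channel input, 64 filters in the first two convolutions, 512-unit dense layers, 10 classes), and the helper names are hypothetical.

```python
def conv2d_params(kernel, c_in, c_out):
    """Weights of a kernel x kernel convolution plus one bias per output channel."""
    return kernel * kernel * c_in * c_out + c_out

def batchnorm_params(channels):
    """gamma and beta (trainable) plus moving mean and variance (non-trainable)."""
    return 4 * channels

def dense_params(n_in, n_out):
    """Weight matrix plus one bias per output unit."""
    return n_in * n_out + n_out

print(conv2d_params(3, 3, 64))    # 1792   (conv2d)
print(conv2d_params(3, 64, 64))   # 36928  (conv2d_1)
print(batchnorm_params(64))       # 256    (batch_normalization)
print(dense_params(512, 512))     # 262656 (dense)
print(dense_params(512, 10))      # 5130   (dense_2)
```

The same reasoning explains the non-trainable total: only the moving mean and variance of the BatchNormalization layers are non-trainable, which is half of their 16,896 parameters, or 8,448.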