k8s + GitLab + Jenkins + Harbor (李作强)

Harbor, an enterprise-grade private image registry (on a dedicated machine or on a node):

# Installation guide on GitHub

https://github.com/goharbor/harbor/blob/master/docs/installation_guide.md

# Download page for the installer packages

https://github.com/goharbor/harbor/releases

# TLS certificate configuration guide on GitHub

https://github.com/goharbor/harbor/blob/master/docs/configure_https.md

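Install Docker:

wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo  # download the docker-ce yum repo file

yum repolist  # refresh the list of yum repositories

yum -y install docker-ce  # install Docker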

Install docker-compose:

curl -L "https://github.com/docker/compose/releases/download/1.18.0/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

# Make docker-compose executable:

chmod +x /usr/local/bin/docker-compose

mv /usr/local/bin/docker-compose /usr/bin/

# Restart Docker and enable it at boot:

systemctl restart docker

systemctl enable docker

Install Harbor:

wget https://storage.googleapis.com/harbor-releases/release-1.4.0/harbor-offline-installer-v1.4.0.tgz

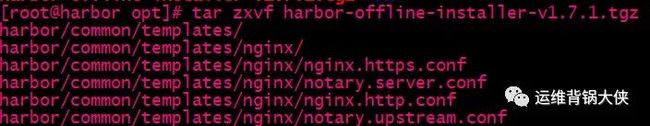

tar zxvf harbor-offline-installer-v1.4.0.tgz

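Configure certificates:

# Create the root (CA) certificate:

mkdir -p /opt/harbor/ssl && cd /opt/harbor/ssl

openssl req -newkey rsa:4096 -nodes -sha256 -keyout ca.key -x509 -days 365 -out ca.crt

# Generate a certificate signing request (qiang.com is a made-up domain; use your real domain in production, and enter reg.qiang.com as the Common Name when prompted):

openssl req -newkey rsa:4096 -nodes -sha256 -keyout reg.qiang.com.key -out reg.qiang.com.csr

# Issue (sign) the certificate: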

openssl x509 -req -days 365 -in reg.qiang.com.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out reg.qiang.com.crt
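Docker clients built with Go 1.15 or later no longer treat the CN as a hostname when no subjectAltName is present. For that reason, the Harbor HTTPS guide linked above signs the server certificate with a subjectAltName extension. The sketch below shows that variant and assumes the file names used above. `write_san_ext` is a hypothetical helper, and its fields mirror the v3.ext file from the Harbor guide.

```bash
#!/usr/bin/env bash
# Sketch: write an openssl x509 extension file carrying a subjectAltName,
# so newer Docker clients (which ignore the CN) accept the certificate.
# write_san_ext is a hypothetical helper name.

# write_san_ext DOMAIN FILE : write the extension file for DOMAIN to FILE
write_san_ext() {
  local domain="$1" file="$2"
  cat > "$file" <<EOF
authorityKeyIdentifier=keyid,issuer
basicConstraints=CA:FALSE
keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment
extendedKeyUsage = serverAuth
subjectAltName = @alt_names

[alt_names]
DNS.1=${domain}
EOF
}

# Usage (in /opt/harbor/ssl, instead of the plain signing command above):
#   write_san_ext reg.qiang.com v3.ext
#   openssl x509 -req -days 365 -in reg.qiang.com.csr -CA ca.crt -CAkey ca.key \
#     -CAcreateserial -extfile v3.ext -out reg.qiang.com.crt
```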

# Create the certificate directory:

mkdir -p /data/cert/

cp reg.qiang.* /data/cert/

vi harbor.cfg  # edit the configuration file

# In the configuration file, change the admin password (harbor_admin_password):
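The same harbor.cfg edits can be made non-interactively with sed. This sketch assumes the `key = value` layout of the Harbor 1.x template. The key names in the usage lines (`hostname`, `ui_url_protocol`, `ssl_cert`, `ssl_cert_key`, `harbor_admin_password`) are assumed to match the v1.4 harbor.cfg, so check them against your copy. `set_harbor_cfg` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: set "key = value" entries in a Harbor 1.x harbor.cfg.
# Assumes each key already exists on its own "key = value" line;
# set_harbor_cfg is a hypothetical helper name.

# set_harbor_cfg KEY VALUE FILE : replace the existing KEY line in FILE
set_harbor_cfg() {
  local key="$1" value="$2" file="$3" esc
  # Escape |, & and \ because they are special in the sed replacement below.
  esc=$(printf '%s' "$value" | sed 's/[|&\\]/\\&/g')
  sed -i -E "s|^${key}[[:space:]]*=.*|${key} = ${esc}|" "$file"
}

# Usage (in the extracted harbor/ directory, before ./prepare):
#   set_harbor_cfg hostname reg.qiang.com harbor.cfg
#   set_harbor_cfg ui_url_protocol https harbor.cfg
#   set_harbor_cfg ssl_cert /data/cert/reg.qiang.com.crt harbor.cfg
#   set_harbor_cfg ssl_cert_key /data/cert/reg.qiang.com.key harbor.cfg
#   set_harbor_cfg harbor_admin_password 'YourPassword' harbor.cfg
```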

# Generate the configuration:

./prepare

# Install Harbor (this loads the images and starts the containers):

./install.sh

# Check the status of the Harbor containers:

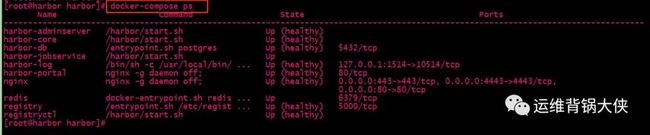

docker-compose ps

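docker-compose up -d  # start Harbor again after a reboot (run in the harbor directory)

# Test access:

https://<IP or domain>  (access URL)

Username: admin  (the default username)

Password: Harbor12345  (the value of harbor_admin_password in harbor.cfg; Harbor12345 is the default)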

# 在各个node节点上执行域名解析的操作:

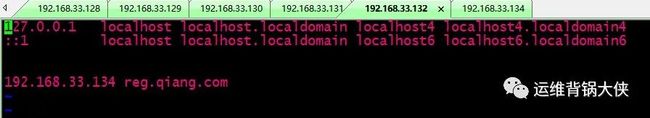

vi /etc/hosts

# 保证是能ping通的域名:

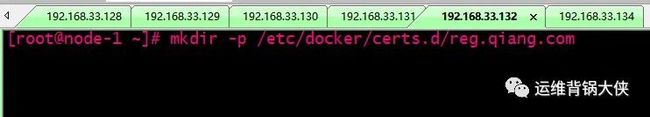

# 在各个node节点上创建此目录:

mkdir -p /etc/docker/certs.d/reg.qiang.com

# 在各个node节点上执行此命令,把证书拉取到节点上:

scp <user>@<harbor-host>:/opt/harbor/ssl/reg.qiang.com.crt /etc/docker/certs.d/reg.qiang.com/

# On a node, log in to the private registry:

docker login reg.qiang.com

# If the login fails, add this option to the Docker daemon (dockerd) startup options:

--insecure-registry reg.qiang.com
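`--insecure-registry` is a dockerd option. Its equivalent in /etc/docker/daemon.json is the `insecure-registries` key, as in the sketch below. The sketch assumes the node has no daemon.json yet, because the helper overwrites the file. `write_insecure_registry` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: daemon.json equivalent of dockerd's --insecure-registry flag.
# WARNING: overwrites FILE; merge by hand if a daemon.json already exists.
# write_insecure_registry is a hypothetical helper name.

# write_insecure_registry REGISTRY FILE
write_insecure_registry() {
  local registry="$1" file="$2"
  mkdir -p "$(dirname "$file")"
  cat > "$file" <<EOF
{
  "insecure-registries": ["${registry}"]
}
EOF
}

# Usage on each node:
#   write_insecure_registry reg.qiang.com /etc/docker/daemon.json
#   systemctl restart docker
```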

# Push a Docker image to the private registry:

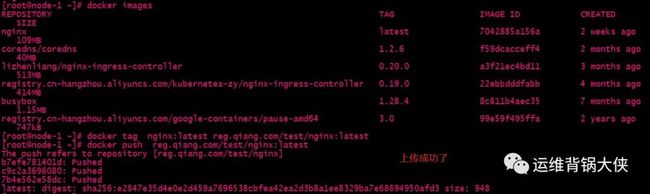

docker tag nginx:latest reg.qiang.com/test/nginx:latest  # tag the image to push; the name must start with <registry domain>/<Harbor project>/, here reg.qiang.com/test/
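Harbor treats the first path component after the registry domain as the project, and that project (here `test`) must already exist in Harbor. Building the reference in one place avoids typos such as the registry name. A small sketch follows. `harbor_ref` is a hypothetical helper, and the `latest` default tag is an assumption.

```bash
#!/usr/bin/env bash
# Sketch: build the <registry>/<project>/<image>:<tag> reference Harbor expects.
# harbor_ref is a hypothetical helper; TAG defaults to "latest".

# harbor_ref REGISTRY PROJECT IMAGE [TAG]
harbor_ref() {
  local registry="$1" project="$2" image="$3" tag="${4:-latest}"
  printf '%s/%s/%s:%s\n' "$registry" "$project" "$image" "$tag"
}

# Usage: tag and push a local image into the "test" project
#   ref=$(harbor_ref reg.qiang.com test nginx)
#   docker tag nginx:latest "$ref" && docker push "$ref"
```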

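# Push it to the registry:

docker push reg.qiang.com/test/nginx:latest

# The Harbor web UI explains how to push images; just follow it:

# The image is now in the registry:

# View the image details:

# Pull the image (the registry UI also shows the pull command):

docker pull reg.qiang.com/test/nginx:latest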

-----------------------------------------------------------------------------------------

Kubernetes installation:

https://github.com/linchqd/  (all of the k8s (DevOps) files can be downloaded from here)

# Kubernetes environment:

Three masters, made highly available with keepalived + haproxy:

192.168.0.11 k8s-master-1.cqt.com k8s-master-1

192.168.0.12 k8s-master-2.cqt.com k8s-master-2

192.168.0.13 k8s-master-3.cqt.com k8s-master-3

Three worker nodes:

192.168.0.21 k8s-node-1.cqt.com k8s-node-1

192.168.0.22 k8s-node-2.cqt.com k8s-node-2

192.168.0.23 k8s-node-3.cqt.com k8s-node-3

keepalived provides the VIP 192.168.0.15 for the api-server, and haproxy listens on port 8443 to load-balance the api-server.
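The `ssh-copy-id k8s-master-1` commands below only work if every hostname resolves on the machine running them. The sketch below prints /etc/hosts entries for the six machines listed above and assumes no DNS is available for them. `cluster_hosts` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: print /etc/hosts entries for the cluster described above.
# cluster_hosts is a hypothetical helper; adjust IPs and names to your setup.

cluster_hosts() {
  local ip name
  while read -r ip name; do
    printf '%s %s.cqt.com %s\n' "$ip" "$name" "$name"
  done <<'EOF'
192.168.0.11 k8s-master-1
192.168.0.12 k8s-master-2
192.168.0.13 k8s-master-3
192.168.0.21 k8s-node-1
192.168.0.22 k8s-node-2
192.168.0.23 k8s-node-3
EOF
}

# Usage on every machine (append only once):
#   cluster_hosts >> /etc/hosts
```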

# Set the hostnames (run the matching command on each of the 6 machines):

hostnamectl set-hostname k8s-master-1

hostnamectl set-hostname k8s-master-2

hostnamectl set-hostname k8s-master-3

hostnamectl set-hostname k8s-node-1

hostnamectl set-hostname k8s-node-2

hostnamectl set-hostname k8s-node-3

Passwordless SSH login (run on k8s-node-3):

ssh-keygen  # press Enter at every prompt

ssh-copy-id k8s-master-1

ssh-copy-id k8s-master-2

ssh-copy-id k8s-master-3

ssh-copy-id k8s-node-1

ssh-copy-id k8s-node-2

# Install the SaltStack repository and key:

yum install https://repo.saltstack.com/yum/redhat/salt-repo-latest.el7.noarch.rpm -y

yum clean expire-cache -y

# Install salt-minion, salt-master or other Salt components:

yum install salt-master -y

yum install salt-minion -y

yum install salt-ssh -y

yum install salt-syndic -y

yum install salt-cloud -y

yum install salt-api -y

# (Upgrades only) Restart all upgraded services, for example:

systemctl restart salt-minion

systemctl restart salt-master.service

systemctl restart salt-api.service

systemctl restart salt-syndic.service

# Enable at boot:

systemctl enable salt-minion

systemctl enable salt-master.service

systemctl enable salt-api.service

systemctl enable salt-syndic.service

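# Deployment: prepare a salt master, install git and download the source:

yum -y install git  # install git

mkdir -p /srv/salt/modules/ && cd /srv/salt/modules && git clone https://github.com/linchqd/k8s.git

Copy the "roster" file from the cloned repository to /etc/salt, copy pillar to /srv, and adjust the node information in pillar as needed. For example, change the network interface name from eth0 to ens33: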

sed -i "s/eth0/ens33/" k8s-master-1.sls

sed -i "s/eth0/ens33/" k8s-master-2.sls

sed -i "s/eth0/ens33/" k8s-master-3.sls

sed -i "s/eth0/ens33/" k8s-node-1.sls

sed -i "s/eth0/ens33/" k8s-node-2.sls

sed -i "s/eth0/ens33/" k8s-node-3.sls
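The six sed calls above can also be written as one loop. In this sketch `rename_iface` is a hypothetical helper. Unlike the original commands, it adds `\b` word boundaries, so `eth01` is left alone, and the `g` flag, so every occurrence on a line is replaced.

```bash
#!/usr/bin/env bash
# Sketch: replace a network interface name in several pillar files.
# rename_iface is a hypothetical helper; requires GNU sed for \b and -i.

# rename_iface OLD NEW FILE... : replace interface OLD with NEW in each FILE
rename_iface() {
  local old="$1" new="$2" f
  shift 2
  for f in "$@"; do
    # \b stops e.g. "eth01" from being rewritten when OLD is "eth0"
    sed -i "s/\b${old}\b/${new}/g" "$f"
  done
}

# Usage, in the pillar directory:
#   rename_iface eth0 ens33 k8s-master-1.sls k8s-master-2.sls k8s-master-3.sls \
#     k8s-node-1.sls k8s-node-2.sls k8s-node-3.sls
```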

# Update the keepalived configuration:

sed -i "s/eth0/ens33/" /srv/salt/modules/k8s/api-server/files/keepalived.conf

# Update the SSH login information of each node:

vim /srv/salt/modules/k8s/roster

# Copy the files to their expected locations:

cp roster /etc/salt && cp -r pillar /srv/

# Adjust the files under k8s/master, k8s/roster and k8s/pillar/ to your environment and configure salt, then download the kubernetes-1.11.1 server binaries:

cd /tmp/ && wget https://dl.k8s.io/v1.11.1/kubernetes-server-linux-amd64.tar.gz && tar zxf kubernetes-server-linux-amd64.tar.gz

# After extracting, move the executables under kubernetes/server/bin to /srv/salt/modules/k8s/requirements/files/:

mv /tmp/kubernetes/server/bin/{apiextensions-apiserver,kube-controller-manager,cloud-controller-manager,hyperkube,kubeadm,kube-apiserver,kubectl,kubelet,kube-proxy,kube-scheduler,mounter} /srv/salt/modules/k8s/requirements/files/

Master deployment

# Create the cluster CA:

cd /srv/salt/modules/k8s/ca-build/files/ && /srv/salt/modules/k8s/requirements/files/cfssl gencert -initca /srv/salt/modules/k8s/ca-build/files/ca-csr.json | /srv/salt/modules/k8s/requirements/files/cfssljson -bare ca

# Create the cluster admin certificate and key:

cd /srv/salt/modules/k8s/kubectl/files/ && /srv/salt/modules/k8s/requirements/files/cfssl gencert -ca=/srv/salt/modules/k8s/ca-build/files/ca.pem -ca-key=/srv/salt/modules/k8s/ca-build/files/ca-key.pem -config=/srv/salt/modules/k8s/ca-build/files/ca-config.json -profile=kubernetes admin-csr.json | /srv/salt/modules/k8s/requirements/files/cfssljson -bare admin

# Deploy the etcd cluster: edit /srv/salt/modules/k8s/etcd/files/etcd-csr.json and set the IPs in hosts to the IPs of the etcd cluster nodes:

cd /srv/salt/modules/k8s/etcd/files/ && /srv/salt/modules/k8s/requirements/files/cfssl gencert -ca=/srv/salt/modules/k8s/ca-build/files/ca.pem -ca-key=/srv/salt/modules/k8s/ca-build/files/ca-key.pem -config=/srv/salt/modules/k8s/ca-build/files/ca-config.json -profile=kubernetes etcd-csr.json | /srv/salt/modules/k8s/requirements/files/cfssljson -bare etcd

# Deploy flanneld (generate its certificate):

cd /srv/salt/modules/k8s/flanneld/files/ && /srv/salt/modules/k8s/requirements/files/cfssl gencert -ca=/srv/salt/modules/k8s/ca-build/files/ca.pem -ca-key=/srv/salt/modules/k8s/ca-build/files/ca-key.pem -config=/srv/salt/modules/k8s/ca-build/files/ca-config.json -profile=kubernetes flanneld-csr.json | /srv/salt/modules/k8s/requirements/files/cfssljson -bare flanneld

# Deploy the api-server: edit /srv/salt/modules/k8s/api-server/files/kubernetes-csr.json to match your environment. If the kube-apiserver machines do not run kube-proxy, kube-apiserver also needs the --enable-aggregator-routing=true flag:

cd /srv/salt/modules/k8s/api-server/files/ && /srv/salt/modules/k8s/requirements/files/cfssl gencert -ca=/srv/salt/modules/k8s/ca-build/files/ca.pem -ca-key=/srv/salt/modules/k8s/ca-build/files/ca-key.pem -config=/srv/salt/modules/k8s/ca-build/files/ca-config.json -profile=kubernetes kubernetes-csr.json | /srv/salt/modules/k8s/requirements/files/cfssljson -bare kubernetes

# Generate the metrics-server certificate:

cd /srv/salt/modules/k8s/api-server/files/ && /srv/salt/modules/k8s/requirements/files/cfssl gencert -ca=/srv/salt/modules/k8s/ca-build/files/ca.pem -ca-key=/srv/salt/modules/k8s/ca-build/files/ca-key.pem -config=/srv/salt/modules/k8s/ca-build/files/ca-config.json -profile=kubernetes metrics-server-csr.json | /srv/salt/modules/k8s/requirements/files/cfssljson -bare metrics-server

# Deploy kube-controller-manager: edit /srv/salt/modules/k8s/kube-controller-manager/files/kube-controller-manager-csr.json to match your environment:

cd /srv/salt/modules/k8s/kube-controller-manager/files/ && /srv/salt/modules/k8s/requirements/files/cfssl gencert -ca=/srv/salt/modules/k8s/ca-build/files/ca.pem -ca-key=/srv/salt/modules/k8s/ca-build/files/ca-key.pem -config=/srv/salt/modules/k8s/ca-build/files/ca-config.json -profile=kubernetes kube-controller-manager-csr.json | /srv/salt/modules/k8s/requirements/files/cfssljson -bare kube-controller-manager

# Deploy kube-scheduler: edit /srv/salt/modules/k8s/kube-scheduler/files/kube-scheduler-csr.json to match your environment:

cd /srv/salt/modules/k8s/kube-scheduler/files/ && /srv/salt/modules/k8s/requirements/files/cfssl gencert -ca=/srv/salt/modules/k8s/ca-build/files/ca.pem -ca-key=/srv/salt/modules/k8s/ca-build/files/ca-key.pem -config=/srv/salt/modules/k8s/ca-build/files/ca-config.json -profile=kubernetes kube-scheduler-csr.json | /srv/salt/modules/k8s/requirements/files/cfssljson -bare kube-scheduler
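Every `cfssl gencert` command in this section differs only in the directory, the CSR file and the output name, so a shell function can remove the repetition. The sketch assumes the repository layout shown above. `k8s_gencert` and the `K8S_MOD` override are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: sign a component CSR with the cluster CA, as in the commands above.
# k8s_gencert and the K8S_MOD override are hypothetical names.

K8S_MOD="${K8S_MOD:-/srv/salt/modules/k8s}"

# k8s_gencert DIR CSR_JSON BARE_NAME : run cfssl gencert | cfssljson -bare inside DIR
k8s_gencert() {
  local dir="$1" csr="$2" bare="$3"
  local bin="$K8S_MOD/requirements/files" ca="$K8S_MOD/ca-build/files"
  # Subshell keeps the caller's working directory unchanged.
  # Without pipefail, the exit status is cfssljson's.
  (
    cd "$dir" || exit 1
    "$bin/cfssl" gencert -ca="$ca/ca.pem" -ca-key="$ca/ca-key.pem" \
      -config="$ca/ca-config.json" -profile=kubernetes "$csr" \
      | "$bin/cfssljson" -bare "$bare"
  )
}

# Usage, e.g. the etcd and kube-proxy certificates:
#   k8s_gencert "$K8S_MOD/etcd/files" etcd-csr.json etcd
#   k8s_gencert "$K8S_MOD/kube-proxy/files" kube-proxy-csr.json kube-proxy
```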

# Deploy k8s to the masters; on the first run add "-i" (--ignore-host-keys, which skips SSH host key checking):

salt-ssh -E -i 'k8s-master-[123]' state.sls modules.k8s.master

# Show the pillar data of the master nodes:

salt-ssh -E 'k8s-master-[123]' pillar.items

Testing the master deployment

# Test etcd:

/etc/kubernetes/bin/etcdctl --endpoints=https://192.168.0.11:2379 --ca-file=/etc/kubernetes/cert/ca.pem --cert-file=/etc/kubernetes/cert/etcd.pem --key-file=/etc/kubernetes/cert/etcd-key.pem cluster-health
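The command above checks the cluster through one member only. etcdctl also accepts a comma-separated `--endpoints` list, and the sketch below builds one. It assumes the three etcd members run on the masters at 192.168.0.11 to 192.168.0.13 on port 2379. `etcd_endpoints` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: build a comma-separated etcd --endpoints list.
# etcd_endpoints is a hypothetical helper; port 2379 as in the command above.

# etcd_endpoints IP... : print https://IP:2379 entries joined by commas
etcd_endpoints() {
  local out="" ip
  for ip in "$@"; do
    out="${out}${out:+,}https://${ip}:2379"
  done
  printf '%s\n' "$out"
}

# Usage (etcdctl v2 flags, as above):
#   /etc/kubernetes/bin/etcdctl \
#     --endpoints="$(etcd_endpoints 192.168.0.11 192.168.0.12 192.168.0.13)" \
#     --ca-file=/etc/kubernetes/cert/ca.pem \
#     --cert-file=/etc/kubernetes/cert/etcd.pem \
#     --key-file=/etc/kubernetes/cert/etcd-key.pem cluster-health
```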

# Test the api-server:

kubectl get all --all-namespaces

kubectl get cs

kubectl cluster-info

# Test kube-controller-manager:

kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml

# Test kube-scheduler:

kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml

Worker node deployment

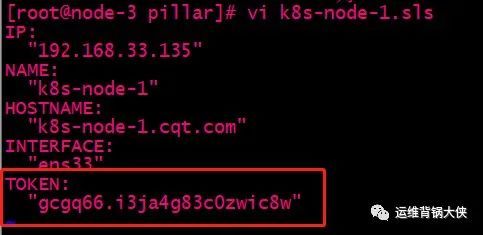

# kubelet bootstrapping: on a master, create a token for each node, then replace TOKEN in that node's pillar data:

# Generate the token for node-1:

/etc/kubernetes/bin/kubeadm token create --description kubelet-bootstrap-token --groups system:bootstrappers:k8s-node-1 --kubeconfig ~/.kube/config

# Generate the token for node-2:

/etc/kubernetes/bin/kubeadm token create --description kubelet-bootstrap-token --groups system:bootstrappers:k8s-node-2 --kubeconfig ~/.kube/config

# Generate the token for node-3:

/etc/kubernetes/bin/kubeadm token create --description kubelet-bootstrap-token --groups system:bootstrappers:k8s-node-3 --kubeconfig ~/.kube/config
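The three token commands differ only in the group suffix, and the master tokens further down follow the same pattern. The sketch below runs them in a loop and prints `host token` pairs to paste into the pillar files. `create_bootstrap_tokens` and the `KUBEADM` override are hypothetical, and the kubeadm flags are exactly those used above.

```bash
#!/usr/bin/env bash
# Sketch: create one kubelet bootstrap token per host and print "host token".
# create_bootstrap_tokens and the KUBEADM override are hypothetical names.

KUBEADM="${KUBEADM:-/etc/kubernetes/bin/kubeadm}"

# create_bootstrap_tokens HOST... : run "kubeadm token create" for each HOST
create_bootstrap_tokens() {
  local host token
  for host in "$@"; do
    token=$("$KUBEADM" token create \
      --description kubelet-bootstrap-token \
      --groups "system:bootstrappers:${host}" \
      --kubeconfig ~/.kube/config) || return 1
    printf '%s %s\n' "$host" "$token"
  done
}

# Usage:
#   create_bootstrap_tokens k8s-node-1 k8s-node-2 k8s-node-3
#   create_bootstrap_tokens k8s-master-1 k8s-master-2 k8s-master-3
```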

# List all tokens:

/etc/kubernetes/bin/kubeadm token list --kubeconfig ~/.kube/config

# Replace TOKEN in the corresponding node pillar data:

vi k8s-node-1.sls

# Changes to /srv/salt/modules/k8s/kubelet/files/kubelet.service:

# vi /srv/salt/modules/k8s/kubelet/files/kubelet.service

# Add --pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest to ExecStart. The complete file:

[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/etc/kubernetes/bin/kubelet \
  --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \
  --cert-dir=/etc/kubernetes/cert \
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
  --config=/etc/kubernetes/kubelet.config.json \
  --hostname-override={{ salt['config.get']('NAME') }} \
  --pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest \
  --allow-privileged=true \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target

# Create the kube-proxy certificate:

cd /srv/salt/modules/k8s/kube-proxy/files/ && /srv/salt/modules/k8s/requirements/files/cfssl gencert -ca=/srv/salt/modules/k8s/ca-build/files/ca.pem -ca-key=/srv/salt/modules/k8s/ca-build/files/ca-key.pem -config=/srv/salt/modules/k8s/ca-build/files/ca-config.json -profile=kubernetes kube-proxy-csr.json | /srv/salt/modules/k8s/requirements/files/cfssljson -bare kube-proxy

# Deploy the worker nodes; add the -i flag on the first run:

salt-ssh -E -i 'k8s-node-[123]' state.sls modules.k8s.node

# On a master, check the node status:

kubectl get nodes

# To run the three masters as nodes too, first generate a token for each master, then add it to k8s-master-[123].sls (run on a master):

# Generate the token for master-1:

/etc/kubernetes/bin/kubeadm token create --description kubelet-bootstrap-token --groups system:bootstrappers:k8s-master-1 --kubeconfig ~/.kube/config

# Generate the token for master-2:

/etc/kubernetes/bin/kubeadm token create --description kubelet-bootstrap-token --groups system:bootstrappers:k8s-master-2 --kubeconfig ~/.kube/config

# Generate the token for master-3:

/etc/kubernetes/bin/kubeadm token create --description kubelet-bootstrap-token --groups system:bootstrappers:k8s-master-3 --kubeconfig ~/.kube/config

# List all tokens:

/etc/kubernetes/bin/kubeadm token list --kubeconfig ~/.kube/config

# Replace TOKEN in the corresponding master pillar data:

vi k8s-master-1.sls

# Run on the node where salt is installed; add the -i flag on the first run:

salt-ssh -E -i 'k8s-master-[123]' state.sls modules.k8s.node

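# This makes the three masters nodes as well (same procedure as the worker node deployment). Then taint the three masters so that pods are not scheduled on them: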

/etc/kubernetes/bin/kubectl taint nodes k8s-master-1 node-role.kubernetes.io/master=:NoSchedule

/etc/kubernetes/bin/kubectl taint nodes k8s-master-2 node-role.kubernetes.io/master=:NoSchedule

/etc/kubernetes/bin/kubectl taint nodes k8s-master-3 node-role.kubernetes.io/master=:NoSchedule

Add-on deployment

# coredns

salt-ssh 'k8s-master-1' state.sls modules.k8s.k8s-plugins.coredns

# dashboard

salt-ssh 'k8s-master-1' state.sls modules.k8s.k8s-plugins.dashboard

# Get the login token:

kubectl describe secret/$(kubectl get secret -n kube-system | grep dashboard-admin | awk '{print $1}') -n kube-system | grep token | tail -1 | awk '{print $2}'

Log in with a kubeconfig file:

# Add the cluster:

kubectl config set-cluster kubernetes --server=https://192.168.0.15:6443 --certificate-authority=/etc/kubernetes/cert/ca.pem --embed-certs=true --kubeconfig=/tmp/dashboard-ad

# Add the user:

kubectl config set-credentials dashboard-admin --token=$(kubectl describe secret/$(kubectl get secret -n kube-system | grep dashboard-admin | awk '{print $1}') -n kube-system | grep token | tail -1 | awk '{print $2}') --kubeconfig=/tmp/dashboard-admin

# Add the context:

kubectl config set-context dashboard-admin@kubernetes --cluster=kubernetes --user=dashboard-admin --kubeconfig=/tmp/dashboard-admin

# Switch to the new context:

kubectl config use-context dashboard-admin@kubernetes --kubeconfig=/tmp/dashboard-admin

# Check /tmp/dashboard-admin:

kubectl config view --kubeconfig=/tmp/dashboard-admin

# Copy /tmp/dashboard-admin off the server; it can then be used to log in to the dashboard with the kubeconfig option.

# metrics-server

salt-ssh 'k8s-master-1' state.sls modules.k8s.k8s-plugins.metrics-server

kubectl get pods -n kube-system -o wide

kubectl get svc -n kube-system

kubectl get --raw "/apis/metrics.k8s.io/v1beta1" | jq .

kubectl get --raw "/apis/metrics.k8s.io/v1beta1/nodes" | jq .

kubectl get --raw "/apis/metrics.k8s.io/v1beta1/pods" | jq .

kubectl top nodes

kubectl top pods -n kube-system

Data persistence

# Install NFS on all nodes: the node acting as the NFS server gets nfs-utils and rpcbind, all other nodes only nfs-utils:

# On the master (the NFS server):

yum install -y nfs-utils rpcbind

# On every other node:

yum install -y nfs-utils

# Start NFS and enable it at boot:

systemctl restart rpcbind

systemctl restart nfs.service

systemctl enable rpcbind

systemctl enable nfs.service

# The NFS server configuration file is /etc/exports; it may not exist yet, in which case create it:

vim /etc/exports

/data/k8s 192.168.0.0/24(rw,no_root_squash,no_all_squash,sync)

# Create /data/k8s, the directory referenced in exports:

mkdir -p /data/k8s

# Set permissions:

chmod -R 777 /data/k8s

# Re-export everything in /etc/exports; errors in the /data/k8s entry are reported here:

exportfs -r

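# Parameter reference:

/data/k8s: the directory the NFS server shares with its clients

192.168.0.0/24: the client IPs allowed to mount the directory

(rw): clients have read-write access to the share

(root_squash): root on the client is mapped to the anonymous user; no_root_squash, used above, disables this so client root keeps root privileges on the share

(sync): data is written to memory and disk synchronously, so the server replies only after the write is stored

(all_squash): every client user is mapped to the anonymous user; no_all_squash, used above, is the default and keeps client user IDs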
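Editing /etc/exports by hand makes it easy to add the same line twice, for example when the Sonar exports are added later. The sketch below makes the append idempotent and assumes one export per line. `add_export` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: append an export line to an exports file only if it is not present yet.
# add_export is a hypothetical helper; FILE defaults to /etc/exports.

# add_export LINE [FILE]
add_export() {
  local line="$1" file="${2:-/etc/exports}"
  touch "$file"
  # -x: whole line, -F: fixed string (no regex), so "(" and "*" are literal
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage on the NFS server:
#   add_export '/data/k8s 192.168.0.0/24(rw,no_root_squash,no_all_squash,sync)'
#   mkdir -p /data/k8s && exportfs -r
```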

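# Check the NFS exports on the server:

showmount -e  # list the directories this server exports

NFS client operations:

1. The showmount command is very useful for operating and troubleshooting NFS. -a is normally used on the NFS server and lists the client machines that have mounted its NFS directories; -e lists the directories exported by the given NFS server.

2. Mount an NFS directory: mount -t nfs 192.168.0.23:/data/k8s /mnt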

SonarQube installation

git clone https://github.com/M-Ayman/K8s-Sonar.git

# On the NFS server:

vim /etc/exports

/sonarqube/mysql *(rw,sync,no_root_squash)

/sonarqube/data *(rw,sync,no_root_squash)

/sonarqube/extensions *(rw,sync,no_root_squash)

# Create the exported directories:

mkdir -p /sonarqube/mysql;

mkdir -p /sonarqube/data;

mkdir -p /sonarqube/extensions

chmod -R 755 /sonarqube

chown nfsnobody:nfsnobody /sonarqube -R

# Restart the NFS services:

systemctl restart rpcbind

systemctl restart nfs-server

systemctl restart nfs-lock

systemctl restart nfs-idmap

# Change the NFS server IP in sonar-deployment.yaml and sonar-mysql-deployment.yaml:
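This edit can also be done with sed. The sketch assumes the manifests declare NFS volumes with plain `server: <ip>` lines, so check the files first, because any other `server:` key would be rewritten too. `set_nfs_server` is a hypothetical helper. The IP 192.168.0.23 in the usage line is only an example, taken from the mount command earlier.

```bash
#!/usr/bin/env bash
# Sketch: point every "server:" field (the NFS server of an nfs volume) at a new IP.
# Assumes "server: <ip>" lines only appear inside nfs volumes;
# set_nfs_server is a hypothetical helper. Requires GNU sed (-i, -E).

# set_nfs_server IP FILE... : rewrite each "server:" value, keeping indentation
set_nfs_server() {
  local ip="$1" f
  shift
  for f in "$@"; do
    sed -i -E "s/^([[:space:]]*server:[[:space:]]*).*/\1${ip}/" "$f"
  done
}

# Usage, in the K8s-Sonar checkout:
#   set_nfs_server 192.168.0.23 sonar-deployment.yaml sonar-mysql-deployment.yaml
```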

# Create the resources (pods, services, ...):

kubectl create -f .

# Open a browser at http://<any worker node IP>:30080

Username: admin

Password: admin

Configure Jenkins:

https://plugins.jenkins.io/  (Jenkins plugin downloads)

# Clone the NFS StorageClass YAML files:

git clone https://github.com/linchqd/nfs-storageclass.git

# Clone the Jenkins YAML files:

git clone https://github.com/linchqd/jenkins.git

# Generate kubectl-cert-cm.yaml (a ConfigMap holding the kubectl certificates); delete any existing copy of the file first:

kubectl create configmap kubectl-cert-cm --from-file=/etc/kubernetes/cert/ca.pem --from-file=/etc/kubernetes/cert/admin.pem --from-file=admin.key=/etc/kubernetes/cert/admin-key.pem --dry-run -o yaml > kubectl-cert-cm.yaml

kubectl apply -f kubectl-cert-cm.yaml  # create the ConfigMap

kubectl describe cm kubectl-cert-cm  # show its details

kubectl get cm  # list the ConfigMaps

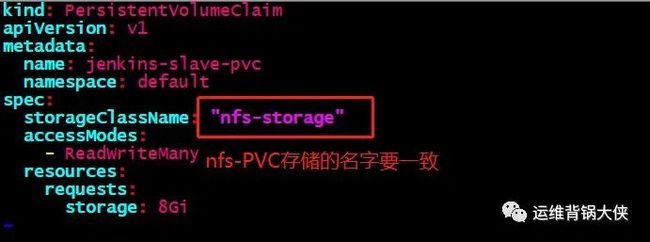

# Get the name of the StorageClass (used in jenkins-slave-pvc.yaml):

kubectl get sc

vim jenkins-slave-pvc.yaml

# Build the jenkins-slave image:

docker build -t reg.qiang.com/ops/jnlp-slave-mvn:latest .  # build the image

docker push reg.qiang.com/ops/jnlp-slave-mvn:latest  # push it to the registry

vi jenkins-statefulset.yaml

##### Replace the Service section of jenkins-statefulset.yaml with the following #####

apiVersion: v1
kind: Service
metadata:
  name: jenkins
  annotations:
    # ensure the client ip is propagated to avoid the invalid crumb issue (k8s <1.7)
    # service.beta.kubernetes.io/external-traffic: OnlyLocal
spec:
  #type: LoadBalancer
  type: NodePort
  selector:
    name: jenkins
  # k8s 1.7+
  # externalTrafficPolicy: Local
  ports:
    -
      name: http
      port: 80
      targetPort: 8080
      protocol: TCP
      nodePort: 30001
    -
      name: agent
      port: 50000
      protocol: TCP

————————————————————————————————————————————

Required Jenkins plugins:

Git Parameter

Gitlab

GitLab Plugin

Kubernetes Continuous Deploy

Kubernetes

Extended Choice Parameter

Version Number

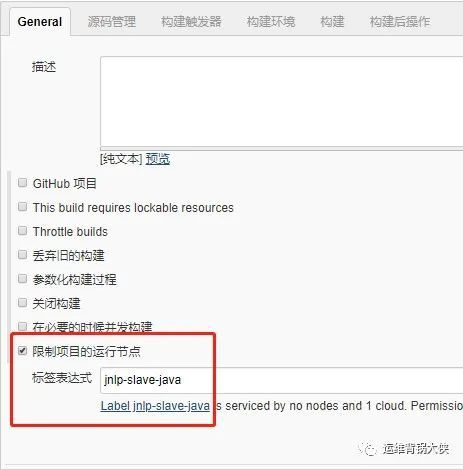

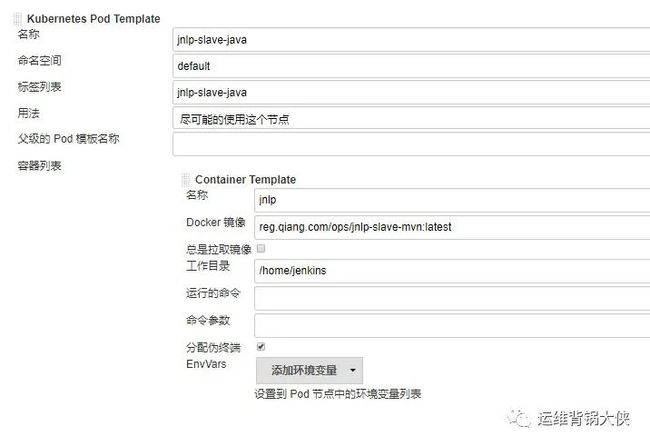

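# Configure the project to use jnlp-slave:

# Set up the Kubernetes integration in Jenkins:

# Create the Kubernetes pod template:

# Mount volumes into the container; kubectl-cert-cm is the certificate ConfigMap created earlier: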

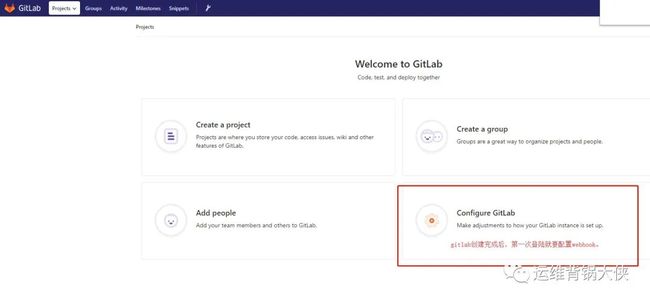

GitLab configuration:

The GitLab administrator account and password are set in gitlab.yaml:

# Allow GitLab to send webhook requests to Jenkins:

# Go to the Admin area; under Settings, find Outbound requests and check "Allow requests to the local network from hooks and services":

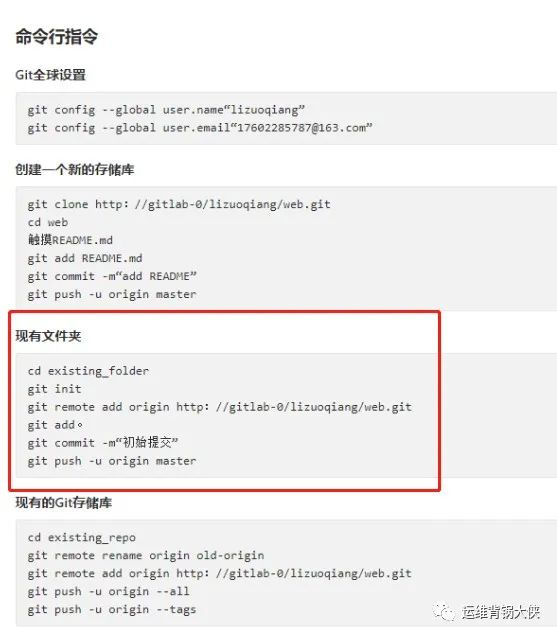

# After creating a repository, run these commands on the server to turn the project directory into a git repository and push the code:

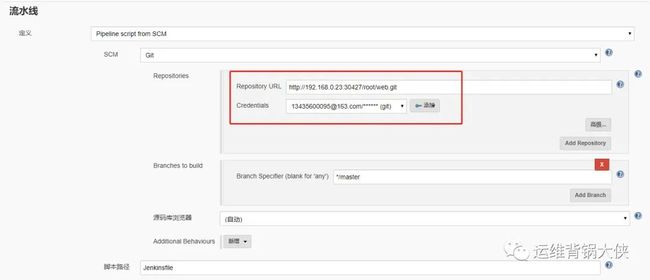
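The commands GitLab shows for an existing folder follow the usual init, commit, push sequence. The sketch below wraps them in a function. `publish_repo`, the default branch `master` and the placeholder URL are assumptions, not values from the screenshot.

```bash
#!/usr/bin/env bash
# Sketch: turn an existing project directory into a git repository and push it.
# publish_repo is a hypothetical helper; REMOTE_URL is your GitLab project URL.

# publish_repo DIR REMOTE_URL [BRANCH] : init, commit everything, push to BRANCH
publish_repo() {
  local dir="$1" remote="$2" branch="${3:-master}"
  # && chain: stop at the first failing step; subshell keeps the caller's cwd
  (
    cd "$dir" &&
      git init -q &&
      git remote add origin "$remote" &&
      git add . &&
      git commit -q -m "Initial commit" &&
      git push -q -u origin "HEAD:refs/heads/${branch}"
  )
}

# Usage:
#   publish_repo /path/to/project http://<gitlab-host>/<group>/<project>.git
```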

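# Configure the pipeline to connect to the GitLab repository:

# Add the credentials for the Kubernetes + Jenkins integration:

cat /root/.kube/config  # print the kubeconfig and paste it into the credential; Jenkins uses it to connect to Kubernetes

# Add the GitLab credentials (GitLab username and password):

# Configure the Harbor credentials:

# Create the GitLab + Jenkins webhook:

Create an authentication token (any value will do):

http://192.168.0.23:30485/project/web/build?token=12345  (the webhook URL)

25721b8aa041d12b9a2944e8c4133f8d  (the generated Jenkins token)

Edit devops.yaml: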

============== YAML installation packages ==============

Test package for the WordPress project:

Link: https://pan.baidu.com/s/1xIDg6eu1HL4weKyanyV95A (Baidu Netdisk)

Password (extraction code): jwlg

————————————————————————————

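k8s-devops: pull code by branch from Stash:

Create the git repository directory:

mkdir raydata-pm

Turn the directory into a git repository:

cd raydata-pm && git init

Add the repository URL as the remote:

git remote add origin http://<user>@<stash-host>/scm/rayd/raydata-pm.git

# Upload the devops files:

https://pan.baidu.com/s/1x2bzBXmlYqjpRH9cN-b22g (Baidu Netdisk)

Password (extraction code): aqcm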

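# Configure the project to use jnlp-slave:

# Set up the Kubernetes integration in Jenkins:

# Create the Kubernetes pod template:

# Mount volumes into the container; kubectl-cert-cm is the certificate ConfigMap created earlier:

# Configure the Jenkins project:

# Configure Jenkins to connect to the git repository: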