yolov5超级详细教程(包含win10的错误解决方案)

一、下载yolov5

https://github.com/ultralytics/yolov5

git clone也可以,下载压缩包解压缩也可以

得到我们的yolov5文件夹

二、准备我们的权重文件和数据集

在D:\jestonnano\yolov5\data\scripts下有相应下载的文件,运行即可下载

或者在网上下载相应的coco128.zip数据集和yolov5权重

将权重文件放在yolov5下,然后在yolov5下创建文件夹dataset,把coco128放入

三、配置对应的环境

1.运行命令

# pip install -r requirements.txt

2.或者手动下载

# base ----------------------------------------

matplotlib>=3.2.2

numpy>=1.18.5

opencv-python>=4.1.2

Pillow

PyYAML>=5.3.1

scipy>=1.4.1

torch>=1.7.0

torchvision>=0.8.1

tqdm>=4.41.0

# logging -------------------------------------

tensorboard>=2.4.1

# wandb

# plotting ------------------------------------

seaborn>=0.11.0

pandas

# export --------------------------------------

# coremltools>=4.1

# onnx>=1.9.0

# scikit-learn==0.19.2 # for coreml quantization

# extras --------------------------------------

# Cython # for pycocotools https://github.com/cocodataset/cocoapi/issues/172

# pycocotools>=2.0 # COCO mAP

# albumentations>=1.0.3

thop # FLOPs computation

四、设置训练集

在yolov5/data下,以coco128.yaml举例

我们可以看到代码:

# YOLOv5 by Ultralytics https://ultralytics.com, licensed under GNU GPL v3.0

# COCO128 dataset https://www.kaggle.com/ultralytics/coco128 (first 128 images from COCO train2017)

# Example usage: python train.py --data coco128.yaml

# parent

# ├── yolov5

# └── datasets

# └── coco128 ← downloads here

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

path: ../datasets/coco128 # dataset root dir

train: images/train2017 # train images (relative to 'path') 128 images

val: images/train2017 # val images (relative to 'path') 128 images

test: # test images (optional)

# Classes

nc: 80 # number of classes

names: ['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',

'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',

'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',

'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',

'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',

'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',

'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',

'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear',

'hair drier', 'toothbrush'] # class names

# Download script/URL (optional)

download: https://github.com/ultralytics/yolov5/releases/download/v1.0/coco128.zip

由于我们已经下载了coco128.zip,我们修改代码为

path: ../datasets/coco128 # dataset root dir

train: images/train2017 # train images (relative to 'path') 128 images

val: images/train2017 # val images (relative to 'path') 128 images

test: # test images (optional)

# Classes

nc: 80 # number of classes

names: ['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',

'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',

'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',

'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',

'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',

'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',

'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',

'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear',

'hair drier', 'toothbrush'] # class names

如果我们要训练我们自己的数据集,我们可以修改训练数据集的路径,nc,names等等

即

train:换成你自己的训练集地址

val:换成自己的val地址

nc: 换成你自己定义的类别的数量

names:换成自己定义的类别的名称

怎么定义我们的数据集呢?

模仿coco128,我们要有我们的图片集和标签集

使用Labelbox和CVAT工具进行图像标记,将标签导出为darknet格式,×.txt每张图像一个文件(如果图像中没有对象,则不需要×.txt文件)。

txt文件内容有:

每个对象一行

每行都是class x_center y_center width height格式

框坐标必须采用归一化的xywh格式(从0到1)。如果boxes以像素为单位,划分x_center和width关于图像宽度,y_center和height关于图像高度。

类别定义利用序号,一般从0开始。

每个图像的标签文件应该可以通过在其路径名中简单地替换/images/ *.jpg为/labels/*.txt来定位。

当然我们也可以去互联网搜索现成的数据集

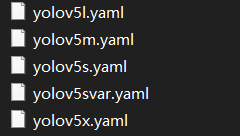

五、选择模型

在yolov5/models下

有五个模型文件,这里我们以yolov5s.yaml为例子

内容为

# Parameters

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Focus, [64, 3]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

[-1, 3, C3, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 9, C3, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, C3, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 1, SPP, [1024, [5, 9, 13]]],

[-1, 3, C3, [1024, False]], # 9

]

# YOLOv5 head

head:

[[-1, 1, Conv, [512, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, Concat, [1]], # cat backbone P4

[-1, 3, C3, [512, False]], # 13

[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, Concat, [1]], # cat backbone P3

[-1, 3, C3, [256, False]], # 17 (P3/8-small)

[-1, 1, Conv, [256, 3, 2]],

[[-1, 14], 1, Concat, [1]], # cat head P4

[-1, 3, C3, [512, False]], # 20 (P4/16-medium)

[-1, 1, Conv, [512, 3, 2]],

[[-1, 10], 1, Concat, [1]], # cat head P5

[-1, 3, C3, [1024, False]], # 23 (P5/32-large)

[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]

如果你用的自己的数据集或者其它的数据集,根据你的类别修改nc数值即可

六、开始训练

我们打开train.py,可以看到

def parse_opt(known=False):

parser = argparse.ArgumentParser()

parser.add_argument('--weights', type=str, default='yolov5s.pt', help='initial weights path')

parser.add_argument('--cfg', type=str, default='', help='model.yaml path')

parser.add_argument('--data', type=str, default='data/coco128.yaml', help='dataset.yaml path')

parser.add_argument('--hyp', type=str, default='data/hyps/hyp.scratch.yaml', help='hyperparameters path')

parser.add_argument('--epochs', type=int, default=300)

parser.add_argument('--batch-size', type=int, default=16, help='total batch size for all GPUs')

parser.add_argument('--imgsz', '--img', '--img-size', type=int, default=640, help='train, val image size (pixels)')

parser.add_argument('--rect', action='store_true', help='rectangular training')

parser.add_argument('--resume', nargs='?', const=True, default=False, help='resume most recent training')

parser.add_argument('--nosave', action='store_true', help='only save final checkpoint')

parser.add_argument('--noval', action='store_true', help='only validate final epoch')

parser.add_argument('--noautoanchor', action='store_true', help='disable autoanchor check')

parser.add_argument('--evolve', type=int, nargs='?', const=300, help='evolve hyperparameters for x generations')

parser.add_argument('--bucket', type=str, default='', help='gsutil bucket')

parser.add_argument('--cache', type=str, nargs='?', const='ram', help='--cache images in "ram" (default) or "disk"')

parser.add_argument('--image-weights', action='store_true', help='use weighted image selection for training')

parser.add_argument('--device', default='', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')

parser.add_argument('--multi-scale', action='store_true', help='vary img-size +/- 50%%')

parser.add_argument('--single-cls', action='store_true', help='train multi-class data as single-class')

parser.add_argument('--adam', action='store_true', help='use torch.optim.Adam() optimizer')

parser.add_argument('--sync-bn', action='store_true', help='use SyncBatchNorm, only available in DDP mode')

parser.add_argument('--workers', type=int, default=8, help='maximum number of dataloader workers')

parser.add_argument('--project', default='runs/train', help='save to project/name')

parser.add_argument('--entity', default=None, help='W&B entity')

parser.add_argument('--name', default='exp', help='save to project/name')

parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')

parser.add_argument('--quad', action='store_true', help='quad dataloader')

parser.add_argument('--linear-lr', action='store_true', help='linear LR')

parser.add_argument('--label-smoothing', type=float, default=0.0, help='Label smoothing epsilon')

parser.add_argument('--upload_dataset', action='store_true', help='Upload dataset as W&B artifact table')

parser.add_argument('--bbox_interval', type=int, default=-1, help='Set bounding-box image logging interval for W&B')

parser.add_argument('--save_period', type=int, default=-1, help='Log model after every "save_period" epoch')

parser.add_argument('--artifact_alias', type=str, default="latest", help='version of dataset artifact to be used')

parser.add_argument('--local_rank', type=int, default=-1, help='DDP parameter, do not modify')

parser.add_argument('--freeze', type=int, default=0, help='Number of layers to freeze. backbone=10, all=24')

opt = parser.parse_known_args()[0] if known else parser.parse_args()

return opt

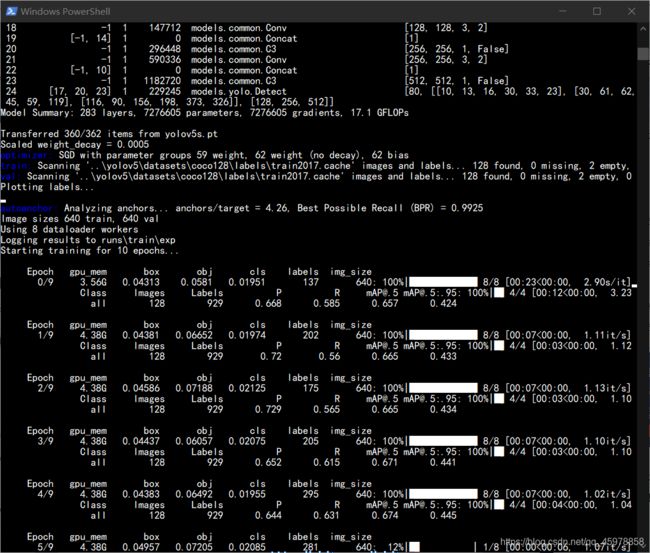

这都是可以修改的参数,学习率啥的我们都不用修改,我们只用修改模型路径,权重,batch-size,epoch即可,在yolov5下打开我们的powershell/cmd

python train.py --img 640 --batch-size 16 --epochs 10

--data data/coco128.yaml 之间空格间隔就行,我是为了美观

--cfg models/yolov5s.yaml

--weights '' 代表从头开始训练

--weights 'yolov5s.pt' 代表从我们下载的权重即预训练的模型加载开始训练

到这里我们win10的小伙伴可能会遇到一些错误,就是

UnicodeDecodeError: 'gbk' codec can't decode byte .................

这是因为我们open文件的时候txt的编码是utf8

解决方案:

只需要将train.py里面的69行with open(hyp) as f: 改为 with open(hyp,‘rb’) as f:,将yolo.py里的86行with open(cfg) as f:也改为with open(cfg,‘rb’) as f:即可

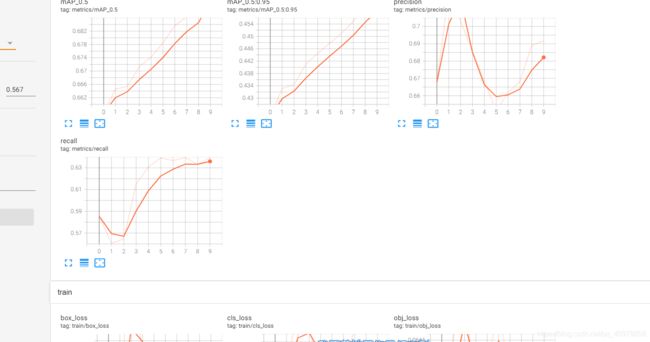

结束以后在yolov5目录下,使用:

tensorboard --logdir=runs

我们可以可视化我们的结果:

训练完以后会在runs/train/exp/weights下保留两个权重,一个是最好的,一个是最后一次

七、使用训练好的预训练模型进行测试

我们可以看到detect.py:

def run(weights='yolov5s.pt', # model.pt path(s)

source='data/images', # file/dir/URL/glob, 0 for webcam

imgsz=640, # inference size (pixels)

conf_thres=0.25, # confidence threshold

iou_thres=0.45, # NMS IOU threshold

max_det=1000, # maximum detections per image

device='', # cuda device, i.e. 0 or 0,1,2,3 or cpu

view_img=False, # show results

save_txt=False, # save results to *.txt

save_conf=False, # save confidences in --save-txt labels

save_crop=False, # save cropped prediction boxes

nosave=False, # do not save images/videos

classes=None, # filter by class: --class 0, or --class 0 2 3

agnostic_nms=False, # class-agnostic NMS

augment=False, # augmented inference

visualize=False, # visualize features

update=False, # update all models

project='runs/detect', # save results to project/name

name='exp', # save results to project/name

exist_ok=False, # existing project/name ok, do not increment

line_thickness=3, # bounding box thickness (pixels)

hide_labels=False, # hide labels

hide_conf=False, # hide confidences

half=False, # use FP16 half-precision inference

):

同样我们也只用修改几个地方

python detect.py --source 可以是图片,摄像头,图片链接

--weights 我们刚刚训练好的权重

--device 0

python detect.py --source 0(代表摄像头)

--weights runs/train/exp/weights/best.pt

--device 0