Deep Learning Model Test Runs (6): yolov5

Contents

- I. Preface

- II. Running

- 2.1 Data

- 2.2 Configuration

- 3 Training

- 4 Detection

- III. ncnn

- IV. tensorrt

I. Preface

I'm using some slack time to write up a project I'm currently doing with yolov5.

Official yolov5 code

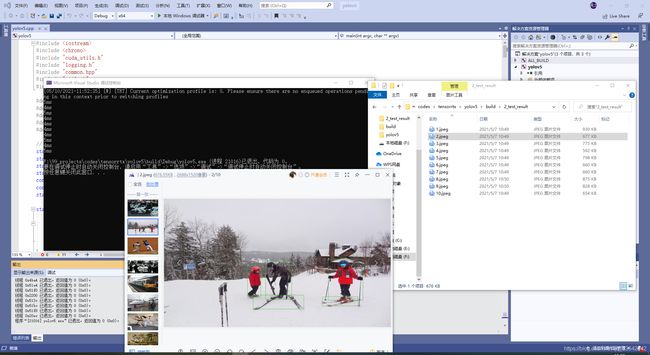

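Test results:

The accuracy and speed speak for themselves, and several people in the community have also written ncnn and tensorrt implementations.

ncnn results

The trt implementation, highly recommended

2021/9/27: tensorrtx + deepsort implementation under vs2019

In short, the project is split into many sub-versions and ships pretrained weights in several sizes (s/m/l/x). That is an advantage in its own right, but it easily leaves people confused. Even so, the code as a whole is easy to use for people who don't know much about deep learning.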

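II. Running

I downloaded the latest release at the time (v3.1) and have trained on both win10 and ubuntu18.04; the notes below mainly follow the win10 environment.

Key environment: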

cuda: 10.2

cudnn: 7.6.5

pytorch: 1.6.0

…

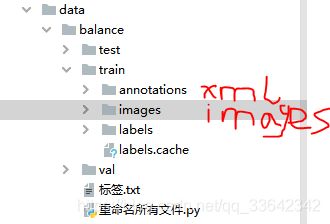

2.1 Data

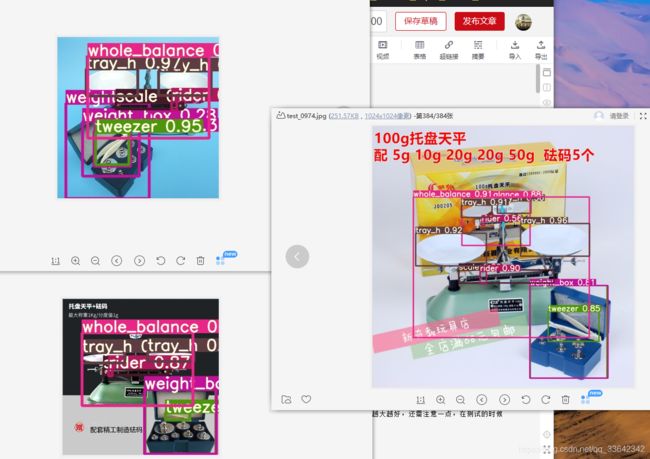

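I had the data labeled by an annotation company. There are 14 classes in total, and they exported the annotations in VOC XML format.

I put the images and labels under data/train: the xml files go in annotations and the images go in images.

Then run the voc2coco script shown at the bottom of the first screenshot,

which generates the labels folder shown in the screenshot above. It contains one txt annotation file per image, i.e. the annotation files yolov5 can use. Despite the script's name, these are YOLO-style txt labels (one `class x_center y_center width height` line per box, normalized to the image size), not COCO JSON.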

# -*- coding: UTF-8 -*-
import os

from lxml import etree


def xml_txt(txt_path, image_path, path, labels):
    # Make sure the output directory exists
    os.makedirs(txt_path, exist_ok=True)
    cnt = 0
    # Walk the image folder
    for (dirpath, dirnames, files) in os.walk(image_path):
        # Iterate over the image file names
        for ft in files:
            # ft is the image name with extension; build the txt/xml names from its stem
            stem = os.path.splitext(ft)[0]
            ftxt = stem + '.txt'
            fxml = stem + '.xml'
            # Path of the xml file
            xml_path = os.path.join(path, fxml)
            # Path of the txt file
            ftxt_path = os.path.join(txt_path, ftxt)
            # Parse the xml
            tree = etree.parse(xml_path)
            root = tree.getroot()
            # Get the image width and height
            size = root.find('size')
            w = size.find('width').text
            h = size.find('height').text
            dw = 1 / int(w)
            dh = 1 / int(h)
            # Initialize the output text
            line = ''
            for item in root.findall('object'):
                # Read the label name and look up its class index
                label = item.find('name').text
                label = labels.index(label)
                # Extract label, x, y, w, h; an object may carry several boxes
                for box in item.findall('bndbox'):
                    xmin = float(box.find('xmin').text)
                    ymin = float(box.find('ymin').text)
                    xmax = float(box.find('xmax').text)
                    ymax = float(box.find('ymax').text)
                    # Normalize x, y, w, h by the image size
                    center_x = ((xmin + xmax) / 2) * dw
                    center_y = ((ymin + ymax) / 2) * dh
                    bbox_width = (xmax - xmin) * dw
                    bbox_height = (ymax - ymin) * dh
                    # Append one text line per box
                    line += '{} {} {} {} {}'.format(label, center_x, center_y, bbox_width, bbox_height) + '\n'
            # Write the label text to the txt file
            with open(ftxt_path, 'w') as f_txt:
                f_txt.write(line)
            cnt += 1
    print('Number of files:', cnt)


if __name__ == '__main__':
    # filespath = os.getcwd()
    filespath = "F:/99_projects/codes/yolov5-master/data/datasets/train/"
    txt_path = os.path.join(filespath, 'txt')  # directory for the generated yolo txt files
    image_path = os.path.join(filespath, 'data')  # directory containing the images
    path = os.path.join(filespath, 'annotations')  # directory containing the xml files
    labels = ['whole_balance', 'weight_box', 'tray_h', 'tray_v', 'tweezer',
              'rider', 'weight', 'scale', 'beaker', 'metal_block',
              'hand_tweezer', 'tweezer_rider', 'tweezer_weight', 'hand_screw']  # used to look up each label's index
    # print(txt_path, image_path, path, labels)
    xml_txt(txt_path, image_path, path, labels)
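As a quick sanity check on the conversion, the normalization can be inverted: given the image size, a label line maps back to pixel corner coordinates. The sketch below is only an illustration; the helper names `voc_box_to_yolo` and `yolo_line_to_voc_box` and the sample numbers are hypothetical and not part of the original script.

```python
def voc_box_to_yolo(xmin, ymin, xmax, ymax, img_w, img_h):
    """Pixel corner box -> normalized (cx, cy, w, h), same arithmetic as the converter."""
    dw, dh = 1.0 / img_w, 1.0 / img_h
    return ((xmin + xmax) / 2 * dw, (ymin + ymax) / 2 * dh,
            (xmax - xmin) * dw, (ymax - ymin) * dh)


def yolo_line_to_voc_box(line, img_w, img_h):
    """Parse 'class cx cy w h' and return (class_id, xmin, ymin, xmax, ymax) in pixels."""
    cls, cx, cy, w, h = line.split()
    cx, w = float(cx) * img_w, float(w) * img_w
    cy, h = float(cy) * img_h, float(h) * img_h
    return int(cls), cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


if __name__ == '__main__':
    # Hypothetical example: class index 3, box (100, 50)-(300, 150) in a 640x480 image
    cx, cy, w, h = voc_box_to_yolo(100, 50, 300, 150, 640, 480)
    line = '{} {} {} {} {}'.format(3, cx, cy, w, h)
    print(line)
    print(yolo_line_to_voc_box(line, 640, 480))
```

If the recovered corners differ noticeably from the values in the xml, the width/height read from the `size` tag is the first thing to check.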

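Remember that val also needs both an images and a labels folder. I cut corners and put in just one image with its label; with a larger dataset you can split train and val by a proper ratio.

My test folder contains only images from the internet; I added them myself just to keep the layout tidy.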
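For a larger dataset, the train/val split can be scripted. The following is a minimal sketch, assuming both folders use the images/ and labels/ layout described above and that each image has a label file with the same stem; the function name `split_train_val`, the 10% ratio, and the fixed seed are illustrative choices, not something the original project prescribes.

```python
import os
import random
import shutil


def split_train_val(train_dir, val_dir, val_ratio=0.1, seed=0):
    """Move a random fraction of image/label pairs from train_dir to val_dir."""
    img_src = os.path.join(train_dir, 'images')
    lbl_src = os.path.join(train_dir, 'labels')
    img_dst = os.path.join(val_dir, 'images')
    lbl_dst = os.path.join(val_dir, 'labels')
    os.makedirs(img_dst, exist_ok=True)
    os.makedirs(lbl_dst, exist_ok=True)

    # Only consider images that actually have a label file
    pairs = []
    for name in sorted(os.listdir(img_src)):
        label = os.path.splitext(name)[0] + '.txt'
        if os.path.isfile(os.path.join(lbl_src, label)):
            pairs.append((name, label))

    random.Random(seed).shuffle(pairs)  # fixed seed keeps the split reproducible
    n_val = max(1, int(len(pairs) * val_ratio)) if pairs else 0
    for name, label in pairs[:n_val]:
        shutil.move(os.path.join(img_src, name), os.path.join(img_dst, name))
        shutil.move(os.path.join(lbl_src, label), os.path.join(lbl_dst, label))
    return n_val


# Example call (paths are assumptions; adjust to your own layout):
# split_train_val('data/balance/train', 'data/balance/val', val_ratio=0.1)
```

Moving files rather than copying them keeps train and val disjoint, which is what the validation metrics rely on.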

2.2 Configuration

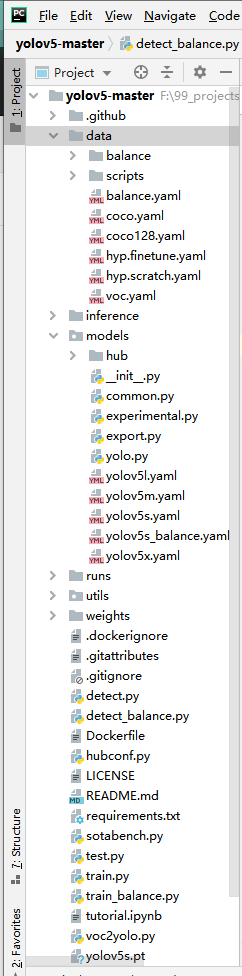

Back to the first screenshot: it shows the two config files I added, balance.yaml and yolov5s_balance.yaml.

balance.yaml (four settings in total, modeled on coco.yaml)

train: F:\99_projects\codes\yolov5-master\data\balance\train\images\

val: F:\99_projects\codes\yolov5-master\data\balance\val\images\

nc: 14

names: [ 'whole_balance', 'weight_box', 'tray_h', 'tray_v', 'tweezer',
         'rider', 'weight', 'scale', 'beaker', 'metal_block',
         'hand_tweezer', 'tweezer_rider', 'tweezer_weight', 'hand_screw' ]

For a first training run, the only thing to change in yolov5s_balance.yaml is nc; just make it match the value above.
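Since nc has to agree in two files and also match the length of names, a small consistency check can catch mismatches before training starts. This is a sketch under assumptions: `check_class_config` is a hypothetical helper that works on already-parsed values, and loading the two YAML files (for example with PyYAML's `yaml.safe_load`) is left to the caller.

```python
def check_class_config(data_cfg, model_nc):
    """Return a list of problems between a dataset config dict and the model's nc."""
    problems = []
    nc = data_cfg.get('nc')
    names = data_cfg.get('names', [])
    if nc != len(names):
        problems.append('nc=%s but %d names listed' % (nc, len(names)))
    if len(set(names)) != len(names):
        problems.append('duplicate class names')
    if model_nc != nc:
        problems.append('model nc=%s differs from data nc=%s' % (model_nc, nc))
    return problems


if __name__ == '__main__':
    # Values copied from balance.yaml; model_nc is the nc written in yolov5s_balance.yaml
    data_cfg = {
        'nc': 14,
        'names': ['whole_balance', 'weight_box', 'tray_h', 'tray_v', 'tweezer',
                  'rider', 'weight', 'scale', 'beaker', 'metal_block',
                  'hand_tweezer', 'tweezer_rider', 'tweezer_weight', 'hand_screw'],
    }
    print(check_class_config(data_cfg, model_nc=14) or 'config looks consistent')
```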

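3 Training

Note that code and models from different yolov5 sub-versions are not interchangeable. This has nothing to do with your PC environment; mixing them produces all kinds of errors.

I made a copy of train.py, set default values for some of the training arguments, and ran it directly in pycharm. If you want to reuse it, just change the values marked by the comments in the program below.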

train_balance.py

import argparse
import logging
import os
import random
import shutil
import time
from pathlib import Path
from warnings import warn

import math
import numpy as np
import torch.distributed as dist
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
import torch.optim.lr_scheduler as lr_scheduler
import torch.utils.data
import yaml
from torch.cuda import amp
from torch.nn.parallel import DistributedDataParallel as DDP
from torch.utils.tensorboard import SummaryWriter
from tqdm import tqdm

import test  # import test.py to get mAP after each epoch
from models.yolo import Model
from utils.datasets import create_dataloader
from utils.general import (
    torch_distributed_zero_first, labels_to_class_weights, plot_labels, check_anchors, labels_to_image_weights,
    compute_loss, plot_images, fitness, strip_optimizer, plot_results, get_latest_run, check_dataset, check_file,
    check_git_status, check_img_size, increment_dir, print_mutation, plot_evolution, set_logging, init_seeds)
from utils.google_utils import attempt_download
from utils.torch_utils import ModelEMA, select_device, intersect_dicts

logger = logging.getLogger(__name__)

# Suppress the "duplicate OpenMP runtime" error that can abort the run on Windows
os.environ['KMP_DUPLICATE_LIB_OK'] = 'True'

def train(hyp, opt, device, tb_writer=None, wandb=None):
    logger.info(f'Hyperparameters {hyp}')
    log_dir = Path(tb_writer.log_dir) if tb_writer else Path(opt.logdir) / 'evolve'  # logging directory
    wdir = log_dir / 'weights'  # weights directory
    os.makedirs(wdir, exist_ok=True)
    last = wdir / 'last.pt'
    best = wdir / 'best.pt'
    results_file = str(log_dir / 'results.txt')
    epochs, batch_size, total_batch_size, weights, rank = \
        opt.epochs, opt.batch_size, opt.total_batch_size, opt.weights, opt.global_rank

    # Save run settings
    with open(log_dir / 'hyp.yaml', 'w') as f:
        yaml.dump(hyp, f, sort_keys=False)
    with open(log_dir / 'opt.yaml', 'w') as f:
        yaml.dump(vars(opt), f, sort_keys=False)

    # Configure
    cuda = device.type != 'cpu'
    init_seeds(2 + rank)
    with open(opt.data) as f:
        data_dict = yaml.load(f, Loader=yaml.FullLoader)  # data dict
    with torch_distributed_zero_first(rank):
        check_dataset(data_dict)  # check
    train_path = data_dict['train']
    test_path = data_dict['val']
    nc, names = (1, ['item']) if opt.single_cls else (int(data_dict['nc']), data_dict['names'])  # number classes, names
    assert len(names) == nc, '%g names found for nc=%g dataset in %s' % (len(names), nc, opt.data)  # check

    # Model
    pretrained = weights.endswith('.pt')
    if pretrained:
        with torch_distributed_zero_first(rank):
            attempt_download(weights)  # download if not found locally
        ckpt = torch.load(weights, map_location=device)  # load checkpoint
        if hyp.get('anchors'):
            ckpt['model'].yaml['anchors'] = round(hyp['anchors'])  # force autoanchor
        model = Model(opt.cfg or ckpt['model'].yaml, ch=3, nc=nc).to(device)  # create
        exclude = ['anchor'] if opt.cfg or hyp.get('anchors') else []  # exclude keys
        state_dict = ckpt['model'].float().state_dict()  # to FP32
        state_dict = intersect_dicts(state_dict, model.state_dict(), exclude=exclude)  # intersect
        model.load_state_dict(state_dict, strict=False)  # load
        logger.info('Transferred %g/%g items from %s' % (len(state_dict), len(model.state_dict()), weights))  # report
    else:
        model = Model(opt.cfg, ch=3, nc=nc).to(device)  # create

    # Freeze
    freeze = []  # parameter names to freeze (full or partial)
    for k, v in model.named_parameters():
        v.requires_grad = True  # train all layers
        if any(x in k for x in freeze):
            print('freezing %s' % k)
            v.requires_grad = False

    # Optimizer
    nbs = 64  # nominal batch size
    accumulate = max(round(nbs / total_batch_size), 1)  # accumulate loss before optimizing
    hyp['weight_decay'] *= total_batch_size * accumulate / nbs  # scale weight_decay

    pg0, pg1, pg2 = [], [], []  # optimizer parameter groups
    for k, v in model.named_modules():
        if hasattr(v, 'bias') and isinstance(v.bias, nn.Parameter):
            pg2.append(v.bias)  # biases
        if isinstance(v, nn.BatchNorm2d):
            pg0.append(v.weight)  # no decay
        elif hasattr(v, 'weight') and isinstance(v.weight, nn.Parameter):
            pg1.append(v.weight)  # apply decay

    if opt.adam:
        optimizer = optim.Adam(pg0, lr=hyp['lr0'], betas=(hyp['momentum'], 0.999))  # adjust beta1 to momentum
    else:
        optimizer = optim.SGD(pg0, lr=hyp['lr0'], momentum=hyp['momentum'], nesterov=True)

    optimizer.add_param_group({'params': pg1, 'weight_decay': hyp['weight_decay']})  # add pg1 with weight_decay
    optimizer.add_param_group({'params': pg2})  # add pg2 (biases)
    logger.info('Optimizer groups: %g .bias, %g conv.weight, %g other' % (len(pg2), len(pg1), len(pg0)))
    del pg0, pg1, pg2

    # Scheduler https://arxiv.org/pdf/1812.01187.pdf
    # https://pytorch.org/docs/stable/_modules/torch/optim/lr_scheduler.html#OneCycleLR
    lf = lambda x: ((1 + math.cos(x * math.pi / epochs)) / 2) * (1 - hyp['lrf']) + hyp['lrf']  # cosine
    scheduler = lr_scheduler.LambdaLR(optimizer, lr_lambda=lf)
    # plot_lr_scheduler(optimizer, scheduler, epochs)

    # Logging
    if wandb and wandb.run is None:
        id = ckpt.get('wandb_id') if 'ckpt' in locals() else None
        wandb_run = wandb.init(config=opt, resume="allow", project="YOLOv5", name=os.path.basename(log_dir), id=id)

    # Resume
    start_epoch, best_fitness = 0, 0.0
    if pretrained:
        # Optimizer
        if ckpt['optimizer'] is not None:
            optimizer.load_state_dict(ckpt['optimizer'])
            best_fitness = ckpt['best_fitness']

        # Results
        if ckpt.get('training_results') is not None:
            with open(results_file, 'w') as file:
                file.write(ckpt['training_results'])  # write results.txt

        # Epochs
        start_epoch = ckpt['epoch'] + 1
        if opt.resume:
            assert start_epoch > 0, '%s training to %g epochs is finished, nothing to resume.' % (weights, epochs)
            shutil.copytree(wdir, wdir.parent / f'weights_backup_epoch{start_epoch - 1}')  # save previous weights
        if epochs < start_epoch:
            logger.info('%s has been trained for %g epochs. Fine-tuning for %g additional epochs.' %
                        (weights, ckpt['epoch'], epochs))
            epochs += ckpt['epoch']  # finetune additional epochs

        del ckpt, state_dict

    # Image sizes
    gs = int(max(model.stride))  # grid size (max stride)
    imgsz, imgsz_test = [check_img_size(x, gs) for x in opt.img_size]  # verify imgsz are gs-multiples

    # DP mode
    if cuda and rank == -1 and torch.cuda.device_count() > 1:
        model = torch.nn.DataParallel(model)

    # SyncBatchNorm
    if opt.sync_bn and cuda and rank != -1:
        model = torch.nn.SyncBatchNorm.convert_sync_batchnorm(model).to(device)
        logger.info('Using SyncBatchNorm()')

    # Exponential moving average
    ema = ModelEMA(model) if rank in [-1, 0] else None

    # DDP mode
    if cuda and rank != -1:
        model = DDP(model, device_ids=[opt.local_rank], output_device=opt.local_rank)

    # Trainloader
    dataloader, dataset = create_dataloader(train_path, imgsz, batch_size, gs, opt,
                                            hyp=hyp, augment=True, cache=opt.cache_images, rect=opt.rect,
                                            rank=rank, world_size=opt.world_size, workers=opt.workers)
    mlc = np.concatenate(dataset.labels, 0)[:, 0].max()  # max label class
    nb = len(dataloader)  # number of batches
    assert mlc < nc, 'Label class %g exceeds nc=%g in %s. Possible class labels are 0-%g' % (mlc, nc, opt.data, nc - 1)

    # Process 0
    if rank in [-1, 0]:
        ema.updates = start_epoch * nb // accumulate  # set EMA updates
        testloader = create_dataloader(test_path, imgsz_test, total_batch_size, gs, opt,
                                       hyp=hyp, augment=False, cache=opt.cache_images and not opt.notest, rect=True,
                                       rank=-1, world_size=opt.world_size, workers=opt.workers)[0]  # testloader

        if not opt.resume:
            labels = np.concatenate(dataset.labels, 0)
            c = torch.tensor(labels[:, 0])  # classes
            # cf = torch.bincount(c.long(), minlength=nc) + 1.  # frequency
            # model._initialize_biases(cf.to(device))
            plot_labels(labels, save_dir=log_dir)
            if tb_writer:
                # tb_writer.add_hparams(hyp, {})  # causes duplicate https://github.com/ultralytics/yolov5/pull/384
                tb_writer.add_histogram('classes', c, 0)

            # Anchors
            if not opt.noautoanchor:
                check_anchors(dataset, model=model, thr=hyp['anchor_t'], imgsz=imgsz)

    # Model parameters
    hyp['cls'] *= nc / 80.  # scale coco-tuned hyp['cls'] to current dataset
    model.nc = nc  # attach number of classes to model
    model.hyp = hyp  # attach hyperparameters to model
    model.gr = 1.0  # iou loss ratio (obj_loss = 1.0 or iou)
    model.class_weights = labels_to_class_weights(dataset.labels, nc).to(device)  # attach class weights
    model.names = names

    # Start training
    t0 = time.time()
    nw = max(round(hyp['warmup_epochs'] * nb), 1e3)  # number of warmup iterations, max(3 epochs, 1k iterations)
    # nw = min(nw, (epochs - start_epoch) / 2 * nb)  # limit warmup to < 1/2 of training
    maps = np.zeros(nc)  # mAP per class
    results = (0, 0, 0, 0, 0, 0, 0)  # P, R, mAP@.5, mAP@.5-.95, val_loss(box, obj, cls)
    scheduler.last_epoch = start_epoch - 1  # do not move
    scaler = amp.GradScaler(enabled=cuda)
    logger.info('Image sizes %g train, %g test\n'
                'Using %g dataloader workers\nLogging results to %s\n'
                'Starting training for %g epochs...' % (imgsz, imgsz_test, dataloader.num_workers, log_dir, epochs))

    for epoch in range(start_epoch, epochs):  # epoch ------------------------------------------------------------------
        model.train()

        # Update image weights (optional)
        if opt.image_weights:
            # Generate indices
            if rank in [-1, 0]:
                cw = model.class_weights.cpu().numpy() * (1 - maps) ** 2  # class weights
                iw = labels_to_image_weights(dataset.labels, nc=nc, class_weights=cw)  # image weights
                dataset.indices = random.choices(range(dataset.n), weights=iw, k=dataset.n)  # rand weighted idx
            # Broadcast if DDP
            if rank != -1:
                indices = (torch.tensor(dataset.indices) if rank == 0 else torch.zeros(dataset.n)).int()
                dist.broadcast(indices, 0)
                if rank != 0:
                    dataset.indices = indices.cpu().numpy()

        # Update mosaic border
        # b = int(random.uniform(0.25 * imgsz, 0.75 * imgsz + gs) // gs * gs)
        # dataset.mosaic_border = [b - imgsz, -b]  # height, width borders

        mloss = torch.zeros(4, device=device)  # mean losses
        if rank != -1:
            dataloader.sampler.set_epoch(epoch)
        pbar = enumerate(dataloader)
        logger.info(('\n' + '%10s' * 8) % ('Epoch', 'gpu_mem', 'box', 'obj', 'cls', 'total', 'targets', 'img_size'))
        if rank in [-1, 0]:
            pbar = tqdm(pbar, total=nb)  # progress bar
        optimizer.zero_grad()
        for i, (imgs, targets, paths, _) in pbar:  # batch -------------------------------------------------------------
            ni = i + nb * epoch  # number integrated batches (since train start)
            imgs = imgs.to(device, non_blocking=True).float() / 255.0  # uint8 to float32, 0-255 to 0.0-1.0

            # Warmup
            if ni <= nw:
                xi = [0, nw]  # x interp
                # model.gr = np.interp(ni, xi, [0.0, 1.0])  # iou loss ratio (obj_loss = 1.0 or iou)
                accumulate = max(1, np.interp(ni, xi, [1, nbs / total_batch_size]).round())
                for j, x in enumerate(optimizer.param_groups):
                    # bias lr falls from 0.1 to lr0, all other lrs rise from 0.0 to lr0
                    x['lr'] = np.interp(ni, xi, [hyp['warmup_bias_lr'] if j == 2 else 0.0, x['initial_lr'] * lf(epoch)])
                    if 'momentum' in x:
                        x['momentum'] = np.interp(ni, xi, [hyp['warmup_momentum'], hyp['momentum']])

            # Multi-scale
            if opt.multi_scale:
                sz = random.randrange(imgsz * 0.5, imgsz * 1.5 + gs) // gs * gs  # size
                sf = sz / max(imgs.shape[2:])  # scale factor
                if sf != 1:
                    ns = [math.ceil(x * sf / gs) * gs for x in imgs.shape[2:]]  # new shape (stretched to gs-multiple)
                    imgs = F.interpolate(imgs, size=ns, mode='bilinear', align_corners=False)

            # Forward
            with amp.autocast(enabled=cuda):
                pred = model(imgs)  # forward
                loss, loss_items = compute_loss(pred, targets.to(device), model)  # loss scaled by batch_size
                if rank != -1:
                    loss *= opt.world_size  # gradient averaged between devices in DDP mode

            # Backward
            scaler.scale(loss).backward()

            # Optimize
            if ni % accumulate == 0:
                scaler.step(optimizer)  # optimizer.step
                scaler.update()
                optimizer.zero_grad()
                if ema:
                    ema.update(model)

            # Print
            if rank in [-1, 0]:
                mloss = (mloss * i + loss_items) / (i + 1)  # update mean losses
                mem = '%.3gG' % (torch.cuda.memory_reserved() / 1E9 if torch.cuda.is_available() else 0)  # (GB)
                s = ('%10s' * 2 + '%10.4g' * 6) % (
                    '%g/%g' % (epoch, epochs - 1), mem, *mloss, targets.shape[0], imgs.shape[-1])
                pbar.set_description(s)

                # Plot
                if ni < 3:
                    f = str(log_dir / f'train_batch{ni}.jpg')  # filename
                    result = plot_images(images=imgs, targets=targets, paths=paths, fname=f)
                    # if tb_writer and result is not None:
                    # tb_writer.add_image(f, result, dataformats='HWC', global_step=epoch)
                    # tb_writer.add_graph(model, imgs)  # add model to tensorboard

            # end batch ------------------------------------------------------------------------------------------------

        # Scheduler
        lr = [x['lr'] for x in optimizer.param_groups]  # for tensorboard
        scheduler.step()

        # DDP process 0 or single-GPU
        if rank in [-1, 0]:
            # mAP
            if ema:
                ema.update_attr(model, include=['yaml', 'nc', 'hyp', 'gr', 'names', 'stride'])
            final_epoch = epoch + 1 == epochs
            if not opt.notest or final_epoch:  # Calculate mAP
                results, maps, times = test.test(opt.data,
                                                 batch_size=total_batch_size,
                                                 imgsz=imgsz_test,
                                                 model=ema.ema,
                                                 single_cls=opt.single_cls,
                                                 dataloader=testloader,
                                                 save_dir=log_dir,
                                                 plots=epoch == 0 or final_epoch,  # plot first and last
                                                 log_imgs=opt.log_imgs)

            # Write
            with open(results_file, 'a') as f:
                f.write(s + '%10.4g' * 7 % results + '\n')  # P, R, mAP@.5, mAP@.5-.95, val_loss(box, obj, cls)
            if len(opt.name) and opt.bucket:
                os.system('gsutil cp %s gs://%s/results/results%s.txt' % (results_file, opt.bucket, opt.name))

            # Log
            tags = ['train/giou_loss', 'train/obj_loss', 'train/cls_loss',  # train loss
                    'metrics/precision', 'metrics/recall', 'metrics/mAP_0.5', 'metrics/mAP_0.5:0.95',
                    'val/giou_loss', 'val/obj_loss', 'val/cls_loss',  # val loss
                    'x/lr0', 'x/lr1', 'x/lr2']  # params
            for x, tag in zip(list(mloss[:-1]) + list(results) + lr, tags):
                if tb_writer:
                    tb_writer.add_scalar(tag, x, epoch)  # tensorboard
                if wandb:
                    wandb.log({tag: x})  # W&B

            # Update best mAP
            fi = fitness(np.array(results).reshape(1, -1))  # weighted combination of [P, R, mAP@.5, mAP@.5-.95]
            if fi > best_fitness:
                best_fitness = fi

            # Save model
            save = (not opt.nosave) or (final_epoch and not opt.evolve)
            if save:
                with open(results_file, 'r') as f:  # create checkpoint
                    ckpt = {'epoch': epoch,
                            'best_fitness': best_fitness,
                            'training_results': f.read(),
                            'model': ema.ema,
                            'optimizer': None if final_epoch else optimizer.state_dict(),
                            'wandb_id': wandb_run.id if wandb else None}

                # Save last, best and delete
                torch.save(ckpt, last)
                if best_fitness == fi:
                    torch.save(ckpt, best)
                del ckpt
        # end epoch ----------------------------------------------------------------------------------------------------
    # end training

    if rank in [-1, 0]:
        # Strip optimizers
        n = opt.name if opt.name.isnumeric() else ''
        fresults, flast, fbest = log_dir / f'results{n}.txt', wdir / f'last{n}.pt', wdir / f'best{n}.pt'
        for f1, f2 in zip([wdir / 'last.pt', wdir / 'best.pt', results_file], [flast, fbest, fresults]):
            if os.path.exists(f1):
                os.rename(f1, f2)  # rename
                if str(f2).endswith('.pt'):  # is *.pt
                    strip_optimizer(f2)  # strip optimizer
                    os.system('gsutil cp %s gs://%s/weights' % (f2, opt.bucket)) if opt.bucket else None  # upload
        # Finish
        if not opt.evolve:
            plot_results(save_dir=log_dir)  # save as results.png
        logger.info('%g epochs completed in %.3f hours.\n' % (epoch - start_epoch + 1, (time.time() - t0) / 3600))

    dist.destroy_process_group() if rank not in [-1, 0] else None
    torch.cuda.empty_cache()
    return results

if __name__ == '__main__':
    ### Main arguments ###
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', type=str, default='yolov5s.pt', help='initial weights path')  # weights for transfer learning; change as needed
    parser.add_argument('--cfg', type=str, default='models/yolov5s_balance.yaml', help='model.yaml path')  # change
    parser.add_argument('--data', type=str, default='data/balance.yaml', help='data.yaml path')  # change
    parser.add_argument('--hyp', type=str, default='data/hyp.scratch.yaml', help='hyperparameters path')
    parser.add_argument('--epochs', type=int, default=300)  # change as needed
    parser.add_argument('--batch-size', type=int, default=21, help='total batch size for all GPUs')  # change as needed
    parser.add_argument('--img-size', nargs='+', type=int, default=[640, 640], help='[train, test] image sizes')
    parser.add_argument('--device', default='', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')  # for two GPUs use default='0,1'
    ### Main arguments ###

    ### Everything else keeps its default ###
    # Note: --device is registered only once (above); adding it a second time makes argparse raise an error
    parser.add_argument('--rect', action='store_true', help='rectangular training')
    parser.add_argument('--resume', nargs='?', const=True, default=False, help='resume most recent training')
    parser.add_argument('--nosave', action='store_true', help='only save final checkpoint')
    parser.add_argument('--notest', action='store_true', help='only test final epoch')
    parser.add_argument('--noautoanchor', action='store_true', help='disable autoanchor check')
    parser.add_argument('--evolve', action='store_true', help='evolve hyperparameters')
    parser.add_argument('--bucket', type=str, default='', help='gsutil bucket')
    parser.add_argument('--cache-images', action='store_true', help='cache images for faster training')
    parser.add_argument('--image-weights', action='store_true', help='use weighted image selection for training')
    parser.add_argument('--name', default='', help='renames experiment folder exp{N} to exp{N}_{name} if supplied')
    parser.add_argument('--multi-scale', action='store_true', help='vary img-size +/- 50%%')
    parser.add_argument('--single-cls', action='store_true', help='train as single-class dataset')
    parser.add_argument('--adam', action='store_true', help='use torch.optim.Adam() optimizer')
    parser.add_argument('--sync-bn', action='store_true', help='use SyncBatchNorm, only available in DDP mode')
    parser.add_argument('--local_rank', type=int, default=-1, help='DDP parameter, do not modify')
    parser.add_argument('--logdir', type=str, default='runs/', help='logging directory')
    parser.add_argument('--log-imgs', type=int, default=10, help='number of images for W&B logging, max 100')
    parser.add_argument('--workers', type=int, default=8, help='maximum number of dataloader workers')
    opt = parser.parse_args()

    # Set DDP variables
    opt.total_batch_size = opt.batch_size
    opt.world_size = int(os.environ['WORLD_SIZE']) if 'WORLD_SIZE' in os.environ else 1
    opt.global_rank = int(os.environ['RANK']) if 'RANK' in os.environ else -1
    set_logging(opt.global_rank)
    if opt.global_rank in [-1, 0]:
        check_git_status()

    # Resume
    if opt.resume:  # resume an interrupted run
        ckpt = opt.resume if isinstance(opt.resume, str) else get_latest_run()  # specified or most recent path
        log_dir = Path(ckpt).parent.parent  # runs/exp0
        assert os.path.isfile(ckpt), 'ERROR: --resume checkpoint does not exist'
        with open(log_dir / 'opt.yaml') as f:
            opt = argparse.Namespace(**yaml.load(f, Loader=yaml.FullLoader))  # replace
        opt.cfg, opt.weights, opt.resume = '', ckpt, True
        logger.info('Resuming training from %s' % ckpt)

    else:
        # opt.hyp = opt.hyp or ('hyp.finetune.yaml' if opt.weights else 'hyp.scratch.yaml')
        opt.data, opt.cfg, opt.hyp = check_file(opt.data), check_file(opt.cfg), check_file(opt.hyp)  # check files
        assert len(opt.cfg) or len(opt.weights), 'either --cfg or --weights must be specified'
        opt.img_size.extend([opt.img_size[-1]] * (2 - len(opt.img_size)))  # extend to 2 sizes (train, test)
        log_dir = increment_dir(Path(opt.logdir) / 'exp', opt.name)  # runs/exp1

    # DDP mode
    device = select_device(opt.device, batch_size=opt.batch_size)
    if opt.local_rank != -1:
        assert torch.cuda.device_count() > opt.local_rank
        torch.cuda.set_device(opt.local_rank)
        device = torch.device('cuda', opt.local_rank)
        dist.init_process_group(backend='nccl', init_method='env://')  # distributed backend
        assert opt.batch_size % opt.world_size == 0, '--batch-size must be multiple of CUDA device count'
        opt.batch_size = opt.total_batch_size // opt.world_size

    # Hyperparameters
    with open(opt.hyp) as f:
        hyp = yaml.load(f, Loader=yaml.FullLoader)  # load hyps
        if 'box' not in hyp:
            warn('Compatibility: %s missing "box" which was renamed from "giou" in %s' %
                 (opt.hyp, 'https://github.com/ultralytics/yolov5/pull/1120'))
            hyp['box'] = hyp.pop('giou')

    # Train
    logger.info(opt)
    if not opt.evolve:
        tb_writer, wandb = None, None  # init loggers
        if opt.global_rank in [-1, 0]:
            # Tensorboard
            logger.info(f'Start Tensorboard with "tensorboard --logdir {opt.logdir}", view at http://localhost:6006/')
            tb_writer = SummaryWriter(log_dir=log_dir)  # runs/exp0

            # W&B
            try:
                import wandb

                assert os.environ.get('WANDB_DISABLED') != 'true'
                logger.info("Weights & Biases logging enabled, to disable set os.environ['WANDB_DISABLED'] = 'true'")
            except (ImportError, AssertionError):
                opt.log_imgs = 0
                logger.info("Install Weights & Biases for experiment logging via 'pip install wandb' (recommended)")

        train(hyp, opt, device, tb_writer, wandb)

    # Evolve hyperparameters (optional)
    else:
        # Hyperparameter evolution metadata (mutation scale 0-1, lower_limit, upper_limit)
        meta = {'lr0': (1, 1e-5, 1e-1),  # initial learning rate (SGD=1E-2, Adam=1E-3)
                'lrf': (1, 0.01, 1.0),  # final OneCycleLR learning rate (lr0 * lrf)
                'momentum': (0.3, 0.6, 0.98),  # SGD momentum/Adam beta1
                'weight_decay': (1, 0.0, 0.001),  # optimizer weight decay
                'warmup_epochs': (1, 0.0, 5.0),  # warmup epochs (fractions ok)
                'warmup_momentum': (1, 0.0, 0.95),  # warmup initial momentum
                'warmup_bias_lr': (1, 0.0, 0.2),  # warmup initial bias lr
                'box': (1, 0.02, 0.2),  # box loss gain
                'cls': (1, 0.2, 4.0),  # cls loss gain
                'cls_pw': (1, 0.5, 2.0),  # cls BCELoss positive_weight
                'obj': (1, 0.2, 4.0),  # obj loss gain (scale with pixels)
                'obj_pw': (1, 0.5, 2.0),  # obj BCELoss positive_weight
                'iou_t': (0, 0.1, 0.7),  # IoU training threshold
                'anchor_t': (1, 2.0, 8.0),  # anchor-multiple threshold
                'anchors': (2, 2.0, 10.0),  # anchors per output grid (0 to ignore)
                'fl_gamma': (0, 0.0, 2.0),  # focal loss gamma (efficientDet default gamma=1.5)
                'hsv_h': (1, 0.0, 0.1),  # image HSV-Hue augmentation (fraction)
                'hsv_s': (1, 0.0, 0.9),  # image HSV-Saturation augmentation (fraction)
                'hsv_v': (1, 0.0, 0.9),  # image HSV-Value augmentation (fraction)
                'degrees': (1, 0.0, 45.0),  # image rotation (+/- deg)
                'translate': (1, 0.0, 0.9),  # image translation (+/- fraction)
                'scale': (1, 0.0, 0.9),  # image scale (+/- gain)
                'shear': (1, 0.0, 10.0),  # image shear (+/- deg)
                'perspective': (0, 0.0, 0.001),  # image perspective (+/- fraction), range 0-0.001
                'flipud': (1, 0.0, 1.0),  # image flip up-down (probability)
                'fliplr': (0, 0.0, 1.0),  # image flip left-right (probability)
                'mosaic': (1, 0.0, 1.0),  # image mosaic (probability)
                'mixup': (1, 0.0, 1.0)}  # image mixup (probability)

        assert opt.local_rank == -1, 'DDP mode not implemented for --evolve'
        opt.notest, opt.nosave = True, True  # only test/save final epoch
        # ei = [isinstance(x, (int, float)) for x in hyp.values()]  # evolvable indices
        yaml_file = Path(opt.logdir) / 'evolve' / 'hyp_evolved.yaml'  # save best result here
        if opt.bucket:
            os.system('gsutil cp gs://%s/evolve.txt .' % opt.bucket)  # download evolve.txt if exists

        for _ in range(300):  # generations to evolve
            if os.path.exists('evolve.txt'):  # if evolve.txt exists: select best hyps and mutate
                # Select parent(s)
                parent = 'single'  # parent selection method: 'single' or 'weighted'
                x = np.loadtxt('evolve.txt', ndmin=2)
                n = min(5, len(x))  # number of previous results to consider
                x = x[np.argsort(-fitness(x))][:n]  # top n mutations
                w = fitness(x) - fitness(x).min()  # weights
                if parent == 'single' or len(x) == 1:
                    # x = x[random.randint(0, n - 1)]  # random selection
                    x = x[random.choices(range(n), weights=w)[0]]  # weighted selection
                elif parent == 'weighted':
                    x = (x * w.reshape(n, 1)).sum(0) / w.sum()  # weighted combination

                # Mutate
                mp, s = 0.8, 0.2  # mutation probability, sigma
                npr = np.random
                npr.seed(int(time.time()))
                g = np.array([x[0] for x in meta.values()])  # gains 0-1
                ng = len(meta)
                v = np.ones(ng)
                while all(v == 1):  # mutate until a change occurs (prevent duplicates)
                    v = (g * (npr.random(ng) < mp) * npr.randn(ng) * npr.random() * s + 1).clip(0.3, 3.0)
                for i, k in enumerate(hyp.keys()):  # plt.hist(v.ravel(), 300)
                    hyp[k] = float(x[i + 7] * v[i])  # mutate

            # Constrain to limits
            for k, v in meta.items():
                hyp[k] = max(hyp[k], v[1])  # lower limit
                hyp[k] = min(hyp[k], v[2])  # upper limit
                hyp[k] = round(hyp[k], 5)  # significant digits

            # Train mutation
            results = train(hyp.copy(), opt, device)

            # Write mutation results
            print_mutation(hyp.copy(), results, yaml_file, opt.bucket)

        # Plot results
        plot_evolution(yaml_file)
        print(f'Hyperparameter evolution complete. Best results saved as: {yaml_file}\n'
              f'Command to train a new model with these hyperparameters: $ python train.py --hyp {yaml_file}')
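The approach of baking project settings into argparse defaults means the script runs from an IDE with no arguments, while any value can still be overridden on the command line. The sketch below illustrates just that pattern with a reduced, hypothetical `build_parser` helper (only a handful of the options are reproduced); it also shows that argparse, with its default conflict handling, rejects an option that is registered twice.

```python
import argparse


def build_parser():
    """Reduced parser whose defaults mirror the project-specific values."""
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', type=str, default='yolov5s.pt')
    parser.add_argument('--cfg', type=str, default='models/yolov5s_balance.yaml')
    parser.add_argument('--data', type=str, default='data/balance.yaml')
    parser.add_argument('--epochs', type=int, default=300)
    parser.add_argument('--batch-size', type=int, default=21)
    return parser


if __name__ == '__main__':
    parser = build_parser()

    # Running from the IDE: no arguments, so every default applies
    ide_run = parser.parse_args([])
    print(ide_run.epochs, ide_run.batch_size)

    # Running from a shell: explicit flags override the defaults
    cli_run = parser.parse_args(['--epochs', '50', '--batch-size', '8'])
    print(cli_run.epochs, cli_run.batch_size)

    # Registering an existing option again is an error
    try:
        parser.add_argument('--epochs', type=int, default=100)
    except argparse.ArgumentError as err:
        print('duplicate option rejected:', err)
```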

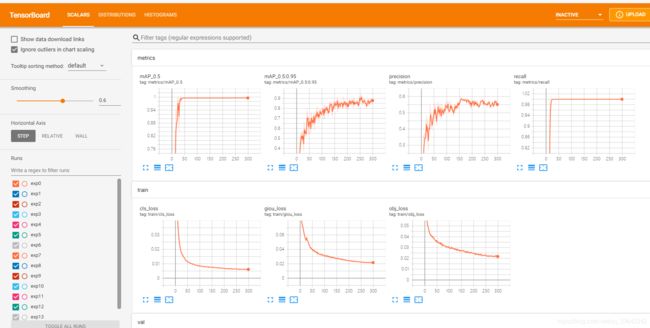

tensorboard

tensorboard --logdir=runs/

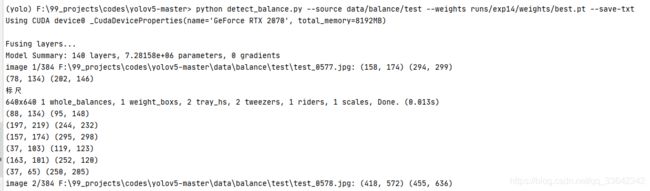

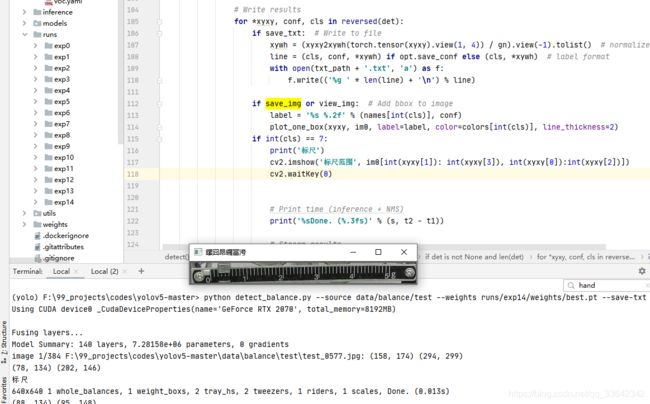

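4 Detection

In detect.py, the final detection results are available right after the `# Write results` line; that is the place to hook in for downstream engineering work.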

python detect_balance.py --source data/balance/test --weights runs/exp14/weights/best.pt --save-txt
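For downstream use, the txt files written by `--save-txt` can be read back into pixel boxes. The sketch below assumes each line has the form `class x_center y_center width height` with values normalized to the image size, optionally followed by a confidence; verify this against the files your yolov5 version actually writes. The function name `parse_detection_text` and the sample values are hypothetical.

```python
def parse_detection_text(text, img_w, img_h, names=None):
    """Parse 'class cx cy w h [conf]' lines (normalized) into pixel-space boxes."""
    detections = []
    for raw in text.splitlines():
        parts = raw.split()
        if len(parts) < 5:
            continue  # skip blank or malformed lines
        cls = int(float(parts[0]))
        cx, cy, w, h = (float(v) for v in parts[1:5])
        conf = float(parts[5]) if len(parts) > 5 else None
        detections.append({
            'class_id': cls,
            'name': names[cls] if names else str(cls),
            'xmin': (cx - w / 2) * img_w,
            'ymin': (cy - h / 2) * img_h,
            'xmax': (cx + w / 2) * img_w,
            'ymax': (cy + h / 2) * img_h,
            'conf': conf,
        })
    return detections


if __name__ == '__main__':
    # Hypothetical file content for a 640x480 image
    sample = '4 0.5 0.5 0.25 0.5\n8 0.1 0.2 0.1 0.2\n'
    names = ['whole_balance', 'weight_box', 'tray_h', 'tray_v', 'tweezer',
             'rider', 'weight', 'scale', 'beaker', 'metal_block',
             'hand_tweezer', 'tweezer_rider', 'tweezer_weight', 'hand_screw']
    for det in parse_detection_text(sample, 640, 480, names):
        print(det)
```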

III. ncnn

os: ubuntu 18.04

Test phone OS: android 8.1.0

Test phone RAM: 6GB

I. Install the java jdk

1. Update the package list:

sudo apt-get update

2. Install openjdk-8-jdk:

sudo apt-get install openjdk-8-jdk

3. Check the java version to confirm the installation succeeded:

java -version

II. Install Android Studio

You can follow this guide in full:

https://jingyan.baidu.com/article/86112f13a46ff42736978740.html

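In step 2, just right-click and extract, keeping the installer files in the same folder;

In step 7, do not tick the option to install the virtual device;

Stop at the Android Studio welcome screen.

III. Open the project

First extract the project archive;

On the Android Studio welcome screen, click the second item, 'Open an existing Android Studio project';

Point it at the android_YOLOV5_NCNN project (a complete Android project usually shows an icon in front of its name when you browse to it).

After it opens, many dependencies need to be installed, and the IDE prompts for each of them. I forgot to write them down while installing, so ask me if you get stuck…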

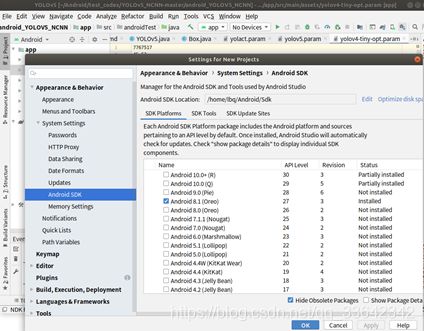

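IV. Install the sdk

Tick the sdk that matches your Android phone (click SDK Manager at the far right of the toolbar).

The 5 items on the SDK Tools tab must be installed as well (some are already installed).

Once all dependencies are installed successfully, every warning marker on the project files disappears.

V. Debug on the phone

1) Enable developer options on the phone and connect it over adb: https://jingyan.baidu.com/article/066074d6a1878083c31cb01c.html

If the adb connection has problems, the IDE shows the steps to fix them on the right-hand side; follow them until the IDE detects the phone.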

![]()

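2) Click the green run arrow in the figure above ☝ to install the app on the phone and test it directly. Keep an eye on the phone while it runs, and grant every permission it asks for.

VI. Code structure

1) External Build Files

The most important file here is CMakeLists.txt, which configures the structure of the whole project. If you want faster detection, use one of the other graphics backends that ncnn officially provides instead of vulkan.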

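2) Gradle Scripts

The dependencies installed earlier are defined here, so you can leave these files alone.

3) app

The code is fairly tidy and is organized by programming language.

1. java (com.wzt.yolov5) directory

The main program and the code that invokes the models

2. cpp directory

The actual inference code for each model, each implemented in its own cpp file; you can try calling this code from VS;

3. assets directory

Each model's network structure (.param, viewable with netron) and weight file (.bin)

4. res directory

UI-related files

The other two directories can be ignored.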
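Besides netron, a text-format .param file can be inspected with a few lines of code. The sketch below assumes the plain-text ncnn layout: a first line holding the magic number 7767517, a second line with the layer count and blob count, then one layer per line starting with the layer type. The function name `read_ncnn_param_header` and the tiny sample network are made up for illustration.

```python
def read_ncnn_param_header(text):
    """Return (layer_count, blob_count, layer_types) from the text of a .param file."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    if not lines or lines[0].strip() != '7767517':
        raise ValueError('not a text-format ncnn .param file')
    layer_count, blob_count = (int(v) for v in lines[1].split()[:2])
    layer_types = [ln.split()[0] for ln in lines[2:]]
    return layer_count, blob_count, layer_types


if __name__ == '__main__':
    # Hypothetical three-layer network, not taken from the project's assets
    sample = '\n'.join([
        '7767517',
        '3 3',
        'Input            data   0 1 data',
        'Convolution      conv1  1 1 data conv1 0=16 1=3',
        'ReLU             relu1  1 1 conv1 relu1',
    ])
    print(read_ncnn_param_header(sample))
```

To inspect a real model, pass the contents of the .param file from the assets directory, for example `read_ncnn_param_header(open(path).read())`.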

IV. tensorrt

For the details, refer to this article; I followed it exactly, so here I only list the versions of the key libraries I used.

- OpenCV: 4.5

- tensorrt: 7.0.0.11

- vs2019

- cmake/windows: 3.17.1