Reinforcement Learning Real-World Challenge: Learning to Make Fair Promotion Strategies (Training Pipeline)

Official competition page: https://codalab.lisn.upsaclay.fr/competitions/823#learn_the_details-overview

Chinese-language descriptions from the Deep Reinforcement Learning Lab:

http://deeprl.neurondance.com/d/583-ai

http://deeprl.neurondance.com/d/584-ai

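This article builds on my earlier post: "Problem Analysis: AI Decision-Making • Reinforcement Learning Real-World Challenge: Learning to Make Fair Promotion Strategies".

Here we go straight to the training process.

Data preprocessing

Once we have the CSV file, we need to sort its data into separate groups so that later processing is easier. The following is the content of data_preprocess.py: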

import sys

import pandas as pd
import numpy as np


def data_preprocess(offline_data_path: str, base_days=30):
    """Define user states from the original offline data and generate new offline data containing them.

    Args:
        offline_data_path: Path of the original data file
        base_days: Number of historical days used to define the initial user state

    Returns:
        new_offline_data (pd.DataFrame): New offline data containing user states. A user state has
            3 dimensions: historical total order number, historical average order number over
            non-zero days, and historical average order fee over non-zero days
        user_states_by_day (np.ndarray): Array containing all user states;
            user_states_by_day[0] holds the states of all users on the first day
        evaluation_start_states (np.ndarray): States for the first day of validation in this competition
        new_offline_data_dict (dict): The same data as new_offline_data, in dictionary form
    """
    # Read the CSV file into a table
    df = pd.read_csv(offline_data_path)
    # Total number of days in the data
    total_days = df['step'].max() + 1
    # Keep three columns: the user index and the two user-action columns
    useful_df = df[['index', 'day_order_num', 'day_average_order_fee']]
    # Every user owns total_days consecutive rows, so this is the first row index of each user
    index = np.arange(0, df.shape[0], total_days)
    # Row indices used to define each user's initial state, flattened into a 1-D array
    initial_data_index = np.array([np.arange(i, i+base_days) for i in index]).flatten()
    # All remaining row indices (the rollout days), flattened into a 1-D array
    rollout_data_index = np.array([np.arange(i+base_days, i+total_days) for i in index]).flatten()
    # Data (three columns) that defines each user's initial state
    initial_data = useful_df.iloc[initial_data_index, :]
    # 1000 x 2 table: for each of the 1000 users, the total order number over the first 30 days
    # and the number of days with a non-zero order number
    day_order_num_df = initial_data.groupby('index')['day_order_num'].agg(total_num='sum', nozero_time=np.count_nonzero)
    # Add a column with the average order number over non-zero days
    day_order_num_df['average_num'] = day_order_num_df.apply(lambda x: x['total_num'] / x['nozero_time'] if x['nozero_time'] > 0 else 0, axis=1)
    # 1000 x 2 table: for each user, the sum of day_average_order_fee over the first 30 days
    # and the number of days with a non-zero fee
    day_order_average_fee_df = initial_data.groupby('index')['day_average_order_fee'].agg(total_fee='sum', nozero_time=np.count_nonzero)
    # Add a column with the average of day_average_order_fee over non-zero days
    day_order_average_fee_df['average_fee'] = day_order_average_fee_df.apply(lambda x: x['total_fee'] / x['nozero_time'] if x['nozero_time'] > 0 else 0, axis=1)
    # Concatenate total order number, average order number and average fee into one table
    # that defines each user's initial state
    initial_states = pd.concat([day_order_num_df[['total_num', 'average_num']], day_order_average_fee_df[['average_fee']]], axis=1)
    # Number of users (trajectories)
    traj_num = initial_states.shape[0]
    # Convert to a numpy array
    initial_states = initial_states.to_numpy()
    traj_list = []
    final_state_list = []
    for t in range(traj_num):
        # State of the t-th user (determined by the first 30 days)
        state = initial_states[t, :]
        traj_list.append(state)
        index = np.arange(t * total_days, (t+1) * total_days)
        # Actions of this user over the last 30 days
        user_act = useful_df.to_numpy()[index, 1:][base_days:]
        # Walk through the last 30 days one by one and compute the user state after each day
        for i in range(total_days-base_days):
            cur_act = user_act[i]
            next_state = np.empty(state.shape)
            # total_num / average_num recovers the number of non-zero days seen so far
            size = (state[0] / state[1]) if state[1] > 0 else 0
            next_state[0] = state[0] + cur_act[0]
            next_state[1] = state[1] + 1 / (size + 1) * (cur_act[0] - state[1]) * float(cur_act[0] > 0.0)
            next_state[2] = state[2] + 1 / (size + 1) * (cur_act[1] - state[2]) * float(cur_act[1] > 0.0)
            state = next_state
            # The state after the last day goes to a separate list
            if (i + 1) < total_days-base_days:
                traj_list.append(state)
            else:
                final_state_list.append(state)
    history_state_array = np.array(traj_list)
    evaluation_start_states = np.array(final_state_list)
    # Coupon actions over the last 30 days
    coupon_action_array = df.iloc[rollout_data_index, 1:3].to_numpy()
    # User actions over the last 30 days
    user_action_array = df.iloc[rollout_data_index, 3:5].to_numpy()
    # 2-D array of the form [[0], [1], ..., [29], [0], [1], ..., [29], ...], repeated once per user
    step_list = [[j] for _ in range(traj_num) for j in range(total_days - base_days)]
    # 2-D array in which each user index is repeated once per rollout day: [[0], ..., [0], [1], ..., [1], ...]
    traj_index_list = [[i] for i in range(traj_num) for _ in range(total_days - base_days)]
    steps, trajs = np.array(step_list), np.array(traj_index_list)
    columns = ['index', 'total_num', 'average_num', 'average_fee', 'day_deliver_coupon_num', 'coupon_discount', 'day_order_num', 'day_average_order_fee', 'step']
    # Put everything into one table with 9 columns: user id, state, coupon action, user action,
    # and the day number within the last 30 days
    new_offline_data = pd.DataFrame(data=np.concatenate([trajs, history_state_array, coupon_action_array, user_action_array, steps], -1), columns=columns)
    index = np.arange(0, history_state_array.shape[0], total_days-base_days)
    # Regroup history_state_array into a 3-D array indexed by day, then by user
    user_states_by_day = np.array([history_state_array[index+i] for i in range(total_days-base_days)])
    new_offline_data_dict = {"state": history_state_array, "action_1": coupon_action_array, "action_2": user_action_array, "index": index + (total_days-base_days)}
    # Four return values: the full pandas table, the array of user states over the last 30 days,
    # the array of user states after the last day, and a dictionary with much the same content as the table
    return new_offline_data, user_states_by_day, evaluation_start_states, new_offline_data_dict


if __name__ == "__main__":
    offline_data = sys.argv[1]
    new_offline_data, user_states_by_day, evaluation_start_states, new_offline_data_dict = data_preprocess(offline_data)
    print(new_offline_data.shape)
    print(user_states_by_day.shape)
    print(evaluation_start_states.shape)
    # Save the full table as a CSV file
    new_offline_data.to_csv('offline_592_3_dim_state.csv', index=False)
    # Save the array of user states by day as an .npy file
    np.save('user_states_by_day.npy', user_states_by_day)
    # Save the array of user states after the last day as an .npy file
    np.save('evaluation_start_states.npy', evaluation_start_states)
    # Save the dictionary as an .npz file
    np.savez('venv.npz', **new_offline_data_dict)

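This file has to be run from a terminal rather than from inside an editor such as PyCharm, because it reads the data path from the command line. Make sure data_preprocess.py and offline_592_1000.csv are in the same folder, cd into that folder in the terminal, and run: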

python data_preprocess.py offline_592_1000.csv

The four files described above are then generated in that directory.
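The state update inside the loop of data_preprocess.py is an incremental mean over non-zero days: total_num / average_num recovers how many non-zero days have been seen, so no separate counter is stored. The following dependency-free sketch reproduces that rule for the order-number dimension only; the function name and the sample data are made up for illustration.

```python
def update_nonzero_mean(total, mean, value):
    """Return (new_total, new_mean) after observing one day's order number.

    `total` is the running sum and `mean` the running average over non-zero days.
    """
    # total / mean recovers the number of non-zero days seen so far
    count = total / mean if mean > 0 else 0
    new_total = total + value
    if value > 0:
        new_mean = mean + (value - mean) / (count + 1)
    else:
        new_mean = mean  # a zero-order day leaves the average untouched
    return new_total, new_mean


daily_orders = [2, 0, 3, 0, 1]  # made-up example data
total, mean = 0, 0.0
for orders in daily_orders:
    total, mean = update_nonzero_mean(total, mean, orders)

nonzero = [x for x in daily_orders if x > 0]
print(total, mean)                  # result of the incremental update
print(sum(nonzero) / len(nonzero))  # batch average over non-zero days, for comparison
```

Both printed averages agree, which is why the three-dimensional state is enough to keep updating the averages day by day.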

Code optimization

import sys

import pandas as pd
import numpy as np

import user_states


def data_preprocess(offline_data_path: str, base_days=30):
    """Define user state from original offline data and generate new offline data containing user state

    Args:
        offline_data_path: Path of original offline data
        base_days: The number of historical days required for defining the initial user state

    Return:
        new_offline_data(pd.DataFrame): New offline data containing user state.
            User state, 3 dimensions: historical total order number, historical average of
            non-zero day order number, and historical average of non-zero day order fee
        user_states_by_day(np.ndarray): An array of shape (number_of_days, number_of_users, 3), contains all the user states.
            If base_days is equal to 30, the number_of_days is equal to total_days - base_days, 30.
            And user_states_by_day[0] means all the user states in the first day.
        evaluation_start_states(np.ndarray): the states for the first day of validation in this competition
        venv_dataset: New offline data in dictionary form, grouped by states, user actions and coupon actions.
    """
    df = pd.read_csv(offline_data_path)
    total_users = df["index"].max() + 1
    total_days = df["step"].max() + 1
    venv_days = total_days - base_days
    state_size = len(user_states.get_state_names())
    # Prepare processed data
    user_states_by_day = []
    user_actions_by_day = []
    coupon_actions_by_day = []
    evaluation_start_states = np.empty((total_users, state_size))
    # Recurrently unroll states from offline dataset
    states = np.zeros((total_users, state_size))  # State of day 0 to be all zero
    with np.errstate(invalid="ignore", divide="ignore"):  # Ignore nan and inf result from division by 0
        for current_day, day_user_data in df.groupby("step"):
            # Fetch field data
            day_coupon_num = day_user_data["day_deliver_coupon_num"].to_numpy()
            coupon_discount = day_user_data["coupon_discount"].to_numpy()
            day_order_num = day_user_data["day_order_num"].to_numpy()
            day_average_fee = day_user_data["day_average_order_fee"].to_numpy()
            # Unroll next state
            next_states = user_states.get_next_state(states, day_order_num, day_average_fee, np.empty(states.shape))
            # Collect data for new offline dataset
            if current_day >= base_days:
                user_states_by_day.append(states)
                user_actions_by_day.append(np.column_stack((day_order_num, day_average_fee)))
                coupon_actions_by_day.append(np.column_stack((day_coupon_num, coupon_discount)))
            # The last step value is total_days - 1, so that is the day whose next state we keep
            if current_day >= total_days - 1:
                evaluation_start_states = next_states  # Evaluation starts from the final state in the offline dataset
            states = next_states
    # Group states by users (by trajectory) and generate processed offline dataset
    venv_dataset = {
        "state": np.swapaxes(user_states_by_day, 0, 1).reshape((-1, state_size)),  # Sort by users (trajectory)
        "action_1": np.swapaxes(coupon_actions_by_day, 0, 1).reshape((-1, 2)),
        "action_2": np.swapaxes(user_actions_by_day, 0, 1).reshape((-1, 2)),
        "index": np.arange(venv_days, (total_users + 1) * venv_days, venv_days)  # End index for each trajectory
    }
    traj_indices, step_indices = np.mgrid[0:total_users, 0:venv_days].reshape((2, -1, 1))  # Prepare indices
    new_offline_data = pd.DataFrame(
        data=np.concatenate([traj_indices, venv_dataset["state"], venv_dataset["action_1"], venv_dataset["action_2"], step_indices], -1),
        columns=["index", *user_states.get_state_names(), "day_deliver_coupon_num", "coupon_discount", "day_order_num", "day_average_order_fee", "step"])
    return new_offline_data, np.array(user_states_by_day), evaluation_start_states, venv_dataset


if __name__ == "__main__":
    offline_data = sys.argv[1]
    new_offline_data, user_states_by_day, evaluation_start_states, new_offline_data_dict = data_preprocess(offline_data)
    print(new_offline_data.shape)
    print(user_states_by_day.shape)
    print(evaluation_start_states.shape)
    new_offline_data.to_csv('offline_592_3_dim_state.csv', index=False)
    np.save('user_states_by_day.npy', user_states_by_day)
    np.save('evaluation_start_states.npy', evaluation_start_states)
    np.savez('venv.npz', **new_offline_data_dict)
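The optimized version collects data day by day and then regroups it by user with np.swapaxes and reshape, while the "index" entry stores where each trajectory ends. The toy sketch below mimics that regrouping with plain Python lists so it runs without dependencies; the sizes and the string labels are made up for illustration.

```python
# Toy sizes: 2 users, 3 days, 1-dimensional state (all values are made up)
states_by_day = [
    [["u0d0"], ["u1d0"]],  # day 0: one state per user
    [["u0d1"], ["u1d1"]],  # day 1
    [["u0d2"], ["u1d2"]],  # day 2
]
total_users, venv_days = 2, 3

# Counterpart of np.swapaxes(x, 0, 1): go from [day][user] to [user][day]
states_by_user = [list(per_user) for per_user in zip(*states_by_day)]

# Counterpart of reshape((-1, state_size)): concatenate the trajectories
flat_states = [state for traj in states_by_user for state in traj]

# Exclusive end index of every trajectory, as in venv_dataset["index"]
end_indices = list(range(venv_days, (total_users + 1) * venv_days, venv_days))

print(flat_states)
print(end_indices)
```

Each user's days end up contiguous in the flattened list, and the end indices mark the boundaries between trajectories.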

The corresponding functions are then wrapped in user_states.py:

import numpy as np


def get_state_names():
    return ["total_num", "average_num", "average_fee"]


# Defines how the next state is computed
def get_next_state(states, day_order_num, day_average_fee, next_states):
    # If you define day_order_num to be continuous instead of discrete/category, apply round function here.
    day_order_num = day_order_num.clip(0, 6).round()
    day_average_fee = day_average_fee.clip(0.0, 100.0)
    # Rules on the user action: if either action is 0 (order num or fee), the other action should also be 0.
    day_order_num[day_average_fee <= 0.0] = 0
    day_average_fee[day_order_num <= 0] = 0.0
    # We compute the days accumulated for each user's state by dividing the total order num with average order num
    accumulated_days = states[..., 0] / states[..., 1]
    accumulated_days[states[..., 1] == 0.0] = 0.0
    # Compute next state
    next_states[..., 0] = states[..., 0] + day_order_num  # Total num
    next_states[..., 1] = states[..., 1] + 1 / (accumulated_days + 1) * (day_order_num - states[..., 1])  # Average order num
    next_states[..., 2] = states[..., 2] + 1 / (accumulated_days + 1) * (day_average_fee - states[..., 2])  # Average order fee across days
    return next_states


# Defines how user states are turned into the neural network input, here an array of 14 elements
def states_to_observation(states: np.ndarray, day_total_order_num: int=0, day_roi: float=0.0):
    """Reduce the two-dimensional sequence of states of all users to a state of a user community

    A naive approach is adopted: mean, standard deviation, maximum and minimum values are calculated separately for each dimension.
    Additionally, we add day_total_order_num and day_roi.

    Args:
        states(np.ndarray): A two-dimensional array containing individual states for each user
        day_total_order_num(int): The total order number of the users in one day
        day_roi(float): The day ROI of the users

    Return:
        The states of a user community (np.array)
    """
    assert len(states.shape) == 2
    mean_obs = np.mean(states, axis=0)
    std_obs = np.std(states, axis=0)
    max_obs = np.max(states, axis=0)
    min_obs = np.min(states, axis=0)
    day_total_order_num, day_roi = np.array([day_total_order_num]), np.array([day_roi])
    return np.concatenate([mean_obs, std_obs, max_obs, min_obs, day_total_order_num, day_roi], 0)
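To see where the 14 observation elements come from, the sketch below rebuilds the same aggregation with the standard library only: four statistics for each of the three state dimensions, plus day_total_order_num and day_roi. The function name community_observation and the sample states are made up for illustration; statistics.pstdev is used because it is the population standard deviation, which is also what np.std computes by default.

```python
from statistics import mean, pstdev


def community_observation(states, day_total_order_num=0, day_roi=0.0):
    """Aggregate per-user states (a list of 3-element rows) into one observation."""
    columns = list(zip(*states))  # one tuple per state dimension
    means = [mean(c) for c in columns]
    stds = [pstdev(c) for c in columns]  # population std, like np.std's default
    maxs = [max(c) for c in columns]
    mins = [min(c) for c in columns]
    return means + stds + maxs + mins + [day_total_order_num, day_roi]


# Made-up states of three users: total_num, average_num, average_fee
states = [
    [10.0, 2.0, 30.0],
    [4.0, 1.0, 20.0],
    [0.0, 0.0, 0.0],
]
obs = community_observation(states, day_total_order_num=5, day_roi=8.0)
print(len(obs))  # 4 statistics x 3 dimensions + 2 extra values
print(obs)
```

The observation size therefore depends only on the number of state dimensions, not on the number of users, so changing the state definition also changes obs_size in the environment.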

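Using the Revive SDK

For this part, please refer to my earlier post: "Offline Reinforcement Learning: Using the Revive SDK".

In this project, the Revive data file is the venv.npz saved above, and the configuration file venv.yaml is as follows: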

metadata:
  graph:
    action_1:
    - state
    action_2:
    - state
    - action_1
    next_state:
    - action_2
    - state
  columns:
  - total_num:
      dim: state
      # type: discrete
      # num: 181
      type: continuous
      min: 0
      max: 180
  - average_num:
      dim: state
      type: continuous
      min: 0
      max: 6
  - average_fee:
      dim: state
      type: continuous
      min: 0
      max: 100
  - day_deliver_coupon_num:
      dim: action_1
      # type: discrete
      # num: 6
      type: continuous
      min: 0
      max: 5
      # type: category
      # values: [0, 1, 2, 3, 4, 5]
  - coupon_discount:
      dim: action_1
      # type: discrete
      # num: 8
      type: continuous
      min: 0.6
      max: 0.95
      # type: category
      # values: [0.95, 0.90, 0.85, 0.80, 0.75, 0.70, 0.65, 0.60]
  - day_order_num:
      dim: action_2
      # type: discrete
      # num: 7
      type: continuous
      min: 0
      max: 6
      # type: category
      # values: [0, 1, 2, 3, 4, 5, 6]
  - day_average_order_fee:
      dim: action_2
      type: continuous
      min: 0
      max: 100
  expert_functions:
    next_state:
      'node_function' : 'venv.get_next_state'

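This file goes in the data folder. It defines the graph of transitions between the nodes and declares which expert function is needed; a node that has an expert function does not need a neural network. The expert function is placed in venv.py in the data folder: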

from typing import Dict

import torch

import user_states


def get_next_state(inputs : Dict[str, torch.Tensor]) -> torch.Tensor:
    # Extract variables from inputs
    states = inputs["state"]
    user_action = inputs["action_2"]
    day_order_num = user_action[..., 0]
    day_avg_fee = user_action[..., 1]
    # Construct next_states array with current state's shape and device
    next_states = states.new_empty(states.shape)
    user_states.get_next_state(states, day_order_num, day_avg_fee, next_states)
    return next_states
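The graph section of venv.yaml fixes the order in which the nodes are evaluated: the coupon action depends on the state, the user action depends on both, and the next state is produced by the expert function. The sketch below only illustrates that dependency order using Python's standard graphlib module (Python 3.9+); it is not how Revive itself processes the file, and the variable names are made up.

```python
from graphlib import TopologicalSorter

# Each node maps to the nodes it takes as input, mirroring the `graph` section of venv.yaml
graph = {
    "action_1": ["state"],
    "action_2": ["state", "action_1"],
    "next_state": ["action_2", "state"],
}
expert_nodes = {"next_state"}  # computed by venv.get_next_state instead of a network

order = list(TopologicalSorter(graph).static_order())
for node in order:
    if node not in graph:
        kind = "input read from the data"
    elif node in expert_nodes:
        kind = "expert function"
    else:
        kind = "learned network"
    print(f"{node}: {kind}")
```

In this reading, only action_1 and action_2 are learned from venv.npz, which matches the statement that nodes with an expert function need no neural network.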

With these files in place, we can start training the virtual environment. The training command is:

python train.py --run_id venv_baseline -rcf config.json --data_file venv.npz --config_file venv.yaml --venv_mode tune --policy_mode None --train_venv_trials 3

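Setting policy_mode to None in the command means that only the virtual environment is trained, not a policy.

Adjust the paths in the command so that they match your file locations. After training finishes, you will find an env.pkl file in the folder named after the run inside Revive's logs folder. Copy it into the baseline's data folder and rename it venv.pkl.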

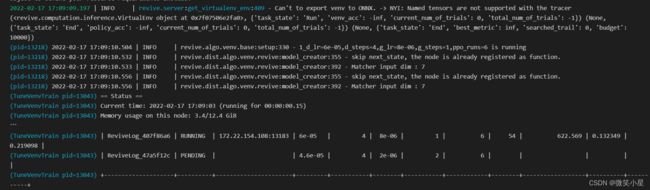

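Output during training:

Evaluating the environment

Once the Revive environment has been trained, we need to check whether it matches our expectations:

Open the revive\logs\venv_baseline\ folder, then open train_venv.json to see what best_id is, and open the folder of that trial. The best trial is chosen by its metrics: the smaller the metrics, the better the environment fits the data.

We look at action_2.day_order_num-train.png in the histogram folder, as shown in the figure below:

Since the purpose of training the environment is to predict action_2 given state and action_1, we only need to check how well action_2 is fitted. The trained distribution is fairly concentrated and its values are on the low side, so rewards will come out smaller when a policy is trained in it. Relatively speaking, though, this is already an acceptable result.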
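Besides looking at the histogram image, the same comparison can be summarized numerically. The sketch below is one possible check and not part of Revive or the baseline: it compares the mean and the total variation distance between two discrete distributions of day_order_num. Both sample lists are made up purely for illustration.

```python
from collections import Counter


def histogram(values, categories):
    """Relative frequency of each category in `values`."""
    counts = Counter(values)
    return [counts.get(c, 0) / len(values) for c in categories]


def total_variation(p, q):
    """Total variation distance between two discrete distributions (0 = identical, 1 = disjoint)."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


categories = range(7)  # day_order_num takes values 0..6
real_orders = [0, 0, 1, 1, 2, 3, 6, 0, 1, 2]       # made-up "dataset" sample
predicted_orders = [0, 1, 1, 1, 1, 2, 2, 0, 1, 1]  # made-up "environment" sample

tv = total_variation(histogram(real_orders, categories),
                     histogram(predicted_orders, categories))
real_mean = sum(real_orders) / len(real_orders)
predicted_mean = sum(predicted_orders) / len(predicted_orders)
print(f"total variation distance: {tv:.2f}")
print(f"mean order number: real {real_mean:.2f}, predicted {predicted_mean:.2f}")
```

In this toy data the predicted sample is more concentrated and has a lower mean than the real one, which is the kind of pattern described above.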

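Building the Gym environment

To do reinforcement learning, we must be able to evaluate how good a policy is. In other words, we need a reward function that the agent can learn from. Here we use Gym, a library of reinforcement learning environments that also lets us create our own. We only need to define an environment class that inherits from Gym's Env class, and it gets the features of a standard Gym environment.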

import torch
import numpy as np
import pickle as pk
from typing import List
from gym import Env
from gym.utils.seeding import np_random
from gym.spaces import Box, MultiDiscrete

import user_states


class VirtualMarketEnv(Env):
    MAX_ENV_STEP = 14  # Number of test days in the current phase
    DISCOUNT_COUPON_LIST = [0.95, 0.90, 0.85, 0.80, 0.75, 0.70, 0.65, 0.60]
    ROI_THRESHOLD = 9.0
    # In real validation environment, if we do not send any coupons in 14 days, we can get this gmv value
    ZERO_GMV = 81840.0763705537

    def __init__(self,
                 initial_user_states: np.ndarray,
                 venv_model: object,
                 act_num_size: List[int] = [6, 8],
                 obs_size: int = 14,
                 device: torch.device = torch.device('cpu'),
                 seed_number: int = 0):
        """
        Args:
            initial_user_states: User states for each day; reset() samples one day as the start
            venv_model: Environment model trained with Revive
            act_num_size: Number of choices in each action dimension
            obs_size: Size of the observation vector (4 statistics x 3 state dimensions + 2 = 14)
            device: Computation device
            seed_number: Random seed
        """
        self.rng = self.seed(seed_number)
        self.initial_user_states = initial_user_states
        self.venv_model = venv_model
        self.current_env_step = None
        self.states = None
        self.done = None
        self.device = device
        self._set_action_space(act_num_size)
        self._set_observation_space(obs_size)
        self.total_cost, self.total_gmv = None, None

    def seed(self, seed_number):
        return np_random(seed_number)[0]

    # Discrete action space: 6 choices in the first dimension and 8 in the second,
    # corresponding to the number of coupons issued and the discount
    def _set_action_space(self, num_list=[6, 8]):
        self.action_space = MultiDiscrete(num_list)

    # Continuous observation space, defined by its lower and upper bounds and its shape
    def _set_observation_space(self, obs_size, low=0, high=100):
        self.observation_space = Box(low=low, high=high, shape=(obs_size,), dtype=np.float32)

    # The most important function: it determines the state transition and the reward
    def step(self, action):
        # Decode the action
        coupon_num, coupon_discount = action[0], VirtualMarketEnv.DISCOUNT_COUPON_LIST[action[1]]
        # Apply the same action to every user
        coupon_num, coupon_discount = np.full(self.states.shape[0], coupon_num), np.full(self.states.shape[0], coupon_discount)
        # Stack the two arrays as columns
        coupon_actions = np.column_stack((coupon_num, coupon_discount))
        # Feed user states and coupon actions into the model trained by Revive to get the user actions
        venv_infer_result = self.venv_model.infer_one_step({"state": self.states, "action_1": coupon_actions})
        user_actions = venv_infer_result["action_2"]
        day_order_num, day_average_fee = user_actions[..., 0], user_actions[..., 1]
        # Compute the next user states
        with np.errstate(invalid="ignore", divide="ignore"):
            self.states = user_states.get_next_state(self.states, day_order_num, day_average_fee, np.empty(self.states.shape))
        info = {
            "CouponNum": coupon_num[0],
            "CouponDiscount": coupon_discount[0],
            "UserAvgOrders": day_order_num.mean(),
            "UserAvgFee": day_average_fee.mean(),
            "NonZeroOrderCount": np.count_nonzero(day_order_num)
        }
        # Compute the evaluation quantities according to the competition formulas
        # Number of orders that used a coupon (per user)
        day_coupon_used_num = np.minimum(day_order_num, coupon_num)
        # Cost (per user)
        day_cost = (1 - coupon_discount) * day_coupon_used_num * day_average_fee
        # GMV (per user)
        day_gmv = day_average_fee * day_order_num - day_cost
        # Total cost of the day
        day_total_cost = np.sum(day_cost)
        # Total GMV of the day
        day_total_gmv = np.sum(day_gmv)
        # Accumulated GMV
        self.total_gmv += day_total_gmv
        # Accumulated cost
        self.total_cost += day_total_cost
        # Compute the reward
        if (self.current_env_step+1) < VirtualMarketEnv.MAX_ENV_STEP:
            reward = 0
        else:
            # Overall ROI. In the competition the score is 0 if this value is below 6.5;
            # for training the threshold is set to 9
            avg_roi = self.total_gmv / max(self.total_cost, 1)
            if avg_roi >= VirtualMarketEnv.ROI_THRESHOLD:
                reward = self.total_gmv / VirtualMarketEnv.ZERO_GMV
            else:
                reward = avg_roi - VirtualMarketEnv.ROI_THRESHOLD
            info["TotalGMV"] = self.total_gmv
            info["TotalROI"] = avg_roi
        # Whether the episode is finished
        self.done = ((self.current_env_step + 1) == VirtualMarketEnv.MAX_ENV_STEP)
        self.current_env_step += 1
        day_total_order_num = int(np.sum(day_order_num))
        day_roi = day_total_gmv / max(day_total_cost, 1)
        # step returns the next observation, the reward, the done flag and (optional) info
        return user_states.states_to_observation(self.states, day_total_order_num, day_roi), reward, self.done, info

    # Initialize the environment
    def reset(self):
        """Reset the initial states of all users

        Return:
            The group state
        """
        self.states = self.initial_user_states[self.rng.randint(0, self.initial_user_states.shape[0])]
        self.done = False
        self.current_env_step = 0
        self.total_cost, self.total_gmv = 0.0, 0.0
        return user_states.states_to_observation(self.states)


# Helper that builds the environment
def get_env_instance(states_path, venv_model_path, device = torch.device('cpu')):
    initial_states = np.load(states_path)
    with open(venv_model_path, 'rb') as f:
        venv_model = pk.load(f, encoding='utf-8')
    venv_model.to(device)
    return VirtualMarketEnv(initial_states, venv_model, device=device)
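The reward logic in step can be tried out in isolation. The following dependency-free sketch repeats the same formulas for one day and a handful of users; the constants are copied from VirtualMarketEnv, while the helper names and the three sample users are made up for illustration.

```python
ROI_THRESHOLD = 9.0
ZERO_GMV = 81840.0763705537
DISCOUNT_COUPON_LIST = [0.95, 0.90, 0.85, 0.80, 0.75, 0.70, 0.65, 0.60]


def day_cost_and_gmv(action, user_actions):
    """action: (coupon_num, discount_index); user_actions: list of (order_num, avg_fee)."""
    coupon_num, discount = action[0], DISCOUNT_COUPON_LIST[action[1]]
    total_cost = total_gmv = 0.0
    for order_num, avg_fee in user_actions:
        used = min(order_num, coupon_num)          # orders that used a coupon
        cost = (1 - discount) * used * avg_fee     # money given away as discount
        total_cost += cost
        total_gmv += avg_fee * order_num - cost
    return total_cost, total_gmv


def final_reward(total_gmv, total_cost):
    """Reward handed out on the last day of an episode."""
    roi = total_gmv / max(total_cost, 1)
    if roi >= ROI_THRESHOLD:
        return total_gmv / ZERO_GMV  # GMV relative to sending no coupons
    return roi - ROI_THRESHOLD       # negative penalty when ROI is too low


users = [(2, 50.0), (0, 0.0), (1, 20.0)]    # made-up (order_num, avg_fee) pairs
cost, gmv = day_cost_and_gmv((1, 3), users)  # 1 coupon per user at discount 0.80
print(cost, gmv, gmv / max(cost, 1))
print(final_reward(gmv, cost))
```

With these numbers the ROI stays below the threshold, so the reward is negative even though GMV is positive. This is the pressure that keeps the policy from issuing too many deep discounts.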

Training the policy

With the environment class defined, we can formally start training. The training code is:

import sys
import os

from stable_baselines3 import PPO
from stable_baselines3.common.callbacks import CheckpointCallback

from virtual_env import get_env_instance

if __name__ == '__main__':
    # Folder for checkpoints; defaults to "model_checkpoints" if no path is given on the command line
    save_path = sys.argv[1] if len(sys.argv) > 1 else 'model_checkpoints'
    # Put the current working directory first on the module search path
    sys.path.insert(0, os.getcwd())
    # Instantiate our environment class
    env = get_env_instance('user_states_by_day.npy', 'venv.pkl')
    # Use the PPO algorithm to train a policy in our environment
    model = PPO("MlpPolicy", env, n_steps=840, batch_size=420, verbose=1, tensorboard_log='logs')
    # Set up checkpointing (save_freq is an integer number of steps)
    checkpoint_callback = CheckpointCallback(save_freq=10000, save_path=save_path)
    # Start training
    model.learn(total_timesteps=int(8e6), callback=[checkpoint_callback])
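The PPO settings above line up with the 14-step episodes of the environment. The short calculation below spells out what they imply, assuming a single environment and Stable-Baselines3's usual behaviour of collecting full rollouts of n_steps until total_timesteps is reached; the variable names are only for illustration.

```python
MAX_ENV_STEP = 14              # episode length defined in VirtualMarketEnv
n_steps, batch_size = 840, 420
total_timesteps = int(8e6)
save_freq = 10000

episodes_per_rollout = n_steps // MAX_ENV_STEP   # complete episodes in one rollout
minibatches_per_epoch = n_steps // batch_size    # gradient steps per pass over a rollout
rollouts = -(-total_timesteps // n_steps)        # ceiling division
checkpoints = total_timesteps // save_freq       # approximate number of saved models

print(episodes_per_rollout, minibatches_per_epoch, rollouts, checkpoints)
```

Because 840 is a multiple of 14, each rollout contains only whole episodes, and since the reward is non-zero only on the last day, each rollout carries 60 reward signals.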

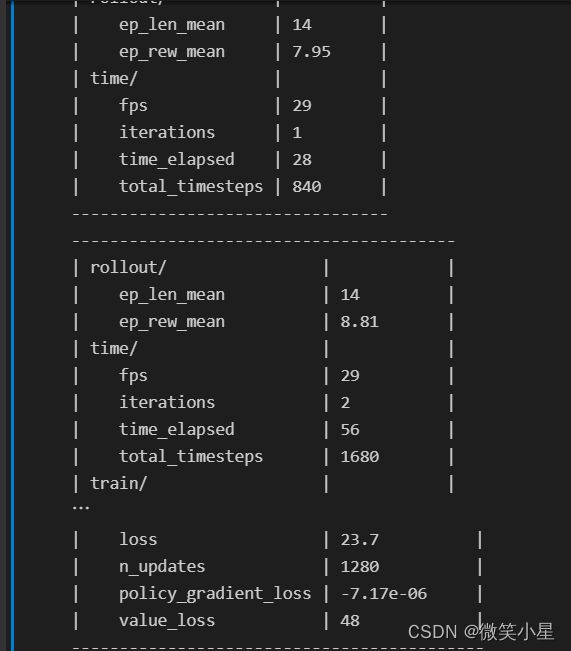

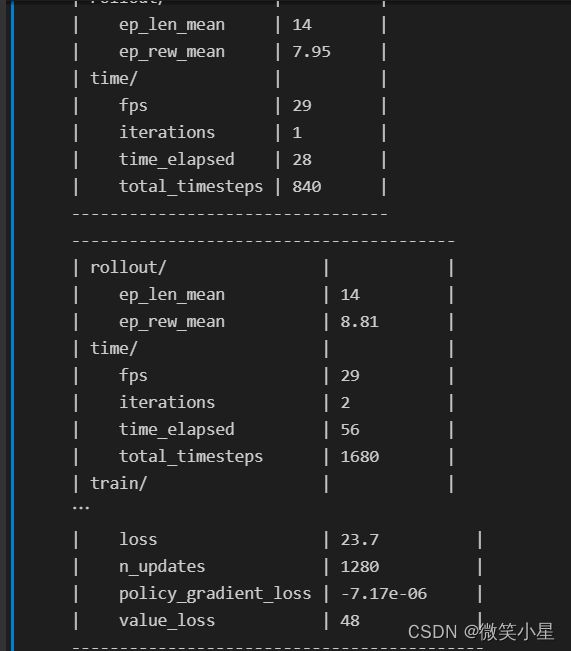

The training output is as follows:

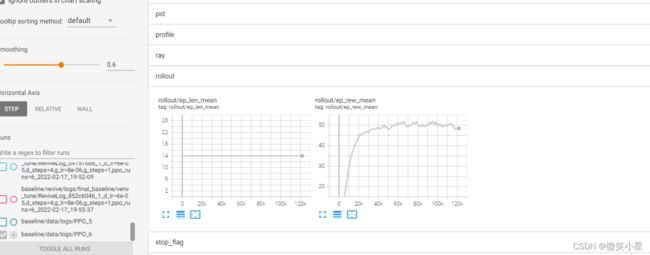

Viewing TensorBoard

TensorBoard is a very handy tool for checking how training is going.

In an .ipynb notebook, the following commands are all we need to open TensorBoard:

%load_ext tensorboard

!mkdir -p $baseline_root/data/logs

%tensorboard --bind_all --logdir $baseline_root/data/logs

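It can also be started with the corresponding tensorboard command in a terminal, which makes it easy to follow the training process.

Evaluating the policy

Before uploading the policy to the competition site, we can evaluate it locally in the virtual environment. Copy the trained model file into the data folder; the evaluation code is as follows: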

import importlib
import numpy as np
import random

from stable_baselines3 import PPO

import virtual_env

importlib.reload(virtual_env)

env = virtual_env.get_env_instance("user_states_by_day.npy", "venv.pkl")
policy = PPO.load("rl_model.zip")
validation_length = 14

obs = env.reset()
for day_index in range(validation_length):
    coupon_action, _ = policy.predict(obs, deterministic=True)  # Some randomness will be added to action if deterministic=False
    # Random action; random.randint is inclusive, so the bounds match the [6, 8] action space
    # coupon_action = np.array([random.randint(0, 5), random.randint(0, 7)])
    obs, reward, done, info = env.step(coupon_action)
    if reward != 0:
        info["Reward"] = reward
    print(f"Day {day_index+1}: {info}")

The output is as follows:

Day 1: {'CouponNum': 1, 'CouponDiscount': 0.9, 'UserAvgOrders': 1.5904, 'UserAvgFee': 29.765987, 'NonZeroOrderCount': 9791}

Day 2: {'CouponNum': 1, 'CouponDiscount': 0.9, 'UserAvgOrders': 1.5912, 'UserAvgFee': 30.867702, 'NonZeroOrderCount': 9792}

Day 3: {'CouponNum': 1, 'CouponDiscount': 0.9, 'UserAvgOrders': 1.568, 'UserAvgFee': 31.559679, 'NonZeroOrderCount': 9795}

Day 4: {'CouponNum': 1, 'CouponDiscount': 0.9, 'UserAvgOrders': 1.4854, 'UserAvgFee': 31.584871, 'NonZeroOrderCount': 9800}

Day 5: {'CouponNum': 1, 'CouponDiscount': 0.9, 'UserAvgOrders': 1.3933, 'UserAvgFee': 30.971512, 'NonZeroOrderCount': 9802}

Day 6: {'CouponNum': 1, 'CouponDiscount': 0.9, 'UserAvgOrders': 1.3002, 'UserAvgFee': 30.063185, 'NonZeroOrderCount': 9805}

Day 7: {'CouponNum': 1, 'CouponDiscount': 0.9, 'UserAvgOrders': 1.2253, 'UserAvgFee': 29.109732, 'NonZeroOrderCount': 9811}

Day 8: {'CouponNum': 1, 'CouponDiscount': 0.9, 'UserAvgOrders': 1.1646, 'UserAvgFee': 28.225338, 'NonZeroOrderCount': 9810}

Day 9: {'CouponNum': 1, 'CouponDiscount': 0.9, 'UserAvgOrders': 1.1005, 'UserAvgFee': 27.522356, 'NonZeroOrderCount': 9812}

Day 10: {'CouponNum': 1, 'CouponDiscount': 0.9, 'UserAvgOrders': 1.0491, 'UserAvgFee': 27.030869, 'NonZeroOrderCount': 9814}

Day 11: {'CouponNum': 1, 'CouponDiscount': 0.9, 'UserAvgOrders': 1.0053, 'UserAvgFee': 26.705904, 'NonZeroOrderCount': 9812}

Day 12: {'CouponNum': 1, 'CouponDiscount': 0.9, 'UserAvgOrders': 0.9851, 'UserAvgFee': 26.488718, 'NonZeroOrderCount': 9820}

Day 13: {'CouponNum': 1, 'CouponDiscount': 0.9, 'UserAvgOrders': 0.9823, 'UserAvgFee': 26.372862, 'NonZeroOrderCount': 9817}

Day 14: {'CouponNum': 1, 'CouponDiscount': 0.9, 'UserAvgOrders': 0.9817, 'UserAvgFee': 26.34233, 'NonZeroOrderCount': 9815, 'TotalGMV': 5323465.089286477, 'TotalROI': 14.024639540209506, 'Reward': 65.04716668619675}

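The evaluated TotalROI is about 14, which is above the 6.5 threshold. TotalGMV reaches about 5.32 million. This value can be raised by improving the training setup; as long as TotalROI > 6.5 is satisfied, the larger the better.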
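The Day 14 line can be cross-checked against the reward rule of VirtualMarketEnv. The small calculation below takes TotalGMV and TotalROI from the output above and the constants from the environment class; the implied total cost is derived here and does not appear in the original log.

```python
ZERO_GMV = 81840.0763705537
ROI_THRESHOLD = 9.0

total_gmv = 5323465.089286477   # TotalGMV from the Day 14 output
total_roi = 14.024639540209506  # TotalROI from the Day 14 output

# Same branch structure as the last step of VirtualMarketEnv.step
if total_roi >= ROI_THRESHOLD:
    reward = total_gmv / ZERO_GMV
else:
    reward = total_roi - ROI_THRESHOLD

implied_cost = total_gmv / total_roi  # ROI is defined as GMV divided by cost
print(round(reward, 4))
print(round(implied_cost))
```

Since the ROI is above the training threshold of 9, the reward is simply TotalGMV expressed as a multiple of ZERO_GMV, which reproduces the Reward value printed in the log.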

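If a policy performs well locally but poorly when tested in the real environment, the virtual environment may not have been learned correctly. Run the following code to check: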

import pickle as pk

import numpy as np

# baseline_root is the notebook variable that points to the baseline directory
with open(f"{baseline_root}/data/venv.pkl", "rb") as f:
    venv = pk.load(f, encoding="utf-8")

initial_states = np.load(f"{baseline_root}/data/user_states_by_day.npy")[10]
coupon_actions = np.array([(5, 0.95) for _ in range(initial_states.shape[0])])
node_values = venv.infer_one_step({ "state": initial_states, "action_1": coupon_actions })
user_actions = node_values['action_2']
day_order_num, day_avg_fee = user_actions[..., 0].round(), user_actions[..., 1].round(2)
print(day_order_num.reshape((-1,))[:100])
print(day_avg_fee.reshape((-1,))[:100])

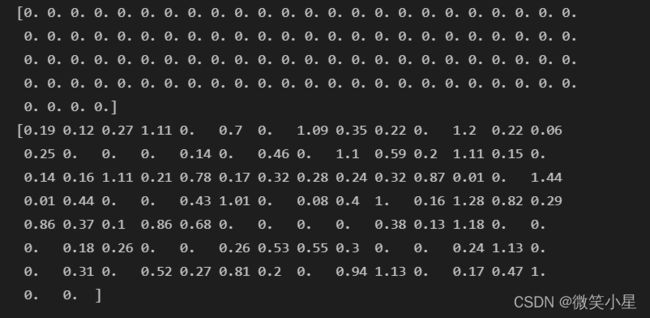

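The output consists of the two arrays day_order_num and day_avg_fee, as shown in the figure below:

If day_avg_fee is not close to 0 where day_order_num = 0, the environment has not been trained well.

In addition, for a reasonably good policy the reward during training should not exceed 10. If it does, the virtual environment has probably not been trained well.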
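The consistency rule above (no orders should mean no fee) can be turned into a simple count instead of reading the arrays by eye. The sketch below is one way to do this; the function name, the tolerance of 0.5 and the sample values are assumptions made for illustration, and in practice the two lists would be the day_order_num and day_avg_fee arrays printed by the check above.

```python
def count_inconsistent(day_order_num, day_avg_fee, fee_tolerance=0.5):
    """Count users predicted to place no order while still having a clearly non-zero fee."""
    return sum(
        1
        for orders, fee in zip(day_order_num, day_avg_fee)
        if round(orders) == 0 and abs(fee) > fee_tolerance
    )


# Made-up predictions for five users
orders = [0.1, 1.9, 0.0, 3.2, 0.4]
fees = [0.05, 31.2, 12.7, 28.0, 0.3]

bad = count_inconsistent(orders, fees)
print(f"{bad} of {len(orders)} users have zero orders but a non-zero fee")
```

A large share of inconsistent users would suggest retraining the virtual environment before spending time on the policy.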

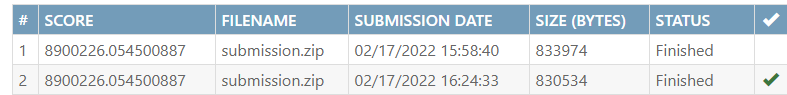

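Uploading the policy

After our own policy has been trained, we get a zip archive named rl_model in the data folder. Use it to replace the file of the same name in the data folder of simple_submission, and likewise replace evaluation_start_states.npy with the file we generated ourselves. Then re-compress simple_submission and upload it. After the upload, the model is run on the server's data to produce the real score.

The above is how the baseline is submitted. To get better results, good starting points are changing the definition of the user state and changing the reward function.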