PyTorch Mask R-CNN Instance Segmentation

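Mask R-CNN builds on Faster R-CNN by adding a branch network (the mask branch). As a result, while it detects objects it also segments the pixels that belong to each object.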
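To make the added branch concrete: for every region of interest, the mask branch predicts one small M x M mask per class (28 x 28 in torchvision's default head). Only the mask of the class chosen by the classification branch is kept. It is then resized to the detected box and thresholded. The NumPy sketch below illustrates that last step. The helper name `paste_roi_mask` is hypothetical, and the sketch uses nearest-neighbour resizing for simplicity, whereas torchvision itself interpolates bilinearly.

```python
import numpy as np

def paste_roi_mask(mask_probs, class_id, box, image_hw, thresh=0.5):
    """Turn one RoI's mask-branch output into a full-image binary mask.

    mask_probs: (K, M, M) per-class mask probabilities for one RoI.
    class_id:   class predicted by the classification branch.
    box:        (x1, y1, x2, y2) integer box, assumed non-empty.
    image_hw:   (H, W) of the full image.
    """
    m = mask_probs[class_id]                  # keep only the predicted class's mask
    x1, y1, x2, y2 = box
    bh, bw = y2 - y1, x2 - x1
    # nearest-neighbour resize of the M x M mask to the box size
    rows = np.arange(bh) * m.shape[0] // bh
    cols = np.arange(bw) * m.shape[1] // bw
    resized = m[rows[:, None], cols[None, :]]
    full = np.zeros(image_hw, dtype=bool)
    full[y1:y2, x1:x2] = resized > thresh     # binarize and paste into the box region
    return full

probs = np.zeros((3, 28, 28))
probs[2] = 0.9                                # only class 2 has a confident mask
mask = paste_roi_mask(probs, 2, (2, 1, 6, 3), (5, 8))
print(mask.sum())  # 8
```

A box of 4 x 2 pixels is filled completely, which gives 8 foreground pixels. Selecting class 0 instead would give an empty mask.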

Image segmentation is an important application of deep learning and neural networks.

Here we use the Mask R-CNN model that ships with PyTorch (torchvision's maskrcnn_resnet50_fpn).

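In instance segmentation, each object instance is marked with its own color, so two objects of the same class remain distinguishable. (In the code below, the color is picked at random for each object.) How is this done? Put simply, we detect every object present in the image, obtain its bounding box, classify it, and then mask it with a different color. Instance segmentation is therefore a combination of object detection and image segmentation.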
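The difference between semantic segmentation (one label per class) and instance segmentation (one label per object) can be shown on a toy example. This NumPy sketch assumes per-object boolean masks and class IDs like the ones Mask R-CNN produces. The function name is hypothetical. Where masks overlap, the later object simply overwrites the earlier one.

```python
import numpy as np

def to_semantic_and_instance_maps(masks, labels):
    """masks: (N, H, W) bool, one mask per detected object; labels: N class IDs.
    Returns (semantic_map, instance_map), both (H, W) int arrays:
    semantic_map holds a class ID per pixel, instance_map a 1-based object
    index per pixel; 0 means background in both."""
    n, h, w = masks.shape
    semantic_map = np.zeros((h, w), dtype=np.int64)
    instance_map = np.zeros((h, w), dtype=np.int64)
    for i in range(n):
        semantic_map[masks[i]] = labels[i]    # same class -> same value
        instance_map[masks[i]] = i + 1        # every object gets its own value
    return semantic_map, instance_map

# two non-overlapping "person" objects (COCO class ID 1)
masks = np.zeros((2, 4, 4), dtype=bool)
masks[0, :2, :2] = True
masks[1, 2:, 2:] = True
sem, inst = to_semantic_and_instance_maps(masks, [1, 1])
print(np.unique(sem))   # [0 1]
print(np.unique(inst))  # [0 1 2]
```

In the semantic map, the two people merge into one class region. The instance map keeps them apart, which is what lets each one receive its own color.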

import cv2
import numpy as np
import random
import torch
import torchvision
import argparse
from PIL import Image
from torchvision.transforms import transforms as transforms

# COCO category names indexed by the model's label IDs: 91 entries, index 0 is
# the background, and 'N/A' fills category IDs that COCO does not use
coco_names = [
    '__background__', 'person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus',
    'train', 'truck', 'boat', 'traffic light', 'fire hydrant', 'N/A', 'stop sign',
    'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',
    'elephant', 'bear', 'zebra', 'giraffe', 'N/A', 'backpack', 'umbrella', 'N/A', 'N/A',
    'handbag', 'tie', 'suitcase', 'frisbee', 'skis', 'snowboard', 'sports ball',
    'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard', 'tennis racket',
    'bottle', 'N/A', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl',
    'banana', 'apple', 'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza',
    'donut', 'cake', 'chair', 'couch', 'potted plant', 'bed', 'N/A', 'dining table',
    'N/A', 'N/A', 'toilet', 'N/A', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',
    'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'N/A', 'book',
    'clock', 'vase', 'scissors', 'teddy bear', 'hair drier', 'toothbrush'
]

COLORS = np.random.uniform(0, 255, size=(len(coco_names), 3))
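COLORS holds one random color per class index. However, the drawing code picks a random row for each object, so colors change from run to run and are not tied to the class. If class-consistent, reproducible colors are wanted (an assumption, not what the original code does), one option is to seed the generator and index the palette by label ID. The helpers `make_palette` and `color_for_label` are hypothetical.

```python
import numpy as np

def make_palette(num_classes, seed=0):
    # one RGB color per class index; seeding the generator makes runs reproducible
    rng = np.random.default_rng(seed)
    return rng.uniform(0, 255, size=(num_classes, 3))

def color_for_label(palette, label_id):
    # OpenCV drawing functions accept a plain tuple of numbers as the color
    return tuple(int(c) for c in palette[label_id])

palette = make_palette(91)           # 91 = len(coco_names), background included
print(color_for_label(palette, 1))   # the same color for every 'person', every run
```

To use this, the drawing code would need the numeric label IDs (outputs[0]['labels']) rather than the class names.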

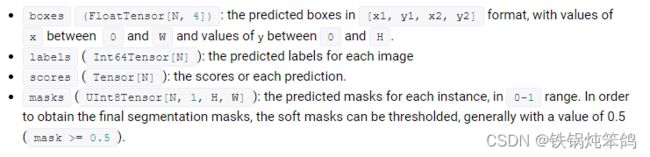

def get_outputs(image, model, threshold):
    with torch.no_grad():
        # forward pass of the image through the model
        outputs = model(image)
    # get all the scores (torchvision returns predictions sorted by descending score)
    scores = outputs[0]['scores'].detach().cpu().numpy()
    # number of predictions above the threshold; because the scores are sorted,
    # these are the first N predictions
    thresholded_preds_count = int((scores > threshold).sum())
    # get the masks, binarized at 0.5; squeeze only the channel axis so that a
    # single detection still gives an (N, H, W) array
    masks = (outputs[0]['masks'] > 0.5).squeeze(1).detach().cpu().numpy()
    # discard masks for objects which are below threshold
    masks = masks[:thresholded_preds_count]
    # get the bounding boxes, in (x1, y1), (x2, y2) format
    boxes = [[(int(i[0]), int(i[1])), (int(i[2]), int(i[3]))]
             for i in outputs[0]['boxes'].detach().cpu()]
    # discard bounding boxes below threshold value
    boxes = boxes[:thresholded_preds_count]
    # get the class labels and discard those below threshold value
    labels = [coco_names[i] for i in outputs[0]['labels'].cpu().tolist()]
    labels = labels[:thresholded_preds_count]
    return masks, boxes, labels
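get_outputs keeps the first N predictions, which relies on the scores being sorted. A sort-order-independent variant uses a boolean index instead. The sketch below works on plain NumPy arrays standing in for the tensors and assumes torchvision's Mask R-CNN output shapes: scores (N,), soft masks (N, 1, H, W), boxes (N, 4), labels (N,). The name `filter_predictions` is hypothetical.

```python
import numpy as np

def filter_predictions(scores, masks, boxes, labels, threshold, mask_thresh=0.5):
    """Keep the predictions whose score exceeds `threshold`."""
    keep = scores > threshold                    # boolean index, no sort order assumed
    binary_masks = masks[keep, 0] > mask_thresh  # drop channel axis -> (K, H, W) bool
    return binary_masks, boxes[keep], labels[keep]

scores = np.array([0.99, 0.40, 0.97])
masks = np.random.rand(3, 1, 4, 4)
boxes = np.array([[0, 0, 2, 2], [1, 1, 3, 3], [2, 2, 4, 4]])
labels = np.array([1, 3, 18])
m, b, l = filter_predictions(scores, masks, boxes, labels, threshold=0.965)
print(l.tolist())  # [1, 18]
```

The same boolean-index idea carries over to the tensors directly, e.g. `outputs[0]['labels'][outputs[0]['scores'] > threshold]`.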

def draw_segmentation_map(image, masks, boxes, labels):
    alpha = 1    # weight of the original image
    beta = 0.6   # transparency for the segmentation map
    gamma = 0    # scalar added to each sum
    # convert the original PIL image into NumPy format and from RGB to OpenCV's
    # BGR format once, before the loop; converting inside the loop would swap
    # the channels again for every object
    image = np.array(image)
    image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)
    for i in range(len(masks)):
        red_map = np.zeros_like(masks[i]).astype(np.uint8)
        green_map = np.zeros_like(masks[i]).astype(np.uint8)
        blue_map = np.zeros_like(masks[i]).astype(np.uint8)
        # apply a random color mask to each object
        color = COLORS[random.randrange(0, len(COLORS))]
        red_map[masks[i] == 1], green_map[masks[i] == 1], blue_map[masks[i] == 1] = color
        # combine the three channels into a single color mask image
        segmentation_map = np.stack([red_map, green_map, blue_map], axis=2)
        # apply mask on the image
        cv2.addWeighted(image, alpha, segmentation_map, beta, gamma, image)
        # OpenCV drawing functions expect the color as a tuple of numbers
        box_color = tuple(int(c) for c in color)
        # draw the bounding box around the object
        cv2.rectangle(image, boxes[i][0], boxes[i][1], color=box_color, thickness=2)
        # put the label text above the object
        cv2.putText(image, labels[i], (boxes[i][0][0], boxes[i][0][1] - 10),
                    cv2.FONT_HERSHEY_SIMPLEX, 1, box_color,
                    thickness=2, lineType=cv2.LINE_AA)
    return image
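cv2.addWeighted computes dst = saturate(src1 * alpha + src2 * beta + gamma) per pixel. With alpha = 1 the original image is not dimmed. Pixels outside every mask (value 0 in segmentation_map) therefore pass through unchanged, while masked pixels are brightened and clipped at 255. The NumPy sketch below reproduces that arithmetic for uint8 images. The function name is hypothetical, and its rounding only approximates OpenCV's saturate_cast.

```python
import numpy as np

def add_weighted(src1, alpha, src2, beta, gamma):
    """NumPy approximation of cv2.addWeighted for uint8 images."""
    blended = src1.astype(np.float64) * alpha + src2.astype(np.float64) * beta + gamma
    return np.clip(np.rint(blended), 0, 255).astype(np.uint8)  # saturate to 0..255

image = np.array([[100, 200, 100]], dtype=np.uint8)
overlay = np.array([[0, 100, 50]], dtype=np.uint8)  # 0 = pixel outside every mask
print(add_weighted(image, 1, overlay, 0.6, 0).tolist())  # [[100, 255, 130]]
```

The middle pixel shows why bright regions can wash out to white: 200 + 0.6 * 100 = 260, which saturates to 255. A lower alpha (for example 0.7) would darken the background a little and keep more contrast.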

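parser = argparse.ArgumentParser()
parser.add_argument('-i', '--input', required=True,
                    help='path to the input data')
parser.add_argument('-t', '--threshold', default=0.965, type=float,
                    help='score threshold for discarding detection')
# the argument list is hard-coded here (e.g. for running inside a notebook);
# when running from a terminal, call parser.parse_args() to read the real command line
args = vars(parser.parse_args(args=['-i', 'image1.jpg']))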

# initialize the model (pre-trained on COCO: 91 classes including the background);
# torchvision 0.13 and later deprecate pretrained=True in favor of weights="DEFAULT"
model = torchvision.models.detection.maskrcnn_resnet50_fpn(pretrained=True, progress=True,
                                                           num_classes=91)
# set the computation device
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
# load the model onto the computation device and set to eval mode
model.to(device).eval()

# transform to convert the image to tensor
transform = transforms.Compose([
    transforms.ToTensor()
])
image_path = args['input']
image = Image.open(image_path).convert('RGB')
# keep a copy of the original image for OpenCV functions and applying masks
orig_image = image.copy()
# transform the image
image = transform(image)
# add a batch dimension
image = image.unsqueeze(0).to(device)
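torchvision's detection models expect float input in the 0 to 1 range, laid out as channels first. transforms.ToTensor() handles both: it moves the channel axis to the front and scales uint8 values by 1/255. The NumPy sketch below mirrors that conversion and the unsqueeze(0) batch step. The function name is hypothetical and stands in for the real transform only for illustration.

```python
import numpy as np

def to_tensor_like(image_hwc_uint8):
    """Mimic transforms.ToTensor() for a uint8 RGB image:
    (H, W, C) in [0, 255] -> (C, H, W) float32 in [0.0, 1.0]."""
    chw = np.transpose(image_hwc_uint8, (2, 0, 1))   # channels first
    return chw.astype(np.float32) / 255.0            # scale to [0, 1]

img = np.full((480, 640, 3), 255, dtype=np.uint8)    # dummy white RGB image
x = to_tensor_like(img)[np.newaxis]                  # add the batch axis, like unsqueeze(0)
print(x.shape)  # (1, 3, 480, 640)
```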

masks, boxes, labels = get_outputs(image, model, args['threshold'])
result = draw_segmentation_map(orig_image, masks, boxes, labels)
# visualize the image
cv2.imshow('Segmented image', result)
cv2.waitKey(0)

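# set the save path: ../outputs/<input file name>.jpg
import os
# cv2.imwrite returns False instead of saving when the folder is missing, so create it first
os.makedirs('../outputs', exist_ok=True)
save_path = f"../outputs/{args['input'].split('/')[-1].split('.')[0]}.jpg"
cv2.imwrite(save_path, result)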
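The save path is built with split('/') and split('.'). These do not handle Windows separators, and they cut the name at the first dot. A pathlib-based alternative is sketched below. The helper name `output_path_for` is hypothetical.

```python
from pathlib import Path

def output_path_for(input_path, out_dir="../outputs", ext=".jpg"):
    # Path.stem drops only the last suffix: "my.photo.png" -> "my.photo"
    # (split('.')[0] would give "my")
    return Path(out_dir) / (Path(input_path).stem + ext)

out = output_path_for("data/image1.jpg")
print(out)  # ../outputs/image1.jpg (on POSIX systems)
```

Calling `out.parent.mkdir(parents=True, exist_ok=True)` before `cv2.imwrite(str(out), result)` ensures the folder exists.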