非局部均值滤波(NL-means)算法的CUDA优化加速

在上一篇文章中,我们讲了使用积分图来加速NL-means算法,虽然运算耗时减少了好多,还是没达到毫秒级。所以本文在积分图加速的基础上,进一步使用CUDA来并行加速,使得耗时减少到毫秒级。

使用积分图来加速NL-means算法原理,此处给出链接,不再复述:

非局部均值滤波(NL-means)算法的原理与C++实现

非局部均值滤波(NL-means)算法的积分图加速原理与C++实现

1. 使用CUDA并行计算内两层循环

由上篇文章,我们知道使用积分图加速的计算顺序是:外两层循环是搜索窗口循环,内两层循环是原图像循环。

for 搜索窗口的每一行

{

for 搜索窗口的每一列 //在这一层循环确定了所有搜索窗口中相同偏移位置的点

{

for 原图像的每一行

{

for 原图像的每一列 //在这一层循环确定了原图像中的一个待滤波点

{

}

}

}

}

假设原图像为m行n列图像,搜索窗口的大小为2half_ssize+1行2half_ssize+1列,那么我们可以使用CUDA开启m*n个线程并行计算内两层循环,每一个线程对应原图像的一个点。这样一来,总循环次数由原来的(2half_ssize+1)*(2half_ssize+1)*m*n减少为(2half_ssize+1)*(2half_ssize+1),因此可以大程度减少计算耗时。

for 搜索窗口的每一行

{

for 搜索窗口的每一列 //在这一层循环确定了所有搜索窗口中相同偏移位置的点

{

//CUDA并行计算内两层循环的每一个点

}

}

我们还知道,内两层循环要执行的计算步骤包括:

(1) 构造平方差图像;

(2) 计算平方差图像的积分图;

积分图的并行计算原理,我们在在前文主要讲了两种思路,由于思路2更加细化的分块并行计算,因此思路2要比思路1更快,虽然看起来思路2的计算步骤更多。

积分图的一种CUDA并行运算

思路1:

步骤一:开启m个线程,每个线程分别对应原图像的一行,负责计算该行的前缀和,所有线程并行计算。该步骤计算结束之后,得到了m*n的行前缀和图像。

步骤二:开启n个线程,每个线程分别对应行前缀和图像的一列,负责计算该列的前缀和,所有线程并行计算。该步骤计算结束之后,则得到m*n的积分图像。

思路2:在思路1的基础上进一步分块并行计算。

(3) 使用积分图快速计算搜索窗口内点的权重,然后累加权重,以及累加权重与搜索窗口内点的乘积。

因此如果要并行计算内两层循环,就涉及到平方差图像的并行计算、积分图的并行计算,还有权重、权重和、像素值加权和的并行计算。

此外,外两层循环结束之后,还要计算“像素值加权和/权重和”,使像素值加权和得到归一化,这里也可以并行计算。

我们根据思路2来计算积分图,使用8个CUDA核函数来实现内两层循环,使用1个CUDA核函数实现“像素值加权和/权重和”的计算。总共9个核函数,且这9个核函数按顺序执行(核函数内部并行执行,但核函数之间顺序执行):

for 搜索窗口的每一行

{

for 搜索窗口的每一列 //在这一层循环确定了所有搜索窗口中相同偏移位置的点

{

//计算平方差图像的核函数

//积分图核函数1

//积分图核函数2

//积分图核函数3

//积分图核函数4

//积分图核函数5

//积分图核函数6

//计算权重、权重和、像素值加权和的核函数

}

}

//计算“像素值加权和/权重和”的核函数

讲完并行计算思路,下面我们上代码。

计算差值平方图的核函数代码:

__global__ void cuda_SqDiff2_tex(float *src, float *diff2, int Ds, int t1, int t2, int m1, int n1)

{

int j = threadIdx.x + blockDim.x * blockIdx.x; //col

int i = threadIdx.y + blockDim.y * blockIdx.y; //row

if (i < m1 && j < n1)

{

float diff;

int src_n = n1 + Ds + Ds;

int index = (i + Ds)*src_n + j + Ds;

diff = src[index] - src[index + t1*src_n + t2]; //src[(i+Ds)*src_n+j+Ds] - src[(i+Ds+t1)*src_n+j+Ds+t2];

diff2[i*n1 + j] = diff*diff;

}

}

积分图核函数1代码:

__global__ void cuda_integal0_tex(float *src, float *integral, int row, int col)

{

int idx = threadIdx.x + blockDim.x * blockIdx.x; //col/4

int idy = threadIdx.y + blockDim.y * blockIdx.y; //row

if (idx < col / 4 && idy < row)

{

int offset = idy*col + idx * 4; //每个小块的首地址

integral[offset] = src[offset];

integral[offset + 1] = src[offset + 1] + integral[offset];

integral[offset + 2] = src[offset + 2] + integral[offset + 1];

integral[offset + 3] = src[offset + 3] + integral[offset + 2];

}

}

积分图核函数2代码:

__global__ void cuda_integal1_tex(float *integral, int row, int col)

{

int idx = threadIdx.x + blockDim.x * blockIdx.x; //row

if (idx < row)

{

int offset = idx*col;

for (int i = 7; i < col; i += 4)

{

integral[offset + i] += integral[offset + i - 4];

}

}

}

积分图核函数3代码:

__global__ void cuda_integal2_tex(float *integral, int row, int col)

{

int idx = threadIdx.x + blockDim.x * blockIdx.x; //col/4

int idy = threadIdx.y + blockDim.y * blockIdx.y; //row

if (idx > 0 && idx < col / 4 && idy < row)

{

int offset = idy*col + idx * 4; //每个小块的首地址

float pre_sum = integral[idy*col + (idx * 4 - 1)]; //前一个小块的最后一个数

integral[offset] += pre_sum;

integral[offset + 1] += pre_sum;

integral[offset + 2] += pre_sum;

}

}

积分图核函数4代码:

__global__ void cuda_integal3_tex(float *integral, int row, int col)

{

int idx = threadIdx.x + blockDim.x * blockIdx.x; //col

int idy = threadIdx.y + blockDim.y * blockIdx.y; //row/4

if (idx < col && idy < row / 4)

{

int offset = idy * 4 * col + idx; //每个小块的首地址

int offset1 = offset + col;

int offset2 = offset1 + col;

int offset3 = offset2 + col;

integral[offset1] += integral[offset];

integral[offset2] += integral[offset1];

integral[offset3] += integral[offset2];

}

}

积分图核函数5代码:

__global__ void cuda_integal4_tex(float *integral, int row, int col)

{

int idx = threadIdx.x + blockDim.x * blockIdx.x; //col

if (idx < col)

{

int col_4 = 4 * col;

for (int i = 7; i < row; i += 4)

{

//integral[i*col + idx] += integral[(i - 4)*col + idx];

int offset = i*col + idx;

integral[offset] += integral[offset - col_4];

}

}

}

积分图核函数6代码:

__global__ void cuda_integal5_tex(float *integral, int row, int col)

{

int idx = threadIdx.x + blockDim.x * blockIdx.x; //col

int idy = threadIdx.y + blockDim.y * blockIdx.y; //row/4

if (idx < col && idy > 0 && idy < row / 4)

{

int offset = idy * 4 * col + idx; //每个小块的首地址

float pre_sum = integral[(idy * 4 - 1)*col + idx]; //前一个小块的最后一个数

integral[offset] += pre_sum;

integral[offset + col] += pre_sum;

integral[offset + col + col] += pre_sum;

}

}

计算权重、权重和、像素值加权和的核函数代码:

__global__ void NLmeansKernel_tex(float *v, float *St, int t1, int t2, int Ds, int ds, float d2, float h2, int m, int n, int n1, float *sweight, float *average)

{

int idx = threadIdx.x + blockDim.x * blockIdx.x; //col

int idy = threadIdx.y + blockDim.y * blockIdx.y; //row

int i1; //行

int j1; //列

int index1, index2;

double dist2;

int i1n1;

int dsn1;

if (idx < n && idy < m)

{

i1 = idy + ds + 1; //行

j1 = idx + ds + 1; //列

i1n1 = i1*n1;

dsn1 = ds*n1;

//dist2 = (St[(i1+ds)*n1+(j1+ds)] + St[(i1-ds-1)*n1+(j1-ds-1)]) - (St[(i1+ds)*n1+(j1-ds-1)] + St[(i1-ds-1)*n1+(j1+ds)]);

dist2 = (St[(i1n1 + dsn1) + (j1 + ds)] + St[(i1n1 - dsn1 - n1) + (j1 - ds - 1)]) - (St[(i1n1 + dsn1) + (j1 - ds - 1)] + St[(i1n1 - dsn1 - n1) + (j1 + ds)]);

dist2 /= (-d2*h2);

dist2 = exp(dist2);

index1 = idy*n + idx;

//index2 = (idy + Ds + t1)*(n + 2 * Ds) + (idx + Ds + t2);

index2 = (idy + Ds + t1 + ds)*(n + 2 * (Ds + ds + 1)) + (idx + Ds + t2 + ds);

sweight[index1] += dist2;

average[index1] += dist2 * v[index2];

}

}

计算“像素值加权和/权重和”的代码:

__global__ void cuda_mean(float *average, float *sweight, int m, int n)

{

int j = threadIdx.x + blockDim.x * blockIdx.x; //col

int i = threadIdx.y + blockDim.y * blockIdx.y; //row

if (i < m && j < n)

{

int index = i*n + j;

average[index] /= sweight[index];

}

}

主体函数代码:

void cuda_fastNLmeans_tex(Mat src, Mat &dst, int ds, int Ds, float h)

{

Mat src_tmp;

src.convertTo(src_tmp, CV_32F);

int row_r = src_tmp.rows % 4;

int col_r = src_tmp.cols % 4;

if (row_r || col_r) //这里要扩充边缘时因为积分图的计算以4为block,因此图像尺寸必须为4的倍数

{

copyMakeBorder(src_tmp, src_tmp, 0, row_r, 0, col_r, BORDER_REFLECT); //扩充边缘

}

int m = src_tmp.rows;

int n = src_tmp.cols;

int boardSize_x = Ds + ds + 1;

int boardSize_y = Ds + ds + 1;

Mat src_board;

copyMakeBorder(src_tmp, src_board, boardSize_y, boardSize_y, boardSize_x, boardSize_x, BORDER_REFLECT);

float h2 = h*h;

float d2 = (float)(2 * ds + 1)*(2 * ds + 1);

int m1 = src_board.rows - 2 * Ds; //行

int n1 = src_board.cols - 2 * Ds; //列

//host端

int img_size = sizeof(float) * m * n;

int img_size1 = sizeof(float) * m1 * n1;

int img_size3 = sizeof(float) * src_board.rows * src_board.cols;

//

float *sweight_array = (float *)malloc(img_size);

float *average_array = (float *)malloc(img_size);

//device端

float *sweight_array_cuda;

float *average_array_cuda;

float *src_board_cuda;

float *diff2_cuda;

float *integral;

cudaMalloc((void**)&sweight_array_cuda, img_size);

cudaMalloc((void**)&average_array_cuda, img_size);

cudaMalloc((void**)&src_board_cuda, img_size3);

cudaMalloc((void**)&diff2_cuda, img_size1);

cudaMalloc((void**)&integral, m1*n1 * sizeof(float));

//

cudaMemset(sweight_array_cuda, 0.0, img_size);

cudaMemset(average_array_cuda, 0.0, img_size);

cudaMemcpy(src_board_cuda, (float *)src_board.data, img_size3, cudaMemcpyHostToDevice);

//

dim3 dimBlock(16, 16); //每个线程块有16*16个线程

int M = (src.cols + dimBlock.x - 1) / dimBlock.x;

int N = (src.rows + dimBlock.y - 1) / dimBlock.y;

dim3 dimGrid(M, N); //M*N个线程块

dim3 diffBlock(16, 16); //每个线程块有16*16个线程

M = (n1 + diffBlock.x - 1) / diffBlock.x;

N = (m1 + diffBlock.y - 1) / diffBlock.y;

dim3 diffGrid(M, N);

dim3 k0block(16, 16); //row*(col/4)

dim3 k0grid((n1 / 4 + k0block.x - 1) / k0block.x, (m1 + k0block.y - 1) / k0block.y);

dim3 k1block(16, 1); //row

dim3 k1grid((m1 + k1block.x - 1) / k1block.x, 1);

dim3 k2block = k0block; //row*(col/4)

dim3 k2grid = k0grid;

dim3 k3block(16, 16); //(row/4)*col

dim3 k3grid((n1 + k3block.x - 1) / k3block.x, (m1 / 4 + k3block.y - 1) / k3block.y);

dim3 k4block(16, 1); //col

dim3 k4grid((n1 + k4block.x - 1) / k4block.x, 1);

dim3 k5block = k3block;

dim3 k5grid = k3grid;

//

for (int t1 = -Ds; t1 <= Ds; t1++)

{

for (int t2 = -Ds; t2 <= Ds; t2++)

{

cuda_SqDiff2_tex << > >(src_board_cuda, diff2_cuda, Ds, t1, t2, m1, n1); //计算差值平方图

cuda_integal0_tex << > >(diff2_cuda, integral, m1, n1);

cuda_integal1_tex << > >(integral, m1, n1);

cuda_integal2_tex << > >(integral, m1, n1);

cuda_integal3_tex << > >(integral, m1, n1);

cuda_integal4_tex << > >(integral, m1, n1);

cuda_integal5_tex << > >(integral, m1, n1);

NLmeansKernel_tex << > >(src_board_cuda, integral, t1, t2, Ds, ds, d2, h2, m, n, n1, sweight_array_cuda, average_array_cuda);

}

}

///

dim3 meanBlock(16, 16); //每个线程块有16*16个线程

M = (n + meanBlock.x - 1) / meanBlock.x;

N = (m + meanBlock.y - 1) / meanBlock.y;

dim3 meanGrid(M, N);

cuda_mean << > >(average_array_cuda, sweight_array_cuda, m, n); //除以权重归一化

cudaMemcpy(average_array, average_array_cuda, img_size, cudaMemcpyDeviceToHost); //这里的内存拷贝是阻塞拷贝,必须等待上方所有kernel运行完毕才会进行拷贝

Mat average(m, n, CV_32FC1, average_array);

Mat dst_tmp;

average(Rect(0, 0, src.cols, src.rows)).convertTo(dst_tmp, CV_8U);

dst = dst_tmp.clone(); //为了确保dst连续,所以clone了一份

cudaFree(sweight_array_cuda);

cudaFree(average_array_cuda);

cudaFree(src_board_cuda);

cudaFree(diff2_cuda);

cudaFree(integral);

free(sweight_array);

free(average_array);

}

运行上述代码,同样对496*472的Lena图像去噪,结果如下(左侧噪声图,右侧去噪图)。耗时由上文的1.6秒左右减少到约86毫秒,成功实现毫秒级加速,耶~!

2. 使用CUDA并行计算内三层循环

在上述并行思路中,内两层循环并行计算,还有外两层循环需要顺序计算。那么,还有没有进一步并行加速的思路呢,答案是有的。CUDA的线程可以是一维、二维、三维线程。在上方的并行思路中我们使用了二维线程,其实还可以使用三维线程来并行计算内三层循环,这时只有最外面一层循环需要顺序计算了,循环次数由(2half_ssize+1)*(2half_ssize+1)减少为2half_ssize+1,因此计算耗时进一步减少。

for 搜索窗口的每一行

{

//CUDA并行计算内三层循环

}

由于三维线程有点复杂,核函数个数还是少一点好,我们选择使用上述思路1的积分图并行计算方法的来计算积分图。

上代码:

计算差值平方图的核函数代码:

__global__ void cuda_SqDiff2_dim3(float *src, float *diff2, int Ds, int t1, int m1, int n1)

{

int j = threadIdx.x + blockDim.x * blockIdx.x; //col

int i = threadIdx.y + blockDim.y * blockIdx.y; //row

int k = blockIdx.z; //t2 = k - Ds

if (i < m1 && j < n1 && k <= 2*Ds)

{

//float data, diff;

float diff;

int src_n = n1 + Ds + Ds;

int index = (i + Ds)*src_n + j + Ds;

int t2 = k - Ds;

diff = src[index] - src[index + t1*src_n + t2]; //src[(i+Ds)*src_n+j+Ds] - src[(i+Ds+t1)*src_n+j+Ds+t2];

int offset = m1*n1*k; //k取0~2Ds

diff2[offset + i*n1 + j] = diff*diff;

}

}

积分图核函数1代码:

__global__ void cal_integral_X_dim3(float *src, float *integral_x, int Ds, int row, int col)

{

int idx = threadIdx.x + blockIdx.x*blockDim.x;

int k = blockIdx.y; //t2 = k - Ds

if (idx < row && k <= 2*Ds)

{

int row_offset = k*row*col + idx*col;

integral_x[row_offset] = src[row_offset];

for (int i = 1; i < col; i++)

{

int index = row_offset + i;

integral_x[index] = src[index] + integral_x[index - 1];

}

}

}

积分图核函数2代码:

__global__ void cal_integral_Y_dim3(float *src, float *integral_y, int Ds, int row, int col)

{

int idx = threadIdx.x + blockIdx.x*blockDim.x;

int k = blockIdx.y; //t2 = k - Ds

if (idx < col && k <= 2*Ds)

{

int offset = k*row*col;

integral_y[offset+idx] = src[offset+idx];

for (int i = 1; i < row; i++)

{

int col_offset = offset + i*col + idx;

integral_y[col_offset] = src[col_offset] + integral_y[col_offset - col];

}

}

}

计算权重、权重和、像素值加权和的核函数代码:

__global__ void NLmeansKernel_dim3(float *v, float *St, int t1, int Ds, int ds, float d2, float h2, int m, int n, int m1, int n1, float *sweight, float *average)

{

int idx = threadIdx.x + blockDim.x * blockIdx.x; //col

int idy = threadIdx.y + blockDim.y * blockIdx.y; //row

int k = blockIdx.z; //t2 = k - Ds

int i1; //行

int j1; //列

int index1, index2;

double dist2;

int i1n1;

int dsn1;

if (idx < n && idy < m && k <= 2*Ds)

{

int t2 = k - Ds;

int st_offset = k*m1*n1;

int sweight_offset = k*m*n;

i1 = idy + ds + 1; //行

j1 = idx + ds + 1; //列

i1n1 = i1*n1;

dsn1 = ds*n1;

//dist2 = (St[(i1+ds)*n1+(j1+ds)] + St[(i1-ds-1)*n1+(j1-ds-1)]) - (St[(i1+ds)*n1+(j1-ds-1)] + St[(i1-ds-1)*n1+(j1+ds)]);

dist2 = (St[st_offset + (i1n1 + dsn1) + (j1 + ds)] + St[st_offset + (i1n1 - dsn1 - n1) + (j1 - ds - 1)]) - (St[st_offset + (i1n1 + dsn1) + (j1 - ds - 1)] + St[st_offset + (i1n1 - dsn1 - n1) + (j1 + ds)]);

dist2 /= (-d2*h2);

dist2 = exp(dist2);

index1 = sweight_offset + idy*n + idx;

index2 = (idy + Ds + t1 + ds)*(n + 2 * (Ds + ds + 1)) + (idx + Ds + t2 + ds);

sweight[index1] += dist2;

average[index1] += dist2 * v[index2];

}

}

计算“像素值加权和/权重和”的代码:

__global__ void cuda_mean_dim3(float *average, float *sweight, int Ds, int m, int n)

{

int j = threadIdx.x + blockDim.x * blockIdx.x; //col

int i = threadIdx.y + blockDim.y * blockIdx.y; //row

if (i < m && j < n)

{

int index = i*n + j;

int cnt = Ds * 2;

int img_size = m*n;

int offset = img_size;

for (int k = 1; k <= cnt; k++)

{

average[index] += average[offset + index];

sweight[index] += sweight[offset + index];

offset += img_size;

}

average[index] /= sweight[index];

}

}

主体函数代码:

void cuda_fastNLmeans_dim3(Mat src, Mat &dst, int ds, int Ds, float h)

{

Mat src_tmp;

src.convertTo(src_tmp, CV_32F);

int m = src_tmp.rows;

int n = src_tmp.cols;

int boardSize_x = Ds + ds + 1;

int boardSize_y = Ds + ds + 1;

Mat src_board;

copyMakeBorder(src_tmp, src_board, boardSize_y, boardSize_y, boardSize_x, boardSize_x, BORDER_REFLECT);

float h2 = h*h;

float d2 = (float)(2 * ds + 1)*(2 * ds + 1);

int m1 = src_board.rows - 2 * Ds; //行

int n1 = src_board.cols - 2 * Ds; //列

int t2_num = 2 * Ds + 1;

//host端

int img_size = sizeof(float) * m * n;

int img_size1 = sizeof(float) * m1 * n1;

int img_size3 = sizeof(float) * src_board.rows * src_board.cols;

//

//device端

float *sweight_array_cuda;

float *average_array_cuda;

float *src_board_cuda;

float *diff2_cuda;

cudaMalloc((void**)&sweight_array_cuda, img_size*t2_num);

cudaMalloc((void**)&average_array_cuda, img_size*t2_num);

cudaMalloc((void**)&src_board_cuda, img_size3);

cudaMalloc((void**)&diff2_cuda, img_size1*t2_num);

//

cudaMemset(sweight_array_cuda, 0.0, img_size*t2_num);

cudaMemset(average_array_cuda, 0.0, img_size*t2_num);

cudaMemcpy(src_board_cuda, (float *)src_board.data, img_size3, cudaMemcpyHostToDevice);

//

dim3 dimBlock(16, 16, 1); //每个线程块有16*16个线程

int M = (src.cols + dimBlock.x - 1) / dimBlock.x;

int N = (src.rows + dimBlock.y - 1) / dimBlock.y;

dim3 dimGrid(M, N, t2_num); //M*N*t2_num个线程块

dim3 diffBlock(16, 16, 1); //每个线程块有16*16个线程

M = (n1 + diffBlock.x - 1) / diffBlock.x;

N = (m1 + diffBlock.y - 1) / diffBlock.y;

dim3 diffGrid(M, N, t2_num);

float *y_presum;

float *x_presum;

cudaMalloc((void**)&y_presum, m1*n1 * sizeof(float)*t2_num);

cudaMalloc((void**)&x_presum, m1*n1 * sizeof(float)*t2_num);

int block_num = 16;

M = (n1 + block_num - 1) / block_num; //n1是列

N = (m1 + block_num - 1) / block_num; //m1是行

dim3 integal_block_x(block_num, 1);

dim3 integal_grid_x(N, t2_num);

dim3 integal_block_y(block_num, 1);

dim3 integal_grid_y(M, t2_num);

//

for (int t1 = -Ds; t1 <= Ds; t1++)

{

cuda_SqDiff2_dim3 << > >(src_board_cuda, diff2_cuda, Ds, t1, m1, n1); //计算差值平方图

cal_integral_X_dim3 << > >(diff2_cuda, x_presum, Ds, m1, n1);

cal_integral_Y_dim3 << > >(x_presum, y_presum, Ds, m1, n1);

NLmeansKernel_dim3 << > >(src_board_cuda, y_presum, t1, Ds, ds, d2, h2, m, n, m1, n1, sweight_array_cuda, average_array_cuda);

}

///

dim3 meanBlock(16, 16); //每个线程块有16*16个线程

M = (n + meanBlock.x - 1) / meanBlock.x;

N = (m + meanBlock.y - 1) / meanBlock.y;

dim3 meanGrid(M, N);

cuda_mean_dim3 << > >(average_array_cuda, sweight_array_cuda, Ds, m, n); //除以权重归一化

//

Mat average(m, n, CV_32FC1);

cudaMemcpy((float *)average.data, average_array_cuda, img_size, cudaMemcpyDeviceToHost);

average.convertTo(dst, CV_8U);

cudaFree(sweight_array_cuda);

cudaFree(average_array_cuda);

cudaFree(src_board_cuda);

cudaFree(diff2_cuda);

cudaFree(y_presum);

cudaFree(x_presum);

}

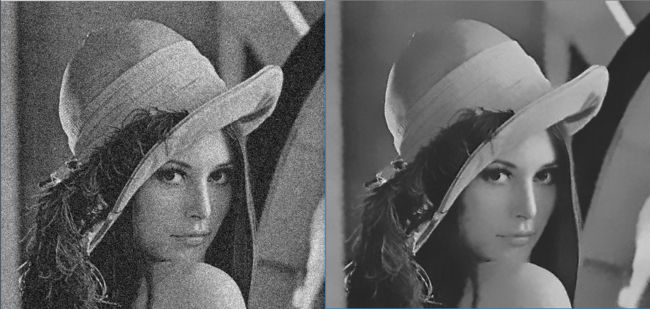

运行上述代码,也对496*472的Lena图像去噪,结果如下(左侧噪声图,右侧去噪图)。耗时由上文的86毫秒左右减少到约59毫秒,耗时进一步减少啦,嘿嘿~

我在想,其实是不是可以再进一步加速呢,比如使用思路2并行计算积分图,结合三维线程的使用,应该可以更进一步加速吧。本文就讲到这里,接下有时间来再试试哈~

本人公众号如下,不定期更新精彩内容,欢迎扫码关注~