Thanks to: https://www.jianshu.com/p/66a0a6fd3cae

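Running deep learning and machine learning models on mobile devices is where the field is heading. Over the past two years the major companies have all invested in this area and released their own mobile inference frameworks.

Google: TensorFlow Lite

Apple: Core ML

Facebook: Caffe2

Tencent: ncnn

Baidu: Paddle Mobile

Xiaomi: MACE

Performance differs from one framework to another.

The conversion workflows between frameworks are described below: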

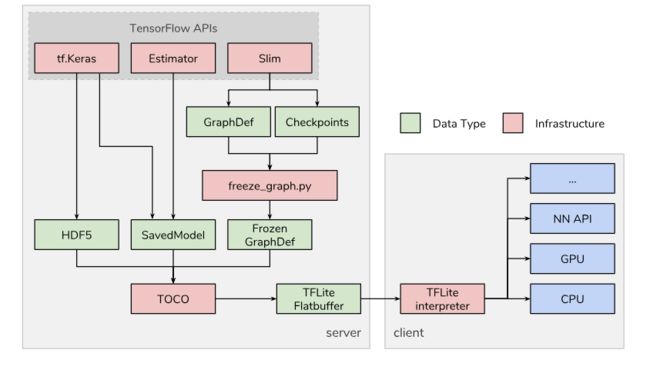

I. TensorFlow to TensorFlow Lite

TensorFlow ships an official conversion tool, toco, which converts a TensorFlow .pb model directly into a .tflite model.

Example usage:

toco --graph_def_file=DeeplabV3++_portrait_384_1_05alpha.pb --output_file=DeeplabV3++_portrait_384_1_05alpha.tflite --output_format=TFLITE --input_shape=1,384,384,3 --input_array=input_1 --output_array=output_0 --inference_type=float --allow_custom_ops
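When the conversion is driven from a Python script, the same command can be assembled from a dictionary of flags instead of one long string. The following is only a minimal sketch: `build_toco_command` is a hypothetical helper (not part of TensorFlow), the flag names and values are copied from the command above, and it assumes toco is available on the PATH.

```python
import shlex


def build_toco_command(flags):
    """Turn a dict of flags into an argument list for the toco CLI.

    A value of True produces a bare switch such as --allow_custom_ops;
    any other value produces --name=value.
    """
    command = ["toco"]
    for name, value in flags.items():
        if value is True:
            command.append("--{}".format(name))
        else:
            command.append("--{}={}".format(name, value))
    return command


# Flag names and values copied from the example command in this article.
flags = {
    "graph_def_file": "DeeplabV3++_portrait_384_1_05alpha.pb",
    "output_file": "DeeplabV3++_portrait_384_1_05alpha.tflite",
    "output_format": "TFLITE",
    "input_shape": "1,384,384,3",
    "input_array": "input_1",
    "output_array": "output_0",
    "inference_type": "float",
    "allow_custom_ops": True,
}

command = build_toco_command(flags)
# Print a shell-safe version of the command for inspection.
print(" ".join(shlex.quote(arg) for arg in command))
# To actually run the conversion: subprocess.run(command, check=True)
```

Keeping the flags in a dictionary makes it easier to reuse the same script for several models by changing only the file names, shape, and array names.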

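The following shows how to look up node names in a TensorFlow model.

1. Listing node names from a checkpoint

    import tensorflow as tf

    # Rebuild the graph from the .meta file, then restore the weights.
    saver = tf.train.import_meta_graph("/path/to/meta/graph")
    sess = tf.Session()
    saver.restore(sess, "/path/to/checkpoints")
    graph = sess.graph
    print([node.name for node in graph.as_graph_def().node])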
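For a real model the printed list can run to hundreds of names, so it may help to group them by their top-level scope before looking for the input and output nodes. This is only a sketch on plain strings: `count_by_scope` is a hypothetical helper, and the sample names are taken from the simplenet output shown later in this article.

```python
from collections import Counter


def count_by_scope(node_names):
    """Count node names by the part before the first '/'."""
    return Counter(name.split("/", 1)[0] for name in node_names)


# Sample node names (taken from the simplenet example in this article).
names = [
    "input_1",
    "block1_conv/kernel",
    "block1_conv/kernel/read",
    "dense_1/MatMul",
    "dense_1/BiasAdd",
    "activation_1/Softmax",
]

for scope, count in count_by_scope(names).items():
    print(scope, count)
```

In practice `names` would be the list produced by the `print` statement above.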

2. Inspecting node information in a frozen graph (.pb)

Using the simplenet frozen graph file as an example:

    """FIND GRAPH INFO"""
    import tensorflow as tf

    tf_model_path = "./simplenet_V2_8M.pb"

    with open(tf_model_path, 'rb') as f:
        serialized = f.read()

    tf.reset_default_graph()
    original_gdef = tf.GraphDef()
    original_gdef.ParseFromString(serialized)

    with tf.Graph().as_default() as g:
        tf.import_graph_def(original_gdef, name='')
        ops = g.get_operations()
        N = len(ops)
        for i in [0, 1, 2, N-3, N-2, N-1]:  # indices of the ops to print
            print('\n\nop id {} : op type: "{}"'.format(str(i), ops[i].type))
            print('input(s):')
            for x in ops[i].inputs:
                print("name = {}, shape: {}, ".format(x.name, x.get_shape()))
            print('\noutput(s):')
            for x in ops[i].outputs:
                print("name = {}, shape: {},".format(x.name, x.get_shape()))

The output looks like this:

    op id 0 : op type: "Placeholder"
    input(s):
    output(s):
    name = input_1:0, shape: (?, 32, 32, 3),

    op id 1 : op type: "Const"
    input(s):
    output(s):
    name = block1_conv/kernel:0, shape: (3, 3, 3, 128),

    op id 2 : op type: "Identity"
    input(s):
    name = block1_conv/kernel:0, shape: (3, 3, 3, 128),
    output(s):
    name = block1_conv/kernel/read:0, shape: (3, 3, 3, 128),

    op id 190 : op type: "MatMul"
    input(s):
    name = global_average_pooling2d_1/Mean:0, shape: (?, 600),
    name = dense_1/kernel/read:0, shape: (600, 10),
    output(s):
    name = dense_1/MatMul:0, shape: (?, 10),

    op id 191 : op type: "BiasAdd"
    input(s):
    name = dense_1/MatMul:0, shape: (?, 10),
    name = dense_1/bias/read:0, shape: (10,),
    output(s):
    name = dense_1/BiasAdd:0, shape: (?, 10),

    op id 192 : op type: "Softmax"
    input(s):
    name = dense_1/BiasAdd:0, shape: (?, 10),
    output(s):
    name = activation_1/Softmax:0, shape: (?, 10),

Pass this information to toco for the conversion:

toco --graph_def_file=simplenet_V2_8M.pb --output_file=simplenet_v2_8M.tflite --output_format=TFLITE --input_shape=1,32,32,3 --input_arrays=input_1 --output_arrays=activation_1/Softmax
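Note that the graph inspection prints tensor names such as `input_1:0`, while the toco command uses the node names `input_1` and `activation_1/Softmax`, without the `:0` output index. A small sketch of that mapping, where `tensor_to_node_name` is a hypothetical helper written for this article:

```python
def tensor_to_node_name(tensor_name):
    """Strip the ':<output index>' suffix from a TensorFlow tensor name."""
    node, sep, index = tensor_name.rpartition(":")
    if sep and index.isdigit():
        return node
    # No numeric suffix: the name is already a node name.
    return tensor_name


print(tensor_to_node_name("input_1:0"))               # input_1
print(tensor_to_node_name("activation_1/Softmax:0"))  # activation_1/Softmax
print(tensor_to_node_name("input_1"))                 # input_1
```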

After a successful conversion a .tflite file is generated, which can be deployed on mobile devices.

In practice, before deploying to a mobile device you should check the accuracy of the tflite model, which means calling tflite directly from Python:

    import numpy as np
    import tensorflow as tf

    # Load TFLite model and allocate tensors.
    # (In later TensorFlow releases the class is tf.lite.Interpreter.)
    interpreter = tf.contrib.lite.Interpreter(model_path="converted_model.tflite")
    interpreter.allocate_tensors()

    # Get input and output tensors.
    input_details = interpreter.get_input_details()
    output_details = interpreter.get_output_details()

    # Test model on random input data.
    input_shape = input_details[0]['shape']
    # Change the following line to feed in your own data.
    input_data = np.array(np.random.random_sample(input_shape), dtype=np.float32)
    interpreter.set_tensor(input_details[0]['index'], input_data)

    interpreter.invoke()
    output_data = interpreter.get_tensor(output_details[0]['index'])
    print(output_data)
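Printing the output is only a smoke test. To judge accuracy, one option is to feed the same inputs to the original model and to the tflite model and compare the two sets of predictions. The sketch below works on plain nested lists (call `.tolist()` on NumPy arrays first); `max_abs_diff` and `top1_agreement` are hypothetical helpers, and the sample numbers are made up for illustration.

```python
def max_abs_diff(reference, converted):
    """Largest element-wise absolute difference between two batches."""
    return max(
        abs(x - y)
        for row_ref, row_conv in zip(reference, converted)
        for x, y in zip(row_ref, row_conv)
    )


def top1_agreement(reference, converted):
    """Fraction of samples where both models pick the same top class."""
    def argmax(row):
        return max(range(len(row)), key=row.__getitem__)

    matches = sum(
        1 for row_ref, row_conv in zip(reference, converted)
        if argmax(row_ref) == argmax(row_conv)
    )
    return matches / len(reference)


# Made-up softmax outputs for two samples and three classes.
reference = [[0.10, 0.70, 0.20], [0.80, 0.15, 0.05]]
converted = [[0.11, 0.69, 0.20], [0.40, 0.45, 0.15]]

print("max abs diff:", max_abs_diff(reference, converted))
print("top-1 agreement:", top1_agreement(reference, converted))  # 0.5
```

What counts as an acceptable difference depends on the model and on whether quantization was used, so any threshold is a judgment call.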

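II. TensorFlow to Core ML

Core ML does not provide a tool that converts a TensorFlow model directly into an mlmodel. There are two approaches:

1. Go through the Keras interface, since Core ML supports conversion from Keras;

2. Use the third-party conversion tool tf-coreml.

The rest of this section covers how to use tf-coreml.

First, export the previously saved checkpoints as a frozen graph in .pb format.

Then supply the following parameters:

tf_model_path: path to the frozen .pb model

mlmodel_path: path where the generated Core ML model is written

input_name_shape_dict: the network's input names and the shape of the input data (determined by the inputs of the original model)

output_feature_names: the network's output names (determined by the outputs of the original model)

In addition, the input data can be normalized with:

image_scale

red_bias

green_bias

blue_bias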
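As a worked example of what these four parameters do: the Core ML image scaler multiplies each pixel value by the scale and then adds the per-channel bias. With the values used in the conversion script in this article (image_scale = 2/255 and a bias of -1 for every channel), pixels in [0, 255] are mapped to roughly [-1, 1]. The function below is only an illustration of that arithmetic, not part of any library.

```python
def preprocess_pixel(value, image_scale, bias):
    """Apply the scaler formula: output = image_scale * value + bias."""
    return image_scale * value + bias


# Values used in the conversion script in this article.
image_scale = 2.0 / 255.0
bias = -1  # same value for red_bias, green_bias and blue_bias

for value in (0, 127.5, 255):
    scaled = preprocess_pixel(value, image_scale, bias)
    print("{} -> {:.4f}".format(value, scaled))
```

These values should match whatever normalization was applied to the images when the original model was trained.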

The program is shown below:

    import tensorflow as tf
    import tfcoreml
    from coremltools.proto import FeatureTypes_pb2 as _FeatureTypes_pb2
    import coremltools

    """ FIND GRAPH INFO """
    tf_model_path = "/tmp/retrained_graph.pb"
    with open(tf_model_path, 'rb') as f:
        serialized = f.read()

    tf.reset_default_graph()
    original_gdef = tf.GraphDef()
    original_gdef.ParseFromString(serialized)

    with tf.Graph().as_default() as g:
        tf.import_graph_def(original_gdef, name='')
        ops = g.get_operations()
        N = len(ops)
        for i in [0, 1, 2, N-3, N-2, N-1]:
            print('\n\nop id {} : op type: "{}"'.format(str(i), ops[i].type))
            print('input(s):')
            for x in ops[i].inputs:
                print("name = {}, shape: {}, ".format(x.name, x.get_shape()))
            print('\noutput(s):')
            for x in ops[i].outputs:
                print("name = {}, shape: {},".format(x.name, x.get_shape()))

    """ CONVERT TF TO CORE ML """
    # Model Shape
    input_tensor_shapes = {"input:0": [1, 224, 224, 3]}
    # Input Name
    image_input_name = ['input:0']
    # Output CoreML model path
    coreml_model_file = '/tmp/myModel.mlmodel'
    # Output name
    output_tensor_names = ['final_result:0']
    # Label file for classification
    class_labels = '/tmp/retrained_labels.txt'

    # Convert Process
    coreml_model = tfcoreml.convert(
        tf_model_path=tf_model_path,
        mlmodel_path=coreml_model_file,
        input_name_shape_dict=input_tensor_shapes,
        output_feature_names=output_tensor_names,
        image_input_names=image_input_name,
        class_labels=class_labels)

    # Get image pre-processing parameters of a saved CoreML model
    spec = coremltools.models.utils.load_spec(coreml_model_file)
    if spec.WhichOneof('Type') == 'neuralNetworkClassifier':
        nn = spec.neuralNetworkClassifier
        print("neuralNetworkClassifier")
    if spec.WhichOneof('Type') == 'neuralNetwork':
        nn = spec.neuralNetwork
        print("neuralNetwork")
    if spec.WhichOneof('Type') == 'neuralNetworkRegressor':
        nn = spec.neuralNetworkRegressor
        print("neuralNetworkRegressor")

    preprocessing = nn.preprocessing[0].scaler
    print('channel scale: ', preprocessing.channelScale)
    print('blue bias: ', preprocessing.blueBias)
    print('green bias: ', preprocessing.greenBias)
    print('red bias: ', preprocessing.redBias)

    inp = spec.description.input[0]
    if inp.type.WhichOneof('Type') == 'imageType':
        colorspace = _FeatureTypes_pb2.ImageFeatureType.ColorSpace.Name(inp.type.imageType.colorSpace)
        print('colorspace: ', colorspace)

    # Convert again, this time with input normalization
    coreml_model = tfcoreml.convert(
        tf_model_path=tf_model_path,
        mlmodel_path=coreml_model_file,
        input_name_shape_dict=input_tensor_shapes,
        output_feature_names=output_tensor_names,
        image_input_names=image_input_name,
        class_labels=class_labels,
        red_bias=-1,
        green_bias=-1,
        blue_bias=-1,
        image_scale=2.0/255.0)

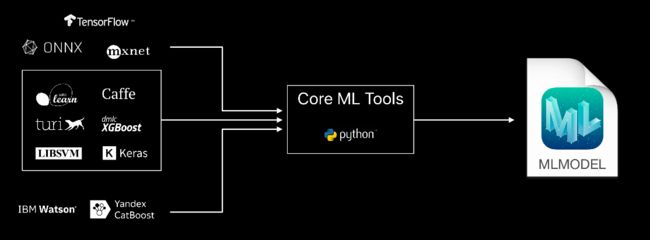

coremltools is Apple's official conversion tool, and it supports many platforms.

The following script converts a Keras model to a Core ML model:

    import coremltools
    import keras
    from keras.models import load_model
    from keras.utils.generic_utils import CustomObjectScope

    # Class labels "0" .. "61"
    class_labels = []
    for i in range(62):
        class_labels.append(str(i))

    # relu6 is a custom activation, so it must be in scope when loading the model.
    with CustomObjectScope({'relu6': keras.applications.mobilenet.relu6}):
        keras_model = load_model('traffic_sign_with_class_weights.h5')
        coreml_model = coremltools.converters.keras.convert(
            keras_model,
            input_names=['input_1'],
            image_input_names='input_1',
            output_names='activation_1',
            image_scale=2/255.0,
            red_bias=-1,
            green_bias=-1,
            blue_bias=-1,
            class_labels=class_labels)

    coreml_model.save('traffic_sign_with_class_weights.mlmodel')