PyTorch Deep Learning Practice, Lecture 11: Advanced Convolutional Neural Networks (CNN)

Lecture 11: Advanced CNN

PyTorch course videos on Bilibili: 《PyTorch深度学习实践》完结合集_哔哩哔哩_bilibili

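Below are my notes on the video together with the source code. The notes reflect my own understanding; if you spot a mistake, corrections are welcome.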

1. GoogLeNet

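The network structure is shown in the figure below. GoogLeNet is often used as a backbone network; the part circled in red in the figure is called an Inception block.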

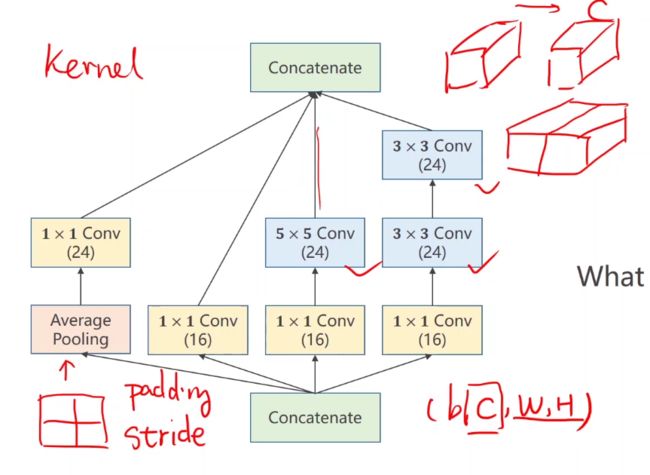

2. Anatomy of the Inception Module

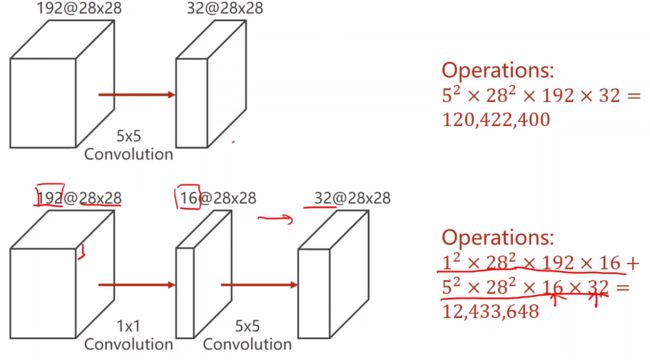

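When it is unclear which kernel size works best, the Inception module applies several kernel sizes in parallel and concatenates their outputs. During training, the branches whose kernels work better end up with larger weights.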
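Concatenating branch outputs along the channel dimension only works if every branch produces the same height and width. The sketch below checks that with the standard convolution output-size formula (stride 1, no dilation). The helper names `conv_output_size` and `same_padding` are made up for this illustration and are not part of PyTorch.

```python
def conv_output_size(size, kernel_size, padding=0, stride=1):
    """Spatial output size of a convolution without dilation."""
    return (size + 2 * padding - kernel_size) // stride + 1


def same_padding(kernel_size):
    """Padding that keeps the size unchanged for an odd kernel at stride 1."""
    return (kernel_size - 1) // 2


input_size = 12  # illustrative feature-map height/width
sizes = {}
for kernel_size in (1, 3, 5):
    padding = same_padding(kernel_size)
    sizes[kernel_size] = conv_output_size(input_size, kernel_size, padding)
    print(f"kernel={kernel_size} padding={padding} -> output={sizes[kernel_size]}")

# Every branch keeps the 12x12 size, so the outputs can be
# concatenated along the channel dimension.
```

This is why a 3 × 3 branch uses `padding=1` and a 5 × 5 branch uses `padding=2`.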

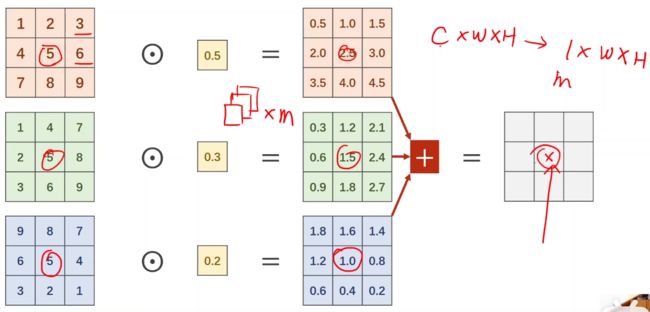

Understanding the 1 × 1 convolution kernel

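A 1 × 1 convolution can be viewed as information fusion: each pixel of the output feature map combines the values of the three input channels at that same position.

The simplest example of information fusion is exam scoring. Results spread over several subjects are hard to compare directly, so the per-subject scores are summed into a total and the totals are compared.

Here the 1 × 1 convolution performs a change of channels: the input has 3 channels, the output has as many channels as there are kernels, and the height and width stay the same.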
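To make the fusion concrete, here is a minimal pure-Python sketch of a 1 × 1 convolution on nested lists. The function `conv1x1` and all the numbers are hypothetical and only illustrate the arithmetic; in PyTorch the same operation is `nn.Conv2d(..., kernel_size=1)`.

```python
def conv1x1(image, kernels, biases=None):
    """Apply 1x1 kernels to a (C, H, W) nested-list image.

    `kernels` has shape (K, C): one weight per input channel for each
    of the K output channels. Returns a (K, H, W) nested list.
    """
    channels = len(image)
    height, width = len(image[0]), len(image[0][0])
    if biases is None:
        biases = [0.0] * len(kernels)
    output = []
    for weights, bias in zip(kernels, biases):
        assert len(weights) == channels
        feature_map = [
            [
                bias + sum(weights[c] * image[c][i][j] for c in range(channels))
                for j in range(width)
            ]
            for i in range(height)
        ]
        output.append(feature_map)
    return output


# A 3-channel 2x2 input; think of each channel as one subject's scores.
image = [
    [[1, 2], [3, 4]],
    [[10, 20], [30, 40]],
    [[100, 200], [300, 400]],
]
# Two 1x1 kernels give two output channels.
kernels = [
    [1, 1, 1],     # plain sum, like a total exam score
    [0.5, 0, -1],  # some other mix (illustrative values)
]
result = conv1x1(image, kernels)
print(len(result), len(result[0]), len(result[0][0]))  # 2 2 2
print(result[0])  # [[111.0, 222.0], [333.0, 444.0]]
```

The output has 2 channels because there are 2 kernels, while the 2 × 2 spatial size is untouched.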

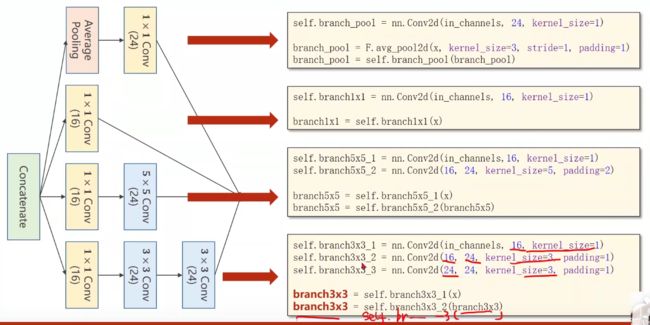

3. Implementing the Inception Module

The Inception block has four branches, as shown in the figure.

Implementation: advanced_cnn.py

import torch
from torch import nn
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim
import matplotlib.pyplot as plt

batch_size = 64

transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.1307, ), (0.3081, ))
])

train_dataset = datasets.MNIST(root='../dataset/mnist',
                               train=True,
                               download=True,
                               # apply `transform` to every sample
                               transform=transform)
train_loader = DataLoader(dataset=train_dataset,
                          batch_size=batch_size,
                          shuffle=True)

test_dataset = datasets.MNIST(root='../dataset/mnist',
                              train=False,
                              download=True,
                              transform=transform)
test_loader = DataLoader(dataset=test_dataset,
                         batch_size=batch_size,
                         shuffle=False)

# Inception block, used as a building block of the network below
class InceptionA(nn.Module):
    def __init__(self, in_channels):
        super(InceptionA, self).__init__()
        self.branch1X1 = nn.Conv2d(in_channels, 16, kernel_size=1)

        # padding keeps every branch's output height and width equal to the input's
        self.branch5X5_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        self.branch5X5_2 = nn.Conv2d(16, 24, kernel_size=5, padding=2)

        self.branch3X3_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        self.branch3X3_2 = nn.Conv2d(16, 24, kernel_size=3, padding=1)
        self.branch3X3_3 = nn.Conv2d(24, 24, kernel_size=3, padding=1)

        self.branch_pool = nn.Conv2d(in_channels, 24, kernel_size=1)

    def forward(self, x):
        branch1X1 = self.branch1X1(x)

        branch5X5 = self.branch5X5_1(x)
        branch5X5 = self.branch5X5_2(branch5X5)

        branch3X3 = self.branch3X3_1(x)
        branch3X3 = self.branch3X3_2(branch3X3)
        branch3X3 = self.branch3X3_3(branch3X3)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        outputs = [branch1X1, branch5X5, branch3X3, branch_pool]
        # tensors are (batch, channel, height, width); dim=1 concatenates along channels,
        # giving 16 + 24 + 24 + 24 = 88 output channels
        return torch.cat(outputs, dim=1)

# Define the model
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        # 88 input channels = output channels of InceptionA
        self.conv2 = nn.Conv2d(88, 20, kernel_size=5)

        self.incep1 = InceptionA(in_channels=10)
        self.incep2 = InceptionA(in_channels=20)

        self.mp = nn.MaxPool2d(2)
        # 1408 = 88 channels * 4 * 4 after the second Inception block
        self.fc = nn.Linear(1408, 10)

    def forward(self, x):
        in_size = x.size(0)
        x = F.relu(self.mp(self.conv1(x)))
        x = self.incep1(x)
        x = F.relu(self.mp(self.conv2(x)))
        x = self.incep2(x)
        # flatten to (batch, 1408) for the fully connected layer
        x = x.view(in_size, -1)
        x = self.fc(x)
        return x

model = Net()
# Move the model to the GPU if one is available; cuda:0 is the first GPU
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
print(torch.cuda.is_available())
model.to(device)

criterion = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

# Training and testing are wrapped in two separate functions
def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        # move the inputs and targets to the same device as the model
        inputs, target = inputs.to(device), target.to(device)
        optimizer.zero_grad()

        # forward, backward, update
        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        # report the average loss every 300 batches
        if batch_idx % 300 == 299:
            print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0

accuracy = []

def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            # test tensors must also be moved to the device
            images, labels = images.to(device), labels.to(device)
            outputs = model(images)
            # index of the largest logit in each row is the predicted class
            _, predicted = torch.max(outputs, dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('Accuracy on test set: %d %%' % (100 * correct / total))
    accuracy.append(100 * correct / total)

if __name__ == '__main__':
    for epoch in range(10):
        train(epoch)
        test()
    print(accuracy)
    plt.plot(range(10), accuracy)
    plt.xlabel("epoch")
    plt.ylabel("Accuracy")
    plt.show()
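The constants 88 and 1408 in `Net` follow from the layer shapes. As a rough check, the sketch below traces the channel count and spatial size of a 28 × 28 MNIST image through the network using plain arithmetic. The helpers `conv_out` and `pool_out` are hypothetical names introduced only for this illustration.

```python
def conv_out(size, kernel_size, padding=0, stride=1):
    """Spatial output size of a convolution without dilation."""
    return (size + 2 * padding - kernel_size) // stride + 1


def pool_out(size, kernel_size):
    """Output size of max pooling whose stride equals its kernel size."""
    return (size - kernel_size) // kernel_size + 1


# Channels produced by the four Inception branches: 1x1, 5x5, 3x3, pool.
inception_channels = 16 + 24 + 24 + 24

channels, size = 1, 28                   # MNIST input: 1 x 28 x 28
channels, size = 10, conv_out(size, 5)   # conv1 -> 10 x 24 x 24
size = pool_out(size, 2)                 # mp    -> 10 x 12 x 12
channels = inception_channels            # incep1 keeps 12 x 12
channels, size = 20, conv_out(size, 5)   # conv2 -> 20 x 8 x 8
size = pool_out(size, 2)                 # mp    -> 20 x 4 x 4
channels = inception_channels            # incep2 keeps 4 x 4

flat_features = channels * size * size
print(inception_channels, size, flat_features)  # 88 4 1408
```

If the input size or any kernel size changes, the `in_features` of the final linear layer has to be recomputed the same way.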

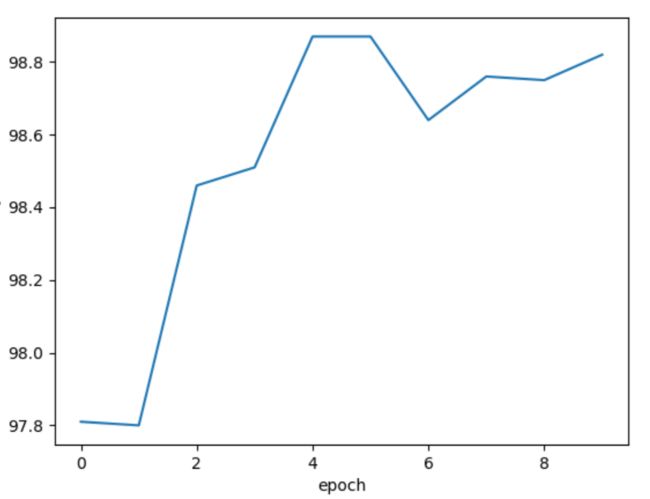

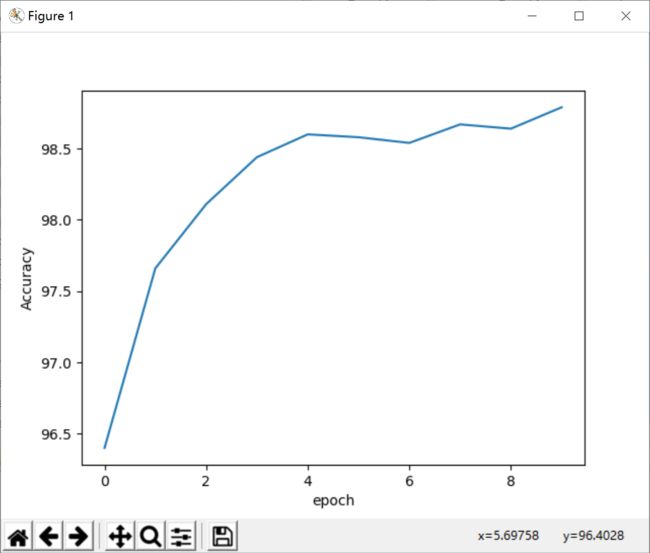

Results:

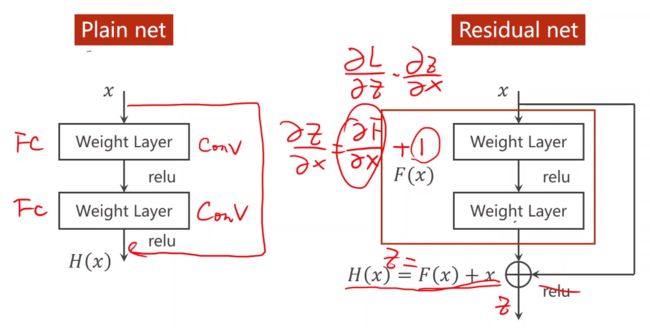

4. Residual Networks

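The difference between a plain network and a residual network: in a residual block, the block's input is added to the output of its convolution layers before the final activation, and the activation is applied to that sum.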

Definition:
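One common way to write this down, as a sketch consistent with the `F.relu(x + y)` line in the code below. The symbols $F$ and $H$ are notation assumed here rather than taken from the original notes; $F(x)$ stands for the stacked convolution layers inside the block.

```latex
\begin{aligned}
F(x) &= \mathrm{conv}_2\bigl(\mathrm{ReLU}(\mathrm{conv}_1(x))\bigr), \\
H(x) &= F(x) + x, \\
\text{output} &= \mathrm{ReLU}\bigl(H(x)\bigr).
\end{aligned}
```

The addition requires $F(x)$ and $x$ to have the same shape, which is why both convolutions in the block keep the channel count and use `padding=1` with a 3 × 3 kernel.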

Implementation: residual.py

import torch
from torch import nn
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim
import matplotlib.pyplot as plt

batch_size = 64

transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.1307, ), (0.3081, ))
])

train_dataset = datasets.MNIST(root='../dataset/mnist',
                               train=True,
                               download=True,
                               # apply `transform` to every sample
                               transform=transform)
train_loader = DataLoader(dataset=train_dataset,
                          batch_size=batch_size,
                          shuffle=True)

test_dataset = datasets.MNIST(root='../dataset/mnist',
                              train=False,
                              download=True,
                              transform=transform)
test_loader = DataLoader(dataset=test_dataset,
                         batch_size=batch_size,
                         shuffle=False)

# Residual block
class ResidualBlock(nn.Module):
    def __init__(self, channels):
        super(ResidualBlock, self).__init__()
        self.channels = channels
        # same channel count and padding=1 keep the output shape equal to the input shape
        self.conv1 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)

    def forward(self, x):
        y = F.relu(self.conv1(x))
        y = self.conv2(y)
        # add the input to the output first, then activate
        return F.relu(x + y)

# Define the model
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 16, kernel_size=5)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=5)
        self.mp = nn.MaxPool2d(2)

        self.rblock1 = ResidualBlock(16)
        self.rblock2 = ResidualBlock(32)

        # 512 = 32 channels * 4 * 4 after the second residual block
        self.fc = nn.Linear(512, 10)

    def forward(self, x):
        in_size = x.size(0)
        x = self.mp(F.relu(self.conv1(x)))
        x = self.rblock1(x)
        x = self.mp(F.relu(self.conv2(x)))
        x = self.rblock2(x)
        # flatten to (batch, 512) for the fully connected layer
        x = x.view(in_size, -1)
        x = self.fc(x)
        return x

model = Net()
# Move the model to the GPU if one is available; cuda:0 is the first GPU
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
print(torch.cuda.is_available())
model.to(device)

criterion = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

# Training and testing are wrapped in two separate functions
def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        # move the inputs and targets to the same device as the model
        inputs, target = inputs.to(device), target.to(device)
        optimizer.zero_grad()

        # forward, backward, update
        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        # report the average loss every 300 batches
        if batch_idx % 300 == 299:
            print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0

accuracy = []

def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            # test tensors must also be moved to the device
            images, labels = images.to(device), labels.to(device)
            outputs = model(images)
            # index of the largest logit in each row is the predicted class
            _, predicted = torch.max(outputs, dim=1)
            total += labels.size(0)
            # predicted == labels compares element-wise and yields a boolean tensor;
            # .sum() counts the True entries, i.e. the correct predictions in this batch
            correct += (predicted == labels).sum().item()
    print('Accuracy on test set: %d %%' % (100 * correct / total))
    accuracy.append(100 * correct / total)

if __name__ == '__main__':
    for epoch in range(10):
        train(epoch)
        test()
    print(accuracy)
    plt.plot(range(10), accuracy)
    plt.xlabel("epoch")
    plt.ylabel("Accuracy")
    plt.show()
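The test loop counts correct predictions with `(predicted == labels).sum().item()`. The small sketch below shows each step on a hand-made batch, so it is easy to see what the comparison produces. The logits and labels are hypothetical values chosen for illustration.

```python
import torch

# Hypothetical logits for a batch of 4 samples and 3 classes.
outputs = torch.tensor([
    [0.1, 2.0, -1.0],
    [1.5, 0.2, 0.3],
    [0.0, 0.1, 3.0],
    [2.2, 2.5, 0.4],
])
labels = torch.tensor([1, 0, 2, 0])

# torch.max along dim=1 returns (max values, their indices);
# the indices are the predicted classes.
_, predicted = torch.max(outputs, dim=1)

# Element-wise comparison gives a boolean tensor, one entry per sample.
matches = predicted == labels

# Summing the booleans counts the True entries; .item() turns it into a Python int.
correct = matches.sum().item()

print(predicted.tolist())  # [1, 0, 2, 1]
print(matches.tolist())    # [True, True, True, False]
print(correct)             # 3
```

So the comparison itself does not return a count; the count comes from summing the boolean tensor.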

Results: