[Survey] Deep Learning for Visual Tracking: A Comprehensive Survey (2019)

Paper: https://arxiv.org/pdf/1912.00535.pdf

Abstract

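Visual target tracking is one of the most sought-after yet challenging research topics in computer vision. Given the ill-posed nature of the problem and its popularity in a broad range of real-world scenarios, several large-scale benchmark datasets have been established, on which a considerable number of methods have been developed and have shown significant progress in recent years, predominantly through deep learning (DL)-based methods. This survey aims to systematically investigate the current DL-based visual tracking methods, benchmark datasets, and evaluation metrics. It also extensively evaluates and analyzes the leading visual tracking methods. First, the fundamental characteristics, primary motivations, and contributions of DL-based methods are summarized from six key aspects: network architecture, network exploitation, network training for visual tracking, network objective, network output, and the exploitation of correlation filter advantages. Second, popular visual tracking benchmarks and their respective properties are compared, and their evaluation metrics are summarized. Third, the state-of-the-art DL-based methods are comprehensively examined on a set of well-established benchmarks: OTB2013, OTB2015, VOT2018, and LaSOT. Finally, through critical quantitative and qualitative analyses of these state-of-the-art methods, their pros and cons under various common scenarios are investigated. The survey may serve as a gentle usage guide for practitioners to weigh when and under what conditions to choose which method. It also facilitates a discussion of ongoing issues and sheds light on promising research directions.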

Index Terms: Visual tracking, deep learning, computer vision, appearance modeling

1.Introduction

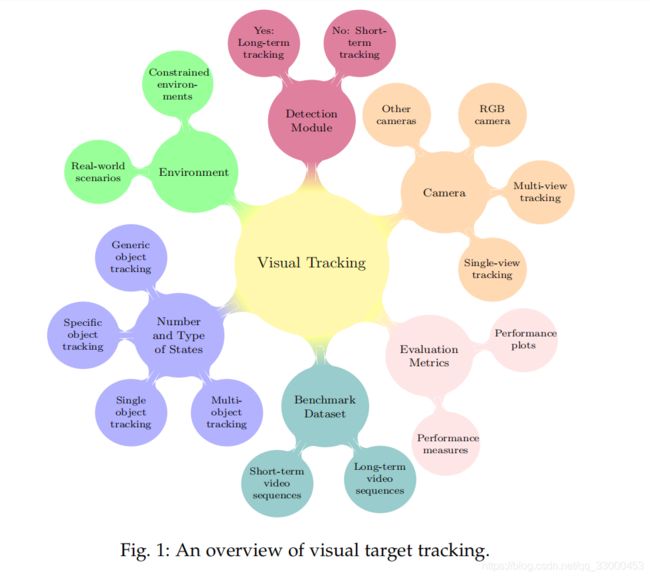

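Visual tracking aims to estimate the trajectory of an unknown visual target when only the target's initial state (in a video frame) is available. Visual tracking is an open and attractive research field (see Fig. 1) with a broad range of categories and applications, including self-driving cars [1]-[4], autonomous robots [5], [6], surveillance [7]-[10], augmented reality [11]-[13], unmanned aerial vehicle (UAV) tracking [14], sports [15], surgery [16], biology [17]-[19], and ocean exploration [20], to name a few. The ill-posed definition of visual tracking (i.e., model-free tracking, on-the-fly learning, single camera, 2D information) is more challenging in complicated real-world scenarios, which may include arbitrary classes of target appearance and motion model (e.g., human, drone, animal, vehicle), different imaging characteristics (e.g., static/moving camera, smooth/abrupt movement, camera resolution), and changes in environmental conditions (e.g., illumination variation, background clutter, crowded scenes).

Traditional visual tracking methods utilize various frameworks, such as discriminative correlation filters (DCF) [21]-[24], silhouette tracking [25], [26], kernel tracking [27]-[29], and point tracking [30], [31], but these methods cannot provide satisfactory results in unconstrained environments. The main reasons are target representation by hand-crafted features (such as the histogram of oriented gradients (HOG) [32] and Color-Names (CN) [33]) and inflexible target modeling.

Inspired by deep learning (DL) breakthroughs [34]-[38] in the ImageNet Large Scale Visual Recognition Competition (ILSVRC) [39] and in the visual object tracking (VOT) challenge [40]-[46], DL-based methods have attracted considerable interest in the visual tracking community as a way to provide robust visual trackers. Although convolutional neural networks (CNNs) were initially the dominant networks, a broad range of architectures is currently investigated, such as Siamese neural networks (SNNs), recurrent neural networks (RNNs), auto-encoders (AEs), generative adversarial networks (GANs), and custom neural networks. Fig. 2 presents the development history of deep visual trackers in recent years.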

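State-of-the-art DL-based visual trackers have distinct characteristics, such as the exploited deep architecture, backbone network, learning procedure, training datasets, network objective, network output, types of exploited deep features, CPU/GPU implementation, programming language and framework, and speed. In addition, several visual tracking benchmark datasets have been proposed in recent years for the practical training and evaluation of visual tracking methods. Despite their various attributes, some of these benchmark datasets share common video sequences. Therefore, this paper provides a comparative study of DL-based methods, their benchmark datasets, and evaluation metrics to help the visual tracking community develop advanced methods.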

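Visual tracking surveys can be roughly divided into two categories: those written before and those written after the DL revolution in computer vision. The first category of survey papers [47]-[50] mainly reviews traditional methods based on classical object and motion representations, and then examines their pros and cons systematically, experimentally, or both. Considering the significant progress of DL-based visual trackers, the methods reviewed by these papers are outdated. The second category, on the other hand, reviews only a limited set of deep visual trackers [51]-[53]. The papers [51], [52] (two versions of one paper) categorize 81 and 93 hand-crafted and deep visual trackers into correlation filter trackers and non-correlation filter trackers, and then apply a further classification based on architectures and tracking mechanisms. These papers cover fewer than 40 DL-based methods, with limited investigation. Although the paper [54] specifically investigates the network branches, layers, and training aspects of nine SNN-based methods, it does not include state-of-the-art SNN-based trackers (e.g., [55]-[57]: SiamRPN++, residual units used in Siamese networks, and fast online object tracking and segmentation) or custom networks that partially exploit SNNs (e.g., [58], which addresses sample diversity).

The last review paper [53] categorizes 43 DL-based methods according to their structure, function, and training. It then evaluates 16 DL-based visual trackers together with different hand-crafted visual tracking methods. From the structure perspective, these trackers are divided into 34 CNN-based methods (10 CNN-Matching and 24 CNN-Classification), 5 RNN-based methods, and 4 methods based on other architectures (e.g., AE). From the network function perspective, the methods are divided into feature extraction networks (FENs) and end-to-end networks (EENs). While FENs are methods that exploit models pre-trained on different tasks, EENs are classified by their outputs, namely object score, confidence map, and bounding box (BB). From the network training perspective, the methods are divided into the NP-OL, IP-NOL, IP-OL, VP-NOL, and VP-OL categories, where NP, IP, VP, OL, and NOL abbreviate no pre-training, image pre-training, video pre-training, online learning, and no online learning, respectively.

Despite all these efforts, there is no comprehensive study that both extensively categorizes DL-based trackers, their motivations, and their solutions to different problems, and experimentally analyzes the best methods under different challenging scenarios. Motivated by these concerns, the main goal of this survey is to fill this gap and to investigate the present main problems and future directions. The main differences between this survey and previous ones are described as follows.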

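Differences from previous surveys: In contrast to the existing review papers, this paper focuses only on 129 state-of-the-art DL-based visual tracking methods that have been published in major image processing and computer vision conferences and journals.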

These methods include HCFT [59], DeepSRDCF [60], FCNT [61], CNNSVM [62], DPST [63], CCOT [64], GOTURN [65], SiamFC [66], SINT [67], MDNet [68], HDT [69], STCT [70], RPNT [71], DeepTrack [72], CNT [73], CF-CNN [74], TCNN [75], RDLT [76], PTAV [77], [78], CREST [79], UCT/UCTLite [80], DSiam/DSiamM [81], TSN [82], WECO [83], RFL [84], IBCCF [85], DTO [86], SRT [87], R-FCSN [88], GNET [89], LST [90], VRCPF [91], DCPF [92], CFNet [93], ECO [94], DeepCSRDCF [95], MCPF [96], BranchOut [97], DeepLMCF [98], Obli-RaFT [99], ACFN [100], SANet [101], DCFNet/DCFNet2 [102], DET [103], DRN [104], DNT [105], STSGS [106], TripletLoss [107], DSLT [108], UPDT [109], ACT [110], DaSiamRPN [111], RT-MDNet [112], StructSiam [113], MMLT [114], CPT [115], STP [116], Siam-MCF [117], Siam-BM [118], WAEF [119], TRACA [120], VITAL [121], DeepSTRCF [122], SiamRPN [123], SA-Siam [124], FlowTrack [125], DRT [126], LSART [127], RASNet [128], MCCT [129], DCPF2 [130], VDSR-SRT [131], FCSFN [132], FRPN2TSiam [133], FMFT [134], IMLCF [135], TGGAN [136], DAT [137], DCTN [138], FPRNet [139], HCFTs [140], adaDDCF [141], YCNN [142], DeepHPFT [143], CFCF [144], CFSRL [145], P2T [146], DCDCF [147], FICFNet [148], LCTdeep [149], HSTC [150], DeepFWDCF [151], CF-FCSiam [152], MGNet [153], ORHF [154], ASRCF [155], ATOM [156], CRPN [157], GCT [158], RPCF [159], SPM [160], SiamDW [56], SiamMask [57], SiamRPN++ [55], TADT [161], UDT [162], DiMP [163], ADT [164], CODA [165], DRRL [166], SMART [167], MRCNN [168], MM [169], MTHCF [170], AEPCF [171], IMM-DFT [172], TAAT [173], DeepTACF [174], MAM [175], ADNet [176], [177], C2FT [178], DRL-IS [179], DRLT [180], EAST [181], HP [182], P-Track [183], RDT [184], and SINT++ [58].

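The trackers comprise 73 CNN-based, 35 SNN-based, 15 custom-network-based (including AE-based, reinforcement learning (RL)-based, and combined networks), three RNN-based, and three GAN-based methods, which together make up the 129 methods. A major contribution and novelty of this paper is the inclusion and comparison of SNN-based visual tracking methods, which are currently of great interest to the visual tracking community. Recent visual trackers based on GANs and custom networks (including RL-based methods) are also reviewed. Although the methods in this survey are categorized into those exploiting off-the-shelf deep features and those exploiting deep features for visual tracking (similar to the FENs and EENs in [53]), their detailed characteristics are also presented, such as pre-trained or backbone networks, exploited layers, training datasets, objective function, tracking speed, features used, type of tracking output, CPU/GPU implementation, programming language, and DL framework. From the network training perspective, this survey studies deep off-the-shelf features and deep features for visual tracking independently. Because deep off-the-shelf features (i.e., features extracted from FENs) are mostly pre-trained on the object recognition task, their training details are reviewed separately. Accordingly, network training for visual tracking is categorized into DL-based methods that exploit only offline training, only online training, or both offline and online training procedures. Finally, this paper comprehensively analyzes 45 state-of-the-art visual tracking methods from different aspects on four visual tracking datasets.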

The main contributions of this paper are summarized as follows:

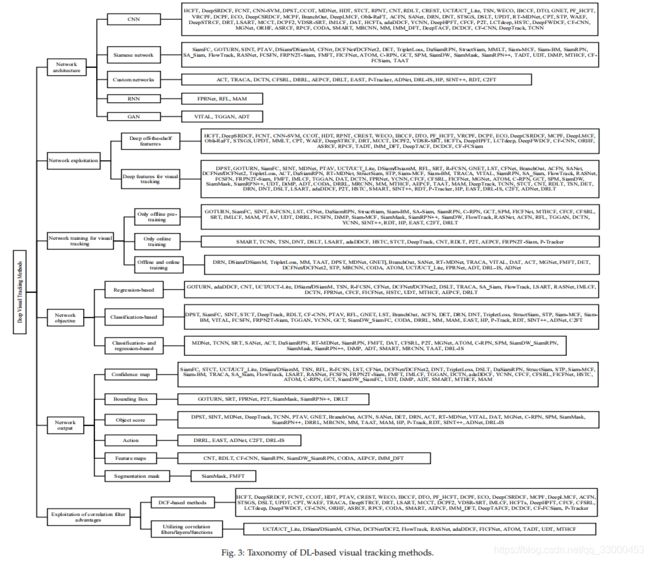

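1) State-of-the-art DL-based visual tracking methods are categorized according to their architecture (i.e., CNN, SNN, RNN, GAN, and custom networks), network exploitation (i.e., off-the-shelf deep features and deep features for visual tracking), network training for visual tracking (i.e., only offline training, only online training, both offline and online training), network objective (i.e., regression-based, classification-based, and both classification- and regression-based), and exploitation of correlation filter advantages (i.e., the DCF framework and utilizing correlation filters/layers/functions). A study that covers all of these aspects in a detailed categorization of visual tracking methods has not been presented before.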

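2) The main motivations and contributions of DL-based methods for tackling visual tracking problems are summarized. To the best of our knowledge, this is the first paper that investigates the main problems of visual tracking methods and their proposed solutions. This classification provides proper insight for designing accurate and robust DL-based visual tracking methods.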

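3) Based on fundamental characteristics (including the number of videos, number of frames, number of classes or clusters, sequence attributes, absent labels, and overlap with other datasets), recent visual tracking benchmark datasets including OTB2013 [185], VOT [40]–[46], ALOV [48], OTB2015 [186], TC128 [187], UAV123 [188], NUS-PRO [189], NfS [190], DTB [191], TrackingNet [192], OxUvA [193], BUAA-PRO [194], GOT10k [195], and LaSOT [196] are compared.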

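4) Finally, extensive quantitative and qualitative experimental evaluations are performed on the well-known OTB2013, OTB2015, VOT2018, and LaSOT visual tracking datasets, and the state-of-the-art visual trackers are analyzed from different aspects. Moreover, this paper specifies for the first time the most challenging visual attributes not only for the VOT2018 dataset but also for the OTB2015 and LaSOT datasets. Lastly, the VOT toolkit [45] was modified to compare different methods qualitatively according to the TraX protocol [197].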

Based on the comparisons, the following observations are made:

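1) SNN-based methods are the most attractive deep architectures because they offer a satisfactory balance between performance and efficiency for visual tracking. Moreover, visual tracking methods have recently attempted to exploit the advantages of RL and GAN methods to refine their decision making and to alleviate the lack of training data. Building on these advantages, recent visual tracking methods aim to design custom neural networks for visual tracking purposes.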

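2) Offline end-to-end learning of deep features appropriately adapts pre-trained features for visual tracking. Although online training of DNNs increases computational complexity, which makes most of these methods unsuitable for real-time applications, it considerably helps visual trackers adapt to significant appearance variations, resist visual distractors, and improve the accuracy and robustness of visual tracking methods. Hence, exploiting both offline and online training procedures provides more robust visual trackers.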

3) Leveraging deeper and wider backbone networks improves the discriminative power to distinguish the target from its background.

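4) The best visual tracking methods not only use both regression and classification objective functions to estimate the best target proposal, but can also find the tightest BB for target localization.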

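5) Exploiting different features enhances the robustness of the target model. For this reason, most DCF-based methods, for example, fuse off-the-shelf deep features with hand-crafted features (e.g., HOG and CN). In addition, exploiting complementary features such as temporal or contextual information leads to more discriminative and robust features for target representation.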

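6) The most challenging attributes for DL-based visual tracking methods are occlusion, out-of-view, and fast motion. Moreover, visual distractors with similar semantics may cause the drift problem.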

2.TAXONOMY OF DEEP VISUAL TRACKING METHODS

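This section describes three major components: target representation/information, the training process, and the learning procedure. Then, a comprehensive taxonomy of DL-based methods is proposed.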

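One of the main motivations of DL-based methods is to improve target representation by utilizing or fusing deep hierarchical features, exploiting contextual or motion information, and selecting more discriminative and robust deep features. DL-based methods also aim to train DNNs effectively for visual tracking systems. Their general motivations can be divided into employing different network training schemes (e.g., network pre-training, online training, or both) and handling specific training problems (e.g., lack of training data, over-fitting on training data, and computational complexity). Unsupervised training is another recent scheme that uses abundant unlabeled samples; it can be performed by clustering these samples according to contextual information, mapping the training data to a manifold space, or exploiting consistency-based objective functions. Finally, according to the main motivations behind their learning procedures, DL-based trackers are categorized into online update schemes, aspect ratio estimation, scale estimation, search strategies, and providing long-term memory.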

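In the following, DL-based visual tracking methods are comprehensively categorized according to six main aspects: network architecture, network exploitation, network training for visual tracking, network objective, network output, and exploitation of correlation filter advantages. The proposed taxonomy of DL-based visual tracking methods is shown in Fig. 3.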

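This section also presents other important details, including pre-trained networks, backbone networks, exploited layers, types of deep features, the fusion of hand-crafted and deep features, training datasets, tracking output, tracking speed, hardware implementation details, programming language, and DL framework. Besides categorizing the state-of-the-art DL-based visual tracking methods, this section classifies their main motivations and contributions, which can provide useful perspectives for identifying future directions.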

2.1 Network Architecture

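Although CNNs have been extensively used in DL-based methods, other architectures have also been developed in recent years, mainly to improve the efficiency and robustness of visual trackers. According to the range of techniques built on various deep structures, the taxonomy consists of CNN-based, SNN-based, GAN-based, RNN-based, and custom-network-based methods.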

2.1.1 Convolutional Neural Network (CNN)

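Motivated by the breakthroughs of CNNs in computer vision tasks and by attractive advantages such as parameter sharing, sparse interactions, and dominant representations, a wide range of methods utilize CNNs for visual tracking. CNN-based visual trackers are mainly classified according to the following motivations.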

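2) Compressing or pruning the training sample space [94], [115], [141], [153], [168] or feature selection [61],

3) Feature computation via the RoIAlign operation [112] (i.e., feature approximation via bilinear interpolation) or oblique random forests [99] to better capture the data,

4) Corrective domain adaptation methods [165],

5) Lightweight structures [72], [73], [167],

6) Efficient optimization processes [98], [155],

7) Exploiting the advantages of correlation filters for efficient computation [59]–[61], [64], [69], [74], [77]–[80], [83], [85], [86], [92], [94]–[96], [98], [100], [106], [108], [109], [115], [119], [122], [126], [127], [129]–[131], [135], [140], [141], [143], [144], [149]–[151], [155], [159], [165], [167], [171], [172], [174],

8) Particle sampling strategies [96],

9) Utilizing attention mechanisms [100].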
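To make the bilinear interpolation mentioned in item 3 concrete, here is a minimal sketch in plain Python. It is only an illustration of the idea behind RoIAlign-style feature approximation, not the implementation used in [112]; the function names `bilinear_sample` and `roi_align_bin`, the list-of-lists feature map, and the 2x2 sampling grid are assumptions made for this example.

```python
import math


def bilinear_sample(feature_map, y, x):
    """Sample a 2-D feature map (a list of rows) at a fractional (y, x) location."""
    height, width = len(feature_map), len(feature_map[0])
    # Clamp the query so that the four neighbours always lie inside the map.
    y = min(max(y, 0.0), height - 1.0)
    x = min(max(x, 0.0), width - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, height - 1), min(x0 + 1, width - 1)
    dy, dx = y - y0, x - x0
    # Interpolate along x on the two neighbouring rows, then along y.
    top = (1 - dx) * feature_map[y0][x0] + dx * feature_map[y0][x1]
    bottom = (1 - dx) * feature_map[y1][x0] + dx * feature_map[y1][x1]
    return (1 - dy) * top + dy * bottom


def roi_align_bin(feature_map, y_start, x_start, y_end, x_end, samples=2):
    """Average samples x samples bilinear samples taken inside one RoI bin."""
    total = 0.0
    for i in range(samples):
        for j in range(samples):
            y = y_start + (i + 0.5) * (y_end - y_start) / samples
            x = x_start + (j + 0.5) * (x_end - x_start) / samples
            total += bilinear_sample(feature_map, y, x)
    return total / (samples * samples)


feature_map = [[0.0, 1.0], [2.0, 3.0]]
center_value = bilinear_sample(feature_map, 0.5, 0.5)   # midpoint of the four cells
bin_value = roi_align_bin(feature_map, 0.0, 0.0, 1.0, 1.0)
```

Because the bin boundaries are never rounded to integer cells, a box that moves by a fraction of a pixel changes the pooled value smoothly, which is the property that matters for localization.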

2.1.2 Siamese Neural Network (SNN)

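To learn similarity knowledge and achieve real-time speed, SNNs have been widely employed for visual tracking in the past few years. Given pairs of target and search regions, these twin networks compute the same function on both inputs to produce a similarity map. The common aim of SNN-based methods is to overcome the limitations of pre-trained deep CNNs and to take full advantage of end-to-end learning for real-time applications.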
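The similarity map described above can be sketched as a sliding-window cross-correlation between the embedded target and the embedded search region. The code below is a toy illustration under stated assumptions: `embed` is a hypothetical stand-in for the shared network branch (it only subtracts the patch mean), whereas real SNN trackers use a learned CNN embedding.

```python
def embed(patch):
    """Hypothetical shared branch: the same function is applied to both inputs.

    Here it only subtracts the patch mean; a real tracker uses a learned CNN.
    """
    values = [v for row in patch for v in row]
    mean = sum(values) / len(values)
    return [[v - mean for v in row] for row in patch]


def cross_correlate(search, template):
    """Slide the template over the search region and return the similarity map."""
    search_h, search_w = len(search), len(search[0])
    tmpl_h, tmpl_w = len(template), len(template[0])
    response = []
    for top in range(search_h - tmpl_h + 1):
        row = []
        for left in range(search_w - tmpl_w + 1):
            score = sum(
                search[top + i][left + j] * template[i][j]
                for i in range(tmpl_h)
                for j in range(tmpl_w)
            )
            row.append(score)
        response.append(row)
    return response


def peak(response):
    """Return the (row, column) of the highest similarity score."""
    best = max((v, r, c) for r, row in enumerate(response) for c, v in enumerate(row))
    return best[1], best[2]


target = [[9, 1],
          [1, 9]]
search_region = [[0, 0, 0, 0],
                 [0, 0, 9, 1],
                 [0, 0, 1, 9],
                 [0, 0, 0, 0]]

similarity_map = cross_correlate(embed(search_region), embed(target))
target_location = peak(similarity_map)   # top-left corner of the best match
```

Since both inputs pass through the same `embed` function, the only tracking-time cost is one embedding of the search region plus the correlation, which is one reason this family of trackers can run in real time.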

2.1.4 Generative Adversarial Network (GAN)

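Thanks to attractive advantages such as capturing statistical distributions and generating the desired training samples without extensive annotated data, GANs have been widely used in many research areas. Although GANs are usually hard to train and evaluate, some DL-based visual trackers use them to enrich training samples and target modeling. These networks can augment positive samples in feature space to address the imbalanced distribution of training samples [121]. GAN-based methods can also learn a general appearance distribution to deal with the self-learning problem of visual tracking [136]. Furthermore, the joint optimization of regression and discriminative networks makes it possible to take advantage of both regression and classification tasks [164].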

2.1.5 Custom Networks

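Inspired by particular deep architectures and network layers, modern DL-based methods combine a wide range of networks, such as AE, CNN, RNN, SNN, and deep RL, for visual tracking. The main motivation is to compensate for the deficiencies of ordinary methods by exploiting the advantages of other networks. The main motivations and contributions are classified as follows.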

2.2 Network Exploitation

2.3 Network Training

2.4 Network Objective

2.5 Network Output

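According to their network outputs, DL-based methods are classified into six main categories (see Fig. 3 and Tables 2 to 4): confidence map (also including score map, response map, and voting map), BB (also including rotated BB), object score (also including the probability of an object proposal, verification score, similarity score, and layer-wise score), action, feature maps, and segmentation mask. Depending on the network objective, DL-based methods generate different network outputs to estimate or refine the estimated target location.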

2.6 Exploitation of Correlation Filters Advantages

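DCF-based methods aim to learn a set of discriminative filters whose element-wise multiplication with a set of training samples in the frequency domain determines the spatial target location. Because DCFs provide competitive tracking performance and computational efficiency compared with more sophisticated techniques, DL-based visual trackers exploit the advantages of correlation filters. These methods are categorized by how they exploit DCF advantages: using a whole DCF framework, or using only some of its benefits, such as its objective function or correlation filters/layers. A considerable number of visual tracking methods are based on integrating deep features into the DCF framework (see Fig. 3). These methods aim to improve the robustness of target representation against challenging attributes, while other methods attempt to improve the computational efficiency of correlation filter(s) [93], correlation layer(s) [125], [141], [148], [161], [170], and the objective function of correlation filters [80], [81], [102], [128], [156], [162].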
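As a rough illustration of the frequency-domain, element-wise formulation described above, the following sketch trains a one-dimensional correlation filter in closed form and uses it to locate a shifted target. It is a simplified, MOSSE-style ridge regression on a toy signal, written with a naive DFT from the standard library; the names `train_filter` and `detect`, the regularization value, and the signals are assumptions for this example and do not reproduce any specific tracker from the survey.

```python
import cmath


def dft(signal, inverse=False):
    """Naive O(n^2) discrete Fourier transform; adequate for a toy example."""
    n = len(signal)
    sign = 1 if inverse else -1
    result = []
    for k in range(n):
        total = sum(
            signal[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
            for t in range(n)
        )
        result.append(total / n if inverse else total)
    return result


def train_filter(sample, desired_response, regularization=1e-3):
    """Closed-form filter: element-wise division in the frequency domain."""
    sample_hat = dft(sample)
    desired_hat = dft(desired_response)
    return [
        g * f.conjugate() / (f * f.conjugate() + regularization)
        for f, g in zip(sample_hat, desired_hat)
    ]


def detect(filter_hat, sample):
    """Element-wise product in the frequency domain, then back to the signal domain."""
    sample_hat = dft(sample)
    response = dft([h * z for h, z in zip(filter_hat, sample_hat)], inverse=True)
    return [value.real for value in response]


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


# Training sample with the target centred at index 3, and the desired peaked response.
template = [0.0, 0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 0.0]
desired = [0.0, 0.0, 0.5, 1.0, 0.5, 0.0, 0.0, 0.0]
filter_hat = train_filter(template, desired)

# In the next "frame" the target has moved two positions to the right (circularly).
shifted = template[-2:] + template[:-2]
peak_before = argmax(detect(filter_hat, template))
peak_after = argmax(detect(filter_hat, shifted))
```

The response peak follows the target shift, and both training and detection reduce to per-frequency multiplications and divisions, which is where the computational efficiency of DCF-based trackers comes from.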

3 VISUAL TRACKING BENCHMARK DATASETS

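Visual tracking benchmark datasets have been introduced to provide fair and standardized evaluation of single-object tracking algorithms. The tracking datasets contain video sequences that not only cover different target categories but also have different durations and challenging attributes. These datasets contain large numbers of video sequences, frames, attributes, and classes (or clusters). The attributes include illumination variation (IV), scale variation (SV), occlusion (OCC), deformation (DEF), motion blur (MB), fast motion (FM), in-plane rotation (IPR), out-of-plane rotation (OPR), out-of-view (OV), background clutter (BC), low resolution (LR), aspect ratio change (ARC), camera motion (CM), full occlusion (FOC), partial occlusion (POC), similar object (SOB), viewpoint change (VC), light (LI), surface cover (SC), specularity (SP), transparency (TR), shape (SH), motion smoothness (MS), motion coherence (MCO), confusion (CON), low contrast (LC), zooming camera (ZC), long duration (LD), shadow change (SHC), flash (FL), dim light (DL), camera shaking (CS), rotation (ROT), fast background change (FBC), motion change (MOC), object color change (OCO), scene complexity (SCO), absolute motion (AM), size (SZ), relative speed (RS), distractors (DI), length (LE), fast camera motion (FCM), and small/large objects (SLO).

Table 5 compares the characteristics of the visual tracking datasets, the presence of missing labeled data for unsupervised training, and the partial overlap between datasets. Using different evaluation protocols, existing visual tracking benchmarks assess the accuracy and robustness of visual trackers in realistic scenarios. Homogenized evaluation protocols facilitate straightforward comparison and development of visual trackers. In the following, the most popular visual tracking benchmark datasets and evaluation metrics are briefly described.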

3.1 Visual Tracking Datasets

3.2 Evaluation Metrics

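To compare methods experimentally on large-scale datasets, visual tracking methods are assessed with two fundamental evaluation categories: performance measures and performance plots. These metrics are briefly described below.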

3.2.1 Performance Measures

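Various performance measures have been proposed to reflect different views of visual trackers. These measures attempt to interpret performance comparisons intuitively in terms of the complementary metrics of accuracy, robustness, and tracking speed. These measures are briefly reviewed below.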

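Because the result is computed separately for each threshold, the success plot measures the percentage of frames in which the overlap between the estimated and ground-truth bounding boxes is greater than a given threshold.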
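A minimal sketch of how such a success plot can be computed is given below. The `(x, y, width, height)` box format, the 21 evenly spaced thresholds, and the simple mean used as the area under the curve (AUC) are assumptions for this example; benchmark toolkits may differ in these details.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x, y, width, height)."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def success_curve(predicted, ground_truth, thresholds):
    """Fraction of frames whose overlap is greater than each threshold."""
    overlaps = [iou(p, g) for p, g in zip(predicted, ground_truth)]
    return [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]


def area_under_curve(curve):
    """Approximate AUC as the mean success rate over evenly spaced thresholds."""
    return sum(curve) / len(curve)


ground_truth = [(0, 0, 10, 10)] * 4
predicted = [
    (0, 0, 10, 10),    # perfect overlap, IoU = 1.0
    (5, 0, 10, 10),    # shifted half a box, IoU = 1/3
    (0, 0, 10, 5),     # half the height, IoU = 0.5
    (20, 20, 10, 10),  # complete miss, IoU = 0.0
]
thresholds = [i / 20 for i in range(21)]   # 0.0, 0.05, ..., 1.0

curve = success_curve(predicted, ground_truth, thresholds)
auc = area_under_curve(curve)
```

Summarizing the whole curve by its AUC avoids committing to a single overlap threshold, so a tracker is not rewarded merely for passing one arbitrary cut-off.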

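OPER (one-pass evaluation with restart) is a supervised scheme that continuously measures the accuracy of a tracking method so that the tracker can be re-initialized when a failure occurs. Similarly, SRER (spatial robustness evaluation with restart) applies the same restart procedure as OPER to the many evaluation runs of SRE.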
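The restart idea can be sketched as a small evaluation loop. This is a simplified illustration, not the benchmark toolkit: the `DriftingTracker` class is a hypothetical toy tracker, a failure is assumed to mean zero overlap with the annotation, and the tracker is assumed to be re-initialized immediately on the failing frame.

```python
def overlap(box_a, box_b):
    """Intersection over union of two boxes given as (x, y, width, height)."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


class DriftingTracker:
    """Hypothetical toy tracker that drifts to the right by a fixed step per frame."""

    def __init__(self, drift):
        self.drift = drift
        self.box = None

    def init(self, box):
        self.box = box

    def update(self):
        x, y, w, h = self.box
        self.box = (x + self.drift, y, w, h)
        return self.box


def evaluate_with_restart(tracker, ground_truth, failure_overlap=0.0):
    """Run once over the sequence, re-initializing from the annotation after each failure."""
    tracker.init(ground_truth[0])
    overlaps, failures = [], 0
    for truth in ground_truth[1:]:
        score = overlap(tracker.update(), truth)
        if score <= failure_overlap:
            failures += 1
            tracker.init(truth)        # supervised restart from the ground truth
        else:
            overlaps.append(score)     # only successfully tracked frames count
    return overlaps, failures


ground_truth = [(0, 0, 10, 10)] * 8    # a static target over eight frames
overlaps, failures = evaluate_with_restart(DriftingTracker(drift=4), ground_truth)
mean_overlap = sum(overlaps) / len(overlaps)
```

Reporting the mean overlap on successfully tracked frames together with the number of restarts separates how tightly a tracker follows the target from how often it loses it.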

4 EXPERIMENTAL ANALYSES

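To analyze the performance of state-of-the-art visual tracking methods, 45 different methods are quantitatively compared on four well-known datasets: OTB2013 [185], OTB2015 [186], VOT2018 [45], and LaSOT [196]. Because of the page limit, all experimental results are made publicly available on GitHub at https://github.com/MMarvasti/ in the repository for this deep learning visual tracking survey. The 45 DL-based trackers included in the experiments are listed in Table 6. ECO, CFNet, TRACA, DeepSTRCF, and C-RPN are taken as the baseline trackers for comparing performance across the datasets. All evaluations were performed on an Intel i7-9700K 3.60 GHz CPU with 32 GB of RAM, with the aid of the MatConvNet toolbox [205], which uses an NVIDIA GeForce RTX 2080 Ti GPU for its computations. The OTB and LaSOT toolkits evaluate visual tracking methods with the well-known precision and success plots and then rank the methods by their AUC scores [185], [186]. For the performance comparison on the VOT2018 dataset, the visual trackers were evaluated according to the TraX evaluation protocol [197], using the three primary measures of accuracy, robustness, and EAO to provide accuracy-robustness (AR) plots, expected average overlap curves, and ordering plots for five challenging visual attributes [45], [206], [207].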

4.1 Quantitative Comparisons

4.2 Most Challenging Attributes per Benchmark Dataset

Reference

[1] M.-f. Chang, J. Lambert, P. Sangkloy, J. Singh, B. Sławomir,A. Hartnett, D. Wang, P. Carr, S. Lucey, D. Ramanan, and J. Hays,“Argoverse: 3D tracking and forecasting with rich maps,” in Proc.IEEE CVPR , 2019, pp. 8748–8757.[2] W. Luo, B. Yang, and R. Urtasun, “Fast and furious: Real-timeend-to-end 3D detection, tracking and motion forecasting with asingle convolutional net,” in Proc. IEEE CVPR , 2018, pp. 3569–3577.[3] P. Gir˜ ao, A. Asvadi, P. Peixoto, and U. Nunes, “3D object tracking in driving environment: A short review and a benchmarkdataset,” in Proc. IEEE ITSC , 2016, pp. 7–12.[4] C. Li, X. Liang, Y. Lu, N. Zhao, and J. Tang, “RGB-T objecttracking: Benchmark and baseline,” Pattern Recognit. , vol. 96,2019.[5] H. V. Hoof, T. V. D. Zant, and M. Wiering, “Adaptive visual facetracking for an autonomous robot,” in Proc. Belgian/NetherlandsArtifificial Intelligence Conference , 2011.[6] C. Robin and S. Lacroix, “Multi-robot target detection and tracking: Taxonomy and survey,” Autonomous Robots , vol. 40, no. 4, pp.729–760, 2016.[7] B. Risse, M. Mangan, B. Webb, and L. Del Pero, “Visual trackingof small animals in cluttered natural environments using a freelymoving camera,” in Proc. IEEE ICCVW , 2018, pp. 2840–2849.[8] Y. Luo, D. Yin, A. Wang, and W. Wu, “Pedestrian tracking insurveillance video based on modifified CNN,” Multimed. ToolsAppl. , vol. 77, no. 18, pp. 24 041–24 058, 2018.[9] A. Brunetti, D. Buongiorno, G. F. Trotta, and V. Bevilacqua,“Computer vision and deep learning techniques for pedestriandetection and tracking: A survey,” Neurocomputing , vol. 300, pp.17–33, 2018.[10] L. Hou, W. Wan, J. N. Hwang, R. Muhammad, M. Yang, andK. Han, “Human tracking over camera networks: A review,”EURASIP Journal on Advances in Signal Processing , vol. 2017, no. 1,2017.[11] G. Klein, “Visual tracking for augmented reality,” Phd Thesis , pp.1–182, 2006.[12] M. Klopschitz, G. Schall, D. Schmalstieg, and G. Reitmayr, “Visual tracking for augmented reality,” in Proc. 
IPIN , 2010, pp. 1–4.[13] F. Ababsa, M. Maidi, J. Y. Didier, and M. Mallem, “Vision-basedtracking for mobile augmented reality,” in Studies in Computational Intelligence . Springer, 2008, vol. 120, pp. 297–326.[14] J. Hao, Y. Zhou, G. Zhang, Q. Lv, and Q. Wu, “A review of targettracking algorithm based on UAV,” in Proc. IEEE CBS , 2019, pp.328–333.[15] M. Manafififard, H. Ebadi, and H. Abrishami Moghaddam, “Asurvey on player tracking in soccer videos,” Comput. Vis. ImageUnd. , vol. 159, pp. 19–46, 2017.[16] D. Bouget, M. Allan, D. Stoyanov, and P. Jannin, “Vision-basedand marker-less surgical tool detection and tracking: A review ofthe literature,” Medical Image Analysis , vol. 35, pp. 633–654, 2017.[17] V. Ulman, M. Maˇ ska, and et al., “An objective comparison of celltracking algorithms,” Nature Methods , vol. 14, no. 12, pp. 1141–1152, 2017.[18] T. He, H. Mao, J. Guo, and Z. Yi, “Cell tracking using deep neuralnetworks with multi-task learning,” Image Vision Comput. , vol. 60,pp. 142–153, 2017.[19] D. E. Hernandez, S. W. Chen, E. E. Hunter, E. B. Steager, andV. Kumar, “Cell tracking with deep learning and the Viterbialgorithm,” in Proc. MARSS , 2018, pp. 1–6.[20] J. Luo, Y. Han, and L. Fan, “Underwater acoustic target tracking:A review,” Sensors , vol. 18, no. 1, p. 112, 2018.[21] D. S. Bolme, J. R. Beveridge, B. A. Draper, and Y. M. Lui, “Visualobject tracking using adaptive correlation fifilters,” in Proc. IEEECVPR , 2010, pp. 2544–2550.[22] J. F. Henriques, R. Caseiro, P. Martins, and J. Batista, “High-speedtracking with kernelized correlation fifilters,” IEEE Trans. PatternAnal. Mach. Intell. , vol. 37, no. 3, pp. 583–596, 2015.[23] M. Danelljan, G. Hager, F. S. Khan, and M. Felsberg, “Discriminative Scale Space Tracking,” IEEE Trans. Pattern Anal. Mach. Intell. ,vol. 39, no. 8, pp. 1561–1575, 2017.[24] S. M. Marvasti-Zadeh, H. Ghanei-Yakhdan, and S. Kasaei,“Rotation-aware discriminative scale space tracking,” in IranianConf. 
Electrical Engineering (ICEE) , 2019, pp. 1272–1276.[25] G. Boudoukh, I. Leichter, and E. Rivlin, “Visual tracking of objectsilhouettes,” in Proc. ICIP , 2009, pp. 3625–3628.[26] C. Xiao and A. Yilmaz, “Effificient tracking with distinctive targetcolors and silhouette,” in Proc. ICPR , 2016, pp. 2728–2733.[27] V. Bruni and D. Vitulano, “An improvement of kernel-basedobject tracking based on human perception,” IEEE Trans. Syst.,Man, Cybern. Syst. , vol. 44, no. 11, pp. 1474–1485, 2014.[28] W. Chen, B. Niu, H. Gu, and X. Zhang, “A novel strategy forkernel-based small target tracking against varying illuminationwith multiple features fusion,” in Proc. ICICT , 2018, pp. 135–138.[29] D. H. Kim, H. K. Kim, S. J. Lee, W. J. Park, and S. J. Ko, “Kernelbased structural binary pattern tracking,” IEEE Trans. CircuitsSyst. Video Technol. , vol. 24, no. 8, pp. 1288–1300, 2014.[30] I. I. Lychkov, A. N. Alfifimtsev, and S. A. Sakulin, “Tracking ofmoving objects with regeneration of object feature points,” inProc. GloSIC , 2018, pp. 1–6.[31] M. Ighrayene, G. Qiang, and T. Benlefki, “Making Bayesiantracking and matching by the BRISK interest points detector/descriptor cooperate for robust object tracking,” in Proc. IEEEICSIP , 2017, pp. 731–735.[32] N. Dalal and B. Triggs, “Histograms of oriented gradients forhuman detection,” in Proc. IEEE CVPR , 2005, pp. 886–893.[33] J. Van De Weijer, C. Schmid, and J. Verbeek, “Learning colornames from real-world images,” in Proc. IEEE CVPR , 2007, pp.1–8.[34] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “ImageNet classififi-cation with deep convolutional neural networks,” in Proc. NIPS ,vol. 2, 2012, pp. 1097–1105.[35] K. Chatfifield, K. Simonyan, A. Vedaldi, and A. Zisserman, “Return of the devil in the details: Delving deep into convolutionalnets,” in Proc. BMVC , 2014, pp. 1–11.[36] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” in Proc. ICLR , 2014, pp.1–14.[37] C. Szegedy, W. Liu, Y. 
Jia, P. Sermanet, S. Reed, D. Anguelov,D. Erhan, V. Vanhoucke, and A. Rabinovich, “Going deeper withconvolutions,” in Proc. IEEE CVPR , 2015, pp. 1–9.[38] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning forimage recognition,” in Proc. IEEE CVPR , 2016, pp. 770–778.[39] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma,Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, A. C. Berg, andL. Fei-Fei, “ImageNet large scale visual recognition challenge,”IJCV , vol. 115, no. 3, pp. 211–252, 2015.[40] M. Kristan, R. Pflflugfelder, A. Leonardis, J. Matas, F. Porikli, andet al., “The visual object tracking VOT2013 challenge results,” inProc. ICCV , 2013, pp. 98–111.[41] M. Kristan, R. Pflflugfelder, A. Leonardis, J. Matas, and et al., “Thevisual object tracking VOT2014 challenge results,” in Proc. ECCV ,2015, pp. 191–217.[42] M. Kristan, J. Matas, A. Leonardis, M. Felsberg, and et al., “Thevisual object tracking VOT2015 challenge results,” in Proc. IEEEICCV , 2015, pp. 564–586.[43] M. Kristan, J. Matas, A. Leonardis, M. Felsberg, R. Pflflugfelder,and et al., “The visual object tracking VOT2016 challenge results,” in Proc. ECCVW , 2016, pp. 777–823.[44] M. Kristan, A. Leonardis, J. Matas, M. Felsberg, R. Pflflugfelder,L. C. Zajc, and et al., “The visual object tracking VOT2017challenge results,” in Proc. IEEE ICCVW , 2017, pp. 1949–1972.[45] M. Kristan, A. Leonardis, J. Matas, M. Felsberg, R. Pflflugfelder,and et al., “The sixth visual object tracking VOT2018 challengeresults,” in Proc. ECCVW , 2019, pp. 3–53.[46] M. Kristan and et al., “The seventh visual object trackingVOT2019 challenge results,” in Proc. ICCVW , 2019.[47] A. Yilmaz, O. Javed, and M. Shah, “Object tracking: A survey,”ACM Computing Surveys , vol. 38, no. 4, Dec. 2006.[48] A. W. Smeulders, D. M. Chu, R. Cucchiara, S. Calderara, A. Dehghan, and M. Shah, “Visual tracking: An experimental survey,”IEEE Trans. Pattern Anal. Mach. Intell. , vol. 36, no. 7, pp. 1442–1468, 2014.[49] H. Yang, L. 
Shao, F. Zheng, L. Wang, and Z. Song, “Recent advances and trends in visual tracking: A review,” Neurocomputing ,vol. 74, no. 18, pp. 3823–3831, 2011.[50] X. Li, W. Hu, C. Shen, Z. Zhang, A. Dick, and A. Van Den Hengel,“A survey of appearance models in visual object tracking,” ACMTrans. Intell. Syst. Tec. , vol. 4, no. 4, pp. 58:1—-58:48, 2013.[51] M. Fiaz, A. Mahmood, and S. K. Jung, “Tracking noisy targets:A review of recent object tracking approaches,” 2018. [Online].Available: http://arxiv.org/abs/1802.03098[52] M. Fiaz, A. Mahmood, S. Javed, and S. K. Jung, “Handcraftedand deep trackers: Recent visual object tracking approaches andtrends,” ACM Computing Surveys , vol. 52, no. 2, pp. 43:1—-43:44,2019.[53] P. Li, D. Wang, L. Wang, and H. Lu, “Deep visual tracking:Review and experimental comparison,” Pattern Recognit. , vol. 76,pp. 323–338, 2018.[54] R. Pflflugfelder, “An in-depth analysis of visual trackingwith Siamese neural networks,” 2017. [Online]. Available:http://arxiv.org/abs/1707.00569[55] B. Li, W. Wu, Q. Wang, F. Zhang, J. Xing, and J. Yan,“SiamRPN++: Evolution of Siamese visual tracking with verydeep networks,” 2018. [Online]. Available: http://arxiv.org/abs/1812.11703[56] Z. Zhang and H. Peng, “Deeper and wider Siamese networksfor real-time visual tracking,” 2019. [Online]. Available:http://arxiv.org/abs/1901.01660[57] Q. Wang, L. Zhang, L. Bertinetto, W. Hu, and P. H. S. Torr, “Fastonline object tracking and segmentation: A unifying approach,”2018. [Online]. Available: http://arxiv.org/abs/1812.05050[58] X. Wang, C. Li, B. Luo, and J. Tang, “SINT++: Robust visualtracking via adversarial positive instance generation,” in Proc.IEEE CVPR , 2018, pp. 4864–4873.[59] C. Ma, J. B. Huang, X. Yang, and M. H. Yang, “Hierarchicalconvolutional features for visual tracking,” in Proc. IEEE ICCV ,2015, pp. 3074–3082.[60] M. Danelljan, G. Hager, F. S. Khan, and M. Felsberg, “Convolutional features for correlation fifilter based visual tracking,” inProc. 
IEEE ICCVW , 2016, pp. 621–629.[61] L. Wang, W. Ouyang, X. Wang, and H. Lu, “Visual trackingwith fully convolutional networks,” in Proc. IEEE ICCV , 2015,pp. 3119–3127.[62] S. Hong, T. You, S. Kwak, and B. Han, “Online tracking bylearning discriminative saliency map with convolutional neuralnetwork,” in Proc. ICML , 2015, pp. 597–606.[63] Y. Zha, T. Ku, Y. Li, and P. Zhang, “Deep position-sensitivetracking,” IEEE Trans. Multimedia , no. 8, 2019.[64] M. Danelljan, A. Robinson, F. S. Khan, and M. Felsberg, “Beyondcorrelation fifilters: Learning continuous convolution operators forvisual tracking,” in Proc. ECCV , vol. 9909 LNCS, 2016, pp. 472–488.[65] D. Held, S. Thrun, and S. Savarese, “Learning to track at 100 FPSwith deep regression networks,” in Proc. ECCV , 2016, pp. 749–765.[66] L. Bertinetto, J. Valmadre, J. F. Henriques, A. Vedaldi, and P. H.Torr, “Fully-convolutional Siamese networks for object tracking,”in Proc. ECCV , 2016, pp. 850–865.[67] R. Tao, E. Gavves, and A. W. Smeulders, “Siamese instance searchfor tracking,” in Proc. IEEE CVPR , 2016, pp. 1420–1429.[68] H. Nam and B. Han, “Learning multi-domain convolutionalneural networks for visual tracking,” in Proc. IEEE CVPR , 2016,pp. 4293–4302.[69] Y. Qi, S. Zhang, L. Qin, H. Yao, Q. Huang, J. Lim, and M. H.Yang, “Hedged deep tracking,” in Proc. IEEE CVPR , 2016, pp.4303–4311.[70] L. Wang, W. Ouyang, X. Wang, and H. Lu, “STCT: Sequentiallytraining convolutional networks for visual tracking,” in Proc.IEEE CVPR , 2016, pp. 1373–1381.[71] G. Zhu, F. Porikli, and H. Li, “Robust visual tracking with deepconvolutional neural network based object proposals on PETS,”in Proc. IEEE CVPRW , 2016, pp. 1265–1272.[72] H. Li, Y. Li, and F. Porikli, “DeepTrack: Learning discriminativefeature representations online for robust visual tracking,” IEEETrans. Image Process. , vol. 25, no. 4, pp. 1834–1848, 2016.[73] K. Zhang, Q. Liu, Y. Wu, and M. H. 
Yang, “Robust visual trackingvia convolutional networks without training,” IEEE Trans. ImageProcess. , vol. 25, no. 4, pp. 1779–1792, 2016.[74] C. Ma, Y. Xu, B. Ni, and X. Yang, “When correlation fifilters meetconvolutional neural networks for visual tracking,” IEEE SignalProcess. Lett. , vol. 23, no. 10, pp. 1454–1458, 2016.[75] H. Nam, M. Baek, and B. Han, “Modeling and propagatingCNNs in a tree structure for visual tracking,” 2016. [Online].Available: http://arxiv.org/abs/1608.07242[76] G. Wu, W. Lu, G. Gao, C. Zhao, and J. Liu, “Regional deeplearning model for visual tracking,” Neurocomputing , vol. 175, no.PartA, pp. 310–323, 2015.[77] H. Fan and H. Ling, “Parallel tracking and verifying: A framework for real-time and high accuracy visual tracking,” in Proc.IEEE ICCV , 2017, pp. 5487–5495.[78] H. Fan and H.Ling, “Parallel tracking and verifying,” IEEE Trans.Image Process. , vol. 28, no. 8, pp. 4130–4144, 2019.[79] Y. Song, C. Ma, L. Gong, J. Zhang, R. W. Lau, and M. H. Yang,“CREST: Convolutional residual learning for visual tracking,” inProc. ICCV , 2017, pp. 2574–2583.[80] Z. Zhu, G. Huang, W. Zou, D. Du, and C. Huang, “UCT: Learningunifified convolutional networks for real-time visual tracking,” inProc. ICCVW , 2018, pp. 1973–1982.[81] Q. Guo, W. Feng, C. Zhou, R. Huang, L. Wan, and S. Wang,“Learning dynamic Siamese network for visual object tracking,”in Proc. IEEE ICCV , 2017, pp. 1781–1789.[82] Z. Teng, J. Xing, Q. Wang, C. Lang, S. Feng, and Y. Jin, “Robustobject tracking based on temporal and spatial deep networks,” inProc. IEEE ICCV , 2017, pp. 1153–1162.[83] Z. He, Y. Fan, J. Zhuang, Y. Dong, and H. Bai, “Correlation fifilterswith weighted convolution responses,” in Proc. ICCVW , 2018, pp.1992–2000.[84] T. Yang and A. B. Chan, “Recurrent fifilter learning for visualtracking,” in Proc. ICCVW , 2018, pp. 2010–2019.[85] F. Li, Y. Yao, P. Li, D. Zhang, W. Zuo, and M. H. 
Yang, “Integratingboundary and center correlation fifilters for visual tracking withaspect ratio variation,” in Proc. IEEE ICCVW , 2018, pp. 2001–2009.[86] X. Wang, H. Li, Y. Li, F. Porikli, and M. Wang, “Deep trackingwith objectness,” in Proc. ICIP , 2018, pp. 660–664.[87] X. Xu, B. Ma, H. Chang, and X. Chen, “Siamese recurrent architecture for visual tracking,” in Proc. ICIP , 2018, pp. 1152–1156.[88] L. Yang, P. Jiang, F. Wang, and X. Wang, “Region-based fullyconvolutional Siamese networks for robust real-time visual tracking,” in Proc. ICIP , 2017, pp. 2567–2571.[89] T. Kokul, C. Fookes, S. Sridharan, A. Ramanan, and U. A. J.Pinidiyaarachchi, “Gate connected convolutional neural networkfor object tracking,” in Proc. ICIP , 2017, pp. 2602–2606.[90] K. Dai, Y. Wang, and X. Yan, “Long-term object tracking based onSiamese network,” in Proc. ICIP , 2017, pp. 3640–3644.[91] B. Akok, F. Gurkan, O. Kaplan, and B. Gunsel, “Robust objecttracking by interleaving variable rate color particle fifiltering anddeep learning,” in Proc. ICIP , 2017, pp. 3665–3669.[92] R. J. Mozhdehi and H. Medeiros, “Deep convolutional particlefifilter for visual tracking,” in Proc. IEEE ICIP , 2017, pp. 3650–3654.[93] J. Valmadre, L. Bertinetto, J. Henriques, A. Vedaldi, and P. H. Torr,“End-to-end representation learning for correlation fifilter basedtracking,” in Proc. IEEE CVPR , 2017, pp. 5000–5008.[94] M. Danelljan, G. Bhat, F. Shahbaz Khan, and M. Felsberg, “ECO:Effificient convolution operators for tracking,” in Proc. IEEE CVPR ,2017, pp. 6931–6939.[95] A. Lukeˇ ziˇ c, T. Voj ´ ı, L. ˇ CehovinZajc, J. Matas, and M. Kristan,“Discriminative correlation fifilter tracker with channel and spatialreliability,” IJCV , vol. 126, no. 7, pp. 671–688, 2018.[96] T. Zhang, C. Xu, and M. H. Yang, “Multi-task correlation particlefifilter for robust object tracking,” in Proc. IEEE CVPR , 2017, pp.4819–4827.[97] B. Han, J. Sim, and H. 
Adam, “BranchOut: Regularization foronline ensemble tracking with convolutional neural networks,”in Proc. IEEE CVPR , 2017, pp. 521–530.[98] M. Wang, Y. Liu, and Z. Huang, “Large margin object trackingwith circulant feature maps,” in Proc. IEEE CVPR , 2017, pp. 4800–4808.[99] L. Zhang, J. Varadarajan, P. N. Suganthan, N. Ahuja, andP. Moulin, “Robust visual tracking using oblique randomforests,” in Proc. IEEE CVPR , 2017, pp. 5825–5834.[100] J. Choi, H. J. Chang, S. Yun, T. Fischer, Y. Demiris, and J. Y.Choi, “Attentional correlation fifilter network for adaptive visualtracking,” in Proc. IEEE CVPR , 2017, pp. 4828–4837.[101] H. Fan and H. Ling, “SANet: Structure-aware network for visualtracking,” in Proc. IEEE CVPRW , 2017, pp. 2217–2224.[102] Q. Wang, J. Gao, J. Xing, M. Zhang, and W. Hu, “DCFNet:Discriminant correlation fifilters network for visual tracking,”2017. [Online]. Available: http://arxiv.org/abs/1704.04057[103] J. Guo and T. Xu, “Deep ensemble tracking,” IEEE Signal Process.Lett. , vol. 24, no. 10, pp. 1562–1566, 2017.[104] J. Gao, T. Zhang, X. Yang, and C. Xu, “Deep relative tracking,”IEEE Trans. Image Process. , vol. 26, no. 4, pp. 1845–1858, 2017.[105] Z. Chi, H. Li, H. Lu, and M. H. Yang, “Dual deep network forvisual tracking,” IEEE Trans. Image Process. , vol. 26, no. 4, pp.2005–2015, 2017.[106] P. Zhang, T. Zhuo, W. Huang, K. Chen, and M. Kankanhalli,“Online object tracking based on CNN with spatial-temporalsaliency guided sampling,” Neurocomputing , vol. 257, pp. 115–127, 2017.[107] X. Dong and J. Shen, “Triplet loss in Siamese network for objecttracking,” in Proc. ECCV , vol. 11217 LNCS, 2018, pp. 472–488.[108] X. Lu, C. Ma, B. Ni, X. Yang, I. Reid, and M. H. Yang, “Deepregression tracking with shrinkage loss,” in Proc. ECCV , 2018,pp. 369–386.[109] G. Bhat, J. Johnander, M. Danelljan, F. S. Khan, and M. Felsberg,“Unveiling the power of deep tracking,” in Proc. ECCV , 2018, pp.493–509.[110] B. Chen, D. Wang, P. Li, S. Wang, and H. 
Lu, “Real-time ‘actorcritic’ tracking,” in Proc. ECCV , 2018, pp. 328–345.[111] Z. Zhu, Q. Wang, B. Li, W. Wu, J. Yan, and W. Hu, “Distractoraware Siamese networks for visual object tracking,” in Proc.ECCV , vol. 11213 LNCS, 2018, pp. 103–119.[112] I. Jung, J. Son, M. Baek, and B. Han, “Real-time MDNet,” in Proc.ECCV , 2018, pp. 89–104.[113] Y. Zhang, L. Wang, J. Qi, D. Wang, M. Feng, and H. Lu, “Structured Siamese network for real-time visual tracking,” in Proc.ECCV , 2018, pp. 355–370.[114] H. Lee, S. Choi, and C. Kim, “A memory model based on theSiamese network for long-term tracking,” in Proc. ECCVW , 2019,pp. 100–115.[115] M. Che, R. Wang, Y. Lu, Y. Li, H. Zhi, and C. Xiong, “Channelpruning for visual tracking,” in Proc. ECCVW , 2019, pp. 70–82.[116] E. Burceanu and M. Leordeanu, “Learning a robust society oftracking parts using co-occurrence constraints,” in Proc. ECCVW ,2019, pp. 162–178.[117] H. Morimitsu, “Multiple context features in Siamese networksfor visual object tracking,” in Proc. ECCVW , 2019, pp. 116–131.[118] A. He, C. Luo, X. Tian, and W. Zeng, “Towards a better match inSiamese network based visual object tracker,” in Proc. ECCVW ,2019, pp. 132–147.[119] L. Rout, D. Mishra, and R. K. S. S. Gorthi, “WAEF: Weightedaggregation with enhancement fifilter for visual object tracking,”in Proc. ECCVW , 2019, pp. 83–99.[120] J. Choi, H. J. Chang, T. Fischer, S. Yun, K. Lee, J. Jeong, Y. Demiris,and J. Y. Choi, “Context-aware deep feature compression forhigh-speed visual tracking,” in Proc. IEEE CVPR , 2018, pp. 479–488.[121] Y. Song, C. Ma, X. Wu, L. Gong, L. Bao, W. Zuo, C. Shen, R. W.Lau, and M. H. Yang, “VITAL: Visual tracking via adversariallearning,” in Proc. IEEE CVPR , 2018, pp. 8990–8999.[122] F. Li, C. Tian, W. Zuo, L. Zhang, and M. H. Yang, “Learningspatial-temporal regularized correlation fifilters for visual tracking,” in Proc. IEEE CVPR , 2018, pp. 4904–4913.[123] B. Li, J. Yan, W. Wu, Z. Zhu, and X. 
Hu, “High performancevisual tracking with Siamese region proposal network,” in Proc.IEEE CVPR , 2018, pp. 8971–8980.[124] A. He, C. Luo, X. Tian, and W. Zeng, “A twofold Siamese networkfor real-time object tracking,” in Proc. IEEE CVPR , 2018, pp. 4834–4843.[125] Z. Zhu, W. Wu, W. Zou, and J. Yan, “End-to-end flflow correlationtracking with spatial-temporal attention,” in Proc. IEEE CVPR ,2018, pp. 548–557.[126] C. Sun, D. Wang, H. Lu, and M. H. Yang, “Correlation trackingvia joint discrimination and reliability learning,” in Proc. IEEECVPR , 2018, pp. 489–497.[127] C. Sun, D. Wang, H. Lu, and M. Yang, “Learning spatial-awareregressions for visual tracking,” in Proc. IEEE CVPR , 2018, pp.8962–8970.[128] Q. Wang, Z. Teng, J. Xing, J. Gao, W. Hu, and S. Maybank,“Learning attentions: Residual attentional Siamese network forhigh performance online visual tracking,” in Proc. IEEE CVPR ,2018, pp. 4854–4863.[129] N. Wang, W. Zhou, Q. Tian, R. Hong, M. Wang, and H. Li, “Multicue correlation fifilters for robust visual tracking,” in Proc. IEEECVPR , 2018, pp. 4844–4853.[130] R. J. Mozhdehi, Y. Reznichenko, A. Siddique, and H. Medeiros,“Deep convolutional particle fifilter with adaptive correlationmaps for visual tracking,” in Proc. ICIP , 2018, pp. 798–802.[131] Z. Lin and C. Yuan, “Robust visual tracking in low-resolutionsequence,” in Proc. ICIP , 2018, pp. 4103–4107.[132] M. Cen and C. Jung, “Fully convolutional Siamese fusion networks for object tracking,” in Proc. ICIP , 2018, pp. 3718–3722.[133] G. Wang, B. Liu, W. Li, and N. Yu, “Flow guided Siamese networkfor visual tracking,” in Proc. ICIP , 2018, pp. 231–235.[134] K. Dai, Y. Wang, X. Yan, and Y. Huo, “Fusion of templatematching and foreground detection for robust visual tracking,”in Proc. ICIP , 2018, pp. 2720–2724.[135] G. Liu and G. Liu, “Integrating multi-level convolutional featuresfor correlation fifilter tracking,” in Proc. ICIP , 2018, pp. 3029–3033.[136] J. Guo, T. Xu, S. Jiang, and Z. 
Shen, “Generating reliable onlineadaptive templates for visual tracking,” in Proc. ICIP , 2018, pp.226–230.[137] S. Pu, Y. Song, C. Ma, H. Zhang, and M. H. Yang, “Deep attentivetracking via reciprocative learning,” in Proc. NIPS , 2018, pp. 1931–1941.[138] X. Jiang, X. Zhen, B. Zhang, J. Yang, and X. Cao, “Deep collaborative tracking networks,” in Proc. BMVC , 2018, p. 87.[139] D. Ma, W. Bu, and X. Wu, “Multi-scale recurrent tracking viapyramid recurrent network and optical flflow,” in Proc. BMVC ,2018, p. 242.[140] C. Ma, J. B. Huang, X. Yang, and M. H. Yang, “Robust visualtracking via hierarchical convolutional features,” IEEE Trans.Pattern Anal. Mach. Intell. , 2018.[141] Z. Han, P. Wang, and Q. Ye, “Adaptive discriminative deepcorrelation fifilter for visual object tracking,” IEEE Trans. CircuitsSyst. Video Technol. , 2018.[142] K. Chen and W. Tao, “Once for all: A two-flflow convolutionalneural network for visual tracking,” IEEE Trans. Circuits Syst.Video Technol. , vol. 28, no. 12, pp. 3377–3386, 2018.[143] S. Li, S. Zhao, B. Cheng, E. Zhao, and J. Chen, “Robust visualtracking via hierarchical particle fifilter and ensemble deep features,” IEEE Trans. Circuits Syst. Video Technol. , 2018.[144] E. Gundogdu and A. A. Alatan, “Good features to correlate forvisual tracking,” IEEE Trans. Image Process. , vol. 27, no. 5, pp.2526–2540, 2018.[145] Y. Xie, J. Xiao, K. Huang, J. Thiyagalingam, and Y. Zhao, “Correlation fifilter selection for visual tracking using reinforcementlearning,” IEEE Trans. Circuits Syst. Video Technol. , 2018.[146] J. Gao, T. Zhang, X. Yang, and C. Xu, “P2T: Part-to-target trackingvia deep regression learning,” IEEE Trans. Image Process. , vol. 27,no. 6, pp. 3074–3086, 2018.[147] C. Peng, F. Liu, J. Yang, and N. Kasabov, “Densely connecteddiscriminative correlation fifilters for visual tracking,” IEEE SignalProcess. Lett. , vol. 25, no. 7, pp. 1019–1023, 2018.[148] D. Li, G. Wen, Y. Kuai, and F. 
Porikli, “End-to-end feature integration for correlation filter tracking with channel attention,” IEEE Signal Process. Lett., vol. 25, no. 12, pp. 1815–1819, 2018.

[149] C. Ma, J. B. Huang, X. Yang, and M. H. Yang, “Adaptive correlation filters with long-term and short-term memory for object tracking,” IJCV, vol. 126, no. 8, pp. 771–796, 2018.

[150] Y. Cao, H. Ji, W. Zhang, and F. Xue, “Learning spatio-temporal context via hierarchical features for visual tracking,” Signal Proc.: Image Comm., vol. 66, pp. 50–65, 2018.

[151] F. Du, P. Liu, W. Zhao, and X. Tang, “Spatial-temporal adaptive feature weighted correlation filter for visual tracking,” Signal Proc.: Image Comm., vol. 67, pp. 58–70, 2018.

[152] Y. Kuai, G. Wen, and D. Li, “When correlation filters meet fully convolutional Siamese networks for distractor-aware tracking,” Signal Proc.: Image Comm., vol. 64, pp. 107–117, 2018.

[153] W. Gan, M. S. Lee, C. hao Wu, and C. C. Kuo, “Online object tracking via motion-guided convolutional neural network (MGNet),” J. Vis. Commun. Image R., vol. 53, pp. 180–191, 2018.

[154] M. Liu, C. B. Jin, B. Yang, X. Cui, and H. Kim, “Occlusion-robust object tracking based on the confidence of online selected hierarchical features,” IET Image Proc., vol. 12, no. 11, pp. 2023–2029, 2018.

[155] K. Dai, D. Wang, H. Lu, C. Sun, and J. Li, “Visual tracking via adaptive spatially-regularized correlation filters,” in Proc. CVPR, 2019, pp. 4670–4679.

[156] M. Danelljan, G. Bhat, F. S. Khan, and M. Felsberg, “ATOM: Accurate tracking by overlap maximization,” 2018. [Online]. Available: http://arxiv.org/abs/1811.07628

[157] H. Fan and H. Ling, “Siamese cascaded region proposal networks for real-time visual tracking,” 2018. [Online]. Available: http://arxiv.org/abs/1812.06148

[158] J. Gao, T. Zhang, and C. Xu, “Graph convolutional tracking,” in Proc. CVPR, 2019, pp. 4649–4659.

[159] Y. Sun, C. Sun, D. Wang, Y. He, and H. Lu, “ROI pooled correlation filters for visual tracking,” in Proc. CVPR, 2019, pp.
5783–5791.

[160] G. Wang, C. Luo, Z. Xiong, and W. Zeng, “Spm-tracker: Series-parallel matching for real-time visual object tracking,” 2019. [Online]. Available: http://arxiv.org/abs/1904.04452

[161] X. Li, C. Ma, B. Wu, Z. He, and M.-H. Yang, “Target-aware deep tracking,” 2019. [Online]. Available: http://arxiv.org/abs/1904.01772

[162] N. Wang, Y. Song, C. Ma, W. Zhou, W. Liu, and H. Li, “Unsupervised deep tracking,” 2019. [Online]. Available: http://arxiv.org/abs/1904.01828

[163] G. Bhat, M. Danelljan, L. V. Gool, and R. Timofte, “Learning discriminative model prediction for tracking,” 2019. [Online]. Available: http://arxiv.org/abs/1904.07220

[164] F. Zhao, J. Wang, Y. Wu, and M. Tang, “Adversarial deep tracking,” IEEE Trans. Circuits Syst. Video Technol., vol. 29, no. 7, pp. 1998–2011, 2019.

[165] H. Li, X. Wang, F. Shen, Y. Li, F. Porikli, and M. Wang, “Real-time deep tracking via corrective domain adaptation,” IEEE Trans. Circuits Syst. Video Technol., vol. 8215, 2019.

[166] B. Zhong, B. Bai, J. Li, Y. Zhang, and Y. Fu, “Hierarchical tracking by reinforcement learning-based searching and coarse-to-fine verifying,” IEEE Trans. Image Process., vol. 28, no. 5, pp. 2331–2341, 2019.

[167] J. Gao, T. Zhang, and C. Xu, “SMART: Joint sampling and regression for visual tracking,” IEEE Trans. Image Process., vol. 28, no. 8, pp. 3923–3935, 2019.

[168] H. Hu, B. Ma, J. Shen, H. Sun, L. Shao, and F. Porikli, “Robust object tracking using manifold regularized convolutional neural networks,” IEEE Trans. Multimedia, vol. 21, no. 2, pp. 510–521, 2019.

[169] L. Wang, L. Zhang, J. Wang, and Z. Yi, “Memory mechanisms for discriminative visual tracking algorithms with deep neural networks,” IEEE Trans. Cogn. Devel. Syst., 2019.

[170] Y. Kuai, G. Wen, and D. Li, “Multi-task hierarchical feature learning for real-time visual tracking,” IEEE Sensors J., vol. 19, no. 5, pp. 1961–1968, 2019.

[171] X. Cheng, Y. Zhang, L. Zhou, and Y.
Zheng, “Visual tracking via Auto-Encoder pair correlation filter,” IEEE Trans. Ind. Electron., 2019.

[172] F. Tang, X. Lu, X. Zhang, S. Hu, and H. Zhang, “Deep feature tracking based on interactive multiple model,” Neurocomputing, vol. 333, pp. 29–40, 2019.

[173] X. Lu, B. Ni, C. Ma, and X. Yang, “Learning transform-aware attentive network for object tracking,” Neurocomputing, vol. 349, pp. 133–144, 2019.

[174] D. Li, G. Wen, Y. Kuai, J. Xiao, and F. Porikli, “Learning target-aware correlation filters for visual tracking,” J. Vis. Commun. Image R., vol. 58, pp. 149–159, 2019.

[175] B. Chen, P. Li, C. Sun, D. Wang, G. Yang, and H. Lu, “Multi-attention module for visual tracking,” Pattern Recognit., vol. 87, pp. 80–93, 2019.

[176] S. Yun, J. J. Y. Choi, Y. Yoo, K. Yun, and J. J. Y. Choi, “Action-decision networks for visual tracking with deep reinforcement learning,” in Proc. IEEE CVPR, 2016, pp. 2–6.

[177] S. Yun, J. Choi, Y. Yoo, K. Yun, and J. Y. Choi, “Action-driven visual object tracking with deep reinforcement learning,” IEEE Trans. Neural Netw. Learn. Syst., vol. 29, no. 6, pp. 2239–2252, 2018.

[178] W. Zhang, K. Song, X. Rong, and Y. Li, “Coarse-to-fine UAV target tracking with deep reinforcement learning,” IEEE Trans. Autom. Sci. Eng., pp. 1–9, 2018.

[179] L. Ren, X. Yuan, J. Lu, M. Yang, and J. Zhou, “Deep reinforcement learning with iterative shift for visual tracking,” in Proc. ECCV, 2018, pp. 697–713.

[180] D. Zhang, H. Maei, X. Wang, and Y.-F. Wang, “Deep reinforcement learning for visual object tracking in videos,” 2017. [Online]. Available: http://arxiv.org/abs/1701.08936

[181] C. Huang, S. Lucey, and D. Ramanan, “Learning policies for adaptive tracking with deep feature cascades,” in Proc. IEEE ICCV, 2017, pp. 105–114.

[182] X. Dong, J. Shen, W. Wang, Y. Liu, L. Shao, and F. Porikli, “Hyperparameter optimization for tracking with continuous deep Q-learning,” in Proc. IEEE CVPR, 2018, pp. 518–527.

[183] J. Supancic and D.
Ramanan, “Tracking as online decision-making: Learning a policy from streaming videos with reinforcement learning,” in Proc. IEEE ICCV, 2017, pp. 322–331.

[184] J. Choi, J. Kwon, and K. M. Lee, “Real-time visual tracking by deep reinforced decision making,” Comput. Vis. Image Und., vol. 171, pp. 10–19, 2018.

[185] Y. Wu, J. Lim, and M. H. Yang, “Online object tracking: A benchmark,” in Proc. IEEE CVPR, 2013, pp. 2411–2418.

[186] Y. Wu, J. Lim, and M. Yang, “Object tracking benchmark,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 37, no. 9, pp. 1834–1848, 2015.

[187] P. Liang, E. Blasch, and H. Ling, “Encoding color information for visual tracking: Algorithms and benchmark,” IEEE Trans. Image Process., vol. 24, no. 12, pp. 5630–5644, 2015.

[188] M. Mueller, N. Smith, and B. Ghanem, “A benchmark and simulator for UAV tracking,” in Proc. ECCV, 2016, pp. 445–461.

[189] A. Li, M. Lin, Y. Wu, M. H. Yang, and S. Yan, “NUS-PRO: A new visual tracking challenge,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 38, no. 2, pp. 335–349, 2016.

[190] H. K. Galoogahi, A. Fagg, C. Huang, D. Ramanan, and S. Lucey, “Need for speed: A benchmark for higher frame rate object tracking,” in Proc. IEEE ICCV, 2017, pp. 1134–1143.

[191] S. Li and D. Y. Yeung, “Visual object tracking for unmanned aerial vehicles: A benchmark and new motion models,” in Proc. AAAI, 2017, pp. 4140–4146.

[192] M. Müller, A. Bibi, S. Giancola, S. Alsubaihi, and B. Ghanem, “TrackingNet: A large-scale dataset and benchmark for object tracking in the wild,” in Proc. ECCV, 2018, pp. 310–327.

[193] J. Valmadre, L. Bertinetto, J. F. Henriques, R. Tao, A. Vedaldi, A. W. Smeulders, P. H. Torr, and E. Gavves, “Long-term tracking in the wild: A benchmark,” in Proc. ECCV, vol. 11207 LNCS, 2018, pp. 692–707.

[194] A. Li, Z. Chen, and Y. Wang, “BUAA-PRO: A tracking dataset with pixel-level annotation,” in Proc. BMVC, 2018. [Online]. Available: http://bmvc2018.org/contents/papers/0851.pdf

[195] L. Huang, X. Zhao, and K. Huang, “GOT-10k: A large high-diversity benchmark for generic object tracking in the wild,” 2018. [Online]. Available: http://arxiv.org/abs/1810.11981

[196] H. Fan, L. Lin, F. Yang, P. Chu, G. Deng, S. Yu, H. Bai, Y. Xu, C. Liao, and H. Ling, “LaSOT: A high-quality benchmark for large-scale single object tracking,” 2018. [Online]. Available: http://arxiv.org/abs/1809.07845

[197] L. Čehovin, “TraX: The visual tracking exchange protocol and library,” Neurocomputing, vol. 260, pp. 5–8, 2017.

[198] W. Liu, D. Anguelov, D. Erhan, C. Szegedy, S. Reed, C. Y. Fu, and A. C. Berg, “SSD: Single shot multibox detector,” in Proc. ECCV, 2016, pp. 21–37.

[199] G. Koch, R. Zemel, and R. Salakhutdinov, “Siamese neural networks for one-shot image recognition,” in Proc. ICML Deep Learning Workshop, 2015.

[200] G. Lin, A. Milan, C. Shen, and I. Reid, “RefineNet: Multi-path refinement networks for high-resolution semantic segmentation,” in Proc. IEEE CVPR, 2017, pp. 5168–5177.

[201] T. Y. Lin, P. Dollár, R. Girshick, K. He, B. Hariharan, and S. Belongie, “Feature pyramid networks for object detection,” in Proc. IEEE CVPR, 2017, pp. 936–944.

[202] S. Gladh, M. Danelljan, F. S. Khan, and M. Felsberg, “Deep motion features for visual tracking,” in Proc. ICPR, 2016, pp. 1243–1248.

[203] E. Real, J. Shlens, S. Mazzocchi, X. Pan, and V. Vanhoucke, “YouTube-BoundingBoxes: A large high-precision human-annotated data set for object detection in video,” in Proc. IEEE CVPR, 2017, pp. 7464–7473.

[204] G. A. Miller, “WordNet: A lexical database for English,” Communications of the ACM, vol. 38, no. 11, pp. 39–41, 1995.

[205] A. Vedaldi and K. Lenc, “MatConvNet: Convolutional neural networks for MATLAB,” in Proc. ACM Multimedia Conference, 2015, pp. 689–692.

[206] M. Kristan, J. Matas, A. Leonardis, T. Vojir, R. Pflugfelder, G. Fernandez, G. Nebehay, F. Porikli, and L. Cehovin, “A novel performance evaluation methodology for single-target trackers,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 38, no. 11, pp. 2137–2155, 2016.

[207] L. Cehovin, M. Kristan, and A. Leonardis, “Is my new tracker really better than yours?” in Proc. IEEE WACV, 2014, pp. 540–547.