Intel RealSense Depth Camera D435i 跑通 RTAB-Map

文章目录

-

- 运行命令参数

- 启动相机

- 查看发布的话题

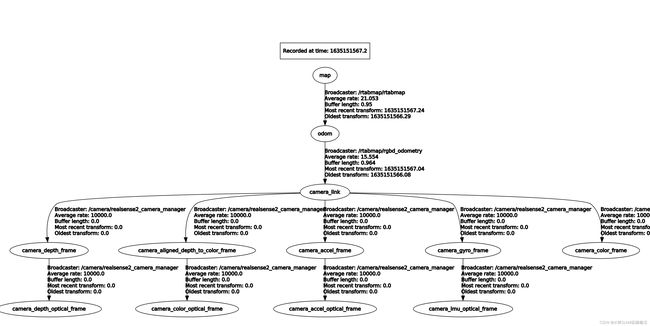

- rqt_tf_tree

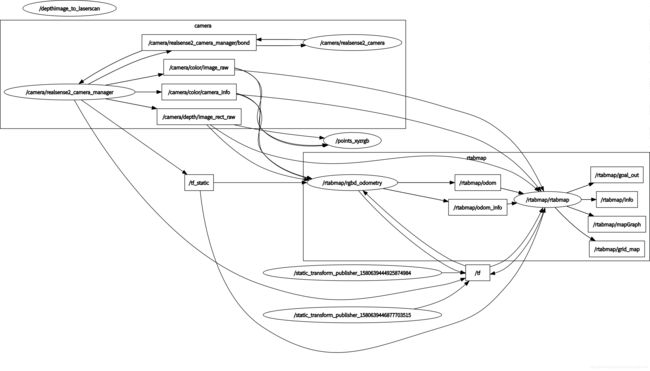

- rqt_graph

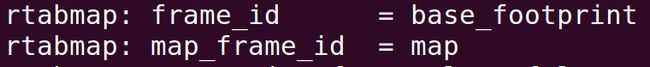

- 启动rtabmap_ros

- 增加base_footprint 底盘坐标系

- 深度图转换为雷达数据

- 保存地图

- RTAB-Map 点云滤波、点云分割与地面检测

- 参考链接

安装步骤

move_base_msgsConfig.cmake

move_base_msgs-config.cmake

ddynamic_reconfigureConfig.cmake

ddynamic_reconfigure-config.cmake

sudo apt-get install ros-kinetic-move-base-msgs

sudo apt-get install ros-kinetic-ddynamic-reconfigure

cd ~

mkdir -p rtabmap_ros_ws/src

git clone https://github.com/introlab/rtabmap_ros.git src/rtabmap_ros

cd src

catkin_init_workspace

cd ~

git clone https://github.com/introlab/rtabmap.git rtabmap

cd rtabmap/build

cmake -DWITH_G2O=OFF -DWITH_GTSAM=OFF -DCMAKE_INSTALL_PREFIX=~/projects/rtabmap_ros_ws/devel ..

make -j4

make install

catkin_make -j4

echo "source ~/catkin_ws/devel/setup.bash " >> ~/.bashrc

source ~/.bashrc

运行命令参数

启动相机

roslaunch realsense2_camera rs_camera.launch

查看发布的话题

rostopic list

/camera/color/camera_info

/camera/color/image_raw

/camera/infra1/camera_info

/camera/infra1/image_rect_raw

/camera/infra2/camera_info

/camera/infra2/image_rect_raw

rqt_tf_tree

rosrun rqt_tf_tree rqt_tf_tree

/camera/realsense2_camera/base_frame_id: camera_link

rqt_graph

启动rtabmap_ros

roslaunch realsense2_camera rs_camera.launch \

align_depth:=true \

unite_imu_method:="linear_interpolation" \

enable_gyro:=true \

enable_accel:=true

rosrun imu_filter_madgwick imu_filter_node \

_use_mag:=false \

_publish_tf:=false \

_world_frame:="enu" \

/imu/data_raw:=/camera/imu \

/imu/data:=/rtabmap/imu

roslaunch rtabmap_ros rtabmap.launch \

rtabmap_args:="--delete_db_on_start --Optimizer/GravitySigma 0.3" \

depth_topic:=/camera/aligned_depth_to_color/image_raw \

rgb_topic:=/camera/color/image_raw \

camera_info_topic:=/camera/color/camera_info \

approx_sync:=false \

wait_imu_to_init:=true \

imu_topic:=/rtabmap/imu

增加base_footprint 底盘坐标系

roslaunch rtabmap_ros rtabmap.launch rtabmap_args:="--delete_db_on_start --Optimizer/GravitySigma 0.3" depth_topic:=/camera/aligned_depth_to_color/image_raw rgb_topic:=/camera/color/image_raw camera_info_topic:=/camera/color/camera_info approx_sync:=false wait_imu_to_init:=true imu_topic:=/rtabmap/imu frame_id:=base_footprint

rosrun tf static_transform_publisher 0 0 0 0 0 0 base_footprint camera_link 100

rosrun tf static_transform_publisher 0 0 0 0 0 0 odom base_footprint 100

深度图转换为雷达数据

rosrun depthimage_to_laserscan depthimage_to_laserscan image:=/camera/aligned_depth_to_color/image_raw camera_info:=/camera/depth/camera_info range=0.1 range_max=5

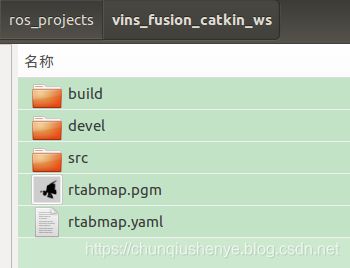

保存地图

rosrun map_server map_saver -f rtabmap map:=/rtabmap/grid_map

RTAB-Map 点云滤波、点云分割与地面检测

- 在obstacles_detection.cpp中处理点云回调callback函数,获取frame_ID到cloud_ID的变换关系localTransform,map_ID到frame_ID的变换关系pose,将cloud_ID中的点云转换到frame_ID中:

inputCloud = rtabmap::util3d::transformPointCloud(inputCloud, localTransform);

实际是调用的是下面的函数

pcl::transformPointCloud(*cloud, *output, transform.toEigen4f());

关于这个函数的使用我单独写了可以独立编译运行的程序共学习,代码地址如下:

https://github.com/xiaoqiuslamshizhanjiaocheng/xiaoqiuslamshizhanjiaocheng/blob/main/5_point_cloud/transformPointCloud.cpp

对应目录下有CMakeLists.txt编译文件和table_scene_lms400.pcd点云文件

- 点云由体素网格进行下采样,体素大小等于固定网格单元大小。

然后从点云中分割出地平面:计算点云的法线,然后在固定的最大角度“\Grid/MaxGroundAngle”范围内,所有法线平行于z轴(向上)的点作为地面,其他点则为障碍物。

RTABMAP处理占据栅格地图流程

调用OccupancyGrid.hpp中segmentCloud函数实现地面和障碍物点云分割的功能:

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud = grid_.segmentCloud<pcl::PointXYZ>(

inputCloud,

pcl::IndicesPtr(new std::vector<int>),

pose,

cv::Point3f(localTransform.x(), localTransform.y(), localTransform.z()),

ground,

obstacles,

&flatObstacles);

先根据位姿pose(frame_ID机器人帧在map_ID地图帧坐标系中的位姿)将姿态分解成欧拉角rpy,仅使用roll和pitch对点云(frame_ID机器人帧中的坐标)进行坐标转换(projMapFrame为false,不使用位移相关信息,本质上还是在frame_ID机器人帧),然后调用util3d_mapping.hpp中segmentObstaclesFromGround函数来实现分割:

util3d::segmentObstaclesFromGround<PointT>(

cloud,

indices,

groundIndices,

obstaclesIndices,

normalKSearch_,

maxGroundAngle_,

clusterRadius_,

minClusterSize_,

flatObstaclesDetected_,

maxGroundHeight_,

flatObstacles,

Eigen::Vector4f(viewPoint.x, viewPoint.y, viewPoint.z+(projMapFrame_?pose.z():0), 1));

其中,viewPoint是cloud_ID(相机帧)在frame_ID(机器人帧)坐标系中的坐标。

调用util3d_filtering.cpp中normalFiltering函数分割地面:

pcl::IndicesPtr flatSurfaces = normalFiltering(

cloud,

indices,

groundNormalAngle,

Eigen::Vector4f(0,0,1,0),

normalKSearch,

viewPoint);

实际是调用normalFilteringImpl函数实现:

normalFilteringImplpcl::PointXYZ(cloud, indices, angleMax, normal, normalKSearch, viewpoint);

点云分割与地面检测:

OccupancyGrid.hpp – segmentCloud函数

对点云进行体素化与降采样。调用pcl的setLeafSize()实现。

• rtabmap: cloud_voxel_size: 0.05f, gridCellSize = 0.05f. ICP配准中没有启用voxel(parameters.h)

根据当前位姿,将点云从相机坐标系转换至世界坐标系。

• 调用rtabmap的util3d::transformPointCloud()实现。

机器人范围检测与环境高度检测

• 分别采用util3d::cropBox和util3d::passThrough方法实现,在util3d_filtering.cpp中。

检测地面点云

• util3d::segmentObstaclesFromGround,来自util3d_mapping.hpp

• 使用util3d::normalFitering方法滤波获取地面点云,指标为点的法线与向量(0,0,1)的夹角大小,默认值为45°。首先,通过pcl::NormalEstimationOMP方法,使用KdTree作为搜索方法,并通过setViewPoint方法设置视角,根据公式计算点云所有点的法向量。通过pcl::getAngle3D获得点云每个点法向量与地面垂直向量的夹角。实现方法在util3d_filtering.cpp

• 提取聚类分离地面与平坦障碍物,方法为util3d::extractClusters,来自util3d_filtering.cpp。具体算法为pcl::EuclideanClusterExtraction

对地面点云滤波,分离地面与非地面点云

通过地面与障碍物高度排除三维空间外点,采用passThrough直通滤波器方法滤波,从之前的步骤获取这两种点云的下标值。或者通过cropbox方法直接通过移动机器人footprint范围来排除三维空间外点。

生成栅格地图

• util3d::occupancy2DFromGroundObstacles,来自util3d_mapping.cpp

点云分割与地面检测:

OccupancyGrid.hpp -- segmentCloud函数

对点云进行体素化与降采样。调用pcl的setLeafSize()实现。

rtabmap: cloud_voxel_size: 0.05f, gridCellSize = 0.05f. ICP配准中没有启用voxel(parameters.h)

根据当前位姿,将点云从相机坐标系转换至世界坐标系。

调用rtabmap的util3d::transformPointCloud()实现。

机器人范围检测与环境高度检测

分别采用util3d::cropBox和util3d::passThrough方法实现,在util3d_filtering.cpp中。

检测地面点云

util3d::segmentObstaclesFromGround,来自util3d_mapping.hpp

使用util3d::normalFitering方法滤波获取地面点云,指标为与垂直法向量的夹角大小,默认值为45°。首先,通过pcl::NormalEstimationOMP方法,使用KdTree作为搜索方法,并通过setViewPoint方法设置视角,根据公式计算点云所有点的法向量。通过pcl::getAngle3D获得点云每个点法向量与地面垂直向量的夹角。实现方法在util3d_filtering.cpp

提取聚类分离地面与平坦障碍物,方法为util3d::extractClusters,来自util3d_filtering.cpp。具体算法为pcl::EuclideanClusterExtraction

对地面点云滤波,分离地面与非地面点云

通过地面与障碍物高度排除三维空间外点,采用passThrough直通滤波器方法滤波,从之前的步骤获取这两种点云的下标值

//setNegative: 在min与max范围内的被保留。minGroundHeight为min输入,maxObstacleHeight为max输入

pcl::PointCloud<pcl::PointXYZRGB>::Ptr passThrough(

const pcl::PointCloud<pcl::PointXYZRGB>::Ptr & cloud,

const std::string & axis,

float min,

float max,

bool negative)

{

UASSERT(max > min);

UASSERT(axis.compare("x") == 0 || axis.compare("y") == 0 || axis.compare("z") == 0);

pcl::PointCloud<pcl::PointXYZRGB>::Ptr output(new pcl::PointCloud<pcl::PointXYZRGB>);

pcl::PassThrough<pcl::PointXYZRGB> filter;

filter.setNegative(negative);

filter.setFilterFieldName(axis);

filter.setFilterLimits(min, max);

filter.setInputCloud(cloud);

filter.filter(*output);

return output;

}

或者通过cropbox方法直接通过移动机器人footprint范围来排除三维空间外点

生成栅格地图

util3d::occupancy2DFromGroundObstacles,来自util3d_mapping.cpp

参考链接

RTABMAP-ROS RGB-D的建图原理

RTAB-MAP原理详解

rtabmap

rtabmap

official-rtab-map-forum

rtabmap_ros

rtabmap_ros

https://github.com/introlab/rtabmap/wiki/Installation

https://github.com/introlab/rtabmap_ros#installation

http://wiki.ros.org/rtabmap_ros

http://official-rtab-map-forum.67519.x6.nabble.com/

http://wiki.ros.org/rtabmap_ros/Tutorials/HandHeldMapping

http://wiki.ros.org/camera_calibration/Tutorials/StereoCalibration

http://wiki.ros.org/camera_calibration

http://wiki.ros.org/rtabmap_ros/Tutorials/StereoHandHeldMapping

http://wiki.ros.org/rtabmap_ros/Tutorials/SetupOnYourRobot#Stereo_A