【NLP自然语言处理】白话理解 Word2vec 词向量表示含义:含Skip-Gram与CBOW代码以及算法优化负采样与层序SoftMax

文章目录

- Word2vec词向量模型

-

- Skip-Gram模型

- CBOW 模型

- 算法优化方法:负采样

- 算法优化方法:层序SoftMax

Word2vec指用特征向量表示单词的技术,且每两个词向量可计算余弦相似度表示它们之间的关系。

Word2vec词向量模型

实现的算法手段:

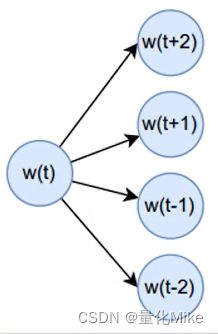

- Skip-Gram(跳元模型):中间的词预测周围的词

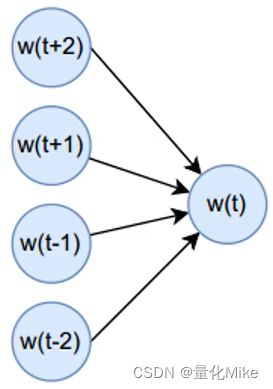

- CBOW ( Continues Bag of Words,连续词袋):周围的词去预测中间词

算法优化方法:

- 负例采样

- 层序Softmax( Hierarchical Softmax )

from gensim.models import word2vec

if __name__ == '__main__':

s1 = [0,1,2,3,4] # s1 代表为一句话,每个数字代表一个词(词语0,词语1,词语2,词语3,词语4)

s2 = [0,2,4,5,6]

s3 = [2,3,4,4,6]

s4 = [1,3,5,0,3]

seqs = [s1, s2, s3, s4]

# vector_size:转换的向量维度:1xn

model = word2vec.Word2Vec(seqs, vector_size=16, min_count=1)

print(model.wv[1]) # 将词语1表示为向量

print(model.wv.most_similar(1,topn=3)) # 返回与词语1相似度最高的前三个词

Skip-Gram模型

用一个词去预测其周围的词。假设窗口大小为5,目前窗口中的词是[xt-2,xt-1,wt,wt+1,wt+2],则Skip-Gram可以理解为用wt生成xt-2,xt-1,wt+1,wt+2。

训练过程:

1、随机初始化词汇数量的中心词向量v与背景词向量u。当某个词作为中心词参与计算时,取中心词向量,用vc表示;当某个词作为背景词时则取背景词向量,用uo表示。

2、通过中心词c生成背景词o的概率可描述为:

3、损失函数采取交叉嫡损失函数。

代码可以参考这篇Github

from torch import nn

import torch

class Skip_Gram(nn.Module):

def __init__(self,vocabs,vector_size):

super().__init__()

self.vocabs = torch.LongTensor(vocabs)

vocab_numbers = len(vocabs)

self.word_embs = nn.Embedding(vocab_numbers,vector_size)

self.bkp_word_embs = nn.Embedding(vocab_numbers,vector_size)

self.softmax = nn.Softmax()

def forward(self,x):

x = self.word_embs(x)

bkp = self.bkp_word_embs(self.vocabs) # 获取背景词向量

y = torch.matmul(x,bkp.T) # 中心词与背景词向量相乘

y = self.softmax(y) #进行softmax进行分类处理

return y

def getvocabsOnlyIndex(seqs):

vocabs = set()

for seq in seqs:

vocabs |= set(seq)

vocabs = list(vocabs)

print('getvocabsOnlyIndex获取词语:',vocabs)

return vocabs

def getVocabs(seqs):

vacabs = set()

for seq in seqs:

vacabs |= set(seq)

vacabs = list(vacabs)

vacab_map = dict(zip(vacabs, range(len(vacabs))))

return vacabs,vacab_map

def word2vec( seqs, window_size = 1 ):

vocabs = getvocabsOnlyIndex(seqs) # 获取句子中单词列表

net = Skip_Gram(vocabs,vector_size=16)

criterion = torch.nn.CrossEntropyLoss()

optimizer = torch.optim.SGD( net.parameters(), lr=0.01)

net.train()

for seq in seqs:

for i in range(0,len(seq)-(window_size*2)):

optimizer.zero_grad()

window = seq[i:i+1+window_size*2]

# [window*2]

x = torch.LongTensor([window[window_size] for _ in range(window_size*2)])

y_pred = net(x)

window.pop(window_size)

# [window*2]

y = torch.LongTensor(window)

loss = criterion(y_pred, y)

print(loss)

loss.backward()

optimizer.step()

if __name__ == '__main__':

s1 = [0,1,2,3,4]

s2 = [0,2,4,5,6]

s3 = [2,3,4,4,6]

s4 = [1,3,5,0,7]

seqs = [s1,s2,s3,s4]

word2vec(seqs)

CBOW 模型

CBOW模型就是用周围的词预测中心词。假设窗口大小为5,目前窗口中的词是[xt-2,xt-1,wt,wt+1,wt+2],则CBOW模型即可描述为用[xt-2,xt-1,wt+1,wt+2],生成wt。

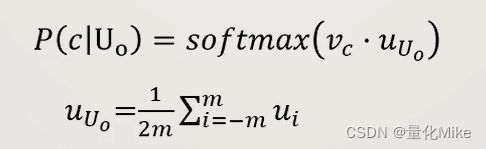

训练过程:

1、随机初始化词汇数量的中心词向量v与背景词向量u,当某个词作为中心词参与计算时,则取中心词向量,用vc表示,当某个词作为背景词时则取背景词向量,用uo表示。

2、设窗口大小为m,则周围包含索引的背景词向量可以表示为:

生成中心词的概率可以表示为:

3、损失函数采取交叉嫡损失函数。

代码可以参考这篇Github

from torch import nn

import torch

# from word2vec.vocabs import getvocabsOnlyIndex

class CBOW(nn.Module):

def __init__(self,vocabs,vector_size):

super().__init__()

self.vocabs = torch.LongTensor(vocabs)

vocab_numbers = len(vocabs)

self.word_embs = nn.Embedding(vocab_numbers,vector_size)

self.bkp_word_embs = nn.Embedding(vocab_numbers,vector_size)

self.softmax = nn.Softmax()

def forward(self,x):

x = self.word_embs(x)

x = torch.mean(x,0)

bkp = self.bkp_word_embs(self.vocabs)

y = torch.matmul(x,bkp.T)

y = self.softmax(y)

return torch.unsqueeze(y,0)

def getvocabsOnlyIndex(seqs):

vocabs = set()

for seq in seqs:

vocabs |= set(seq)

vocabs = list(vocabs)

print('getvocabsOnlyIndex获取词语:',vocabs)

return vocabs

def getVocabs(seqs):

vacabs = set()

for seq in seqs:

vacabs |= set(seq)

vacabs = list(vacabs)

vacab_map = dict(zip(vacabs, range(len(vacabs))))

return vacabs,vacab_map

def word2vec( seqs, window_size = 1 ):

vocabs = getvocabsOnlyIndex(seqs)

net = CBOW(vocabs,vector_size=16)

criterion = torch.nn.CrossEntropyLoss()

optimizer = torch.optim.SGD( net.parameters(), lr=0.01)

net.train()

for seq in seqs:

for i in range(0,len(seq)-(window_size*2)):

optimizer.zero_grad()

window = seq[i:i+1+window_size*2]

#[1]

y = torch.LongTensor([window[window_size]])

window.pop(window_size)

#[window*2]

x = torch.LongTensor(window)

y_pred = net(x)

loss = criterion(y_pred, y)

loss.backward()

optimizer.step()

print(loss)

if __name__ == '__main__':

s1 = [0,1,2,3,4]

s2 = [0,2,4,5,6]

s3 = [2,3,4,4,6]

s4 = [1,3,5,0,3]

seqs = [s1,s2,s3,s4]

word2vec(seqs)

算法优化方法:负采样

Skip-Gram或CBOW都属于Sotfmax多分类预测,且类别数目是整个词典的大小。负采样正是为了优化这一计算开销。负例指的是不与中心词c同时出现在同一窗口的词。

所以负采样后Word2vec的任务可转变为以Sigmoid为最终计划的二分类任务。

负采样的方式可以完全随机,或者根据某个概率分布,例如根据整个文档词频做概率分布。

设负例的数量为k,则时间复杂度可由原先的o(n)降为o(k+1)。

算法优化方法:层序SoftMax

首先构建一个二叉树,用叶子节点表示所有的词汇,节点的深度是log2(节点数量)。

以Skip-Gram为例,通过中心词c生成背景词o的概率P(o l c)可近似为,二叉树的根节点走到节点o的概率,用数学语言可描述为: