k8s Installation and Deployment

Reference course: https://edu.51cto.com/course/23741.html

Official documentation: https://kubernetes.io/zh/docs/setup/production-environment

Preparation

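1. Start three virtual machines: one master node and two worker nodes

Specs: 4 GB RAM / 4 CPU cores / 100 GB disk

(1) Configure a static IP

(2) Disable the firewall

(3) Install some common utilities

(4) Disable SELinux

(5) Set the hostname and configure the hosts file

(6) Set up passwordless SSH login

(7) Synchronize time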

For the steps above, see: https://blog.csdn.net/qq_42666043/article/details/107668439

Install Docker

Run on every node

1. Configure the Docker yum repository, switching to the Aliyun mirror because the overseas sources are too slow

cd /etc/yum.repos.d/ && mkdir bak && mv CentOS-* bak/ && mv epel* bak/

cp bak/CentOS-Base.repo ./

wget -P /etc/yum.repos.d/ https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

2. Install Docker

yum update -y && yum install docker-ce docker-ce-cli containerd.io -y

3. Create the /etc/docker directory

mkdir -p /etc/docker

4. Set the Docker cgroup driver to systemd

cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

5. Create the docker.service.d directory

mkdir -p /etc/systemd/system/docker.service.d

6. Start the Docker service and verify it. docker info shows the installed version and other details

systemctl daemon-reload

systemctl restart docker

systemctl enable docker

Install the kubeadm components

1. Configure the Kubernetes yum repository, using Aliyun's Kubernetes mirror

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

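Install kubelet, kubeadm and kubectl, then enable and start kubelet (setenforce 0 puts SELinux into permissive mode until the next reboot)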

setenforce 0

yum install -y kubelet kubeadm kubectl

systemctl enable kubelet && systemctl start kubelet

2. Set the iptables bridge parameters

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sysctl --system
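The two net.bridge.* keys above only exist while the br_netfilter kernel module is loaded. On a fresh CentOS 7 node, `sysctl --system` may therefore report them as unknown. Below is a minimal sketch that makes the module load at every boot through systemd's modules-load.d directory. The helper name is made up for illustration:

```shell
# write_k8s_modules_conf [DIR]: hypothetical helper that lists br_netfilter in
# DIR/k8s.conf so systemd-modules-load loads it at boot (DIR defaults to /etc/modules-load.d).
write_k8s_modules_conf() {
  local dir="${1:-/etc/modules-load.d}"
  mkdir -p "$dir"
  printf 'br_netfilter\n' > "$dir/k8s.conf"
}

# On each node, as root: load the module now, persist it, then re-apply the sysctls.
#   modprobe br_netfilter && write_k8s_modules_conf && sysctl --system
```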

3. Restart the kubelet service so the settings take effect

systemctl restart kubelet && systemctl enable kubelet

4. If you check the kubelet status at this point, it shows the following errors

Aug 26 17:05:54 master1 systemd[1]: kubelet.service: main process exited, code=exited, status=255/n/a

Aug 26 17:05:54 master1 systemd[1]: Unit kubelet.service entered failed state.

Aug 26 17:05:54 master1 systemd[1]: kubelet.service failed.

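Fix: ignore it and continue. According to the official documentation, the kubelet restarts every few seconds until kubeadm init (or kubeadm join) generates its configuration and certificates. After that it runs normally.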

Import the Kubernetes images

1. Initialize the cluster

kubeadm init --pod-network-cidr 172.16.0.0/16

The following error occurs:

[init] Using Kubernetes version: v1.18.8

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR Swap]: running with swap on is not supported. Please disable swap

Fix (on all three nodes):

(1) Find which package provides swapoff

yum whatprovides "*bin/swapoff"

(2) Install that package, util-linux

yum install util-linux

(3) Disable swap

First find the swap device

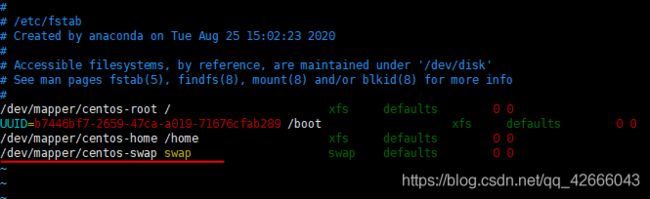

cat /etc/fstab

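Copy the device path /dev/mapper/centos_master1-swap

Disable it

swapoff /dev/mapper/centos_master1-swap

(4) Comment out the swap line in /etc/fstab (the underlined line in the screenshot) so swap stays disabled after a reboot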

vim /etc/fstab
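The swap line can also be commented out with sed instead of vim. This is a minimal sketch, assuming GNU sed and the usual six-column fstab layout, where the third column is the filesystem type. The function name is hypothetical, and the function saves a backup first:

```shell
# disable_swap_in_fstab FILE: hypothetical helper that prefixes '#' to every
# active fstab entry whose filesystem type (third column) is swap.
# A copy of the original is kept as FILE.bak.
disable_swap_in_fstab() {
  local fstab="$1"
  cp "$fstab" "$fstab.bak"
  # field1 (not already commented) + whitespace + field2 + whitespace + "swap" + whitespace
  sed -i -E 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]])/#\1/' "$fstab"
}
```

Usage on each node, as root: `swapoff -a`, which turns off all swap devices at once, followed by `disable_swap_in_fstab /etc/fstab`.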

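(5) Run the initialization again. It starts pulling images, which will probably fail because the images come from Google's registry, unless your machine can reach it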

kubeadm init --pod-network-cidr 172.16.0.0/16

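2. So load the images offline instead

(1) First upload the archive to the root directory

rz

(2) Extract it

unzip k8s-v1.18.3-01.zip  # install unzip first if it is missing

(3) Enter the k8s-v1.18.3 directory. Its image archives are imported into Docker with docker load

cd k8s-v1.18.3

ls -l

(4) Import each image archive with the following load commands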

docker image load -i cni:v3.14.0.tar

docker image load -i coredns:1.6.7.tar

docker image load -i etcd:3.4.3-0.tar

docker image load -i kube-apiserver:v1.18.3.tar

docker image load -i kube-controller-manager:v1.18.3.tar

docker image load -i kube-proxy:v1.18.3.tar

docker image load -i kube-scheduler:v1.18.3.tar

docker image load -i node.tar

docker image load -i node:v3.14.0.tar

docker image load -i pause:3.2.tar

docker image load -i pod2daemon-flexvol:v3.14.0.tar

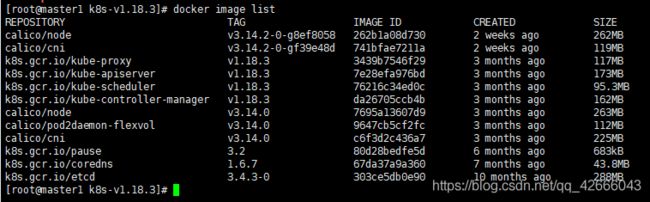

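(5) Check the image list

docker image list

Initialize the cluster with kubeadm

1. Initialize the cluster with kubeadm, setting the initial parameters (on the master node)

- --kubernetes-version sets the version. Always set it. Otherwise kubeadm picks a version on its own (v1.18.8 in the error output above) and tries to pull images that were not imported

- --pod-network-cidr sets the pod network range. Its value depends on the network plugin; this document uses Calico with 172.16.0.0/16

- --cri-socket sets the path of the container runtime's socket file

- on a machine with several network interfaces, --apiserver-advertise-address sets the master's address. By default kubeadm picks the IP used to reach the external network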

kubeadm init --kubernetes-version v1.18.3 --apiserver-advertise-address 192.168.12.181 --apiserver-bind-port 6443 --pod-network-cidr 172.16.0.0/16

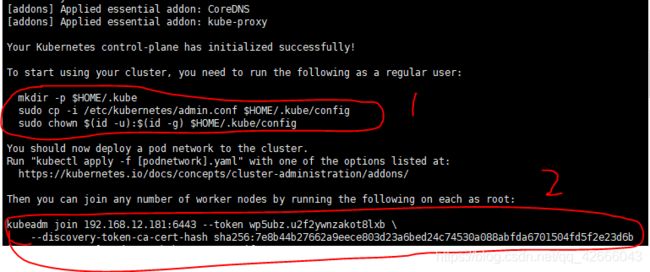

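The output of this command shows the important steps of the kubeadm installation:

- pulling images
- generating certificates
- generating configuration files
- configuring RBAC authorization
- setting up environment variables
- printing instructions for installing the network plugin and for adding nodes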

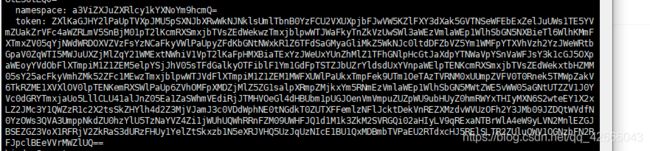

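The screenshot above shows a successful run. The two highlighted parts are important. Save command 2 (the kubeadm join command) to a text file so you can add nodes later.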
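The same init parameters can also be kept in a kubeadm configuration file instead of flags, which is easier to keep under version control. This is a sketch assuming the kubeadm.k8s.io/v1beta2 config API read by kubeadm v1.18. The file name kubeadm-config.yaml is arbitrary:

```shell
# Write the init flags used above into a kubeadm config file (config API v1beta2).
cat <<'EOF' > kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.12.181   # --apiserver-advertise-address
  bindPort: 6443                     # --apiserver-bind-port
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.3           # --kubernetes-version
networking:
  podSubnet: 172.16.0.0/16           # --pod-network-cidr
EOF

# Then, on the master: kubeadm init --config kubeadm-config.yaml
```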

2. Following the prompt, create the kubectl configuration file

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

3. Following the prompt, join the worker nodes to the cluster

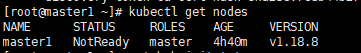

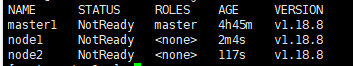

kubectl get nodes  # list the existing nodes

On node1 and node2, run the following command (command 2 from the success output above) to join them to the cluster

kubeadm join 192.168.12.181:6443 --token wp5ubz.u2f2ywnzakot8lxb \
    --discovery-token-ca-cert-hash sha256:7e8b44b27662a9eece803d23a6bed24c74530a088abfda6701504fd5f2e23d6b

No network plugin is installed yet, so all nodes show NotReady.
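The bootstrap token in the join command expires (after 24 hours by default), so save the command promptly. On the master, `kubeadm token create --print-join-command` prints a fresh one. The --discovery-token-ca-cert-hash value can also be recomputed from the cluster CA. The sketch below wraps the openssl pipeline given in the kubeadm documentation. It assumes an RSA CA key (kubeadm's default) at /etc/kubernetes/pki/ca.crt, and the function name is made up:

```shell
# ca_cert_hash [CA_CRT]: print the "sha256:<hex>" value expected by
# kubeadm join --discovery-token-ca-cert-hash, i.e. the SHA-256 of the CA's
# public key in DER (SubjectPublicKeyInfo) form.
ca_cert_hash() {
  local ca_crt="${1:-/etc/kubernetes/pki/ca.crt}" hex
  hex=$(openssl x509 -pubkey -noout -in "$ca_crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')
  echo "sha256:$hex"
}
```

On the master, `ca_cert_hash` with no argument should print the same value as the one in the saved join command.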

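4. Install the network plugin (run on the master only)

Kubernetes supports many kinds of network plugins, as long as they implement CNI (Container Network Interface). Kubernetes requires the pod network to provide:

- connectivity between nodes

- connectivity between pods

- connectivity between nodes and pods

Different CNI plugins support different features. Common open-source CNI plugins supported by Kubernetes include flannel, calico, canal and weave.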

wget https://docs.projectcalico.org/v3.14/manifests/calico.yaml
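Calico's manifest ships with its default pool CIDR (192.168.0.0/16) commented out. According to the Calico documentation for this release line, Calico detects a custom kubeadm --pod-network-cidr automatically, so this step should be optional. If you prefer to pin the pool to 172.16.0.0/16 explicitly before applying, below is a sketch of an in-place edit. It assumes the two commented lines appear as `# - name: CALICO_IPV4POOL_CIDR` and `#   value: "192.168.0.0/16"`; check with `grep -n -A1 CALICO_IPV4POOL_CIDR calico.yaml` first. The function name is made up:

```shell
# set_calico_pool_cidr MANIFEST CIDR: hypothetical helper that uncomments the
# CALICO_IPV4POOL_CIDR env entry and sets its value, keeping the YAML indentation.
set_calico_pool_cidr() {
  local manifest="$1" cidr="$2"
  sed -i -E \
    -e 's|^([[:space:]]*)#[[:space:]]*(- name: CALICO_IPV4POOL_CIDR)|\1\2|' \
    -e 's|^([[:space:]]*)#[[:space:]]*value: "192\.168\.0\.0/16"|\1  value: "'"$cidr"'"|' \
    "$manifest"
}

# Usage: set_calico_pool_cidr calico.yaml 172.16.0.0/16
```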

kubectl apply -f calico.yaml

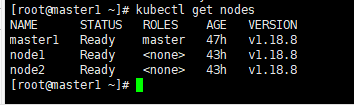

Check the nodes again. Their status has changed from NotReady to Ready (if not, wait a moment and check again)

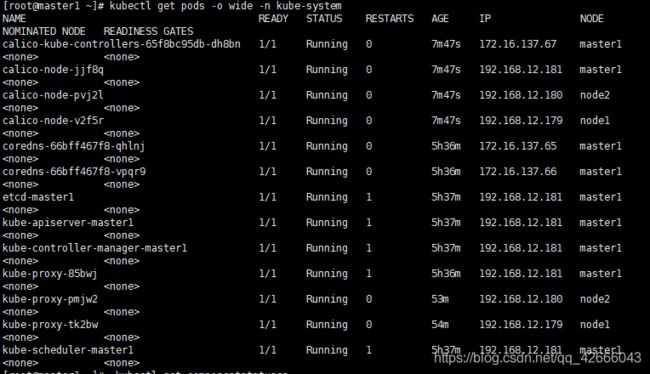

5. View the DaemonSets installed by Calico

kubectl get ds -n kube-system

kubectl get pods -o wide -n kube-system

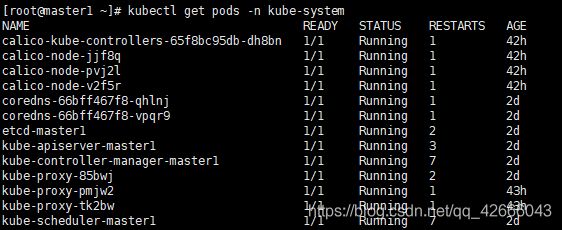

kubectl get pods -n kube-system

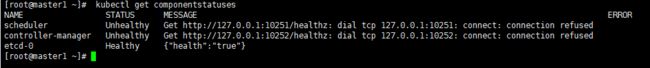

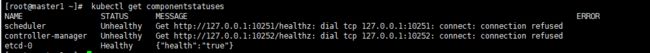

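controller-manager and scheduler show Unhealthy. They are in fact running as static pods in kube-system, so they are not missing. The likely cause is that kubeadm starts both with --port=0. This disables the insecure health-check ports that kubectl get componentstatuses probes.

Verify the Kubernetes components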

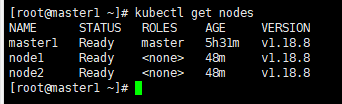

1. Verify node status: list the installed nodes with their status, roles, age and version

kubectl get nodes

2. View the status of the Kubernetes components, including scheduler, controller-manager and etcd

kubectl get componentstatuses

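3. View the pods. The master's components (kube-apiserver, kube-scheduler, kube-controller-manager, etcd) and coredns are deployed in the cluster as pods. kube-proxy on the worker nodes is also deployed as pods. These pods are managed in different ways:

- kube-proxy by a DaemonSet
- coredns by a Deployment
- the control-plane components as static pods, run directly by the kubelet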

kubectl get pods -n kube-system

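If all pods are Running, the cluster has been deployed.

Configure kubectl command completion

1. kubectl accepts both abbreviated and full resource names. For example, kubectl get nodes and kubectl get no do the same thing. To work faster, enable command completion.

Generate the kubectl bash completion script

(1) Install bash-completion

yum install bash-completion

(2) Generate the script, save it, and source it from .bashrc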

kubectl completion bash >/etc/kubernetes/kubectl.sh

echo "source /etc/kubernetes/kubectl.sh" >>/root/.bashrc

cat /root/.bashrc

(3) Load it into the current shell so it takes effect

source /etc/kubernetes/kubectl.sh

- kubectl get componentstatuses (short form: kubectl get cs) gets component status

- kubectl get nodes (short form: kubectl get no) lists the nodes

- kubectl get services (short form: kubectl get svc) lists the services

- kubectl get deployments (short form: kubectl get deploy) lists the Deployments

- kubectl get statefulsets (short form: kubectl get sts) lists the stateful services

Kubernetes high-availability cluster

Official documentation: https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/high-availability/

Kubernetes basics

Official documentation: https://kubernetes.io/zh/docs/tutorials/kubernetes-basics/

1. Clusters and nodes

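Kubernetes is an open-source container management platform. It automates deployment, scheduling, elastic scaling and load balancing of containerized applications. A cluster consists of two roles, master and node:

- The master manages the cluster; it contains kube-apiserver, kube-controller-manager, kube-scheduler and etcd

- Nodes run the containerized applications and consist of a container runtime, kubelet and kube-proxy. The container runtime may be Docker, rkt or containerd. Nodes can be physical or virtual machines.

(1) View the master components

kubectl get componentstatuses

(2) List the nodes

kubectl get nodes

(3) View a node's details

kubectl describe node node1

Output: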

[root@master1 ~]# kubectl describe node node1

Name: node1

Roles:

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=node1

kubernetes.io/os=linux

Annotations: kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

projectcalico.org/IPv4Address: 192.168.12.179/24

projectcalico.org/IPv4IPIPTunnelAddr: 172.16.166.128

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Thu, 27 Aug 2020 19:34:19 +0800

Taints:

Unschedulable: false

Lease:

HolderIdentity: node1

AcquireTime:

RenewTime: Sat, 29 Aug 2020 14:45:20 +0800

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

NetworkUnavailable False Sat, 29 Aug 2020 09:38:39 +0800 Sat, 29 Aug 2020 09:38:39 +0800 CalicoIsUp Calico is running on this node

MemoryPressure False Sat, 29 Aug 2020 14:43:59 +0800 Sat, 29 Aug 2020 09:44:31 +0800 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Sat, 29 Aug 2020 14:43:59 +0800 Sat, 29 Aug 2020 09:44:31 +0800 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Sat, 29 Aug 2020 14:43:59 +0800 Sat, 29 Aug 2020 09:44:31 +0800 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Sat, 29 Aug 2020 14:43:59 +0800 Sat, 29 Aug 2020 09:44:31 +0800 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 192.168.12.179

Hostname: node1

Capacity:

cpu: 4

ephemeral-storage: 38815216Ki

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 3861300Ki

pods: 110

Allocatable:

cpu: 4

ephemeral-storage: 35772103007

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 3758900Ki

pods: 110

System Info:

Machine ID: e950f2be692b4138b1e8aa7017400fbd

System UUID: A70E4D56-75E2-87D6-964F-44AC3F2FF70A

Boot ID: 6d7fc2ad-38f4-4f9e-996d-0552dd92e350

Kernel Version: 3.10.0-1127.19.1.el7.x86_64

OS Image: CentOS Linux 7 (Core)

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://19.3.12

Kubelet Version: v1.18.8

Kube-Proxy Version: v1.18.8

PodCIDR: 172.16.1.0/24

PodCIDRs: 172.16.1.0/24

Non-terminated Pods: (2 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits AGE

--------- ---- ------------ ---------- --------------- ------------- ---

kube-system calico-node-v2f5r 250m (6%) 0 (0%) 0 (0%) 0 (0%) 42h

kube-system kube-proxy-tk2bw 0 (0%) 0 (0%) 0 (0%) 0 (0%) 43h

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 250m (6%) 0 (0%)

memory 0 (0%) 0 (0%)

ephemeral-storage 0 (0%) 0 (0%)

hugepages-1Gi 0 (0%) 0 (0%)

hugepages-2Mi 0 (0%) 0 (0%)

Events:

2、容器与应用

kubernetes是容器编排引擎,其负责容器的调度,管理和容器的运⾏,但kubernetes调度最⼩

单位并⾮是container,⽽是pod,pod中可包含多个container,通常集群中不会直接运⾏

pod,⽽是通过各种⼯作负载的控制器如Deployments,ReplicaSets,DaemonSets的⽅式运

⾏,为啥?因为控制器能够保证pod状态的⼀致性,正如官⽅所描述的⼀样“make sure the

current state match to the desire state”,确保当前状态和预期的⼀致,简单来说就是pod异常

了,控制器会在其他节点重建,确保集群当前运⾏的pod和预期设定的⼀致。

- pod是kubernetes中运⾏的最⼩单元

- pod中包含⼀个容器或者多个容器

- pod不会单独使⽤,需要有⼯作负载来控制,如Deployments,StatefulSets, DaemonSets,CronJobs等

- Container,容器是⼀种轻量化的虚拟化技术,通过将应⽤封装在镜像中,实现便捷部 署,应⽤分发。

- Pod,kubernetes中最⼩的调度单位,封装容器,包含⼀个pause容器和应⽤容器,容器

之间共享相同的命名空间,⽹络,存储,共享进程。 - Deployments,部署组也称应⽤,严格上来说是⽆状态化⼯作负载,另外⼀种由状态化⼯

组负载是StatefulSets,Deployments是⼀种控制器,可以控制⼯作负载的副本数

replicas,通过kube-controller-manager中的Deployments Controller实现副本数状态的控

制。

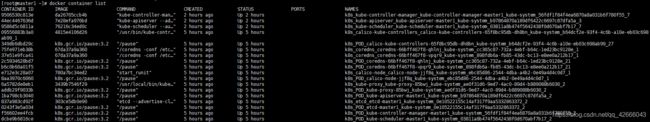

(1)查看所有pod

kubectl get pods -n kube-system

(2)查看所有容器

docker container list

(3)查看某个pod详情

kubectl describe pods calico-kube-controllers-65f8bc95db-dh8bn -n kube-system

结果如下:

[root@master1 ~]# kubectl describe pods calico-kube-controllers-65f8bc95db-dh8bn -n kube-system

Name: calico-kube-controllers-65f8bc95db-dh8bn

Namespace: kube-system

Priority: 2000000000

Priority Class Name: system-cluster-critical

Node: master1/192.168.12.181

Start Time: Thu, 27 Aug 2020 20:21:16 +0800

Labels: k8s-app=calico-kube-controllers

pod-template-hash=65f8bc95db

Annotations: cni.projectcalico.org/podIP: 172.16.137.70/32

cni.projectcalico.org/podIPs: 172.16.137.70/32

scheduler.alpha.kubernetes.io/critical-pod:

Status: Running

IP: 172.16.137.70

IPs:

IP: 172.16.137.70

Controlled By: ReplicaSet/calico-kube-controllers-65f8bc95db

Containers:

calico-kube-controllers:

Container ID: docker://09556883b3a09643a5979561f7dcfda0260bf4f637d754c97ccd2bfd309d0f2f

Image: calico/kube-controllers:v3.14.2

Image ID: docker-pullable://calico/kube-controllers@sha256:840ae8701d93ae236cb436af8bc82c50c1cb302941681a2581d11373c8b50ca7

Port:

Host Port:

State: Running

Started: Sat, 29 Aug 2020 09:39:23 +0800

Last State: Terminated

Reason: Error

Exit Code: 255

Started: Thu, 27 Aug 2020 20:22:06 +0800

Finished: Sat, 29 Aug 2020 09:35:35 +0800

Ready: True

Restart Count: 1

Readiness: exec [/usr/bin/check-status -r] delay=0s timeout=1s period=10s #success=1 #failure=3

Environment:

ENABLED_CONTROLLERS: node

DATASTORE_TYPE: kubernetes

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from calico-kube-controllers-token-kgg2h (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

calico-kube-controllers-token-kgg2h:

Type: Secret (a volume populated by a Secret)

SecretName: calico-kube-controllers-token-kgg2h

Optional: false

QoS Class: BestEffort

Node-Selectors: kubernetes.io/os=linux

Tolerations: CriticalAddonsOnly

node-role.kubernetes.io/master:NoSchedule

node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

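3. Accessing services

In Kubernetes the pod is what actually runs, and pods live on nodes. A node may fail; a controller such as a ReplicaSet then starts a replacement pod on another node, and the new pod gets a new IP.

Moreover, an application is usually deployed with several replicas. For example, a Deployment may run 3 pod replicas, each acting as a back-end real server. How do clients reach all three? Normally a load balancer is placed in front of the real servers, and the Service plays that role for pods. It abstracts a changing set of pods into one service: applications simply access the Service, and it forwards requests to the back-end pods automatically.

Two mechanisms implement the Service forwarding rules:

- iptables, which load-balances through DNAT and related rules
- ipvs, which installs IPVS forwarding rules (the ones ipvsadm displays)

Services come in the types ClusterIP, NodePort, LoadBalancer and ExternalName, according to how they are accessed; the type is set with the type field.

- ClusterIP: access inside the cluster; combined with DNS it provides in-cluster service discovery;

- NodePort: exposes a port on every node, forwarded via NAT, for external access;

- LoadBalancer: the interface for cloud vendors' external access. The cloud provider implements the details; for example, Tencent Cloud integrates it with CLB;

- ExternalName: maps the Service to an external DNS name, returned as a CNAME record, without any proxying. Forwarding external requests into the cluster by domain name is done by Ingress instead. Ingress needs a concrete implementation such as nginx or traefik, or one provided by a cloud vendor.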
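As an illustration of the type field, the sketch below writes a declarative NodePort Service for pods labelled app=app-demo, the application created later in this document. The Service name app-demo-nodeport and the nodePort 30080 are made-up values. nodePort must fall in the cluster's node-port range, 30000-32767 by default:

```shell
# Write a NodePort Service manifest (hypothetical name and port) for pods labelled app=app-demo.
cat <<'EOF' > app-demo-nodeport.yaml
apiVersion: v1
kind: Service
metadata:
  name: app-demo-nodeport
spec:
  type: NodePort            # ClusterIP (default), NodePort, LoadBalancer or ExternalName
  selector:
    app: app-demo           # traffic goes to pods carrying this label
  ports:
  - protocol: TCP
    port: 80                # port on the Service's ClusterIP
    targetPort: 80          # container port
    nodePort: 30080         # opened on every node; omit it to let Kubernetes pick one
EOF
# Apply with: kubectl apply -f app-demo-nodeport.yaml
```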

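Pods change dynamically. Their IPs may change (for example when a node fails), and the replica count changes when the application scales up or down. How does a Service follow these changes? Through labels. The Service's label selector automatically picks out the application's Endpoints and updates them when the pods change. Different applications carry different labels. For more on labels, see https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/

Create an application

Let's deploy an application, i.e. a Deployment. Kubernetes has several workload types, each suited to a different scenario: stateless Deployments, stateful StatefulSets, and DaemonSets for daemons. We start with Deployments; the other workloads work in a similar way. Applications in Kubernetes are generally deployed from YAML files, but writing YAML is verbose for beginners. So we first use the kubectl command line to access the API.

1. Deploy the nginx application (this command creates a single replica; it is scaled out later)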

kubectl create deployment app-demo --image=nginx:1.7.9
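The YAML route mentioned above is the usual way to deploy. Instead of the command above, the same Deployment can be declared in a manifest, which also lets you set the replica count directly. This is a sketch; the file name app-demo.yaml is arbitrary, while the label app=app-demo and container name nginx match what kubectl create deployment generates:

```shell
# Declarative counterpart of "kubectl create deployment app-demo --image=nginx:1.7.9", with 3 replicas.
cat <<'EOF' > app-demo.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-demo
  labels:
    app: app-demo
spec:
  replicas: 3                 # desired number of pods
  selector:
    matchLabels:
      app: app-demo           # must match the pod template labels below
  template:
    metadata:
      labels:
        app: app-demo
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
EOF
# Apply with: kubectl apply -f app-demo.yaml
```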

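2. List the applications. All pods are healthy: READY shows ready/desired replicas, and AVAILABLE shows how many replicas are available

kubectl get deployments

3. View the application's details. The output shows that a Deployment controls its replica count through a ReplicaSet, which in turn controls the pods

kubectl describe deployments app-demo

Output: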

[root@master1 ~]# kubectl describe deployments app-demo

Name: app-demo

Namespace: default

CreationTimestamp: Sat, 29 Aug 2020 15:20:56 +0800

Labels: app=app-demo

Annotations: deployment.kubernetes.io/revision: 1

Selector: app=app-demo

Replicas: 1 desired | 1 updated | 1 total | 1 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=app-demo

Containers:

nginx:

Image: nginx:1.7.9

Port:

Host Port:

Environment:

Mounts:

Volumes:

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets:

NewReplicaSet: app-demo-76f6796dcc (1/1 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 4m32s deployment-controller Scaled up replica set app-demo-76f6796dcc to 1

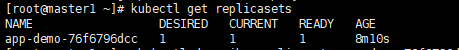

4. Inspect the ReplicaSets

(1) List the ReplicaSets

kubectl get replicasets

(2) View the ReplicaSet's details

kubectl describe replicasets app-demo-76f6796dcc

Output:

[root@master1 ~]# kubectl describe replicasets app-demo-76f6796dcc

Name: app-demo-76f6796dcc

Namespace: default

Selector: app=app-demo,pod-template-hash=76f6796dcc

Labels: app=app-demo

pod-template-hash=76f6796dcc

Annotations: deployment.kubernetes.io/desired-replicas: 1

deployment.kubernetes.io/max-replicas: 2

deployment.kubernetes.io/revision: 1

Controlled By: Deployment/app-demo

Replicas: 1 current / 1 desired

Pods Status: 1 Running / 0 Waiting / 0 Succeeded / 0 Failed

Pod Template:

Labels: app=app-demo

pod-template-hash=76f6796dcc

Containers:

nginx:

Image: nginx:1.7.9

Port:

Host Port:

Environment:

Mounts:

Volumes:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 9m15s replicaset-controller Created pod: app-demo-76f6796dcc-rbsxj

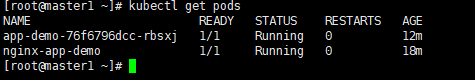

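5. Inspect the pod, the actual carrier of the application. It runs an nginx container and has an IP of its own, through which the application can be accessed directly

(1) List the pods; their names are derived from the ReplicaSet's name

kubectl get pods

(2) View the pod's details

kubectl describe pods app-demo-76f6796dcc-rbsxj

Output: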

[root@master1 ~]# kubectl describe pods app-demo-76f6796dcc-rbsxj

Name: app-demo-76f6796dcc-rbsxj

Namespace: default

Priority: 0

Node: node1/192.168.12.179

Start Time: Sat, 29 Aug 2020 15:20:55 +0800

Labels: app=app-demo

pod-template-hash=76f6796dcc

Annotations: cni.projectcalico.org/podIP: 172.16.166.129/32

cni.projectcalico.org/podIPs: 172.16.166.129/32

Status: Running

IP: 172.16.166.129

IPs:

IP: 172.16.166.129

Controlled By: ReplicaSet/app-demo-76f6796dcc

Containers:

nginx:

Container ID: docker://5065f85f22ea635a85ec09b09b2fbdc9361d5dc9e7b95443ba1cd2ed1c106259

Image: nginx:1.7.9

Image ID: docker-pullable://nginx@sha256:e3456c851a152494c3e4ff5fcc26f240206abac0c9d794affb40e0714846c451

Port:

Host Port:

State: Running

Started: Sat, 29 Aug 2020 15:25:16 +0800

Ready: True

Restart Count: 0

Environment:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-zwb9j (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-zwb9j:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-zwb9j

Optional: false

QoS Class: BestEffort

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled default-scheduler Successfully assigned default/app-demo-76f6796dcc-rbsxj to node1

Normal Pulling 13m kubelet, node1 Pulling image "nginx:1.7.9"

Normal Pulled 9m36s kubelet, node1 Successfully pulled image "nginx:1.7.9"

Normal Created 9m35s kubelet, node1 Created container nginx

Normal Started 9m35s kubelet, node1 Started container nginx

访问应⽤

kubernetes为每个pod都分配了⼀个ip地址,可通过该地址直接访问应⽤,相当于访问RS,但

⼀个应⽤是⼀个整体,由多个副本数组成,需要依赖于service来实现应⽤的负载均衡,

service我们探讨ClusterIP和NodePort的访问⽅式。

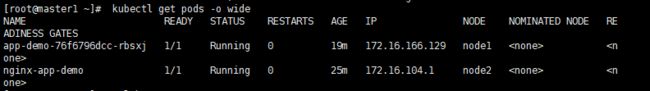

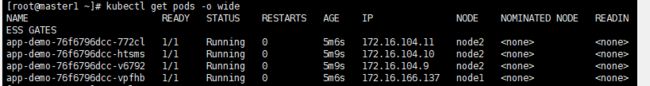

1、查看pod列表

kubectl get pods -o wide

curl http://172.16.166.129

结果如图:

[root@master1 ~]# curl http://172.16.166.129

Welcome to nginx!

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

nginx.org.

Commercial support is available at

nginx.com.

Thank you for using nginx.

2. View the pod's access log, or open a shell inside it

kubectl logs app-demo-76f6796dcc-rbsxj

kubectl exec -it app-demo-76f6796dcc-rbsxj -- /bin/bash

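Scale the application

When the load on an application is too high for it to keep up with requests, we usually add more real servers (RS). In Kubernetes this means increasing the replica count, which is very convenient and gives quick elastic scaling. Kubernetes offers two kinds of scaling:

1. Manual scale up and scale down.
2. Automatic scaling with HorizontalPodAutoscalers, based on CPU utilization. This depends on a monitoring component such as metrics server, which is not set up yet and will be covered later.

Here we change the application's replica count manually.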
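For reference, the automatic option is declared with a HorizontalPodAutoscaler. This is a sketch using the autoscaling/v1 API. It assumes metrics-server is installed and that the nginx container declares a CPU request. app-demo as created above declares none, so the HPA cannot compute utilization until one is added. The replica bounds and the 80% target are example values:

```shell
# Write an HPA manifest for the app-demo Deployment (example bounds and target).
cat <<'EOF' > app-demo-hpa.yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: app-demo
spec:
  scaleTargetRef:                      # the workload whose replica count is adjusted
    apiVersion: apps/v1
    kind: Deployment
    name: app-demo
  minReplicas: 1
  maxReplicas: 4
  targetCPUUtilizationPercentage: 80   # average CPU usage as a share of the pods' requests
EOF
# Apply with: kubectl apply -f app-demo-hpa.yaml
# Roughly equivalent one-liner: kubectl autoscale deployment app-demo --min=1 --max=4 --cpu-percent=80
```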

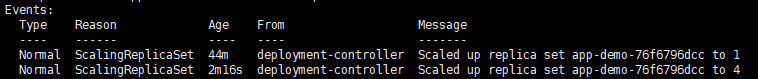

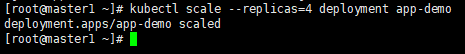

1. Scale the replica count manually

(1) Scale out to 4 replicas

kubectl scale --replicas=4 deployment app-demo

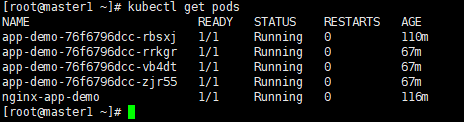

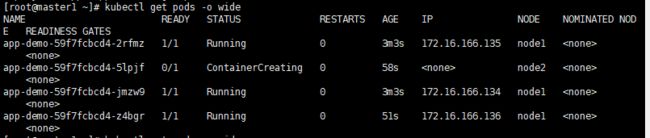

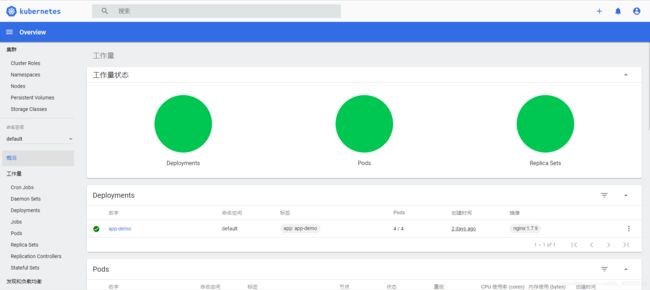

(2)查看副本扩展情况,deployments⾃动部署⼀个应⽤

kubectl get deployments.apps

![]()

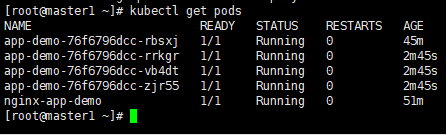

(3)查看deployment的详情,可以看到副本已扩充为4个

kubectl describe deployments.apps

kubectl get pods

暴露服务,内部访问

通过每一个pod的唯一标签来访问它

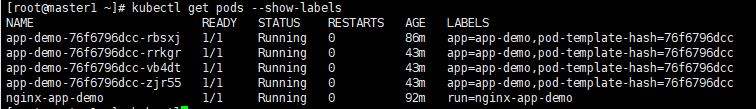

1、查看每个pod的标签

kubectl get pods --show-labels

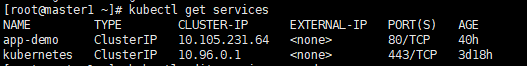

2. Expose the service. --port is the port the Service listens on, --target-port is the container's port, and --type sets the Service type

kubectl expose deployment app-demo --port=80 --protocol=TCP --target-port=80 --type ClusterIP

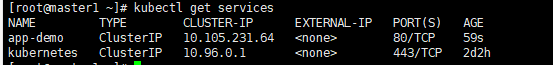

kubectl get services

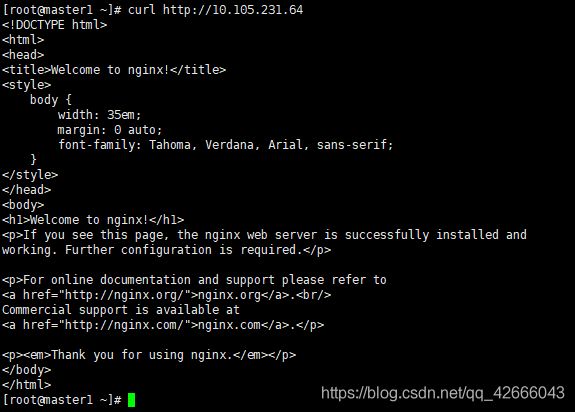

3. View the Service's details. Its Endpoints list the IPs of the 4 replicas

kubectl describe services app-demo

curl http://10.105.231.64

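Service forwarding

A Service is itself a load balancer. In kube-proxy's default iptables mode, each new connection goes to a randomly chosen backend pod, so traffic spreads roughly evenly across the replicas. ipvs mode uses round-robin by default.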

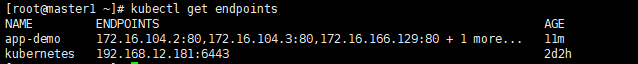

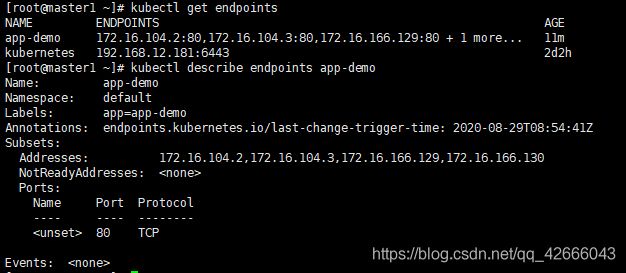

1. View the Endpoints

kubectl get endpoints

kubectl describe endpoints app-demo

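2. To make the load balancing visible, first give each of the 4 replicas different page content

(1) Get the replica names, then enter each replica and edit its index page

kubectl get pods

kubectl exec -it app-demo-76f6796dcc-rbsxj -- /bin/bash

cd /usr/share/nginx/html

ls

echo "web1" >/usr/share/nginx/html/index.html  # in the other replicas, write web2, web3 and web4 instead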

exit

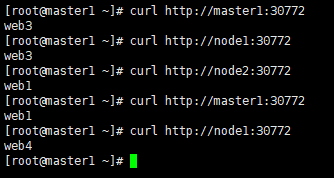

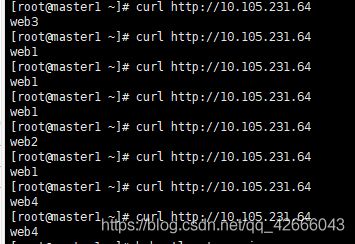

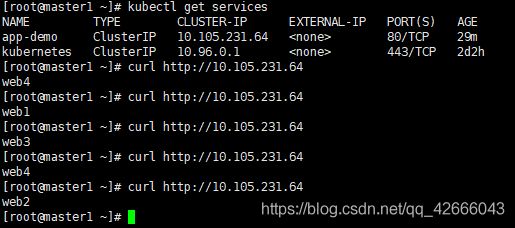

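3. The load-balancing results:

(1) Accessing each replica's IP directly

(2) Accessing the VIP (ClusterIP) several times

Expose for external access

Through its ClusterIP, a Service only serves clients inside the cluster; outside clients cannot reach the application directly. External access is possible through NodePort, LoadBalancer or Ingress. LoadBalancer has to be implemented by a cloud provider, and Ingress requires installing a separate Ingress controller. For everyday testing NodePort is enough: it exposes a port on every node to the external network.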

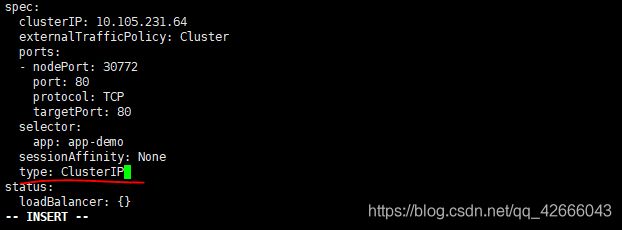

1. Change the type from ClusterIP to NodePort (or recreate the Service with type NodePort)

(1) Check the Service type

kubectl get services

(2) Change the type with kubectl edit

kubectl edit services app-demo

Scroll to the end and change type: ClusterIP to type: NodePort

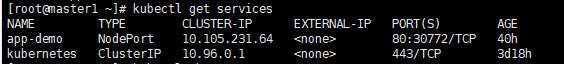

(3) List the services again. The type is now NodePort, and the ClusterIP address is kept

kubectl get services

In the output, app-demo has gained node port 30772

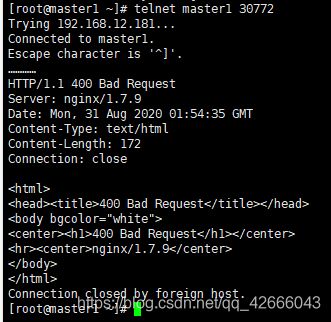

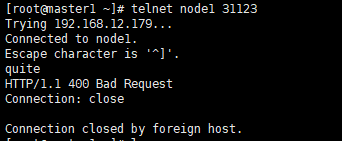

(4)远程访问(Telnet)测试

Telnet master1 30772

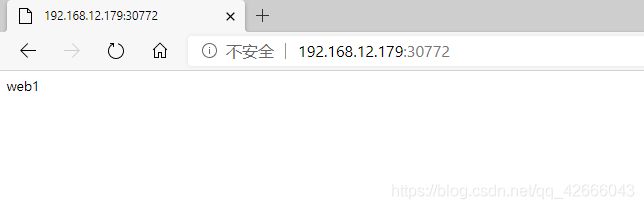

(5)浏览器测试,可访问任一节点的30772端口

2、通过NodePort访问应⽤程序,每个node的地址相当于vip,可以实现相同的负载均衡效果,

同时CluserIP功能依可⽤

kubectl get pods -o wide

3、NodePort转发原理,每个node上通过kube-proxy监听NodePort的端⼝,由后端的iptables

实现端⼝的转发

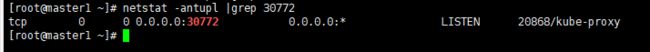

(1) The NodePort listening port

netstat -antupl |grep 30772

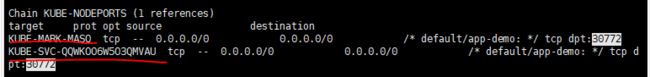

(2) View the nat table rules. Two rules are relevant:

- KUBE-MARK-MASQ, for the outbound direction
- KUBE-SVC-QQWKOO6W5O3QMVAU, for the inbound direction

iptables -t nat -L -n |less

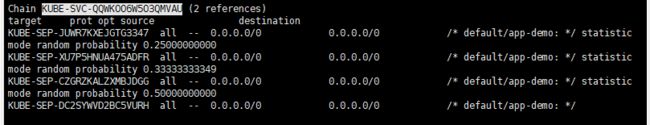

(3) View the inbound chain KUBE-SVC-QQWKOO6W5O3QMVAU

iptables -t nat -L KUBE-SVC-QQWKOO6W5O3QMVAU -n
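The rules in a KUBE-SVC-* chain pick an endpoint with a random statistic match. Each rule sees only the traffic the earlier rules let through. The small sketch below reproduces that arithmetic; the function name is made up. With N endpoints, rule i matches with probability 1/(N-i+1), so every endpoint ends up with 1/N of the new connections:

```shell
# kube_svc_probabilities N: hypothetical helper printing the per-rule match
# probability kube-proxy's iptables mode uses in a KUBE-SVC-* chain with N endpoints.
kube_svc_probabilities() {
  local n="$1" i=1
  while [ "$i" -le "$n" ]; do
    # Rule i only receives traffic not taken by rules 1..i-1, so matching
    # 1/(endpoints still left) keeps each endpoint's overall share at 1/N.
    awk -v n="$n" -v i="$i" 'BEGIN { printf "rule %d: %.5f\n", i, 1 / (n - i + 1) }'
    i=$((i + 1))
  done
}
```

For the 4 app-demo replicas, `kube_svc_probabilities 4` prints 0.25000, 0.33333, 0.50000 and 1.00000. These should correspond to the probabilities in the chain listing above; the last rule carries no probability match at all.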

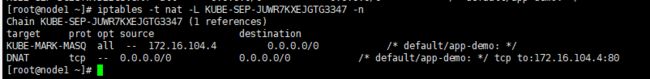

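(4) Follow the forwarding chain further into a KUBE-SEP chain. It holds the DNAT rule and the KUBE-MARK-MASQ rule for return traffic

iptables -t nat -L KUBE-SEP-JUWR7KXEJGTG3347 -n

Service session affinity

1. Edit the Service YAML and add the sessionAffinity and sessionAffinityConfig fields. A given client's requests then keep going to the same pod for a set time

vim service-demo.yaml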

apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: service-demo
  ports:
  - name: http-80-port
    protocol: TCP
    port: 80
    targetPort: 80
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 60   # how long the affinity lasts, in seconds

Service discovery

1. Access through DNS

(1) Run a one-off test pod named test; --rm deletes the pod when you exit the shell

kubectl run test --rm -it --image=centos:latest -- /bin/bash

(2) Install DNS tools inside the test pod

yum install -y bind-utils

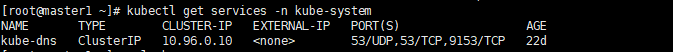

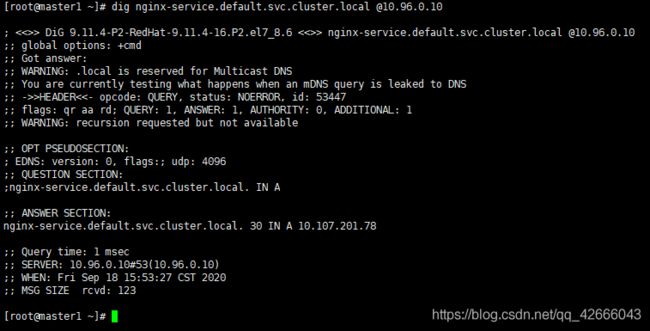

On the master, look up the ClusterIP of the kube-dns Service

kubectl get services -n kube-system

In the test pod, use dig to resolve the domain name of the nginx-service Service

dig nginx-service.default.svc.cluster.local @10.96.0.10
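The name queried above follows the pattern <service>.<namespace>.svc.<cluster-domain>, where cluster.local is kubeadm's default cluster domain. Inside the same namespace, the short name nginx-service also resolves through the pod's DNS search list. A trivial helper (hypothetical) that spells out the pattern:

```shell
# svc_fqdn SERVICE [NAMESPACE] [CLUSTER_DOMAIN]: build a Service's in-cluster DNS name.
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "${2:-default}" "${3:-cluster.local}"
}

# Example, inside the test pod: dig "$(svc_fqdn nginx-service)" @10.96.0.10
```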

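Install the NGINX Ingress Controller

Official documentation: https://docs.nginx.com/nginx-ingress-controller/installation/

1. Install git

yum install git

2. Clone the kubernetes-ingress repository

GitHub repository: https://github.com/nginxinc/kubernetes-ingress

git clone https://github.com/nginxinc/kubernetes-ingress.git

3. Install following the official documentation step by step

Install with manifests; official installation guide: https://docs.nginx.com/nginx-ingress-controller/installation/installation-with-manifests/

4. Once the Ingress controller is installed, Ingress resources can be defined for layer-7 forwarding. Ingress is used in roughly three ways:

1. forwarding by virtual host
2. forwarding by virtual host and URI path
3. TLS-encrypted forwarding

(1) Preparation: create an nginx Deployment with 2 replicas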

kubectl create deploy ingress-demo --image=nginx:1.7.9

kubectl scale deploy ingress-demo --replicas=2

kubectl get deployments

(2) Expose the service:

kubectl expose deploy ingress-demo --port=80

kubectl get services

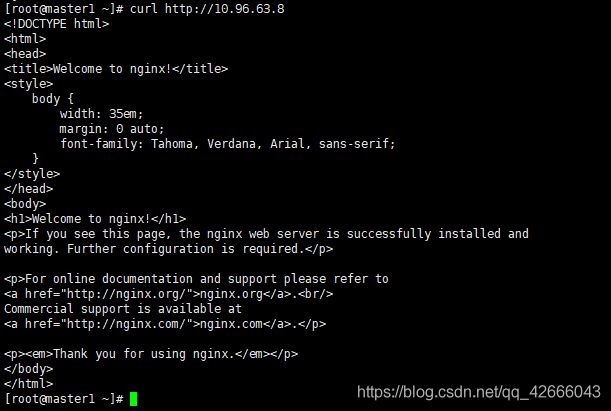

(3) It is reachable from inside the cluster:

curl http://10.96.63.8

Write the YAML file ingress-demo.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-demo
spec:
  rules:
  - host: www.ingress-demo.cn
    http:
      paths:
      - path: /
        backend:
          serviceName: ingress-demo
          servicePort: 80
  backend:
    serviceName: ingress-demo
    servicePort: 80

Apply the file

kubectl apply -f ingress-demo.yaml

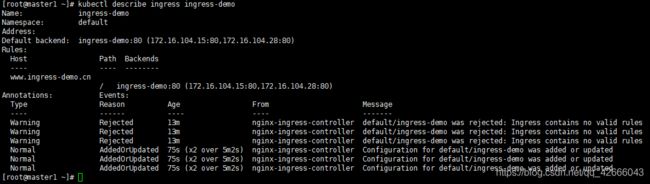

View the Ingress

kubectl get ingress

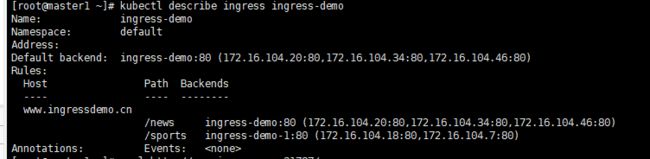

kubectl describe ingress ingress-demo

echo "192.168.12.180 www.ingress-demo.cn" >>/etc/hosts

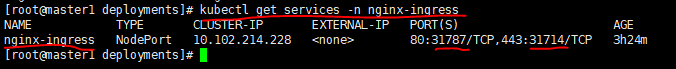

Test using the NodePort of the ingress controller's Service (31787 here)

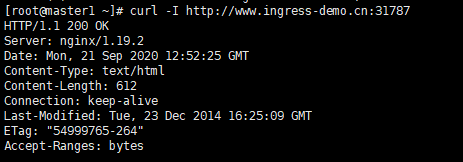

curl -I http://www.ingress-demo.cn:31787

curl http://www.ingress-demo.cn:31787

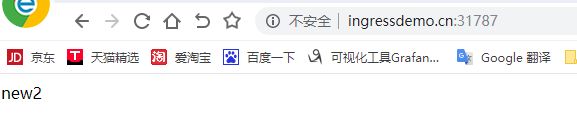

Accessing it from a browser outside the cluster did not work at first.

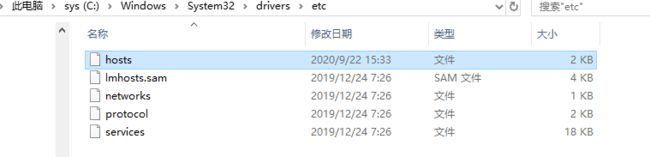

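Solved!

Edit the hosts file: on Windows, open C:\Windows\System32\drivers\etc\hosts and add a host entry. I added my VM's IP and the site's domain name.

After that, the site opens in the browser.

Ingress path-based forwarding

Ingress supports URI-based forwarding as well as URL rewriting

1. An application service (ingress-demo) already exists; now create a second one to demonstrate path-based forwarding.

(1) Preparation: create another nginx Deployment with 2 replicas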

kubectl create deploy ingress-demo-1 --image=nginx:1.7.9

kubectl scale deploy ingress-demo-1 --replicas=2

kubectl get deployments

(2) Expose the service:

kubectl expose deploy ingress-demo-1 --port=80

kubectl get services

2. Write the YAML file so that one domain forwards requests to two backend Services

vim ingress-url-demo.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-demo
spec:
  rules:
  - host: www.ingressdemo.cn
    http:
      paths:
      - path: /news
        backend:
          serviceName: ingress-demo
          servicePort: 80
      - path: /sports
        backend:
          serviceName: ingress-demo-1
          servicePort: 80
  backend:
    serviceName: ingress-demo
    servicePort: 80

3. Apply the YAML file

kubectl apply -f ingress-url-demo.yaml

kubectl get ingresses

![]()

4、查看该ingress详情,可以看到该域名已经配置了两条路由转发

kubectl describe ingresses ingress-demo

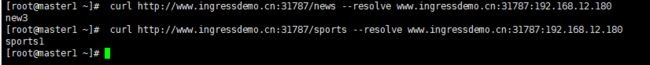

5、进行curl测试,测试结果为new3和sports1是因为我对pods中运行的nginx的HTML内容进行了修改。

5、进行curl测试,测试结果为new3和sports1是因为我对pods中运行的nginx的HTML内容进行了修改。

curl http://www.ingressdemo.cn:31787/news --resolve www.ingressdemo.cn:31787:192.168.12.180

curl http://www.ingressdemo.cn:31787/sports --resolve www.ingressdemo.cn:31787:192.168.12.180
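The third mode listed earlier, TLS-encrypted forwarding, is not demonstrated above. The sketch below makes several assumptions:

- A self-signed certificate is acceptable for testing.
- The Secret name ingress-demo-tls and the Ingress name ingress-tls-demo are made up.
- The host tls.ingress-demo.cn is hypothetical. It is kept separate to avoid colliding with the existing rule for www.ingress-demo.cn, and it needs its own hosts-file entry.
- The networking.k8s.io/v1beta1 API from the first example is used.

```shell
# 1) Create a self-signed cert for the hypothetical host, then store it as a TLS Secret (on the master):
#    openssl req -x509 -nodes -newkey rsa:2048 -days 365 \
#      -keyout tls.key -out tls.crt -subj "/CN=tls.ingress-demo.cn"
#    kubectl create secret tls ingress-demo-tls --cert=tls.crt --key=tls.key
# 2) Write an Ingress that terminates TLS for that host and forwards plain HTTP to ingress-demo:
cat <<'EOF' > ingress-tls-demo.yaml
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-tls-demo
spec:
  tls:
  - hosts:
    - tls.ingress-demo.cn
    secretName: ingress-demo-tls     # Secret holding tls.crt / tls.key
  rules:
  - host: tls.ingress-demo.cn
    http:
      paths:
      - path: /
        backend:
          serviceName: ingress-demo
          servicePort: 80
EOF
# 3) kubectl apply -f ingress-tls-demo.yaml, then test against the HTTPS node port
#    of the ingress controller's Service.
```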

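Rolling updates and rollback

To update an application in Kubernetes, package the new version into an image and then switch the application to that image. The default Deployment update strategy is RollingUpdate. It creates new pods to replace the old ones gradually, keeping at most 25% of the desired pods unavailable and running at most 25% extra pods at any moment (maxUnavailable and maxSurge). This keeps the application available during the upgrade. If an upgrade fails, the application can be rolled back to an earlier state through the ReplicaSets kept for previous revisions.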
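When maxSurge and maxUnavailable are given as percentages, Kubernetes converts them to pod counts, rounding maxSurge up and maxUnavailable down. A small sketch of that arithmetic, with a made-up function name:

```shell
# rolling_update_bounds REPLICAS [SURGE_PCT] [UNAVAILABLE_PCT]: hypothetical helper showing
# how percentage maxSurge (rounded up) and maxUnavailable (rounded down) become pod counts.
rolling_update_bounds() {
  local replicas="$1" surge_pct="${2:-25}" unavail_pct="${3:-25}"
  local max_surge=$(( (replicas * surge_pct + 99) / 100 ))   # ceil(replicas * pct / 100)
  local max_unavail=$(( replicas * unavail_pct / 100 ))      # floor(replicas * pct / 100)
  echo "maxSurge=$max_surge maxUnavailable=$max_unavail" \
       "maxPods=$(( replicas + max_surge )) minAvailable=$(( replicas - max_unavail ))"
}
```

For a single-replica app-demo, this gives maxSurge=1 and maxUnavailable=0, i.e. at most 2 pods during the update. That agrees with the deployment.kubernetes.io/max-replicas: 2 annotation on its ReplicaSet shown earlier. With 4 replicas, at least 3 pods stay available and at most 5 exist at once.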

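1. Rolling update

Official documentation: https://kubernetes.io/zh/docs/tutorials/kubernetes-basics/update/update-intro/

(1) Download the latest nginx package from the official site and upload it to the root directory (on every node)

nginx download page: http://nginx.org/en/download.html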

rz

(2) Change the nginx image to upgrade the application to a newer version

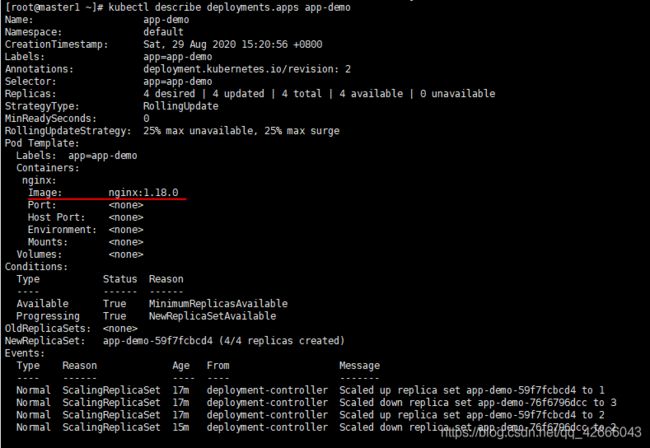

Inspect the current image. The container is named nginx and the current version is 1.7.9

kubectl describe deployments.apps app-demo

kubectl set image deployments.apps app-demo nginx=nginx:1.18.0

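- app-demo: the Deployment name

- nginx=nginx:1.18.0: the first nginx is the container name, and the part after = is the new image. The tag after the colon (1.18.0) is the version to upgrade to

(3) In a second terminal, watch the upgrade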

kubectl get pods -w

kubectl get pods -o wide

kubectl describe deployments.apps app-demo

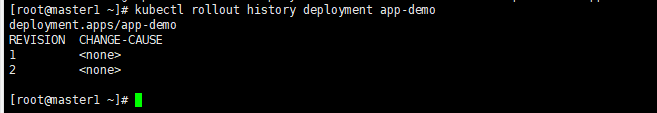

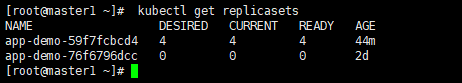

(4) View the rollout history. There are two revisions, each backed by its own ReplicaSet

kubectl rollout history deployment app-demo

kubectl get replicasets

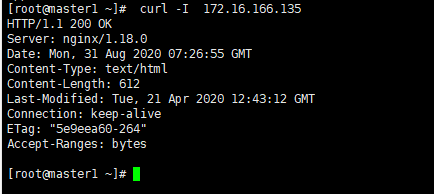

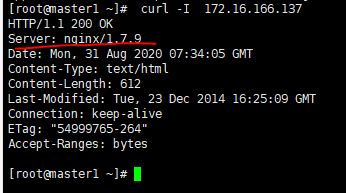

(5) Test the upgraded application. nginx now reports version nginx/1.18.0

kubectl get pods -o wide

curl -I 172.16.166.135

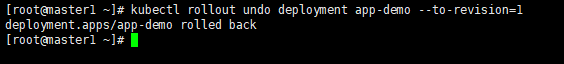

2. Roll back to revision 1

kubectl rollout undo deployment app-demo --to-revision=1

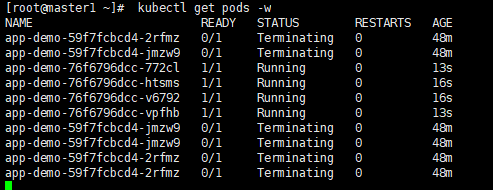

kubectl get pods -w

kubectl get pods -o wide

curl -I 172.16.166.137

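kubectl command line reference

Official documentation: https://kubernetes.io/docs/reference/kubectl/overview/

1. View the kubeconfig file, which records the API version, clusters, users and contexts

cat /root/.kube/config

2. Show kubectl's help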

kubectl -h

dashboard安装和访问

官网参考文档:https://kubernetes.io/zh/docs/tasks/access-application-cluster/web-ui-dashboard/

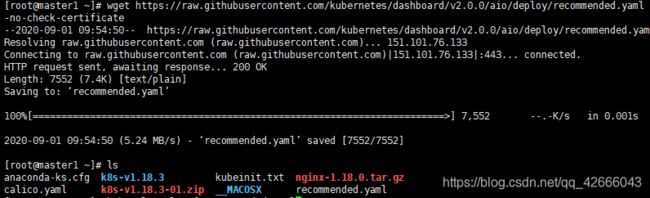

1、下载yaml文件

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml

可能会出错,解决方案参考文档k8s部署过程中出现的错误记录

结果如图:

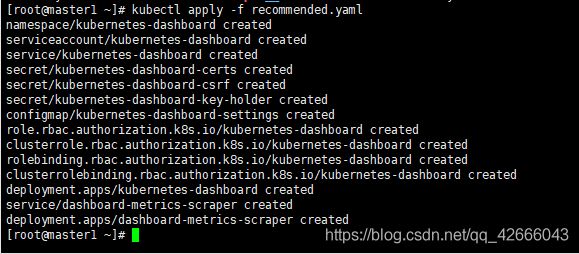

2、通过以下命令部署,会创建一系列配置文件

kubectl apply -f recommended.yaml

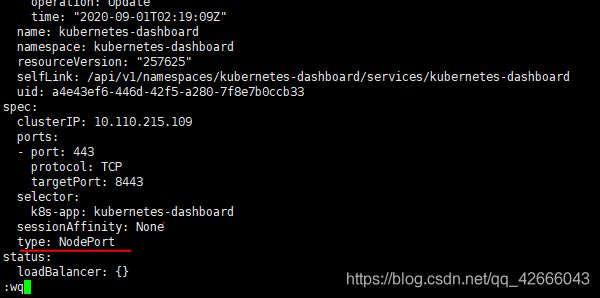

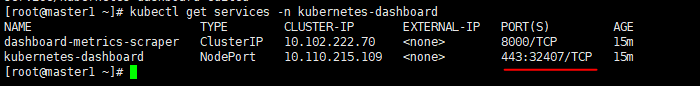

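3. The Dashboard Service is of type ClusterIP, which only allows in-cluster access. Change it to NodePort to allow external access

(1) List the services

kubectl get services -n kubernetes-dashboard

(2) Edit the Dashboard Service and change its type, or edit recommended.yaml and apply it again

kubectl edit services kubernetes-dashboard -n kubernetes-dashboard

(3) Open the Dashboard over HTTPS on the assigned node port (32407 here): https://192.168.12.181:32407

Logging in requires a user; official documentation: https://github.com/kubernetes/dashboard/blob/master/docs/user/access-control/creating-sample-user.md

Create a YAML file

vim dashboard-rbac.yaml

with the following content: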

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

Apply the YAML file

kubectl apply -f dashboard-rbac.yaml

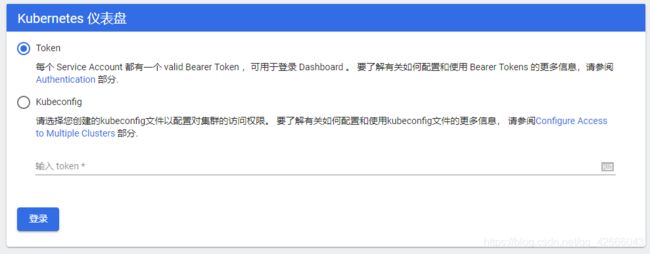

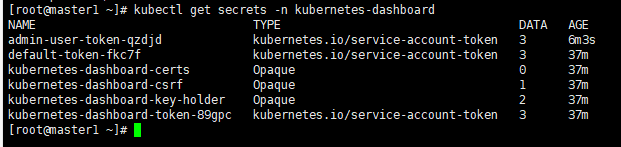

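List the secrets, including tokens, in the kubernetes-dashboard namespace

kubectl get secrets -n kubernetes-dashboard

View the admin-user-token-qzdjd secret. Its token field is stored base64-encoded

kubectl get secrets -n kubernetes-dashboard admin-user-token-qzdjd -o yaml

echo '<token from the output above>' | base64 -d

Generating YAML resource templates

(1) Generate a YAML resource template

--dry-run=server|client|none: with client, kubectl only prints the object and creates nothing. A bare --dry-run is treated as client and is deprecated in v1.18

-o yaml prints the output as YAML

kubectl create deployment test-demo1 --image=nginx:1.7.9 --dry-run=client -o yaml >test-demo1.yaml  # write the template to test-demo1.yaml

Edit the YAML file to match the resource configuration you need

vim test-demo1.yaml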

vim test-demo1.yaml

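Microservice deployment

Reference: Microservice deployment: blue-green, rolling, gray release and canary release

Deploying a local Node.js project with Docker

1. On the node: set up the Node.js runtime by pulling the node image

docker pull node:12.18.3

2. On the node: package the project and upload it to the node

rz

3. On the node: write the Dockerfile. The file name must start with a capital D, and the file goes in the same directory as the project

Dockerfile contents:

Note: the following must all be the same port:

- ENV PORT
- the EXPOSE port
- the port the project listens on
- the port exposed as a service in step 10 below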

FROM node:12.18.3

MAINTAINER yzy

RUN mkdir -p /var/publish/cats

ADD ./cats /var/publish/cats

WORKDIR /var/publish/cats

RUN npm install

ENV HOST 0.0.0.0

ENV PORT 8888

EXPOSE 8888

CMD ["node","/var/publish/cats/dist/main.js"]

4. On the node: build the image. Do not leave out the final dot, which is the build context. Run this command in the directory containing the Dockerfile

docker build -t cats:v1.0 .

5. On the master: deploy the application

kubectl create deploy cats-demo --image=cats:v1.0
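cats:v1.0 was built locally on one node and never pushed to a registry, so the pod can only start on that node. If it is scheduled elsewhere, it fails with an image pull error. Pushing the image to a registry that every node can reach is one fix. Another, sketched below, pins the Deployment to the build node and forbids pulling. The node name node1 is an assumption; use the node where docker build ran:

```shell
# Write a Deployment for the locally built image, pinned to the node that holds it.
cat <<'EOF' > cats-demo.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cats-demo
  labels:
    app: cats-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cats-demo
  template:
    metadata:
      labels:
        app: cats-demo
    spec:
      nodeSelector:
        kubernetes.io/hostname: node1   # assumption: the node where cats:v1.0 was built
      containers:
      - name: cats
        image: cats:v1.0
        imagePullPolicy: Never          # use the local image only; never contact a registry
        ports:
        - containerPort: 8888           # must match ENV PORT / EXPOSE in the Dockerfile
EOF
# Apply with: kubectl apply -f cats-demo.yaml
```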

6、master节点操作,查看deploy

kubectl get deploy

7、master节点操作,查看pod

kubectl get pods -o wide

8、node节点操作,在node节点上查看容器

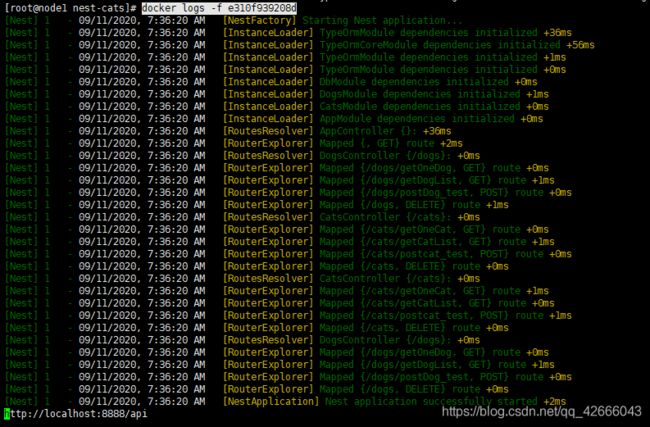

docker ps

9、node节点操作,查看该应用的容器运行日志

docker logs -f e310f939208d

10、master节点操作,发布服务

kubectl expose deployment 应用名 --port=8888 --protocol=TCP --target-port=8888 --type NodePort

11、任意节点操作,查看应用

curl 192.168.12.180:8888

12、打开浏览器,访问应用

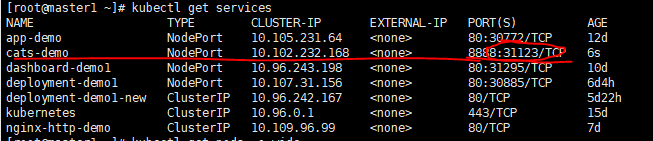

先查看services的对外端口

kubectl get services

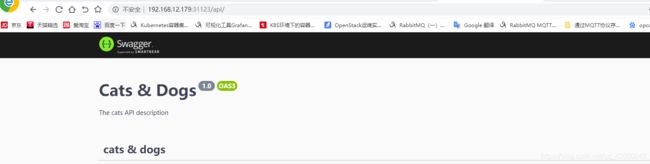

http://192.168.12.179:31123/api/

Docker usage

docker image ls  # list images

docker image build -t ssr-project .  # build an image

docker rmi [imageID]  # delete the given image

docker run -it --rm -p 3000:3000 ssr-project  # create a container from the image and run it; --rm deletes the container once it stops

docker ps  # list running containers

docker ps -a  # list all containers, including stopped ones

docker container ls  # list running containers

docker container ls --all  # list all containers, including stopped ones

docker container rm [containerID]  # delete the given container

docker container kill [containerID]  # stop the container by sending SIGKILL to its main process

docker container stop [containerID]  # stop the container by sending SIGTERM to its main process, then SIGKILL after a grace period

docker container start [containerID]  # start a container that was created earlier and has stopped

docker container logs [containerID]  # show the container's output, i.e. the standard output of its shell

docker container exec -it [containerID] /bin/bash  # enter a running container and run commands in its shell

docker container cp [containerID]:[/path/to/file] .  # copy a file from a running container to the host

Reference: common Docker commands