Kubernetes监控篇

目录

1、K8S监控指标

1.1 Kubernetes本身监控

1.2 Pod监控

1.3 Prometheus监控K8S架构

2、部署Prometheus+Grafana

2.1 部署Prometheus

2.1.1 创建rbac

2.1.2 创建配置项

2.1.3 部署prometheus

2.1.4 配置service

2.1.5 访问prometheus

2.2 部署Grafana

3、参考网站

1、K8S监控指标

1.1 Kubernetes本身监控

• Node资源利用率 :一般生产环境几十个node,几百个node去监控

• Node数量 :一般能监控到node,就能监控到它的数量了,因为它是一个实例,一个node能跑多少个项目,也是需要去评估的,整体资源率在一个什么样的状态,什么样的值,所以需要根据项目,跑的资源利用率,还有值做一个评估的,比如再跑一个项目,需要多少资源。

• Pods数量(Node):其实也是一样的,每个node上都跑多少pod,不过默认一个node上能跑110个pod,但大多数情况下不可能跑这么多,比如一个128G的内存,32核cpu,一个java的项目,一个分配2G,也就是能跑50-60个,一般机器,pod也就跑几十个,很少很少超过100个。

• 资源对象状态 :比如pod,service,deployment,job这些资源状态,做一个统计。

1.2 Pod监控

• Pod数量(项目):你的项目跑了多少个pod的数量,大概的利益率是多少,好评估一下这个项目跑了多少个资源占有多少资源,每个pod占了多少资源。

• 容器资源利用率 :每个容器消耗了多少资源,用了多少CPU,用了多少内存

• 应用程序:这个就是偏应用程序本身的指标了,这个一般在我们运维很难拿到的,所以在监控之前呢,需要开发去给你暴露出来,这里有很多客户端的集成,客户端库就是支持很多语言的,需要让开发做一些开发量将它集成进去,暴露这个应用程序的想知道的指标,然后纳入监控,如果开发部配合,基本运维很难做到这一块,除非自己写一个客户端程序,通过shell/python能不能从外部获取内部的工作情况,如果这个程序提供API的话,这个很容易做到。

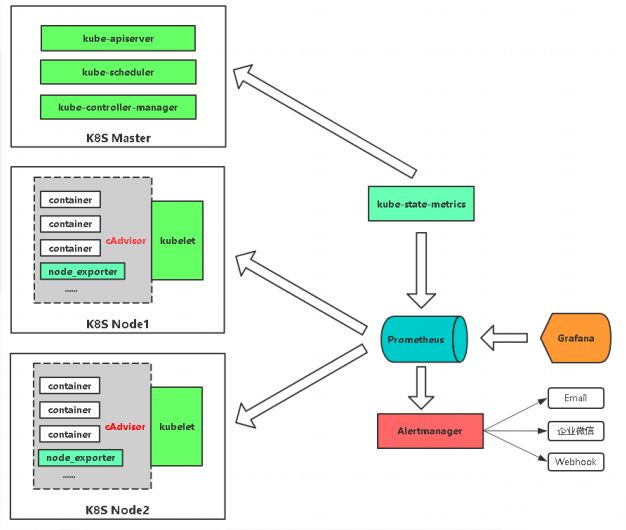

1.3 Prometheus监控K8S架构

如果想监控node的资源,就可以放一个node_exporter,这是监控node资源的,node_exporter是Linux上的采集器,你放上去你就能采集到当前节点的CPU、内存、网络IO,等待都可以采集的。

如果想监控容器,k8s内部提供cAdvisor采集器,pod呀,容器都可以采集到这些指标,都是内置的,不需要单独部署,只知道怎么去访问这个Cadvisor就可以了。

如果想监控k8s资源对象,会部署一个kube-state-metrics这个服务,它会定时的API中获取到这些指标,帮你存取到Prometheus里,要是告警的话,通过Alertmanager发送给一些接收方,通过Grafana可视化展示。

| 监控指标 |

具体实现 |

举例 |

| pod性能 |

cAdvisor |

容器CPU,内存利用率 |

| node性能 |

node-exporter |

节点CPU,内存利用率 |

| k8s资源对象 |

kube-state-metrics |

pod/deployment/service |

2、部署Prometheus+Grafana

2.1 部署Prometheus

2.1.1 创建rbac

现在先来创建rbac,因为部署它的主服务主进程要引用这几个服务。因为prometheus来连接你的API,从API中获取很多的指标,并且设置了绑定集群角色的权限,只能查看,不能修改。

cat prometheus-ra.yamlapiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: prometheus

rules:

- apiGroups: [""]

resources:

- nodes

- nodes/proxy

- services

- endpoints

- pods

verbs: ["get", "list", "watch"]

- apiGroups:

- extensions

resources:

- ingresses

verbs: ["get", "list", "watch"]

- nonResourceURLs: ["/metrics"]

verbs: ["get"]

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: prometheus

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: prometheus

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: prometheus

subjects:

- kind: ServiceAccount

name: prometheus

namespace: kube-system创建rbac

kubectl create -f prometheus-ra.yaml [root@VM-0-12-tlinux ~]# kubectl create -f prometheus-ra.yaml

clusterrole.rbac.authorization.k8s.io/prometheus created

serviceaccount/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus created2.1.2 创建配置项

cat prometheus-config.yaml apiVersion: v1

kind: ConfigMap

metadata:

name: prometheus-config

namespace: kube-system

data:

prometheus.yml: |

global:

scrape_interval: 15s

evaluation_interval: 15s

scrape_configs:

- job_name: 'kubernetes-apiservers'

kubernetes_sd_configs:

- role: endpoints

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]

action: keep

regex: default;kubernetes;https

- job_name: 'kubernetes-nodes'

kubernetes_sd_configs:

- role: node

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- target_label: __address__

replacement: kubernetes.default.svc:443

- source_labels: [__meta_kubernetes_node_name]

regex: (.+)

target_label: __metrics_path__

replacement: /api/v1/nodes/${1}/proxy/metrics

- job_name: 'kubernetes-cadvisor'

kubernetes_sd_configs:

- role: node

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- target_label: __address__

replacement: kubernetes.default.svc:443

- source_labels: [__meta_kubernetes_node_name]

regex: (.+)

target_label: __metrics_path__

replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

- job_name: 'kubernetes-service-endpoints'

kubernetes_sd_configs:

- role: endpoints

relabel_configs:

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]

action: keep

regex: true

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]

action: replace

target_label: __scheme__

regex: (https?)

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]

action: replace

target_label: __metrics_path__

regex: (.+)

- source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]

action: replace

target_label: __address__

regex: ([^:]+)(?::\d+)?;(\d+)

replacement: $1:$2

- action: labelmap

regex: __meta_kubernetes_service_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_service_name]

action: replace

target_label: kubernetes_name

- job_name: 'kubernetes-services'

kubernetes_sd_configs:

- role: service

metrics_path: /probe

params:

module: [http_2xx]

relabel_configs:

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]

action: keep

regex: true

- source_labels: [__address__]

target_label: __param_target

- target_label: __address__

replacement: blackbox-exporter.example.com:9115

- source_labels: [__param_target]

target_label: instance

- action: labelmap

regex: __meta_kubernetes_service_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_service_name]

target_label: kubernetes_name

- job_name: 'kubernetes-ingresses'

kubernetes_sd_configs:

- role: ingress

relabel_configs:

- source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]

action: keep

regex: true

- source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]

regex: (.+);(.+);(.+)

replacement: ${1}://${2}${3}

target_label: __param_target

- target_label: __address__

replacement: blackbox-exporter.example.com:9115

- source_labels: [__param_target]

target_label: instance

- action: labelmap

regex: __meta_kubernetes_ingress_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_ingress_name]

target_label: kubernetes_name

- job_name: 'kubernetes-pods'

kubernetes_sd_configs:

- role: pod

relabel_configs:

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]

action: keep

regex: true

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]

action: replace

target_label: __metrics_path__

regex: (.+)

- source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]

action: replace

regex: ([^:]+)(?::\d+)?;(\d+)

replacement: $1:$2

target_label: __address__

- action: labelmap

regex: __meta_kubernetes_pod_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_pod_name]

action: replace

target_label: kubernetes_pod_name

kubectl create -f prometheus-config.yaml [root@VM-0-12-tlinux ~]# kubectl create -f prometheus-config.yaml

configmap/prometheus-config created2.1.3 部署prometheus

cat prometheus.yaml apiVersion: apps/v1

kind: Deployment

metadata:

labels:

name: prometheus-deployment

name: prometheus

namespace: kube-system

spec:

replicas: 1

selector:

matchLabels:

app: prometheus

template:

metadata:

labels:

app: prometheus

spec:

containers:

- image: prom/prometheus:v2.0.0

name: prometheus

command:

- "/bin/prometheus"

args:

- "--config.file=/etc/prometheus/prometheus.yml"

- "--storage.tsdb.path=/prometheus"

- "--storage.tsdb.retention=24h"

ports:

- containerPort: 9090

protocol: TCP

volumeMounts:

- mountPath: "/prometheus"

name: data

- mountPath: "/etc/prometheus"

name: config-volume

resources:

requests:

cpu: 100m

memory: 100Mi

limits:

cpu: 500m

memory: 1500Mi

serviceAccountName: prometheus

volumes:

- name: data

emptyDir: {}

- name: config-volume

configMap:

name: prometheus-config 执行下面命令

[root@VM-0-12-tlinux ~]# kubectl create -f prometheus.yaml

deployment.apps/prometheus created[root@VM-0-12-tlinux ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-65d9c796fc-jhrgt 0/1 Pending 0 3h24m

coredns-65d9c796fc-pb66f 1/1 Running 0 3h24m

ip-masq-agent-sn6nw 1/1 Running 0 3h21m

kube-proxy-qv9q9 1/1 Running 0 3h21m

l7-lb-controller-66c5cd4f46-fj6mt 1/1 Running 0 3h24m

prometheus-5dd6c4b799-9jjmm 1/1 Running 0 44s

tke-bridge-agent-4czmq 1/1 Running 0 3h21m

tke-cni-agent-kdnrd 1/1 Running 0 3h21m2.1.4 配置service

[root@VM-0-12-tlinux ~]# cat prometheus-service.yaml

apiVersion: v1

kind: Service

metadata:

name: prometheus-service

namespace: kube-system

labels:

app: prometheus-service

spec:

type: NodePort

ports:

- port: 80

name: http

targetPort: 9090

selector:

app: prometheus执行命令

[root@VM-0-12-tlinux ~]# kubectl create -f prometheus-service.yaml

service/prometheus-service created

[root@VM-0-12-tlinux ~]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hpa-metrics-service ClusterIP 172.20.255.174 443/TCP 3h27m

kube-dns ClusterIP 172.20.255.135 53/TCP,53/UDP 3h27m

prometheus-service NodePort 172.20.254.64 80:32264/TCP 14s 2.1.5 访问prometheus

点开链接:http://127.0.0.1:32264

http://${service IP}:${随机端口}/graph

2.2 部署Grafana

执行命令

docker run -d -p 3000:3000 --network=host --name grafana grafana/grafana3、参考网站

官方网站:Prometheus - Monitoring system & time series database

GitHub地址:Prometheus · GitHub

服务发现: Configuration | Prometheus