Pitfalls when configuring DataX in DolphinScheduler

1. File creation fails under /tmp/dolphinscheduler/exec/process

When DolphinScheduler schedules a DataX task, it needs to create a series of temporary files under /tmp/dolphinscheduler/exec/process. The worker log, /opt/soft/dolphinscheduler/logs/dolphinscheduler-worker.log, showed that creating them failed:

[taskAppId=TASK-1-10-13]:[178] - datax task failure

java.io.IOException: Directory '/tmp/dolphinscheduler/exec/process/1/1/10/13' could not be created

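The directory turned out to be owned by root, while my DolphinScheduler is installed under the dolphin user. I therefore changed the ownership of the DolphinScheduler working directory under /tmp on that machine:

$ sudo chown -R dolphin:dolphin /tmp/dolphinscheduler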

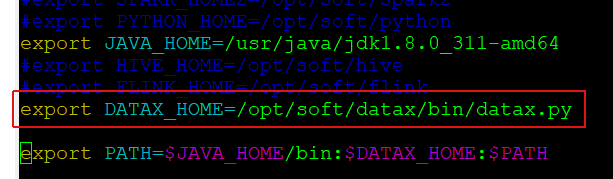
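To confirm the ownership change took effect, it can help to check, as the dolphin user, whether the worker would be able to create its execution directory. The following is a minimal sketch; the function name `nearest_existing_dir` is my own and not part of DolphinScheduler.

```python
import os


def nearest_existing_dir(path):
    """Walk up from `path` to the nearest directory that already exists and
    report whether the current user may create entries inside it."""
    current = os.path.abspath(path)
    # Climb towards the root until we hit a directory that exists.
    while not os.path.isdir(current):
        parent = os.path.dirname(current)
        if parent == current:  # reached the filesystem root
            break
        current = parent
    # Creating a subdirectory needs write and search (execute) permission.
    writable = os.access(current, os.W_OK | os.X_OK)
    return current, writable
```

Running `nearest_existing_dir("/tmp/dolphinscheduler/exec/process")` as the user that runs the worker should report a writable directory once the ownership is correct.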

2. DataX environment variable configuration

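When scheduling DataX tasks with DolphinScheduler, both the data source and the task could be created successfully, but every run failed and no task log was visible in the UI. I logged in to the worker machine that ran the task and looked at /opt/soft/dolphinscheduler/logs/dolphinscheduler-worker.log, which showed this error: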

[INFO] 2021-11-09 11:25:35.446 - [taskAppId=TASK-1-11-14]:[138] - -> python2.7: can't open file '/opt/soft/datax/bin/datax.py/bin/datax.py': [Errno 20] Not a directory

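This means the DataX path is misconfigured, so the launcher file cannot be found.

Open the environment file under /opt/soft/dolphinscheduler/conf/env/ (for example with vim).

The configured value was the official default at the time. As the doubled path in the error shows, /bin/datax.py is appended to DATAX_HOME, so the variable should point only to the DataX installation directory, not to bin or the launcher script.

Change the path from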

export DATAX_HOME=/opt/soft/datax/bin/datax.py

to

export DATAX_HOME=/opt/soft/datax
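The doubled path in the error suggests that the worker builds the launcher path by appending bin/datax.py to DATAX_HOME. Assuming that behaviour (it is inferred from the log, not taken from the DolphinScheduler source), a small check like the one below can catch a bad value before a task is run. The function names are hypothetical.

```python
import os


def expected_launcher(datax_home):
    """Path that results from appending bin/datax.py to DATAX_HOME
    (assumed behaviour, inferred from the error message)."""
    return os.path.join(datax_home, "bin", "datax.py")


def check_datax_home(datax_home):
    """Return the launcher path and whether it exists as a regular file."""
    launcher = expected_launcher(datax_home)
    return launcher, os.path.isfile(launcher)
```

With the old value, `expected_launcher("/opt/soft/datax/bin/datax.py")` yields `/opt/soft/datax/bin/datax.py/bin/datax.py`, the same path that appears in the error.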

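3. Scheduling a DataX MySQL-to-Hive data exchange with DolphinScheduler

The default data source selection only offers relational databases such as MySQL, so for a Hive target you need to choose the custom template and supply the connection address and other settings yourself as JSON.

Configuration template (this is the version that finally worked for me; some parameters need to be adjusted to your own environment):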

{
  "job": {
    "content": [
      {
        "reader": {
          "name": "mysqlreader",
          "parameter": {
            "column": [
              "*"
            ],
            "connection": [
              {
                "jdbcUrl": [
                  "jdbc:mysql://xx.xx.xx.xx:3306/datatest?useUnicode=true&characterEncoding=utf8&useSSL=false"
                ],
                "table": [
                  "test_table_info"
                ]
              }
            ],
            "password": "cloud",
            "username": "root",
            "where": ""
          }
        },
        "writer": {
          "name": "hdfswriter",
          "parameter": {
            "column": [
              {
                "name": "order_id",
                "type": "string"
              },
              {
                "name": "str_cd",
                "type": "string"
              },
              {
                "name": "gds_cd",
                "type": "string"
              },
              {
                "name": "pay_amnt",
                "type": "string"
              },
              {
                "name": "member_id",
                "type": "string"
              },
              {
                "name": "statis_date",
                "type": "string"
              }
            ],
            "compress": "",
            "defaultFS": "hdfs://<your HDFS NameNode address>:9000",
            "fieldDelimiter": ",",
            "fileName": "hive_test_table_info",
            "fileType": "text",
            "path": "/hive/hive.db/hive_test_table_info",
            "writeMode": "append"
          }
        }
      }
    ],
    "setting": {
      "speed": {
        "channel": "1"
      }
    }
  }
}
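Because a custom template is pasted in by hand, a quick structural check before saving the task can catch typos early. The sketch below only verifies that the JSON parses and that the hdfswriter section carries the keys used in the template above; treating exactly these keys as required is my assumption, and `check_job` is a hypothetical helper, not a DataX API.

```python
import json

# Keys the hdfswriter section of the template sets; assumed required here.
HDFSWRITER_KEYS = [
    "column", "defaultFS", "fieldDelimiter",
    "fileName", "fileType", "path", "writeMode",
]


def check_job(job_text):
    """Parse a DataX job JSON string and return a list of problems found."""
    try:
        job = json.loads(job_text)
    except ValueError as exc:  # json.JSONDecodeError is a ValueError
        return [f"invalid JSON: {exc}"]
    if not isinstance(job, dict):
        return ["top-level value is not a JSON object"]

    problems = []
    contents = job.get("job", {}).get("content", [])
    if not contents:
        problems.append("job.content is empty")
    for i, item in enumerate(contents):
        # Every content entry needs a named reader and writer plugin.
        for side in ("reader", "writer"):
            if "name" not in item.get(side, {}):
                problems.append(f"content[{i}].{side}.name is missing")
        writer = item.get("writer", {})
        if writer.get("name") == "hdfswriter":
            params = writer.get("parameter", {})
            for key in HDFSWRITER_KEYS:
                if key not in params:
                    problems.append(f"content[{i}].writer.parameter.{key} is missing")
    return problems
```

An empty list means the structure looks plausible; it says nothing about whether the addresses, ports, or credentials are correct.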

3.1 The first problem during execution: an IO exception while connecting to HDFS (与HDFS建立连接时出现IO异常)

[job-0] ERROR Engine - 经DataX智能分析,该任务最可能的错误原因是:

com.alibaba.datax.common.exception.DataXException: Code:[HdfsWriter-06], Description:[与HDFS建立连接时出现IO异常.].

- java.net.ConnectException: Call From xxxxx/10.xx.xx.xx to 10.xx.1xx.1xx:8020 failed on connection exception: java.net.ConnectException: Connection refused;

- For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

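DataX's analysis points to HdfsWriter-06, an IO exception while connecting to HDFS, and the underlying cause is a refused connection on port 8020. I had copied the HDFS port 8020 from a template found online, but our NameNode actually listens on port 9000, so I changed 8020 to 9000 in defaultFS.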

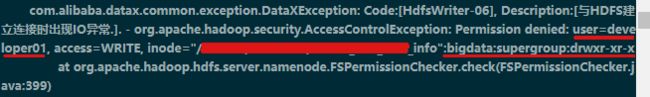
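A "Connection refused" like this can be checked from the worker machine before rerunning the job. The following sketch only tests whether a TCP connection to a host and port can be opened; `port_open` is a hypothetical helper, and the host you pass in is whatever address your defaultFS uses.

```python
import socket


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolvable hosts.
        return False
```

Calling it once with port 8020 and once with port 9000 against the NameNode address shows which of the two is actually listening. A successful connection only proves that something accepts TCP on that port, not that it is the NameNode RPC service.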

3.2 The second problem was again an IO exception while connecting to HDFS (HdfsWriter-06), but this time with a different cause

ERROR JobContainer - Exception when job run

com.alibaba.datax.common.exception.DataXException: Code:[HdfsWriter-06], Description:[与HDFS建立连接时出现IO异常.]. - org.apache.hadoop.security.AccessControlException: Permission denied: user=developer01, access=WRITE, inode="/hive/hive.db/hive_test_table_info":bigdata:supergroup:drwxr-xr-x

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:399)

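This happened because I ran the task as the tenant developer01, while the HDFS user with write permission on the Hive table directory is bigdata. Before running the task, you therefore need to configure and select a tenant that has the required permission:

In Security Center > Tenant Management, configure the bigdata tenant.

Edit the DataX task and select the bigdata tenant when saving.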
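The AccessControlException message already contains everything needed to pick the right tenant: the user that attempted the write, the owner and group of the directory, and its permission bits. As an illustration, the sketch below pulls those fields out of the message; the regular expression is written against the single log line shown above and may not cover other Hadoop versions or message variants.

```python
import re

# Matches: user=..., access=..., inode="path":owner:group:drwxr-xr-x
DENIAL = re.compile(
    r'user=(?P<user>[^,]+), access=(?P<access>\w+), '
    r'inode="(?P<path>[^"]+)":(?P<owner>[^:]+):(?P<group>[^:]+):'
    r'(?P<mode>[d-][rwxsStT-]{9})'
)


def explain_denial(message):
    """Extract user, owner, group and write bits from a permission error."""
    match = DENIAL.search(message)
    if match is None:
        return None
    info = match.groupdict()
    mode = info["mode"]
    # mode[1:4] are the owner bits, mode[4:7] group, mode[7:10] others.
    info["owner_can_write"] = mode[2] == "w"
    info["others_can_write"] = mode[8] == "w"
    info["user_is_owner"] = info["user"] == info["owner"]
    return info
```

For the message above this reports owner bigdata with write permission and no write permission for others, which is why running the task as the bigdata tenant resolves the error.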

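3.3 The third problem was a MySQL connection issue. I had hit the same thing earlier when running a DataX MySQL-to-MySQL task; I overlooked it while writing the custom JSON file, but recognized it as soon as I saw the log. In the message below, DataX reports that it cannot connect to the database and lists two possible causes: 1) the configured ip/port/database/jdbc is wrong, or 2) the configured username/password is wrong and authentication fails. It also suggests confirming the connection details with the DBA.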

ERROR RetryUtil - Exception when calling callable, 异常Msg:DataX无法连接对应的数据库,可能原因是:1) 配置的ip/port/database/jdbc错误,无法连接。2) 配置的username/password错误,鉴权失败。请和DBA确认该数据库的连接信息是否正确。
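The log names ip/port/database/jdbc as the first group of possible causes. One way to double-check that group is to split the jdbcUrl from the job JSON into its parts and compare each with what the database actually uses. This is only a sketch: `parse_mysql_jdbc_url` is a hypothetical helper, and falling back to port 3306 when none is given is my assumption.

```python
from urllib.parse import parse_qs, urlparse


def parse_mysql_jdbc_url(jdbc_url):
    """Split a jdbc:mysql:// URL into host, port, database and options."""
    prefix = "jdbc:"
    if not jdbc_url.startswith(prefix + "mysql://"):
        raise ValueError("not a jdbc:mysql:// URL")
    # Drop the "jdbc:" prefix so the rest parses like an ordinary URL.
    parts = urlparse(jdbc_url[len(prefix):])
    return {
        "host": parts.hostname,
        "port": parts.port or 3306,  # assumed default when omitted
        "database": parts.path.lstrip("/"),
        "options": {k: v[0] for k, v in parse_qs(parts.query).items()},
    }
```

Printing the result for the jdbcUrl in the template makes it easy to spot a wrong host, port, or database name at a glance.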