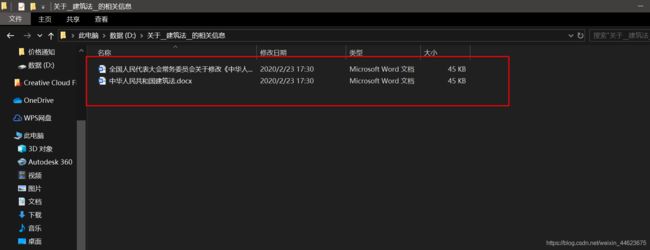

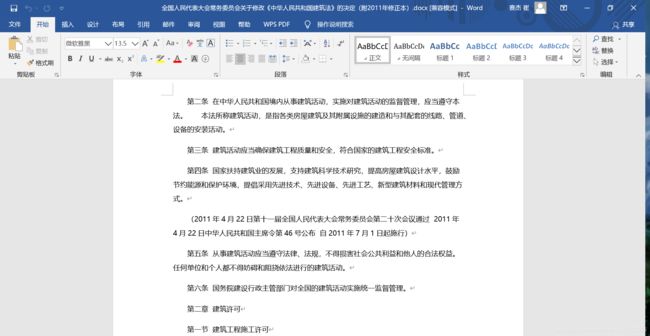

Python实现简单爬虫:爬取法律法规网数据库信息并分类写入word保存

一、项目简介

该项目用到的库都是做爬虫常用的库,包括Beautifulsoup4,requests,re等等在写入word文件这一步需要了解一下docx库的使用,可以实现按标题检索和按正文检索,可以指定存放文件夹等等。项目较为简单,但是在对数据进行清洗的时候较为复杂,适合刚如爬虫的新手练习!

二、代码展示

import time

from docx.enum.text import WD_ALIGN_PARAGRAPH

from docx.shared import Pt, Cm

from lxml import etree

from docx import Document

from docx.oxml.ns import qn

import requests

import random

import urllib.parse

from bs4 import BeautifulSoup

import lxml

import string

from concurrent.futures import ThreadPoolExecutor

import threading

import re

from docx.oxml import OxmlElement

from docx.oxml.ns import qn

def set_cell_border(cell, **kwargs): # 设置单元格上下左右边框属性(线性/颜色/粗细)

"""

Set cell`s border

Usage:

set_cell_border(

cell,

top={"sz": 12, "val": "single", "color": "#FF0000", "space": "0"},

bottom={"sz": 12, "color": "#00FF00", "val": "single"},

left={"sz": 24, "val": "dashed", "shadow": "true"},

right={"sz": 12, "val": "dashed"},

)

"""

tc = cell._tc

tcPr = tc.get_or_add_tcPr()

# check for tag existnace, if none found, then create one

tcBorders = tcPr.first_child_found_in("w:tcBorders")

if tcBorders is None:

tcBorders = OxmlElement('w:tcBorders')

tcPr.append(tcBorders)

# list over all available tags

for edge in ('left', 'top', 'right', 'bottom', 'insideH', 'insideV'):

edge_data = kwargs.get(edge)

if edge_data:

tag = 'w:{}'.format(edge)

# check for tag existnace, if none found, then create one

element = tcBorders.find(qn(tag))

if element is None:

element = OxmlElement(tag)

tcBorders.append(element)

# looks like order of attributes is important

for key in ["sz", "val", "color", "space", "shadow"]:

if key in edge_data:

element.set(qn('w:{}'.format(key)), str(edge_data[key]))

def get_headers():# 头部文件

#得到随机headers

user_agents = [

"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; AcooBrowser; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0; Acoo Browser; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.0.04506)",

"Mozilla/4.0 (compatible; MSIE 7.0; AOL 9.5; AOLBuild 4337.35; Windows NT 5.1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",

"Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US)",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 2.0.50727; Media Center PC 6.0)",

"Mozilla/5.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 1.0.3705; .NET CLR 1.1.4322)",

"Mozilla/4.0 (compatible; MSIE 7.0b; Windows NT 5.2; .NET CLR 1.1.4322; .NET CLR 2.0.50727; InfoPath.2; .NET CLR 3.0.04506.30)",

"Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN) AppleWebKit/523.15 (KHTML, like Gecko, Safari/419.3) Arora/0.3 (Change: 287 c9dfb30)",

"Mozilla/5.0 (X11; U; Linux; en-US) AppleWebKit/527+ (KHTML, like Gecko, Safari/419.3) Arora/0.6",

"Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.2pre) Gecko/20070215 K-Ninja/2.1.1",

"Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9) Gecko/20080705 Firefox/3.0 Kapiko/3.0",

"Mozilla/5.0 (X11; Linux i686; U;) Gecko/20070322 Kazehakase/0.4.5",

"Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.8) Gecko Fedora/1.9.0.8-1.fc10 Kazehakase/0.5.6",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",

"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_3) AppleWebKit/535.20 (KHTML, like Gecko) Chrome/19.0.1036.7 Safari/535.20",

"Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.11 TaoBrowser/2.0 Safari/536.11",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.71 Safari/537.1 LBBROWSER",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; LBBROWSER)",

"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E; LBBROWSER)",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.84 Safari/535.11 LBBROWSER",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E)",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; QQBrowser/7.0.3698.400)",

"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E)",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; SV1; QQDownload 732; .NET4.0C; .NET4.0E; 360SE)",

"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E)",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E)",

"Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.89 Safari/537.1",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.89 Safari/537.1",

"Mozilla/5.0 (iPad; U; CPU OS 4_2_1 like Mac OS X; zh-cn) AppleWebKit/533.17.9 (KHTML, like Gecko) Version/5.0.2 Mobile/8C148 Safari/6533.18.5",

"Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:2.0b13pre) Gecko/20110307 Firefox/4.0b13pre",

"Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:16.0) Gecko/20100101 Firefox/16.0",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11",

"Mozilla/5.0 (X11; U; Linux x86_64; zh-CN; rv:1.9.2.10) Gecko/20100922 Ubuntu/10.10 (maverick) Firefox/3.6.10"

]

user_agent = random.choice(user_agents)

headers = {'User-Agent': user_agent}

return headers

def get_catalogue_data(url): # 请求总标题网页数据

try:

data = requests.get(url=url, headers=get_headers())

soup = BeautifulSoup(data.content, 'lxml', from_encoding='utf-8')

return soup

except:

time.sleep(5)

return get_catalogue_data(url)

def get_related_nums(url): # 获取相关数据个数

soup = get_catalogue_data(url)

info = soup.select('body > div.main.pageWidth > div.mainCon.clearself > div > div > div.searTit')

re_nums = int(re.findall('(\d+)',str(info))[0])

if re_nums == 0:

return False

else:

return re_nums

def get_catalogue_pages(url): # 获取总标题页码

soup = get_catalogue_data(url)

last_page = re.findall('(\d+)',str(soup.select('#pagecount')))

if last_page :

return last_page

else:

if get_related_nums(url):

print('为你查到有%s个相关数据' % get_related_nums(url))

return last_page

else:

print('无相关记录')

return False

def get_title_urls(url_index_0,pages,q): # 得到每个标题的url

all_url = []

if pages:

for page in range(1,int(pages[0])+1):

url_index = url_index_0.format(page,q)

errors_url = []

try:

data = requests.get(url=url_index, headers=get_headers())

#soup = BeautifulSoup(data.content,'lxml',from_encoding='utf-8')

pattern = ''

urls = re.findall(pattern, data.text)

for url in urls:

url = 'http://search.chinalaw.gov.cn/' + url

all_url.append(url)

except:

time.sleep(5)

return get_title_urls(url_index_0,pages,q)

else:

url_index = url_index_0.format('',q)

try:

data = requests.get(url=url_index, headers=get_headers())

pattern = ''

urls = re.findall(pattern, data.text)

#print(data.text)

for url in urls:

url = 'http://search.chinalaw.gov.cn/' + url

all_url.append(url)

except:

time.sleep(5)

return get_title_urls(url_index_0, pages, q)

return all_url

def get_title_pages(title_url):# 得到详情页的页数

try:

data = requests.get(title_url,headers=get_headers())

soup = BeautifulSoup(data.content,'lxml',from_encoding='utf-8')

pages = int(re.findall('(\d+)',str(soup.select('#pagecount')))[0])

return pages

except:

return 1

def get_title_infos(title_url,pages):#得到详情页所有信息

attr = {}

title = {}

paragraphs = []

for page in range(1,pages+1):

time.sleep(1)

try:

title_url0 = title_url + '&PageIndex=' + str(page)

data = requests.get(title_url0, headers=get_headers())

html = etree.HTML(data.content.decode('utf-8'))

paragraphs_1 = html.xpath('string(/html/body/div[2]/div[2]/div/div/div[2]/div[2])')

paragraphs0 = []

for pa in paragraphs_1:

if pa == '':

pass

else:

paragraphs0.append(pa)

paragraphs_2 = ''.join(paragraphs0)

pa2 = paragraphs_2.split('\r\n')

pa3 = [x.strip() for x in pa2 if x.strip() != '']

if page == 1:

title0 = html.xpath('/html/body/div[2]/div[2]/div/div/div[2]/div[1]')

title.update({'title': title0[0].text})

attr_gov = html.xpath('/html/body/div[2]/div[2]/div/div/div[2]/table[1]/tr[1]/td[1]')

attr_gov_a = html.xpath('/html/body/div[2]/div[2]/div/div/div[2]/table[1]/tr[1]/td[2]')

attr.update({attr_gov[0].text: attr_gov_a[0].text})

attr_pdata = html.xpath('/html/body/div[2]/div[2]/div/div/div[2]/table[1]/tr[2]/td[1]')

attr_pdata_a = html.xpath('/html/body/div[2]/div[2]/div/div/div[2]/table[1]/tr[2]/td[2]')

attr.update({attr_pdata[0].text: attr_pdata_a[0].text})

attr_ddata = html.xpath('/html/body/div[2]/div[2]/div/div/div[2]/table[1]/tr[2]/td[3]')

attr_ddata_a = html.xpath('/html/body/div[2]/div[2]/div/div/div[2]/table[1]/tr[2]/td[4]')

attr.update({attr_ddata[0].text: attr_ddata_a[0].text})

attr_tok = html.xpath('/html/body/div[2]/div[2]/div/div/div[2]/table[1]/tr[3]/td[1]')

attr_tok_a = html.xpath('/html/body/div[2]/div[2]/div/div/div[2]/table[1]/tr[3]/td[2]')

attr.update({attr_tok[0].text: attr_tok_a[0].text})

attr_cl = html.xpath('/html/body/div[2]/div[2]/div/div/div[2]/table[1]/tr[3]/td[3]')

attr_cl_a = html.xpath('/html/body/div[2]/div[2]/div/div/div[2]/table[1]/tr[3]/td[4]')

attr.update({attr_cl[0].text: attr_cl_a[0].text})

paragraphs += pa3

else:

paragraphs += pa3

except:

time.sleep(5)

return get_title_infos(title_url,pages)

#print(paragraphs)

return title,attr,paragraphs

def wt(title,attr,paragraphs,q,mkd):# 向word写入信息

mkdir(mkd+'\关于__{}__的相关信息'.format(q))

document = Document()

document.styles['Normal'].font.name = u'微软雅黑'

# 设置文档基础字体为宋体‘normal'表示默认样式

document.styles['Normal'].element.rPr.rFonts.set(qn('w:eastAsia'), u'微软雅黑')

# 写入标题

title_p = document.add_paragraph()

run1 = title_p.add_run(title['title'])

run1.font.name = '微软雅黑'

# 设置西文字体为微软雅黑

run1.element.rPr.rFonts.set(qn('w:eastAsia'), u'微软雅黑')

# 设置中文字体为微软雅黑

title_p.alignment = WD_ALIGN_PARAGRAPH.CENTER

run1.font.size = Pt(13.5)

# 写入属性

table = document.add_table(rows=4, cols=4, style='Table Grid')

table_run1 = table.cell(0,0).paragraphs[0].add_run('公布机关:')

table_run1.font.size = Pt(9)

table.cell(0,0).Width = Cm(2.12)

table_run2 = table.cell(0, 1).paragraphs[0].add_run(attr['公布机关:'])

table_run2.font.size = Pt(9)

table.cell(0, 0).width = Cm(10.58)

table_run3 = table.cell(1, 0).paragraphs[0].add_run('公布日期:')

table_run3.font.size = Pt(9)

table.cell(1, 0).width = Cm(2.12)

table_run4 = table.cell(1, 1).paragraphs[0].add_run(attr['公布日期:'])

table_run4.font.size = Pt(9)

table.cell(1, 1).width = Cm(10.58)

table_run5 = table.cell(1, 2).paragraphs[0].add_run('施行日期:')

table_run5.font.size = Pt(9)

table.cell(1, 2).width = Cm(2.12)

table_run6 = table.cell(1, 3).paragraphs[0].add_run(attr['施行日期:'])

table_run6.font.size = Pt(9)

table_run7 = table.cell(3, 0).paragraphs[0].add_run('效力:')

table_run7.font.size = Pt(9)

table.cell(3, 0).width = Cm(2.12)

table_run8 = table.cell(3, 1).paragraphs[0].add_run(attr['效力:'])

table_run8.font.size = Pt(9)

table.cell(3, 1).width = Cm(10.58)

table_run9 = table.cell(3, 2).paragraphs[0].add_run('门类:')

table_run9.font.size = Pt(9)

table.cell(3, 2).width = Cm(2.12)

table_run10 = table.cell(3, 3).paragraphs[0].add_run(attr['门类:'])

table_run10.font.size = Pt(9)

for r in range(0,4):

for c in range(0,4):

set_cell_border(

table.cell(r,c),

top={"color": "#FFFFFF"},

bottom={"color": "#FFFFFF"},

left={"color": "#FFFFFF"},

right={"color": "#FFFFFF"},

)

# 写入段落

p1 = document.add_paragraph()

run_1 = p1.add_run(paragraphs[0])

run_1.font.size = Pt(10.5)

p1.alignment = WD_ALIGN_PARAGRAPH.CENTER

p2 = document.add_paragraph()

run_2 = p2.add_run(paragraphs[1])

run_2.font.size = Pt(12.5)

p2.alignment = WD_ALIGN_PARAGRAPH.CENTER

for p in paragraphs[2:]:

p1 = document.add_paragraph()

run_1 = p1.add_run(p)

run_1.font.size = Pt(10.5)

p1.paragraph_format.first_line_indent=Cm(0.74)

document.save('D:\关于__{}__的相关信息\{}.docx'.format(q,title['title']))

def mkdir(path): # 创建目录文件

import os

# function:新建文件夹

# path:str-从程序文件夹要要创建的目录路径(包含新建文件夹名)

# 去除首尾空格

path = path.strip() # strip方法只要含有该字符就会去除

# 去除首尾\符号

path = path.rstrip('\\')

# 判断路径是否存在

isExists = os.path.exists(path)

# 根据需要是否显示当前程序运行文件夹

# print("当前程序所在位置为:"+os.getcwd())

if not isExists:

os.makedirs(path)

print(path + '创建成功')

return True

else:

return False

def main():

mkd = str(input('请输入文件夹创建目录[如C:\\Users\\14983\Documents]:'))

choose = int(input('按标题检索[1] 按正文检索[2]:'))

url_0 = ''

url_index_0 = ''

if choose == 1:

url_0 = 'http://search.chinalaw.gov.cn/SearchLawTitle?effectLevel=&SiteID=124&PageIndex=&Sort=PublishTime&Query='

url_index_0 = 'http://search.chinalaw.gov.cn/SearchLawTitle?effectLevel=&SiteID=124&PageIndex={}&Sort=PublishTime&Query={}'

elif choose == 2:

url_0 = 'http://search.chinalaw.gov.cn/SearchLaw?effectLevel=&SiteID=124&PageIndex=&Sort=PublishTime&Query='

url_index_0 = 'http://search.chinalaw.gov.cn/SearchLaw?effectLevel=&SiteID=124&PageIndex={}&Sort=PublishTime&Query={}'

q = str(input('请输入查询关键字:'))

url_1 = url_0 + q

pages = get_catalogue_pages(url_1)

#print(pages)

nums = get_related_nums(url_1)

if nums :

print('全库有{}个相关数据'.format(str(nums)))

title_urls = get_title_urls(url_index_0,pages,q)

for title_url in title_urls:

try:

title_pages = get_title_pages(title_url)

title,attr,paragraphs = get_title_infos(title_url,title_pages)

wt(title,attr,paragraphs,q,mkd)

print('{}-----------完成!'.format(title['title']))

time.sleep(1)

except:

print('{}-----------失败!'.format(title['title']))

else:

print('全库没有相关数据')

return main()

print('该程序版权归崔sir(qq:1498381083 晚竹田)所有,未经许可不得用于商业用途。')

main()