zookeeper4 - Setting up a pseudo-cluster on a single machine

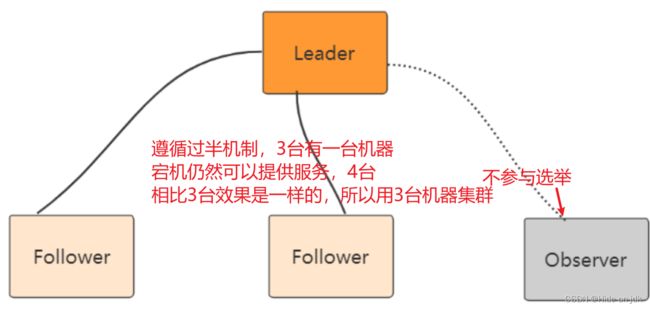

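ZooKeeper cluster roles:

Leader: handles all transactional (write) requests and can also serve read requests. A cluster has exactly one Leader at any time.

Follower: serves read requests and forwards write requests to the Leader. It is also a Leader candidate: if the Leader goes down, the Followers take part in the election and one of them may become the new Leader.

Observer: serves read requests only and does not take part in elections.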
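Because Observers do not vote, only the Leader and the Followers count toward the majority the cluster needs in order to stay available. As a rough illustration, assuming the layout configured later in this note (three voting servers plus one Observer), the arithmetic can be sketched as follows. The class and method names are made up for this example.

```java
// Sketch: majority-quorum arithmetic for a ZooKeeper ensemble.
// Assumption: only voting members (Leader + Followers) are counted; Observers are not.
final class QuorumMath {

    /** Smallest number of voting servers that forms a strict majority. */
    static int majority(int votingMembers) {
        return votingMembers / 2 + 1;
    }

    /** Number of voting servers that may fail while a majority is still alive. */
    static int tolerableFailures(int votingMembers) {
        return votingMembers - majority(votingMembers);
    }

    public static void main(String[] args) {
        int voters = 3; // server.1 to server.3 in this note; server.4 is an Observer
        System.out.println("majority needed    = " + majority(voters));
        System.out.println("tolerable failures = " + tolerableFailures(voters));
    }
}
```

With three voters the majority is two, so the cluster survives the loss of one voting server but not two, regardless of whether the Observer is still running.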

Installation steps:

tar -zxvf apache-zookeeper-3.5.8-bin.tar.gz

mv apache-zookeeper-3.5.8-bin zookeeper

cd zookeeper

cp conf/zoo_sample.cfg conf/zoo1.cfg

vim conf/zoo1.cfg

================

dataDir=/home/bzf/zookeeper/data/zookeeper1

clientPort=2181

server.1=192.168.25.111:2001:3001

server.2=192.168.25.111:2002:3002

server.3=192.168.25.111:2003:3003

server.4=192.168.25.111:2004:3004:observer
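Each `server.N` line has the form `server.<id>=<host>:<quorumPort>:<electionPort>[:observer]`. The first port is the one Followers use to connect to the Leader, the second is used for Leader election, and `<id>` must match the number stored in that node's `myid` file. The small parser below is only a sketch to make the fields explicit. `ServerEntry` is a hypothetical helper, and it handles only the simple form used in this note (no IPv6 hosts, no `;clientPort` suffix).

```java
// Sketch: split one "server.N=host:quorumPort:electionPort[:observer]" line into its fields.
// ServerEntry is a hypothetical helper for illustration, not part of ZooKeeper.
final class ServerEntry {
    final int id;            // must match the content of the node's myid file
    final String host;
    final int quorumPort;    // Followers connect to the Leader on this port
    final int electionPort;  // used during Leader election
    final boolean observer;  // true when the line ends with ":observer"

    ServerEntry(int id, String host, int quorumPort, int electionPort, boolean observer) {
        this.id = id;
        this.host = host;
        this.quorumPort = quorumPort;
        this.electionPort = electionPort;
        this.observer = observer;
    }

    static ServerEntry parse(String line) {
        String[] keyValue = line.trim().split("=", 2);
        if (keyValue.length != 2 || !keyValue[0].startsWith("server.")) {
            throw new IllegalArgumentException("not a server.N line: " + line);
        }
        int id = Integer.parseInt(keyValue[0].substring("server.".length()));
        String[] parts = keyValue[1].split(":");
        if (parts.length < 3) {
            throw new IllegalArgumentException("expected host:port:port in: " + line);
        }
        boolean observer = parts.length > 3 && parts[3].equalsIgnoreCase("observer");
        return new ServerEntry(id, parts[0],
                Integer.parseInt(parts[1]), Integer.parseInt(parts[2]), observer);
    }

    public static void main(String[] args) {
        ServerEntry e = parse("server.4=192.168.25.111:2004:3004:observer");
        System.out.println("id=" + e.id + " host=" + e.host + " quorumPort=" + e.quorumPort
                + " electionPort=" + e.electionPort + " observer=" + e.observer);
    }
}
```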

mkdir -p /home/bzf/zookeeper/data/zookeeper1

echo 1 > /home/bzf/zookeeper/data/zookeeper1/myid

======================

cp conf/zoo1.cfg conf/zoo2.cfg

vim conf/zoo2.cfg

dataDir=/home/bzf/zookeeper/data/zookeeper2

clientPort=2182

mkdir -p /home/bzf/zookeeper/data/zookeeper2

echo 2 > /home/bzf/zookeeper/data/zookeeper2/myid

====================

cp conf/zoo1.cfg conf/zoo3.cfg

vim conf/zoo3.cfg

dataDir=/home/bzf/zookeeper/data/zookeeper3

clientPort=2183

mkdir -p /home/bzf/zookeeper/data/zookeeper3

echo 3 > /home/bzf/zookeeper/data/zookeeper3/myid

==============

cp conf/zoo1.cfg conf/zoo4.cfg

vim conf/zoo4.cfg

dataDir=/home/bzf/zookeeper/data/zookeeper4

clientPort=2184

mkdir -p /home/bzf/zookeeper/data/zookeeper4

echo 4 > /home/bzf/zookeeper/data/zookeeper4/myid

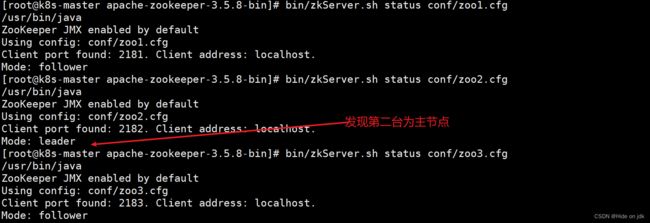
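The four configurations differ only in `dataDir`, `clientPort` and the content of `myid`, so the manual steps above can also be generated. The following is a sketch, not a replacement for checking the files by hand. `PseudoClusterSetup` is a hypothetical helper, the `tickTime`, `initLimit` and `syncLimit` values are assumed (copy the real ones from your `zoo_sample.cfg`), and `main` writes into a temporary directory rather than a real installation.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

// Sketch: generate zooN.cfg and myid for the four-node pseudo-cluster described above.
final class PseudoClusterSetup {
    static final String HOST = "192.168.25.111"; // host used throughout this note
    static final int NODES = 4;                  // node 4 is the Observer

    /** Renders the config text for node `id`; dataRoot is the parent of the zookeeperN directories. */
    static String renderConfig(int id, String dataRoot) {
        StringBuilder sb = new StringBuilder();
        // Assumed base settings; take the actual values from zoo_sample.cfg.
        sb.append("tickTime=2000\n").append("initLimit=10\n").append("syncLimit=5\n");
        sb.append("dataDir=").append(dataRoot).append("/zookeeper").append(id).append('\n');
        sb.append("clientPort=").append(2180 + id).append('\n');
        for (int n = 1; n <= NODES; n++) {
            sb.append("server.").append(n).append('=').append(HOST)
              .append(':').append(2000 + n).append(':').append(3000 + n);
            if (n == NODES) {
                sb.append(":observer");
            }
            sb.append('\n');
        }
        return sb.toString();
    }

    /** Creates the data directory, writes myid, and writes conf/zooN.cfg for one node. */
    static void writeNode(Path confDir, Path dataRoot, int id) throws IOException {
        Path dataDir = dataRoot.resolve("zookeeper" + id);
        Files.createDirectories(dataDir);
        Files.createDirectories(confDir);
        Files.write(dataDir.resolve("myid"), (id + "\n").getBytes(StandardCharsets.UTF_8));
        Files.write(confDir.resolve("zoo" + id + ".cfg"),
                renderConfig(id, dataRoot.toString()).getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) throws IOException {
        Path base = Files.createTempDirectory("zk-pseudo-cluster");
        for (int id = 1; id <= NODES; id++) {
            writeNode(base.resolve("conf"), base.resolve("data"), id);
        }
        System.out.println("generated files under " + base);
    }
}
```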

Start the four nodes, then check each node's status:

bin/zkServer.sh start conf/zoo1.cfg

bin/zkServer.sh start conf/zoo2.cfg

bin/zkServer.sh start conf/zoo3.cfg

bin/zkServer.sh start conf/zoo4.cfg

bin/zkServer.sh status conf/zoo1.cfg

bin/zkServer.sh status conf/zoo2.cfg

bin/zkServer.sh status conf/zoo3.cfg

bin/zkServer.sh status conf/zoo4.cfg
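The status output includes a `Mode:` line (leader, follower or observer). The same information can be read programmatically by sending the four-letter command `srvr` to a node's client port. The sketch below assumes `srvr` is enabled on the servers (see `4lw.commands.whitelist`). `ZkModeProbe` is a hypothetical helper, and the host defaults to 127.0.0.1 unless one is passed as the first argument.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

// Sketch: ask each node for its role via the "srvr" four-letter command.
final class ZkModeProbe {

    /** Returns the value of the "Mode:" line in a srvr response, or null if there is none. */
    static String parseMode(String srvrResponse) {
        for (String line : srvrResponse.split("\\R")) {
            if (line.startsWith("Mode:")) {
                return line.substring("Mode:".length()).trim();
            }
        }
        return null;
    }

    /** Sends "srvr" to host:port and returns the reported mode. */
    static String queryMode(String host, int port) throws IOException {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), 2000);
            socket.setSoTimeout(2000);
            socket.getOutputStream().write("srvr".getBytes(StandardCharsets.US_ASCII));
            socket.getOutputStream().flush();
            // The server closes the connection after answering, so read until end of stream.
            ByteArrayOutputStream buffer = new ByteArrayOutputStream();
            InputStream in = socket.getInputStream();
            byte[] chunk = new byte[1024];
            int n;
            while ((n = in.read(chunk)) != -1) {
                buffer.write(chunk, 0, n);
            }
            return parseMode(new String(buffer.toByteArray(), StandardCharsets.UTF_8));
        }
    }

    public static void main(String[] args) {
        // Pass 192.168.25.111 as the first argument to target the host used in this note.
        String host = args.length > 0 ? args[0] : "127.0.0.1";
        for (int port = 2181; port <= 2184; port++) {
            try {
                System.out.println(port + " -> " + queryMode(host, port));
            } catch (IOException e) {
                System.out.println(port + " -> unreachable (" + e.getMessage() + ")");
            }
        }
    }
}
```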

2: Operating the zk cluster from Java code

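    import java.nio.charset.StandardCharsets;
    import java.util.concurrent.TimeUnit;

    import lombok.extern.slf4j.Slf4j;
    import org.apache.curator.RetryPolicy;
    import org.apache.curator.framework.CuratorFramework;
    import org.apache.curator.framework.CuratorFrameworkFactory;
    import org.apache.curator.retry.ExponentialBackoffRetry;
    import org.junit.Test; // JUnit 4 assumed; with JUnit 5 use org.junit.jupiter.api.Test

    @Slf4j
    public class MyCuratorClusterBaseOperations extends CuratorClusterBase {

        // Cluster test: read from the cluster every 5 s and observe what happens when nodes go down.
        // 1. Stop the 2181 node manually: the client reports no error and still gets the data.
        // 2. Stop the 2181 and 2182 nodes: the data can no longer be read and the cluster is
        //    unavailable, because only one of the three voting servers is left
        //    (the observer on 2184 does not count toward the majority).
        // 3. Start the 2181 node again: the data can be read again.
        @Test
        public void testCluster() throws Exception {
            CuratorFramework curatorFramework = getCuratorFramework();
            String pathWithParent = "/test";
            byte[] bytes = curatorFramework.getData().forPath(pathWithParent);
            System.out.println(new String(bytes, StandardCharsets.UTF_8));
            while (true) {
                try {
                    byte[] bytes2 = curatorFramework.getData().forPath(pathWithParent);
                    System.out.println(new String(bytes2, StandardCharsets.UTF_8));
                    TimeUnit.SECONDS.sleep(5);
                } catch (Exception e) {
                    // The loop retries by itself. Calling testCluster() again here would
                    // nest a new loop on every failure and keep growing the call stack.
                    e.printStackTrace();
                }
            }
        }

        // Same experiment as testCluster(), runnable without the test base class:
        // it builds its own client and lists all four nodes in the connection string.
        public static void main(String[] args) throws Exception {
            // Base sleep time of 5 s between retries, at most 10 retries.
            RetryPolicy retryPolicy = new ExponentialBackoffRetry(5 * 1000, 10);
            String connectStr = "192.168.25.111:2181,192.168.25.111:2182,192.168.25.111:2183,192.168.25.111:2184";
            CuratorFramework curatorFramework = CuratorFrameworkFactory.newClient(connectStr, retryPolicy);
            curatorFramework.start();
            String pathWithParent = "/test";
            byte[] bytes = curatorFramework.getData().forPath(pathWithParent);
            System.out.println(new String(bytes, StandardCharsets.UTF_8));
            while (true) {
                try {
                    byte[] bytes2 = curatorFramework.getData().forPath(pathWithParent);
                    System.out.println(new String(bytes2, StandardCharsets.UTF_8));
                    TimeUnit.SECONDS.sleep(5);
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        }
    }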
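Both polling loops above run forever, which is fine for watching the cluster by hand but awkward to reuse. A bounded variant that keeps polling through failures, with a plain loop and no recursive call, might look like the sketch below. `PollingLoop` and its `fetch` parameter are hypothetical: `fetch` stands in for `curatorFramework.getData().forPath(...)`, and `main` simulates a node outage instead of talking to a real cluster.

```java
import java.util.concurrent.Callable;

// Sketch: poll a data source a fixed number of times and survive individual failures.
final class PollingLoop {

    /** Counters describing one polling run. */
    static final class Stats {
        int successes;
        int failures;
    }

    /** Calls fetch `rounds` times, pausing `pauseMs` between calls; a failed call is counted, not fatal. */
    static Stats poll(Callable<String> fetch, int rounds, long pauseMs) {
        Stats stats = new Stats();
        for (int i = 0; i < rounds; i++) {
            try {
                System.out.println(fetch.call());
                stats.successes++;
            } catch (Exception e) {
                stats.failures++;
                System.err.println("read failed, continuing: " + e);
            }
            try {
                Thread.sleep(pauseMs);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt and stop polling
                break;
            }
        }
        return stats;
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Simulated source: the 2nd and 3rd reads fail, as if the quorum were briefly lost.
        Stats stats = poll(() -> {
            int n = ++calls[0];
            if (n == 2 || n == 3) {
                throw new IllegalStateException("simulated connection loss");
            }
            return "data from /test (read " + n + ")";
        }, 5, 100L);
        System.out.println("successes=" + stats.successes + ", failures=" + stats.failures);
    }
}
```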