Local k8s Cluster Setup, a Step-by-Step Tutorial (3): Installing the k8s Cluster

Installing the K8s Cluster

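1 Set up a script that pulls the images from the Alibaba Cloud (Aliyun) mirror

1.1 Add the image replacement script

Note: run this on the master machine.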

$ touch images.sh

$ chmod +x images.sh

Put the following content into the script:

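#!/bin/bash
set -x
if [ $# -ne 1 ]; then
    echo "Usage: ./$(basename "$0") kubernetes-version"
    exit 1
fi
version=$1
# Image names without the registry prefix, e.g. kube-apiserver:v1.24.1
images=$(kubeadm config images list --kubernetes-version="${version}" | awk -F '/' '{print $2}')
for imageName in ${images}; do
    # CoreDNS is k8s.gcr.io/coredns/coredns:<tag>, so $2 is just "coredns" (no tag); it is handled below
    [ "$imageName" = "coredns" ] && continue
    # containerd: use the k8s.io namespace, which is the one kubelet reads images from
    ctr -n k8s.io i pull registry.aliyuncs.com/google_containers/$imageName
    ctr -n k8s.io i tag registry.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName
    ctr -n k8s.io i rm registry.aliyuncs.com/google_containers/$imageName
done
# Replace the CoreDNS image; adjust the version to match `kubeadm config images list`
ctr -n k8s.io i pull registry.aliyuncs.com/google_containers/coredns:v1.8.6
ctr -n k8s.io i tag registry.aliyuncs.com/google_containers/coredns:v1.8.6 k8s.gcr.io/coredns/coredns:v1.8.6
ctr -n k8s.io i rm registry.aliyuncs.com/google_containers/coredns:v1.8.6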
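The loop above splits on `/` and keeps field 2, which for `k8s.gcr.io/coredns/coredns:v1.8.6` yields only `coredns` without a tag; that is why CoreDNS needs its own hard-coded lines. A minimal sketch of an alternative is to derive the mirror name from the last path segment, so the CoreDNS tag follows the Kubernetes version automatically. It assumes, as the script already does, that the Aliyun mirror stores every image (CoreDNS included) flat under `registry.aliyuncs.com/google_containers/`; the function names `mirror_ref` and `pull_via_mirror` are hypothetical.

```shell
# Hypothetical helpers (names are illustrative, not from any tool).
# Assumption: the mirror keeps every image flat under google_containers/,
# e.g. k8s.gcr.io/coredns/coredns:v1.8.6 -> .../google_containers/coredns:v1.8.6
MIRROR=registry.aliyuncs.com/google_containers

# Print the mirror reference for an upstream image reference ($1).
mirror_ref() {
    # ${1##*/} strips everything up to the last '/', keeping "name:tag"
    printf '%s/%s\n' "$MIRROR" "${1##*/}"
}

# Pull from the mirror, retag under the upstream name, drop the mirror tag.
# Works in containerd's k8s.io namespace; not called automatically here.
pull_via_mirror() {
    src=$(mirror_ref "$1")
    ctr -n k8s.io images pull "$src" &&
        ctr -n k8s.io images tag "$src" "$1" &&
        ctr -n k8s.io images rm "$src"
}
```

Usage: `for image in $(kubeadm config images list --kubernetes-version v1.24.1); do pull_via_mirror "$image"; done` would cover both the loop and the separate CoreDNS lines, at the cost of relying on the flat-mirror assumption above.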

1.2 Check the Kubernetes version and the required images

$ kubeadm config images list

k8s.gcr.io/kube-apiserver:v1.24.1

k8s.gcr.io/kube-controller-manager:v1.24.1

k8s.gcr.io/kube-scheduler:v1.24.1

k8s.gcr.io/kube-proxy:v1.24.1

k8s.gcr.io/pause:3.7

k8s.gcr.io/etcd:3.5.3-0

k8s.gcr.io/coredns/coredns:v1.8.6

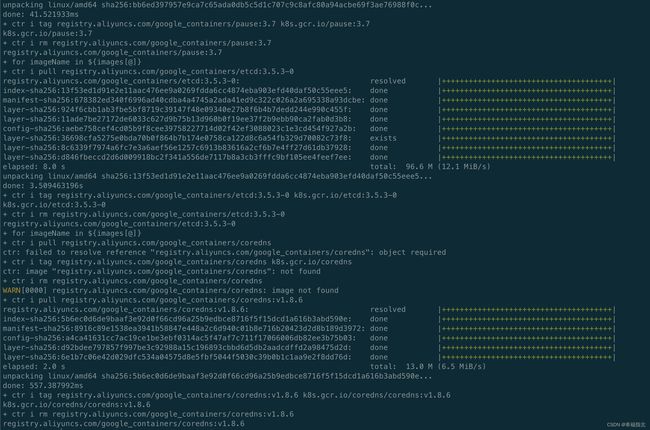
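The version passed to the script in the next step can be read off the `kube-apiserver` line of this list. A minimal sketch that extracts it from `kubeadm config images list` output on stdin; the function name `k8s_version` is hypothetical.

```shell
# Hypothetical helper: print the Kubernetes version (e.g. v1.24.1) found on
# the kube-apiserver line of `kubeadm config images list` output (stdin).
k8s_version() {
    # Split "k8s.gcr.io/kube-apiserver:v1.24.1" on ':' and print the tag
    awk -F ':' '/\/kube-apiserver:/ { print $NF; exit }'
}
```

Usage: `./images.sh "$(kubeadm config images list | k8s_version)"`.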

1.3 Run the script, passing the version shown above

$ ./images.sh v1.24.1
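kubelet only sees images stored in containerd's `k8s.io` namespace, so a quick check after running the script is to compare the expected list with what `ctr -n k8s.io images ls -q` reports. A minimal sketch; the function name `missing_images` is hypothetical and it takes the two lists as files.

```shell
# Hypothetical helper: print each image in file $1 (expected) that does not
# appear as an exact whole line in file $2 (present).
missing_images() {
    grep -Fxv -f "$2" "$1"
    # grep exits 1 when nothing is printed (nothing missing): treat 0 and 1
    # as success, and only a real grep error (2) as failure
    [ $? -le 1 ]
}
```

Usage: `kubeadm config images list --kubernetes-version v1.24.1 > /tmp/expected.txt`, then `ctr -n k8s.io images ls -q > /tmp/present.txt`, then `missing_images /tmp/expected.txt /tmp/present.txt`. Empty output means every image is in place.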

2 Install the cluster

2.1 Initialize the master node

Run the following commands on the master machine:

$ sysctl -w net.ipv4.ip_forward=1  # enable IPv4 forwarding first; otherwise kubeadm's preflight check fails in the next step

$ kubeadm init \
    --node-name=k8smaster \
    --apiserver-advertise-address=192.168.56.3 \
    --image-repository registry.aliyuncs.com/google_containers \
    --kubernetes-version v1.24.1 \
    --service-cidr=10.96.0.0/12 \
    --pod-network-cidr=10.244.0.0/16 --v=5

Notes:

--apiserver-advertise-address: the IP address of your master node.
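The same settings can also be kept in a kubeadm configuration file, which is easier to review and reuse. A minimal sketch, assuming the `kubeadm.k8s.io/v1beta3` config API (used by kubeadm from v1.22, so it covers v1.24.1); the generator function `kubeadm_config` is hypothetical and the values mirror the command above.

```shell
# Hypothetical generator: print a kubeadm v1beta3 config equivalent to the
# `kubeadm init` flags above. Args: $1 advertise address, $2 node name,
# $3 Kubernetes version.
kubeadm_config() {
    cat <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: $1        # --apiserver-advertise-address
nodeRegistration:
  name: $2                    # --node-name
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: $3         # --kubernetes-version
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/12   # --service-cidr
  podSubnet: 10.244.0.0/16      # --pod-network-cidr
EOF
}
```

Usage: `kubeadm_config 192.168.56.3 k8smaster v1.24.1 > kubeadm.yaml && kubeadm init --config kubeadm.yaml --v=5`. kubeadm refuses to mix `--config` with most of the individual flags, so use the file instead of them, not together with them.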

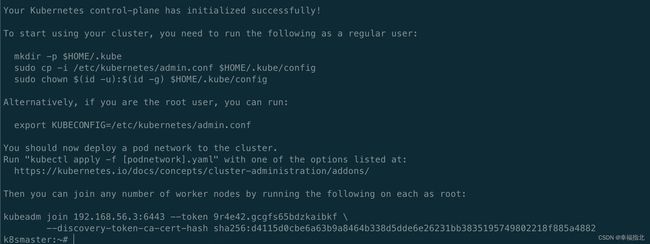

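If you see output like the screenshot above, the master node was initialized successfully.

Be sure to save the join command at the end of that output; the worker nodes use it to join the cluster: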

kubeadm join 192.168.56.3:6443 --token 9r4e42.gcgfs65bdzkaibkf \
    --discovery-token-ca-cert-hash sha256:d4115d0cbe6a63b9a8464b338d5dde6e26231bb3835195749802218f885a4882
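The bootstrap token in this command expires (after 24 hours by default), so a saved command may stop working; running `kubeadm token create --print-join-command` on the master prints a fresh one. Before pasting a saved command on a worker, a minimal sketch can check that the token and CA hash at least have the expected shapes: token `[a-z0-9]{6}.[a-z0-9]{16}`, hash `sha256:` followed by 64 hex digits. The function name `check_join_command` is hypothetical, and a passing check says nothing about whether the token is still valid.

```shell
# Hypothetical check for a saved `kubeadm join` command passed as one string
# ($1). Only validates the format of the token and CA hash, not their validity.
check_join_command() {
    token=$(printf '%s\n' "$1" | sed -n 's/.*--token \([^ ]*\).*/\1/p')
    hash=$(printf '%s\n' "$1" | sed -n 's/.*--discovery-token-ca-cert-hash \([^ ]*\).*/\1/p')
    printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' || return 1
    printf '%s\n' "$hash" | grep -Eq '^sha256:[0-9a-f]{64}$'
}
```

Usage: `check_join_command "$(cat join.txt)" && echo format ok`, where `join.txt` is a hypothetical file holding the saved command.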

2.2 Join the worker nodes to the cluster

Run the following commands on each of the two worker nodes:

$ sysctl -w net.ipv4.ip_forward=1  # enable IPv4 forwarding first; otherwise the kubeadm join preflight check fails

$ kubeadm join 192.168.56.3:6443 --token 9r4e42.gcgfs65bdzkaibkf \
    --discovery-token-ca-cert-hash sha256:d4115d0cbe6a63b9a8464b338d5dde6e26231bb3835195749802218f885a4882
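`sysctl -w` only changes the running kernel, so IPv4 forwarding is off again after a reboot. A minimal sketch that persists the setting in a drop-in file under `/etc/sysctl.d`, which is typically read at boot (by the OpenRC `sysctl` service on Alpine, or `systemd-sysctl` elsewhere); the function name `persist_ip_forward` and the file name `k8s.conf` are assumptions.

```shell
# Hypothetical helper: persist net.ipv4.ip_forward=1 in a sysctl drop-in.
# $1 = target directory (defaults to /etc/sysctl.d); "k8s.conf" is an
# arbitrary file name chosen for this sketch.
persist_ip_forward() {
    dir=${1:-/etc/sysctl.d}
    mkdir -p "$dir" &&
        printf 'net.ipv4.ip_forward = 1\n' > "$dir/k8s.conf"
}
```

Usage, as root on every node: `persist_ip_forward && sysctl -p /etc/sysctl.d/k8s.conf`.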

2.3 Check the nodes

$ kubectl get nodes -A

The connection to the server localhost:8080 was refused - did you specify the right host or port?

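To fix the error above: kubectl has no kubeconfig to read, so it falls back to localhost:8080. On the master, point KUBECONFIG at the admin kubeconfig generated by kubeadm: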

$ echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

$ source /etc/profile

$ kubectl get nodes -A

NAME        STATUS     ROLES           AGE    VERSION
k8smaster   NotReady   control-plane   5m2s   v1.24.0
k8snode1    NotReady   <none>          104s   v1.24.0
k8snode2    NotReady   <none>          88s    v1.24.0

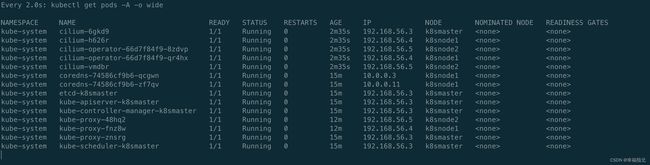
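Exporting KUBECONFIG in `/etc/profile` works for root on the master. For a regular user, the output of `kubeadm init` itself suggests copying `admin.conf` to `~/.kube/config` and handing it to that user. A minimal sketch wrapping those steps; the function name `install_kubeconfig` is hypothetical, and the paths and owner are parameters so it can be tested.

```shell
# Hypothetical wrapper around the "regular user" steps printed by kubeadm init.
# $1 = source kubeconfig, $2 = target home directory, $3 = owner (user:group).
install_kubeconfig() {
    src=$1
    home=$2
    owner=$3
    mkdir -p "$home/.kube" &&
        cp "$src" "$home/.kube/config" &&
        chown -R "$owner" "$home/.kube" &&   # kubectl also writes a cache here
        chmod 600 "$home/.kube/config"       # the file holds admin credentials
}
```

Usage, as root on the master: `install_kubeconfig /etc/kubernetes/admin.conf /home/alice alice:alice`, where `alice` is a hypothetical user.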

2.4 Check the pods

$ watch kubectl get pods -A -o wide

kubectl get pods -A -o wide 2022-06-04 15:25:58

NAMESPACE     NAME                                READY   STATUS    RESTARTS   AGE
kube-system   coredns-74586cf9b6-qcgwn            0/1     Pending   0          5m49s
kube-system   coredns-74586cf9b6-zf7qv            0/1     Pending   0          5m49s
kube-system   etcd-k8smaster                      1/1     Running   0          6m4s
kube-system   kube-apiserver-k8smaster            1/1     Running   0          6m3s
kube-system   kube-controller-manager-k8smaster   1/1     Running   0          6m3s
kube-system   kube-proxy-48hq2                    1/1     Running   0          2m33s
kube-system   kube-proxy-fnz8w                    1/1     Running   0          2m49s
kube-system   kube-proxy-znsrg                    1/1     Running   0          5m50s
kube-system   kube-scheduler-k8smaster            1/1     Running   0          6m3s

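Note: the coredns pods are Pending because no network plugin (CNI) has been installed in the cluster yet, which is also why all nodes show NotReady. Installing the network plugin is the next step.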
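Instead of eyeballing the `watch` output, a minimal sketch can list the pods whose STATUS is not Running, reading `kubectl get pods -A --no-headers` output on stdin (with `-A`, STATUS is the 4th column). At this point it should print the two coredns pods. The function name `not_running_pods` is hypothetical; pods of finished Jobs (status Completed) would also be listed.

```shell
# Hypothetical helper: print namespace/name of every pod whose STATUS column
# is not "Running". Input: `kubectl get pods -A --no-headers` (STATUS = $4).
not_running_pods() {
    awk '$4 != "Running" { print $1 "/" $2 }'
}
```

Usage: `kubectl get pods -A --no-headers | not_running_pods`.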

3 Install the Kubernetes network plugin

Here I chose Cilium as the network plugin.

3.1 Install Cilium

Official guide: https://docs.cilium.io/en/v1.11/gettingstarted/k8s-install-helm/

Install it with Helm (Helm was already installed by the setup script in the previous part):

$ helm repo add cilium https://helm.cilium.io/

"cilium" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "cilium" chart repository

Update Complete. ⎈Happy Helming!⎈

$ helm install cilium cilium/cilium --version 1.11.5 \
    --namespace kube-system

Once every pod has changed to Running, the installation has succeeded:

$ watch kubectl get pods -A -o wide
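With Cilium running, the nodes should also move from NotReady to Ready. `kubectl wait --for=condition=Ready node --all --timeout=300s` blocks until that happens (the 300s timeout is an arbitrary choice). For checking captured output in a script, a minimal sketch counts the nodes whose STATUS is not exactly Ready; the function name `not_ready_count` is hypothetical.

```shell
# Hypothetical helper: count nodes whose STATUS column (2nd) is not exactly
# "Ready". Input: `kubectl get nodes --no-headers`. Note that a cordoned node
# ("Ready,SchedulingDisabled") is also counted.
not_ready_count() {
    awk '$2 != "Ready" { n++ } END { print n + 0 }'
}
```

Usage: `kubectl get nodes --no-headers | not_ready_count` should print 0 once the cluster is healthy.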

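Next part

Next, we move on to the next part:

Local k8s Cluster Setup, a Step-by-Step Tutorial (4): Installing the k8s Cluster Dashboard

Previous part

Local k8s Cluster Setup, a Step-by-Step Tutorial (2): Installing Alpine

Closing

That's the end of this tutorial. Thanks for reading!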