Handwritten Digit Recognition with a Fully Connected Neural Network on an SoC FPGA

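- I. Fully Connected Neural Network (DNN)

- 1. Network Structure

- 2. Training a Neural Network

- 3. Backpropagation (BP)

- 4. DropOut

- II. Getting the Dataset with Python

- 1. Downloading the Dataset

- 2. Inspecting the Dataset

- 3. Converting Images to Arrays

- III. Fully Connected Inference in C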

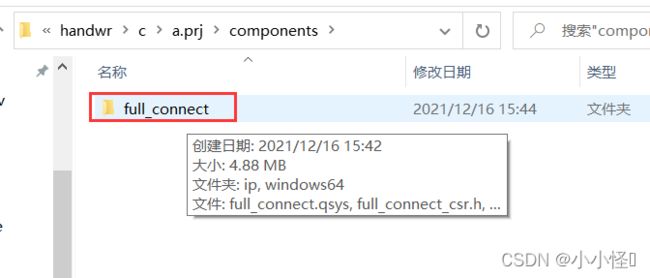

- IV. Generating the IP with HLS

- V. Integrating the IP

- VI. Address Mapping

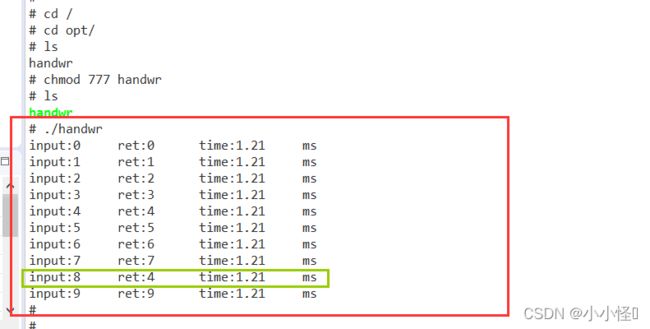

- 1. Address Mapping

- 2. Recognition Results

- VII. Summary and References

- 1. Summary

- 2. References

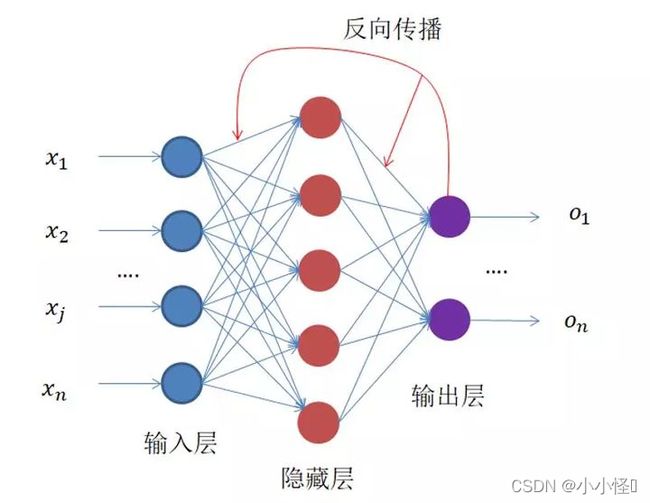

I. Fully Connected Neural Network (DNN)

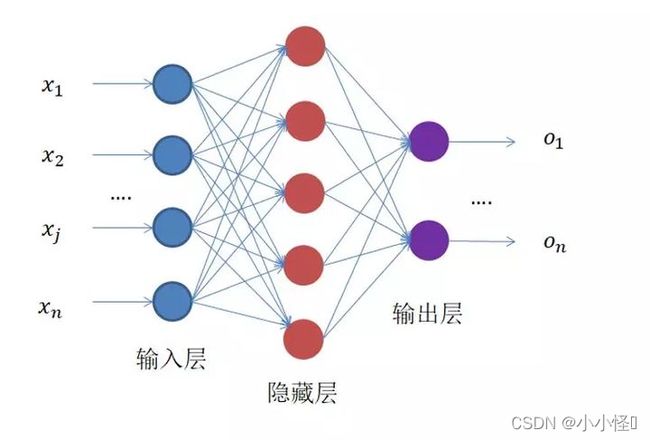

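1. Network Structure

- The structure of a DNN is not fixed. A neural network generally consists of an input layer, hidden layers, and an output layer: a DNN has exactly one input layer and one output layer, and every layer between them is a hidden layer. Each layer contains some number of neurons. Neurons in adjacent layers are connected, neurons within the same layer are not, and every neuron in a layer is connected to all neurons in the previous layer (hence "fully connected").

- A network with many hidden layers (more than 2) is called a deep neural network (the layer count of a DNN does not include the input layer). Deep networks are more expressive than shallow ones. A network with only one hidden layer can in principle approximate any continuous function, but it may need an enormous number of neurons to do so.

- Pros: because a DNN can approximate almost any function, its nonlinear fitting ability is very strong. A deep, narrow network is often more resource-efficient than a shallow, wide one.

- Cons: DNNs are not easy to train. A deep network needs a lot of data and many training tricks before it performs well.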

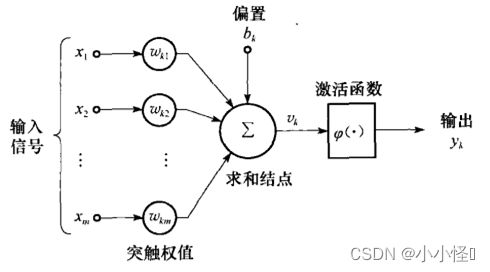

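- Input: a perceptron accepts multiple inputs $x_1, x_2, \dots, x_n$, with $x_i \in \mathbb{R}$

- Weights: each input has a weight $w_i \in \mathbb{R}$

- Bias: $b \in \mathbb{R}$, shown as $w_0$ in the figure above

- Activation function: also called a nonlinear unit; it gives the network its ability to model nonlinear relationships

- Output: $y = f\left(\sum_{i=1}^{n} w_i x_i + b\right)$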
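The perceptron described above computes a weighted sum plus a bias and passes it through an activation function. Here is a minimal Python sketch, assuming the sigmoid activation used in the training section; `perceptron` and `sigmoid` are illustrative names, not code from the original project.

```python
import math

def sigmoid(z):
    """Logistic activation f(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def perceptron(x, w, b, f=sigmoid):
    """Single neuron: y = f(sum_i w_i * x_i + b)."""
    if len(x) != len(w):
        raise ValueError("need exactly one weight per input")
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return f(z)

# Weighted sum 0.5*1.0 + (-0.25)*2.0 + 0.0 = 0.0, and sigmoid(0) = 0.5
print(perceptron([1.0, 2.0], [0.5, -0.25], 0.0))  # 0.5
```

Passing a different `f` (for example `lambda z: z`) turns the same neuron into a plain linear unit.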

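2. Training a Neural Network

What makes a neural network complicated is its structure: it contains a very large number of neurons.

Consider a network with only two layers and input x. Both the hidden layer h and the output layer o compute $z = wx + b$ followed by the sigmoid $f(z) = \frac{1}{1+e^{-z}}$. Once a training sample x and its label y are provided, the model can start training, and the problem becomes finding four parameters: w and b of the hidden layer and w and b of the output layer.

The goal of training is for the network's output to "match" the real data. Until it does, there is some difference between the model's output and the true label. Call this difference e (for error), so that the model output plus the error equals the true label. For a single linear unit this reads $y = wx + b + e$.

With n pairs of x and y there are n errors e. Adding them up gives a measure of the total error; to keep positive and negative errors from canceling out, each one is squared or its absolute value is taken, and this article uses squares. Each e is called a residual: the difference between the model's output and the true value. The resulting loss function, Loss, is also called the cost function, Cost: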

$$Loss = \sum_{i=1}^{n} e_i^2 = \sum_{i=1}^{n} \left(y_i - (w x_i + b)\right)^2$$
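To make the sum of squared residuals concrete, here is a small Python sketch for a single linear unit $y \approx wx + b$. The three data points are made up for illustration and lie exactly on $y = 2x + 1$.

```python
def squared_loss(w, b, xs, ys):
    """Loss = sum_i (y_i - (w * x_i + b))^2 over all n samples."""
    return sum((y - (w * x + b)) ** 2 for x, y in zip(xs, ys))

xs = [0.0, 1.0, 2.0]
ys = [1.0, 3.0, 5.0]  # generated by y = 2x + 1

print(squared_loss(2.0, 1.0, xs, ys))  # 0.0: the line fits every point
print(squared_loss(1.0, 1.0, xs, ys))  # residuals 0, 1, 2 -> 0 + 1 + 4 = 5.0
```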

The task now is to find w and b that make Loss as small as possible: the smaller the loss, the better the trained model fits the training data.

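3. Backpropagation (BP)

The BP algorithm consists of three main steps:

- Forward pass: compute the output value of every neuron;

- Backward pass: compute the error term e of every neuron;

- Update: iteratively adjust the weights w and biases b with stochastic gradient descent.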
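The three BP steps can be sketched in Python for the two-layer sigmoid network from the training section ($h = f(w_1 x + b_1)$, $o = f(w_2 h + b_2)$, four parameters). This is an illustrative sketch, not the author's code: it assumes a per-sample loss $(y - o)^2$ and takes each neuron's error term to be the derivative of the loss with respect to that neuron's input $z$.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(params, x):
    """Forward pass of the two-layer network: o = f(w2 * f(w1 * x + b1) + b2)."""
    w1, b1, w2, b2 = params
    return sigmoid(w2 * sigmoid(w1 * x + b1) + b2)

def train_step(params, x, y, lr):
    """One BP step on one sample; returns (new_params, loss before the step)."""
    w1, b1, w2, b2 = params
    # 1) Forward: output of every neuron
    h = sigmoid(w1 * x + b1)
    o = sigmoid(w2 * h + b2)
    # 2) Backward: error term dLoss/dz of every neuron, for Loss = (y - o)^2
    delta_o = -2.0 * (y - o) * o * (1.0 - o)
    delta_h = delta_o * w2 * h * (1.0 - h)  # uses w2 before it is updated
    # 3) Update: move each w and b against its gradient
    new_params = (w1 - lr * delta_h * x, b1 - lr * delta_h,
                  w2 - lr * delta_o * h, b2 - lr * delta_o)
    return new_params, (y - o) ** 2

def train(samples, epochs=2000, lr=0.5, seed=0):
    """Stochastic gradient descent: one update per sample, samples in random order."""
    rng = random.Random(seed)
    samples = list(samples)
    params = tuple(rng.uniform(-1.0, 1.0) for _ in range(4))
    for _ in range(epochs):
        rng.shuffle(samples)
        for x, y in samples:
            params, _ = train_step(params, x, y, lr)
    return params

# Toy task: output near 1 for positive x and near 0 for negative x
params = train([(-2.0, 0.0), (-1.0, 0.0), (1.0, 1.0), (2.0, 1.0)])
print([round(predict(params, x), 3) for x in (-2.0, -1.0, 1.0, 2.0)])
```

Comparing these analytic gradients with finite differences of the loss is a quick way to confirm the backward pass is correct.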

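Graphical interpretation (backpropagation)

Expanding the loss function gives:

$$
\begin{aligned}
Loss &= \sum_{i=1}^{n}\left(x_i^2 w^2 + b^2 + 2x_i w b - 2y_i b - 2x_i y_i w + y_i^2\right) \\
&= A w^2 + B b^2 + C w b + D b + E w + F
\end{aligned}
$$

where $A = \sum_{i=1}^{n} x_i^2$, $B = n$, $C = 2\sum_{i=1}^{n} x_i$, $D = -2\sum_{i=1}^{n} y_i$, $E = -2\sum_{i=1}^{n} x_i y_i$ and $F = \sum_{i=1}^{n} y_i^2$. Loss is therefore a quadratic, bowl-shaped surface over $w$ and $b$.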
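A quick numeric check of this expansion, on made-up data: the coefficients $A$ to $F$ are computed from the samples, and the quadratic form must agree with the direct sum of squared residuals for any $w$ and $b$. Differentiating the quadratic form also gives the gradient, $\partial Loss/\partial w = 2Aw + Cb + E$ and $\partial Loss/\partial b = 2Bb + Cw + D$.

```python
def coefficients(xs, ys):
    """A..F such that Loss(w, b) = A w^2 + B b^2 + C w b + D b + E w + F."""
    A = sum(x * x for x in xs)
    B = len(xs)
    C = 2 * sum(xs)
    D = -2 * sum(ys)
    E = -2 * sum(x * y for x, y in zip(xs, ys))
    F = sum(y * y for y in ys)
    return A, B, C, D, E, F

def loss_direct(w, b, xs, ys):
    return sum((y - (w * x + b)) ** 2 for x, y in zip(xs, ys))

def loss_quadratic(w, b, coef):
    A, B, C, D, E, F = coef
    return A * w * w + B * b * b + C * w * b + D * b + E * w + F

def gradient(w, b, coef):
    """(dLoss/dw, dLoss/db) obtained by differentiating the quadratic form."""
    A, B, C, D, E, F = coef
    return 2 * A * w + C * b + E, 2 * B * b + C * w + D

xs, ys = [0.0, 1.0, 2.0], [1.0, 3.0, 5.0]
coef = coefficients(xs, ys)
for w, b in [(0.0, 0.0), (1.5, -0.5), (2.0, 1.0)]:
    print(w, b, loss_direct(w, b, xs, ys), loss_quadratic(w, b, coef))
```

For this data the gradient vanishes at $(w, b) = (2, 1)$, the bottom of the bowl.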

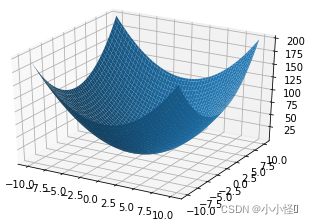

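Initialize $w_0$ and $b_0$ and substitute them into Loss; the point $(w_0, b_0, Loss_0)$ lands somewhere on the surface shown in the figure. The goal is to reach the lowest point.

$$x_{n+1} = x_n - \eta \left.\frac{df(x)}{dx}\right|_{x = x_n}$$

This is the gradient descent update rule, where $\frac{df(x)}{dx}$ is the gradient and $\eta$ is the learning rate, i.e. the step size of each move. A large $\eta$ takes big steps and a small $\eta$ takes small ones. $\eta$ has to be chosen well: too large a step can jump past the minimum, and too small a step makes convergence slow.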
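To see the update rule and the effect of $\eta$ in action, here is a minimal sketch that applies it to the two-parameter loss above, using the analytic partial derivatives $\partial Loss/\partial w = -2\sum_i x_i\,(y_i - (w x_i + b))$ and $\partial Loss/\partial b = -2\sum_i (y_i - (w x_i + b))$. Note that it uses all samples in every step (batch gradient descent); the stochastic variant would update after each individual sample. The data and learning rates are illustrative.

```python
def gradient_descent(xs, ys, lr, steps, w0=0.0, b0=0.0):
    """Minimize Loss = sum (y - (w x + b))^2 via w <- w - lr * dLoss/dw (likewise b)."""
    w, b = w0, b0
    for _ in range(steps):
        residuals = [y - (w * x + b) for x, y in zip(xs, ys)]
        grad_w = -2.0 * sum(r * x for r, x in zip(residuals, xs))
        grad_b = -2.0 * sum(residuals)
        # Both parameters move against their gradient, scaled by the learning rate
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

xs, ys = [0.0, 1.0, 2.0], [1.0, 3.0, 5.0]  # generated by y = 2x + 1

print(gradient_descent(xs, ys, lr=0.05, steps=500))  # close to (2.0, 1.0)
print(gradient_descent(xs, ys, lr=0.5, steps=20))    # step too large: diverges
```

With $\eta = 0.05$ the iterates settle at the minimum $(2, 1)$; with $\eta = 0.5$ every step overshoots by more than it corrects, and the parameters blow up.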

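4. DropOut

- DropOut is a widely used technique in deep learning, mainly for countering overfitting. A fully connected network has a very high VC dimension, which gives it a strong capacity to memorize, to the point of memorizing irrelevant details. As a result the network ends up with too many, and too large, parameters, and the trained model is prone to overfitting.

- DropOut temporarily switches off some of the network's nodes during a training round, which amounts to removing them. In the figure below, the removed neurons are drawn as dashed circles with dashed connections; in principle the neurons to remove are chosen at random.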
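A minimal Python sketch of the idea, assuming the common "inverted dropout" variant (not described in the source): during training each activation is zeroed with probability `p` and the survivors are scaled by $1/(1-p)$, so the layer can be used unchanged at inference time. The function and parameter names are illustrative.

```python
import random

def dropout(activations, p, training=True, rng=random):
    """Zero each activation with probability p during training (inverted dropout)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)  # inference: every neuron is kept as-is
    keep = 1.0 - p
    # Each neuron is switched off independently; survivors are rescaled so the
    # expected value of every output equals its input
    return [a / keep if rng.random() >= p else 0.0 for a in activations]

rng = random.Random(42)
layer = [0.2, 0.5, 0.9, 0.1, 0.7, 0.3]
print(dropout(layer, p=0.5, rng=rng))         # roughly half the entries become 0.0
print(dropout(layer, p=0.5, training=False))  # unchanged at inference time
```

A new random mask is drawn on every call, so each training round effectively trains a different thinned sub-network.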

II. Getting the Dataset with Python

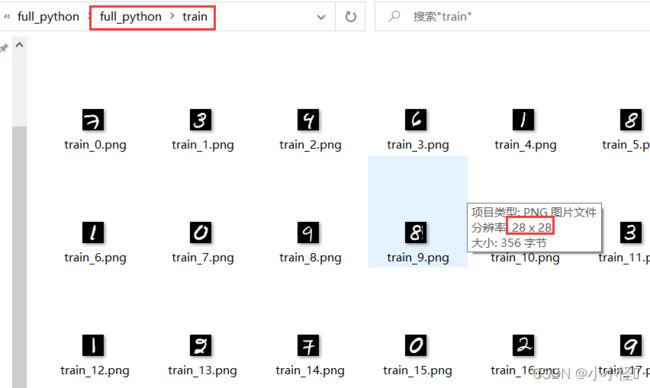

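1. Downloading the Dataset

The following Python script creates the output folders and writes the downloaded dataset into them as PNG images:

```python
# TensorFlow 1.x only: the examples.tutorials.mnist module is not shipped with TensorFlow 2.x
from tensorflow_core.examples.tutorials.mnist import input_data
from PIL import Image  # replaces scipy.misc.imsave, which was removed in SciPy 1.2
import numpy as np
import os

# Download MNIST into ./data; each image is 784 float values in [0, 1]
mnist = input_data.read_data_sets('data', one_hot=True)

# Create train/ (training set) and test/ (test set) for the extracted images
for folder in ('train', 'test'):
    if not os.path.exists(folder):
        os.mkdir(folder)

def save_png(path, img):
    """Reshape one 784-value image to 28x28 and save it as an 8-bit grayscale PNG."""
    img_arr = np.reshape(img, [28, 28])
    Image.fromarray((img_arr * 255).round().astype(np.uint8)).save(path)

# Extract the training images into train/
for idx, img in enumerate(mnist.train.images):
    save_png('train/train_' + str(idx) + '.png', img)

# Extract the test images into test/
for idx, img in enumerate(mnist.test.images):
    save_png('test/test_' + str(idx) + '.png', img)
```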

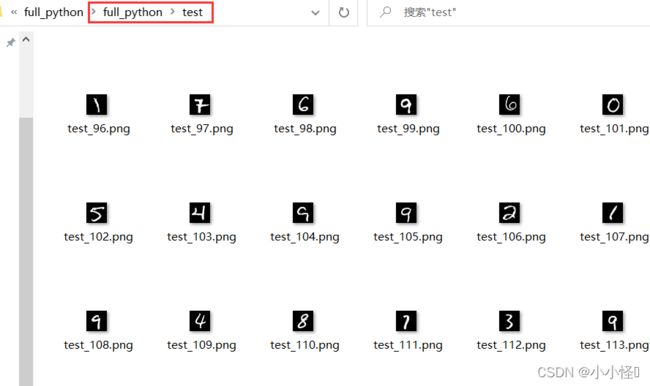

2. Inspecting the Dataset

3. Converting Images to Arrays

Convert each image into an array and write it out as a C header:

```python
from PIL import Image
import numpy as np

def pre_dic(pic_path):
    """Load an image and return a 1x784 float32 array with values in [0, 1]."""
    img = Image.open(pic_path)
    # Image.ANTIALIAS was removed in Pillow 10; Image.LANCZOS is the same filter
    re_im = img.resize((28, 28), Image.LANCZOS)
    # Convert to 8-bit grayscale ('L') and flatten row by row into 784 values
    img_array = np.array(re_im.convert('L'))
    nm_array = img_array.reshape([1, 784]).astype(np.float32)
    # Scale pixel values from 0-255 to 0-1
    img_ready = np.multiply(nm_array, 1.0 / 255.0)
    return img_ready

if __name__ == '__main__':
    pic_name = ["%d.png" % i for i in range(10)]         # 0.png ... 9.png
    save_pic_name = ["input_%d" % i for i in range(10)]  # input_0 ... input_9

    for i in range(10):
        array = pre_dic(pic_name[i])
        # Write e.g. input_5.h containing: float input_5[784]={...};
        with open(save_pic_name[i] + '.h', 'w') as f:
            values = str(array.tolist()).replace('[', '').replace(']', '')
            f.write("float " + save_pic_name[i] + "[784]={" + values + "};")
```
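Where NumPy or Pillow is not available, the flatten, normalize, and emit-a-C-array steps can be sketched with the standard library alone. This is an illustrative alternative, not part of the original project: it assumes the image has already been loaded as a 28×28 grid of 0 to 255 gray values, `image_to_c_header` is a hypothetical helper, and values are computed in double precision, so digits beyond float32 precision differ slightly from what `pre_dic` produces.

```python
def image_to_c_header(name, pixels):
    """Return 'float <name>[784]={...};' built from a 28x28 grid of 0-255 gray values."""
    if len(pixels) != 28 or any(len(row) != 28 for row in pixels):
        raise ValueError("expected a 28x28 image")
    # Row-major flattening: pixel (r, c) lands at index r * 28 + c,
    # the same order as reshape([1, 784]) in pre_dic
    flat = [value / 255.0 for row in pixels for value in row]
    body = ", ".join(repr(v) for v in flat)
    return "float %s[%d]={%s};" % (name, len(flat), body)

# Blank image with one white pixel at row 1, column 2 (flat index 1 * 28 + 2 = 30)
img = [[0] * 28 for _ in range(28)]
img[1][2] = 255
header = image_to_c_header("input_demo", img)
print(header[:60])
```

Writing `header` to a `.h` file gives the same kind of declaration as the example below.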

转换结果【举一例】

float input_5[784]={0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.250980406999588, 0.250980406999588, 1.0, 0.7176470756530762, 0.9921569228172302, 0.5490196347236633, 0.4745098352432251, 0.4745098352432251, 0.14901961386203766, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.35686275362968445, 0.8313726186752319, 0.9411765336990356, 0.9411765336990356, 0.9411765336990356, 0.9647059440612793, 0.9647059440612793, 0.9921569228172302, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.30980393290519714, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.5215686559677124, 0.9725490808486938, 0.988235354423523, 0.7921569347381592, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.9921569228172302, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.7764706611633301, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.8862745761871338, 0.988235354423523, 0.988235354423523, 0.6313725709915161, 0.9372549653053284, 0.6745098233222961, 0.6745098233222961, 0.40000003576278687, 0.43137258291244507, 0.15294118225574493, 0.15294118225574493, 0.15294118225574493, 0.6470588445663452, 0.988235354423523, 
0.9647059440612793, 0.30588236451148987, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.8862745761871338, 0.988235354423523, 0.988235354423523, 0.4078431725502014, 0.1725490242242813, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.05490196496248245, 0.20784315466880798, 0.20784315466880798, 0.0784313753247261, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.501960813999176, 0.988235354423523, 0.988235354423523, 0.25882354378700256, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.3686274588108063, 0.988235354423523, 0.988235354423523, 0.3764706254005432, 0.16078431904315948, 0.16078431904315948, 0.07450980693101883, 0.07450980693101883, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.13725490868091583, 0.8352941870689392, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.7960785031318665, 0.7960785031318665, 0.6313725709915161, 0.4078431725502014, 0.06666667014360428, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.3176470696926117, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.9921569228172302, 0.988235354423523, 0.8352941870689392, 0.4117647409439087, 0.03921568766236305, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.3176470696926117, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.9921569228172302, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.7254902124404907, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.1411764770746231, 0.6196078658103943, 0.917647123336792, 0.572549045085907, 0.7176470756530762, 0.7725490927696228, 0.7490196228027344, 0.9921569228172302, 0.9921569228172302, 0.9921569228172302, 0.9921569228172302, 
0.5058823823928833, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.011764707043766975, 0.04313725605607033, 0.007843137718737125, 0.02352941408753395, 0.027450982481241226, 0.02352941408753395, 0.545098066329956, 0.9450981020927429, 0.988235354423523, 0.988235354423523, 0.9764706492424011, 0.2980392277240753, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.09019608050584793, 0.8509804606437683, 0.988235354423523, 0.988235354423523, 0.501960813999176, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.8352941870689392, 0.988235354423523, 0.988235354423523, 0.9647059440612793, 0.30588236451148987, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.43137258291244507, 0.9568628072738647, 0.988235354423523, 0.988235354423523, 0.8627451658248901, 0.0784313753247261, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.05882353335618973, 0.05490196496248245, 0.0, 0.0, 0.125490203499794, 0.21176472306251526, 0.7333333492279053, 0.729411780834198, 0.960784375667572, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.8313726186752319, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.6196078658103943, 0.7568628191947937, 0.6784313917160034, 0.6784313917160034, 0.8588235974311829, 0.988235354423523, 0.9921569228172302, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.9529412388801575, 0.4235294461250305, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.4274510145187378, 0.960784375667572, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.9921569228172302, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.960784375667572, 0.4235294461250305, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 
0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.6941176652908325, 0.9450981020927429, 0.988235354423523, 0.988235354423523, 0.988235354423523, 0.9921569228172302, 0.9647059440612793, 0.9333333969116211, 0.9333333969116211, 0.41568630933761597, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.07450980693101883, 0.5176470875740051, 0.988235354423523, 0.988235354423523, 0.4705882668495178, 0.2705882489681244, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0};

III. Fully Connected Inference in C

The inference of the fully connected network is implemented in C.
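The header comment of the C source gives the network shape: 784 inputs, a 64-unit hidden layer (784×64 weights, 64 biases), a 10-unit output layer (64×10 weights, 10 biases), and a final search for the largest output, whose index is the recognized digit. That computation can be sketched in Python as follows. The details are assumptions not confirmed by the source: weights are stored input-major (`w[i][j]` connects input `i` to output `j`), the hidden layer uses ReLU, and no softmax is applied before the argmax (softmax would not change which output is largest). The random weights in the usage lines only demonstrate the shapes; they are not trained values.

```python
import random

def dense(inputs, weights, biases):
    """out[j] = biases[j] + sum_i inputs[i] * weights[i][j] (weights: n_in x n_out)."""
    if len(inputs) != len(weights):
        raise ValueError("one weight row per input is required")
    out = list(biases)
    for x, row in zip(inputs, weights):
        for j, w in enumerate(row):
            out[j] += x * w
    return out

def relu(values):
    return [v if v > 0.0 else 0.0 for v in values]

def argmax(values):
    """Index of the largest value; on ties the first index wins."""
    best = 0
    for k in range(1, len(values)):
        if values[k] > values[best]:
            best = k
    return best

def infer(image, w1, b1, w2, b2):
    """784 -> 64 (ReLU) -> 10, then return the digit with the largest score."""
    hidden = relu(dense(image, w1, b1))
    scores = dense(hidden, w2, b2)
    return argmax(scores)

rng = random.Random(0)
w1 = [[rng.uniform(-0.1, 0.1) for _ in range(64)] for _ in range(784)]
b1 = [0.0] * 64
w2 = [[rng.uniform(-0.1, 0.1) for _ in range(10)] for _ in range(64)]
b2 = [0.0] * 10
image = [rng.random() for _ in range(784)]
print(infer(image, w1, b1, w2, b2))  # some digit 0-9 (weights are untrained)
```

The nested multiply-accumulate loop in `dense` is the part that maps naturally onto hardware, which is why the same structure is what gets turned into an HLS component.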

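```c
// Image input: 28x28 = 784 pixels

/******************** Inference function ********************
Layer 1: weights 784*64, biases 64
Layer 2: weights 64*10, biases 10
Output: index of the largest value, i.e. the digit 0-9
*************************************************************/

#include
```

IV. Generating the IP with HLS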

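Declare the fully connected C inference function as a slave component (avalon_slave):

```c
// Image input: 28x28 = 784 pixels

/******************** Inference function ********************
Layer 1: weights 784*64, biases 64
Layer 2: weights 64*10, biases 10
Output: index of the largest value, i.e. the digit 0-9
*************************************************************/

#include
```

Generating the IP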

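```bat
REM Initialize the HLS environment
init_hls.bat
REM Compile the program for x86-64 (software emulation)
i++ -march=x86-64 handwr.cpp.cpp
REM Hardware compile for Cyclone V, generating the HDL for the IP
i++ -march=CycloneV handwr.cpp.cpp
```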

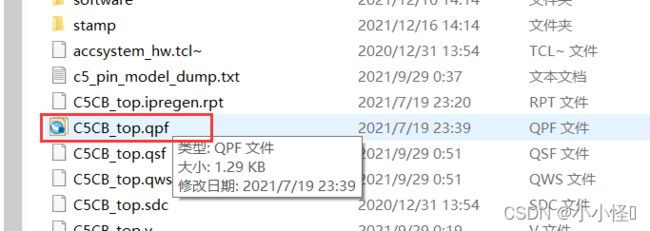

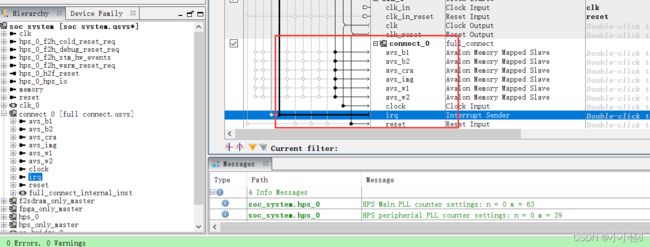

V. Integrating the IP

Add the generated IP to the system in Platform Designer:

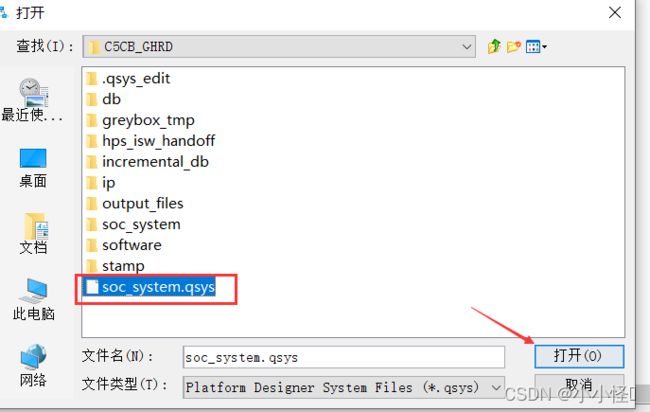

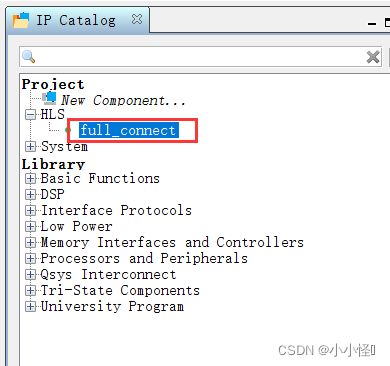

In the hls directory, locate the IP and double-click it to open it.

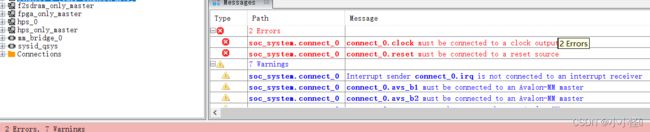

Click Finish. Errors appear as shown, because the component is not connected yet.

Make the connections, then reassign the base addresses.

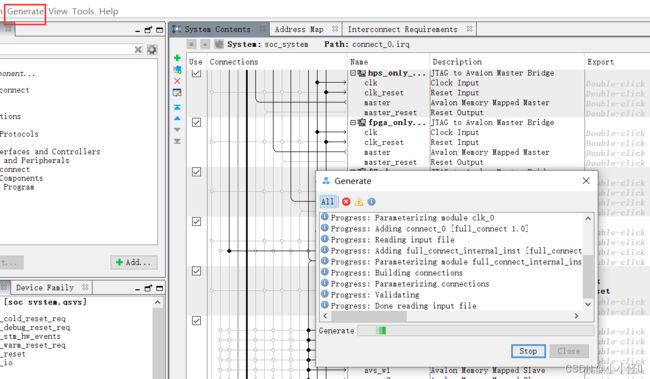

When the configuration is complete, click Generate HDL…

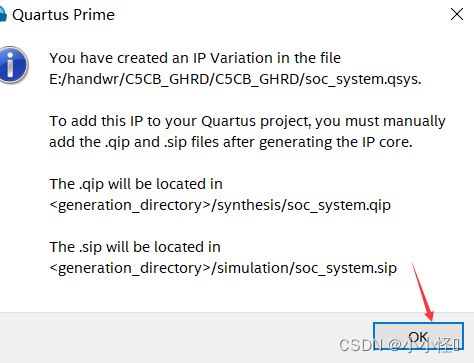

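Quartus may freeze for a while. If the dialog shown appears, the configuration succeeded; if it does not appear, it failed.

Run a full compilation of the project.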

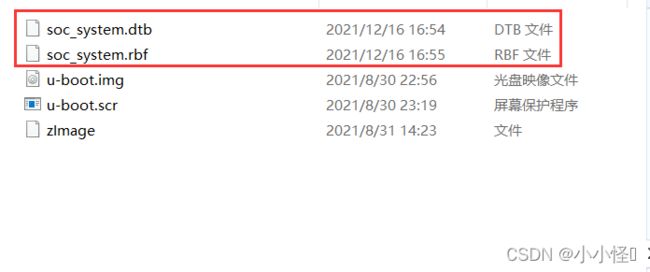

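Generate the device tree, the .rbf file, and the header file.

Replace the .dtb and .rbf files in the image.

Next, use Eclipse to set up the address mapping.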
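The address mapping in section VI is written in C in Eclipse. The usual pattern on the HPS is to open `/dev/mem`, `mmap` a window of physical address space, and reach the IP at the lightweight HPS-to-FPGA bridge base plus the base address assigned in Platform Designer. Below is a hedged Python sketch of that arithmetic and of 32-bit register access through a shared mapping. The constants are values commonly seen in Intel's Cyclone V SoC examples (an assumption: check them against your own generated headers), and `lw_offset` and `RegisterWindow` are hypothetical helpers. On the board the window would be opened on `/dev/mem` as root; any regular file can stand in when trying the code out.

```python
import mmap
import struct

# Values commonly used in Intel Cyclone V SoC examples (assumption: verify
# against your generated headers and the SoC address map)
HW_REGS_BASE = 0xFC000000         # physical start of the mapped window
HW_REGS_SPAN = 0x04000000         # 64 MiB
HW_REGS_MASK = HW_REGS_SPAN - 1
ALT_LWFPGASLVS_OFST = 0xFF200000  # lightweight HPS-to-FPGA bridge base

def lw_offset(ip_base):
    """Offset, inside the mapped window, of an IP at ip_base on the lightweight bridge."""
    return (ALT_LWFPGASLVS_OFST + ip_base) & HW_REGS_MASK

class RegisterWindow:
    """32-bit little-endian register access through a shared memory mapping."""

    def __init__(self, fd, span, base=0):
        # base must be a multiple of mmap.ALLOCATIONGRANULARITY
        self._mem = mmap.mmap(fd, span, flags=mmap.MAP_SHARED,
                              prot=mmap.PROT_READ | mmap.PROT_WRITE, offset=base)

    def read32(self, offset):
        return struct.unpack_from("<I", self._mem, offset)[0]

    def write32(self, offset, value):
        struct.pack_into("<I", self._mem, offset, value)

    def close(self):
        self._mem.close()

# On the board (as root), roughly:
#   fd = os.open("/dev/mem", os.O_RDWR | os.O_SYNC)
#   regs = RegisterWindow(fd, HW_REGS_SPAN, HW_REGS_BASE)
#   value = regs.read32(lw_offset(IP_BASE))  # IP_BASE: hypothetical, from Platform Designer
```

Masking with `HW_REGS_MASK` turns an absolute physical address into an offset inside the mapped window; this is only valid because the bridge base lies inside that window.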

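VI. Address Mapping

1. Address Mapping

Create a new project and map the physical addresses into virtual memory. The source code is as follows:

```c
// GCC standard headers
#include
```

2. Recognition Results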

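VII. Summary and References

1. Summary

Many of the steps in this article were covered in earlier posts, so device tree generation, file creation, adding peripherals, and board verification are not described in detail here. For those steps, see the earlier articles:

SoC study notes: printing hello FPGA

SoC study notes: using a peripheral IP, lighting an LED with PIO_LED

HLS study notes: setting up the HLS environment, with worked examples

2. References

Activation functions in deep learning

Fully connected neural networks (DNN)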