A General Template for Building a Convolutional Neural Network in PyTorch (AlexNet as an Example)

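- Preface

- Dataset

- Code

  - 1. Define your own transform

  - 2. Define your own Dataset

  - 3. Define your own DataLoader

  - 4. Choose GPU or CPU as the device

  - 5. Load the model

  - 6. Set up the optimizer

  - 7. Define the loss function

  - 8. Training + validation

- Full code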

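Preface

This post builds a simple AlexNet with PyTorch. The workflow can serve as a template: follow the same steps and you can write training code for your own task.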

数据集

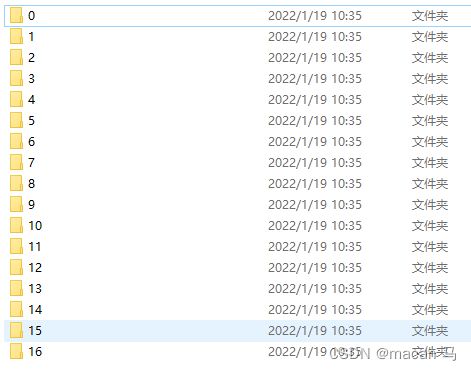

数据集使用的17flowers,一共有17个类别的花,做图片分类任务。17flowers数据集大概长成这样:

0-16这17个文件夹就是花的类别标签,每个文件夹里面是花图片:

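Code

1. Define your own transform

A transform pipeline can also do a lot of data augmentation. Here I only apply three basic preprocessing steps. `ToPILImage` converts the HxWxC uint8 NumPy array returned by OpenCV into a PIL image. `ToTensor` turns that image into a CxHxW float tensor scaled to [0, 1]. `Normalize` with mean 0.5 and std 0.5 per channel then maps the values to [-1, 1].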

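from torchvision import transforms

transform = transforms.Compose([
    transforms.ToPILImage(),  # HxWxC uint8 NumPy array -> PIL image
    transforms.ToTensor(),    # PIL image -> CxHxW float tensor in [0, 1]
    transforms.Normalize(mean=[.5, .5, .5], std=[.5, .5, .5])  # (x - 0.5) / 0.5 -> [-1, 1]
])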

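2. Define your own Dataset

Write a class that inherits from torch's `Dataset` and implements `__init__`, `__len__` and `__getitem__`.

The main job of `__init__` is to collect the image and label information of the dataset. You could load every image and label into arrays, but holding the whole dataset in memory can easily exhaust RAM. The usual approach is therefore to store only the image file paths and their labels.

`__len__` simply returns the number of images collected in `__init__`.

`__getitem__` is called automatically (by the DataLoader) with an index in the range 0 to len - 1. Use that index to look up the image path in the list built in `__init__`, read the image, and return the image together with its label. See the code below for details: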

import os

import cv2
from torch.utils.data import Dataset

# Project root; assumes datasets.py sits in the same directory as train.py
ROOT = os.path.abspath(os.path.dirname(__file__))


class TrainAlexnetDataset(Dataset):
    def __init__(self, cfg, transform, is_training=True):
        super(TrainAlexnetDataset, self).__init__()
        self.img_size = cfg["image_size"]  # target image size
        if is_training:
            self.path = str(ROOT) + cfg["train"]["train_path"]  # training set directory
        else:
            self.path = str(ROOT) + cfg["val"]["val_path"]  # validation set directory
        self.image_files = []  # image file paths
        self.img_labels = []  # class label of each image
        # Each sub-folder name (0-16) is the class label of the images inside it
        for l in sorted(os.listdir(self.path)):
            l_path = self.path + "/" + l
            if not os.path.isdir(l_path):
                continue  # skip stray files such as .DS_Store
            for i in sorted(os.listdir(l_path)):
                self.image_files.append(l_path + "/" + i)
                self.img_labels.append(int(l))
        assert self.image_files, 'No images found in {}'.format(self.path)
        self.transform = transform  # the transform defined above

    def __len__(self):
        return len(self.image_files)  # dataset size

    def __getitem__(self, item):
        img_path = self.image_files[item]  # path of the current image
        img = cv2.imread(img_path, cv2.IMREAD_COLOR | cv2.IMREAD_IGNORE_ORIENTATION)
        if img is None:
            raise IOError('Failed to read image {}'.format(img_path))
        img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)  # OpenCV loads BGR; convert to RGB
        img = cv2.resize(img, (self.img_size, self.img_size), interpolation=cv2.INTER_AREA)  # resize
        label = self.img_labels[item]  # label
        return self.transform(img), label  # transformed image and its label

Instantiate the Dataset:

train_dataset = TrainAlexnetDataset(cfg, transform=transform, is_training=True)  # training set
val_dataset = TrainAlexnetDataset(cfg, transform=transform, is_training=False)  # validation set
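The folder-scanning logic in `__init__` can be tried without any real images. The sketch below uses a hypothetical helper, `scan_image_folder`, that mirrors the loop above. It uses `os.path.join` instead of string concatenation and runs on a throw-away directory tree of empty files. An index `k` passed to `__getitem__` would then select `files[k]` and `labels[k]`.

```python
import os
import tempfile


def scan_image_folder(root):
    """Hypothetical helper mirroring TrainAlexnetDataset.__init__:
    every sub-folder name is an integer class label."""
    image_files, img_labels = [], []
    for l in sorted(os.listdir(root)):
        l_path = os.path.join(root, l)
        if not os.path.isdir(l_path):
            continue
        for i in sorted(os.listdir(l_path)):
            image_files.append(os.path.join(l_path, i))
            img_labels.append(int(l))
    return image_files, img_labels


# Build a throw-away tree: root/0/{a,b}.jpg and root/1/c.jpg (empty files)
with tempfile.TemporaryDirectory() as root:
    for label, names in {"0": ["a.jpg", "b.jpg"], "1": ["c.jpg"]}.items():
        os.makedirs(os.path.join(root, label))
        for name in names:
            open(os.path.join(root, label, name), "w").close()
    files, labels = scan_image_folder(root)
    rel_files = [os.path.relpath(f, root) for f in files]

print(labels)      # [0, 0, 1]
print(len(files))  # what __len__ would return: 3
```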

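3. Define your own DataLoader

Import `DataLoader` from `torch.utils.data` and pass it the following arguments:

from torch.utils.data import DataLoader

train_dataloader = DataLoader(train_dataset, batch_size=cfg["train"]["batch_size"], shuffle=True, num_workers=0, drop_last=False)
# The validation order does not affect the metrics, so there is no need to shuffle
val_dataloader = DataLoader(val_dataset, batch_size=1, shuffle=False, num_workers=0, drop_last=False)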
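To see what `batch_size` and `drop_last` do, the sketch below uses a `TensorDataset` of 10 random tensors as a stand-in for the flower Dataset. This is an assumption for illustration only. The DataLoader calls `__getitem__` for each index and stacks the samples into batches.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# 10 fake "images" (3x8x8) with integer labels 0..9
images = torch.randn(10, 3, 8, 8)
targets = torch.arange(10)
dataset = TensorDataset(images, targets)

loader_keep = DataLoader(dataset, batch_size=4, shuffle=True, drop_last=False)
loader_drop = DataLoader(dataset, batch_size=4, shuffle=True, drop_last=True)

print(len(loader_keep))  # 3 batches: 4 + 4 + 2
print(len(loader_drop))  # 2 batches: the incomplete batch of 2 is dropped

sizes = [x.shape[0] for x, _ in loader_keep]
print(sizes)  # [4, 4, 2]

xb, yb = next(iter(loader_keep))
print(xb.shape, yb.shape)  # torch.Size([4, 3, 8, 8]) torch.Size([4])
```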

4. Choose GPU or CPU as the device

# Use the GPU if one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

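5. Load the model

# Alexnet is the author's own model class (Alexnet.py, not shown in this post)
alexnet_model = Alexnet(cfg["train"]["class_num"], is_svm=False, writer=writer)
alexnet_model.to(device)  # move the model to the chosen device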

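6. Set up the optimizer

Adam is used to optimize the model. `params` is the set of parameters to be trained, and you can choose manually which parameters should be trained.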

# Optimizer
optimizer = torch.optim.Adam(params=alexnet_model.parameters(),
                             lr=cfg["train"]["learning_rate"],
                             betas=(0.9, 0.999),
                             eps=1e-08,
                             weight_decay=0.0,
                             amsgrad=False)
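`params` does not have to include all of the model's parameters. The sketch below shows two common options on a tiny hypothetical model. The first passes only parameters with `requires_grad=True`, which freezes the feature extractor. The second passes parameter groups with different learning rates.

```python
from collections import OrderedDict

import torch
import torch.nn as nn

# A tiny hypothetical model with a feature extractor and a classifier head
model = nn.Sequential(OrderedDict([
    ("features", nn.Sequential(nn.Conv2d(3, 8, 3), nn.AdaptiveAvgPool2d(1), nn.Flatten())),
    ("classifier", nn.Linear(8, 17)),
]))

# Option 1: freeze the feature extractor and train only the classifier
for p in model.features.parameters():
    p.requires_grad = False
optimizer = torch.optim.Adam(
    params=[p for p in model.parameters() if p.requires_grad], lr=1e-3)

# Option 2: re-enable gradients and give each part its own learning rate
for p in model.features.parameters():
    p.requires_grad = True
optimizer2 = torch.optim.Adam([
    {"params": model.features.parameters(), "lr": 1e-4},
    {"params": model.classifier.parameters()},  # falls back to the default lr below
], lr=1e-3)

print(len(optimizer.param_groups[0]["params"]))   # 2: classifier weight and bias
print([g["lr"] for g in optimizer2.param_groups])  # [0.0001, 0.001]
```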

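# Learning-rate schedule: multiply the LR by decay_rate every decay_iter calls to scheduler.step()
# (the training loop calls it once per iteration, so decay_iter counts iterations)
scheduler = torch.optim.lr_scheduler.StepLR(optimizer,
                                            step_size=cfg["train"]["decay_iter"],
                                            gamma=cfg["train"]["decay_rate"])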
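`StepLR` multiplies the learning rate by `gamma` every `step_size` calls to `scheduler.step()`. The training loop calls it once per batch, so `decay_iter` counts iterations rather than epochs. The sketch below prints the resulting schedule using made-up values (`lr=0.1`, `step_size=3`, `gamma=0.5`) on a dummy model.

```python
import math

import torch
import torch.nn as nn

model = nn.Linear(4, 2)
optimizer = torch.optim.Adam(model.parameters(), lr=0.1)
# Hypothetical values: decay_iter = 3, decay_rate = 0.5
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=3, gamma=0.5)

lrs = []
for it in range(7):
    optimizer.step()   # the real loop computes a loss and gradients first
    scheduler.step()   # called once per iteration, as in the training loop
    lrs.append(scheduler.get_last_lr()[0])

print(lrs)  # [0.1, 0.1, 0.05, 0.05, 0.05, 0.025, 0.025]
```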

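7. Define the loss function

Since this is a multi-class classification task, cross-entropy is used as the loss function. `nn.CrossEntropyLoss` combines log-softmax and negative log-likelihood, so it expects raw scores (logits) and integer class indices, not softmax probabilities.

# Loss function
criterion = nn.CrossEntropyLoss()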
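Here is a small worked example of what `nn.CrossEntropyLoss` computes. With all-zero logits over 17 classes the prediction is uniform, so the loss is -ln(1/17) = ln 17 ≈ 2.833. A confident correct prediction drives the loss toward 0. The logits and targets are invented for illustration.

```python
import math

import torch
import torch.nn as nn

criterion = nn.CrossEntropyLoss()

# A batch of 4 samples over 17 classes; all-zero logits mean a uniform prediction
logits = torch.zeros(4, 17)
targets = torch.tensor([0, 5, 16, 3])  # integer class indices, shape (N,)

loss = criterion(logits, targets)
print(loss.item(), math.log(17))  # both about 2.8332

# Put a large logit on the correct class of every sample
confident = torch.zeros(4, 17)
confident[torch.arange(4), targets] = 10.0
loss2 = criterion(confident, targets)
print(loss2.item())  # close to 0 (about 7e-4)
```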

8. Training + validation

# Validation setup
all_classes = np.arange(0, 17)  # not named `labels`: the training loop below reuses that name
best_f1 = 0
model_output = str(ROOT) + "/" + cfg["train"]["weights_file"]

# Training
for epoch in range(1, cfg["train"]["epoch"] + 1):
    alexnet_model.train()  # training mode (dropout on)
    loss = None
    for i, (imgs, labels) in enumerate(train_dataloader):
        x = imgs.to(device)
        y = labels.to(device)
        pre_y = alexnet_model(x)
        loss = criterion(pre_y, y)
        if i % cfg["train"]["show_step"] == 0:
            print(epoch, loss.item())
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        scheduler.step()  # StepLR is stepped once per iteration
    # Log the loss of the last batch of this epoch
    writer.add_scalar("train_loss", loss.item(), epoch)

    # Validation
    if epoch % cfg["val"]["val_step"] == 0:
        alexnet_model.eval()  # evaluation mode (dropout off)
        pred_list = []
        label_list = []
        with torch.no_grad():  # no gradients are needed for validation
            for j, (img, label) in enumerate(val_dataloader):
                x = img.to(device)
                y = label.item()
                pre = alexnet_model(x)
                pred_cls = torch.argmax(pre, dim=1).item()  # batch_size is 1
                pred_list.append(pred_cls)
                label_list.append(y)
        y_pred = label_binarize(pred_list, classes=all_classes)
        y_true = label_binarize(label_list, classes=all_classes)
        f1 = f1_score(label_list, pred_list, average="macro")
        # AUC on one-hot hard predictions; with 2-D indicator input, multi_class has no effect
        auc = roc_auc_score(y_true, y_pred, average='macro')
        writer.add_scalar("val_f1", f1, epoch)
        writer.add_scalar("val_auc", auc, epoch)
        print("epoch: {} F1-Score:{:.4f} AUC:{:.4f}".format(epoch, f1, auc))
        if f1 > best_f1:
            best_f1 = f1
            print("epoch: {} new best F1-Score, saving weights".format(epoch))
            torch.save(alexnet_model.state_dict(), model_output + "best.pt")

    # Once per epoch, log gradients and weights
    for name, param in alexnet_model.named_parameters():
        if param.grad is not None:  # frozen parameters have no gradient
            writer.add_histogram(name + '_grad', param.grad, epoch)
        writer.add_histogram(name + '_data', param, epoch)
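The AUC above is computed from one-hot hard predictions, so each per-class score only sees 0/1 values. A common alternative is to keep the softmax probabilities and let `roc_auc_score` compute a one-vs-rest macro AUC. This is sketched below as an assumption, not as the author's method. `val_metrics` is a hypothetical helper and the logits are invented.

```python
import numpy as np
import torch
from sklearn.metrics import f1_score, roc_auc_score


def val_metrics(logits, targets, num_classes):
    """Hypothetical helper: macro F1 from argmax predictions and
    macro one-vs-rest AUC from softmax probabilities."""
    probs = torch.softmax(logits.double(), dim=1).numpy()  # rows sum to 1
    preds = probs.argmax(axis=1)
    f1 = f1_score(targets, preds, average="macro")
    auc = roc_auc_score(targets, probs, multi_class="ovr", average="macro",
                        labels=np.arange(num_classes))
    return f1, auc


# Fake validation outputs for 3 classes (in practice: logits collected from every batch)
logits = torch.tensor([[2.0, 0.5, 0.1],
                       [0.2, 1.5, 0.3],
                       [0.1, 0.4, 3.0],
                       [0.3, 0.2, 0.1],   # class 0, but with low confidence
                       [0.1, 2.2, 0.5],
                       [0.5, 0.1, 1.0]])
targets = np.array([0, 1, 2, 0, 1, 2])

f1, auc = val_metrics(logits, targets, num_classes=3)
print(f1, auc)  # 1.0 1.0
```

The `labels` argument keeps the class list fixed even though `roc_auc_score` is given only the validation targets. Note that one-vs-rest AUC is still undefined for a class that never appears in the validation set.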

Full code

The complete code is as follows:

# -*- coding: utf-8 -*-
"""
# Author: mabt
# File : train.py
# Date : 2022/1/18 17:31
# Last Modified by:
# Last Modified time: 2022/1/18 17:31
"""
import argparse
import os
import random

import numpy as np
import torch
import torch.nn as nn
from sklearn.metrics import f1_score, roc_auc_score
from sklearn.preprocessing import label_binarize
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

import utils  # the author's helpers (load_model_cfg, update_config), not shown
from Alexnet import Alexnet  # the author's AlexNet implementation, not shown
from datasets import TrainAlexnetDataset

ROOT = os.path.abspath(os.path.dirname(__file__))
os.environ['CUDA_VISIBLE_DEVICES'] = "0"  # use only GPU 0 (must be set before CUDA is initialised)


def init_seed(seed):
    import torch.backends.cudnn as cudnn
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)
    # seed == 0: deterministic cuDNN (reproducible, slower); otherwise benchmark mode (faster, not bit-exact)
    cudnn.benchmark, cudnn.deterministic = (False, True) if seed == 0 else (True, False)


def train_alexnet(cfg):
    # Record training data for TensorBoard
    writer = SummaryWriter(comment='{}'.format(cfg["prefix"]))

    # Load the datasets
    transform = transforms.Compose([
        transforms.ToPILImage(),
        transforms.ToTensor(),
        transforms.Normalize(mean=[.5, .5, .5], std=[.5, .5, .5])
    ])
    train_dataset = TrainAlexnetDataset(cfg, transform=transform, is_training=True)
    val_dataset = TrainAlexnetDataset(cfg, transform=transform, is_training=False)
    train_dataloader = DataLoader(train_dataset, batch_size=cfg["train"]["batch_size"], shuffle=True, num_workers=0, drop_last=False)
    val_dataloader = DataLoader(val_dataset, batch_size=1, shuffle=False, num_workers=0, drop_last=False)

    # GPU or CPU
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # Load the model
    alexnet_model = Alexnet(cfg["train"]["class_num"], is_svm=False, writer=writer)
    alexnet_model.to(device)

    # Optimizer and learning-rate schedule
    optimizer = torch.optim.Adam(params=alexnet_model.parameters(),
                                 lr=cfg["train"]["learning_rate"],
                                 betas=(0.9, 0.999),
                                 eps=1e-08,
                                 weight_decay=0.0,
                                 amsgrad=False)
    scheduler = torch.optim.lr_scheduler.StepLR(optimizer,
                                                step_size=cfg["train"]["decay_iter"],
                                                gamma=cfg["train"]["decay_rate"])

    # Loss function
    criterion = nn.CrossEntropyLoss()

    # Validation setup
    all_classes = np.arange(0, 17)  # not named `labels`: the training loop reuses that name
    best_f1 = 0
    model_output = str(ROOT) + "/" + cfg["train"]["weights_file"]

    # Training
    for epoch in range(1, cfg["train"]["epoch"] + 1):
        alexnet_model.train()
        loss = None
        for i, (imgs, labels) in enumerate(train_dataloader):
            x = imgs.to(device)
            y = labels.to(device)
            pre_y = alexnet_model(x)
            loss = criterion(pre_y, y)
            if i % cfg["train"]["show_step"] == 0:
                print(epoch, loss.item())
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            scheduler.step()
        writer.add_scalar("train_loss", loss.item(), epoch)  # loss of the last batch

        # Validation
        if epoch % cfg["val"]["val_step"] == 0:
            alexnet_model.eval()
            pred_list = []
            label_list = []
            with torch.no_grad():
                for j, (img, label) in enumerate(val_dataloader):
                    x = img.to(device)
                    y = label.item()
                    pre = alexnet_model(x)
                    pred_cls = torch.argmax(pre, dim=1).item()
                    pred_list.append(pred_cls)
                    label_list.append(y)
            y_pred = label_binarize(pred_list, classes=all_classes)
            y_true = label_binarize(label_list, classes=all_classes)
            f1 = f1_score(label_list, pred_list, average="macro")
            auc = roc_auc_score(y_true, y_pred, average='macro')  # AUC on one-hot hard predictions
            writer.add_scalar("val_f1", f1, epoch)
            writer.add_scalar("val_auc", auc, epoch)
            print("epoch: {} F1-Score:{:.4f} AUC:{:.4f}".format(epoch, f1, auc))
            if f1 > best_f1:
                best_f1 = f1
                print("epoch: {} new best F1-Score, saving weights".format(epoch))
                torch.save(alexnet_model.state_dict(), model_output + "best.pt")

        # Once per epoch, log gradients and weights
        for name, param in alexnet_model.named_parameters():
            if param.grad is not None:
                writer.add_histogram(name + '_grad', param.grad, epoch)
            writer.add_histogram(name + '_data', param, epoch)

    writer.close()


def parse_args():
    parser = argparse.ArgumentParser(description='Train R-CNN')
    parser.add_argument("--config", type=str, default="./config/RCNN.yaml", help="model config")
    parser.add_argument("--prefix", type=str, default="Alexnet", help="suffix appended to the TensorBoard log directory name")
    args = parser.parse_args()
    return args


if __name__ == "__main__":
    args = parse_args()
    cfg = utils.load_model_cfg(args.config)
    utils.update_config(cfg, args)
    # Fix the random seeds so repeated runs give consistent results
    # (with a non-zero seed, init_seed enables cudnn.benchmark, so GPU runs may still differ slightly)
    init_seed(1)
    # Pre-train AlexNet first
    train_alexnet(cfg)
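The script reads its settings from a config file loaded by the author's `utils.load_model_cfg`, which is not shown. The dictionary below is a hypothetical example listing the keys that `train.py` and `TrainAlexnetDataset` read; every value is a placeholder, not the author's setting. `prefix` is presumably filled in from `--prefix` by `utils.update_config`, which is also an assumption. Paths are appended to `ROOT` by string concatenation, so they should start with `/`. The directory part of `weights_file` must already exist, because `torch.save` does not create folders.

```python
import copy

# Hypothetical config: the keys mirror what train.py / TrainAlexnetDataset read,
# but every value below is a placeholder, not the author's actual setting.
example_cfg = {
    "prefix": "Alexnet",   # presumably set from --prefix by utils.update_config
    "image_size": 227,     # side length passed to cv2.resize
    "train": {
        "train_path": "/data/17flowers/train",  # appended to ROOT, so it starts with "/"
        "batch_size": 32,
        "class_num": 17,
        "learning_rate": 1e-4,
        "decay_iter": 1000,   # StepLR step_size, counted in iterations
        "decay_rate": 0.5,    # StepLR gamma
        "epoch": 50,
        "show_step": 10,      # print the loss every show_step batches
        "weights_file": "weights/alexnet_",  # saved as ROOT/weights/alexnet_best.pt
    },
    "val": {
        "val_path": "/data/17flowers/val",
        "val_step": 1,        # validate every val_step epochs
    },
}

REQUIRED = {
    "": ["prefix", "image_size"],
    "train": ["train_path", "batch_size", "class_num", "learning_rate",
              "decay_iter", "decay_rate", "epoch", "show_step", "weights_file"],
    "val": ["val_path", "val_step"],
}


def missing_keys(cfg):
    """Return the dotted names of required keys that cfg lacks."""
    missing = []
    for section, keys in REQUIRED.items():
        block = cfg if section == "" else cfg.get(section, {})
        for k in keys:
            if k not in block:
                missing.append(k if section == "" else section + "." + k)
    return missing


print(missing_keys(example_cfg))  # []
broken = copy.deepcopy(example_cfg)
del broken["val"]["val_step"]
print(missing_keys(broken))       # ['val.val_step']
```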