Python + MediaPipe: An AI Pose Detection Project for Tracking Fitness Poses


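I've been playing with MediaPipe lately and find it interesting, even a little addictive. Once pose detection works, the landmark coordinates it produces can drive small projects, for example detecting dumbbell curls. The feature is simple, but implementing motion detection in Python still takes a fair amount of code. This post walks through pose detection with Python, OpenCV, and MediaPipe, and then recognizes the dumbbell curl.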

The work breaks down into the following steps, which we go through one at a time to build the complete feature.

- Install and Import Dependencies

- Make Detections

- Determining Joints

- Calculate Angles

- Curl Counter

1. Install and Import Dependencies

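First, check the runtime environment: make sure the mediapipe and opencv-python dependencies installed successfully and that the webcam can capture your handsome face. If all of that works, you can move on to the next steps.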

pip install mediapipe opencv-python

    import cv2
    import mediapipe as mp
    import numpy as np

    mp_drawing = mp.solutions.drawing_utils
    mp_pose = mp.solutions.pose

    # VIDEO FEED
    cap = cv2.VideoCapture(0)
    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:  # stop if the camera returned no frame
            break

        cv2.imshow('Mediapipe Feed', frame)

        # Press 'q' to quit
        if cv2.waitKey(10) & 0xFF == ord('q'):
            break

    cap.release()
    cv2.destroyAllWindows()

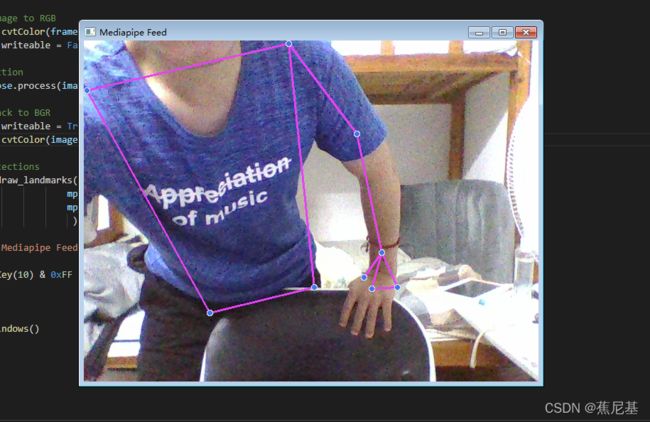

2. Make Detections

    cap = cv2.VideoCapture(0)

    # Set up the MediaPipe instance.
    # The two parameters, detection confidence and tracking confidence,
    # control how strict the model is when detecting and tracking a pose.
    with mp_pose.Pose(min_detection_confidence=0.5, min_tracking_confidence=0.5) as pose:
        while cap.isOpened():
            ret, frame = cap.read()
            if not ret:  # stop if the camera returned no frame
                break

            # Recolor image to RGB.
            # Camera frames arrive in BGR, but the model expects RGB.
            image = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
            image.flags.writeable = False

            # Make detection
            results = pose.process(image)

            # Recolor back to BGR
            image.flags.writeable = True
            image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

            # Render detections: draw the landmarks and the lines connecting them
            mp_drawing.draw_landmarks(image, results.pose_landmarks, mp_pose.POSE_CONNECTIONS,
                                      mp_drawing.DrawingSpec(color=(245,117,66), thickness=2, circle_radius=2),
                                      mp_drawing.DrawingSpec(color=(245,66,230), thickness=2, circle_radius=2)
                                      )

            cv2.imshow('Mediapipe Feed', image)

            if cv2.waitKey(10) & 0xFF == ord('q'):
                break

    cap.release()
    cv2.destroyAllWindows()

The comments cover what each part of the code does. With these steps done, the model should be detecting your pose and drawing it on the video feed.

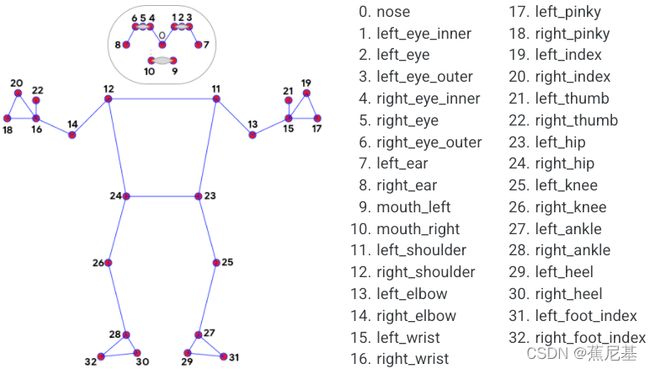

3. Determining Joints

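The pose model outputs 33 landmarks (joints), shown in the figure below. We read the coordinates of the joints we need and feed them into our calculations. Here we extract the landmark coordinates, which are later used to compute the angle at the elbow.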

    # Extract landmarks
    try:
        landmarks = results.pose_landmarks.landmark
        print(landmarks)
    except AttributeError:
        # pose_landmarks is None when no person is detected in the frame
        pass

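The two lines inside the try block print every landmark so we can inspect them.

As the output shows, the coordinates of every joint are available.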


You can also use the following code to check how many landmarks there are and what each one is called.

    print(len(landmarks))
    33

    for lndmrk in mp_pose.PoseLandmark:
        print(lndmrk)

    PoseLandmark.NOSE
    PoseLandmark.LEFT_EYE_INNER
    PoseLandmark.LEFT_EYE
    PoseLandmark.LEFT_EYE_OUTER
    PoseLandmark.RIGHT_EYE_INNER
    PoseLandmark.RIGHT_EYE
    PoseLandmark.RIGHT_EYE_OUTER
    PoseLandmark.LEFT_EAR
    PoseLandmark.RIGHT_EAR
    PoseLandmark.MOUTH_LEFT
    PoseLandmark.MOUTH_RIGHT
    PoseLandmark.LEFT_SHOULDER
    PoseLandmark.RIGHT_SHOULDER
    PoseLandmark.LEFT_ELBOW
    PoseLandmark.RIGHT_ELBOW
    PoseLandmark.LEFT_WRIST
    PoseLandmark.RIGHT_WRIST
    PoseLandmark.LEFT_PINKY
    PoseLandmark.RIGHT_PINKY
    PoseLandmark.LEFT_INDEX
    PoseLandmark.RIGHT_INDEX
    PoseLandmark.LEFT_THUMB
    PoseLandmark.RIGHT_THUMB
    PoseLandmark.LEFT_HIP
    PoseLandmark.RIGHT_HIP
    PoseLandmark.LEFT_KNEE
    PoseLandmark.RIGHT_KNEE
    PoseLandmark.LEFT_ANKLE
    PoseLandmark.RIGHT_ANKLE
    PoseLandmark.LEFT_HEEL
    PoseLandmark.RIGHT_HEEL
    PoseLandmark.LEFT_FOOT_INDEX
    PoseLandmark.RIGHT_FOOT_INDEX

    landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value].visibility
    landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value]
    landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value]

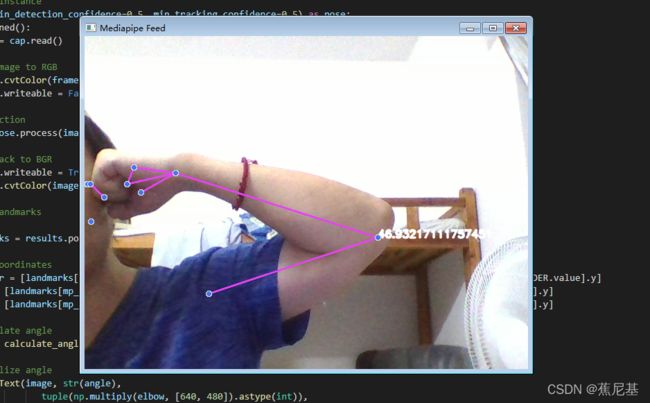

4. Calculate Angles

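Now that we have the coordinates, we use them to compute the angle of the arm at the elbow, which tells us whether a dumbbell curl is being performed.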

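    def calculate_angle(a, b, c):
        a = np.array(a)  # First point
        b = np.array(b)  # Mid point (the vertex of the angle)
        c = np.array(c)  # End point

        # Difference between the directions b->c and b->a, in radians
        radians = np.arctan2(c[1]-b[1], c[0]-b[0]) - np.arctan2(a[1]-b[1], a[0]-b[0])
        angle = np.abs(radians*180.0/np.pi)

        # Fold reflex angles back into the 0-180 range
        if angle > 180.0:
            angle = 360 - angle

        return angle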

The function above computes the angle at the middle point b; the result lies in the range 0-180 degrees.
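To sanity-check the angle function before wiring it to the camera, it can help to run it on a few hand-picked points. The sketch below repeats the same formula so that it runs on its own; the test points are made up for illustration and are not real landmark data.

```python
import numpy as np


def calculate_angle(a, b, c):
    """Return the angle at vertex b, in degrees (0-180), for points a-b-c."""
    a, b, c = np.array(a), np.array(b), np.array(c)
    radians = np.arctan2(c[1] - b[1], c[0] - b[0]) - np.arctan2(a[1] - b[1], a[0] - b[0])
    angle = np.abs(radians * 180.0 / np.pi)
    if angle > 180.0:
        angle = 360 - angle
    return angle


# Made-up test points, chosen so the expected angles are easy to see
right_angle = calculate_angle((0, 1), (0, 0), (1, 0))      # perpendicular segments
straight_arm = calculate_angle((-1, 0), (0, 0), (1, 0))    # points on one line
folded_back = calculate_angle((-1, -1), (0, 0), (-1, 1))   # raw difference is 270, folded to 90

print(round(float(right_angle), 1),
      round(float(straight_arm), 1),
      round(float(folded_back), 1))  # 90.0 180.0 90.0
```

The third case shows why the `360 - angle` branch exists: the raw difference between the two directions can exceed 180 degrees even though the joint angle itself cannot.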

How do we get the coordinates we need?

    shoulder = [landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value].x, landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value].y]
    elbow = [landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value].x, landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value].y]
    wrist = [landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value].x, landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value].y]

These are the three points the function needs.
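MediaPipe reports landmark `x` and `y` as values normalized by the image width and height, so they have to be scaled to pixels before anything can be drawn at the elbow. Here is a minimal sketch of that conversion; `to_pixel` is a hypothetical helper name introduced for illustration, and the sample values are made up.

```python
import numpy as np


def to_pixel(norm_xy, frame_width, frame_height):
    """Convert a normalized (x, y) pair to integer pixel coordinates."""
    px = np.multiply(norm_xy, [frame_width, frame_height]).astype(int)
    return tuple(int(v) for v in px)


elbow = [0.5, 0.25]  # made-up normalized coordinates
print(to_pixel(elbow, 640, 480))  # (320, 120)
```

Passing the real frame size (for example from `frame.shape`) rather than a fixed resolution should keep the label on the joint if the camera delivers frames of a different size.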

Now let's look at the complete code.

    cap = cv2.VideoCapture(0)

    # Set up the MediaPipe instance
    with mp_pose.Pose(min_detection_confidence=0.5, min_tracking_confidence=0.5) as pose:
        while cap.isOpened():
            ret, frame = cap.read()
            if not ret:  # stop if the camera returned no frame
                break

            # Recolor image to RGB
            image = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
            image.flags.writeable = False

            # Make detection
            results = pose.process(image)

            # Recolor back to BGR
            image.flags.writeable = True
            image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

            # Extract landmarks
            try:
                landmarks = results.pose_landmarks.landmark

                # Get coordinates (normalized to the 0-1 range)
                shoulder = [landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value].x, landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value].y]
                elbow = [landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value].x, landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value].y]
                wrist = [landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value].x, landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value].y]

                # Calculate angle
                angle = calculate_angle(shoulder, elbow, wrist)

                # Visualize angle at the elbow, scaled to the actual frame size
                h, w = image.shape[:2]
                cv2.putText(image, str(angle),
                            tuple(np.multiply(elbow, [w, h]).astype(int)),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 2, cv2.LINE_AA
                            )
            except AttributeError:
                # pose_landmarks is None when no person is detected
                pass

            # Render detections
            mp_drawing.draw_landmarks(image, results.pose_landmarks, mp_pose.POSE_CONNECTIONS,
                                      mp_drawing.DrawingSpec(color=(245,117,66), thickness=2, circle_radius=2),
                                      mp_drawing.DrawingSpec(color=(245,66,230), thickness=2, circle_radius=2)
                                      )

            cv2.imshow('Mediapipe Feed', image)

            if cv2.waitKey(10) & 0xFF == ord('q'):
                break

    cap.release()
    cv2.destroyAllWindows()

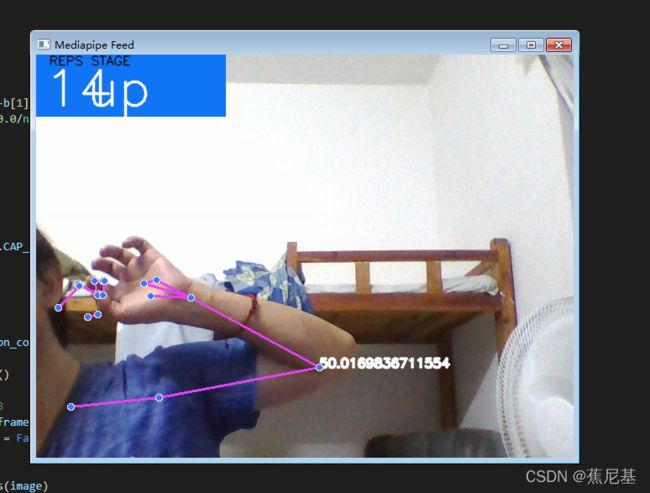

5. Curl Counter

This is the final step. To detect dumbbell curls, the most basic feature we need is a rep counter.

    cap = cv2.VideoCapture(0)

    # Curl counter variables
    counter = 0
    stage = None

    # Set up the MediaPipe instance
    with mp_pose.Pose(min_detection_confidence=0.5, min_tracking_confidence=0.5) as pose:
        while cap.isOpened():
            ret, frame = cap.read()
            if not ret:  # stop if the camera returned no frame
                break

            # Recolor image to RGB
            image = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
            image.flags.writeable = False

            # Make detection
            results = pose.process(image)

            # Recolor back to BGR
            image.flags.writeable = True
            image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

            # Extract landmarks
            try:
                landmarks = results.pose_landmarks.landmark

                # Get coordinates (normalized to the 0-1 range)
                shoulder = [landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value].x, landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value].y]
                elbow = [landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value].x, landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value].y]
                wrist = [landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value].x, landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value].y]

                # Calculate angle
                angle = calculate_angle(shoulder, elbow, wrist)

                # Visualize angle at the elbow, scaled to the actual frame size
                h, w = image.shape[:2]
                cv2.putText(image, str(angle),
                            tuple(np.multiply(elbow, [w, h]).astype(int)),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 2, cv2.LINE_AA
                            )

                # Curl counter logic: arm extended -> "down"; arm curled after "down" -> one rep
                if angle > 160:
                    stage = "down"
                if angle < 30 and stage == 'down':
                    stage = "up"
                    counter += 1
                    print(counter)
            except AttributeError:
                # pose_landmarks is None when no person is detected
                pass

            # Render curl counter
            # Set up status box
            cv2.rectangle(image, (0,0), (225,73), (245,117,16), -1)

            # Rep data
            cv2.putText(image, 'REPS', (15,12),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0,0,0), 1, cv2.LINE_AA)
            cv2.putText(image, str(counter),
                        (10,60),
                        cv2.FONT_HERSHEY_SIMPLEX, 2, (255,255,255), 2, cv2.LINE_AA)

            # Stage data (stage is None until the first "down" is seen, so fall back to '')
            cv2.putText(image, 'STAGE', (65,12),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0,0,0), 1, cv2.LINE_AA)
            cv2.putText(image, stage or '',
                        (60,60),
                        cv2.FONT_HERSHEY_SIMPLEX, 2, (255,255,255), 2, cv2.LINE_AA)

            # Render detections
            mp_drawing.draw_landmarks(image, results.pose_landmarks, mp_pose.POSE_CONNECTIONS,
                                      mp_drawing.DrawingSpec(color=(245,117,66), thickness=2, circle_radius=2),
                                      mp_drawing.DrawingSpec(color=(245,66,230), thickness=2, circle_radius=2)
                                      )

            cv2.imshow('Mediapipe Feed', image)

            if cv2.waitKey(10) & 0xFF == ord('q'):
                break

    cap.release()
    cv2.destroyAllWindows()
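The counting logic is a small two-state machine, and it can be tried out without a camera by feeding it a list of angles. The sketch below pulls that logic into a function; `count_curls` is a hypothetical helper name, the thresholds 160 and 30 are the ones used in the script above, and the angle sequence is made up for illustration.

```python
def count_curls(angles, down_threshold=160, up_threshold=30):
    """Count curls in a sequence of elbow angles (degrees).

    A rep is counted when the arm goes from extended (angle above
    down_threshold) to curled (angle below up_threshold).
    """
    counter = 0
    stage = None
    for angle in angles:
        if angle > down_threshold:
            stage = "down"
        if angle < up_threshold and stage == "down":
            stage = "up"
            counter += 1
    return counter, stage


# Made-up angle trace: two full curls, with jitter while the arm is curled
angles = [170, 120, 60, 25, 20, 28, 90, 165, 100, 29, 150]
print(count_curls(angles))  # (2, 'up')
```

Because a rep is only counted on the transition from "down" to "up", the readings 20 and 28 that follow 25 do not add extra reps; the arm has to extend past 160 degrees again before the next curl counts.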

If you're interested, give it a try: keep curling and see how many reps the counter racks up!