PyTorch: Dropout

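Contents

- 1. Introduction

- 2. Creating synthetic data

- 3. Building the neural network models

- 4. Training the models

- 5. Visualizing the results with and without dropout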

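1. Introduction

Overfitting is a headache: the training error has already dropped low enough, yet the error jumps as soon as the model is tested. That pattern very likely means the model has overfit. Below we compare, visually, a network trained with Dropout against one trained without it.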

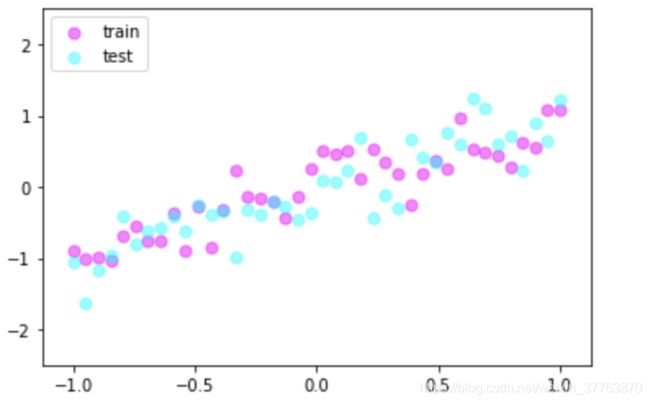

2. Creating synthetic data

import torch
import matplotlib.pyplot as plt

torch.manual_seed(123)

# Training data: y = x plus Gaussian noise
x = torch.unsqueeze(torch.linspace(-1, 1, 40), 1)
# Noise: standard normal (mean 0, std 1) scaled by 0.3
y = x + 0.3*torch.normal(torch.zeros(40, 1), torch.ones(40, 1))

# Test data: same x grid, independently drawn noise
test_x = torch.unsqueeze(torch.linspace(-1, 1, 40), 1)
test_y = test_x + 0.3*torch.normal(torch.zeros(40, 1), torch.ones(40, 1))

# Plot both data sets
plt.scatter(x.data.numpy(), y.data.numpy(), c='magenta', s=50, alpha=0.5, label="train")
plt.scatter(test_x.data.numpy(), test_y.data.numpy(), c='cyan', s=50, alpha=0.5, label="test")
plt.legend(loc='upper left')
plt.ylim((-2.5, 2.5))
plt.show()
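As a quick check on the noise term, the sketch below draws a much larger sample with the same `torch.normal(mean, std)` call and measures its statistics. The sample size `n` and the variable names are assumptions made for illustration; they are not part of the tutorial code.

```python
import torch

torch.manual_seed(123)

# Hypothetical sample size, much larger than the 40 points used in the article,
# so that the empirical statistics are stable.
n = 100_000

# Same construction as the article's noise term: unit normal scaled by 0.3
noise = 0.3 * torch.normal(torch.zeros(n, 1), torch.ones(n, 1))

noise_mean = noise.mean().item()
noise_std = noise.std().item()
print(f"mean={noise_mean:.3f}, std={noise_std:.3f}")
```

The second argument of `torch.normal` is a standard deviation, so after the scaling the noise added to `y` has a standard deviation of roughly 0.3, not 1.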

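3. Building the neural network models

We build two networks here, one without dropout and one with it. The one without dropout overfits easily, so we name it net_overfitting; the other is net_dropped. In torch.nn.Dropout(0.5), the 0.5 is the probability that each activation is zeroed during training, so on average about 50% of the units are switched off in each forward pass. The surviving activations are scaled by 1/(1 - 0.5) = 2, and in evaluation mode the layer passes its input through unchanged.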

net_overfitting = torch.nn.Sequential(
    torch.nn.Linear(1, 200),
    torch.nn.ReLU(),
    torch.nn.Linear(200, 200),
    torch.nn.ReLU(),
    torch.nn.Linear(200, 1)
)

net_dropped = torch.nn.Sequential(
    torch.nn.Linear(1, 200),
    torch.nn.Dropout(0.5),
    torch.nn.ReLU(),
    torch.nn.Linear(200, 200),
    torch.nn.Dropout(0.5),
    torch.nn.ReLU(),
    torch.nn.Linear(200, 1)
)
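To make the meaning of `p = 0.5` concrete, here is a minimal sketch that applies a standalone `torch.nn.Dropout` layer to a vector of ones, first in training mode and then in evaluation mode. The seed, the vector length and the variable names are assumptions chosen for illustration.

```python
import torch

torch.manual_seed(0)

drop = torch.nn.Dropout(p=0.5)
ones = torch.ones(10_000)  # hypothetical input, large enough for stable statistics

# Training mode: each element is zeroed with probability p,
# and the survivors are scaled by 1 / (1 - p).
drop.train()
train_out = drop(ones)
zero_fraction = (train_out == 0).float().mean().item()
kept_values = train_out[train_out != 0]

# Evaluation mode: the layer is an identity function.
drop.eval()
eval_out = drop(ones)

print(f"fraction zeroed in train mode: {zero_fraction:.3f}")
print(f"value of surviving elements:   {kept_values[0].item()}")
print(f"eval output equals input:      {torch.equal(eval_out, ones)}")
```

The scaling of the survivors keeps the expected activation the same in both modes, which is why no extra rescaling is needed at test time.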

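4. Training the models

The two networks are trained separately, each with its own optimizer, but under identical settings: the same data, learning rate, loss function and number of steps.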

optimizer_ofit = torch.optim.Adam(net_overfitting.parameters(), lr=0.01)
optimizer_drop = torch.optim.Adam(net_dropped.parameters(), lr=0.01)
loss_func = torch.nn.MSELoss()

for t in range(500):
    # Forward pass on the training data
    pred_ofit = net_overfitting(x)
    pred_drop = net_dropped(x)

    loss_ofit = loss_func(pred_ofit, y)
    loss_drop = loss_func(pred_drop, y)

    # Clear old gradients, backpropagate, then update the parameters
    optimizer_ofit.zero_grad()
    optimizer_drop.zero_grad()
    loss_ofit.backward()
    loss_drop.backward()
    optimizer_ofit.step()
    optimizer_drop.step()
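Because both networks go through exactly the same steps, the loop body can be factored into one function. The sketch below is one possible way to do that; `train_step` and the toy linear problem used to exercise it are hypothetical names and data introduced for illustration, not part of the tutorial.

```python
import torch


def train_step(net, optimizer, loss_func, inputs, targets):
    """Run one optimization step and return the loss as a Python float."""
    net.train()                      # make sure layers such as Dropout are active
    pred = net(inputs)
    loss = loss_func(pred, targets)
    optimizer.zero_grad()            # clear gradients from the previous step
    loss.backward()                  # backpropagate
    optimizer.step()                 # update the parameters
    return loss.item()


# Toy problem (an assumption for this sketch): fit y = 2x with one linear layer.
torch.manual_seed(0)
toy_x = torch.unsqueeze(torch.linspace(-1, 1, 40), 1)
toy_y = 2 * toy_x
toy_net = torch.nn.Linear(1, 1)
toy_opt = torch.optim.Adam(toy_net.parameters(), lr=0.01)
toy_loss = torch.nn.MSELoss()

losses = [train_step(toy_net, toy_opt, toy_loss, toy_x, toy_y) for _ in range(500)]
print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

With such a helper, training the two networks of this article would amount to calling it once per network in each iteration, each call with its own optimizer.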

5. Visualizing the results with and without dropout

The code below is the training loop from section 4 with plotting added, so run it in place of the section 4 loop rather than after it.

optimizer_ofit = torch.optim.Adam(net_overfitting.parameters(), lr=0.01)
optimizer_drop = torch.optim.Adam(net_dropped.parameters(), lr=0.01)
loss_func = torch.nn.MSELoss()

plt.ion()  # interactive mode, so the figure updates while training runs

for t in range(500):
    pred_ofit = net_overfitting(x)
    pred_drop = net_dropped(x)

    loss_ofit = loss_func(pred_ofit, y)
    loss_drop = loss_func(pred_drop, y)

    optimizer_ofit.zero_grad()
    optimizer_drop.zero_grad()
    loss_ofit.backward()
    loss_drop.backward()
    optimizer_ofit.step()
    optimizer_drop.step()

    if t % 10 == 0:  # redraw every 10 steps
        # Switch to evaluation mode for prediction. Dropout is disabled in eval mode,
        # so net_dropped behaves differently here than it does during training.
        net_overfitting.eval()
        net_dropped.eval()

        # Feed the test data to both models to get their predictions
        test_pred_ofit = net_overfitting(test_x)
        test_pred_drop = net_dropped(test_x)

        # Plot the data, both fitted curves and both test losses
        plt.cla()  # clear the previous frame so plots do not pile up
        plt.scatter(x.data.numpy(), y.data.numpy(), c='magenta', s=50, alpha=0.5, label="train")
        plt.scatter(test_x.data.numpy(), test_y.data.numpy(), c='cyan', s=50, alpha=0.5, label="test")
        plt.plot(test_x.data.numpy(), test_pred_ofit.data.numpy(), 'r-', lw=5, label="overfitting")
        plt.plot(test_x.data.numpy(), test_pred_drop.data.numpy(), 'b--', lw=5, label="dropout (50%)")
        plt.text(0, -1.2, 'overfitting loss = %.4f' % loss_func(test_pred_ofit, test_y).item(), fontdict={'size': 20, 'color': 'red'})
        plt.text(0, -1.5, 'dropout loss = %.4f' % loss_func(test_pred_drop, test_y).item(), fontdict={'size': 20, 'color': 'blue'})
        plt.legend(loc='upper left')
        plt.ylim(-2.5, 2.5)
        plt.pause(0.1)

        # Switch back to training mode after predicting. Without this,
        # Dropout would stay disabled for the rest of training.
        net_overfitting.train()
        net_dropped.train()

plt.ioff()
plt.show()
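The switch to evaluation mode and back is easy to get wrong, so it can help to wrap it in a small helper. The following is a sketch under stated assumptions: `evaluate`, `demo_net` and the demo data are hypothetical names introduced here, and the helper also uses `torch.no_grad()` to skip gradient tracking, which the tutorial code does not do.

```python
import torch


def evaluate(net, loss_func, inputs, targets):
    """Return the loss in eval mode, then restore the network's previous mode."""
    was_training = net.training      # remember the current mode
    net.eval()                       # disable Dropout for prediction
    with torch.no_grad():            # no gradients are needed for evaluation
        loss = loss_func(net(inputs), targets).item()
    net.train(was_training)          # put the network back as it was
    return loss


# Small untrained network with Dropout, used only to exercise the helper.
torch.manual_seed(0)
demo_net = torch.nn.Sequential(
    torch.nn.Linear(1, 200),
    torch.nn.Dropout(0.5),
    torch.nn.ReLU(),
    torch.nn.Linear(200, 1),
)
demo_x = torch.unsqueeze(torch.linspace(-1, 1, 40), 1)
demo_y = demo_x.clone()
mse = torch.nn.MSELoss()

first = evaluate(demo_net, mse, demo_x, demo_y)
second = evaluate(demo_net, mse, demo_x, demo_y)
print(f"losses match across calls: {first == second}")
print(f"still in training mode:    {demo_net.training}")
```

Two evaluations of the same data give the same loss because Dropout is inactive in eval mode, and the network returns to training mode afterwards, so later training steps still apply Dropout.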