Weekly Summary ---- The ResNet Model

I. ResNet Paper Walkthrough

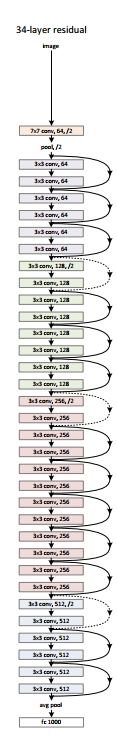

ResNet-34 is used as the running example.

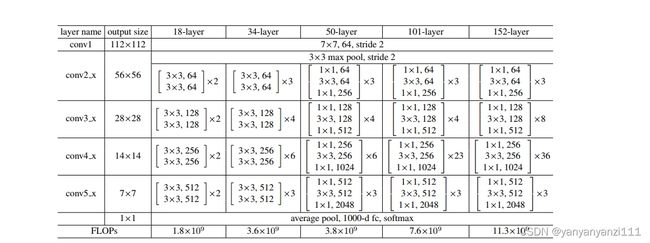

*Figure 1: overall structure of the 34-layer ResNet. Figure 2: table of ResNet output sizes and channel counts.

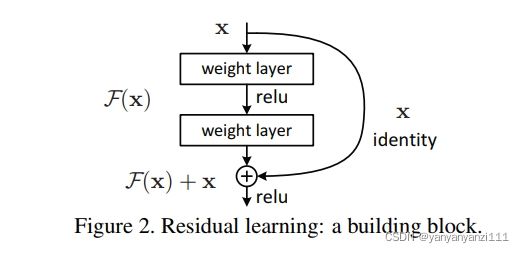

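On the main path, the input x goes through a convolution layer followed by BN and ReLU, then through a second convolution layer followed by BN. The result is added to the side path (x), and ReLU is applied to the sum.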
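The paragraph above can be written compactly in the spirit of the paper's Eqn. (1) and (2). Here $W_1, W_2$ are the two 3×3 convolutions, $*$ denotes convolution, $\sigma$ is ReLU, and the final ReLU after the addition is written out explicitly (the paper states it in the text rather than in the equation):

$$
\mathcal{F}(\mathbf{x}) = \mathrm{BN}\big(W_2 * \sigma(\mathrm{BN}(W_1 * \mathbf{x}))\big), \qquad
\mathbf{y} = \sigma\big(\mathcal{F}(\mathbf{x}) + \mathbf{x}\big), \qquad
\mathbf{y} = \sigma\big(\mathcal{F}(\mathbf{x}) + W_s \mathbf{x}\big)\ \text{(projection shortcut)}
$$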

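This addition is not concatenation: x must have exactly the same shape as the main-path output after the second convolution. It is an element-wise sum (think of adding two matrices of the same shape).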
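A small sketch contrasting element-wise addition with concatenation; the tensor shapes are illustrative assumptions (a batch of 2 with 64 channels at 56×56):

```python
import torch

# Two feature maps with identical shape [batch, channels, height, width]
main_path = torch.rand(2, 64, 56, 56)  # stands in for the output of the second conv + BN
shortcut = torch.rand(2, 64, 56, 56)   # the identity x

added = main_path + shortcut                        # element-wise sum: shape unchanged
concatenated = torch.cat([main_path, shortcut], 1)  # concatenation along channels (not what ResNet does)

print(added.shape)         # torch.Size([2, 64, 56, 56])
print(concatenated.shape)  # torch.Size([2, 128, 56, 56])

# Mismatched shapes cannot be added element-wise
mismatch_error = None
try:
    torch.rand(2, 128, 28, 28) + shortcut
except RuntimeError as err:
    mismatch_error = err
    print("shape mismatch:", err)
```

The failed addition is exactly why the shortcut needs special handling whenever a block changes the channel count or the spatial size.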

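To make the side-path x (the identity) match the main path's dimensions at the point where they are added, there are two options: 1) pad the missing dimensions with zeros; 2) expand the dimensions with a 1×1 convolution. ----- The paper reports that option 2 works (slightly) better.

The original text reads: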

When the dimensions increase (dotted line shortcuts in Fig. 3), we consider two options: (A) The shortcut still performs identity mapping, with extra zero entries padded for increasing dimensions. This option introduces no extra parameter; (B) The projection shortcut in Eqn.(2) is used to match dimensions (done by 1×1 convolutions). For both options, when the shortcuts go across feature maps of two sizes, they are performed with a stride of 2.
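A minimal sketch of the two shortcut options in PyTorch. The helper names `shortcut_option_a` and `shortcut_option_b` are hypothetical, and implementing option A's stride of 2 by keeping every second pixel is an assumption (the paper only says the shortcut is performed with a stride of 2):

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def shortcut_option_a(x, out_channels, stride=2):
    """Option A (sketch): identity mapping plus zero-padded channels, no extra parameters."""
    x = x[:, :, ::stride, ::stride]   # assumed way to apply the stride: keep every stride-th pixel
    extra = out_channels - x.shape[1]  # number of channels that are missing
    # F.pad pads from the last dim backwards: (W_left, W_right, H_top, H_bottom, C_front, C_back)
    return F.pad(x, (0, 0, 0, 0, 0, extra))


def shortcut_option_b(in_channels, out_channels, stride=2):
    """Option B (sketch): 1x1 projection convolution, which adds learnable weights."""
    return nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=stride)


x = torch.rand(1, 64, 56, 56)
a = shortcut_option_a(x, 128)
b = shortcut_option_b(64, 128)(x)
print(a.shape, b.shape)  # torch.Size([1, 128, 28, 28]) torch.Size([1, 128, 28, 28])
```

Both produce the shape the main path has after a stride-2 block; option A does it for free, option B spends parameters so the network can learn the projection.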

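2. Overall structure

ResNet-34 first passes the input through a 7×7, 64-channel convolution layer and a max-pooling layer, then through the residual blocks (four kinds of residual stage in total), and finally through an average-pooling layer and a fully connected layer.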
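As a sanity check on the name "34", a sketch that counts the weighted layers, assuming the per-stage block counts 3, 4, 6, 3 (the same counts the main-network code loops over). Pooling layers have no weights and are not counted:

```python
# ResNet-34 stage layout: (output channels, number of BasicBlocks) per stage
stages = [(64, 3), (128, 4), (256, 6), (512, 3)]

stem_conv = 1                                # the 7x7, 64-channel conv
block_convs = sum(2 * n for _, n in stages)  # each BasicBlock holds two 3x3 convs
fc = 1                                       # final fully connected layer

depth = stem_conv + block_convs + fc
print(depth)  # 34
```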

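3. Code

*Residual block code:

- When writing the residual block, note that the paper reuses residual blocks almost everywhere, but because the output size and the channel depth change, a block with fixed parameters will not work. So it is worth asking whether a few variables can control one residual block class so that it meets all the different needs.

First list every parameter involved and see which ones change and which do not. All the parameters in a residual block:

1) Kernel size: 3×3 stays the same, but the depth changes, so in_channels and out_channels vary;

2) Input size of an image: c, h, w change, which affects s (stride) and p (padding), so s and p vary;

3) Since we also have to handle changes in the dimensions of the identity (the side path, the dotted shortcut in Figure 1), we can add one more variable that controls what is done to the identity;

So we make in_channels, out_channels, stride, padding, and downsample the variables.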
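A sketch of how those variables can be chosen for one stage. The helpers `needs_projection` and `stage_plan` are hypothetical; the rule "project the shortcut whenever the stride or the channel count changes" is an assumed convention (it mirrors the condition torchvision's ResNet uses):

```python
def needs_projection(in_channels, out_channels, stride):
    """True when the identity cannot be added directly (spatial size or channel count changes)."""
    return stride != 1 or in_channels != out_channels


def stage_plan(in_channels, out_channels, num_blocks, first_stride):
    """List (in_channel, out_channel, stride, downsample) for every block in one stage."""
    plan = []
    for i in range(num_blocks):
        stride = first_stride if i == 0 else 1  # only the first block changes the spatial size
        plan.append((in_channels, out_channels, stride,
                     needs_projection(in_channels, out_channels, stride)))
        in_channels = out_channels  # later blocks keep the stage's channel count
    return plan


print(stage_plan(64, 128, 4, 2))
# [(64, 128, 2, True), (128, 128, 1, False), (128, 128, 1, False), (128, 128, 1, False)]
```

Only the first block of a stage gets `downsample=True`, which matches how the main network below builds stages 3, 4 and 5.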

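```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class BasicBlock(nn.Module):
    # downsample controls whether the shortcut changes dimensions, i.e. the dotted shortcut in the figure
    def __init__(self, in_channel, out_channel, stride=1, padding=1, downsample=None):
        super(BasicBlock, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel, kernel_size=3, stride=stride, padding=padding)
        self.bn1 = nn.BatchNorm2d(out_channel)
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel, kernel_size=3, stride=1, padding=padding)
        self.bn2 = nn.BatchNorm2d(out_channel)
        # Option B: 1x1 projection shortcut. Built only when downsample is requested (so identity
        # blocks carry no unused weights), with the same stride as conv1 so both paths match in [c, h, w]
        self.down1 = None
        if downsample:
            self.down1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel, kernel_size=1, stride=stride)

    def forward(self, x):
        shortcut = x
        out = self.conv1(x)
        out = F.relu(self.bn1(out))
        out = self.conv2(out)
        out = self.bn2(out)                  # BN comes before the addition
        if self.down1 is not None:
            shortcut = self.down1(shortcut)  # match the main path's shape
        out += shortcut                      # element-wise addition, not concatenation
        out = F.relu(out)                    # ReLU after the addition
        return out  # [b, c, h, w]
```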

Main network code:

With the residual block defined, the main network simply follows the architecture:

```python
'''ResNet-34 for 224x224 input images'''
class ResNet(nn.Module):
    def __init__(self):
        super(ResNet, self).__init__()
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3)  # [64, 112, 112]
        self.bn1 = nn.BatchNorm2d(64)
        self.pool1 = nn.MaxPool2d(3, 2, 1)                                 # [64, 56, 56]
        '''Residual stages: every block is a separate instance with its own weights.
        Calling one block several times in a loop would share weights and is not ResNet-34.'''
        self.block2 = nn.Sequential(*[BasicBlock(64, 64) for _ in range(3)])         # [64, 56, 56]
        self.block3 = nn.Sequential(BasicBlock(64, 128, stride=2, downsample=True),
                                    *[BasicBlock(128, 128) for _ in range(3)])       # [128, 28, 28]
        self.block4 = nn.Sequential(BasicBlock(128, 256, stride=2, downsample=True),
                                    *[BasicBlock(256, 256) for _ in range(5)])       # [256, 14, 14]
        self.block5 = nn.Sequential(BasicBlock(256, 512, stride=2, downsample=True),
                                    *[BasicBlock(512, 512) for _ in range(2)])       # [512, 7, 7]
        self.pool2 = nn.AvgPool2d(7, 1)                                    # [512, 1, 1]
        self.fc1 = nn.Linear(512 * 1 * 1, 10)                              # 10 output classes

    def forward(self, x):
        x = self.conv1(x)
        x = F.relu(self.bn1(x))
        x = self.pool1(x)
        x = self.block2(x)  # first residual stage
        x = self.block3(x)
        x = self.block4(x)
        x = self.block5(x)
        x = self.pool2(x)
        x = x.view(-1, 512 * 1 * 1)
        x = self.fc1(x)
        return x


'''Test the whole network'''
if __name__ == "__main__":
    input1 = torch.rand([36, 3, 224, 224])
    model = ResNet()
    out = model(input1)
    print(out.shape)  # torch.Size([36, 10])
```

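All output sizes, and therefore the choice of p, follow $\left\lfloor \frac{n + 2p - f}{s} \right\rfloor + 1$ (n: input size, f: kernel size, s: stride, p: padding), where the division is rounded down.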
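A worked sketch of that formula tracing a 224×224 input through the network; `conv_out` is a hypothetical helper, and the layer settings are the ones used in the code above:

```python
def conv_out(n, f, s=1, p=0):
    """Output size of a conv/pool layer: floor((n + 2p - f) / s) + 1."""
    return (n + 2 * p - f) // s + 1


n = 224
n = conv_out(n, f=7, s=2, p=3)  # conv1 7x7, stride 2, padding 3 -> 112
n = conv_out(n, f=3, s=2, p=1)  # max-pool 3x3, stride 2, padding 1 -> 56
sizes = [n]
for _ in range(3):              # stride-2 3x3 conv opening stages 3, 4 and 5
    n = conv_out(n, f=3, s=2, p=1)
    sizes.append(n)

print(sizes)                          # [56, 28, 14, 7]
print(conv_out(56, f=3, s=1, p=1))    # 56: padding 1 keeps the size of a stride-1 3x3 conv
print(conv_out(56, f=1, s=2))         # 28: the 1x1 stride-2 shortcut matches the main path
print(conv_out(7, f=7, s=1))          # 1: the 7x7 average pool
```

This is why the code uses padding=1 for every 3×3 conv and padding=0 for the 1×1 shortcut: with those choices the main path and the shortcut always land on the same h and w.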

II. DataLoader parameters

In the loop `for step, data in enumerate(trainloader)`, each time step increases by 1, the loader moves forward by one batch, i.e. batch_size images.
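A minimal sketch of that behavior, assuming a hypothetical toy dataset of 100 random 3×32×32 "images" wrapped in a `TensorDataset`:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical toy dataset: 100 random images with integer labels in [0, 10)
images = torch.rand(100, 3, 32, 32)
labels = torch.randint(0, 10, (100,))
trainloader = DataLoader(TensorDataset(images, labels), batch_size=32, shuffle=True)

batch_sizes = []
for step, data in enumerate(trainloader):
    inputs, targets = data               # one batch per step
    batch_sizes.append(inputs.shape[0])

print(len(trainloader))  # 4 steps = ceil(100 / 32)
print(batch_sizes)       # [32, 32, 32, 4]
```

The last step holds only the leftover 4 images because `drop_last` defaults to False; passing `drop_last=True` would skip that partial batch.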