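Python Andrew Ng Deep Learning Assignment 14 -- Residual Networks

Residual Networks

You will learn how to build very deep convolutional networks using Residual Networks (ResNets). In theory, deeper networks can represent more complex features, but in practice they are hard to train. Residual Networks make it possible to train much deeper networks than was previously practical.

In this assignment, you will:

- Implement the basic building blocks of ResNets.

- Put these building blocks together to implement and train a state-of-the-art neural network for image classification.

This assignment will be done in Keras.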

```python
import numpy as np
import tensorflow as tf
from keras import layers
from keras.layers import Input, Add, Dense, Activation, ZeroPadding2D, BatchNormalization, Flatten, Conv2D, AveragePooling2D, MaxPooling2D, GlobalMaxPooling2D
from keras.models import Model, load_model
from keras.preprocessing import image
from keras.utils import layer_utils
from keras.utils.data_utils import get_file
from keras.applications.imagenet_utils import preprocess_input
import pydot
from IPython.display import SVG
from keras.utils.vis_utils import model_to_dot
from keras.utils import plot_model
from resnets_utils import *
from keras.initializers import glorot_uniform
import scipy.misc
from matplotlib.pyplot import imshow
%matplotlib inline

import keras.backend as K
K.set_image_data_format('channels_last')
K.set_learning_phase(1)
```

Using TensorFlow backend.

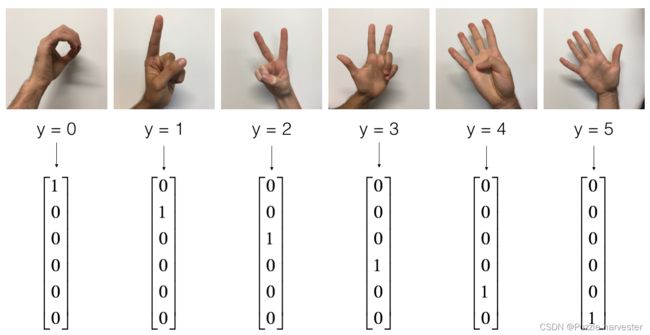

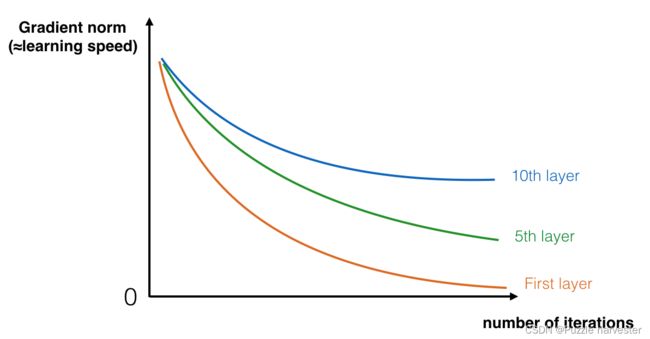

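1 The problem of very deep neural networks

Last week, you built your first convolutional neural network. In recent years, neural networks have become deeper and deeper, growing from just a few layers (e.g., AlexNet) to over a hundred layers.

The main benefit of a very deep network is that it can represent very complex features. It can also learn features at many different levels of abstraction, from edges (at the lower layers) to very complex features (at the deeper layers). However, using a deeper network doesn't always help. A huge barrier to training such networks is vanishing gradients: very deep networks often have a gradient signal that goes to zero quickly, making gradient descent prohibitively slow. More specifically, during gradient descent, as you backpropagate from the final layer back to the first layer, you multiply by the weight matrix at each step. The gradient can therefore decrease exponentially quickly to zero (or, in rare cases, grow exponentially quickly and "explode" to very large values).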
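To make the "multiply by a weight matrix at each step" argument concrete, here is a small NumPy illustration. It is not part of the assignment. It assumes a toy chain of purely linear layers and ignores activation derivatives. Each hypothetical weight matrix is a random orthogonal matrix multiplied by scale, so every backward step multiplies the gradient norm by exactly that factor.

```python
import numpy as np


def backprop_norms(num_layers, scale, width=16, seed=0):
    """Gradient norm after each backward step through a chain of linear layers.

    Hypothetical toy model: each weight matrix is `scale` times a random orthogonal
    matrix, and activation derivatives are ignored, so every step multiplies the
    gradient norm by exactly `scale`.
    """
    rng = np.random.default_rng(seed)
    grad = rng.standard_normal(width)  # gradient arriving at the last layer
    norms = [np.linalg.norm(grad)]
    for _layer in range(num_layers):
        q, _r = np.linalg.qr(rng.standard_normal((width, width)))  # q is orthogonal
        W = scale * q
        grad = W.T @ grad  # backprop through a linear layer multiplies by W^T
        norms.append(np.linalg.norm(grad))
    return np.array(norms)


shrinking = backprop_norms(50, scale=0.8)
exploding = backprop_norms(50, scale=1.2)
print(shrinking[-1] / shrinking[0])  # 0.8**50, roughly 1.4e-05
print(exploding[-1] / exploding[0])  # 1.2**50, roughly 9.1e+03
```

In real networks the per-layer factor is not a single constant. However, the same product structure explains why the effect is exponential in depth.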

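As a result, during training you might see the magnitude (or norm) of the gradients for the earlier layers decrease to zero very rapidly as training proceeds:

As training proceeds, the speed at which the network learns drops rapidly.

You are now going to solve this problem by building a Residual Network!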

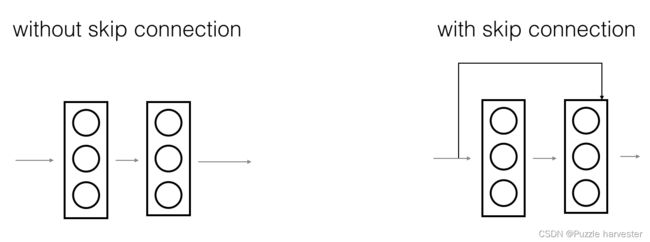

2 建立残差网络

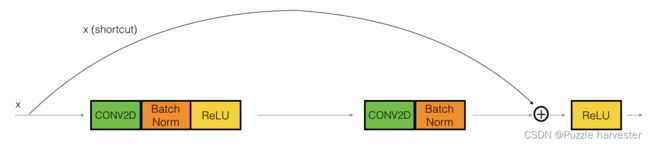

在ResNets中,“shortcut” 或者 "skip connection"允许将梯度直接反向传播到较早的层:

左图显示了通过网络的“主要路径”。右图为主路径添加了shortcut。通过将这些ResNet块彼此堆叠,可以形成一个非常深的网络。

我们在教程中还看到,使用带有shortcut的ResNet块可以非常容易学习标识功能。这意味着你可以堆叠在其他ResNet块上,而几乎不会损害训练集性能。(还有一些证据表明,学习标识功能甚至比skip connections有助于解决梯度消失问题–也说明了ResNets的出色性能。)
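Here is a minimal NumPy sketch of why the identity is easy to learn. It assumes a two-layer residual block on plain vectors, with no convolutions and no BatchNorm, of the form $a^{[l+2]} = g(W^{[l+2]} a^{[l+1]} + b^{[l+2]} + a^{[l]})$ where $g$ is ReLU. If the second layer's weights and bias are driven to zero (for example by weight decay), the block outputs $g(a^{[l]}) = a^{[l]}$. This holds because $a^{[l]}$ is itself a ReLU output and is therefore non-negative.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0)


def residual_block(a_l, W1, b1, W2, b2):
    """Toy two-layer residual block: a[l+2] = relu(W2 @ relu(W1 @ a[l] + b1) + b2 + a[l])."""
    a_l1 = relu(W1 @ a_l + b1)
    z_l2 = W2 @ a_l1 + b2
    return relu(z_l2 + a_l)  # skip connection is added before the final ReLU


n = 5
rng = np.random.default_rng(0)
a_l = relu(rng.standard_normal(n))  # a[l] is a ReLU output, so it is non-negative
W2_zero, b2_zero = np.zeros((n, n)), np.zeros(n)
a_l2 = residual_block(a_l, rng.standard_normal((n, n)), rng.standard_normal(n), W2_zero, b2_zero)
print(np.allclose(a_l2, a_l))  # True: with W2 = 0 and b2 = 0 the block computes the identity
```

A plain (non-residual) two-layer stack has no such shortcut. With zero weights it would output zeros rather than its input.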

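Two main types of blocks are used in a ResNet, depending mainly on whether the input and output dimensions are the same or different. You are going to implement both of them.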

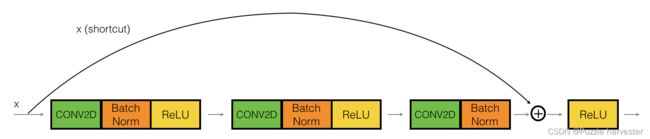

2.1 The identity block

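The identity block is the standard block used in ResNets. It corresponds to the case where the input activation (say $a^{[l]}$) has the same dimension as the output activation (say $a^{[l+2]}$). To flesh out the different steps of what happens in a ResNet identity block, here is an alternative diagram showing the individual steps:

The upper path is the "shortcut path" and the lower path is the "main path". In this diagram, we have also made explicit the CONV2D and ReLU steps in each layer. To speed up training, we have also added a BatchNorm step. Don't worry about this being complicated to implement: you'll see that BatchNorm is just one line of code in Keras!

In this exercise, you'll actually implement a slightly more powerful version of this identity block, in which the skip connection "skips over" 3 hidden layers rather than 2. It looks like this: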

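Here are the individual steps.

First component of the main path:

- The first CONV2D has $F_1$ filters of shape (1,1) and a stride of (1,1). Its padding is "valid" and its name should be conv_name_base + '2a'. Use 0 as the seed for the random initialization.
- The first BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2a'.
- Then apply the ReLU activation function.

Second component of the main path:

- The second CONV2D has $F_2$ filters of shape $(f,f)$ and a stride of (1,1). Its padding is "same" and its name should be conv_name_base + '2b'. Use 0 as the seed for the random initialization.
- The second BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2b'.
- Then apply the ReLU activation function.

Third component of the main path:

- The third CONV2D has $F_3$ filters of shape (1,1) and a stride of (1,1). Its padding is "valid" and its name should be conv_name_base + '2c'. Use 0 as the seed for the random initialization.
- The third BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2c'. Note that there is no ReLU activation function in this component.

Final step:

- Add the shortcut (the block's input) to the output of the main path.
- Then apply the ReLU activation function.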
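Every convolution in the main path uses stride 1: "valid" padding for the 1x1 convolutions and "same" padding for the f x f one. So only the channel count changes. This means the final addition works only when $F_3$ equals the number of input channels. The helper below makes that constraint explicit. It is a hypothetical shape check in plain Python and is not part of the assignment code.

```python
def identity_block_output_shape(input_shape, filters):
    """Hypothetical shape check for the 3-layer identity block.

    input_shape -- (n_H, n_W, n_C_prev) of one example
    filters     -- [F1, F2, F3] as in identity_block
    """
    n_H, n_W, n_C_prev = input_shape
    _F1, _F2, F3 = filters
    # 1x1 'valid', f x f 'same' and 1x1 'valid' convolutions, all with stride 1,
    # keep n_H and n_W unchanged; the channels go n_C_prev -> F1 -> F2 -> F3.
    main_path = (n_H, n_W, F3)
    shortcut = (n_H, n_W, n_C_prev)  # the shortcut is the unchanged input
    if main_path != shortcut:
        raise ValueError(f"F3={F3} must equal the input channels {n_C_prev} for the addition")
    return main_path


print(identity_block_output_shape((4, 4, 6), [2, 4, 6]))  # (4, 4, 6)
```

The example uses the same sizes as the identity-block test cell below (input 4x4x6, filters [2, 4, 6]).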

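Exercise: Implement the ResNet identity block. We have implemented the first component of the main path; please read it carefully to make sure you understand what it is doing. You should implement the rest.

- To implement the Conv2D step: see the Keras Conv2D reference.

- To implement BatchNorm: see the Keras BatchNormalization reference (axis: integer, the axis that should be normalized, typically the channels axis).

- For the activation, use: Activation('relu')(X)

- To add the value passed by the shortcut: see the Keras Add reference.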

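```python
def identity_block(X, f, filters, stage, block):
    """
    Implementation of the identity block (the skip connection skips over 3 hidden layers)

    Arguments:
    X -- input tensor of shape (m, n_H_prev, n_W_prev, n_C_prev)
    f -- integer, specifying the shape of the middle CONV's window for the main path
    filters -- python list of integers, defining the number of filters in the CONV layers of the main path
    stage -- integer, used to name the layers, depending on their position in the network
    block -- string/character, used to name the layers, depending on their position in the network

    Returns:
    X -- output of the identity block, tensor of shape (m, n_H_prev, n_W_prev, n_C_prev)
    """
    # Define the name bases
    conv_name_base = 'res' + str(stage) + block + '_branch'
    bn_name_base = 'bn' + str(stage) + block + '_branch'

    # Retrieve the filters
    F1, F2, F3 = filters

    # Save the input value. You'll need it later to add back to the main path.
    X_shortcut = X

    # First component of the main path
    X = Conv2D(filters = F1, kernel_size = (1, 1), strides = (1,1), padding = 'valid', name = conv_name_base + '2a', kernel_initializer = glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis = 3, name = bn_name_base + '2a')(X)
    X = Activation('relu')(X)

    # Second component of the main path
    X = Conv2D(filters = F2, kernel_size = (f, f), strides = (1,1), padding = 'same', name = conv_name_base + '2b', kernel_initializer = glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name = bn_name_base + '2b')(X)
    X = Activation('relu')(X)

    # Third component of the main path (no ReLU here)
    X = Conv2D(filters = F3, kernel_size = (1, 1), strides = (1,1), padding = 'valid', name = conv_name_base + '2c', kernel_initializer = glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name = bn_name_base + '2c')(X)

    # Final step: add the shortcut value to the main path and pass it through a ReLU activation
    X = layers.add([X, X_shortcut])
    X = Activation('relu')(X)

    return X
```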

```python
tf.reset_default_graph()

with tf.Session() as test:
    np.random.seed(1)
    A_prev = tf.placeholder("float", [3, 4, 4, 6])
    X = np.random.randn(3, 4, 4, 6)
    A = identity_block(A_prev, f = 2, filters = [2, 4, 6], stage = 1, block = 'a')
    test.run(tf.global_variables_initializer())
    out = test.run([A], feed_dict={A_prev: X, K.learning_phase(): 0})
    print("out = " + str(out[0][1][1][0]))
```

out = [0.19716819 0. 1.3561227 2.1713073 0. 1.3324987 ]

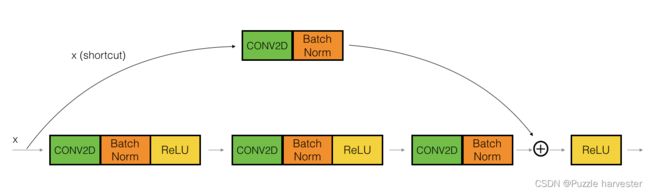

2.2 The convolutional block

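You've implemented the ResNet identity block. Next, the ResNet "convolutional block" is the other type of block. You can use this type of block when the input and output dimensions don't match up. The difference from the identity block is that there is a CONV2D layer in the shortcut path:

The CONV2D layer in the shortcut path resizes the input $x$ to a different dimension, so that the dimensions match up in the final addition of the shortcut value to the main path. (This plays a role similar to the matrix $W_s$ discussed in lecture.) For example, to reduce the activation's height and width by a factor of 2, you can use a 1x1 convolution with a stride of 2. The CONV2D layer on the shortcut path does not use any non-linear activation function. Its main role is to apply just a (learned) linear function that reduces the dimension of the input, so that the dimensions match up for the later addition step.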
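The claim that a strided 1x1 convolution is just a learned linear map can be checked directly. The NumPy sketch below uses a hypothetical function name, conv1x1, and omits BatchNorm. With a 1x1 kernel and "valid" padding, stride s keeps every s-th row and column, and the convolution reduces to a matrix multiplication over the channel axis with no non-linearity.

```python
import numpy as np


def conv1x1(x, W, b, stride):
    """Hypothetical 1x1 convolution with 'valid' padding on an (m, n_H, n_W, n_C_prev) batch.

    W has shape (n_C_prev, n_C) and b has shape (n_C,). Striding a 1x1 kernel just
    subsamples the pixels; each kept pixel is then mapped linearly over its channels.
    """
    return x[:, ::stride, ::stride, :] @ W + b


rng = np.random.default_rng(0)
x = rng.standard_normal((3, 15, 15, 256))  # e.g. the input to stage 3
W_s = rng.standard_normal((256, 512))
b_s = np.zeros(512)
print(conv1x1(x, W_s, b_s, stride=2).shape)  # (3, 8, 8, 512)
```

These sizes mirror the stage-3 shortcut (res3a_branch1) in the model summary printed at the end of this section, whose output shape is (None, 8, 8, 512).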

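The details of the convolutional block are as follows.

First component of the main path:

- The first CONV2D has $F_1$ filters of shape (1,1) and a stride of (s,s). Its padding is "valid" and its name should be conv_name_base + '2a'.
- The first BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2a'.
- Then apply the ReLU activation function.

Second component of the main path:

- The second CONV2D has $F_2$ filters of shape $(f,f)$ and a stride of (1,1). Its padding is "same" and its name should be conv_name_base + '2b'.
- The second BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2b'.
- Then apply the ReLU activation function.

Third component of the main path:

- The third CONV2D has $F_3$ filters of shape (1,1) and a stride of (1,1). Its padding is "valid" and its name should be conv_name_base + '2c'.
- The third BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2c'. Note that there is no ReLU activation function in this component.

Shortcut path:

- The CONV2D has $F_3$ filters of shape (1,1) and a stride of (s,s). Its padding is "valid" and its name should be conv_name_base + '1'.
- The BatchNorm normalizes the channels axis. Its name should be bn_name_base + '1'.

Final step:

- Add the shortcut path and the main path values together.
- Then apply the ReLU activation function.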
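For input size n, filter size f and stride s, the Keras output size is $\lfloor (n - f)/s \rfloor + 1$ with "valid" padding and $\lceil n/s \rceil$ with "same" padding. The sketch below applies these formulas to both paths of the convolutional block. It shows that the main path shrinks the spatial size only in its first stride-s convolution, so the shortcut needs its own stride-s 1x1 convolution with $F_3$ filters to end up at the same shape. The function names are hypothetical.

```python
def conv_output_size(n, f, stride, padding):
    """Spatial output size of a Keras Conv2D/pooling layer along one axis."""
    if padding == "valid":
        return (n - f) // stride + 1
    if padding == "same":
        return -(-n // stride)  # ceil(n / stride); the filter size does not matter
    raise ValueError(f"unknown padding {padding!r}")


def convolutional_block_output_shape(input_shape, f, filters, s):
    """Trace (n_H, n_W, n_C) through both paths of the convolutional block."""
    n_H, n_W, _n_C_prev = input_shape
    _F1, _F2, F3 = filters

    def main_path(n):
        n = conv_output_size(n, 1, s, "valid")     # 1x1 conv, stride s
        n = conv_output_size(n, f, 1, "same")      # f x f conv, stride 1
        return conv_output_size(n, 1, 1, "valid")  # 1x1 conv, stride 1

    def shortcut_path(n):
        return conv_output_size(n, 1, s, "valid")  # 1x1 conv, stride s

    main = (main_path(n_H), main_path(n_W), F3)
    shortcut = (shortcut_path(n_H), shortcut_path(n_W), F3)  # shortcut conv also has F3 filters
    if main != shortcut:
        raise ValueError(f"shapes do not match: {main} vs {shortcut}")
    return main


print(convolutional_block_output_shape((15, 15, 256), 3, [128, 128, 512], 2))  # (8, 8, 512)
```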

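Exercise: Implement the convolutional block. We have implemented the first component of the main path; you should implement the rest. As before, always use 0 as the seed for the random initialization, to ensure consistency with the grader.

- Conv hint: see the Keras Conv2D reference.

- BatchNorm hint: see the Keras BatchNormalization reference (axis: integer, the axis that should be normalized, typically the features axis).

- For the activation, use: Activation('relu')(X)

- Addition hint: see the Keras Add reference.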

```python
def convolutional_block(X, f, filters, stage, block, s = 2):
    """
    Implementation of the convolutional block as defined in Figure 4

    Arguments:
    X -- input tensor of shape (m, n_H_prev, n_W_prev, n_C_prev)
    f -- integer, specifying the shape of the middle CONV's window for the main path
    filters -- python list of integers, defining the number of filters in the CONV layers of the main path
    stage -- integer, used to name the layers, depending on their position in the network
    block -- string/character, used to name the layers, depending on their position in the network
    s -- Integer, specifying the stride to be used

    Returns:
    X -- output of the convolutional block, tensor of shape (m, n_H, n_W, n_C)
    """
    # defining name basis
    conv_name_base = 'res' + str(stage) + block + '_branch'
    bn_name_base = 'bn' + str(stage) + block + '_branch'

    # Retrieve Filters
    F1, F2, F3 = filters

    # Save the input value
    X_shortcut = X

    ##### MAIN PATH #####
    # First component of main path
    X = Conv2D(F1, (1, 1), strides = (s,s), name = conv_name_base + '2a', padding='valid', kernel_initializer = glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis = 3, name = bn_name_base + '2a')(X)
    X = Activation('relu')(X)

    ### START CODE HERE ###

    # Second component of main path (≈3 lines)
    X = Conv2D(F2, (f, f), strides = (1, 1), name = conv_name_base + '2b', padding='same', kernel_initializer = glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis = 3, name = bn_name_base + '2b')(X)
    X = Activation('relu')(X)

    # Third component of main path (≈2 lines)
    X = Conv2D(F3, (1, 1), strides = (1, 1), name = conv_name_base + '2c', padding='valid', kernel_initializer = glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis = 3, name = bn_name_base + '2c')(X)

    ##### SHORTCUT PATH #### (≈2 lines)
    X_shortcut = Conv2D(F3, (1, 1), strides = (s, s), name = conv_name_base + '1', padding='valid', kernel_initializer = glorot_uniform(seed=0))(X_shortcut)
    X_shortcut = BatchNormalization(axis = 3, name = bn_name_base + '1')(X_shortcut)

    # Final step: Add shortcut value to main path, and pass it through a RELU activation (≈2 lines)
    X = layers.add([X, X_shortcut])
    X = Activation('relu')(X)

    ### END CODE HERE ###

    return X
```

```python
tf.reset_default_graph()

with tf.Session() as test:
    np.random.seed(1)
    A_prev = tf.placeholder("float", [3, 4, 4, 6])
    X = np.random.randn(3, 4, 4, 6)
    A = convolutional_block(A_prev, f = 2, filters = [2, 4, 6], stage = 1, block = 'a')
    test.run(tf.global_variables_initializer())
    out = test.run([A], feed_dict={A_prev: X, K.learning_phase(): 0})
    print("out = " + str(out[0][1][1][0]))
```

out = [0.09018461 1.2348979 0.4682202 0.03671762 0. 0.65516603]

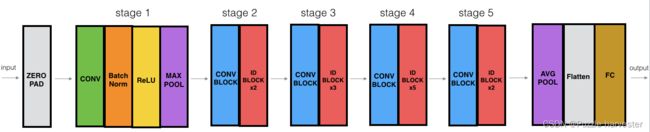

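3 Building your first ResNet model (50 layers)

You now have the necessary blocks to build a very deep ResNet. The following figure describes the architecture of this neural network in detail. "ID BLOCK" in the diagram stands for "identity block", and "ID BLOCK x3" means you should stack 3 identity blocks together.

The details of this ResNet-50 model are:

- Zero-padding pads the input with a pad of (3,3).
- Stage 1:
    - The 2D convolution has 64 filters of shape (7,7) and uses a stride of (2,2). Its name is "conv1".
    - BatchNorm is applied to the channels axis of the input, followed by a ReLU activation.
    - MaxPooling uses a (3,3) window and a (2,2) stride.
- Stage 2:
    - The convolutional block uses three sets of filters of size [64,64,256], "f" is 3, "s" is 1 and the block is "a".
    - The 2 identity blocks use three sets of filters of size [64,64,256], "f" is 3 and the blocks are "b" and "c".
- Stage 3:
    - The convolutional block uses three sets of filters of size [128,128,512], "f" is 3, "s" is 2 and the block is "a".
    - The 3 identity blocks use three sets of filters of size [128,128,512], "f" is 3 and the blocks are "b", "c" and "d".
- Stage 4:
    - The convolutional block uses three sets of filters of size [256, 256, 1024], "f" is 3, "s" is 2 and the block is "a".
    - The 5 identity blocks use three sets of filters of size [256, 256, 1024], "f" is 3 and the blocks are "b", "c", "d", "e" and "f".
- Stage 5:
    - The convolutional block uses three sets of filters of size [512, 512, 2048], "f" is 3, "s" is 2 and the block is "a".
    - The 2 identity blocks use three sets of filters of size [256, 256, 2048], "f" is 3 and the blocks are "b" and "c".
- The 2D average pooling uses a window of shape (2,2) and its name is "avg_pool".
- The Flatten layer doesn't have any hyperparameters or name.
- The fully connected (Dense) layer reduces its input to the number of classes using a softmax activation. Its name should be 'fc' + str(classes).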
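As a sanity check on the architecture above, the following sketch traces the spatial size through the network with the "valid" output-size formula $\lfloor (n - f)/s \rfloor + 1$. It assumes 64x64 inputs and that AveragePooling2D's stride defaults to its pool size. It also counts the weight layers that give ResNet-50 its name, under the usual convention: main-path convolutions plus the final Dense layer, with the shortcut convolutions not counted. The function names are hypothetical.

```python
def conv_output_size(n, f, stride):
    """Output size along one axis for 'valid' padding: floor((n - f) / stride) + 1."""
    return (n - f) // stride + 1


def resnet50_trace(n=64):
    """Return the spatial size after each stage, the Flatten size, and the weight-layer count."""
    n = n + 2 * 3                  # ZeroPadding2D((3, 3)) pads 3 pixels on each side
    n = conv_output_size(n, 7, 2)  # conv1: 7x7, stride 2
    n = conv_output_size(n, 3, 2)  # MaxPooling2D((3, 3), strides=(2, 2))
    sizes = {"stage1": n}          # size at the end of stage 1 (after max pooling)
    weight_layers = 1              # conv1
    channels = 64
    # (stage, stride s of the convolutional block, F3, number of identity blocks)
    for stage, s, F3, num_identity in [(2, 1, 256, 2), (3, 2, 512, 3), (4, 2, 1024, 5), (5, 2, 2048, 2)]:
        # Only the first 1x1 conv (and the shortcut) of the convolutional block has
        # stride s; every other conv in the stage keeps the spatial size.
        n = conv_output_size(n, 1, s)
        sizes[f"stage{stage}"] = n
        weight_layers += 3 * (1 + num_identity)  # 3 main-path convs per block
        channels = F3
    n = conv_output_size(n, 2, 2)  # AveragePooling2D((2, 2)); stride defaults to the pool size
    weight_layers += 1             # final Dense layer
    return sizes, n * n * channels, weight_layers


sizes, flatten_size, depth = resnet50_trace()
print(sizes)         # {'stage1': 15, 'stage2': 15, 'stage3': 8, 'stage4': 4, 'stage5': 2}
print(flatten_size)  # 2048
print(depth)         # 50
```

The sizes 15, 8 and 4 for stages 2 to 4 agree with the model summary printed at the end of this section.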

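Exercise: Implement the 50-layer ResNet described in the figure above. We have implemented Stages 1 and 2. Please implement the rest. (The syntax for implementing Stages 3 to 5 should be quite similar to that of Stage 2.) Make sure you follow the naming in the text above.

You'll need to use this function:

- Average pooling: see the Keras AveragePooling2D reference.

Here are some other functions we used in the code below:

- Conv2D: see the Keras Conv2D reference.
- BatchNorm: see the Keras BatchNormalization reference (axis: integer, the axis that should be normalized, typically the features axis).
- Zero padding: see the Keras ZeroPadding2D reference.
- Max pooling: see the Keras MaxPooling2D reference.
- Fully connected layer: see the Keras Dense reference.
- Addition: see the Keras Add reference.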
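Each Conv2D and BatchNormalization layer listed above contributes the parameter counts shown in the "Param #" column of the model summary at the end of this section. The sketch below reproduces a few of them using two simple formulas. A Conv2D layer has $f \cdot f \cdot n_{C,prev} \cdot n_C$ weights plus one bias per filter. BatchNormalization stores four values per channel: gamma and beta (trainable), and the moving mean and moving variance (non-trainable). The helper names are hypothetical.

```python
def conv2d_params(f, n_C_prev, n_C):
    """Parameters of a Conv2D layer with an f x f kernel and one bias per filter."""
    return f * f * n_C_prev * n_C + n_C


def batchnorm_params(n_C):
    """gamma, beta, moving mean and moving variance for each of the n_C channels."""
    return 4 * n_C


print(conv2d_params(7, 3, 64))     # 9472   (conv1)
print(batchnorm_params(64))        # 256    (bn_conv1)
print(conv2d_params(1, 64, 64))    # 4160   (res2a_branch2a)
print(conv2d_params(3, 64, 64))    # 36928  (res2a_branch2b)
print(conv2d_params(1, 256, 512))  # 131584 (res3a_branch1)
```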

```python
def ResNet50(input_shape = (64, 64, 3), classes = 6):
    """
    Implementation of the popular ResNet50 with the following architecture:
    CONV2D -> BATCHNORM -> RELU -> MAXPOOL -> CONVBLOCK -> IDBLOCK*2 -> CONVBLOCK -> IDBLOCK*3
    -> CONVBLOCK -> IDBLOCK*5 -> CONVBLOCK -> IDBLOCK*2 -> AVGPOOL -> TOPLAYER

    Arguments:
    input_shape -- shape of the images of the dataset
    classes -- integer, number of classes

    Returns:
    model -- a Model() instance in Keras
    """
    # Define the input as a tensor with shape input_shape
    X_input = Input(input_shape)

    # Zero-Padding
    X = ZeroPadding2D((3, 3))(X_input)

    # Stage 1
    X = Conv2D(64, (7, 7), strides = (2, 2), name = 'conv1', kernel_initializer = glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis = 3, name = 'bn_conv1')(X)
    X = Activation('relu')(X)
    X = MaxPooling2D((3, 3), strides=(2, 2))(X)

    # Stage 2
    X = convolutional_block(X, f = 3, filters = [64, 64, 256], stage = 2, block='a', s = 1)
    X = identity_block(X, 3, [64, 64, 256], stage=2, block='b')
    X = identity_block(X, 3, [64, 64, 256], stage=2, block='c')

    ### START CODE HERE ###

    # Stage 3 (≈4 lines)
    # The convolutional block uses three sets of filters of size [128,128,512], "f" is 3, "s" is 2 and the block is "a".
    # The 3 identity blocks use three sets of filters of size [128,128,512], "f" is 3 and the blocks are "b", "c" and "d".
    X = convolutional_block(X, f = 3, filters=[128,128,512], stage = 3, block='a', s = 2)
    X = identity_block(X, f = 3, filters=[128,128,512], stage= 3, block='b')
    X = identity_block(X, f = 3, filters=[128,128,512], stage= 3, block='c')
    X = identity_block(X, f = 3, filters=[128,128,512], stage= 3, block='d')

    # Stage 4 (≈6 lines)
    # The convolutional block uses three sets of filters of size [256, 256, 1024], "f" is 3, "s" is 2 and the block is "a".
    # The 5 identity blocks use three sets of filters of size [256, 256, 1024], "f" is 3 and the blocks are "b", "c", "d", "e" and "f".
    X = convolutional_block(X, f = 3, filters=[256, 256, 1024], block='a', stage=4, s = 2)
    X = identity_block(X, f = 3, filters=[256, 256, 1024], block='b', stage=4)
    X = identity_block(X, f = 3, filters=[256, 256, 1024], block='c', stage=4)
    X = identity_block(X, f = 3, filters=[256, 256, 1024], block='d', stage=4)
    X = identity_block(X, f = 3, filters=[256, 256, 1024], block='e', stage=4)
    X = identity_block(X, f = 3, filters=[256, 256, 1024], block='f', stage=4)

    # Stage 5 (≈3 lines)
    # The convolutional block uses three sets of filters of size [512, 512, 2048], "f" is 3, "s" is 2 and the block is "a".
    # The 2 identity blocks use three sets of filters of size [256, 256, 2048], "f" is 3 and the blocks are "b" and "c".
    X = convolutional_block(X, f = 3, filters=[512, 512, 2048], stage=5, block='a', s = 2)
    # The spec above gives [256, 256, 2048]; the grader reportedly fails with it and expects [512, 512, 2048].
    # Both build a valid network, since only F3 has to match the 2048 input channels.
    X = identity_block(X, f = 3, filters=[256, 256, 2048], stage=5, block='b')
    X = identity_block(X, f = 3, filters=[256, 256, 2048], stage=5, block='c')

    # AVGPOOL (≈1 line). Use "X = AveragePooling2D(...)(X)"
    # The 2D Average Pooling uses a window of shape (2,2) and its name is "avg_pool".
    X = AveragePooling2D(pool_size=(2, 2), name='avg_pool')(X)

    ### END CODE HERE ###

    # Output layer
    X = Flatten()(X)
    X = Dense(classes, activation='softmax', name='fc' + str(classes), kernel_initializer = glorot_uniform(seed=0))(X)

    # Create model
    model = Model(inputs = X_input, outputs = X, name='ResNet50')

    return model
```

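Run the following code to build the model's graph. If your implementation is not correct, you will find out by checking your accuracy when running model.fit(...) below.

```python
model = ResNet50(input_shape = (64, 64, 3), classes = 6)
```

As seen in the Keras tutorial notebook, before training a model you need to configure the learning process by compiling the model.

```python
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
```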
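loss='categorical_crossentropy' compares the softmax output with the one-hot labels, averaging $-\sum_k y_k \log p_k$ over the examples. The NumPy function below is a sketch of that quantity, not Keras' own implementation. The clipping constant eps is an assumption added to avoid log(0), similar in spirit to what Keras does internally.

```python
import numpy as np


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over examples of -sum_k y_k * log(p_k), for one-hot y_true and softmax y_pred."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)  # avoid log(0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))


y_true = np.array([[0, 1, 0], [1, 0, 0]])
y_pred = np.array([[0.1, 0.8, 0.1], [0.5, 0.25, 0.25]])
print(categorical_crossentropy(y_true, y_pred))  # (-log 0.8 - log 0.5) / 2, about 0.458
```

Only the probability assigned to the correct class contributes, so the loss falls toward 0 as that probability approaches 1.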

The model is now ready to be trained. The only thing you need is a dataset.

```python
X_train_orig, Y_train_orig, X_test_orig, Y_test_orig, classes = load_dataset()

# Normalize image vectors
X_train = X_train_orig/255.
X_test = X_test_orig/255.

# Convert training and test labels to one hot matrices
Y_train = convert_to_one_hot(Y_train_orig, 6).T
Y_test = convert_to_one_hot(Y_test_orig, 6).T

print ("number of training examples = " + str(X_train.shape[0]))
print ("number of test examples = " + str(X_test.shape[0]))
print ("X_train shape: " + str(X_train.shape))
print ("Y_train shape: " + str(Y_train.shape))
print ("X_test shape: " + str(X_test.shape))
print ("Y_test shape: " + str(Y_test.shape))
```

```
number of training examples = 1080
number of test examples = 120
X_train shape: (1080, 64, 64, 3)
Y_train shape: (1080, 6)
X_test shape: (120, 64, 64, 3)
Y_test shape: (120, 6)
```
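convert_to_one_hot comes from resnets_utils, which is not shown here. Assuming the labels are stored as a (1, m) integer array, an equivalent conversion can be sketched with np.eye. The name convert_to_one_hot_sketch is hypothetical. It returns a (C, m) matrix, which is why the code above transposes the result to get the (1080, 6) and (120, 6) shapes.

```python
import numpy as np


def convert_to_one_hot_sketch(Y, C):
    """Hypothetical equivalent: map integer labels of shape (1, m) to a (C, m) one-hot matrix."""
    return np.eye(C)[Y.reshape(-1)].T  # row i of np.eye(C) is the one-hot vector for class i


Y_orig = np.array([[0, 2, 5, 1]])                # labels laid out as (1, m), like Y_train_orig
Y = convert_to_one_hot_sketch(Y_orig, 6).T       # transpose to (m, C), as in the code above
print(Y.shape)  # (4, 6)
```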

```python
model.fit(X_train, Y_train, epochs = 20, batch_size = 32)
```

```
Epoch 1/20
1080/1080 [==============================] - 61s 56ms/step - loss: 1.9241 - accuracy: 0.4481
Epoch 2/20
1080/1080 [==============================] - 62s 58ms/step - loss: 0.7035 - accuracy: 0.8009
Epoch 3/20
1080/1080 [==============================] - 66s 61ms/step - loss: 0.5215 - accuracy: 0.8583
Epoch 4/20
1080/1080 [==============================] - 67s 62ms/step - loss: 0.3746 - accuracy: 0.8907
Epoch 5/20
1080/1080 [==============================] - 69s 64ms/step - loss: 0.5989 - accuracy: 0.8574
Epoch 6/20
1080/1080 [==============================] - 70s 65ms/step - loss: 0.4219 - accuracy: 0.8944
Epoch 7/20
1080/1080 [==============================] - 71s 66ms/step - loss: 0.3129 - accuracy: 0.9472
Epoch 8/20
1080/1080 [==============================] - 69s 64ms/step - loss: 0.1520 - accuracy: 0.9491
Epoch 9/20
1080/1080 [==============================] - 67s 62ms/step - loss: 0.1416 - accuracy: 0.9583
Epoch 10/20
1080/1080 [==============================] - 67s 62ms/step - loss: 0.0649 - accuracy: 0.9750
Epoch 11/20
1080/1080 [==============================] - 66s 62ms/step - loss: 0.0243 - accuracy: 0.9926
Epoch 12/20
1080/1080 [==============================] - 68s 63ms/step - loss: 0.0681 - accuracy: 0.9824
Epoch 13/20
1080/1080 [==============================] - 67s 62ms/step - loss: 0.0557 - accuracy: 0.9806
Epoch 14/20
1080/1080 [==============================] - 67s 62ms/step - loss: 0.0510 - accuracy: 0.9833
Epoch 15/20
1080/1080 [==============================] - 68s 63ms/step - loss: 0.0359 - accuracy: 0.9898
Epoch 16/20
1080/1080 [==============================] - 67s 62ms/step - loss: 0.0112 - accuracy: 0.9972
Epoch 17/20
1080/1080 [==============================] - 68s 63ms/step - loss: 0.0033 - accuracy: 1.0000
Epoch 18/20
1080/1080 [==============================] - 69s 64ms/step - loss: 0.0256 - accuracy: 0.9907
Epoch 19/20
1080/1080 [==============================] - 68s 63ms/step - loss: 0.0190 - accuracy: 0.9917
Epoch 20/20
1080/1080 [==============================] - 66s 61ms/step - loss: 0.0246 - accuracy: 0.9907
```

Let's see how this model (trained here for 20 epochs) performs on the test set.

```python
preds = model.evaluate(X_test, Y_test)
print ("Loss = " + str(preds[0]))
print ("Test Accuracy = " + str(preds[1]))
```

120/120 [==============================] - 2s 19ms/step

Loss = 0.2958054721355438

Test Accuracy = 0.9333333373069763
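With loss='categorical_crossentropy', Keras interprets the 'accuracy' metric as categorical accuracy. That is the fraction of examples whose arg-max prediction matches the one-hot label. Below is a simplified NumPy sketch (hypothetical function name). On the 120 test images, an accuracy of 0.9333 corresponds to 112 correct predictions, since 112/120 ≈ 0.9333.

```python
import numpy as np


def categorical_accuracy(y_true, y_pred):
    """Fraction of rows where the arg-max of the prediction matches the one-hot label."""
    return float(np.mean(np.argmax(y_true, axis=1) == np.argmax(y_pred, axis=1)))


y_true = np.eye(3)[[0, 1, 2, 1]]        # one-hot labels for classes 0, 1, 2, 1
y_pred = np.array([[0.7, 0.2, 0.1],
                   [0.1, 0.6, 0.3],
                   [0.3, 0.4, 0.3],     # wrong: predicts class 1 instead of 2
                   [0.2, 0.5, 0.3]])
print(categorical_accuracy(y_true, y_pred))  # 0.75
```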

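The original assignment asks you to train the model for only two epochs, which gives poor performance (the run above used 20 epochs). Even so, go ahead and submit your assignment: to check correctness, the online grader also runs your code for only a few epochs.

Sample trained model (not provided)

After you have finished the official (graded) part of this assignment, you can optionally train the ResNet for more epochs. We get much better performance when we train for about 20 epochs, but this takes more than an hour on a CPU.

Using a GPU, we've trained our own ResNet50 model's weights on the SIGNS dataset. You can load our trained model and run it on the test set in the cell below. Loading the model may take about 1 minute.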

```python
# model = load_model('ResNet50.h5')  # This would load the pretrained model; for now we keep using the model trained above
preds = model.evaluate(X_test, Y_test)
print ("Loss = " + str(preds[0]))
print ("Test Accuracy = " + str(preds[1]))
```

120/120 [==============================] - 2s 14ms/step

Loss = 0.2958054721355438

Test Accuracy = 0.9333333373069763

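When trained for a sufficient number of iterations, ResNet50 is a powerful model for image classification. We hope you can use what you've learned and apply it to your own classification problems to achieve state-of-the-art accuracy.

Congratulations on finishing this assignment! You've now implemented a high-performing image classification system!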

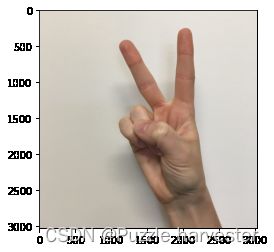

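4 Test on your own image

If you wish, you can also take a picture of your own hand and see the output of the model. To do this:

- Click on "File" in the upper bar of this notebook, then click "Open" to go to your Coursera Hub.

- Add your image to this Jupyter Notebook's directory, in the "images" folder.

- Write your image's name in the following code.

- Run the code and check whether the algorithm is right!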

```python
from matplotlib.pyplot import imread  # replaces scipy.misc.imread, which was deprecated in SciPy 1.0 and removed in 1.2

img_path = 'images/my_image.jpg'
img = image.load_img(img_path, target_size=(64, 64))
x = image.img_to_array(img)
x = np.expand_dims(x, axis=0)
# Note: preprocess_input applies ImageNet-style ("caffe") preprocessing, whereas X_train was scaled by 1/255
x = preprocess_input(x)
print('Input image shape:', x.shape)
my_image = imread(img_path)
imshow(my_image)
print("class prediction vector [p(0), p(1), p(2), p(3), p(4), p(5)] = ")
print(model.predict(x))
```

```
Input image shape: (1, 64, 64, 3)
class prediction vector [p(0), p(1), p(2), p(3), p(4), p(5)] =
[[1. 0. 0. 0. 0. 0.]]
```
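The model was trained on X_train_orig/255., but the prediction cell feeds it the output of preprocess_input. In Keras' default "caffe" mode, that function converts RGB to BGR and subtracts per-channel ImageNet means instead of scaling pixels to [0, 1]. An input-scale mismatch like this can push the softmax toward saturated outputs such as the [[1. 0. 0. 0. 0. 0.]] above.

The NumPy sketch below contrasts the two. Both function names are hypothetical, and the caffe-style function is only an approximation of what preprocess_input does. To give the model inputs on the same scale it saw during training, one option is to replace preprocess_input(x) with x / 255.

```python
import numpy as np


def preprocess_like_training(img_array):
    """Scale an (H, W, 3) RGB array the way X_train was scaled (divide by 255) and add a batch axis."""
    x = np.asarray(img_array, dtype=np.float32) / 255.0
    return np.expand_dims(x, axis=0)


def caffe_style_preprocess(img_array):
    """Approximation of Keras' preprocess_input in 'caffe' mode:
    RGB -> BGR, then subtract per-channel ImageNet means, without any scaling."""
    mean_bgr = np.array([103.939, 116.779, 123.68], dtype=np.float32)
    x = np.asarray(img_array, dtype=np.float32)[..., ::-1] - mean_bgr
    return np.expand_dims(x, axis=0)


fake_image = np.full((64, 64, 3), 200, dtype=np.uint8)  # hypothetical stand-in for a real photo
print(preprocess_like_training(fake_image).max())  # about 0.784, inside the training range [0, 1]
print(caffe_style_preprocess(fake_image).max())    # about 96.06, far outside that range
```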

You can also print a summary of your model by running the following code.

```python
model.summary()
```

Model: "ResNet50"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

zero_padding2d_1 (ZeroPadding2D (None, 70, 70, 3) 0 input_1[0][0]

__________________________________________________________________________________________________

conv1 (Conv2D) (None, 32, 32, 64) 9472 zero_padding2d_1[0][0]

__________________________________________________________________________________________________

bn_conv1 (BatchNormalization) (None, 32, 32, 64) 256 conv1[0][0]

__________________________________________________________________________________________________

activation_4 (Activation) (None, 32, 32, 64) 0 bn_conv1[0][0]

__________________________________________________________________________________________________

max_pooling2d_1 (MaxPooling2D) (None, 15, 15, 64) 0 activation_4[0][0]

__________________________________________________________________________________________________

res2a_branch2a (Conv2D) (None, 15, 15, 64) 4160 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

bn2a_branch2a (BatchNormalizati (None, 15, 15, 64) 256 res2a_branch2a[0][0]

__________________________________________________________________________________________________

activation_5 (Activation) (None, 15, 15, 64) 0 bn2a_branch2a[0][0]

__________________________________________________________________________________________________

res2a_branch2b (Conv2D) (None, 15, 15, 64) 36928 activation_5[0][0]

__________________________________________________________________________________________________

bn2a_branch2b (BatchNormalizati (None, 15, 15, 64) 256 res2a_branch2b[0][0]

__________________________________________________________________________________________________

activation_6 (Activation) (None, 15, 15, 64) 0 bn2a_branch2b[0][0]

__________________________________________________________________________________________________

res2a_branch2c (Conv2D) (None, 15, 15, 256) 16640 activation_6[0][0]

__________________________________________________________________________________________________

res2a_branch1 (Conv2D) (None, 15, 15, 256) 16640 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

bn2a_branch2c (BatchNormalizati (None, 15, 15, 256) 1024 res2a_branch2c[0][0]

__________________________________________________________________________________________________

bn2a_branch1 (BatchNormalizatio (None, 15, 15, 256) 1024 res2a_branch1[0][0]

__________________________________________________________________________________________________

add_2 (Add) (None, 15, 15, 256) 0 bn2a_branch2c[0][0]

bn2a_branch1[0][0]

__________________________________________________________________________________________________

activation_7 (Activation) (None, 15, 15, 256) 0 add_2[0][0]

__________________________________________________________________________________________________

res2b_branch2a (Conv2D) (None, 15, 15, 64) 16448 activation_7[0][0]

__________________________________________________________________________________________________

bn2b_branch2a (BatchNormalizati (None, 15, 15, 64) 256 res2b_branch2a[0][0]

__________________________________________________________________________________________________

activation_8 (Activation) (None, 15, 15, 64) 0 bn2b_branch2a[0][0]

__________________________________________________________________________________________________

res2b_branch2b (Conv2D) (None, 15, 15, 64) 36928 activation_8[0][0]

__________________________________________________________________________________________________

bn2b_branch2b (BatchNormalizati (None, 15, 15, 64) 256 res2b_branch2b[0][0]

__________________________________________________________________________________________________

activation_9 (Activation) (None, 15, 15, 64) 0 bn2b_branch2b[0][0]

__________________________________________________________________________________________________

res2b_branch2c (Conv2D) (None, 15, 15, 256) 16640 activation_9[0][0]

__________________________________________________________________________________________________

bn2b_branch2c (BatchNormalizati (None, 15, 15, 256) 1024 res2b_branch2c[0][0]

__________________________________________________________________________________________________

add_3 (Add) (None, 15, 15, 256) 0 bn2b_branch2c[0][0]

activation_7[0][0]

__________________________________________________________________________________________________

activation_10 (Activation) (None, 15, 15, 256) 0 add_3[0][0]

__________________________________________________________________________________________________

res2c_branch2a (Conv2D) (None, 15, 15, 64) 16448 activation_10[0][0]

__________________________________________________________________________________________________

bn2c_branch2a (BatchNormalizati (None, 15, 15, 64) 256 res2c_branch2a[0][0]

__________________________________________________________________________________________________

activation_11 (Activation) (None, 15, 15, 64) 0 bn2c_branch2a[0][0]

__________________________________________________________________________________________________

res2c_branch2b (Conv2D) (None, 15, 15, 64) 36928 activation_11[0][0]

__________________________________________________________________________________________________

bn2c_branch2b (BatchNormalizati (None, 15, 15, 64) 256 res2c_branch2b[0][0]

__________________________________________________________________________________________________

activation_12 (Activation) (None, 15, 15, 64) 0 bn2c_branch2b[0][0]

__________________________________________________________________________________________________

res2c_branch2c (Conv2D) (None, 15, 15, 256) 16640 activation_12[0][0]

__________________________________________________________________________________________________

bn2c_branch2c (BatchNormalizati (None, 15, 15, 256) 1024 res2c_branch2c[0][0]

__________________________________________________________________________________________________

add_4 (Add) (None, 15, 15, 256) 0 bn2c_branch2c[0][0]

activation_10[0][0]

__________________________________________________________________________________________________

activation_13 (Activation) (None, 15, 15, 256) 0 add_4[0][0]

__________________________________________________________________________________________________

res3a_branch2a (Conv2D) (None, 8, 8, 128) 32896 activation_13[0][0]

__________________________________________________________________________________________________

bn3a_branch2a (BatchNormalizati (None, 8, 8, 128) 512 res3a_branch2a[0][0]

__________________________________________________________________________________________________

activation_14 (Activation) (None, 8, 8, 128) 0 bn3a_branch2a[0][0]

__________________________________________________________________________________________________

res3a_branch2b (Conv2D) (None, 8, 8, 128) 147584 activation_14[0][0]

__________________________________________________________________________________________________

bn3a_branch2b (BatchNormalizati (None, 8, 8, 128) 512 res3a_branch2b[0][0]

__________________________________________________________________________________________________

activation_15 (Activation) (None, 8, 8, 128) 0 bn3a_branch2b[0][0]

__________________________________________________________________________________________________

res3a_branch2c (Conv2D) (None, 8, 8, 512) 66048 activation_15[0][0]

__________________________________________________________________________________________________

res3a_branch1 (Conv2D) (None, 8, 8, 512) 131584 activation_13[0][0]

__________________________________________________________________________________________________

bn3a_branch2c (BatchNormalizati (None, 8, 8, 512) 2048 res3a_branch2c[0][0]

__________________________________________________________________________________________________

bn3a_branch1 (BatchNormalizatio (None, 8, 8, 512) 2048 res3a_branch1[0][0]

__________________________________________________________________________________________________

add_5 (Add) (None, 8, 8, 512) 0 bn3a_branch2c[0][0]

bn3a_branch1[0][0]

__________________________________________________________________________________________________

activation_16 (Activation) (None, 8, 8, 512) 0 add_5[0][0]

__________________________________________________________________________________________________

res3b_branch2a (Conv2D) (None, 8, 8, 128) 65664 activation_16[0][0]

__________________________________________________________________________________________________

bn3b_branch2a (BatchNormalizati (None, 8, 8, 128) 512 res3b_branch2a[0][0]

__________________________________________________________________________________________________

activation_17 (Activation) (None, 8, 8, 128) 0 bn3b_branch2a[0][0]

__________________________________________________________________________________________________

res3b_branch2b (Conv2D) (None, 8, 8, 128) 147584 activation_17[0][0]

__________________________________________________________________________________________________

bn3b_branch2b (BatchNormalizati (None, 8, 8, 128) 512 res3b_branch2b[0][0]

__________________________________________________________________________________________________

activation_18 (Activation) (None, 8, 8, 128) 0 bn3b_branch2b[0][0]

__________________________________________________________________________________________________

res3b_branch2c (Conv2D) (None, 8, 8, 512) 66048 activation_18[0][0]

__________________________________________________________________________________________________

bn3b_branch2c (BatchNormalizati (None, 8, 8, 512) 2048 res3b_branch2c[0][0]

__________________________________________________________________________________________________

add_6 (Add) (None, 8, 8, 512) 0 bn3b_branch2c[0][0]

activation_16[0][0]

__________________________________________________________________________________________________

activation_19 (Activation) (None, 8, 8, 512) 0 add_6[0][0]

__________________________________________________________________________________________________

res3c_branch2a (Conv2D) (None, 8, 8, 128) 65664 activation_19[0][0]

__________________________________________________________________________________________________

bn3c_branch2a (BatchNormalizati (None, 8, 8, 128) 512 res3c_branch2a[0][0]

__________________________________________________________________________________________________

activation_20 (Activation) (None, 8, 8, 128) 0 bn3c_branch2a[0][0]

__________________________________________________________________________________________________

res3c_branch2b (Conv2D) (None, 8, 8, 128) 147584 activation_20[0][0]

__________________________________________________________________________________________________

bn3c_branch2b (BatchNormalizati (None, 8, 8, 128) 512 res3c_branch2b[0][0]

__________________________________________________________________________________________________

activation_21 (Activation) (None, 8, 8, 128) 0 bn3c_branch2b[0][0]

__________________________________________________________________________________________________

res3c_branch2c (Conv2D) (None, 8, 8, 512) 66048 activation_21[0][0]

__________________________________________________________________________________________________

bn3c_branch2c (BatchNormalizati (None, 8, 8, 512) 2048 res3c_branch2c[0][0]

__________________________________________________________________________________________________

add_7 (Add) (None, 8, 8, 512) 0 bn3c_branch2c[0][0]

activation_19[0][0]

__________________________________________________________________________________________________

activation_22 (Activation) (None, 8, 8, 512) 0 add_7[0][0]

__________________________________________________________________________________________________

res3d_branch2a (Conv2D) (None, 8, 8, 128) 65664 activation_22[0][0]

__________________________________________________________________________________________________

bn3d_branch2a (BatchNormalizati (None, 8, 8, 128) 512 res3d_branch2a[0][0]

__________________________________________________________________________________________________

activation_23 (Activation) (None, 8, 8, 128) 0 bn3d_branch2a[0][0]

__________________________________________________________________________________________________

res3d_branch2b (Conv2D) (None, 8, 8, 128) 147584 activation_23[0][0]

__________________________________________________________________________________________________

bn3d_branch2b (BatchNormalizati (None, 8, 8, 128) 512 res3d_branch2b[0][0]

__________________________________________________________________________________________________

activation_24 (Activation) (None, 8, 8, 128) 0 bn3d_branch2b[0][0]

__________________________________________________________________________________________________

res3d_branch2c (Conv2D) (None, 8, 8, 512) 66048 activation_24[0][0]

__________________________________________________________________________________________________

bn3d_branch2c (BatchNormalizati (None, 8, 8, 512) 2048 res3d_branch2c[0][0]

__________________________________________________________________________________________________

add_8 (Add) (None, 8, 8, 512) 0 bn3d_branch2c[0][0], activation_22[0][0]

__________________________________________________________________________________________________

activation_25 (Activation) (None, 8, 8, 512) 0 add_8[0][0]

__________________________________________________________________________________________________

res4a_branch2a (Conv2D) (None, 4, 4, 256) 131328 activation_25[0][0]

__________________________________________________________________________________________________

bn4a_branch2a (BatchNormalizati (None, 4, 4, 256) 1024 res4a_branch2a[0][0]

__________________________________________________________________________________________________

activation_26 (Activation) (None, 4, 4, 256) 0 bn4a_branch2a[0][0]

__________________________________________________________________________________________________

res4a_branch2b (Conv2D) (None, 4, 4, 256) 590080 activation_26[0][0]

__________________________________________________________________________________________________

bn4a_branch2b (BatchNormalizati (None, 4, 4, 256) 1024 res4a_branch2b[0][0]

__________________________________________________________________________________________________

activation_27 (Activation) (None, 4, 4, 256) 0 bn4a_branch2b[0][0]

__________________________________________________________________________________________________

res4a_branch2c (Conv2D) (None, 4, 4, 1024) 263168 activation_27[0][0]

__________________________________________________________________________________________________

res4a_branch1 (Conv2D) (None, 4, 4, 1024) 525312 activation_25[0][0]

__________________________________________________________________________________________________

bn4a_branch2c (BatchNormalizati (None, 4, 4, 1024) 4096 res4a_branch2c[0][0]

__________________________________________________________________________________________________

bn4a_branch1 (BatchNormalizatio (None, 4, 4, 1024) 4096 res4a_branch1[0][0]

__________________________________________________________________________________________________

add_9 (Add) (None, 4, 4, 1024) 0 bn4a_branch2c[0][0], bn4a_branch1[0][0]

__________________________________________________________________________________________________

activation_28 (Activation) (None, 4, 4, 1024) 0 add_9[0][0]

__________________________________________________________________________________________________

res4b_branch2a (Conv2D) (None, 4, 4, 256) 262400 activation_28[0][0]

__________________________________________________________________________________________________

bn4b_branch2a (BatchNormalizati (None, 4, 4, 256) 1024 res4b_branch2a[0][0]

__________________________________________________________________________________________________

activation_29 (Activation) (None, 4, 4, 256) 0 bn4b_branch2a[0][0]

__________________________________________________________________________________________________

res4b_branch2b (Conv2D) (None, 4, 4, 256) 590080 activation_29[0][0]

__________________________________________________________________________________________________

bn4b_branch2b (BatchNormalizati (None, 4, 4, 256) 1024 res4b_branch2b[0][0]

__________________________________________________________________________________________________

activation_30 (Activation) (None, 4, 4, 256) 0 bn4b_branch2b[0][0]

__________________________________________________________________________________________________

res4b_branch2c (Conv2D) (None, 4, 4, 1024) 263168 activation_30[0][0]

__________________________________________________________________________________________________

bn4b_branch2c (BatchNormalizati (None, 4, 4, 1024) 4096 res4b_branch2c[0][0]

__________________________________________________________________________________________________

add_10 (Add) (None, 4, 4, 1024) 0 bn4b_branch2c[0][0], activation_28[0][0]

__________________________________________________________________________________________________

activation_31 (Activation) (None, 4, 4, 1024) 0 add_10[0][0]

__________________________________________________________________________________________________

res4c_branch2a (Conv2D) (None, 4, 4, 256) 262400 activation_31[0][0]

__________________________________________________________________________________________________

bn4c_branch2a (BatchNormalizati (None, 4, 4, 256) 1024 res4c_branch2a[0][0]

__________________________________________________________________________________________________

activation_32 (Activation) (None, 4, 4, 256) 0 bn4c_branch2a[0][0]

__________________________________________________________________________________________________

res4c_branch2b (Conv2D) (None, 4, 4, 256) 590080 activation_32[0][0]

__________________________________________________________________________________________________

bn4c_branch2b (BatchNormalizati (None, 4, 4, 256) 1024 res4c_branch2b[0][0]

__________________________________________________________________________________________________

activation_33 (Activation) (None, 4, 4, 256) 0 bn4c_branch2b[0][0]

__________________________________________________________________________________________________

res4c_branch2c (Conv2D) (None, 4, 4, 1024) 263168 activation_33[0][0]

__________________________________________________________________________________________________

bn4c_branch2c (BatchNormalizati (None, 4, 4, 1024) 4096 res4c_branch2c[0][0]

__________________________________________________________________________________________________

add_11 (Add) (None, 4, 4, 1024) 0 bn4c_branch2c[0][0], activation_31[0][0]

__________________________________________________________________________________________________

activation_34 (Activation) (None, 4, 4, 1024) 0 add_11[0][0]

__________________________________________________________________________________________________

res4d_branch2a (Conv2D) (None, 4, 4, 256) 262400 activation_34[0][0]

__________________________________________________________________________________________________

bn4d_branch2a (BatchNormalizati (None, 4, 4, 256) 1024 res4d_branch2a[0][0]

__________________________________________________________________________________________________

activation_35 (Activation) (None, 4, 4, 256) 0 bn4d_branch2a[0][0]

__________________________________________________________________________________________________

res4d_branch2b (Conv2D) (None, 4, 4, 256) 590080 activation_35[0][0]

__________________________________________________________________________________________________

bn4d_branch2b (BatchNormalizati (None, 4, 4, 256) 1024 res4d_branch2b[0][0]

__________________________________________________________________________________________________

activation_36 (Activation) (None, 4, 4, 256) 0 bn4d_branch2b[0][0]

__________________________________________________________________________________________________

res4d_branch2c (Conv2D) (None, 4, 4, 1024) 263168 activation_36[0][0]

__________________________________________________________________________________________________

bn4d_branch2c (BatchNormalizati (None, 4, 4, 1024) 4096 res4d_branch2c[0][0]

__________________________________________________________________________________________________

add_12 (Add) (None, 4, 4, 1024) 0 bn4d_branch2c[0][0], activation_34[0][0]

__________________________________________________________________________________________________

activation_37 (Activation) (None, 4, 4, 1024) 0 add_12[0][0]

__________________________________________________________________________________________________

res4e_branch2a (Conv2D) (None, 4, 4, 256) 262400 activation_37[0][0]

__________________________________________________________________________________________________

bn4e_branch2a (BatchNormalizati (None, 4, 4, 256) 1024 res4e_branch2a[0][0]

__________________________________________________________________________________________________

activation_38 (Activation) (None, 4, 4, 256) 0 bn4e_branch2a[0][0]

__________________________________________________________________________________________________

res4e_branch2b (Conv2D) (None, 4, 4, 256) 590080 activation_38[0][0]

__________________________________________________________________________________________________

bn4e_branch2b (BatchNormalizati (None, 4, 4, 256) 1024 res4e_branch2b[0][0]

__________________________________________________________________________________________________

activation_39 (Activation) (None, 4, 4, 256) 0 bn4e_branch2b[0][0]

__________________________________________________________________________________________________

res4e_branch2c (Conv2D) (None, 4, 4, 1024) 263168 activation_39[0][0]

__________________________________________________________________________________________________

bn4e_branch2c (BatchNormalizati (None, 4, 4, 1024) 4096 res4e_branch2c[0][0]

__________________________________________________________________________________________________

add_13 (Add) (None, 4, 4, 1024) 0 bn4e_branch2c[0][0], activation_37[0][0]

__________________________________________________________________________________________________

activation_40 (Activation) (None, 4, 4, 1024) 0 add_13[0][0]

__________________________________________________________________________________________________

res4f_branch2a (Conv2D) (None, 4, 4, 256) 262400 activation_40[0][0]

__________________________________________________________________________________________________

bn4f_branch2a (BatchNormalizati (None, 4, 4, 256) 1024 res4f_branch2a[0][0]

__________________________________________________________________________________________________

activation_41 (Activation) (None, 4, 4, 256) 0 bn4f_branch2a[0][0]

__________________________________________________________________________________________________

res4f_branch2b (Conv2D) (None, 4, 4, 256) 590080 activation_41[0][0]

__________________________________________________________________________________________________

bn4f_branch2b (BatchNormalizati (None, 4, 4, 256) 1024 res4f_branch2b[0][0]

__________________________________________________________________________________________________

activation_42 (Activation) (None, 4, 4, 256) 0 bn4f_branch2b[0][0]

__________________________________________________________________________________________________

res4f_branch2c (Conv2D) (None, 4, 4, 1024) 263168 activation_42[0][0]

__________________________________________________________________________________________________

bn4f_branch2c (BatchNormalizati (None, 4, 4, 1024) 4096 res4f_branch2c[0][0]

__________________________________________________________________________________________________

add_14 (Add) (None, 4, 4, 1024) 0 bn4f_branch2c[0][0], activation_40[0][0]

__________________________________________________________________________________________________

activation_43 (Activation) (None, 4, 4, 1024) 0 add_14[0][0]

__________________________________________________________________________________________________

res5a_branch2a (Conv2D) (None, 2, 2, 512) 524800 activation_43[0][0]

__________________________________________________________________________________________________

bn5a_branch2a (BatchNormalizati (None, 2, 2, 512) 2048 res5a_branch2a[0][0]

__________________________________________________________________________________________________

activation_44 (Activation) (None, 2, 2, 512) 0 bn5a_branch2a[0][0]

__________________________________________________________________________________________________

res5a_branch2b (Conv2D) (None, 2, 2, 512) 2359808 activation_44[0][0]

__________________________________________________________________________________________________

bn5a_branch2b (BatchNormalizati (None, 2, 2, 512) 2048 res5a_branch2b[0][0]

__________________________________________________________________________________________________

activation_45 (Activation) (None, 2, 2, 512) 0 bn5a_branch2b[0][0]

__________________________________________________________________________________________________

res5a_branch2c (Conv2D) (None, 2, 2, 2048) 1050624 activation_45[0][0]

__________________________________________________________________________________________________

res5a_branch1 (Conv2D) (None, 2, 2, 2048) 2099200 activation_43[0][0]

__________________________________________________________________________________________________

bn5a_branch2c (BatchNormalizati (None, 2, 2, 2048) 8192 res5a_branch2c[0][0]

__________________________________________________________________________________________________

bn5a_branch1 (BatchNormalizatio (None, 2, 2, 2048) 8192 res5a_branch1[0][0]

__________________________________________________________________________________________________

add_15 (Add) (None, 2, 2, 2048) 0 bn5a_branch2c[0][0], bn5a_branch1[0][0]

__________________________________________________________________________________________________

activation_46 (Activation) (None, 2, 2, 2048) 0 add_15[0][0]

__________________________________________________________________________________________________

res5b_branch2a (Conv2D) (None, 2, 2, 256) 524544 activation_46[0][0]

__________________________________________________________________________________________________

bn5b_branch2a (BatchNormalizati (None, 2, 2, 256) 1024 res5b_branch2a[0][0]

__________________________________________________________________________________________________

activation_47 (Activation) (None, 2, 2, 256) 0 bn5b_branch2a[0][0]

__________________________________________________________________________________________________

res5b_branch2b (Conv2D) (None, 2, 2, 256) 590080 activation_47[0][0]

__________________________________________________________________________________________________

bn5b_branch2b (BatchNormalizati (None, 2, 2, 256) 1024 res5b_branch2b[0][0]

__________________________________________________________________________________________________

activation_48 (Activation) (None, 2, 2, 256) 0 bn5b_branch2b[0][0]

__________________________________________________________________________________________________

res5b_branch2c (Conv2D) (None, 2, 2, 2048) 526336 activation_48[0][0]

__________________________________________________________________________________________________

bn5b_branch2c (BatchNormalizati (None, 2, 2, 2048) 8192 res5b_branch2c[0][0]

__________________________________________________________________________________________________

add_16 (Add) (None, 2, 2, 2048) 0 bn5b_branch2c[0][0], activation_46[0][0]

__________________________________________________________________________________________________

activation_49 (Activation) (None, 2, 2, 2048) 0 add_16[0][0]

__________________________________________________________________________________________________

res5c_branch2a (Conv2D) (None, 2, 2, 256) 524544 activation_49[0][0]

__________________________________________________________________________________________________

bn5c_branch2a (BatchNormalizati (None, 2, 2, 256) 1024 res5c_branch2a[0][0]

__________________________________________________________________________________________________

activation_50 (Activation) (None, 2, 2, 256) 0 bn5c_branch2a[0][0]

__________________________________________________________________________________________________

res5c_branch2b (Conv2D) (None, 2, 2, 256) 590080 activation_50[0][0]

__________________________________________________________________________________________________

bn5c_branch2b (BatchNormalizati (None, 2, 2, 256) 1024 res5c_branch2b[0][0]

__________________________________________________________________________________________________

activation_51 (Activation) (None, 2, 2, 256) 0 bn5c_branch2b[0][0]

__________________________________________________________________________________________________

res5c_branch2c (Conv2D) (None, 2, 2, 2048) 526336 activation_51[0][0]

__________________________________________________________________________________________________

bn5c_branch2c (BatchNormalizati (None, 2, 2, 2048) 8192 res5c_branch2c[0][0]

__________________________________________________________________________________________________

add_17 (Add) (None, 2, 2, 2048) 0 bn5c_branch2c[0][0], activation_49[0][0]

__________________________________________________________________________________________________

activation_52 (Activation) (None, 2, 2, 2048) 0 add_17[0][0]

__________________________________________________________________________________________________

average_pooling2d_1 (AveragePoo (None, 1, 1, 2048) 0 activation_52[0][0]

__________________________________________________________________________________________________

flatten_1 (Flatten) (None, 2048) 0 average_pooling2d_1[0][0]

__________________________________________________________________________________________________

fc6 (Dense) (None, 6) 12294 flatten_1[0][0]

==================================================================================================

Total params: 17,958,790

Trainable params: 17,907,718

Non-trainable params: 51,072

__________________________________________________________________________________________________
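You can check the parameter counts in this summary by hand:

- **Conv2D:** a k×k layer has k·k·C_in·C_out weights plus C_out biases. For example, res4a_branch2b has 3·3·256·256 + 256 = 590,080.
- **BatchNormalization:** 4 values per channel. gamma and beta are trained; the moving mean and moving variance are not. So every non-trainable parameter comes from BatchNormalization.
- **Dense (fc6):** 2048·6 + 6 = 12,294.

The sketch below recomputes the three totals. It assumes the assignment's ResNet50 configuration: a 3-channel input, a 7×7 stage-1 convolution with 64 filters, and the per-stage filter lists in `STAGES`. Only stages 3 to 5 are visible in the output above. Stage 5's identity blocks use [256, 256, 2048], which matches res5b_branch2a having 256 channels. The helper names are hypothetical, not part of Keras.

```python
def conv2d_params(k, c_in, c_out):
    """k x k Conv2D: weights plus one bias per output channel."""
    return k * k * c_in * c_out + c_out

def bn_params(c):
    """BatchNormalization: gamma, beta (trainable) + moving mean/variance (non-trainable)."""
    return 4 * c

def bottleneck(c_in, filters, shortcut_conv=False):
    """Return (conv params, BN channel count) for a 1x1 -> 3x3 -> 1x1 ResNet block."""
    f1, f2, f3 = filters
    convs = conv2d_params(1, c_in, f1) + conv2d_params(3, f1, f2) + conv2d_params(1, f2, f3)
    bn_channels = f1 + f2 + f3
    if shortcut_conv:  # convolutional block: 1x1 conv + BN on the shortcut path
        convs += conv2d_params(1, c_in, f3)
        bn_channels += f3
    return convs, bn_channels

# Assumed assignment configuration:
# (conv-block filters, identity-block filters, number of identity blocks)
STAGES = [
    ((64, 64, 256), (64, 64, 256), 2),
    ((128, 128, 512), (128, 128, 512), 3),
    ((256, 256, 1024), (256, 256, 1024), 5),
    ((512, 512, 2048), (256, 256, 2048), 2),
]

def count_resnet50(in_channels=3, num_classes=6):
    """Return (total, trainable, non-trainable) parameter counts."""
    conv = conv2d_params(7, in_channels, 64)  # stage 1: 7x7 conv, 64 filters
    bn_channels = 64                          # stage 1 BatchNormalization
    c = 64
    for conv_f, id_f, n_id in STAGES:
        p, b = bottleneck(c, conv_f, shortcut_conv=True)
        conv, bn_channels = conv + p, bn_channels + b
        c = conv_f[2]
        for _ in range(n_id):
            p, b = bottleneck(c, id_f)
            conv, bn_channels = conv + p, bn_channels + b
    dense = c * num_classes + num_classes     # fc6 after flattening 1x1x2048
    total = conv + bn_params(bn_channels) + dense
    non_trainable = 2 * bn_channels           # moving mean + moving variance
    return total, total - non_trainable, non_trainable

print(count_resnet50())  # (17958790, 17907718, 51072)
```

The result matches the Total, Trainable and Non-trainable lines of the summary. That agreement suggests the assumed filter lists are the ones used to build this model.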

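The spatial sizes in the Output Shape column follow the standard formula ⌊(n + 2p − f)/s⌋ + 1. Here n is the input size, f the filter size, p the padding and s the stride. The sketch below traces the height/width through the network and assumes the following assignment settings (none of them appear in this output):

- a 64×64 input;
- ZeroPadding2D((3, 3)), a 7×7 stride-2 convolution and a 3×3 stride-2 max pool in stage 1;
- stride 1 for the stage-2 convolutional block and stride 2 for stages 3 to 5, applied to the first 1×1 convolution and to the shortcut;
- AveragePooling2D((2, 2)) at the end.

The 3×3 middle convolutions are assumed to use 'same' padding, so they do not change the size. The function names are hypothetical.

```python
def out_size(n, f, s=1, p=0):
    """Height/width after a conv or pooling layer: floor((n + 2p - f) / s) + 1."""
    return (n + 2 * p - f) // s + 1

def trace_spatial_sizes(n=64):
    """Trace the feature-map side length through the assumed ResNet50 layout."""
    sizes = {}
    n = out_size(n, 7, s=2, p=3)   # ZeroPadding2D((3, 3)) + 7x7 conv, stride 2
    n = out_size(n, 3, s=2)        # 3x3 max pool, stride 2, no padding
    n = out_size(n, 1, s=1)        # stage 2 conv block: stride 1
    sizes["stage2"] = n
    for stage in ("stage3", "stage4", "stage5"):
        n = out_size(n, 1, s=2)    # first 1x1 conv (and shortcut) with stride 2
        sizes[stage] = n
    sizes["avg_pool"] = out_size(n, 2, s=2)  # pool_size (2, 2); strides default to pool_size
    return sizes

print(trace_spatial_sizes())
# {'stage2': 15, 'stage3': 8, 'stage4': 4, 'stage5': 2, 'avg_pool': 1}
```

The 8 → 4 → 2 → 1 sequence matches the res3*, res4*, res5* and average_pooling2d_1 rows above. After pooling, Flatten leaves 1·1·2048 = 2048 features for fc6.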