计算机视觉 – Computer Vision | CV

计算机视觉(Computer Vision)是人工智能领域的一个重要分支。它的目的是:看懂图片里的内容。

本文将介绍计算机视觉的基本概念、实现原理、8 个任务和 4 个生活中常见的应用场景。

计算机视觉为什么重要?

人的大脑皮层, 有差不多 70% 都是在处理视觉信息。 是人类获取信息最主要的渠道,没有之一。

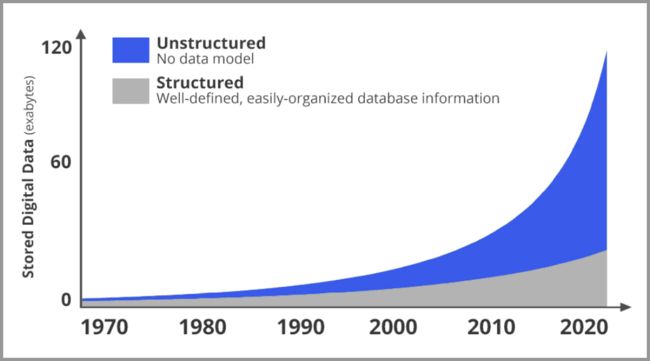

在网络世界,照片和视频(图像的集合)也正在发生爆炸式的增长!

下图是网络上新增数据的占比趋势图。灰色是结构化数据,蓝色是非结构化数据(大部分都是图像和视频)。可以很明显的发现,图片和视频正在以指数级的速度在增长。

而在计算机视觉出现之前,图像对于计算机来说是黑盒的状态。

一张图片对于机器只是一个文件。机器并不知道图片里的内容到底是什么,只知道这张图片是什么尺寸,多少MB,什么格式的。

如果计算机、人工智能想要在现实世界发挥重要作用,就必须看懂图片!这就是计算机视觉要解决的问题。

什么是计算机视觉 – CV?

计算机视觉是人工智能的一个重要分支,它要解决的问题就是:看懂图像里的内容。

比如:

- 图片里的宠物是猫还是狗?

- 图片里的人是老张还是老王?

- 这张照片里,桌子上放了哪些物品?

计算机视觉的原理是什么?

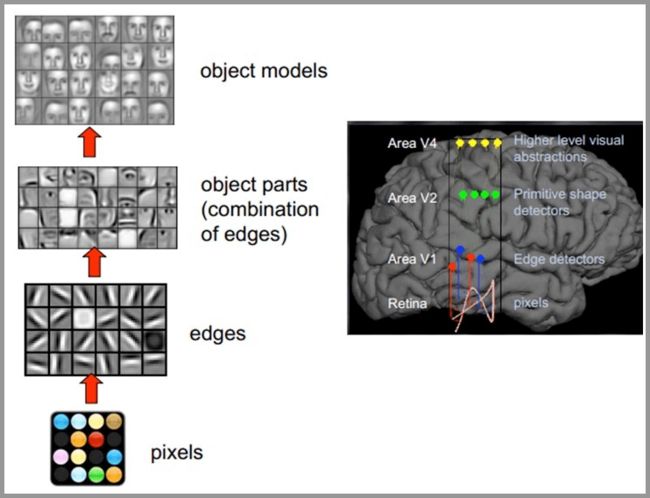

目前主流的基于深度学习的机器视觉方法,其原理跟人类大脑工作的原理比较相似。

人类的视觉原理如下:从原始信号摄入开始(瞳孔摄入像素 Pixels),接着做初步处理(大脑皮层某些细胞发现边缘和方向),然后抽象(大脑判定,眼前的物体的形状,是圆形的),然后进一步抽象(大脑进一步判定该物体是只气球)。

机器的方法也是类似:构造多层的神经网络,较低层的识别初级的图像特征,若干底层特征组成更上一层特征,最终通过多个层级的组合,最终在顶层做出分类。

计算机视觉的2大挑战

对于人类来说看懂图片是一件很简单的事情,但是对于机器来说这是一个非常难的事情,说 2 个典型的难点:

特征难以提取

同一只猫在不同的角度,不同的光线,不同的动作下。像素差异是非常大的。就算是同一张照片,旋转90度后,其像素差异也非常大!

所以图片里的内容相似甚至相同,但是在像素层面,其变化会非常大。这对于特征提取是一大挑战。

需要计算的数据量巨大

手机上随便拍一张照片就是1000*2000像素的。每个像素 RGB 3个参数,一共有1000 X 2000 X 3=6,000,000。随便一张照片就要处理 600万 个参数,再算算现在越来越流行的 4K 视频。就知道这个计算量级有多恐怖了。

CNN 解决了上面的两大难题

CNN 属于深度学习的范畴,它很好的解决了上面所说的2大难点:

- CNN 可以有效的提取图像里的特征

- CNN 可以将海量的数据(不影响特征提取的前提下)进行有效的降维,大大减少了对算力的要求

CNN 的具体原理这里不做具体说明,感兴趣的可以看看《一文看懂卷积神经网络-CNN(基本原理+独特价值+实际应用)》

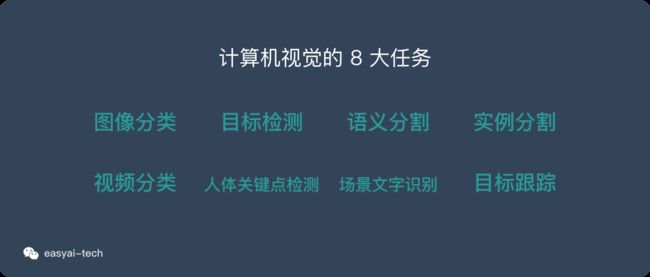

计算机视觉的 8 大任务

图像分类

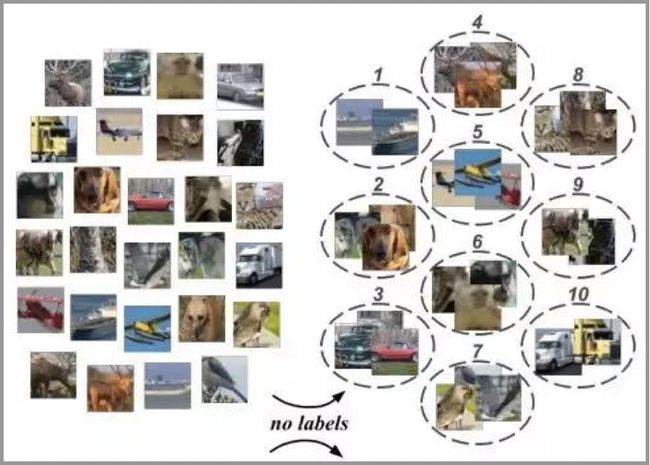

图像分类是计算机视觉中重要的基础问题。后面提到的其他任务也是以它为基础的。

举几个典型的例子:人脸识别、图片鉴黄、相册根据人物自动分类等。

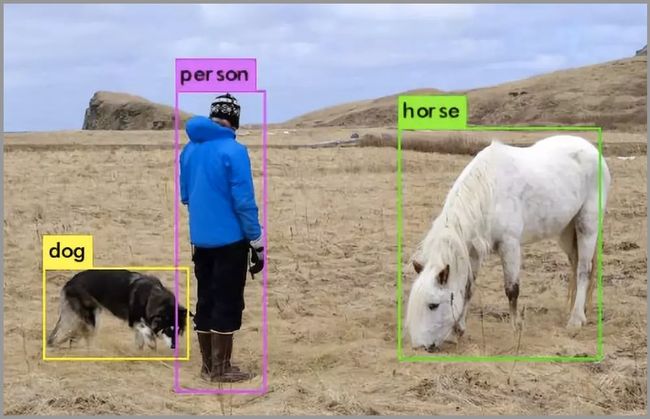

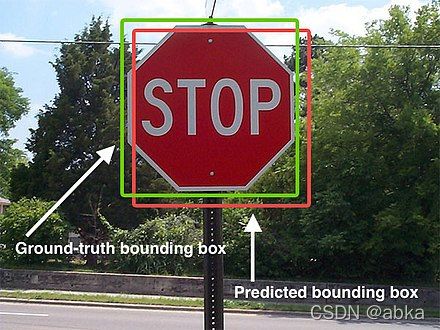

目标检测

目标检测任务的目标是给定一张图像或是一个视频帧,让计算机找出其中所有目标的位置,并给出每个目标的具体类别。

语义分割

它将整个图像分成像素组,然后对像素组进行标记和分类。语义分割试图在语义上理解图像中每个像素是什么(人、车、狗、树…)。

如下图,除了识别人、道路、汽车、树木等之外,我们还必须确定每个物体的边界。

实例分割

除了语义分割之外,实例分割将不同类型的实例进行分类,比如用 5 种不同颜色来标记 5 辆汽车。我们会看到多个重叠物体和不同背景的复杂景象,我们不仅需要将这些不同的对象进行分类,而且还要确定对象的边界、差异和彼此之间的关系!

视频分类

与图像分类不同的是,分类的对象不再是静止的图像,而是一个由多帧图像构成的、包含语音数据、包含运动信息等的视频对象,因此理解视频需要获得更多的上下文信息,不仅要理解每帧图像是什么、包含什么,还需要结合不同帧,知道上下文的关联信息。

人体关键点检测

体关键点检测,通过人体关键节点的组合和追踪来识别人的运动和行为,对于描述人体姿态,预测人体行为至关重要。

在 Xbox 中就有利用到这个技术。

场景文字识别

很多照片中都有一些文字信息,这对理解图像有重要的作用。

场景文字识别是在图像背景复杂、分辨率低下、字体多样、分布随意等情况下,将图像信息转化为文字序列的过程。

停车场、收费站的车牌识别就是典型的应用场景。

目标跟踪

目标跟踪,是指在特定场景跟踪某一个或多个特定感兴趣对象的过程。传统的应用就是视频和真实世界的交互,在检测到初始对象之后进行观察。

无人驾驶里就会用到这个技术。

CV 在日常生活中的应用场景

计算机视觉的应用场景非常广泛,下面列举几个生活中常见的应用场景。

- 门禁、支付宝上的人脸识别

- 停车场、收费站的车牌识别

- 上传图片或视频到网站时的风险识别

- 抖音上的各种道具(需要先识别出人脸的位置)

这里需要说明一下,条形码和二维码的扫描不算是计算机视觉。

这种对图像的识别,还是基于固定规则的,并不需要处理复杂的图像,完全用不到 AI 技术。

百度百科--计算机视觉

计算机视觉是一门研究如何使机器“看”的科学,更进一步的说,就是是指用摄影机和电脑代替人眼对目标进行识别、跟踪和测量等机器视觉,并进一步做图形处理,使电脑处理成为更适合人眼观察或传送给仪器检测的图像。作为一个科学学科,计算机视觉研究相关的理论和技术,试图建立能够从图像或者多维数据中获取‘信息’的人工智能系统。这里所 指的信息指Shannon定义的,可以用来帮助做一个“决定”的信息。因为感知可以看作是从感官信号中提 取信息,所以计算机视觉也可以看作是研究如何使人工系统从图像或多维数据中“感知”的科学。

中文名

计算机视觉

外文名

Computer Vision

目录

- 1 定义

- 2 解析

- 3 原理

- 4 相关

- ▪ 图像处理

- ▪ 模式识别

- ▪ 图像理解

- 5 现状

- 6 应用

- 7 异同

- 8 问题

- ▪ 识别

- ▪ 运动

- ▪ 场景重建

- ▪ 图像恢复

- 9 系统

- ▪ 图像获取

- ▪ 预处理

- ▪ 特征提取

- ▪ 检测分割

- ▪ 高级处理

- 10 要件

- 11 会议

- ▪ 顶级

- ▪ 较好

- 12 期刊

- ▪ 顶级

- ▪ 较好

定义

播报

计算机视觉是使用计算机及相关设备对生物视觉的一种模拟。它的主要任务就是通过对采集的图片或视频进行处理以获得相应场景的三维信息,就像人类和许多其他类生物每天所做的那样。

计算机视觉是一门关于如何运用照相机和计算机来获取我们所需的,被拍摄对象的数据与信息的学问。形象地说,就是给计算机安装上眼睛(照相机)和大脑(算法),让计算机能够感知环境。我们中国人的成语"眼见为实"和西方人常说的"One picture is worth ten thousand words"表达了视觉对人类的重要性。不难想象,具有视觉的机器的应用前景能有多么地宽广。

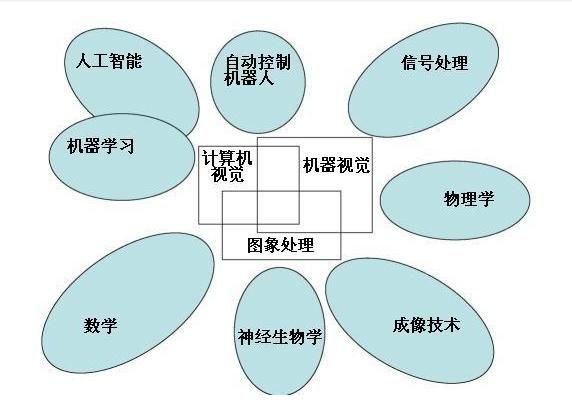

计算机视觉既是工程领域,也是科学领域中的一个富有挑战性重要研究领域。计算机视觉是一门综合性的学科,它已经吸引了来自各个学科的研究者参加到对它的研究之中。其中包括计算机科学和工程、信号处理、物理学、应用数学和统计学,神经生理学和认知科学等。

解析

播报

计算机视觉与其他领域的关系

视觉是各个应用领域,如制造业、检验、文档分析、医疗诊断,和军事等领域中各种智能/自主系统中不可分割的一部分。由于它的重要性,一些先进国家,例如美国把对计算机视觉的研究列为对经济和科学有广泛影响的科学和工程中的重大基本问题,即所谓的重大挑战(grand challenge)。计算机视觉的挑战是要为计算机和机器人开发具有与人类水平相当的视觉能力。机器视觉需要图象信号,纹理和颜色建模,几何处理和推理,以及物体建模。一个有能力的视觉系统应该把所有这些处理都紧密地集成在一起。作为一门学科,计算机视觉开始于60年代初,但在计算机视觉的基本研究中的许多重要进展是在80年代取得的。计算机视觉与人类视觉密切相关,对人类视觉有一个正确的认识将对计算机视觉的研究非常有益。为此我们将先介绍人类视觉。

原理

播报

计算机视觉就是用各种成像系统代替视觉器官作为输入敏感手段,由计算机来代替大脑完成处理和解释。计算机视觉的最终研究目标就是使计算机能象人那样通过视觉观察和理解世界,具有自主适应环境的能力。要经过长期的努力才能达到的目标。因此,在实现最终目标以前,人们努力的中期目标是建立一种视觉系统,这个系统能依据视觉敏感和反馈的某种程度的智能完成一定的任务。例如,计算机视觉的一个重要应用领域就是自主车辆的视觉导航,还没有条件实现像人那样能识别和理解任何环境,完成自主导航的系统。因此,人们努力的研究目标是实现在高速公路上具有道路跟踪能力,可避免与前方车辆碰撞的视觉辅助驾驶系统。这里要指出的一点是在计算机视觉系统中计算机起代替人脑的作用,但并不意味着计算机必须按人类视觉的方法完成视觉信息的处理。计算机视觉可以而且应该根据计算机系统的特点来进行视觉信息的处理。但是,人类视觉系统是迄今为止,人们所知道的功能最强大和完善的视觉系统。如在以下的章节中会看到的那样,对人类视觉处理机制的研究将给计算机视觉的研究提供启发和指导。因此,用计算机信息处理的方法研究人类视觉的机理,建立人类视觉的计算理论。这方面的研究被称为计算视觉(Computational Vision)。计算视觉可被认为是计算机视觉中的一个研究领域。

相关

播报

有不少学科的研究目标与计算机视觉相近或与此有关。这些学科中包括图像处理、模式识别或图像识别、景物分析、图象理解等。计算机视觉包括图像处理和模式识别,除此之外,它还包括空间形状的描述,几何建模以及认识过程。 [1] 实现图像理解是计算机视觉的终极目标。 [2]

图像处理

图像处理技术把输入图像转换成具有所希望特性的另一幅图像。例如,可通过处理使输出图象有较高的信-噪比,或通过增强处理突出图象的细节,以便于操作员的检验。在计算机视觉研究中经常利用图象处理技术进行预处理和特征抽取。

模式识别

模式识别技术根据从图象抽取的统计特性或结构信息,把图像分成予定的类别。例如,文字识别或指纹识别。在计算机视觉中模式识别技术经常用于对图象中的某些部分,例如分割区域的识别和分类。

图像理解

给定一幅图像,图象理解程序不仅描述图象本身,而且描述和解释图象所代表的景物,以便对图像代表的内容作出决定。在人工智能视觉研究的初期经常使用景物分析这个术语,以强调二维图象与三维景物之间的区别。图象理解除了需要复杂的图象处理以外还需要具有关于景物成像的物理规律的知识以及与景物内容有关的知识。

在建立计算机视觉系统时需要用到上述学科中的有关技术,但计算机视觉研究的内容要比这些学科更为广泛。计算机视觉的研究与人类视觉的研究密切相关。为实现建立与人的视觉系统相类似的通用计算机视觉系统的目标需要建立人类视觉的计算机理论。

现状

播报

计算机视觉领域的突出特点是其多样性与不完善性。这一领域的先驱可追溯到更早的时候,但是直到20世纪70年代后期,当计算机的性能提高到足以处理诸如图像这样的大规模数据时,计算机视觉才得到了正式的关注和发展。然而这些发展往往起源于其他不同领域的需要,因而何谓“计算机视觉问题”始终没有得到正式定义,很自然地,“计算机视觉问题”应当被如何解决也没有成型的公式。

尽管如此,人们已开始掌握部分解决具体计算机视觉任务的方法,可惜这些方法通常都仅适用于一群狭隘的目标(如:脸孔、指纹、文字等),因而无法被广泛地应用于不同场合。

对这些方法的应用通常作为某些解决复杂问题的大规模系统的一个组成部分(例如医学图像的处理,工业制造中的质量控制与测量)。在计算机视觉的大多数实际应用当中,计算机被预设为解决特定的任务,然而基于机器学习的方法正日渐普及,一旦机器学习的研究进一步发展,未来“泛用型”的电脑视觉应用或许可以成真。

人工智能所研究的一个主要问题是:如何让系统具备“计划”和“决策能力”?从而使之完成特定的技术动作(例如:移动一个机器人通过某种特定环境)。这一问题便与计算机视觉问题息息相关。在这里,计算机视觉系统作为一个感知器,为决策提供信息。另外一些研究方向包括模式识别和机器学习(这也隶属于人工智能领域,但与计算机视觉有着重要联系),也由此,计算机视觉时常被看作人工智能与计算机科学的一个分支。

物理是与计算机视觉有着重要联系的另一领域。

计算机视觉关注的目标在于充分理解电磁波——主要是可见光与红外线部分——遇到物体表面被反射所形成的图像,而这一过程便是基于光学物理和固态物理,一些尖端的图像感知系统甚至会应用到量子力学理论,来解析影像所表示的真实世界。同时,物理学中的很多测量难题也可以通过计算机视觉得到解决,例如流体运动。也由此,计算机视觉同样可以被看作是物理学的拓展。

另一个具有重要意义的领域是神经生物学,尤其是其中生物视觉系统的部分。

在整个20世纪中,人类对各种动物的眼睛、神经元、以及与视觉刺激相关的脑部组织都进行了广泛研究,这些研究得出了一些有关“天然的”视觉系统如何运作的描述(尽管仍略嫌粗略),这也形成了计算机视觉中的一个子领域——人们试图建立人工系统,使之在不同的复杂程度上模拟生物的视觉运作。同时计算机视觉领域中,一些基于机器学习的方法也有参考部分生物机制。

计算机视觉的另一个相关领域是信号处理。很多有关单元变量信号的处理方法,尤其是对时变信号的处理,都可以很自然的被扩展为计算机视觉中对二元变量信号或者多元变量信号的处理方法。但由于图像数据的特有属性,很多计算机视觉中发展起来的方法,在单元信号的处理方法中却找不到对应版本。这类方法的一个主要特征,便是他们的非线性以及图像信息的多维性,以上二点作为计算机视觉的一部分,在信号处理学中形成了一个特殊的研究方向。

除了上面提到的领域,很多研究课题同样可被当作纯粹的数学问题。例如,计算机视觉中的很多问题,其理论基础便是统计学,最优化理论以及几何学。

如何使既有方法通过各种软硬件实现,或说如何对这些方法加以修改,而使之获得合理的执行速度而又不损失足够精度,是现今电脑视觉领域的主要课题。

应用

播报

人类正在进入信息时代,计算机将越来越广泛地进入几乎所有领域。一方面是更多未经计算机专业训练的人也需要应用计算机,而另一方面是计算机的功能越来越强,使用方法越来越复杂。这就使人在进行交谈和通讯时的灵活性与在使用计算机时所要求的严格和死板之间产生了尖锐的矛盾。人可通过视觉和听觉,语言与外界交换信息,并且可用不同的方式表示相同的含义,而计算机却要求严格按照各种程序语言来编写程序,只有这样计算机才能运行。为使更多的人能使用复杂的计算机,必须改变过去的那种让人来适应计算机,来死记硬背计算机的使用规则的情况。而是反过来让计算机来适应人的习惯和要求,以人所习惯的方式与人进行信息交换,也就是让计算机具有视觉、听觉和说话等能力。这时计算机必须具有逻辑推理和决策的能力。具有上述能力的计算机就是智能计算机。

智能计算机不但使计算机更便于为人们所使用,同时如果用这样的计算机来控制各种自动化装置特别是智能机器人,就可以使这些自动化系统和智能机器人具有适应环境,和自主作出决策的能力。这就可以在各种场合取代人的繁重工作,或代替人到各种危险和恶劣环境中完成任务。

应用范围从任务,比如工业机器视觉系统,比方说,检查瓶子上的生产线加速通过,研究为人工智能和计算机或机器人,可以理解他们周围的世界。计算机视觉和机器视觉领域有显著的重叠。计算机视觉涉及的被用于许多领域自动化图像分析的核心技术。机器视觉通常指的是结合自动图像分析与其他方法和技术,以提供自动检测和机器人指导在工业应用中的一个过程。在许多计算机视觉应用中,计算机被预编程,以解决特定的任务,但基于学习的方法现在正变得越来越普遍。计算机视觉应用的实例包括用于系统:

(1)控制过程,比如,一个工业机器人 ;

(2)导航,例如,通过自主汽车或移动机器人;

(3)检测的事件,如,对视频监控和人数统计 ;

(4)组织信息,例如,对于图像和图像序列的索引数据库;

(5)造型对象或环境,如,医学图像分析系统或地形模型;

(6)相互作用,例如,当输入到一个装置,用于计算机人的交互;

(7)自动检测,例如,在制造业的应用程序。

其中最突出的应用领域是医疗计算机视觉和医学图像处理。这个区域的特征的信息从图像数据中提取用于使患者的医疗诊断的目的。通常,图像数据是在形式显微镜图像,X射线图像,血管造影图像,超声图像和断层图像。的信息,可以从这样的图像数据中提取的一个例子是检测的肿瘤,动脉粥样硬化或其他恶性变化。它也可以是器官的尺寸,血流量等。这种应用领域还支持通过提供新的信息,医学研究的测量例如,对脑的结构,或约医学治疗的质量。计算机视觉在医疗领域的应用还包括增强是由人类的解释,例如超声图像或X射线图像,以降低噪声的影响的图像。

第二个应用程序区域中的计算机视觉是在工业,有时也被称为机器视觉,在那里信息被提取为支撑的制造工序的目的。一个例子是质量控制,其中的信息或最终产品被以找到缺陷自动检测。另一个例子是,被拾取的位置和细节取向测量由机器人臂。机器视觉也被大量用于农业的过程,从散装材料,这个过程被称为去除不想要的东西,食物的光学分拣。

军事上的应用很可能是计算机视觉最大的地区之一。最明显的例子是探测敌方士兵或车辆和导弹制导。更先进的系统为导弹制导发送导弹的区域,而不是一个特定的目标,并且当导弹到达基于本地获取的图像数据的区域的目标做出选择。现代军事概念,如“战场感知”,意味着各种传感器,包括图像传感器,提供了丰富的有关作战的场景,可用于支持战略决策的信息。在这种情况下,数据的自动处理,用于减少复杂性和融合来自多个传感器的信息,以提高可靠性。

一个较新的应用领域是自主车,其中包括潜水,陆上车辆(带轮子,轿车或卡车的小机器人),高空作业车和无人机(UAV)。自主化水平,从完全独立的(无人)的车辆范围为汽车,其中基于计算机视觉的系统支持驱动程序或在不同情况下的试验。完全自主的汽车通常使用计算机视觉进行导航时,即知道它在哪里,或用于生产的环境(地图SLAM)和用于检测障碍物。它也可以被用于检测特定任务的特定事件,例如,一个UAV寻找森林火灾。支承系统的例子是障碍物警报系统中的汽车,以及用于飞行器的自主着陆系统。数家汽车制造商已经证明了系统的汽车自动驾驶,但该技术还没有达到一定的水平,就可以投放市场。有军事自主车型,从先进的导弹,无人机的侦察任务或导弹的制导充足的例子。太空探索已经正在使用计算机视觉,自主车比如,美国宇航局的火星探测漫游者和欧洲航天局的ExoMars火星漫游者。

其他应用领域包括:

(1)支持视觉特效制作的电影和广播,例如,摄像头跟踪(运动匹配)。

(2)监视。

异同

播报

计算机视觉,图象处理,图像分析,机器人视觉和机器视觉是彼此紧密关联的学科。如果你翻开带有上面这些名字的教材,你会发现在技术和应用领域上他们都有着相当大部分的重叠。这表明这些学科的基础理论大致是相同的,甚至让人怀疑他们是同一学科被冠以不同的名称。

然而,各研究机构,学术期刊,会议及公司往往把自己特别的归为其中某一个领域,于是各种各样的用来区分这些学科的特征便被提了出来。下面将给出一种区分方法,尽管并不能说这一区分方法完全准确。

计算机视觉的研究对象主要是映射到单幅或多幅图像上的三维场景,例如三维场景的重建。计算机视觉的研究很大程度上针对图像的内容。

图象处理与图像分析的研究对象主要是二维图像,实现图像的转化,尤其针对像素级的操作,例如提高图像对比度,边缘提取,去噪声和几何变换如图像旋转。这一特征表明无论是图像处理还是图像分析其研究内容都和图像的具体内容无关。

机器视觉主要是指工业领域的视觉研究,例如自主机器人的视觉,用于检测和测量的视觉。这表明在这一领域通过软件硬件,图像感知与控制理论往往与图像处理得到紧密结合来实现高效的机器人控制或各种实时操作。

模式识别使用各种方法从信号中提取信息,主要运用统计学的理论。此领域的一个主要方向便是从图像数据中提取信息。

还有一个领域被称为成像技术。这一领域最初的研究内容主要是制作图像,但有时也涉及到图像分析和处理。例如,医学成像就包含大量的医学领域的图像分析。

对于所有这些领域,一个可能的过程是你在计算机视觉的实验室工作,工作中从事着图象处理,最终解决了机器视觉领域的问题,然后把自己的成果发表在了模式识别的会议上。

问题

播报

几乎在每个计算机视觉技术的具体应用都要解决一系列相同的问题。这些经典的问题包括:

识别

一个计算机视觉,图像处理和机器视觉所共有的经典问题便是判定一组图像数据中是否包含某个特定的物体,图像特征或运动状态。这一问题通常可以通过机器自动解决,但是到目前为止,还没有某个单一的方法能够广泛的对各种情况进行判定:在任意环境中识别任意物体。现有技术能够也只能够很好地解决特定目标的识别,比如简单几何图形识别,人脸识别,印刷或手写文件识别或者车辆识别。而且这些识别需要在特定的环境中,具有指定的光照,背景和目标姿态要求。

广义的识别在不同的场合又演化成了几个略有差异的概念:

识别(狭义的):对一个或多个经过预先定义或学习的物体或物类进行辨识,通常在辨识过程中还要提供他们的二维位置或三维姿态。

鉴别:识别辨认单一物体本身。例如:某一人脸的识别,某一指纹的识别。

监测:从图像中发现特定的情况内容。例如:医学中对细胞或组织不正常技能的发现,交通监视仪器对过往车辆的发现。监测往往是通过简单的图象处理发现图像中的特殊区域,为后继更复杂的操作提供起点。

识别的几个具体应用方向:

基于内容的图像提取:在巨大的图像集合中寻找包含指定内容的所有图片。被指定的内容可以是多种形式,比如一个红色的大致是圆形的图案,或者一辆自行车。在这里对后一种内容的寻找显然要比前一种更复杂,因为前一种描述的是一个低级直观的视觉特征,而后者则涉及一个抽象概念(也可以说是高级的视觉特征),即‘自行车’,显然的一点就是自行车的外观并不是固定的。

姿态评估:对某一物体相对于摄像机的位置或者方向的评估。例如:对机器臂姿态和位置的评估。

光学字符识别对图像中的印刷或手写文字进行识别鉴别,通常的输出是将之转化成易于编辑的文档形式。

运动

基于序列图像的对物体运动的监测包含多种类型,诸如:

自体运动:监测摄像机的三维刚性运动。

图像跟踪:跟踪运动的物体。

场景重建

给定一个场景的二或多幅图像或者一段录像,场景重建寻求为该场景建立一个计算机模型/三维模型。最简单的情况便是生成一组三维空间中的点。更复杂的情况下会建立起完整的三维表面模型。

图像恢复

图像恢复的目标在于移除图像中的噪声,例如仪器噪声,模糊等。

系统

播报

计算机视觉系统的结构形式很大程度上依赖于其具体应用方向。有些是独立工作的,用于解决具体的测量或检测问题;也有些作为某个大型复杂系统的组成部分出现,比如和机械控制系统,数据库系统,人机接口设备协同工作。计算机视觉系统的具体实现方法同时也由其功能决定——是预先固定的抑或是在运行过程中自动学习调整。尽管如此,有些功能却几乎是每个计算机系统都需要具备的:

图像获取

一幅数字图像是由一个或多个图像感知器产生,这里的感知器可以是各种光敏摄像机,包括遥感设备,X射线断层摄影仪,雷达,超声波接收器等。取决于不同的感知器,产生的图片可以是普通的二维图像,三维图组或者一个图像序列。图片的像素值往往对应于光在一个或多个光谱段上的强度(灰度图或彩色图),但也可以是相关的各种物理数据,如声波,电磁波或核磁共振的深度,吸收度或反射度。

预处理

在对图像实施具体的计算机视觉方法来提取某种特定的信息前,一种或一些预处理往往被采用来使图像满足后继方法的要求。例如:

二次取样保证图像坐标的正确;

平滑去噪来滤除感知器引入的设备噪声;

提高对比度来保证实现相关信息可以被检测到;

调整尺度空间使图像结构适合局部应用。

特征提取

从图像中提取各种复杂度的特征。例如:

线,边缘提取;

局部化的特征点检测如边角检测,斑点检测;

更复杂的特征可能与图像中的纹理形状或运动有关。

检测分割

在图像处理过程中,有时会需要对图像进行分割来提取有价值的用于后继处理的部分,例如

筛选特征点;

分割一或多幅图片中含有特定目标的部分。

高级处理

到了这一步,数据往往具有很小的数量,例如图像中经先前处理被认为含有目标物体的部分。这时的处理包括:

验证得到的数据是否符合前提要求;

估测特定系数,比如目标的姿态,体积;

对目标进行分类。

高级处理有理解图像内容的含义,是计算机视觉中的高阶处理,主要是在图像分割的基础上再经行对分割出的图像块进行理解,例如进行识别等操作。

要件

播报

光源布局影响大需审慎考量。

正确的选择镜组,考量倍率、空间、尺寸、失真… 。

选择合适的摄影机(CCD),考量功能、规格、稳定性、耐用...。

视觉软件开发需靠经验累积,多尝试、思考问题的解决途径。

以创造精度的不断提升,缩短处理时间为最终目标。

end。

会议

播报

顶级

ICCV:International Conference on Computer Vision,国际计算机视觉大会

CVPR:International Conference on Computer Vision and Pattern Recognition,国际计算机视觉与模式识别大会

ECCV:European Conference on Computer Vision,欧洲计算机视觉大会

较好

ICIP:International Conference on Image Processing,国际图像处理大会

BMVC:British Machine Vision Conference,英国机器视觉大会

ICPR:International Conference on Pattern Recognition,国际模式识别大会

ACCV:Asian Conference on Computer Vision,亚洲计算机视觉大会

期刊

播报

顶级

PAMI:IEEE Transactions on Pattern Analysis and Machine Intelligence,IEEE 模式分析与机器智能杂志

IJCV:International Journal on Computer Vision,国际计算机视觉杂志

较好

TIP:IEEE Transactions on Image Processing,IEEE图像处理杂志

CVIU:Computer Vision and Image Understanding,计算机视觉与图像理解

PR:Pattern Recognition,模式识别

PRL:Pattern Recognition Letters,模式识别快报

计算机视觉

计算机视觉(Computer vision)

(zh.wikipedia.org)

计算机视觉(Computer vision)是一门研究如何使机器“看”的科学,更进一步的说,就是指用摄影机和计算机代替人眼对目标进行识别、跟踪和测量等机器视觉,并进一步做图像处理,用计算机处理成为更适合人眼观察或传送给仪器检测的图像[1]。

作为一门科学学科,计算机视觉研究相关的理论和技术,试图创建能够从图像或者多维数据中获取“信息”的人工智能系统。这里所指的信息指香农定义的,可以用来帮助做一个“决定”的信息。因为感知可以看作是从感官信号中提取信息,所以计算机视觉也可以看作是研究如何使人工系统从图像或多维数据中“感知”的科学。

作为一个工程学科,计算机视觉寻求基于相关理论与模型来创建计算机视觉系统。这类系统的组成部分包括:

- 过程控制(例如工业机器人和无人驾驶汽车)

- 事件监测(例如图像监测)

- 信息组织(例如图像数据库和图像序列的索引创建)

- 物体与环境建模(例如工业检查,医学图像分析和拓扑建模)

- 交感互动(例如人机互动的输入设备)

计算机视觉同样可以被看作是生物视觉的一个补充。在生物视觉领域中,人类和各种动物的视觉都得到了研究,从而创建了这些视觉系统感知信息过程中所使用的物理模型。另一方面,在计算机视觉中,靠软件和硬件实现的人工智能系统得到了研究与描述。生物视觉与计算机视觉进行的学科间交流为彼此都带来了巨大价值。

计算机视觉包含如下一些分支:画面重建,事件监测,目标跟踪,目标识别,机器学习,索引创建,图像恢复等。

NASA火星探测车的双摄影机系统

目录

- 1计算机视觉的发展现状

- 2相邻领域的异同

- 3计算机视觉的经典问题

- 3.1识别

- 3.2运动

- 3.3场景重建

- 3.4图像恢复

- 4计算机视觉系统

- 5影响视觉系统的要件

- 6参考文献

- 7外部链接

- 8参见

计算机视觉的发展现状

计算机视觉与其他领域的关系

计算机视觉与其他领域的关系

计算机视觉领域的突出特点是其多样性与不完善性。

这一领域的先驱可追溯到更早的时候,但是直到20世纪70年代后期,当计算机的性能提高到足以处理诸如图像这样的大规模数据时,计算机视觉才得到了正式的关注和发展。然而这些发展往往起源于其他不同领域的需要,因而何谓“计算机视觉问题”始终没有得到正式定义,很自然地,“计算机视觉问题”应当被如何解决也没有成型的公式。

尽管如此,人们已开始掌握部分解决具体计算机视觉任务的方法,可惜这些方法通常都仅适用于一群狭隘的目标(如:脸孔、指纹、文字等),因而无法被广泛地应用于不同场合。

对这些方法的应用通常作为某些解决复杂问题的大规模系统的一个组成部分(例如医学图像的处理,工业制造中的质量控制与测量)。在计算机视觉的大多数实际应用当中,计算机被预设为解决特定的任务,然而基于机器学习的方法正日渐普及,一旦机器学习的研究进一步发展,未来“泛用型”的电脑视觉应用或许可以成真。

人工智能所研究的一个主要问题是:如何让系统具备“计划”和“决策能力”?从而使之完成特定的技术动作(例如:移动一个机器人通过某种特定环境)。这一问题便与计算机视觉问题息息相关。在这里,计算机视觉系统作为一个感知器,为决策提供信息。另外一些研究方向包括模式识别和机器学习(这也隶属于人工智能领域,但与计算机视觉有着重要联系),也由此,计算机视觉时常被看作人工智能与计算机科学的一个分支。

物理是与计算机视觉有着重要联系的另一领域。

计算机视觉关注的目标在于充分理解电磁波——主要是可见光与红外线部分——遇到物体表面被反射所形成的图像,而这一过程便是基于光学物理和固态物理,一些尖端的图像传感器甚至会应用到量子力学理论,来解析影像所表示的真实世界。同时,物理学中的很多测量难题也可以通过计算机视觉得到解决,例如流体运动。也由此,计算机视觉同样可以被看作是物理学的拓展。

另一个具有重要意义的领域是神经生物学,尤其是其中生物视觉系统的部分。

在整个20世纪中,人类对各种动物的眼睛、神经元、以及与视觉刺激相关的脑部组织都进行了广泛研究,这些研究得出了一些有关“天然的”视觉系统如何运作的描述(尽管仍略嫌粗略),这也形成了计算机视觉中的一个子领域——人们试图创建人工系统,使之在不同的复杂程度上模拟生物的视觉运作。同时计算机视觉领域中,一些基于机器学习的方法也有参考部分生物机制。

计算机视觉的另一个相关领域是信号处理。很多有关单元变量信号的处理方法,尤其对是时变信号的处理,都可以很自然的被扩展为计算机视觉中对二元变量信号或者多元变量信号的处理方法。但由于图像数据的特有属性,很多计算机视觉中发展起来的方法,在单元信号的处理方法中却找不到对应版本。这类方法的一个主要特征,便是他们的非线性以及图像信息的多维性,以上二点作为计算机视觉的一部分,在信号处理学中形成了一个特殊的研究方向。

除了上面提到的领域,很多研究课题同样可被当作纯粹的数学问题。例如,计算机视觉中的很多问题,其理论基础便是统计学,最优化理论以及几何学。

如何使既有方法通过各种软硬件实现,或说如何对这些方法加以修改,而使之获得合理的执行速度而又不损失足够精度,是现今电脑视觉领域的主要课题。

相邻领域的异同

计算机视觉,图像处理,图像分析,机器人视觉和机器视觉是彼此紧密关联的学科。如果你翻开带有上面这些名字的教材,你会发现在技术和应用领域上他们都有着相当大部分的重叠。这表明这些学科的基础理论大致是相同的,甚至让人怀疑他们是同一学科被冠以不同的名称。

然而,各研究机构,学术期刊,会议及公司往往把自己特别的归为其中某一个领域,于是各种各样的用来区分这些学科的特征便被提了出来。下面将给出一种区分方法,尽管并不能说这一区分方法完全准确。

计算机视觉的研究对象主要是映射到单幅或多幅图像上的三维场景,例如三维场景的重建。计算机视觉的研究很大程度上针对图像的内容。

图像处理与图像分析的研究对象主要是二维图像,实现图像的转化,尤其针对像素级的操作,例如提高图像对比度,边缘提取,去噪声和几何变换如图像旋转。这一特征表明无论是图像处理还是图像分析其研究内容都和图像的具体内容无关。

机器视觉主要是指工业领域的视觉研究,例如自主机器人的视觉,用于检测和测量的视觉。这表明在这一领域通过软件硬件,图像感知与控制理论往往与图像处理得到紧密结合来实现高效的机器人控制或各种实时操作。

模式识别使用各种方法从信号中提取信息,主要运用统计学的理论。此领域的一个主要方向便是从图像数据中提取信息。

还有一个领域被称为成像技术。这一领域最初的研究内容主要是制作图像,但有时也涉及到图像分析和处理。例如,医学成像就包含大量的医学领域的图像分析。

对于所有这些领域,一个可能的过程是你在计算机视觉的实验室工作,工作中从事着图象处理,最终解决了机器视觉领域的问题,然后把自己的成果发表在了模式识别的会议上。

计算机视觉的经典问题

几乎在每个计算机视觉技术的具体应用都要解决一系列相同的问题。这些经典的问题包括:

识别

一个计算机视觉,图像处理和机器视觉所共有的经典问题便是判定一组图像数据中是否包含某个特定的物体,图像特征或运动状态。这一问题通常可以通过机器自动解决,但是到目前为止,还没有某个单一的方法能够广泛的对各种情况进行判定:在任意环境中识别任意物体。现有技术能够也只能够很好地解决特定目标的识别,比如简单几何图形识别,人脸识别,印刷或手写文件识别或者车辆识别。而且这些识别需要在特定的环境中,具有指定的光照,背景和目标姿态要求。

广义的识别在不同的场合又演化成了几个略有差异的概念:

- 识别(狭义的):对一个或多个经过预先定义或学习的物体或物类进行辨识,通常在辨识过程中还要提供他们的二维位置或三维姿态。

- 鉴别:识别辨认单一物体本身。例如:某一人脸的识别,某一指纹的识别。

- 监测:从图像中发现特定的情况内容。例如:医学中对细胞或组织不正常技能的发现,交通监视仪器对过往车辆的发现。监测往往是通过简单的图象处理发现图像中的特殊区域,为后继更复杂的操作提供起点。

识别的几个具体应用方向:

- 基于内容的图像提取:在巨大的图像集合中寻找包含指定内容的所有图片。被指定的内容可以是多种形式,比如一个红色的大致是圆形的图案,或者一辆自行车。在这里对后一种内容的寻找显然要比前一种更复杂,因为前一种描述的是一个低级直观的视觉特征,而后者则涉及一个抽象概念(也可以说是高级的视觉特征),即‘自行车’,显然的一点就是自行车的外观并不是固定的。

- 姿态评估:对某一物体相对于摄像机的位置或者方向的评估。例如:对机器臂姿态和位置的评估。

- 光学字符识别对图像中的印刷或手写文字进行识别鉴别,通常的输出是将之转化成易于编辑的文档形式。

运动

基于序列图像的对物体运动的监测包含多种类型,诸如:

- 自体运动:监测摄像机的三维刚性运动。

- 图像跟踪:跟踪运动的物体。

场景重建

给定一个场景的二或多幅图像或者一段录像,场景重建寻求为该场景创建一个三维模型。最简单的情况便是生成一组三维空间中的点。更复杂的情况下会创建起完整的三维表面模型。

图像恢复

图像恢复的目标在于移除图像中的噪声,例如仪器噪声、动态模糊等。

计算机视觉系统

计算机视觉系统的结构形式很大程度上依赖于其具体应用方向。有些是独立工作的,用于解决具体的测量或检测问题;也有些作为某个大型复杂系统的组成部分出现,比如和机械控制系统,数据库系统,人机接口设备协同工作。计算机视觉系统的具体实现方法同时也由其功能决定——是预先固定的抑或是在运行过程中自动学习调整。尽管如此,有些功能却几乎是每个计算机系统都需要具备的:

- 图像获取:一幅数字图像是由一个或多个图像传感器产生,这里的传感器可以是各种光敏摄像机,包括遥感设备,X射线断层摄影仪,雷达,超声波接收器等。取决于不同的传感器,产生的图片可以是普通的二维图像,三维图组或者一个图像序列。图片的像素值往往对应于光在一个或多个光谱段上的强度(灰度图或彩色图),但也可以是相关的各种物理数据,如声波,电磁波或核磁共振的深度,吸收度或反射度。

- 预处理:在对图像实施具体的计算机视觉方法来提取某种特定的信息前,一种或一些预处理往往被采用来使图像满足后继方法的要求。例如:

- 二次取样保证图像坐标的正确

- 平滑去噪来滤除传感器引入的设备噪声

- 提高对比度来保证实现相关信息可以被检测到

- 调整尺度空间使图像结构适合局部应用

- 特征提取:从图像中提取各种复杂度的特征。例如:

- 线、边缘提取和脊侦测

- 局部化的特征点检测如边角检测、斑点检测

更复杂的特征可能与图像中的纹理形状或运动有关。

- 检测/分割:在图像处理过程中,有时会需要对图像进行分割来提取有价值的用于后继处理的部分,例如:

- 筛选特征点

- 分割一或多幅图片中含有特定目标的部分

- 高级处理:到了这一步,数据往往具有很小的数量,例如图像中经先前处理被认为含有目标物体的部分。这时的处理包括:

- 验证得到的数据是否符合前提要求

- 估测特定系数,比如目标的姿态、体积

- 对目标进行分类

影响视觉系统的要件

- 光源布局影响大需审慎考量。

- 正确的选择镜组,考量倍率、空间、尺寸、有损。

- 选择合适的摄影机(CCD),考量功能、规格、稳定性、耐用。

- 视觉软件开发需靠经验累积,多尝试、思考问题的解决途径。

- 以创造精度的不断提升,缩短处理时间为最终目标。

参考文献

- ^ 黄亚勤。基于视线跟踪技术的眼控鼠标研究与实现[D].西华大学, 2011.

外部链接

- Machine Perception of Three-Dimensional Solids - the paper mentioned by Joseph Mundy in the video

- CVonline: The Evolving, Distributed, Non-Proprietary, On-Line Compendium of Computer Vision (页面存档备份,存于互联网档案馆)

- Introduction to computer vision(464KB pdf file)

- CMU's Computer Vision Homepage(页面存档备份,存于互联网档案馆)

- Fudan University's Computer Vision Lab(页面存档备份,存于互联网档案馆)

- Keith Price's Annotated Computer Vision Bibliography(页面存档备份,存于互联网档案馆) and the Official Mirror Site Keith Price's Annotated Computer Vision Bibliography(页面存档备份,存于互联网档案馆)

- HIPR2 image processing teaching package(页面存档备份,存于互联网档案馆)

- USC Iris computer vision conference list(页面存档备份,存于互联网档案馆)

- How to come up with new research ideas in computer vision? (in Chinese)(页面存档备份,存于互联网档案馆)

- People in Computer Vision(页面存档备份,存于互联网档案馆)

- The Computer Vision Genealogy Project(页面存档备份,存于互联网档案馆)

参见

- 人工智能与模式识别

- 图像处理

- 自动光学检查

- 开放源代码计算机视觉库:OpenCV

- 计算机科学主题

- 机器人学主题

摘自:https://zh.wikipedia.org/wiki/%E8%AE%A1%E7%AE%97%E6%9C%BA%E8%A7%86%E8%A7%89

计算机视觉

Computer vision

From Wikipedia

Computer vision is an interdisciplinary scientific field that deals with how computers can gain high-level understanding from digital images or videos. From the perspective of engineering, it seeks to understand and automate tasks that the human visual system can do.[1][2][3]

Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the forms of decisions.[4][5][6][7] Understanding in this context means the transformation of visual images (the input of the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory.[8]

The scientific discipline of computer vision is concerned with the theory behind artificial systems that extract information from images. The image data can take many forms, such as video sequences, views from multiple cameras, multi-dimensional data from a 3D scanner, or medical scanning device. The technological discipline of computer vision seeks to apply its theories and models to the construction of computer vision systems.

Sub-domains of computer vision include scene reconstruction, object detection, event detection, video tracking, object recognition, 3D pose estimation, learning, indexing, motion estimation, visual servoing, 3D scene modeling, and image restoration.[6]

Contents

- 1Definition

- 2History

- 3Related fields

- 3.1Solid-state physics

- 3.2Neurobiology

- 3.3Signal processing

- 3.4Robotic navigation

- 3.5Other fields

- 3.6Distinctions

- 4Applications

- 4.1Medicine

- 4.2Machine Vision

- 4.3Military

- 4.4Autonomous vehicles

- 4.5Tactile Feedback

- 5Typical tasks

- 5.1Recognition

- 5.2Motion analysis

- 5.3Scene reconstruction

- 5.4Image restoration

- 6System methods

- 6.1Image-understanding systems

- 7Hardware

- 8See also

- 8.1Lists

- 9References

- 10Further reading

- 11External links

Definition

Computer vision is an interdisciplinary field that deals with how computers and can be made to gain high-level understanding from digital images or videos. From the perspective of engineering, it seeks to automate tasks that the human visual system can do.[1][2][3] "Computer vision is concerned with the automatic extraction, analysis and understanding of useful information from a single image or a sequence of images. It involves the development of a theoretical and algorithmic basis to achieve automatic visual understanding."[9] As a scientific discipline, computer vision is concerned with the theory behind artificial systems that extract information from images. The image data can take many forms, such as video sequences, views from multiple cameras, or multi-dimensional data from a medical scanner.[10] As a technological discipline, computer vision seeks to apply its theories and models for the construction of computer vision systems.

History

In the late 1960s, computer vision began at universities which were pioneering artificial intelligence. It was meant to mimic the human visual system, as a stepping stone to endowing robots with intelligent behavior.[11] In 1966, it was believed that this could be achieved through a summer project, by attaching a camera to a computer and having it "describe what it saw".[12][13]

What distinguished computer vision from the prevalent field of digital image processing at that time was a desire to extract three-dimensional structure from images with the goal of achieving full scene understanding. Studies in the 1970s formed the early foundations for many of the computer vision algorithms that exist today, including extraction of edges from images, labeling of lines, non-polyhedral and polyhedral modeling, representation of objects as interconnections of smaller structures, optical flow, and motion estimation.[11]

The next decade saw studies based on more rigorous mathematical analysis and quantitative aspects of computer vision. These include the concept of scale-space, the inference of shape from various cues such as shading, texture and focus, and contour models known as snakes. Researchers also realized that many of these mathematical concepts could be treated within the same optimization framework as regularization and Markov random fields.[14] By the 1990s, some of the previous research topics became more active than the others. Research in projective 3-D reconstructions led to better understanding of camera calibration. With the advent of optimization methods for camera calibration, it was realized that a lot of the ideas were already explored in bundle adjustment theory from the field of photogrammetry. This led to methods for sparse 3-D reconstructions of scenes from multiple images. Progress was made on the dense stereo correspondence problem and further multi-view stereo techniques. At the same time, variations of graph cut were used to solve image segmentation. This decade also marked the first time statistical learning techniques were used in practice to recognize faces in images (see Eigenface). Toward the end of the 1990s, a significant change came about with the increased interaction between the fields of computer graphics and computer vision. This included image-based rendering, image morphing, view interpolation, panoramic image stitching and early light-field rendering.[11]

Recent work has seen the resurgence of feature-based methods, used in conjunction with machine learning techniques and complex optimization frameworks.[15][16] The advancement of Deep Learning techniques has brought further life to the field of computer vision. The accuracy of deep learning algorithms on several benchmark computer vision data sets for tasks ranging from classification, segmentation and optical flow has surpassed prior methods.[citation needed]

Related fields

Object detection in a photograph

Solid-state physics

Solid-state physics is another field that is closely related to computer vision. Most computer vision systems rely on image sensors, which detect electromagnetic radiation, which is typically in the form of either visible or infrared light. The sensors are designed using quantum physics. The process by which light interacts with surfaces is explained using physics. Physics explains the behavior of optics which are a core part of most imaging systems. Sophisticated image sensors even require quantum mechanics to provide a complete understanding of the image formation process.[11] Also, various measurement problems in physics can be addressed using computer vision, for example motion in fluids.

Neurobiology

Neurobiology, specifically the study of the biological vision system. Over the last century, there has been an extensive study of eyes, neurons, and the brain structures devoted to processing of visual stimuli in both humans and various animals. This has led to a coarse, yet complicated, description of how "real" vision systems operate in order to solve certain vision-related tasks. These results have led to a sub-field within computer vision where artificial systems are designed to mimic the processing and behavior of biological systems, at different levels of complexity. Also, some of the learning-based methods developed within computer vision (e.g. neural net and deep learning based image and feature analysis and classification) have their background in biology.

Some strands of computer vision research are closely related to the study of biological vision – indeed, just as many strands of AI research are closely tied with research into human consciousness, and the use of stored knowledge to interpret, integrate and utilize visual information. The field of biological vision studies and models the physiological processes behind visual perception in humans and other animals. Computer vision, on the other hand, studies and describes the processes implemented in software and hardware behind artificial vision systems. Interdisciplinary exchange between biological and computer vision has proven fruitful for both fields.[17]

Signal processing

Yet another field related to computer vision is signal processing. Many methods for processing of one-variable signals, typically temporal signals, can be extended in a natural way to processing of two-variable signals or multi-variable signals in computer vision. However, because of the specific nature of images there are many methods developed within computer vision that have no counterpart in processing of one-variable signals. Together with the multi-dimensionality of the signal, this defines a subfield in signal processing as a part of computer vision.

Robotic navigation

Robot navigation sometimes deals with autonomous path planning or deliberation for robotic systems to navigate through an environment.[18] A detailed understanding of these environments is required to navigate through them. Information about the environment could be provided by a computer vision system, acting as a vision sensor and providing high-level information about the environment and the robot.

Other fields

Beside the above-mentioned views on computer vision, many of the related research topics can also be studied from a purely mathematical point of view. For example, many methods in computer vision are based on statistics, optimization or geometry. Finally, a significant part of the field is devoted to the implementation aspect of computer vision; how existing methods can be realized in various combinations of software and hardware, or how these methods can be modified in order to gain processing speed without losing too much performance. Computer vision is also used in fashion ecommerce, inventory management, patent search, furniture, and the beauty industry.[citation needed]

Distinctions

The fields most closely related to computer vision are image processing, image analysis and machine vision. There is a significant overlap in the range of techniques and applications that these cover. This implies that the basic techniques that are used and developed in these fields are similar, something which can be interpreted as there is only one field with different names. On the other hand, it appears to be necessary for research groups, scientific journals, conferences and companies to present or market themselves as belonging specifically to one of these fields and, hence, various characterizations which distinguish each of the fields from the others have been presented. In image processing, the input is an image and the output is an image as well, whereas in computer vision, an image or a video is taken as an input and the output could be an enhanced image, an understanding of the content of an image or even a behaviour of a computer system based on such understanding.

Computer graphics produces image data from 3D models, computer vision often produces 3D models from image data.[19] There is also a trend towards a combination of the two disciplines, e.g., as explored in augmented reality.

The following characterizations appear relevant but should not be taken as universally accepted:

- Image processing and image analysis tend to focus on 2D images, how to transform one image to another, e.g., by pixel-wise operations such as contrast enhancement, local operations such as edge extraction or noise removal, or geometrical transformations such as rotating the image. This characterization implies that image processing/analysis neither require assumptions nor produce interpretations about the image content.

- Computer vision includes 3D analysis from 2D images. This analyzes the 3D scene projected onto one or several images, e.g., how to reconstruct structure or other information about the 3D scene from one or several images. Computer vision often relies on more or less complex assumptions about the scene depicted in an image.

- Machine vision is the process of applying a range of technologies & methods to provide imaging-based automatic inspection, process control and robot guidance[20] in industrial applications.[17] Machine vision tends to focus on applications, mainly in manufacturing, e.g., vision-based robots and systems for vision-based inspection, measurement, or picking (such as bin picking[21]). This implies that image sensor technologies and control theory often are integrated with the processing of image data to control a robot and that real-time processing is emphasised by means of efficient implementations in hardware and software. It also implies that the external conditions such as lighting can be and are often more controlled in machine vision than they are in general computer vision, which can enable the use of different algorithms.

- There is also a field called imaging which primarily focuses on the process of producing images, but sometimes also deals with processing and analysis of images. For example, medical imaging includes substantial work on the analysis of image data in medical applications.

- Finally, pattern recognition is a field which uses various methods to extract information from signals in general, mainly based on statistical approaches and artificial neural networks.[22] A significant part of this field is devoted to applying these methods to image data.

Photogrammetry also overlaps with computer vision, e.g., stereophotogrammetry vs. computer stereo vision.

Applications

Applications range from tasks such as industrial machine vision systems which, say, inspect bottles speeding by on a production line, to research into artificial intelligence and computers or robots that can comprehend the world around them. The computer vision and machine vision fields have significant overlap. Computer vision covers the core technology of automated image analysis which is used in many fields. Machine vision usually refers to a process of combining automated image analysis with other methods and technologies to provide automated inspection and robot guidance in industrial applications. In many computer-vision applications, the computers are pre-programmed to solve a particular task, but methods based on learning are now becoming increasingly common. Examples of applications of computer vision include systems for:

Learning 3D shapes has been a challenging task in computer vision. Recent advances in deep learning has enabled researchers to build models that are able to generate and reconstruct 3D shapes from single or multi-view depth maps or silhouettes seamlessly and efficiently [19]

- Automatic inspection, e.g., in manufacturing applications;

- Assisting humans in identification tasks, e.g., a species identification system;[23]

- Controlling processes, e.g., an industrial robot;

- Detecting events, e.g., for visual surveillance or people counting, e.g., in the restaurant industry;

- Interaction, e.g., as the input to a device for computer-human interaction;

- Modeling objects or environments, e.g., medical image analysis or topographical modeling;

- Navigation, e.g., by an autonomous vehicle or mobile robot; and

- Organizing information, e.g., for indexing databases of images and image sequences.

- Tracking surfaces or planes in 3D coordinates for allowing Augmented Reality experiences.

Medicine

DARPA's Visual Media Reasoning concept video

One of the most prominent application fields is medical computer vision, or medical image processing, characterized by the extraction of information from image data to diagnose a patient. An example of this is detection of tumours, arteriosclerosis or other malign changes; measurements of organ dimensions, blood flow, etc. are another example. It also supports medical research by providing new information: e.g., about the structure of the brain, or about the quality of medical treatments. Applications of computer vision in the medical area also includes enhancement of images interpreted by humans—ultrasonic images or X-ray images for example—to reduce the influence of noise.

Machine Vision

A second application area in computer vision is in industry, sometimes called machine vision, where information is extracted for the purpose of supporting a production process. One example is quality control where details or final products are being automatically inspected in order to find defects. Another example is measurement of position and orientation of details to be picked up by a robot arm. Machine vision is also heavily used in agricultural process to remove undesirable food stuff from bulk material, a process called optical sorting.[24]

Military

Military applications are probably one of the largest areas for computer vision. The obvious examples are detection of enemy soldiers or vehicles and missile guidance. More advanced systems for missile guidance send the missile to an area rather than a specific target, and target selection is made when the missile reaches the area based on locally acquired image data. Modern military concepts, such as "battlefield awareness", imply that various sensors, including image sensors, provide a rich set of information about a combat scene which can be used to support strategic decisions. In this case, automatic processing of the data is used to reduce complexity and to fuse information from multiple sensors to increase reliability.

Autonomous vehicles

Artist's concept of Curiosity, an example of an uncrewed land-based vehicle. Notice the stereo camera mounted on top of the rover.

One of the newer application areas is autonomous vehicles, which include submersibles, land-based vehicles (small robots with wheels, cars or trucks), aerial vehicles, and unmanned aerial vehicles (UAV). The level of autonomy ranges from fully autonomous (unmanned) vehicles to vehicles where computer-vision-based systems support a driver or a pilot in various situations. Fully autonomous vehicles typically use computer vision for navigation, e.g. for knowing where it is, or for producing a map of its environment (SLAM) and for detecting obstacles. It can also be used for detecting certain task-specific events, e.g., a UAV looking for forest fires. Examples of supporting systems are obstacle warning systems in cars and systems for autonomous landing of aircraft. Several car manufacturers have demonstrated systems for autonomous driving of cars, but this technology has still not reached a level where it can be put on the market. There are ample examples of military autonomous vehicles ranging from advanced missiles to UAVs for recon missions or missile guidance. Space exploration is already being made with autonomous vehicles using computer vision, e.g., NASA's Curiosity and CNSA's Yutu-2 rover.

Tactile Feedback

Rubber artificial skin layer with flexible structure for shape estimation of micro-undulation surfaces

Above is a silicon mold with a camera inside containing many different point markers. When this sensor is pressed against the surface the silicon deforms and the position of the point markers shift. A computer can then take this data and determine how exactly the mold is pressed against the surface. This can be used to calibrate robotic hands in order to make sure they can grasp objects effectively.

Materials such as rubber and silicon are being used to create sensors that allow for applications such as detecting micro undulations and calibrating robotic hands. Rubber can be used in order to create a mold that can be placed over a finger, inside of this mold would be multiple strain gauges. The finger mold and sensors could then be placed on top of a small sheet of rubber containing an array of rubber pins. A user can then wear the finger mold and trace a surface. A computer can then read the data from the strain gauges and measure if one or more of the pins is being pushed upward. If a pin is being pushed upward then the computer can recognize this as an imperfection in the surface. This sort of technology is useful in order to receive accurate data of the imperfections on a very large surface.[25] Another variation of this finger mold sensor are sensors that contain a camera suspended in silicon. The silicon forms a dome around the outside of the camera and embedded in the silicon are point markers that are equally spaced. These cameras can then be placed on devices such as robotic hands in order to allow the computer to receive highly accurate tactile data.[26]

Other application areas include:

- Support of visual effects creation for cinema and broadcast, e.g., camera tracking (matchmoving).

- Surveillance.

- Driver drowsiness detection[citation needed]

- Tracking and counting organisms in the biological sciences[27]

Typical tasks

Each of the application areas described above employ a range of computer vision tasks; more or less well-defined measurement problems or processing problems, which can be solved using a variety of methods. Some examples of typical computer vision tasks are presented below.

Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g., in the forms of decisions.[4][5][6][7] Understanding in this context means the transformation of visual images (the input of the retina) into descriptions of the world that can interface with other thought processes and elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory.[8]

Recognition

The classical problem in computer vision, image processing, and machine vision is that of determining whether or not the image data contains some specific object, feature, or activity. Different varieties of the recognition problem are described in the literature.[28]

- Object recognition (also called object classification) – one or several pre-specified or learned objects or object classes can be recognized, usually together with their 2D positions in the image or 3D poses in the scene. Blippar, Google Goggles and LikeThat provide stand-alone programs that illustrate this functionality.

- Identification – an individual instance of an object is recognized. Examples include identification of a specific person's face or fingerprint, identification of handwritten digits, or identification of a specific vehicle.

- Detection – the image data are scanned for a specific condition. Examples include detection of possible abnormal cells or tissues in medical images or detection of a vehicle in an automatic road toll system. Detection based on relatively simple and fast computations is sometimes used for finding smaller regions of interesting image data which can be further analyzed by more computationally demanding techniques to produce a correct interpretation.

Currently, the best algorithms for such tasks are based on convolutional neural networks. An illustration of their capabilities is given by the ImageNet Large Scale Visual Recognition Challenge; this is a benchmark in object classification and detection, with millions of images and 1000 object classes used in the competition.[29] Performance of convolutional neural networks on the ImageNet tests is now close to that of humans.[29] The best algorithms still struggle with objects that are small or thin, such as a small ant on a stem of a flower or a person holding a quill in their hand. They also have trouble with images that have been distorted with filters (an increasingly common phenomenon with modern digital cameras). By contrast, those kinds of images rarely trouble humans. Humans, however, tend to have trouble with other issues. For example, they are not good at classifying objects into fine-grained classes, such as the particular breed of dog or species of bird, whereas convolutional neural networks handle this with ease.[citation needed]

Several specialized tasks based on recognition exist, such as:

- Content-based image retrieval – finding all images in a larger set of images which have a specific content. The content can be specified in different ways, for example in terms of similarity relative a target image (give me all images similar to image X) by utilizing reverse image search techniques, or in terms of high-level search criteria given as text input (give me all images which contain many houses, are taken during winter, and have no cars in them).

Computer vision for people counter purposes in public places, malls, shopping centres

- Pose estimation – estimating the position or orientation of a specific object relative to the camera. An example application for this technique would be assisting a robot arm in retrieving objects from a conveyor belt in an assembly line situation or picking parts from a bin.

- Optical character recognition (OCR) – identifying characters in images of printed or handwritten text, usually with a view to encoding the text in a format more amenable to editing or indexing (e.g. ASCII).

- 2D code reading – reading of 2D codes such as data matrix and QR codes.

- Facial recognition

- Shape Recognition Technology (SRT) in people counter systems differentiating human beings (head and shoulder patterns) from objects

Motion analysis

Several tasks relate to motion estimation where an image sequence is processed to produce an estimate of the velocity either at each points in the image or in the 3D scene, or even of the camera that produces the images. Examples of such tasks are:

- Egomotion – determining the 3D rigid motion (rotation and translation) of the camera from an image sequence produced by the camera.

- Tracking – following the movements of a (usually) smaller set of interest points or objects (e.g., vehicles, objects, humans or other organisms[27]) in the image sequence. This has vast industry applications as most of high running machineries can be monitored in this way.

- Optical flow – to determine, for each point in the image, how that point is moving relative to the image plane, i.e., its apparent motion. This motion is a result both of how the corresponding 3D point is moving in the scene and how the camera is moving relative to the scene.

Scene reconstruction

Given one or (typically) more images of a scene, or a video, scene reconstruction aims at computing a 3D model of the scene. In the simplest case the model can be a set of 3D points. More sophisticated methods produce a complete 3D surface model. The advent of 3D imaging not requiring motion or scanning, and related processing algorithms is enabling rapid advances in this field. Grid-based 3D sensing can be used to acquire 3D images from multiple angles. Algorithms are now available to stitch multiple 3D images together into point clouds and 3D models.[19]

Image restoration

The aim of image restoration is the removal of noise (sensor noise, motion blur, etc.) from images. The simplest possible approach for noise removal is various types of filters such as low-pass filters or median filters. More sophisticated methods assume a model of how the local image structures look, to distinguish them from noise. By first analysing the image data in terms of the local image structures, such as lines or edges, and then controlling the filtering based on local information from the analysis step, a better level of noise removal is usually obtained compared to the simpler approaches.

An example in this field is inpainting.

System methods

The organization of a computer vision system is highly application-dependent. Some systems are stand-alone applications that solve a specific measurement or detection problem, while others constitute a sub-system of a larger design which, for example, also contains sub-systems for control of mechanical actuators, planning, information databases, man-machine interfaces, etc. The specific implementation of a computer vision system also depends on whether its functionality is pre-specified or if some part of it can be learned or modified during operation. Many functions are unique to the application. There are, however, typical functions that are found in many computer vision systems.

- Image acquisition – A digital image is produced by one or several image sensors, which, besides various types of light-sensitive cameras, include range sensors, tomography devices, radar, ultra-sonic cameras, etc. Depending on the type of sensor, the resulting image data is an ordinary 2D image, a 3D volume, or an image sequence. The pixel values typically correspond to light intensity in one or several spectral bands (gray images or colour images), but can also be related to various physical measures, such as depth, absorption or reflectance of sonic or electromagnetic waves, or nuclear magnetic resonance.[24]

- Pre-processing – Before a computer vision method can be applied to image data in order to extract some specific piece of information, it is usually necessary to process the data in order to assure that it satisfies certain assumptions implied by the method. Examples are:

- Re-sampling to assure that the image coordinate system is correct.

- Noise reduction to assure that sensor noise does not introduce false information.

- Contrast enhancement to assure that relevant information can be detected.

- Scale space representation to enhance image structures at locally appropriate scales.

- Feature extraction – Image features at various levels of complexity are extracted from the image data.[24] Typical examples of such features are:

- Lines, edges and ridges.

- Localized interest points such as corners, blobs or points.

More complex features may be related to texture, shape or motion.

- Detection/segmentation – At some point in the processing a decision is made about which image points or regions of the image are relevant for further processing.[24] Examples are:

- Selection of a specific set of interest points.

- Segmentation of one or multiple image regions that contain a specific object of interest.

- Segmentation of image into nested scene architecture comprising foreground, object groups, single objects or salient object[30] parts (also referred to as spatial-taxon scene hierarchy),[31] while the visual salience is often implemented as spatial and temporal attention.

- Segmentation or co-segmentation of one or multiple videos into a series of per-frame foreground masks, while maintaining its temporal semantic continuity.[32][33]

- High-level processing – At this step the input is typically a small set of data, for example a set of points or an image region which is assumed to contain a specific object.[24] The remaining processing deals with, for example:

- Verification that the data satisfy model-based and application-specific assumptions.

- Estimation of application-specific parameters, such as object pose or object size.

- Image recognition – classifying a detected object into different categories.

- Image registration – comparing and combining two different views of the same object.

- Decision making Making the final decision required for the application,[24] for example:

- Pass/fail on automatic inspection applications.

- Match/no-match in recognition applications.

- Flag for further human review in medical, military, security and recognition applications.

Image-understanding systems

Image-understanding systems (IUS) include three levels of abstraction as follows: low level includes image primitives such as edges, texture elements, or regions; intermediate level includes boundaries, surfaces and volumes; and high level includes objects, scenes, or events. Many of these requirements are entirely topics for further research.

The representational requirements in the designing of IUS for these levels are: representation of prototypical concepts, concept organization, spatial knowledge, temporal knowledge, scaling, and description by comparison and differentiation.

While inference refers to the process of deriving new, not explicitly represented facts from currently known facts, control refers to the process that selects which of the many inference, search, and matching techniques should be applied at a particular stage of processing. Inference and control requirements for IUS are: search and hypothesis activation, matching and hypothesis testing, generation and use of expectations, change and focus of attention, certainty and strength of belief, inference and goal satisfaction.[34]

Hardware

New iPad includes LiDAR sensor

There are many kinds of computer vision systems; however, all of them contain these basic elements: a power source, at least one image acquisition device (camera, ccd, etc.), a processor, and control and communication cables or some kind of wireless interconnection mechanism. In addition, a practical vision system contains software, as well as a display in order to monitor the system. Vision systems for inner

spaces, as most industrial ones, contain an illumination system and may be placed in a controlled environment. Furthermore, a completed system includes many accessories such as camera supports, cables and connectors.

Most computer vision systems use visible-light cameras passively viewing a scene at frame rates of at most 60 frames per second (usually far slower).

A few computer vision systems use image-acquisition hardware with active illumination or something other than visible light or both, such as structured-light 3D scanners, thermographic cameras, hyperspectral imagers, radar imaging, lidar scanners, magnetic resonance images, side-scan sonar, synthetic aperture sonar, etc. Such hardware captures "images" that are then processed often using the same computer vision algorithms used to process visible-light images.

While traditional broadcast and consumer video systems operate at a rate of 30 frames per second, advances in digital signal processing and consumer graphics hardware has made high-speed image acquisition, processing, and display possible for real-time systems on the order of hundreds to thousands of frames per second. For applications in robotics, fast, real-time video systems are critically important and often can simplify the processing needed for certain algorithms. When combined with a high-speed projector, fast image acquisition allows 3D measurement and feature tracking to be realised.[35]

Egocentric vision systems are composed of a wearable camera that automatically take pictures from a first-person perspective.

As of 2016, vision processing units are emerging as a new class of processor, to complement CPUs and graphics processing units (GPUs) in this role.[36]

See also

- Computational imaging

- Computational photography

- Computer audition

- Egocentric vision

- Machine vision glossary

- Space mapping

- Teknomo–Fernandez algorithm

- Vision science

- Visual agnosia

- Visual perception

- Visual system

Lists

- Outline of computer vision

- List of emerging technologies

- Outline of artificial intelligence

References

- ^ Jump up to:a b Dana H. Ballard; Christopher M. Brown (1982). Computer Vision. Prentice Hall. ISBN 978-0-13-165316-0.

- ^ Jump up to:a b Huang, T. (1996-11-19). Vandoni, Carlo, E (ed.). Computer Vision : Evolution And Promise (PDF). 19th CERN School of Computing. Geneva: CERN. pp. 21–25. doi:10.5170/CERN-1996-008.21. ISBN 978-9290830955.

- ^ Jump up to:a b Milan Sonka; Vaclav Hlavac; Roger Boyle (2008). Image Processing, Analysis, and Machine Vision. Thomson. ISBN 978-0-495-08252-1.

- ^ Jump up to:a b Reinhard Klette (2014). Concise Computer Vision. Springer. ISBN 978-1-4471-6320-6.

- ^ Jump up to:a b Linda G. Shapiro; George C. Stockman (2001). Computer Vision. Prentice Hall. ISBN 978-0-13-030796-5.

- ^ Jump up to:a b c Tim Morris (2004). Computer Vision and Image Processing. Palgrave Macmillan. ISBN 978-0-333-99451-1.

- ^ Jump up to:a b Bernd Jähne; Horst Haußecker (2000). Computer Vision and Applications, A Guide for Students and Practitioners. Academic Press. ISBN 978-0-13-085198-7.

- ^ Jump up to:a b David A. Forsyth; Jean Ponce (2003). Computer Vision, A Modern Approach. Prentice Hall. ISBN 978-0-13-085198-7.

- ^ http://www.bmva.org/visionoverview Archived 2017-02-16 at the Wayback Machine The British Machine Vision Association and Society for Pattern Recognition Retrieved February 20, 2017

- ^ Murphy, Mike. "Star Trek's "tricorder" medical scanner just got closer to becoming a reality".

- ^ Jump up to:a b c d Richard Szeliski (30 September 2010). Computer Vision: Algorithms and Applications. Springer Science & Business Media. pp. 10–16. ISBN 978-1-84882-935-0.

- ^ Papert, Seymour (1966-07-01). "The Summer Vision Project". MIT AI Memos (1959 - 2004). hdl:1721.1/6125.

- ^ Margaret Ann Boden (2006). Mind as Machine: A History of Cognitive Science. Clarendon Press. p. 781. ISBN 978-0-19-954316-8.

- ^ Takeo Kanade (6 December 2012). Three-Dimensional Machine Vision. Springer Science & Business Media. ISBN 978-1-4613-1981-8.

- ^ Nicu Sebe; Ira Cohen; Ashutosh Garg; Thomas S. Huang (3 June 2005). Machine Learning in Computer Vision. Springer Science & Business Media. ISBN 978-1-4020-3274-5.

- ^ William Freeman; Pietro Perona; Bernhard Scholkopf (2008). "Guest Editorial: Machine Learning for Computer Vision". International Journal of Computer Vision. 77 (1): 1. doi:10.1007/s11263-008-0127-7. ISSN 1573-1405.

- ^ Jump up to:a b Steger, Carsten; Markus Ulrich; Christian Wiedemann (2018). Machine Vision Algorithms and Applications (2nd ed.). Weinheim: Wiley-VCH. p. 1. ISBN 978-3-527-41365-2. Retrieved 2018-01-30.

- ^ Murray, Don, and Cullen Jennings. "Stereo vision based mapping and navigation for mobile robots." Proceedings of International Conference on Robotics and Automation. Vol. 2. IEEE, 1997.

- ^ Jump up to:a b c Soltani, A. A.; Huang, H.; Wu, J.; Kulkarni, T. D.; Tenenbaum, J. B. (2017). "Synthesizing 3D Shapes via Modeling Multi-View Depth Maps and Silhouettes With Deep Generative Networks". Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition: 1511–1519. doi:10.1109/CVPR.2017.269. hdl:1721.1/126644. ISBN 978-1-5386-0457-1. S2CID 31373273.

- ^ Turek, Fred (June 2011). "Machine Vision Fundamentals, How to Make Robots See". NASA Tech Briefs Magazine. 35 (6). pages 60–62

- ^ "The Future of Automated Random Bin Picking".

- ^ Chervyakov, N. I.; Lyakhov, P. A.; Deryabin, M. A.; Nagornov, N. N.; Valueva, M. V.; Valuev, G. V. (2020). "Residue Number System-Based Solution for Reducing the Hardware Cost of a Convolutional Neural Network". Neurocomputing. 407: 439–453. doi:10.1016/j.neucom.2020.04.018. S2CID 219470398. Convolutional neural networks (CNNs) represent deep learning architectures that are currently used in a wide range of applications, including computer vision, speech recognition, identification of albuminous sequences in bioinformatics, production control, time series analysis in finance, and many others.

- ^ Wäldchen, Jana; Mäder, Patrick (2017-01-07). "Plant Species Identification Using Computer Vision Techniques: A Systematic Literature Review". Archives of Computational Methods in Engineering. 25 (2): 507–543. doi:10.1007/s11831-016-9206-z. ISSN 1134-3060. PMC 6003396. PMID 29962832.

- ^ Jump up to:a b c d e f E. Roy Davies (2005). Machine Vision: Theory, Algorithms, Practicalities. Morgan Kaufmann. ISBN 978-0-12-206093-9.

- ^ Ando, Mitsuhito; Takei, Toshinobu; Mochiyama, Hiromi (2020-03-03). "Rubber artificial skin layer with flexible structure for shape estimation of micro-undulation surfaces". ROBOMECH Journal. 7 (1): 11. doi:10.1186/s40648-020-00159-0. ISSN 2197-4225.

- ^ Choi, Seung-hyun; Tahara, Kenji (2020-03-12). "Dexterous object manipulation by a multi-fingered robotic hand with visual-tactile fingertip sensors". ROBOMECH Journal. 7 (1): 14. doi:10.1186/s40648-020-00162-5. ISSN 2197-4225.

- ^ Jump up to:a b Bruijning, Marjolein; Visser, Marco D.; Hallmann, Caspar A.; Jongejans, Eelke; Golding, Nick (2018). "trackdem: Automated particle tracking to obtain population counts and size distributions from videos in r". Methods in Ecology and Evolution. 9 (4): 965–973. doi:10.1111/2041-210X.12975. ISSN 2041-210X.

- ^ Forsyth, David; Ponce, Jean (2012). Computer vision: a modern approach. Pearson.

- ^ Jump up to:a b Russakovsky, Olga; Deng, Jia; Su, Hao; Krause, Jonathan; Satheesh, Sanjeev; Ma, Sean; Huang, Zhiheng; Karpathy, Andrej; Khosla, Aditya; Bernstein, Michael; Berg, Alexander C. (December 2015). "ImageNet Large Scale Visual Recognition Challenge". International Journal of Computer Vision. 115 (3): 211–252. doi:10.1007/s11263-015-0816-y. hdl:1721.1/104944. ISSN 0920-5691. S2CID 2930547.

- ^ A. Maity (2015). "Improvised Salient Object Detection and Manipulation". arXiv:1511.02999 [cs.CV].

- ^ Barghout, Lauren. "Visual Taxometric Approach to Image Segmentation Using Fuzzy-Spatial Taxon Cut Yields Contextually Relevant Regions." Information Processing and Management of Uncertainty in Knowledge-Based Systems. Springer International Publishing, 2014.

- ^ Liu, Ziyi; Wang, Le; Hua, Gang; Zhang, Qilin; Niu, Zhenxing; Wu, Ying; Zheng, Nanning (2018). "Joint Video Object Discovery and Segmentation by Coupled Dynamic Markov Networks" (PDF). IEEE Transactions on Image Processing. 27 (12): 5840–5853. Bibcode:2018ITIP...27.5840L. doi:10.1109/tip.2018.2859622. ISSN 1057-7149. PMID 30059300. S2CID 51867241. Archived from the original (PDF) on 2018-09-07. Retrieved 2018-09-14.

- ^ Wang, Le; Duan, Xuhuan; Zhang, Qilin; Niu, Zhenxing; Hua, Gang; Zheng, Nanning (2018-05-22). "Segment-Tube: Spatio-Temporal Action Localization in Untrimmed Videos with Per-Frame Segmentation" (PDF). Sensors. 18 (5): 1657. Bibcode:2018Senso..18.1657W. doi:10.3390/s18051657. ISSN 1424-8220. PMC 5982167. PMID 29789447.

- ^ Shapiro, Stuart C. (1992). Encyclopedia of Artificial Intelligence, Volume 1. New York: John Wiley & Sons, Inc. pp. 643–646. ISBN 978-0-471-50306-4.

- ^ Kagami, Shingo (2010). "High-speed vision systems and projectors for real-time perception of the world". 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition - Workshops. IEEE Computer Society Conference on Computer Vision and Pattern Recognition - Workshops. Vol. 2010. pp. 100–107. doi:10.1109/CVPRW.2010.5543776. ISBN 978-1-4244-7029-7. S2CID 14111100.

- ^ Seth Colaner (January 3, 2016). "A Third Type Of Processor For VR/AR: Movidius' Myriad 2 VPU". www.tomshardware.com.

Further reading

- David Marr (1982). Vision. W. H. Freeman and Company. ISBN 978-0-7167-1284-8.

- Azriel Rosenfeld; Avinash Kak (1982). Digital Picture Processing. Academic Press. ISBN 978-0-12-597301-4.

- Barghout, Lauren; Lawrence W. Lee (2003). Perceptual information processing system. U.S. Patent Application 10/618,543. ISBN 978-0-262-08159-7.

- Berthold K.P. Horn (1986). Robot Vision. MIT Press. ISBN 978-0-262-08159-7.

- Michael C. Fairhurst (1988). Computer Vision for robotic systems. Prentice Hall. ISBN 978-0-13-166919-2.

- Olivier Faugeras (1993). Three-Dimensional Computer Vision, A Geometric Viewpoint. MIT Press. ISBN 978-0-262-06158-2.

- Tony Lindeberg (1994). Scale-Space Theory in Computer Vision. Springer. ISBN 978-0-7923-9418-1.

- James L. Crowley and Henrik I. Christensen (Eds.) (1995). Vision as Process. Springer-Verlag. ISBN 978-3-540-58143-7.

- Gösta H. Granlund; Hans Knutsson (1995). Signal Processing for Computer Vision. Kluwer Academic Publisher. ISBN 978-0-7923-9530-0.

- Reinhard Klette; Karsten Schluens; Andreas Koschan (1998). Computer Vision – Three-Dimensional Data from Images. Springer, Singapore. ISBN 978-981-3083-71-4.

- Emanuele Trucco; Alessandro Verri (1998). Introductory Techniques for 3-D Computer Vision. Prentice Hall. ISBN 978-0-13-261108-4.

- Bernd Jähne (2002). Digital Image Processing. Springer. ISBN 978-3-540-67754-3.

- Richard Hartley and Andrew Zisserman (2003). Multiple View Geometry in Computer Vision. Cambridge University Press. ISBN 978-0-521-54051-3.

- Gérard Medioni; Sing Bing Kang (2004). Emerging Topics in Computer Vision. Prentice Hall. ISBN 978-0-13-101366-7.

- R. Fisher; K Dawson-Howe; A. Fitzgibbon; C. Robertson; E. Trucco (2005). Dictionary of Computer Vision and Image Processing. John Wiley. ISBN 978-0-470-01526-1.

- Nikos Paragios and Yunmei Chen and Olivier Faugeras (2005). Handbook of Mathematical Models in Computer Vision. Springer. ISBN 978-0-387-26371-7.

- Wilhelm Burger; Mark J. Burge (2007). Digital Image Processing: An Algorithmic Approach Using Java. Springer. ISBN 978-1-84628-379-6.

- Pedram Azad; Tilo Gockel; Rüdiger Dillmann (2008). Computer Vision – Principles and Practice. Elektor International Media BV. ISBN 978-0-905705-71-2.

- Richard Szeliski (2010). Computer Vision: Algorithms and Applications. Springer-Verlag. ISBN 978-1848829343.

- J. R. Parker (2011). Algorithms for Image Processing and Computer Vision (2nd ed.). Wiley. ISBN 978-0470643853.

- Richard J. Radke (2013). Computer Vision for Visual Effects. Cambridge University Press. ISBN 978-0-521-76687-6.

- Nixon, Mark; Aguado, Alberto (2019). Feature Extraction and Image Processing for Computer Vision (4th ed.). Academic Press. ISBN 978-0128149768.

External links

- USC Iris computer vision conference list

- Computer vision papers on the web A complete list of papers of the most relevant computer vision conferences.

- Computer Vision Online News, source code, datasets and job offers related to computer vision.

- Keith Price's Annotated Computer Vision Bibliography

- CVonline Bob Fisher's Compendium of Computer Vision.

- British Machine Vision Association Supporting computer vision research within the UK via the BMVC and MIUA conferences, Annals of the BMVA (open-source journal), BMVA Summer School and one-day meetings

- Computer Vision Container, Joe Hoeller GitHub: Widely adopted open-source container for GPU accelerated computer vision applications. Used by researchers, universities, private companies as well as the U.S. Gov't.

hide

- v

- t

- e

Computer vision

Categories

- Datasets

- Digital geometry

- Commercial systems

- Feature detection

- Geometry

- Image sensor technology

- Learning

- Morphology

- Motion analysis

- Noise reduction techniques

- Recognition and categorization

- Research infrastructure

- Researchers

- Segmentation

- Software

Technologies

- Computer stereo vision

- 3D reconstruction

- 3D reconstruction from multiple images

- Free viewpoint television

- Structure from motion

- Visual hull

- Motion capture

Applications

- Augmented reality

- Autonomous vehicles

- Face recognition

- Image search

- Optical character recognition

- Remote sensing

- Robots

Main category

show

- v

- t

- e

Differentiable computing

Authority control: National libraries

- France (data)

- United States

Categories:

- Computer vision

- Artificial intelligence

- Image processing

- Packaging machinery

参考:

一文看懂计算机视觉-CV(基本原理+2大挑战+8大任务+4个应用)

https://zh.wikipedia.org/wiki/%E8%AE%A1%E7%AE%97%E6%9C%BA%E8%A7%86%E8%A7%89

https://en.wikipedia.org/wiki/Computer_vision