手把手带你玩转k8s-ConfigMap与持久化存储

前言

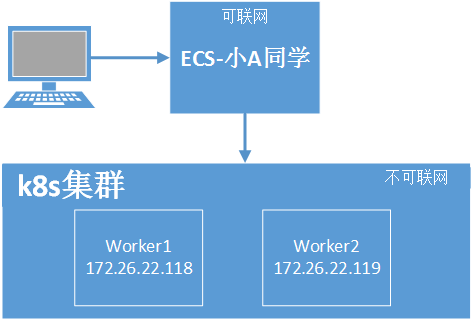

k8s的存储方式有很多,本文重点讲一下ConfigMap和持久化存储-nfs。这也是k8s常用的存储方式。干讲的话不太好讲,所以会以nginx为例,因为nginx刚好同时需要以上两种存储方式。这里先简单的画一下图。

本系列教程一共使用了三台服务器,其中一台可以联网,两台不可联网。k8s集群不可联网。

nginx存储类型分析

使用nginx的时候,最为关注的主要有以下两个目录:

/etc/nginx/conf.d和/usr/share/nginx/html

一个是配置文件目录,一个是静态资源目录。而一般生产上习惯使用ConfigMap去存储配置文件,静态资源则使用nfs持久化存储。

Configuration files

/etc/nginx/conf.d
├── default.conf
├── a.conf
├── b.conf
└── c.conf

Static assets

/usr/share/nginx/html
├── index.html
├── a.html
├── b.html
└── c.html

ConfigMap

Create the working directory

mkdir -p /mldong/k8s/nginx

Enter the working directory

cd /mldong/k8s/nginx

Defining with YAML

Defining a single entry

cat <<EOF > /mldong/k8s/nginx/nginx-cm.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-cm
  namespace: mldong-test
data:
  a.conf: |-
    server {
      listen 80;
      server_name a.mldong.com;
      location / {
        root /usr/share/nginx/html;
        index index.html index.htm;
      }
      error_page 500 502 503 504 /50x.html;
      location = /50x.html {
        root /usr/share/nginx/html;
      }
    }
EOF

Defining multiple entries

cat <<EOF > /mldong/k8s/nginx/nginx-cm.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-cm
  namespace: mldong-test
data:
  a.conf: |-
    server {
      listen 80;
      server_name a.mldong.com;
      location / {
        root /usr/share/nginx/html/a;
        index index.html index.htm;
      }
      error_page 500 502 503 504 /50x.html;
      location = /50x.html {
        root /usr/share/nginx/html;
      }
    }
  b.conf: |-
    server {
      listen 80;
      server_name b.mldong.com;
      location / {
        root /usr/share/nginx/html/b;
        index index.html index.htm;
      }
      error_page 500 502 503 504 /50x.html;
      location = /50x.html {
        root /usr/share/nginx/html;
      }
    }
EOF

Create it

kubectl apply -f nginx-cm.yaml

List all ConfigMaps in the namespace

kubectl get configmap -n mldong-test

Show the details of one ConfigMap

kubectl describe configmap nginx-cm -n mldong-test

ConfigMap ==> kind

nginx-cm ==> metadata.name

mldong-test ==> metadata.namespace

Delete the ConfigMap defined above

kubectl delete -f nginx-cm.yaml

Defining from external files

Create a.conf and b.conf in the current directory

a.conf

cat <<EOF > /mldong/k8s/nginx/a.conf
server {
  listen 80;
  server_name a.mldong.com;
  location / {
    root /usr/share/nginx/html/a;
    index index.html index.htm;
  }
  error_page 500 502 503 504 /50x.html;
  location = /50x.html {
    root /usr/share/nginx/html;
  }
}
EOF

b.conf

cat <<EOF > /mldong/k8s/nginx/b.conf
server {
  listen 80;
  server_name b.mldong.com;
  location / {
    root /usr/share/nginx/html/b;
    index index.html index.htm;
  }
  error_page 500 502 503 504 /50x.html;
  location = /50x.html {
    root /usr/share/nginx/html;
  }
}
EOF
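The configuration files in this article contain no `$` characters, so an unquoted `<<EOF` writes them unchanged. Real nginx configurations often use variables such as `$uri`, and an unquoted heredoc lets the shell expand them before the file is written. The sketch below shows the difference; the `try_files` line and the shell variable `uri` are illustrative assumptions, not part of the article's configuration.

```shell
#!/bin/sh
workdir=$(mktemp -d)
uri=SHELL_VALUE   # hypothetical shell variable that happens to share the name

# Unquoted delimiter: the shell substitutes $uri before writing the file.
cat <<EOF > "$workdir/unquoted.conf"
try_files $uri /index.html;
EOF

# Quoted delimiter: the body is written exactly as typed.
cat <<'EOF' > "$workdir/quoted.conf"
try_files $uri /index.html;
EOF

cat "$workdir/unquoted.conf"   # prints: try_files SHELL_VALUE /index.html;
cat "$workdir/quoted.conf"     # prints: try_files $uri /index.html;
```

If a configuration needs nginx variables, quoting the delimiter (`<<'EOF'`) is the safer habit.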

Create it

Create from a single file

kubectl create configmap nginx-cm --from-file=a.conf -n mldong-test

Create from multiple files

kubectl create configmap nginx-cm --from-file=a.conf --from-file=b.conf -n mldong-test

By default the file name becomes the key. To use a different key, for example:

kubectl create configmap nginx-cm --from-file=xxxx=a.conf -n mldong-test
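To make the link between the two approaches concrete, here is a rough sketch of what "file name becomes key" means. It is not how kubectl is implemented; `render_configmap` is a hypothetical helper that prints a manifest in the same shape as the hand-written YAML earlier. It assumes plain text files whose first line does not start with whitespace.

```shell
#!/bin/sh
# render_configmap NAME NAMESPACE FILE...: print a ConfigMap manifest on stdout.
# Each file becomes one entry under data, keyed by its base name.
render_configmap() {
  name=$1; namespace=$2; shift 2
  printf 'apiVersion: v1\nkind: ConfigMap\nmetadata:\n'
  printf '  name: %s\n  namespace: %s\ndata:\n' "$name" "$namespace"
  for file in "$@"; do
    printf '  %s: |-\n' "$(basename "$file")"
    # Indent the file body by four spaces so it sits inside the block scalar.
    awk '{ print "    " $0 }' "$file"
  done
}
```

For example, `render_configmap nginx-cm mldong-test a.conf b.conf` prints a manifest with the keys `a.conf` and `b.conf`, which could then be piped to `kubectl apply -f -`.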

Delete the ConfigMap

kubectl delete configmap nginx-cm -n mldong-test

List all ConfigMaps in the namespace

kubectl get configmap -n mldong-test

Show the details of one ConfigMap

kubectl describe configmap nginx-cm -n mldong-test

ConfigMap ==> kind

nginx-cm ==> metadata.name

mldong-test ==> metadata.namespace

持久化存储

这里持久化存储使用的是nfs,在阿里云上叫nas。没有的要先开通-有免费版本。

开通nas

搜索nas

创建过程略

需要要注意的地方就是区域选对就可以了。

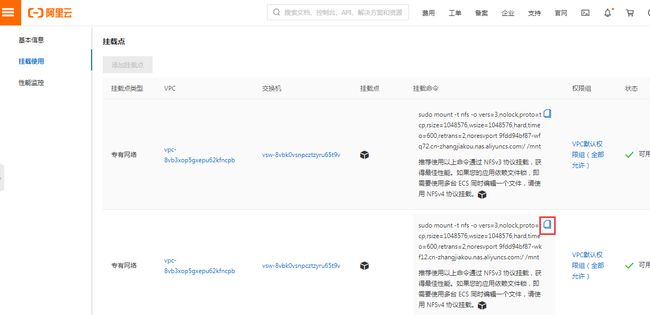

查看配置详情

挂载

复制挂载命令,在小A服务器执行

sudo mount -t nfs -o vers=3,nolock,proto=tcp,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport 9fdd94bf87-wkf12.cn-zhangjiakou.nas.aliyuncs.com:/ /mnt

/mnt为要挂载的本地目录,如没有,则需自行创建。也可以换成别的目录。

查看挂载情况

df -h | grep aliyun

取消挂载

umount /mnt
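When these commands are run repeatedly, it helps to check whether the directory is already a mount point before mounting or unmounting. The sketch below is one way to do that on Linux by reading `/proc/mounts`; `is_mounted` is a hypothetical helper name, and the optional second argument exists only so the function can be tried against a sample file.

```shell
#!/bin/sh
# is_mounted DIR [MOUNTS_FILE]: succeed if DIR is listed as a mount point.
# MOUNTS_FILE defaults to /proc/mounts, where field 2 is the mount point.
is_mounted() {
  awk -v dir="$1" '$2 == dir { found = 1 } END { exit !found }' \
    "${2:-/proc/mounts}"
}
```

A typical use is `is_mounted /mnt || echo "/mnt is not mounted yet"` before running the mount command copied from the console.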

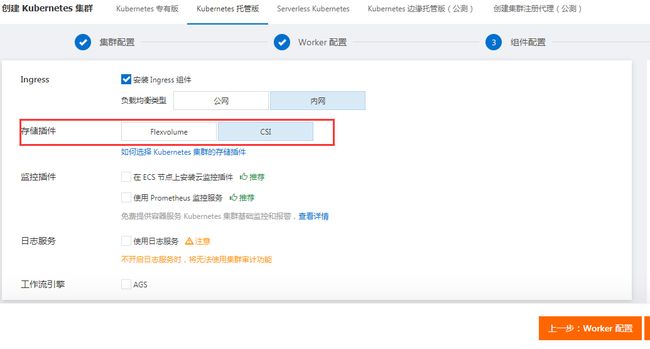

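Storage plugin

Alibaba Cloud clusters offer two storage plugins, Flexvolume and CSI. CSI is the officially recommended one; once it is selected, the cluster installs the plugin by default.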

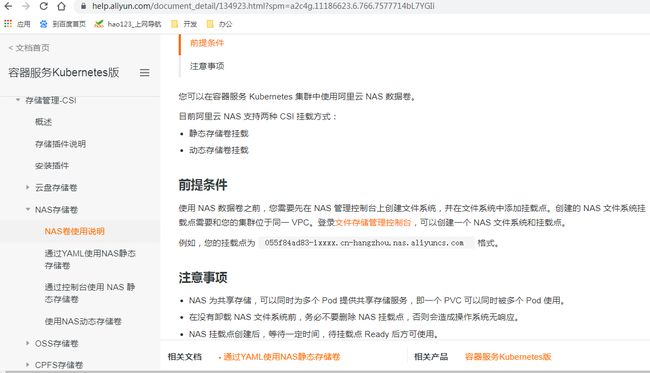

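Before getting hands-on, it is worth reading the documentation through once.

The storage plugin is installed by default

Verify the installation

- Run the following command. It should list several pods in the Running state, one per node.

kubectl get pod -n kube-system | grep csi-plugin

- Run the following command. It should list one pod in the Running state.

kubectl get pod -n kube-system | grep csi-provisioner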
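The two checks above can be made less eyeball-dependent by counting the Running pods. The sketch below assumes the default `kubectl get pod` column layout (NAME, READY, STATUS, ...); `count_running` is a hypothetical helper, not a kubectl feature.

```shell
#!/bin/sh
# count_running PATTERN: read `kubectl get pod` output on stdin and print how
# many pods whose name contains PATTERN are in the Running state.
# Column 1 is NAME and column 3 is STATUS in the default output.
count_running() {
  awk -v pat="$1" 'index($1, pat) && $3 == "Running" { n++ } END { print n + 0 }'
}
```

For example, `kubectl get pod -n kube-system | count_running csi-plugin` should print the number of nodes, which can be compared with `kubectl get nodes --no-headers | wc -l`.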

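Getting started

This article starts with a static NAS volume, mainly to avoid taking on too much at once. I tried it myself, and the YAML in the documentation has pitfalls. If the volume cannot be created from YAML, create it from the console and then study the YAML the console generates.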

Create a static NAS volume

nginx-pv.yaml

cat <<EOF > /mldong/k8s/nginx/nginx-pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nginx-pv
  labels:
    alicloud-pvname: nginx-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  csi:
    driver: nasplugin.csi.alibabacloud.com
    volumeHandle: nginx-pv
    volumeAttributes:
      server: "9fdd94bf87-wfq72.cn-zhangjiakou.nas.aliyuncs.com"
      path: "/"
      vers: "3"
  storageClassName: nas
EOF

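Notes

- driver: the driver type. Here it is nasplugin.csi.alibabacloud.com, meaning the Alibaba Cloud NAS CSI plugin.
- volumeHandle: the name of the PV.
- server: the NAS mount target.
- path: the subdirectory to mount.
- vers: the NFS protocol version used to mount the NAS volume. v3 is recommended; Extreme NAS supports only v3.

Note: a PV is shared by all namespaces, so it takes no namespace.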

创建PV

kubectl apply -f nginx-pv.yaml

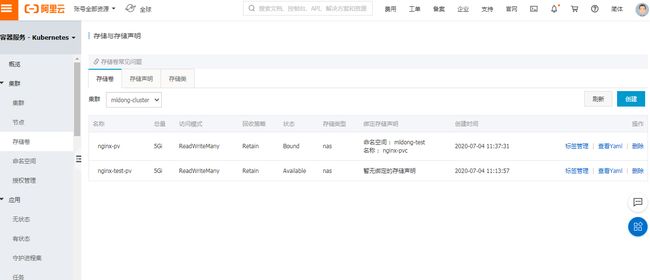

查看PV

kubectl get pv

Available->成功,Released->失败

查看PV详情

kubectl describe pv

删除PV

kubectl delete -f nginx-pv.yaml

nginx-pvc.yaml

注:pvc有命名空间,所以要加上命名空间

cat <<EOF > /mldong/k8s/nginx/nginx-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  annotations:
    pv.kubernetes.io/bind-completed: 'yes'
    pv.kubernetes.io/bound-by-controller: 'yes'
  finalizers:
    - kubernetes.io/pvc-protection
  name: nginx-pvc
  namespace: mldong-test
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  selector:
    matchLabels:
      alicloud-pvname: nginx-pv
  storageClassName: nas
  volumeMode: Filesystem
  volumeName: nginx-pv
EOF

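The key field here is alicloud-pvname, whose value is the PV's metadata.name.

Create the PVC

kubectl apply -f nginx-pvc.yaml

List PVCs

kubectl get pvc -n mldong-test

Bound means success. Pending means the claim has not bound to a PV, which in this static setup indicates a failure.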
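Binding is not always instantaneous, so a script that applies the PVC and immediately continues may see Pending for a moment. One way to handle that is to poll until the expected phase appears. The sketch below is a generic polling helper under that assumption; `wait_for_phase` is a hypothetical name, and the command that reports the phase is passed in as arguments.

```shell
#!/bin/sh
# wait_for_phase WANT TRIES CMD...: run CMD up to TRIES times, one second
# apart, until it prints WANT. Succeeds on a match, fails otherwise.
wait_for_phase() {
  want=$1; tries=$2; shift 2
  while [ "$tries" -gt 0 ]; do
    phase=$("$@" 2>/dev/null) || phase=
    if [ "$phase" = "$want" ]; then return 0; fi
    tries=$((tries - 1))
    if [ "$tries" -gt 0 ]; then sleep 1; fi
  done
  return 1
}
```

With kubectl, this could be called as `wait_for_phase Bound 30 kubectl get pvc nginx-pvc -n mldong-test -o jsonpath='{.status.phase}'`.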

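Show PVC details

kubectl describe pvc -n mldong-test

Delete the PVC

kubectl delete -f nginx-pvc.yaml

Mounting the volumes

In k8s, a volume must be defined before it can be mounted. So far we have defined two kinds of volume: a ConfigMap and a PVC.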

cat <<EOF > /mldong/k8s/nginx/nginx-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-pod
  namespace: mldong-test
spec:
  selector:
    matchLabels:
      app: nginx-pod
  replicas: 1
  template:
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
        - name: nginx
          image: registry-vpc.cn-zhangjiakou.aliyuncs.com/mldong/java/nginx:latest
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
              name: port
              protocol: TCP
          volumeMounts:
            - name: nginx-pvc
              mountPath: "/usr/share/nginx/html"
            - name: nginx-cm
              mountPath: "/etc/nginx/conf.d"
      volumes:
        - name: nginx-pvc
          persistentVolumeClaim:
            claimName: nginx-pvc
        - name: nginx-cm
          configMap:
            name: nginx-cm
EOF

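Notes:

- volumeMounts.name ==> volumes.name
- volumeMounts.mountPath ==> directory inside the container
- persistentVolumeClaim.claimName ==> name of the PVC defined earlier
- configMap.name ==> name of the ConfigMap defined earlier
- --- is the document separator inside a YAML file; k8s processes each document for you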

Create the pod

kubectl apply -f nginx-deployment.yaml

List pods

kubectl get pods -n mldong-test

Create files on server A

/mnt
├── a
│   └── index.html
└── b
    └── index.html
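The tree above can be created by hand, or with a few lines of shell. The sketch below is one way to do it; `make_site_tree` is a hypothetical helper, and the page bodies are placeholders chosen only so the two sites can be told apart later.

```shell
#!/bin/sh
# make_site_tree ROOT: create ROOT/a/index.html and ROOT/b/index.html.
# The page bodies are placeholders so the two sites can be told apart.
make_site_tree() {
  root=$1
  for site in a b; do
    mkdir -p "$root/$site"
    printf '<h1>site %s</h1>\n' "$site" > "$root/$site/index.html"
  done
}
```

On server A, with the NAS mounted at /mnt, this would be run as `make_site_tree /mnt`.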

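Enter the container (replace pod-name with the actual pod name reported by kubectl get pods)

kubectl exec -it pod-name -n mldong-test -- bash

Check that the configuration files exist

ls /etc/nginx/conf.d/

Check that the static assets exist

ls /usr/share/nginx/html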

Create the Service

Manifest

cat <<EOF > /mldong/k8s/nginx/nginx-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-nodeport
  namespace: mldong-test
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      nodePort: 32180
  selector:
    app: nginx-pod
EOF

Publish it

kubectl apply -f nginx-service.yaml

List Services

kubectl get Service -n mldong-test

Access the service

Access node 1

curl worker1:32180

Access node 2

curl worker2:32180

Access domain a

curl -H 'Host: a.mldong.com' worker1:32180

Access domain b

curl -H 'Host: b.mldong.com' worker1:32180
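Both server blocks listen on port 80, so nginx chooses between them by the Host header; that is why the last two requests set it explicitly. The sketch below loops over several host names and prints the first line of each response, which makes it easy to confirm that each domain serves its own page. `probe_vhosts` is a hypothetical helper built on the same curl flags used above.

```shell
#!/bin/sh
# probe_vhosts BASE_URL HOST...: request BASE_URL once per HOST, sending it as
# the Host header, and print "HOST => first line of the response".
probe_vhosts() {
  base=$1; shift
  for host in "$@"; do
    first_line=$(curl -s -H "Host: $host" "$base" | head -n 1) || first_line=
    printf '%s => %s\n' "$host" "$first_line"
  done
}
```

For example: `probe_vhosts http://worker1:32180 a.mldong.com b.mldong.com`.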

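Summary

Using nginx as the example, this article showed how to use ConfigMap and persistent storage. Although it was done on a cloud platform, the basic workflow is much the same elsewhere.

- First decide which kinds of storage the service needs
- For a ConfigMap
  - Define the configuration
- For a PV
  - Define the PV
  - Define the PVC
- Define the pod and its mounts
- Define a Service to expose it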
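The workflow can be condensed into a short script that applies this article's manifests in the order they were defined. This is only a sketch: `deploy_nginx` is a hypothetical helper, and the ordering is a convenience rather than a requirement, since applying the storage objects first simply avoids the pod waiting for a missing ConfigMap or PVC.

```shell
#!/bin/sh
# deploy_nginx RUNNER...: apply the manifests from this article in the order
# they were defined. Pass `kubectl` to apply, or `echo kubectl` to preview.
deploy_nginx() {
  for manifest in nginx-cm.yaml nginx-pv.yaml nginx-pvc.yaml \
                  nginx-deployment.yaml nginx-service.yaml; do
    "$@" apply -f "$manifest" || return 1
  done
}
```

Running `deploy_nginx echo kubectl` from /mldong/k8s/nginx prints the five commands without touching the cluster; `deploy_nginx kubectl` runs them.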