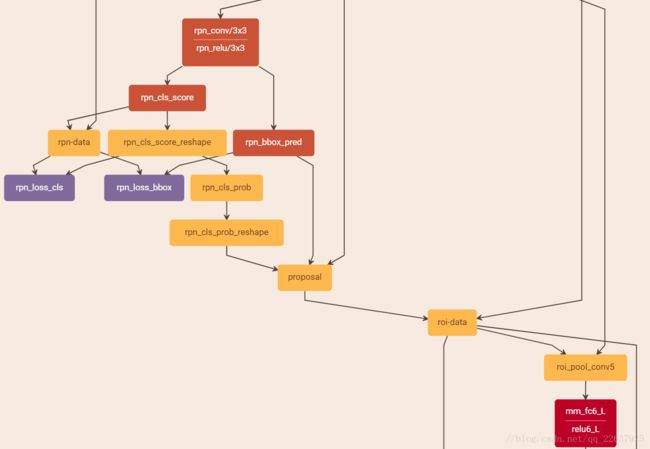

Understanding the Faster R-CNN Source Code (3): proposal_layer (the Proposal Layer in the Network)

layer {
  name: "rpn_conv/3x3"
  type: "Convolution"
  bottom: "P3_aggregate"
  top: "rpn/output"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  convolution_param {
    num_output: 64
    kernel_size: 3 pad: 1 stride: 1
    weight_filler { type: "gaussian" std: 0.01 }
    bias_filler { type: "constant" value: 0 }
  }
}

layer {
  name: "rpn_relu/3x3"
  type: "ReLU"
  bottom: "rpn/output"
  top: "rpn/output"
}

layer {
  name: "rpn_cls_score"
  type: "Convolution"
  bottom: "rpn/output"
  top: "rpn_cls_score"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  convolution_param {
    num_output: 18 # 2(bg/fg) * 9(anchors)
    kernel_size: 1 pad: 0 stride: 1
    weight_filler { type: "gaussian" std: 0.01 }
    bias_filler { type: "constant" value: 0 }
  }
}

layer {
  name: "rpn_bbox_pred"
  type: "Convolution"
  bottom: "rpn/output"
  top: "rpn_bbox_pred"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  convolution_param {
    num_output: 36 # 4 * 9(anchors)
    kernel_size: 1 pad: 0 stride: 1
    weight_filler { type: "gaussian" std: 0.01 }
    bias_filler { type: "constant" value: 0 }
  }
}

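First, a few questions. rpn_conv/3x3 outputs rpn/output, which is the input to both rpn_bbox_pred and rpn_cls_score; what does this output look like? And since rpn_bbox_pred and rpn_cls_score are nothing more than convolution layers, how exactly do they produce scores and candidate boxes? Finally, how do the candidate boxes involved in rpn_bbox_pred differ from the candidate boxes generated in the rpn_data layer?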
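One way to approach the first two questions is to look only at tensor shapes. The sketch below is an illustration, not the Caffe implementation: it treats a 1x1 convolution as a per-pixel matrix multiply in NumPy, uses random weights, and assumes a hypothetical 38x50 feature map (the spatial size is not given in the source). The helper name `conv1x1` is made up for this example.

```python
import numpy as np

def conv1x1(x, weight, bias):
    """1x1 convolution: x is (N, C_in, H, W), weight is (C_out, C_in)."""
    out = np.einsum('oc,nchw->nohw', weight, x)
    return out + bias.reshape(1, -1, 1, 1)

rng = np.random.RandomState(0)
N, C, H, W = 1, 64, 38, 50   # C matches num_output of rpn_conv/3x3; H, W are hypothetical
A = 9                        # anchors per location, as in the prototxt comments

# Stand-in for rpn/output after the in-place ReLU
rpn_output = np.maximum(rng.randn(N, C, H, W), 0)

# Random weights drawn like the gaussian filler (std 0.01), zero bias
rpn_cls_score = conv1x1(rpn_output, rng.randn(2 * A, C) * 0.01, np.zeros(2 * A))
rpn_bbox_pred = conv1x1(rpn_output, rng.randn(4 * A, C) * 0.01, np.zeros(4 * A))

print(rpn_cls_score.shape)   # (1, 18, 38, 50)
print(rpn_bbox_pred.shape)   # (1, 36, 38, 50)
```

Read this way, every spatial location carries 2 values per anchor in rpn_cls_score and 4 values per anchor in rpn_bbox_pred. The 4 values are regression deltas rather than finished boxes; they only become boxes once ProposalLayer applies them to the anchors.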

layer {
  name: 'proposal'
  type: 'Python'
  bottom: 'rpn_cls_prob_reshape'
  bottom: 'rpn_bbox_pred'
  bottom: 'im_info'
  top: 'rpn_rois'
  # top: 'rpn_scores'
  python_param {
    module: 'rpn.proposal_layer'#nms
    layer: 'ProposalLayer'
    param_str: "'feat_stride': 8"
  }
}
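The `feat_stride` value passed through `param_str` controls how far apart the anchors are placed in image coordinates. As a rough sketch of the shift enumeration that `forward` performs, the snippet below uses a tiny 2x3 score map and two made-up base anchors; in the real layer the base anchors come from `generate_anchors`, so the values here are assumptions for illustration only.

```python
import numpy as np

feat_stride = 8            # value from param_str in the layer definition
height, width = 2, 3       # hypothetical tiny score map

# Hypothetical base anchors (x1, y1, x2, y2); not the output of generate_anchors
base_anchors = np.array([[-4, -4, 11, 11],
                         [-12, -12, 19, 19]], dtype=np.float32)

# One (dx, dy, dx, dy) shift per feature-map cell, in row-major order
shift_x = np.arange(0, width) * feat_stride
shift_y = np.arange(0, height) * feat_stride
shift_x, shift_y = np.meshgrid(shift_x, shift_y)
shifts = np.vstack((shift_x.ravel(), shift_y.ravel(),
                    shift_x.ravel(), shift_y.ravel())).transpose()

A = base_anchors.shape[0]  # anchors per cell
K = shifts.shape[0]        # number of cells

# Broadcast (1, A, 4) + (K, 1, 4) -> (K, A, 4), then flatten to (K * A, 4)
anchors = base_anchors.reshape((1, A, 4)) + \
          shifts.reshape((1, K, 4)).transpose((1, 0, 2))
anchors = anchors.reshape((K * A, 4))

print(anchors.shape)        # (12, 4)
print(anchors[2].tolist())  # [4.0, -4.0, 19.0, 11.0]
```

Row 2 is the first base anchor moved to the cell in row 0, column 1, which is 8 pixels to the right of the origin. The rows are ordered by (h, w, a) from slowest to fastest, the same ordering the layer later imposes on the deltas and scores by transposing them.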

# --------------------------------------------------------
# Faster R-CNN
# Copyright (c) 2015 Microsoft
# Licensed under The MIT License [see LICENSE for details]
# Written by Ross Girshick and Sean Bell
# --------------------------------------------------------

import caffe
import numpy as np
import yaml
from fast_rcnn.config import cfg
from generate_anchors import generate_anchors
from fast_rcnn.bbox_transform import bbox_transform_inv, clip_boxes
from fast_rcnn.nms_wrapper import nms

DEBUG = False

class ProposalLayer(caffe.Layer):
    """
    Outputs object detection proposals by applying estimated bounding-box
    transformations to a set of regular boxes (called "anchors").
    """

    def setup(self, bottom, top):
        # parse the layer parameter string, which must be valid YAML
        layer_params = yaml.load(self.param_str)

        self._feat_stride = layer_params['feat_stride']
        anchor_scales = layer_params.get('scales', (8, 16, 32))
        self._anchors = generate_anchors(scales=np.array(anchor_scales))
        self._num_anchors = self._anchors.shape[0]

        if DEBUG:
            print 'feat_stride: {}'.format(self._feat_stride)
            print 'anchors:'
            print self._anchors

        # rois blob: holds R regions of interest, each is a 5-tuple
        # (n, x1, y1, x2, y2) specifying an image batch index n and a
        # rectangle (x1, y1, x2, y2)
        top[0].reshape(1, 5)

        # scores blob: holds scores for R regions of interest
        if len(top) > 1:
            top[1].reshape(1, 1, 1, 1)

    def forward(self, bottom, top):
        # Algorithm:
        #
        # for each (H, W) location i
        #   generate A anchor boxes centered on cell i
        #   apply predicted bbox deltas at cell i to each of the A anchors
        # clip predicted boxes to image
        # remove predicted boxes with either height or width < threshold
        # sort all (proposal, score) pairs by score from highest to lowest
        # take top pre_nms_topN proposals before NMS
        # apply NMS with threshold 0.7 to remaining proposals
        # take after_nms_topN proposals after NMS
        # return the top proposals (-> RoIs top, scores top)

        # assert bottom[0].data.shape[0] == 1, \
        #     'Only single item batches are supported'
        num_images = bottom[0].data.shape[0]
        #assert num_images == cfg.TRAIN.IMS_PER_BATCH

        # self.phase is the Caffe phase enum: 0 is TRAIN, 1 is TEST
        if self.phase == 0:
            cfg_key = 'TRAIN'
        else:
            cfg_key = 'TEST'
        # cfg_key = str(self.phase) # either 'TRAIN' or 'TEST'

        pre_nms_topN = cfg[cfg_key].RPN_PRE_NMS_TOP_N
        post_nms_topN = cfg[cfg_key].RPN_POST_NMS_TOP_N
        # the post-NMS budget is split evenly across the images in the batch
        assert post_nms_topN % num_images == 0
        post_nms_topN_per_images = post_nms_topN // num_images
        nms_thresh = cfg[cfg_key].RPN_NMS_THRESH
        min_size = cfg[cfg_key].RPN_MIN_SIZE

        # the first set of _num_anchors channels are bg probs
        # the second set are the fg probs, which we want
        all_scores = bottom[0].data[:, self._num_anchors:, :, :]
        all_bbox_deltas = bottom[1].data
        all_im_info = bottom[2].data

        if DEBUG:
            # all_im_info holds one (height, width, scale) row per image;
            # print the first image only
            print 'im_size: ({}, {})'.format(all_im_info[0, 0], all_im_info[0, 1])
            print 'scale: {}'.format(all_im_info[0, 2])

        # 1. Generate proposals from bbox deltas and shifted anchors
        height, width = all_scores.shape[-2:]

        if DEBUG:
            print 'score map size: {}'.format(all_scores.shape)

        # Enumerate all shifts
        shift_x = np.arange(0, width) * self._feat_stride
        shift_y = np.arange(0, height) * self._feat_stride
        shift_x, shift_y = np.meshgrid(shift_x, shift_y)
        shifts = np.vstack((shift_x.ravel(), shift_y.ravel(),
                            shift_x.ravel(), shift_y.ravel())).transpose()

        # Enumerate all shifted anchors:
        #
        # add A anchors (1, A, 4) to
        # cell K shifts (K, 1, 4) to get
        # shift anchors (K, A, 4)
        # reshape to (K*A, 4) shifted anchors
        A = self._num_anchors
        K = shifts.shape[0]
        anchors = self._anchors.reshape((1, A, 4)) + \
                  shifts.reshape((1, K, 4)).transpose((1, 0, 2))
        anchors = anchors.reshape((K * A, 4))

        # the anchors are shared by all images; only deltas and scores differ
        all_blobs = np.empty((0, 5), dtype=np.float32)
        for i in xrange(num_images):
            bbox_deltas = all_bbox_deltas[i:i+1, :]
            # Transpose and reshape predicted bbox transformations to get them
            # into the same order as the anchors:
            #
            # bbox deltas will be (1, 4 * A, H, W) format
            # transpose to (1, H, W, 4 * A)
            # reshape to (1 * H * W * A, 4) where rows are ordered by (h, w, a)
            # in slowest to fastest order
            bbox_deltas = bbox_deltas.transpose((0, 2, 3, 1)).reshape((-1, 4))

            scores = all_scores[i:i+1, :]
            # Same story for the scores:
            #
            # scores are (1, A, H, W) format
            # transpose to (1, H, W, A)
            # reshape to (1 * H * W * A, 1) where rows are ordered by (h, w, a)
            scores = scores.transpose((0, 2, 3, 1)).reshape((-1, 1))

            # Convert anchors into proposals via bbox transformations
            proposals = bbox_transform_inv(anchors, bbox_deltas)

            im_info = all_im_info[i]

            # 2. clip predicted boxes to image
            proposals = clip_boxes(proposals, im_info[:2])

            # 3. remove predicted boxes with either height or width < threshold
            # (NOTE: convert min_size to input image scale stored in im_info[2])
            keep = _filter_boxes(proposals, min_size * im_info[2])
            proposals = proposals[keep, :]
            scores = scores[keep]

            # 4. sort all (proposal, score) pairs by score from highest to lowest
            # 5. take top pre_nms_topN (e.g. 6000)
            order = scores.ravel().argsort()[::-1]
            if pre_nms_topN > 0:
                order = order[:pre_nms_topN]
            proposals = proposals[order, :]
            scores = scores[order]

            # 6. apply nms (e.g. threshold = 0.7)
            # 7. take after_nms_topN (e.g. 300)
            # 8. return the top proposals (-> RoIs top)
            keep = nms(np.hstack((proposals, scores)), nms_thresh)
            if post_nms_topN_per_images > 0:
                keep = keep[:post_nms_topN_per_images]
            proposals = proposals[keep, :]
            scores = scores[keep]

            # Output rois blob
            # Each RoI is tagged with i, the index of its image in the batch
            batch_inds = np.ones((proposals.shape[0], 1), dtype=np.float32) * i
            blob = np.hstack((batch_inds, proposals.astype(np.float32, copy=False)))
            all_blobs = np.vstack((all_blobs, blob))

        top[0].reshape(*(all_blobs.shape))
        top[0].data[...] = all_blobs

        # [Optional] output scores blob
        # if len(top) > 1:
        #     top[1].reshape(*(scores.shape))
        #     top[1].data[...] = scores

    def backward(self, top, propagate_down, bottom):
        """This layer does not propagate gradients."""
        pass

    def reshape(self, bottom, top):
        """Reshaping happens during the call to forward."""
        pass

def _filter_boxes(boxes, min_size):
    """Remove all boxes with any side smaller than min_size."""
    ws = boxes[:, 2] - boxes[:, 0] + 1
    hs = boxes[:, 3] - boxes[:, 1] + 1
    keep = np.where((ws >= min_size) & (hs >= min_size))[0]
    return keep
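The listing above relies on `bbox_transform_inv`, `clip_boxes` and `nms`, whose bodies are not shown here. To make steps 1 to 8 of `forward` easier to follow, here is a self-contained NumPy sketch of the same pipeline for a single image. It is a stand-in written for illustration, not the library code: the helper names (`decode_boxes`, `clip_to_image`, `filter_small`, `greedy_nms`), the (dx, dy, dw, dh) delta convention, and all input values are assumptions.

```python
import numpy as np

def decode_boxes(anchors, deltas):
    """Apply (dx, dy, dw, dh) deltas to (x1, y1, x2, y2) anchors (assumed convention)."""
    widths = anchors[:, 2] - anchors[:, 0] + 1.0
    heights = anchors[:, 3] - anchors[:, 1] + 1.0
    ctr_x = anchors[:, 0] + 0.5 * widths
    ctr_y = anchors[:, 1] + 0.5 * heights
    pred_ctr_x = deltas[:, 0] * widths + ctr_x
    pred_ctr_y = deltas[:, 1] * heights + ctr_y
    pred_w = np.exp(deltas[:, 2]) * widths
    pred_h = np.exp(deltas[:, 3]) * heights
    return np.stack([pred_ctr_x - 0.5 * pred_w, pred_ctr_y - 0.5 * pred_h,
                     pred_ctr_x + 0.5 * pred_w, pred_ctr_y + 0.5 * pred_h], axis=1)

def clip_to_image(boxes, im_height, im_width):
    """Clamp box corners to the image rectangle."""
    boxes = boxes.copy()
    boxes[:, 0::2] = np.clip(boxes[:, 0::2], 0, im_width - 1)
    boxes[:, 1::2] = np.clip(boxes[:, 1::2], 0, im_height - 1)
    return boxes

def filter_small(boxes, min_size):
    """Indices of boxes whose width and height are both >= min_size."""
    ws = boxes[:, 2] - boxes[:, 0] + 1
    hs = boxes[:, 3] - boxes[:, 1] + 1
    return np.where((ws >= min_size) & (hs >= min_size))[0]

def greedy_nms(boxes, scores, thresh):
    """Plain greedy NMS: keep the best box, drop boxes overlapping it by IoU > thresh."""
    x1, y1, x2, y2 = boxes[:, 0], boxes[:, 1], boxes[:, 2], boxes[:, 3]
    areas = (x2 - x1 + 1) * (y2 - y1 + 1)
    order = scores.argsort()[::-1]
    keep = []
    while order.size > 0:
        i, rest = order[0], order[1:]
        keep.append(int(i))
        w = np.maximum(0.0, np.minimum(x2[i], x2[rest]) - np.maximum(x1[i], x1[rest]) + 1)
        h = np.maximum(0.0, np.minimum(y2[i], y2[rest]) - np.maximum(y1[i], y1[rest]) + 1)
        inter = w * h
        iou = inter / (areas[i] + areas[rest] - inter)
        order = rest[iou <= thresh]
    return keep

# Hypothetical toy inputs: four anchors, one image of 120 x 120 pixels
anchors = np.array([[0, 0, 15, 15], [0, 0, 15, 15],
                    [100, 100, 131, 131], [50, 50, 53, 53]], dtype=np.float64)
deltas = np.zeros((4, 4))
deltas[1, 0] = 0.05                      # nudge the second box slightly to the right
scores = np.array([0.9, 0.8, 0.7, 0.95])
im_height, im_width, min_size, nms_thresh = 120, 120, 16, 0.7

proposals = clip_to_image(decode_boxes(anchors, deltas), im_height, im_width)  # steps 1-2
keep = filter_small(proposals, min_size)                                      # step 3
proposals, scores = proposals[keep], scores[keep]
order = scores.argsort()[::-1]                                                # steps 4-5
proposals, scores = proposals[order], scores[order]
keep = greedy_nms(proposals, scores, nms_thresh)                              # steps 6-7
rois = np.hstack((np.zeros((len(keep), 1)), proposals[keep]))                 # step 8
print(rois)
```

In this toy run the tiny fourth anchor is dropped by the size filter even though it has the highest score, the second box is suppressed because it almost coincides with the first, and the third box is clipped at the image border. Two RoIs remain, each prefixed with batch index 0.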