K-MEANS聚类——Python实现

一、概述

(1)物以类聚,人以群分,聚类分析是一种重要的多变量统计方法,但记住其实它是一种数据分析方法,不能进行统计推断的。当然,聚类分析主要应用在市场细分等领域,也经常采用聚类分析技术来实现对抽样框的分层。它和分类不同,它属于无监督问题。

(2)常用聚类方法:K-means聚类、密度聚类方法DBSCAN、

二、K-MEANS算法

基本概念:

- 要得到簇的个数,需要指定k值

- 质心:均值,即向量各维取平均即可。

- 距离的度量:常用欧式距离(先标准化)

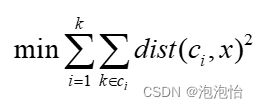

- 优化目标:

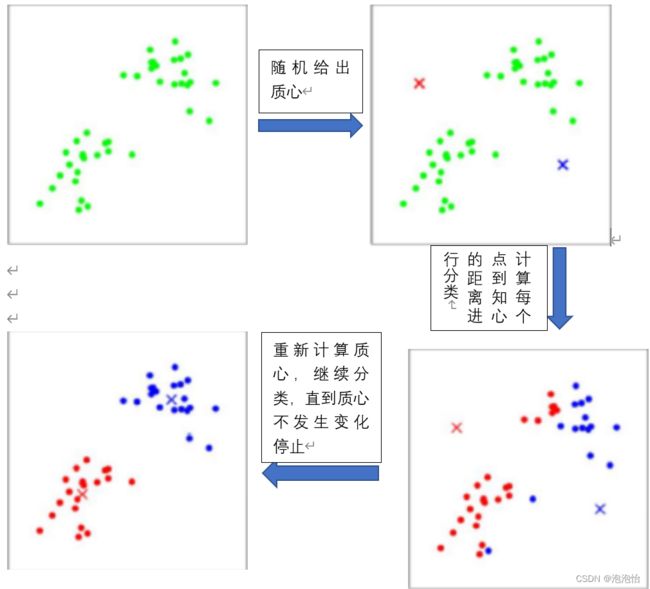

三、工作流程

四、优缺点:

优点:操作简单,快速,适合常规数据集

缺点:

- k值很难确定

- 复杂度与样本呈线性关系

- 很难发现任意形状的簇

五、代码实现

1.导包

%matplotlib inline

import numpy as np

import matplotlib.pyplot as plt

from sklearn.cluster import KMeans

from sklearn.preprocessing import StandardScaler

from sklearn.datasets import make_blobs #生成数据函数

from sklearn import metrics2.生成平面数据点+标准化

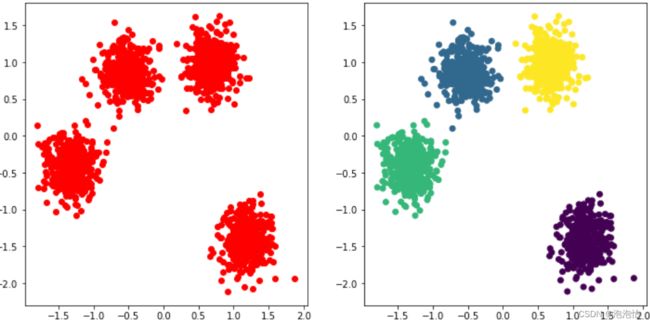

n_samples = 1500

X,y = make_blobs(n_samples=n_samples,centers=4,random_state=170)

X = StandardScaler().fit_transform(X) #标准化3.调用kmeans包

Kmeans=KMeans(n_clusters=4,random_state=170)

Kmeans.fit(X)4.可视化效果

plt.figure(figsize=(12,6))

plt.subplot(121)

plt.scatter(X[:,0],X[:,1],c='r')

plt.title("聚类前数据图")

plt.subplot(122)

plt.scatter(X[:,0],X[:,1],c=Kmeans.labels_)

plt.title("聚类后数据图")

plt.show()

结果如图:

六、K-MEANS算法程序,代码如下:

class KMEANS:

def_init_(self,data,num_clustres):

self.data=data

self.num_clustres=num_clustres

def train(self,max_iterations):

#1.先随机选择k个中心点

centroids=KMEANS.centroids_init(self.data,self.num_clustres)

#2.开始训练

num_exxamples=self.data.shape[0]

closest_ centroids_ids=np.empty((num_examples,1))

for _ in range(max_iterations):

#3得到当前每个样本到k个中心点的距离,找最近的

closest_centroids_ids=KMEANS.centroids_find_closest(self.data,centroids)

#进行中心点位置更新

centroids=KMEANS.centroids_compute(self.data,closest_centroids_ids,self.num_clustres)

return centroids,closest_ centroids_ids接下来是三个方法:

def centroids_init(self,data,num_clustres):

num_examples=data.shape[0]

random_ids=np.random.permutation(num_examples)

centroids=data[random_ids[:num__clustres],:]

return centroids

def centroids_find_closest(self,data,centroids ) :

num_examples = self.data.shape[0]

num_centroids = centroids.shape[0]

closest_centroids_ids = np.zeros((num_examples,1))

for example_index in range( num_examples) :

distance = np.zeros( num_centroids,1)

for centroid_index in range(num_centroids) :

distance_diff = data[example_index, : ] - centroids[centroid_index,distance[centroid_index]

= np.sum(distance_diff**2)

closest_centroids_ids[example_index] = np.argmin(distance)

return closest_centroids_ids

def centroids_compute(self ,data,closest_centroids_ids,num_clustres):

num_features = data.shape[0]

centroids = np.zeros((num_ciustres,num_features))

for centnoid_id in range(num_clustres) :

closest_ids = closest_centroids_ids == centroid_id

centroids[closest_ids] = np.mean( aareturn centroids.flatten(),:],axis=0)

return centroids